In the last issue, we covered the Nginx configuration for a single-page application. In this issue, we briefly look at optimizing page loading from a front-end perspective. Of course, this only scratches the surface; after all, I am not a professional Nginx tuner.

Page load

First, let's look at what happens while a web page loads and which steps take the most time. For example, when we visit GitHub:

- Queued, Queueing: with HTTP/1.1 there is head-of-line blocking, and the browser opens at most 6 concurrent connections per domain name.

- Stalled: the browser has to allocate resources in advance and schedule connections.

- DNS Lookup: DNS resolves the domain name.

- Initial connection, SSL: establishing a connection with the server. This is the TCP handshake, plus a TLS handshake if you use HTTPS.

- Request sent: the browser sends the request to the server.

- TTFB: waiting for the response, including network transmission; that is, the time until the first byte of the response arrives.

- Content Download: receiving the response data.

The figure shows that most of the time is spent between establishing the connection with the server and receiving the data. DNS resolution also takes time, but thanks to the local cache it costs almost nothing here.
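The phases above can also be read programmatically. The following is a minimal sketch, assuming `entry` has the fields of a browser `PerformanceResourceTiming` object (for example from `performance.getEntriesByType('resource')`). The function name `phaseDurations` and the sample numbers are made up for illustration, and the mapping to the DevTools labels is only approximate.

```javascript
// Split one resource timing entry into the phases listed above.
// `entry` is expected to look like a PerformanceResourceTiming object.
function phaseDurations(entry) {
  // secureConnectionStart is 0 for plain HTTP, so the TLS phase is 0 then
  const tls = entry.secureConnectionStart > 0
    ? entry.connectEnd - entry.secureConnectionStart
    : 0
  return {
    // roughly the time before the browser starts DNS/connection work
    queueingAndStalled: entry.domainLookupStart - entry.startTime,
    dns: entry.domainLookupEnd - entry.domainLookupStart,
    connect: entry.connectEnd - entry.connectStart, // includes the TLS part
    tls,
    ttfb: entry.responseStart - entry.requestStart,
    download: entry.responseEnd - entry.responseStart
  }
}

// Hypothetical sample values in milliseconds, not measured data
const sample = {
  startTime: 0, domainLookupStart: 5, domainLookupEnd: 5,
  connectStart: 5, connectEnd: 125, secureConnectionStart: 45,
  requestStart: 126, responseStart: 326, responseEnd: 356
}
const phases = phaseDurations(sample)
console.log(phases)
```

In this made-up sample the DNS phase is 0 because the lookup was answered from cache, while connection setup and waiting for the first byte dominate, which matches the observation above.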

gzip: reduce the amount of data to load

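First, we can compress JS and CSS at build time.

vue.config.js:

    const CompressionWebpackPlugin = require('compression-webpack-plugin')

    // File extensions to pre-compress at build time
    const buildcfg = {
      productionGzipExtensions: ['js', 'css']
    }

    module.exports = {
      configureWebpack: (config) => {
        config.plugins.push(
          new CompressionWebpackPlugin({
            test: new RegExp('\\.(' + buildcfg.productionGzipExtensions.join('|') + ')$'),
            threshold: 8192, // only compress assets larger than 8 KB
            minRatio: 0.8 // keep the result only if it is at most 80% of the original size
          })
        )
      }
    }

Enable gzip in Nginx: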

In the server block:

    # compress responses on the fly with gzip
    gzip on;
    gzip_min_length 1024;
    gzip_buffers 4 16k;
    gzip_comp_level 6;
    gzip_types text/plain application/javascript application/x-javascript text/css application/xml text/javascript;
    gzip_vary on;
    gzip_disable "MSIE [1-6]\.";

    # serve pre-compressed .gz files with gzip_static
    gzip_static on;

Here is a brief explanation.

gzip_static automatically looks for the corresponding .gz file. This is independent of whether gzip is turned on and of gzip_types and so on; you can think of it as returning the .gz file first. gzip compresses the requested file in real time, which consumes CPU. For example, if the Content-Length of the requested file is greater than gzip_min_length, the file is compressed and returned.

To sum up: if your build produces .gz files, you only need to turn on gzip_static. If not, you have to enable real-time gzip compression, but I recommend the former. Also, gzip suits text types; for images and the like it is counterproductive, so set gzip_types accordingly.
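To see why gzip suits text but not already-compressed data, here is a small sketch using Node's built-in `zlib`. The repeated CSS rule stands in for a real bundle and the random bytes stand in for something like a JPEG; both inputs and the helper name `gzipRatio` are assumptions for illustration.

```javascript
const zlib = require('zlib')
const crypto = require('crypto')

// Ratio of compressed size to original size (smaller is better)
function gzipRatio(buffer) {
  return zlib.gzipSync(buffer, { level: 6 }).length / buffer.length
}

// Repetitive text standing in for a JS or CSS bundle
const text = Buffer.from('.button { color: red; margin: 0 auto; }\n'.repeat(500))
// Random bytes standing in for already-compressed data such as an image
const binary = crypto.randomBytes(text.length)

const textRatio = gzipRatio(text)
const binaryRatio = gzipRatio(binary)
console.log('text ratio:', textRatio.toFixed(3))
console.log('binary ratio:', binaryRatio.toFixed(3))
```

The text shrinks to a small fraction of its size, while the random data does not shrink at all, so compressing it only costs CPU time.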

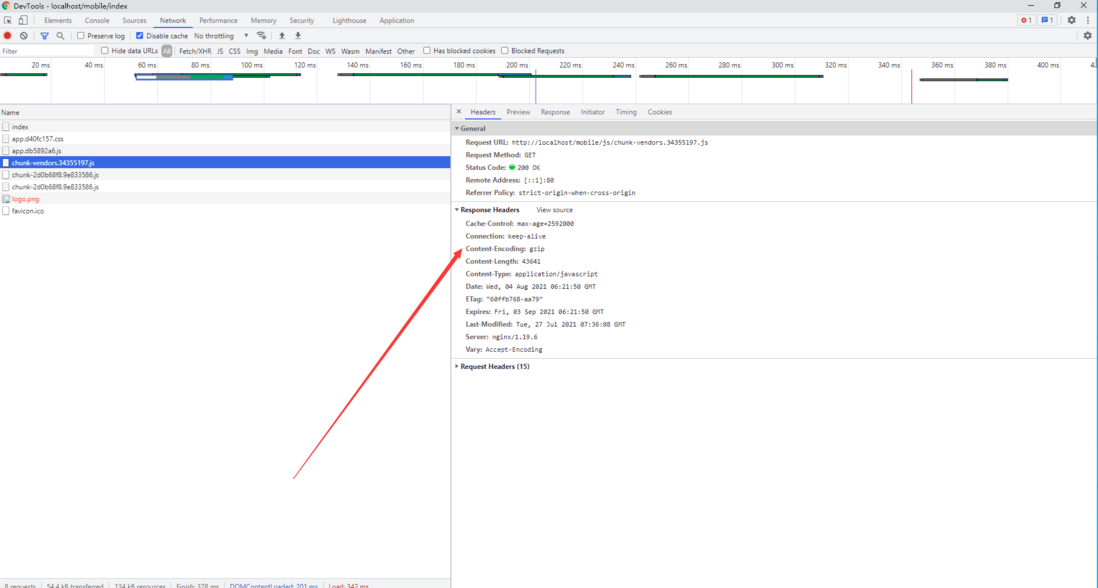

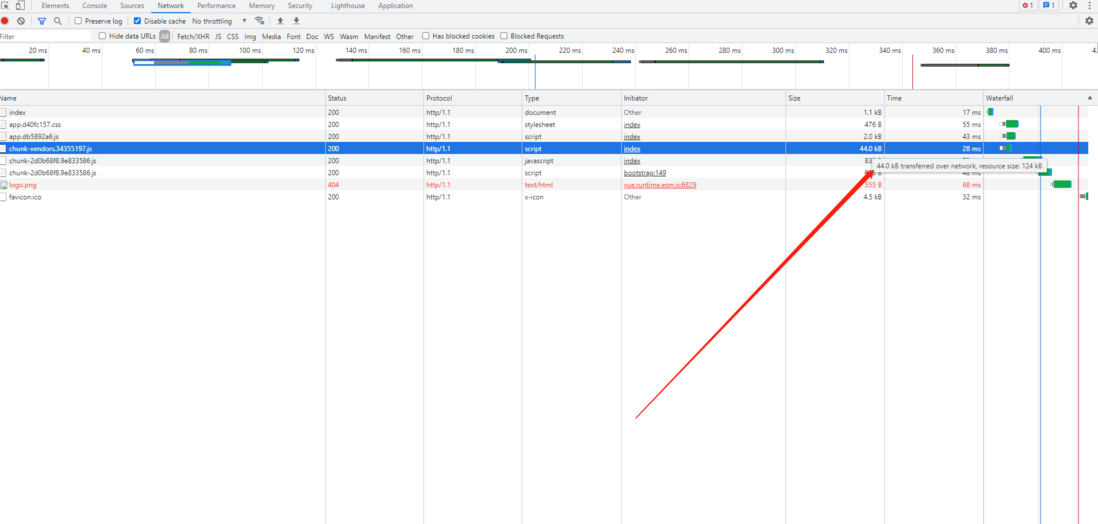

To check whether gzip is enabled, look at the Content-Encoding: gzip response header and at the file size. Here our original file is 124 KB and the returned gzip file is 44 KB, so the saving is considerable.

Cache control: the best request is no request

The cache interaction between the browser and the server has many more details. If you want the complete picture, please read one of the articles others have written on it. Here I will only cover the configuration:

    location /mobile {
        alias /usr/share/nginx/html/mobile/;
        try_files $uri $uri/ /mobile/index.html;
        if ($request_filename ~ .*\.(htm|html)$){
            add_header Cache-Control no-cache;
        }
        if ($request_uri ~* /.*\.(js|css)$) {
            # add_header Cache-Control max-age=2592000;
            expires 30d;
        }
        index index.html;
    }

Negotiation cache

Last-Modified

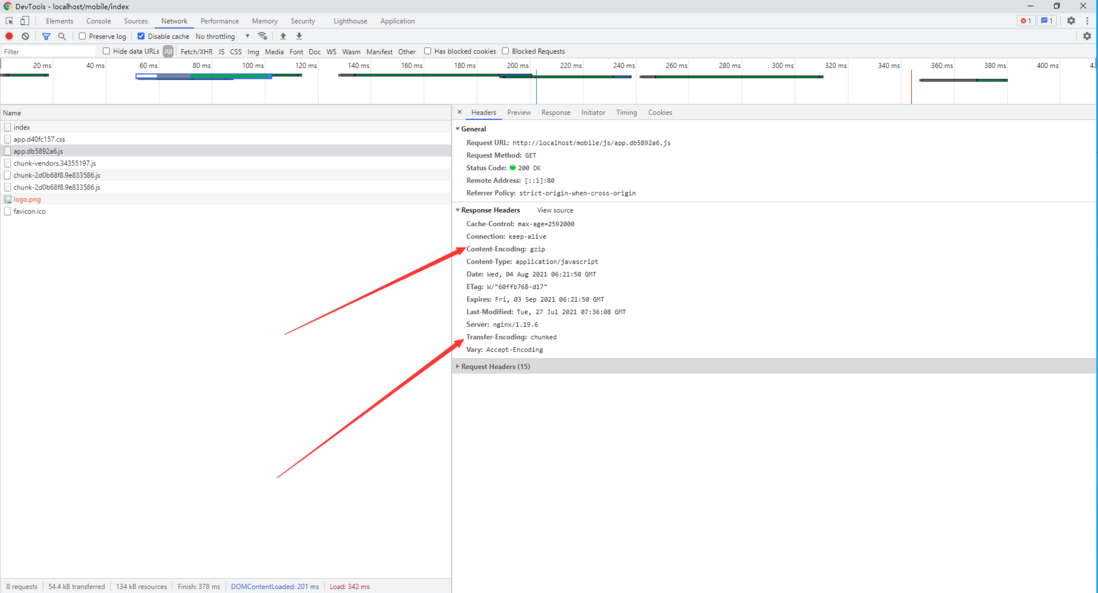

The entry file of our single-page application is index.html. It determines which JS and CSS we load, so we use the negotiation cache for HTML files with Cache-Control: no-cache. On the first load the HTTP status code is 200 and the server returns Last-Modified, the time the file was last modified. On refresh, the browser sends this time back in If-Modified-Since. If the file has not changed (the ETag is checked as well), the server returns a 304 status code, meaning "my file has not changed, use your cached copy".

Etag

The HTTP specification describes the ETag as the entity tag of the requested variant. You can think of it as an ID: when the file changes, the ID changes too. It works much like Last-Modified: the server returns an ETag, and the browser sends it back on the next request in If-None-Match so the server can compare. Different servers may calculate the ETag differently, so a distributed deployment can run into problems: the file has not changed, yet the cache is not used. Of course, you can turn ETag off and rely on Last-Modified only.
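The negotiation described in these two sections can be sketched as a small decision function. This is a simplified illustration, not how Nginx implements it: the function name `negotiate`, the `resource` record, and the ETag value are made up, and weak ETags and lists of tags are ignored. It follows the HTTP rule that If-None-Match takes precedence over If-Modified-Since when both are sent.

```javascript
// Decide between 200 and 304 for a conditional request.
// `resource` is a hypothetical record: { etag: string, lastModified: Date }
function negotiate(requestHeaders, resource) {
  const ifNoneMatch = requestHeaders['if-none-match']
  const ifModifiedSince = requestHeaders['if-modified-since']

  // If-None-Match takes precedence over If-Modified-Since when both are sent
  if (ifNoneMatch !== undefined) {
    return ifNoneMatch === resource.etag ? 304 : 200
  }
  if (ifModifiedSince !== undefined) {
    const since = Date.parse(ifModifiedSince)
    // HTTP dates have one-second resolution, so compare whole seconds
    const modified = Math.floor(resource.lastModified.getTime() / 1000) * 1000
    return !Number.isNaN(since) && modified <= since ? 304 : 200
  }
  return 200 // first visit: no validators, send the full body
}

const indexHtml = {
  etag: '"5f3e-1a2b"', // made-up value
  lastModified: new Date('2021-03-01T08:00:00Z')
}
const firstVisit = negotiate({}, indexHtml)
const unchanged = negotiate({ 'if-modified-since': 'Mon, 01 Mar 2021 08:00:00 GMT' }, indexHtml)
const staleTag = negotiate({ 'if-none-match': '"old-tag"' }, indexHtml)
console.log(firstVisit, unchanged, staleTag)
```

The stale-tag case also shows the distributed problem mentioned above: if two servers compute different ETags for the same file, the comparison fails and the full file is sent again.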

Strong cache

When our single-page application is built, tools such as webpack generate the JS file names from the file contents; if a file does not change, its hash does not change either. So we can use strong caching for files such as JS: within the cache lifetime the browser makes no request and reads straight from its cache. For example, if we set expires to 30 days (Cache-Control also works and takes precedence over Expires), then when the browser needs the same JS and CSS again within those 30 days, it takes them directly from the cache (200 from cache) without contacting our server.
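As a rough illustration of how a cache decides whether a stored response is still usable, here is a minimal sketch. It assumes lower-cased header names and only looks at `max-age` and `Expires`; directives such as `no-cache` or `no-store` and the `Age` header are deliberately left out. The function names are made up for this example.

```javascript
// Freshness lifetime in seconds from response headers (lower-cased names).
// Cache-Control: max-age wins over Expires when both are present.
function freshnessLifetime(headers, responseTimeMs) {
  const cacheControl = headers['cache-control'] || ''
  const match = /(?:^|,)\s*max-age=(\d+)/i.exec(cacheControl)
  if (match) return Number(match[1])
  if (headers.expires) {
    const expiresMs = Date.parse(headers.expires)
    if (!Number.isNaN(expiresMs)) return Math.max(0, (expiresMs - responseTimeMs) / 1000)
  }
  return 0
}

// True if the cached copy may be used without contacting the server
function isFresh(headers, responseTimeMs, nowMs) {
  const ageSeconds = (nowMs - responseTimeMs) / 1000
  return ageSeconds < freshnessLifetime(headers, responseTimeMs)
}

const storedAt = Date.parse('2021-03-01T00:00:00Z')
const day = 24 * 60 * 60 * 1000
const headers = { 'cache-control': 'max-age=2592000' } // 30 days
const after10Days = isFresh(headers, storedAt, storedAt + 10 * day)
const after31Days = isFresh(headers, storedAt, storedAt + 31 * day)
console.log(after10Days, after31Days)
```

With a 30-day lifetime the copy is served from cache on day 10 and has to be fetched or revalidated on day 31.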

Note: this approach relies on the hashed file names described above. If your build produces 1.js, 2.js and so on, and you change the contents of 1.js but the built file is still called 1.js, the browser will keep taking it from the cache and will not make a request. In other words, to use this approach you must make sure the hash in the file name changes whenever the file's contents change.
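The idea of a content-based file name can be sketched in a few lines. This is only an illustration in the spirit of webpack's content hashing, not its actual algorithm; the helper `hashedName`, the choice of SHA-256, and the 8-character length are assumptions.

```javascript
const crypto = require('crypto')

// Build a file name that embeds a short hash of the content
function hashedName(baseName, ext, content) {
  const hash = crypto.createHash('sha256').update(content).digest('hex').slice(0, 8)
  return `${baseName}.${hash}.${ext}`
}

const v1 = hashedName('app', 'js', 'console.log("v1")')
const v1Again = hashedName('app', 'js', 'console.log("v1")')
const v2 = hashedName('app', 'js', 'console.log("v2")')
console.log(v1, v2)
```

Unchanged content keeps its name, so the strong cache keeps working; changed content gets a new name, so the browser has no cached copy and must request it.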

Forced refresh

When strong caching is used well, the page feels like it is flying. But if something goes wrong, the browser keeps using its cached copy. How do we clear it? We usually press Ctrl+F5 or check Disable cache in the browser's developer tools. In fact, this automatically adds a Cache-Control: no-cache header when requesting a file. In other words, it says "I don't want the cache", so the browser honestly sends the request to the server.

Long connections: reduce the number of handshakes

TCP and TLS handshakes are relatively time-consuming. Before HTTP/1.1, for example, every request had to go through the TCP three-way handshake on a new connection, which was very slow. Fortunately, HTTP/1.1 uses long (keep-alive) connections by default, which reduces the handshake overhead. However, when uploading a large file you may find that the connection is dropped after a certain period of time. This is because Nginx's default keepalive_timeout is 75s, and the connection is closed once that is exceeded. If your page really has a lot to load, you can extend this time a little to reduce the number of handshakes (keepalive_requests limits the maximum number of requests served over one keep-alive connection). As for large file uploads, you can upload in chunks, which is not covered here.
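A back-of-the-envelope estimate shows how much handshake time a long connection can save. The sketch below assumes 1 round trip for the TCP handshake and 2 round trips for a full TLS 1.2 handshake, and that requests are sent one after another; the function name and the numbers are illustrative only.

```javascript
// Rough handshake overhead in milliseconds for a series of HTTPS requests.
// Assumes 1 RTT for TCP and 2 RTT for a full TLS 1.2 handshake.
function handshakeOverheadMs({ requests, rttMs, keepAlive }) {
  const perConnection = (1 + 2) * rttMs
  // With keep-alive, all requests share a single connection
  const connections = keepAlive ? 1 : requests
  return connections * perConnection
}

const withoutKeepAlive = handshakeOverheadMs({ requests: 20, rttMs: 50, keepAlive: false })
const withKeepAlive = handshakeOverheadMs({ requests: 20, rttMs: 50, keepAlive: true })
console.log(withoutKeepAlive, withKeepAlive)
```

Under these assumptions, 20 requests at a 50 ms round trip spend 3000 ms on handshakes without keep-alive and 150 ms with it.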

In the server block:

    keepalive_timeout 75;
    keepalive_requests 100;

HTTP/2: more secure HTTP, faster HTTPS

Many websites have now enabled HTTP/2. One of its biggest advantages is that it is fully compatible with HTTP/1. The HTTP/2 protocol itself does not require SSL, but browsers only use HTTP/2 over SSL. Header compression, virtual "stream" transmission, multiplexing and other techniques make full use of bandwidth, reduce latency, and greatly improve the browsing experience. Enabling it in Nginx is quite simple:

    server {
        # on nginx 1.25.1 and later, use a separate "http2 on;" directive instead
        listen 443 ssl http2;
        ssl_certificate /etc/nginx/conf.d/ssl/xxx.com.pem;
        ssl_certificate_key /etc/nginx/conf.d/ssl/xxx.com.key;
        ssl_ciphers ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-RSA-CHACHA20-POLY1305:ECDHE+AES128:!MD5:!SHA1; # drop insecure cipher suites
        ssl_prefer_server_ciphers on; # mitigate the BEAST attack
    }

HSTS: reduce redirects

Most websites now use HTTPS, but users generally do not type https when entering a URL, so they still arrive on port 80. We usually redirect them with rewrite:

    server {
        listen 80;
        server_name test.com;
        rewrite ^(.*)$ https://$host$1 permanent;
    }

But this redirect adds network cost: one extra request. What if I want the browser to go straight to HTTPS next time? We can use HSTS. The port 80 server stays unchanged, and we add this to the server on port 443:

    add_header Strict-Transport-Security "max-age=15768000; includeSubDomains;";

This tells the browser: this site must be accessed strictly over HTTPS; for the next max-age seconds, HTTP is not allowed, so go straight to HTTPS next time. The browser is then redirected from port 80 only on its first visit, and afterwards it accesses the site directly over HTTPS (if includeSubDomains is specified, the rule also applies to all subdomains of the site).
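What the browser does with this header can be sketched roughly as follows. This is a simplified model, not a browser's real implementation: `parseHsts`, `shouldUpgrade`, the `entry` record, and the subdomain `api.test.com` are all made up for illustration.

```javascript
// Parse a Strict-Transport-Security header value
function parseHsts(value) {
  const policy = { maxAge: 0, includeSubDomains: false }
  for (const part of value.split(';')) {
    const directive = part.trim().toLowerCase()
    if (directive.startsWith('max-age=')) {
      policy.maxAge = Number(directive.slice('max-age='.length).replace(/"/g, ''))
    } else if (directive === 'includesubdomains') {
      policy.includeSubDomains = true
    }
  }
  return policy
}

// Should a request to `host` be upgraded to HTTPS?
// `entry` is a hypothetical record of what the browser remembered for entry.host.
function shouldUpgrade(host, entry, nowMs) {
  const expired = nowMs >= entry.storedAtMs + entry.policy.maxAge * 1000
  if (expired) return false
  if (host === entry.host) return true
  return entry.policy.includeSubDomains && host.endsWith('.' + entry.host)
}

const policy = parseHsts('max-age=15768000; includeSubDomains;')
const entry = { host: 'test.com', policy, storedAtMs: 0 }
const sameHost = shouldUpgrade('test.com', entry, 1000)
const subdomain = shouldUpgrade('api.test.com', entry, 1000)
const afterExpiry = shouldUpgrade('test.com', entry, 15768000 * 1000)
console.log(policy, sameHost, subdomain, afterExpiry)
```

Within max-age the host and its subdomains are upgraded without touching port 80; once the policy expires, the next plain HTTP visit goes through the redirect again and the header is stored anew.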

Session Ticket: HTTPS session reuse

We know that the SSL handshake takes a lot of time in HTTPS communication: asymmetric encryption protects the generation of the session key, while the actual data is transferred with symmetric encryption. Performing a full SSL handshake on every refresh would be too expensive. Since both parties already have the session key, why not just keep communicating with it? This is session reuse.

The server encrypts the session key into a session ticket and sends it to the client. After the connection is closed, if the client connects again within the timeout period, it sends the session ticket to the server while establishing the next SSL connection. The server decrypts the session ticket and takes out the session key for encrypted communication.
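The ticket idea can be illustrated with Node's `crypto` module. This is a conceptual sketch only: real TLS session tickets have their own format and carry more state, and the names `ticketKey`, `issueTicket`, and `redeemTicket` as well as the choice of AES-256-GCM are assumptions made for this example.

```javascript
const crypto = require('crypto')

// Key known only to the server, used to protect tickets (hypothetical setup)
const ticketKey = crypto.randomBytes(32)

// Server side: wrap the session key into an opaque ticket for the client
function issueTicket(sessionKey) {
  const iv = crypto.randomBytes(12)
  const cipher = crypto.createCipheriv('aes-256-gcm', ticketKey, iv)
  const encrypted = Buffer.concat([cipher.update(sessionKey), cipher.final()])
  // Layout: 12-byte IV, 16-byte auth tag, then the ciphertext
  return Buffer.concat([iv, cipher.getAuthTag(), encrypted])
}

// Server side: recover the session key from a ticket the client sent back
function redeemTicket(ticket) {
  const iv = ticket.subarray(0, 12)
  const tag = ticket.subarray(12, 28)
  const encrypted = ticket.subarray(28)
  const decipher = crypto.createDecipheriv('aes-256-gcm', ticketKey, iv)
  decipher.setAuthTag(tag)
  return Buffer.concat([decipher.update(encrypted), decipher.final()])
}

const sessionKey = crypto.randomBytes(32) // stands in for the negotiated key
const ticket = issueTicket(sessionKey) // sent to the client, stored there
const recovered = redeemTicket(ticket) // on the next connection
console.log(recovered.equals(sessionKey))
```

The server keeps no per-client state here: everything it needs comes back inside the ticket, and only the holder of `ticketKey` can open it.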

    ssl_protocols TLSv1.2 TLSv1.3; # enable TLS 1.2 and above
    ssl_session_timeout 5m; # expiry time, in minutes
    ssl_session_tickets on; # enable Session Ticket caching for browsers

This series can only be written on weekends and after work, so updates will be slower when there is more to cover. I hope it helps. Please support it with a star, a like, or a bookmark.

The address of this article: https://xuxin123.com/web/nginx-spa-load
