This article was first published at: https://www.yuque.com/17sing

| Version | Date | Remark |
|---|---|---|
| 1.0 | 2022.1.26 | Article first published |

0. Preface

A few days ago, someone in the group asked me: "Why does Flink go through so many graph transformations between our code and the actual executable state? What is the benefit of doing this?" I had the same doubts when I first saw this design. At the time I was busy with other things, so I skimmed some material and moved on. Now that the question has come up again, I might as well clarify it in this article.

The source code in this article is based on Flink 1.14.0.

1. Layered Design

This picture is from Jark's blog: http://wuchong.me/blog/2016/05/03/flink-internals-overview/

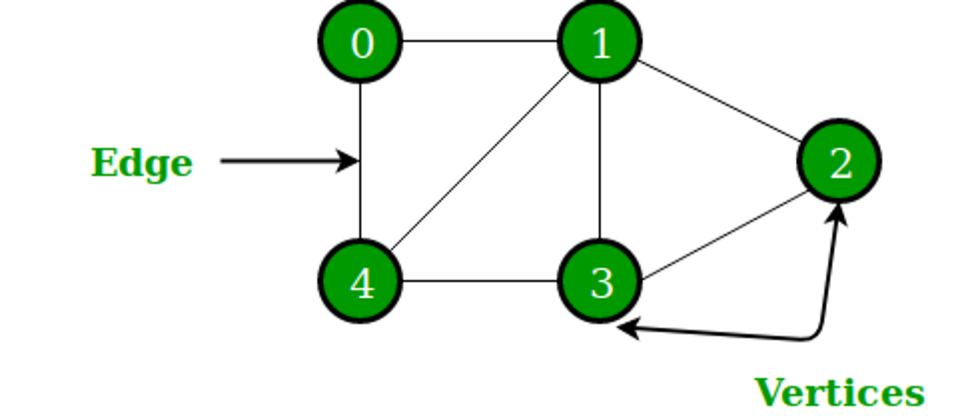

The figure above shows Flink's graph hierarchy. In the following sections we unveil the layers one by one and see why each of them exists.

1.1 OptimizedPlan of BatchAPI

In this subsection, we look at how a DataSet program is converted from a Plan to an OptimizedPlan. To give readers some context, here are a few terms:

- DataSet: User-facing batch API.

- Plan: A plan describing how DataSource and DataSink and Operation interact.

- OptimizedPlan: The optimized execution plan.

Code entry:

|-- CliFrontend#main

\-- parseAndRun

\-- runApplication

\-- getPackagedProgram

\-- buildProgram

\-- executeProgram

|-- ClientUtils#executeProgram

|-- PackagedProgram#invokeInteractiveModeForExecution

\-- callMainMethod // invokes the entry point of the user's program

|-- ExecutionEnvironment#execute

\-- executeAsync // creates the Plan

|-- PipelineExecutorFactory#execute

|-- EmbeddedExecutor#execute

\-- submitAndGetJobClientFuture

|-- PipelineExecutorUtils#getJobGraph

|-- FlinkPipelineTranslationUtil#getJobGraph

|-- FlinkPipelineTranslator#translateToJobGraph // if a Plan is passed in, the implementation first converts it to an OptimizedPlan and then to a JobGraph; if a StreamGraph is passed in, it is converted directly to a JobGraph

|-- PlanTranslator#translateToJobGraph

\-- compilePlan

Let's take a look at this code:

private JobGraph compilePlan(Plan plan, Configuration optimizerConfiguration) {

Optimizer optimizer = new Optimizer(new DataStatistics(), optimizerConfiguration);

OptimizedPlan optimizedPlan = optimizer.compile(plan);

JobGraphGenerator jobGraphGenerator = new JobGraphGenerator(optimizerConfiguration);

return jobGraphGenerator.compileJobGraph(optimizedPlan, plan.getJobId());

}

Very clear: the Plan is first compiled into an OptimizedPlan, and the OptimizedPlan is then translated into a JobGraph. For the conversion to OptimizedPlan, we look at the Optimizer#compile method. First, the comment on the method signature:

/**

* Translates the given program to an OptimizedPlan. The optimized plan describes for each

* operator which strategy to use (such as hash join versus sort-merge join), what data exchange

* method to use (local pipe forward, shuffle, broadcast), what exchange mode to use (pipelined,

* batch), where to cache intermediate results, etc,

*

* <p>The optimization happens in multiple phases:

*

* <ol>

* <li>Create optimizer dag implementation of the program.

* <p><tt>OptimizerNode</tt> representations of the PACTs, assign parallelism and compute

* size estimates.

* <li>Compute interesting properties and auxiliary structures.

* <li>Enumerate plan alternatives. This cannot be done in the same step as the interesting

* property computation (as opposed to the Database approaches), because we support plans

* that are not trees.

* </ol>

*

* @param program The program to be translated.

* @param postPasser The function to be used for post passing the optimizer's plan and setting

* the data type specific serialization routines.

* @return The optimized plan.

* @throws CompilerException Thrown, if the plan is invalid or the optimizer encountered an

* inconsistent situation during the compilation process.

*/

private OptimizedPlan compile(Plan program, OptimizerPostPass postPasser)

The comment says that the optimization happens in several phases:

- Create the optimizer DAG: build an OptimizerNode for each operator (the comment calls them PACTs, after the PACT model), assign parallelism, and compute size estimates.

- Compute interesting properties and auxiliary data structures.

- Enumerate the plan alternatives.

The implementation creates a series of Visitors, each of which traverses the program to perform one step of the optimization.

1.1.1 GraphCreatingVisitor

The first step creates a GraphCreatingVisitor, which translates the original Plan: each operator is converted into an OptimizerNode, and the OptimizerNodes are connected through DagConnections. A DagConnection is an edge model with a source and a target, so it can represent the input and output of an OptimizerNode. During this process the following things are done:

- Create an OptimizerNode for each operator. This node is closer to an execution description (it carries estimates of the data size, where the data flow splits and merges, and so on).

- Connect them with DagConnections.

- Generate the corresponding strategy according to the hints: which strategy the operator uses to execute (for example Hash Join or Sort Merge Join); whether the data exchange strategy between operators is Local Pipe Forward, Shuffle, or Broadcast; and whether the data exchange mode between operators is Pipelined or Batch.
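To make the node-and-edge structure concrete, here is a minimal sketch in plain Java. `ToyOperator`, `ToyNode`, and `ToyConnection` are hypothetical stand-ins for a user operator, OptimizerNode, and DagConnection; they are not Flink classes, and the real GraphCreatingVisitor does considerably more (parallelism, hints, iterations).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model, NOT Flink code. All names are hypothetical stand-ins. */
final class OptimizerDagSketch {

    /** A user-level operator that references its inputs, like an operator in a Plan. */
    static final class ToyOperator {
        final String name;
        final List<ToyOperator> inputs = new ArrayList<>();

        ToyOperator(String name, ToyOperator... inputs) {
            this.name = name;
            for (ToyOperator in : inputs) {
                this.inputs.add(in);
            }
        }
    }

    /** Stand-in for OptimizerNode: wraps one operator and knows its edges. */
    static final class ToyNode {
        final ToyOperator operator;
        final List<ToyConnection> incoming = new ArrayList<>();
        final List<ToyConnection> outgoing = new ArrayList<>();

        ToyNode(ToyOperator operator) {
            this.operator = operator;
        }
    }

    /** Stand-in for DagConnection: a directed edge with a source and a target. */
    static final class ToyConnection {
        final ToyNode source;
        final ToyNode target;

        ToyConnection(ToyNode source, ToyNode target) {
            this.source = source;
            this.target = target;
        }
    }

    /** Creates one node per operator (a shared operator is wrapped only once) and wires the edges. */
    static ToyNode build(ToyOperator op, Map<ToyOperator, ToyNode> created) {
        ToyNode existing = created.get(op);
        if (existing != null) {
            return existing;
        }
        ToyNode node = new ToyNode(op);
        created.put(op, node);
        for (ToyOperator input : op.inputs) {
            ToyNode inputNode = build(input, created);
            ToyConnection connection = new ToyConnection(inputNode, node);
            inputNode.outgoing.add(connection);
            node.incoming.add(connection);
        }
        return node;
    }

    public static void main(String[] args) {
        ToyOperator source = new ToyOperator("source");
        ToyOperator map = new ToyOperator("map", source);
        ToyOperator filter = new ToyOperator("filter", source);
        ToyOperator join = new ToyOperator("join", map, filter);

        Map<ToyOperator, ToyNode> created = new HashMap<>();
        ToyNode root = build(join, created);
        System.out.println(created.size() + " nodes, root has " + root.incoming.size() + " inputs");
    }
}
```

Because the source feeds both `map` and `filter`, it ends up with two outgoing connections. This is exactly the kind of branching that later visitors have to reason about.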

1.1.2 IdAndEstimatesVisitor

As the name suggests, this visitor generates an id for each operator and estimates its data volume. See OptimizerNode#computeOutputEstimates for the implementation of the estimate. It is an abstract method; we can look at the implementation in DataSourceNode, which derives an estimate from a series of properties of the upstream data source (such as the number of rows and the size). **This code does not seem to fit well here, though: the original intention of its author appears to have been to focus on file-based sources, as the comment says: "see, if we have a statistics object that can tell us a bit about the file".**

1.1.3 UnionParallelismAndForwardEnforcer

This ensures that the parallelism of a UnionNode is aligned with its downstream, to avoid incorrect results caused by incorrect data distribution (see https://github.com/apache/flink/pull/5742 ).

1.1.4 BranchesVisitor

Computes the unclosed branches of the DAG, that is, downstream sub-DAGs that have branched but have not been joined again. See the definition:

/**

* Description of an unclosed branch. An unclosed branch is when the data flow branched (one

* operator's result is consumed by multiple targets), but these different branches (targets)

* have not been joined together.

*/

public static final class UnclosedBranchDescriptor {

1.1.5 InterestingPropertyVisitor

Estimate the cost based on the properties of the Node.

See the estimation algorithm: node.computeInterestingPropertiesForInputs

- WorksetIterationNode

- TwoInputNode

- SingleInputNode

- BulkIterationNode

Then a series of execution plans will be calculated based on the cost:

// the final step is now to generate the actual plan alternatives

List<PlanNode> bestPlan = rootNode.getAlternativePlans(this.costEstimator);

Here, each OptimizerNode is turned into a PlanNode. PlanNode is the final optimized node type and carries more attributes of the node. PlanNodes are connected through Channels. A Channel is also an edge model, and it determines how data is exchanged between nodes through two attributes: ShipStrategyType and DataExchangeMode. ShipStrategyType represents the data shipping strategy between two nodes, such as whether to partition the data and whether to use hash or range partitioning. DataExchangeMode represents the mode of data exchange between two nodes, including PIPELINED and BATCH. It mirrors ExecutionMode: the ExecutionMode determines the DataExchangeMode, that is, whether data is sent on directly or written out first.

1.1.6 PlanFinalizer.createFinalPlan

The general idea of PlanFinalizer.createFinalPlan() is to add the nodes to sources, sinks, and allNodes, and possibly to set the memory used by the task of each node.

1.1.7 BinaryUnionReplacer

As the name implies, upstream operations that are themselves Unions are deduplicated, replaced, and merged together. I believe this reduces the number of Nodes generated while keeping the output equivalent.

1.1.8 RangePartitionRewriter

When using the feature of range partitioning, it is necessary to ensure that the data sets processed by each partition are balanced as much as possible to maximize the utilization of computing resources and reduce the execution time of jobs. To this end, the optimizer provides a range partition rewriter (RangePartitionRewriter) to optimize the partition strategy of range partitions to distribute data as evenly as possible and avoid data skew.

If you want to distribute the data as evenly as possible, you need an estimate of the data source, but reading all the data just for estimation is obviously not feasible. Here Flink uses an improved version of the reservoir sampling algorithm (you can refer to the paper Optimal Random Sampling from Distributed Streams Revisited), which is implemented in the code by org.apache.flink.api.java.sampling.ReservoirSamplerWithReplacement and org.apache.flink.api.java.sampling.ReservoirSamplerWithoutReplacement.

It is worth mentioning that both Plan and OptimizerNode implement the Visitable interface. This is a typical use of the visitor pattern, which makes the code very flexible: as the comments say, different visitors can perform different operations while the traversal code is written only once.
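For readers unfamiliar with the basic idea, the following is a minimal sketch of the textbook single-machine version of reservoir sampling without replacement (often called Algorithm R). It is not Flink's implementation, which is a distributed variant; the class name `ReservoirSketch` and its `sample` method are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Random;

/** Textbook reservoir sampling (Algorithm R). NOT Flink's distributed implementation. */
final class ReservoirSketch {

    /** Returns up to k elements drawn uniformly, without replacement, in a single pass. */
    static <T> List<T> sample(Iterator<T> input, int k, Random random) {
        if (k <= 0) {
            throw new IllegalArgumentException("k must be positive");
        }
        List<T> reservoir = new ArrayList<>(k);
        long seen = 0;
        while (input.hasNext()) {
            T element = input.next();
            seen++;
            if (reservoir.size() < k) {
                // Fill phase: the first k elements are always kept.
                reservoir.add(element);
            } else {
                // Pick a slot in [0, seen); the element survives with probability k / seen.
                long slot = (long) (random.nextDouble() * seen);
                if (slot < k) {
                    reservoir.set((int) slot, element);
                }
            }
        }
        return reservoir;
    }

    public static void main(String[] args) {
        List<Integer> data = new ArrayList<>();
        for (int i = 1; i <= 1000; i++) {
            data.add(i);
        }
        // Five elements sampled in one pass, without knowing the input size up front.
        System.out.println(sample(data.iterator(), 5, new Random(42)));
    }
}
```

The key property is that the input is read only once and only k elements are held in memory, which is why sampling is a practical way to estimate range boundaries.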

package org.apache.flink.util;

import org.apache.flink.annotation.Internal;

/**

* This interface marks types as visitable during a traversal. The central method <i>accept(...)</i>

* contains the logic about how to invoke the supplied {@link Visitor} on the visitable object, and

* how to traverse further.

*

* <p>This concept makes it easy to implement for example a depth-first traversal of a tree or DAG

* with different types of logic during the traversal. The <i>accept(...)</i> method calls the

* visitor and then send the visitor to its children (or predecessors). Using different types of

* visitors, different operations can be performed during the traversal, while writing the actual

* traversal code only once.

*

* @see Visitor

*/

@Internal

public interface Visitable<T extends Visitable<T>> {

/**

* Contains the logic to invoke the visitor and continue the traversal. Typically invokes the

* pre-visit method of the visitor, then sends the visitor to the children (or predecessors) and

* then invokes the post-visit method.

*

* <p>A typical code example is the following:

*

* <pre>{@code

* public void accept(Visitor<Operator> visitor) {

* boolean descend = visitor.preVisit(this);

* if (descend) {

* if (this.input != null) {

* this.input.accept(visitor);

* }

* visitor.postVisit(this);

* }

* }

* }</pre>

*

* @param visitor The visitor to be called with this object as the parameter.

* @see Visitor#preVisit(Visitable)

* @see Visitor#postVisit(Visitable)

*/

void accept(Visitor<T> visitor);

}
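The benefit described in that comment can be seen in a small, self-contained sketch. The types below (`ToyVisitor`, `ToyNode`, `IdAssigner`, `NameCollector`) are hypothetical and only mimic the shape of Visitor and Visitable. `IdAssigner` is loosely inspired by the id-assigning part of IdAndEstimatesVisitor, but it is not Flink code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy visitor pattern, NOT Flink code. */
final class VisitorSketch {

    interface ToyVisitor {
        /** Returns false to skip this node and everything below it. */
        boolean preVisit(ToyNode node);

        void postVisit(ToyNode node);
    }

    static final class ToyNode {
        final String name;
        final List<ToyNode> inputs;

        ToyNode(String name, ToyNode... inputs) {
            this.name = name;
            this.inputs = Arrays.asList(inputs);
        }

        /** The traversal is written once, here; visitors only supply the per-node logic. */
        void accept(ToyVisitor visitor) {
            if (visitor.preVisit(this)) {
                for (ToyNode input : inputs) {
                    input.accept(visitor);
                }
                visitor.postVisit(this);
            }
        }
    }

    /** Assigns ids in post-order, so every input gets a smaller id than its consumer. */
    static final class IdAssigner implements ToyVisitor {
        final Map<ToyNode, Integer> ids = new HashMap<>();

        public boolean preVisit(ToyNode node) {
            // Do not descend into a node that already has an id (shared inputs in a DAG).
            return !ids.containsKey(node);
        }

        public void postVisit(ToyNode node) {
            ids.put(node, ids.size() + 1);
        }
    }

    /** A second visitor that reuses the same traversal and records names in pre-order. */
    static final class NameCollector implements ToyVisitor {
        final List<String> names = new ArrayList<>();

        public boolean preVisit(ToyNode node) {
            names.add(node.name);
            return true;
        }

        public void postVisit(ToyNode node) {
            // nothing to do after the inputs were visited
        }
    }

    public static void main(String[] args) {
        ToyNode source = new ToyNode("source");
        ToyNode join = new ToyNode("join", new ToyNode("map", source), new ToyNode("filter", source));

        IdAssigner assigner = new IdAssigner();
        join.accept(assigner);
        System.out.println("source id = " + assigner.ids.get(source) + ", join id = " + assigner.ids.get(join));
    }
}
```

Adding a new optimization step then means adding a new visitor class, without touching `accept`.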

1.2 StreamGraph of StreamAPI

The entry function for constructing StreamGraph is StreamGraphGenerator.generate() . This function will be called by the method StreamExecutionEnvironment.execute() that triggers the execution of the program. Like OptimizedPlan, StreamGraph is also constructed on the client side.

In this process, the program is first converted into a pipeline of Transformations and then mapped into a StreamGraph. The StreamGraph is independent of the concrete execution; its core purpose is to express the logic of the computation.

Regarding the introduction of Transformation , you can see the community's issue: https://issues.apache.org/jira/browse/FLINK-2398 . The essence is to avoid the coupling of the DataStream layer to the StreamGraph, so this layer is introduced for decoupling.

Transformation focuses on attributes that are internal to the framework, such as: name (operator name), uid (used to assign the previously persisted state back to the operator when the job is restarted), bufferTimeout, parallelism, outputType, slotSharingGroup, etc. In addition, Transformations are divided into physical Transformations and virtual Transformations, which is related to the StreamGraph implementation of the next layer.

There are two core objects of StreamGraph:

- StreamNode: It can have multiple outputs as well as multiple inputs. It is converted from a Transformation: a physical Transformation eventually becomes a StreamNode that holds an actual operator, while a virtual Transformation ends up attached to a StreamEdge.

- StreamEdge: The edge of the StreamGraph, used to connect two StreamNodes. As said above, a StreamNode can have multiple outgoing and incoming edges. A StreamEdge contains information about the side output, the partitioner, output selection (the same logic as field selection with SQL SELECT), etc.
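The split between physical and virtual Transformations can be illustrated with a toy translation of a linear pipeline. Everything here is hypothetical (`ToyTransformation`, `ToyEdge`, `ToyStreamGraph`, and the "FORWARD" default); the real StreamGraphGenerator handles arbitrary DAGs and many more Transformation types.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Toy model, NOT Flink code: virtual steps become edge properties instead of nodes. */
final class StreamGraphSketch {

    static final class ToyTransformation {
        final String name;
        final boolean virtual; // true for a step such as partitioning that runs no operator

        ToyTransformation(String name, boolean virtual) {
            this.name = name;
            this.virtual = virtual;
        }
    }

    static final class ToyEdge {
        final String source;
        final String target;
        final String partitioner;

        ToyEdge(String source, String target, String partitioner) {
            this.source = source;
            this.target = target;
            this.partitioner = partitioner;
        }
    }

    static final class ToyStreamGraph {
        final List<String> nodes = new ArrayList<>();
        final List<ToyEdge> edges = new ArrayList<>();
    }

    /** Translates a linear pipeline; a virtual step only changes the partitioner of the next edge. */
    static ToyStreamGraph translate(List<ToyTransformation> pipeline) {
        ToyStreamGraph graph = new ToyStreamGraph();
        String previousNode = null;
        String pendingPartitioner = "FORWARD"; // toy default, chosen for illustration
        for (ToyTransformation t : pipeline) {
            if (t.virtual) {
                pendingPartitioner = t.name;
                continue;
            }
            graph.nodes.add(t.name);
            if (previousNode != null) {
                graph.edges.add(new ToyEdge(previousNode, t.name, pendingPartitioner));
            }
            previousNode = t.name;
            pendingPartitioner = "FORWARD";
        }
        return graph;
    }

    public static void main(String[] args) {
        ToyStreamGraph graph = translate(Arrays.asList(
                new ToyTransformation("source", false),
                new ToyTransformation("map", false),
                new ToyTransformation("HASH", true),
                new ToyTransformation("reduce", false)));
        for (ToyEdge e : graph.edges) {
            System.out.println(e.source + " -[" + e.partitioner + "]-> " + e.target);
        }
    }
}
```

The partitioning step never shows up as a node; it only decides how the edge between `map` and `reduce` ships its data.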

The specific conversion code is in org.apache.flink.streaming.api.graph.StreamGraphGenerator , and each Transformation has corresponding conversion logic:

static {

@SuppressWarnings("rawtypes")

Map<Class<? extends Transformation>, TransformationTranslator<?, ? extends Transformation>>

tmp = new HashMap<>();

tmp.put(OneInputTransformation.class, new OneInputTransformationTranslator<>());

tmp.put(TwoInputTransformation.class, new TwoInputTransformationTranslator<>());

tmp.put(MultipleInputTransformation.class, new MultiInputTransformationTranslator<>());

tmp.put(KeyedMultipleInputTransformation.class, new MultiInputTransformationTranslator<>());

tmp.put(SourceTransformation.class, new SourceTransformationTranslator<>());

tmp.put(SinkTransformation.class, new SinkTransformationTranslator<>());

tmp.put(LegacySinkTransformation.class, new LegacySinkTransformationTranslator<>());

tmp.put(LegacySourceTransformation.class, new LegacySourceTransformationTranslator<>());

tmp.put(UnionTransformation.class, new UnionTransformationTranslator<>());

tmp.put(PartitionTransformation.class, new PartitionTransformationTranslator<>());

tmp.put(SideOutputTransformation.class, new SideOutputTransformationTranslator<>());

tmp.put(ReduceTransformation.class, new ReduceTransformationTranslator<>());

tmp.put(

TimestampsAndWatermarksTransformation.class,

new TimestampsAndWatermarksTransformationTranslator<>());

tmp.put(BroadcastStateTransformation.class, new BroadcastStateTransformationTranslator<>());

tmp.put(

KeyedBroadcastStateTransformation.class,

new KeyedBroadcastStateTransformationTranslator<>());

translatorMap = Collections.unmodifiableMap(tmp);

}

1.3 JobGraph: Unified for Stream and Batch

The code entry is almost the same as in Section 1.1: the entry class of DataSet is ExecutionEnvironment, the entry class of DataStream is StreamExecutionEnvironment, and PlanTranslator becomes StreamGraphTranslator. The conversion of a StreamGraph to a JobGraph is therefore also carried out on the client side, and its main job is optimization. One of the most important optimizations is the Operator Chain, which merges operators that satisfy the chaining conditions so that data does not have to cross threads or the network between them.

Whether operator chaining is used can be controlled explicitly in the program.
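As a rough illustration of what chaining means, the sketch below groups a linear pipeline into chains using only two conditions: the operator receives its input over a forward connection, and it has the same parallelism as its predecessor. This is a deliberate simplification with hypothetical names (`ToyOp`, `chain`); the real check in StreamingJobGraphGenerator considers more conditions, such as the chaining strategy and the slot sharing group.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Toy model of operator chaining on a linear pipeline. NOT Flink code. */
final class ChainingSketch {

    static final class ToyOp {
        final String name;
        final int parallelism;
        final boolean forwardInput; // true if the input edge neither repartitions nor rebalances

        ToyOp(String name, int parallelism, boolean forwardInput) {
            this.name = name;
            this.parallelism = parallelism;
            this.forwardInput = forwardInput;
        }
    }

    /** Returns the operator names grouped into chains; each chain would become one vertex. */
    static List<List<String>> chain(List<ToyOp> pipeline, boolean chainingEnabled) {
        List<List<String>> chains = new ArrayList<>();
        ToyOp previous = null;
        for (ToyOp op : pipeline) {
            boolean chainable = chainingEnabled
                    && previous != null
                    && op.forwardInput
                    && op.parallelism == previous.parallelism;
            if (!chainable) {
                chains.add(new ArrayList<String>()); // start a new chain
            }
            chains.get(chains.size() - 1).add(op.name);
            previous = op;
        }
        return chains;
    }

    public static void main(String[] args) {
        List<ToyOp> pipeline = Arrays.asList(
                new ToyOp("source", 2, false),
                new ToyOp("map", 2, true),
                new ToyOp("reduce", 2, false), // fed by a hash partitioner, so it cannot be chained
                new ToyOp("sink", 1, true));   // different parallelism, so it cannot be chained
        System.out.println(chain(pipeline, true));
        System.out.println(chain(pipeline, false));
    }
}
```

With chaining enabled, `source` and `map` share one chain and never exchange data over the network; with chaining disabled, every operator becomes its own vertex.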

Next, let's take a look at what a JobGraph is. First, the class comment:

/**

* The JobGraph represents a Flink dataflow program, at the low level that the JobManager accepts.

* All programs from higher level APIs are transformed into JobGraphs.

*

* <p>The JobGraph is a graph of vertices and intermediate results that are connected together to

* form a DAG. Note that iterations (feedback edges) are currently not encoded inside the JobGraph

* but inside certain special vertices that establish the feedback channel amongst themselves.

*

* <p>The JobGraph defines the job-wide configuration settings, while each vertex and intermediate

* result define the characteristics of the concrete operation and intermediate data.

*/

public class JobGraph implements Serializable {

It is a graph consisting of vertices and intermediate results. It is also the low-level representation accepted by the JobManager: all higher-level APIs are converted to a JobGraph. The objects we need to pay attention to next are JobVertex, JobEdge, and IntermediateDataSet. The input of a JobVertex is a JobEdge, and its output is an IntermediateDataSet.

1.3.1 JobVertex

After optimization, multiple StreamNodes that meet the conditions may be merged into one JobVertex, so a JobVertex contains one or more operators (interested readers can see StreamingJobGraphGenerator#buildChainedInputsAndGetHeadInputs or read the related issue: https://issues.apache.org/jira/browse/FLINK-19434 ).

1.3.2 JobEdge

JobEdge is an edge connecting an IntermediateDataSet and a JobVertex, representing a data flow channel in the JobGraph. Its upstream is an IntermediateDataSet, and its downstream is a JobVertex. Data is passed from the IntermediateDataSet to the target JobVertex through the JobEdge.

Here, we want to focus on one of its member variables:

/**

* A distribution pattern determines, which sub tasks of a producing task are connected to which

* consuming sub tasks.

*

* <p>It affects how {@link ExecutionVertex} and {@link IntermediateResultPartition} are connected

* in {@link EdgeManagerBuildUtil}

*/

public enum DistributionPattern {

/** Each producing sub task is connected to each sub task of the consuming task. */

ALL_TO_ALL,

/** Each producing sub task is connected to one or more subtask(s) of the consuming task. */

POINTWISE

}

The distribution pattern directly affects how tasks are connected to each other during execution: point-to-point, or fully connected (as in a broadcast).
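The difference between the two patterns is easiest to see by listing, for one producer subtask, which consumer subtasks it is connected to. The sketch below uses a simplified pointwise rule (contiguous groups based on integer division). It is an assumption made for illustration and not necessarily the exact assignment Flink computes in EdgeManagerBuildUtil; `ToyPattern` and `consumersOf` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of distribution patterns. NOT Flink code; the pointwise rule is simplified. */
final class DistributionSketch {

    enum ToyPattern { ALL_TO_ALL, POINTWISE }

    /** Returns the consumer subtask indexes that the given producer subtask connects to. */
    static List<Integer> consumersOf(ToyPattern pattern, int producer, int producers, int consumers) {
        List<Integer> result = new ArrayList<>();
        for (int c = 0; c < consumers; c++) {
            boolean connected;
            if (pattern == ToyPattern.ALL_TO_ALL) {
                // Every producer subtask feeds every consumer subtask.
                connected = true;
            } else if (producers <= consumers) {
                // Fewer producers than consumers: each producer feeds a contiguous group.
                connected = (c * producers / consumers) == producer;
            } else {
                // More producers than consumers: each producer feeds exactly one consumer.
                connected = (producer * consumers / producers) == c;
            }
            if (connected) {
                result.add(c);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(consumersOf(ToyPattern.ALL_TO_ALL, 0, 2, 4));
        System.out.println(consumersOf(ToyPattern.POINTWISE, 0, 2, 4));
        System.out.println(consumersOf(ToyPattern.POINTWISE, 1, 2, 4));
    }
}
```

ALL_TO_ALL produces producers × consumers connections, while POINTWISE keeps the number of connections proportional to the larger of the two parallelisms.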

1.3.3 IntermediateDataSet

The intermediate data set IntermediateDataSet is a logical structure used to represent the output of the JobVertex, that is, the data set generated by the operators contained in the JobVertex. Here we need to focus on ResultPartitionType:

- Blocking: As the name suggests, the upstream first finishes producing the data, and only then is it handed over to the downstream for processing. This kind of partition can be consumed multiple times and concurrently. It is not destroyed automatically after consumption; the scheduler decides when it can be released.

- BlockingPersistent: Similar to Blocking, but its life cycle is specified by the user. It is destroyed by calling the JobManager or ResourceManager API and is not controlled by the scheduler.

- Pipelined: A streaming exchange pattern that can be used for both bounded and unbounded streams. Data in this type of partition can be consumed only once by each consumer, and the partition may hold an arbitrary amount of data.

- PipelinedBounded: Unlike Pipelined, this partition holds a limited amount of data, so data and checkpoint barriers are not delayed for too long. It is therefore suitable for stream computing scenarios (note that there is no CheckpointBarrier in batch mode).

- PipelinedApproximate: A type introduced in 1.12 to support fast failover of a single task. Interested readers can read the related issue: https://issues.apache.org/jira/browse/FLINK-18112 .

Under different execution modes, the corresponding result partition types are different, which determines the mode of data exchange at execution time.

The number of IntermediateDataSets is the same as the number of outgoing edges of the StreamNode corresponding to the JobVertex, which can be one or more.

1.4 ExecutionGraph

After the JobManager receives the JobGraph submitted by the client and its dependent Jar, it will start to schedule and run the task, but the JobGraph is still a logical graph, which needs to be further transformed into a parallelized and schedulable execution graph. This action is done by JobMaster - triggered by SchedulerBase, and the actual action is handed over to DefaultExecutionGraphBuilder#buildGraph . In these actions, ExecutionJobVertex (logical concept) and ExecutionVertex corresponding to JobVertex, IntermediateResult (logical concept) and IntermediateResultPartition corresponding to IntermediateDataSet, etc. will be generated. The so-called parallelism will also be achieved through the above classes.

Next, I will talk about some details of ExecutionGraph, which will involve some logical concepts, so I drew a picture here for easy reference.

1.4.1 ExecutionJobVertex and ExecutionVertex

ExecutionJobVertex corresponds one-to-one to a JobVertex in the JobGraph. It also contains a set of ExecutionVertex objects, whose number matches the parallelism of the StreamNodes contained in the JobVertex: as shown in the figure above, if the parallelism is N, there are N ExecutionVertex instances. Each parallel instance is therefore an ExecutionVertex. The output IntermediateResult of the ExecutionVertex is constructed at the same time.

So ExecutionJobVertex is more like a logical concept.

1.4.2 IntermediateResult and IntermediateResultPartition

IntermediateResult represents the output of an ExecutionJobVertex and corresponds to the IntermediateDataSet in the JobGraph. This object is also a logical concept. Similarly, an ExecutionJobVertex can have multiple intermediate results, depending on how many JobEdges the current JobVertex has.

An intermediate result contains multiple intermediate result partitions, the number of which is equal to the parallelism of the JobVertex, that is, the parallelism of the operator. Each IntermediateResultPartition represents the output of one ExecutionVertex.
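The relationship between the logical and the parallel objects can be sketched as a simple expansion. The classes below are hypothetical stand-ins (`ToyJobVertex`, `ToyExecutionJobVertex`) that only model the counting: one ExecutionVertex per subtask, one IntermediateResult per produced data set, and one partition per subtask within each result.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of expanding a logical vertex into parallel instances. NOT Flink code. */
final class ExecutionGraphSketch {

    /** Stand-in for JobVertex: a name, a parallelism, and a number of produced data sets. */
    static final class ToyJobVertex {
        final String name;
        final int parallelism;
        final int producedDataSets;

        ToyJobVertex(String name, int parallelism, int producedDataSets) {
            this.name = name;
            this.parallelism = parallelism;
            this.producedDataSets = producedDataSets;
        }
    }

    /** Stand-in for ExecutionJobVertex: holds the parallel instances and their results. */
    static final class ToyExecutionJobVertex {
        final List<String> executionVertices = new ArrayList<>();
        /** One inner list per intermediate result; each entry is one partition. */
        final List<List<String>> intermediateResults = new ArrayList<>();

        ToyExecutionJobVertex(ToyJobVertex vertex) {
            for (int subtask = 0; subtask < vertex.parallelism; subtask++) {
                executionVertices.add(vertex.name + "#" + subtask);
            }
            for (int dataSet = 0; dataSet < vertex.producedDataSets; dataSet++) {
                List<String> partitions = new ArrayList<>();
                for (int subtask = 0; subtask < vertex.parallelism; subtask++) {
                    // Each partition is the output of exactly one parallel instance.
                    partitions.add(vertex.name + "/result-" + dataSet + "/partition-" + subtask);
                }
                intermediateResults.add(partitions);
            }
        }
    }

    public static void main(String[] args) {
        ToyExecutionJobVertex ejv = new ToyExecutionJobVertex(new ToyJobVertex("map", 3, 2));
        System.out.println(ejv.executionVertices);
        System.out.println(ejv.intermediateResults);
    }
}
```

A vertex with parallelism 3 and 2 produced data sets therefore yields 3 execution vertices and 2 results with 3 partitions each.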

1.4.3 Execution

ExecutionVertex corresponds to a Task at runtime. An ExecutionVertex is wrapped as an Execution when it is actually executed.

How a JobGraph is submitted to the JobMaster is not the focus of this article. Interested readers can check the call stack around org.apache.flink.runtime.dispatcher.DispatcherGateway#submitJob by themselves.

1.4.5 From JobGraph to ExecutionGraph

Several important concepts were introduced above. Next, let's take a look at the construction process of the ExecutionGraph. The primary method to refer to is org.apache.flink.runtime.executiongraph.DefaultExecutionGraph#attachJobGraph .

The first step builds the ExecutionJobVertex (see its constructor): it sets the parallelism, the slot sharing group, and the CoLocationGroup, builds the IntermediateResult and IntermediateResultPartition objects, creates the ExecutionVertex instances according to the parallelism, and checks whether any IntermediateResult is referenced more than once. Finally, splittable data sources are split.

The second step builds the edges (see org.apache.flink.runtime.executiongraph.EdgeManagerBuildUtil#connectVertexToResult). An EdgeManager is created according to the DistributionPattern, and ExecutionVertex and IntermediateResult are associated with each other. The physical data transmission channels between Tasks at runtime are established on this basis.

1.4.6 Appetizers: From ExecutionGraph to Real Execution

Once the JobMaster has generated the ExecutionGraph, the job enters the scheduling phase. This involves different scheduling strategies, resource requests, task deployment, and failover management. There is so much involved that it will be discussed in a separate article. Readers who are curious can first look at DefaultExecutionGraphDeploymentTest#setupScheduler. The code there is relatively simple, and you can observe the process from ExecutionGraph to scheduling.

private SchedulerBase setupScheduler(JobVertex v1, int dop1, JobVertex v2, int dop2)

throws Exception {

v1.setParallelism(dop1);

v2.setParallelism(dop2);

v1.setInvokableClass(BatchTask.class);

v2.setInvokableClass(BatchTask.class);

DirectScheduledExecutorService executorService = new DirectScheduledExecutorService();

// execution graph that executes actions synchronously

final SchedulerBase scheduler =

SchedulerTestingUtils.newSchedulerBuilder(

JobGraphTestUtils.streamingJobGraph(v1, v2),

ComponentMainThreadExecutorServiceAdapter.forMainThread())

.setExecutionSlotAllocatorFactory(

SchedulerTestingUtils.newSlotSharingExecutionSlotAllocatorFactory())

.setFutureExecutor(executorService)

.setBlobWriter(blobWriter)

.build();

final ExecutionGraph eg = scheduler.getExecutionGraph();

checkJobOffloaded((DefaultExecutionGraph) eg);

// schedule, this triggers mock deployment

scheduler.startScheduling();

Map<ExecutionAttemptID, Execution> executions = eg.getRegisteredExecutions();

assertEquals(dop1 + dop2, executions.size());

return scheduler;

}

2. Summary

Through this article, we have seen why each layer of graph exists:

- StreamGraph and OptimizedPlan: They take the program from the external API to the internal API and generate the basic properties of the graph. In the case of batch processing, a series of optimizations is also performed.

- JobGraph: The graph that unifies stream and batch. Some general optimizations are done here, such as the Operator Chain.

- ExecutionGraph: The execution-level graph. Its construction deals with a large number of execution details, such as parallelism, the validity of the checkpoint configuration, metrics instrumentation, duplicate reference checking, and the splitting of splittable data sources.

Through the layering of graphs, Flink places different optimization items and inspection items at the appropriate level, which is also the embodiment of the single responsibility principle.
