Preface

Apache ShardingSphere is an ecosystem of open source distributed database solutions. It consists of three products that can be deployed independently or in a mixed deployment. This article focuses on ShardingSphere-JDBC and looks at data sharding, distributed primary keys, distributed transactions, read-write splitting, and elastic scaling.

Introduction

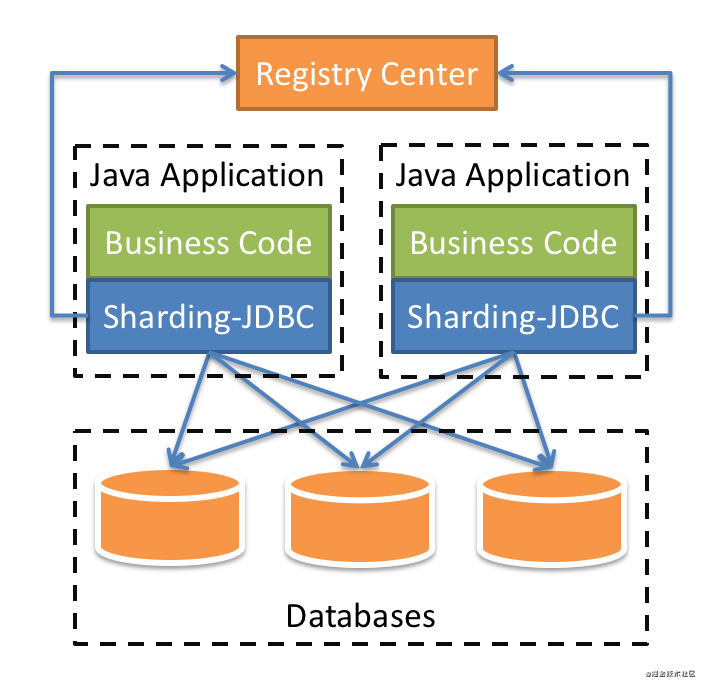

ShardingSphere-JDBC is positioned as a lightweight Java framework and provides additional services in the JDBC layer of Java. It uses the client to directly connect to the database and provides services in the form of jar packages without additional deployment and dependencies. It can be understood as an enhanced version of the JDBC driver, fully compatible with JDBC and various ORM frameworks. The overall architecture diagram is as follows (from the official website):

ShardingSphere-JDBC contains many functional modules, including data sharding, distributed primary keys, distributed transactions, read-write splitting, and elastic scaling. As database middleware, its core function is data sharding. ShardingSphere-JDBC provides many strategies and algorithms for splitting databases and tables; let's take a look at how to use them.

Data sharding

As developers, we want the middleware to shield us from the underlying details, so that working with sharded databases and tables feels as simple as working with a single database and a single table. ShardingSphere-JDBC does not disappoint here: it introduces the concepts of sharded data sources and logical tables.

Sharded data source and logical table

- Logical table: a logical table is defined relative to the physical tables. Usually one table is split into several; for example, the order table is split into 10 tables, t_order_0 to t_order_9, and the corresponding logical table is t_order. Developers only need to use the logical table;

- Sharded data source: sharding usually involves multiple databases, that is, multiple data sources, so these data sources need to be managed in a unified way. This introduces the concept of a sharded data source, the common one being ShardingDataSource.

These two basic concepts alone are not enough; we also need sharding strategies and algorithms to handle routing. Still, the two concepts already give developers the feel of using a single database and a single table, as the following simple example shows:

DataSource dataSource = ShardingDataSourceFactory.createDataSource(dataSourceMap, shardingRuleConfig,

new Properties());

Connection conn = dataSource.getConnection();

String sql = "select id,user_id,order_id from t_order where order_id = 103";

PreparedStatement preparedStatement = conn.prepareStatement(sql);

ResultSet set = preparedStatement.executeQuery();

Based on the list of real data sources and the sharding strategy, an abstract data source is generated, which can simply be understood as ShardingDataSource; the operations that follow are no different from ordinary JDBC against a single database and a single table.

Sharding Strategy Algorithm

ShardingSphere-JDBC introduces two concepts for sharding: the sharding algorithm and the sharding strategy. The sharding key is also a core concept in the sharding process. Put simply: sharding strategy = sharding algorithm + sharding key. As for why it is designed this way, presumably ShardingSphere-JDBC wanted more flexibility and abstracted the sharding algorithm separately so that developers can extend it.
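To make "strategy = algorithm + key" a little more concrete, here is a minimal sketch in plain Java. The names SimpleShardingAlgorithm, SimpleShardingStrategy, and StrategySketch are hypothetical and much simpler than ShardingSphere-JDBC's real types; the point is only that the strategy holds the sharding column while the routing logic is plugged in separately.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.List;

// Hypothetical, simplified type; not ShardingSphere's real interface.
interface SimpleShardingAlgorithm {
    String route(Collection<String> targets, long shardingValue);
}

// A strategy is just a sharding key plus a pluggable algorithm.
final class SimpleShardingStrategy {
    private final String shardingColumn;
    private final SimpleShardingAlgorithm algorithm;

    SimpleShardingStrategy(String shardingColumn, SimpleShardingAlgorithm algorithm) {
        this.shardingColumn = shardingColumn;
        this.algorithm = algorithm;
    }

    String getShardingColumn() {
        return shardingColumn;
    }

    String route(Collection<String> targets, long value) {
        return algorithm.route(targets, value);
    }
}

class StrategySketch {
    // Modulo algorithm: pick the target whose name ends with value % targetCount.
    static final SimpleShardingAlgorithm MODULO = (targets, value) -> {
        String suffix = String.valueOf(Math.floorMod(value, (long) targets.size()));
        for (String t : targets) {
            if (t.endsWith(suffix)) {
                return t;
            }
        }
        throw new IllegalStateException("no target for value " + value);
    };

    public static void main(String[] args) {
        List<String> dbs = Arrays.asList("ds0", "ds1");
        SimpleShardingStrategy byUser = new SimpleShardingStrategy("user_id", MODULO);
        System.out.println(byUser.getShardingColumn() + "=7 -> " + byUser.route(dbs, 7));
    }
}
```

Swapping MODULO for another lambda changes the routing without touching the strategy class, which is the flexibility the separation is after.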

Sharding Algorithm

ShardingSphere-JDBC provides the abstract sharding algorithm type ShardingAlgorithm, which is further divided by type into the precise sharding algorithm, the range sharding algorithm, the compound sharding algorithm, and the Hint sharding algorithm:

- Precise sharding algorithm: corresponds to the PreciseShardingAlgorithm class and mainly handles sharding for = and IN;

- Range sharding algorithm: corresponds to the RangeShardingAlgorithm class and mainly handles sharding for BETWEEN AND, >, <, >=, and <=;

- Compound sharding algorithm: corresponds to the ComplexKeysShardingAlgorithm class and handles scenarios where multiple keys are used together as sharding keys;

- Hint sharding algorithm: corresponds to the HintShardingAlgorithm class and handles sharding specified through Hint;

All of the above are interfaces; the concrete implementation is left to the developer.

Sharding strategy

The sharding strategies basically correspond to the sharding algorithms above. They include the standard, compound, Hint, inline, and none sharding strategies:

- Standard sharding strategy: corresponds to the StandardShardingStrategy class, which provides two sharding algorithms, PreciseShardingAlgorithm and RangeShardingAlgorithm; PreciseShardingAlgorithm is required and RangeShardingAlgorithm is optional;

public final class StandardShardingStrategy implements ShardingStrategy {
    private final String shardingColumn;
    private final PreciseShardingAlgorithm preciseShardingAlgorithm;
    private final RangeShardingAlgorithm rangeShardingAlgorithm;
}

- Compound sharding strategy: corresponds to the ComplexShardingStrategy class, which provides the ComplexKeysShardingAlgorithm sharding algorithm; as the code shows, multiple sharding keys are supported;

public final class ComplexShardingStrategy implements ShardingStrategy {
    @Getter
    private final Collection<String> shardingColumns;
    private final ComplexKeysShardingAlgorithm shardingAlgorithm;
}

- Hint sharding strategy: corresponds to the HintShardingStrategy class; the sharding value is specified through Hint instead of being extracted from the SQL, and the HintShardingAlgorithm sharding algorithm is provided;

public final class HintShardingStrategy implements ShardingStrategy {
    @Getter
    private final Collection<String> shardingColumns;
    private final HintShardingAlgorithm shardingAlgorithm;
}

- Inline sharding strategy: corresponds to the InlineShardingStrategy class; no sharding algorithm is provided, and routing rules are implemented through expressions;

- None sharding strategy: corresponds to the NoneShardingStrategy class and performs no sharding;

Sharding strategy configuration classes

In practice we do not use the sharding strategy classes above directly. ShardingSphere-JDBC provides a corresponding strategy configuration class for each of them:

- StandardShardingStrategyConfiguration

- ComplexShardingStrategyConfiguration

- HintShardingStrategyConfiguration

- InlineShardingStrategyConfiguration

- NoneShardingStrategyConfiguration

Hands-on

With the basic concepts above in place, let's walk through a simple hands-on example for each sharding strategy. First, prepare the databases and tables.

Preparation

Prepare two databases, ds0 and ds1; each database contains two tables, t_order0 and t_order1:

CREATE TABLE `t_order0` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`user_id` bigint(20) NOT NULL,

`order_id` bigint(20) NOT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

Prepare real data sources

We have two data sources here, both configured in Java code:

// Configure the real data sources

Map<String, DataSource> dataSourceMap = new HashMap<>();

// Configure the first data source

BasicDataSource dataSource1 = new BasicDataSource();

dataSource1.setDriverClassName("com.mysql.jdbc.Driver");

dataSource1.setUrl("jdbc:mysql://localhost:3306/ds0");

dataSource1.setUsername("root");

dataSource1.setPassword("root");

dataSourceMap.put("ds0", dataSource1);

// Configure the second data source

BasicDataSource dataSource2 = new BasicDataSource();

dataSource2.setDriverClassName("com.mysql.jdbc.Driver");

dataSource2.setUrl("jdbc:mysql://localhost:3306/ds1");

dataSource2.setUsername("root");

dataSource2.setPassword("root");

dataSourceMap.put("ds1", dataSource2);

The two data sources configured here are ordinary data sources; the dataSourceMap is finally handed over to ShardingDataSourceFactory to manage.

Table rule configuration

The table rule configuration class TableRuleConfiguration contains five elements: logical tables, real data nodes, database fragmentation strategy, data table fragmentation strategy, and distributed primary key generation strategy;

TableRuleConfiguration orderTableRuleConfig = new TableRuleConfiguration("t_order", "ds${0..1}.t_order${0..1}");

orderTableRuleConfig.setDatabaseShardingStrategyConfig(

new StandardShardingStrategyConfiguration("user_id", new MyPreciseSharding()));

orderTableRuleConfig.setTableShardingStrategyConfig(

new StandardShardingStrategyConfiguration("order_id", new MyPreciseSharding()));

orderTableRuleConfig.setKeyGeneratorConfig(new KeyGeneratorConfiguration("SNOWFLAKE", "id"));

- Logical table: the logical table configured here is t_order, and the corresponding physical tables are t_order0 and t_order1;

- Real data nodes: an inline (row) expression is used here to simplify the configuration; the configuration above is equivalent to:

ds0
├── t_order0
└── t_order1
ds1
├── t_order0
└── t_order1

- Database sharding strategy: of the five sharding strategies described above, StandardShardingStrategyConfiguration is used here. It requires a sharding key and a sharding algorithm; the precise sharding algorithm is used:

public class MyPreciseSharding implements PreciseShardingAlgorithm<Integer> {
    @Override
    public String doSharding(Collection<String> availableTargetNames, PreciseShardingValue<Integer> shardingValue) {
        // The remainder of the sharding value divided by 2 selects the target suffix
        Integer index = shardingValue.getValue() % 2;
        for (String target : availableTargetNames) {
            if (target.endsWith(index + "")) {
                return target;
            }
        }
        return null;
    }
}

Here shardingValue carries the actual value of user_id, which is taken modulo 2; availableTargetNames is {ds0, ds1}; the data source whose name ends with the remainder is the one the statement is routed to;

- Table sharding strategy: the sharding key (order_id) differs from the one used by the database sharding strategy; everything else is the same;

- Distributed primary key generation strategy: ShardingSphere-JDBC provides several distributed primary key generation strategies, described in detail later; the snowflake algorithm is used here;
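To see how the inline expression ds${0..1}.t_order${0..1} used for the real data nodes above turns into four nodes, here is a small sketch. It is an assumption-laden toy: the class InlineExpressionSketch is hypothetical and only understands numeric ${a..b} ranges, whereas the real inline expressions are far more general.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class InlineExpressionSketch {
    // Matches a numeric range such as ${0..1}.
    private static final Pattern RANGE = Pattern.compile("\\$\\{(\\d+)\\.\\.(\\d+)\\}");

    // Expands every ${a..b} range, leftmost first, into the cartesian product of names.
    static List<String> expand(String expression) {
        Matcher m = RANGE.matcher(expression);
        if (!m.find()) {
            List<String> single = new ArrayList<>();
            single.add(expression);
            return single;
        }
        int from = Integer.parseInt(m.group(1));
        int to = Integer.parseInt(m.group(2));
        String head = expression.substring(0, m.start());
        String tail = expression.substring(m.end());
        List<String> result = new ArrayList<>();
        for (int i = from; i <= to; i++) {
            // Recurse on the remainder so that later ranges are expanded too.
            for (String rest : expand(tail)) {
                result.add(head + i + rest);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // prints [ds0.t_order0, ds0.t_order1, ds1.t_order0, ds1.t_order1]
        System.out.println(expand("ds${0..1}.t_order${0..1}"));
    }
}
```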

Configure sharding rules

The sharding rule configuration class ShardingRuleConfiguration contains several configuration items: table rule configuration, binding table configuration, broadcast table configuration, default data source name, default database sharding strategy, default table sharding strategy, default primary key generation strategy, master-slave rule configuration, and encryption rule configuration:

- Table rule configuration tableRuleConfigs: the database and table sharding strategies configured above; this is also the most commonly used configuration;

- Binding table configuration bindingTableGroups: refers to a main table and sub-tables that share the same sharding rules; multi-table join queries between binding tables do not produce Cartesian-product joins, which greatly improves join efficiency;

- Broadcast table configuration broadcastTables: tables that exist in all sharded data sources, with identical structure and data in every database; suitable for small tables that need to be joined with very large tables;

- Default data source name defaultDataSourceName: tables without sharding configuration are located through the default data source;

- Default database sharding strategy defaultDatabaseShardingStrategyConfig: the table rule configuration can set a database sharding strategy; if it does not, the default configured here is used;

- Default table sharding strategy defaultTableShardingStrategyConfig: the table rule configuration can set a table sharding strategy; if it does not, the default configured here is used;

- Default primary key generation strategy defaultKeyGeneratorConfig: the table rule configuration can set a primary key generation strategy; if it does not, the default configured here is used; UUID and SNOWFLAKE generators are built in;

- Master-slave rule configuration masterSlaveRuleConfigs: used for read-write splitting; one master database and multiple slave databases can be configured, and a load-balancing strategy can be set for choosing among the slaves when reading;

- Encryption rule configuration encryptRuleConfig: encrypts certain sensitive data, providing a complete, secure, transparent, and low-cost data encryption integration solution;

Data insertion

With the preparation done, we can operate on the database; here we perform an insert:

DataSource dataSource = ShardingDataSourceFactory.createDataSource(dataSourceMap, shardingRuleConfig,

new Properties());

Connection conn = dataSource.getConnection();

String sql = "insert into t_order (user_id,order_id) values (?,?)";

PreparedStatement preparedStatement = conn.prepareStatement(sql);

for (int i = 1; i <= 10; i++) {

preparedStatement.setInt(1, i);

preparedStatement.setInt(2, 100 + i);

preparedStatement.executeUpdate();

}

The ShardingDataSource is created from the real data sources, sharding rules, and properties configured above. After that, you can work with the sharded databases and tables as if they were a single database and a single table: the SQL uses the logical table directly, and the sharding algorithm routes each statement based on the concrete values.

After routing, rows with odd values go to ds1.t_order1 and rows with even values go to ds0.t_order0. The example above uses the most common precise sharding algorithm; let's continue with the other sharding algorithms.
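The routing result of the insert loop can be reproduced without a database. The sketch below assumes only the remainder-by-2 rule from MyPreciseSharding (database chosen by user_id, table by order_id); the class name ModuloRoutingSketch is made up for illustration.

```java
class ModuloRoutingSketch {
    // Mirrors the remainder-by-2 rule: database from user_id, table from order_id.
    static String route(long userId, long orderId) {
        return "ds" + (userId % 2) + ".t_order" + (orderId % 2);
    }

    public static void main(String[] args) {
        // Same values as the insert loop: user_id = i, order_id = 100 + i.
        for (int i = 1; i <= 10; i++) {
            System.out.println("user_id=" + i + ", order_id=" + (100 + i)
                    + " -> " + route(i, 100 + i));
        }
    }
}
```

Because user_id and order_id always have the same parity in this data set, only ds0.t_order0 and ds1.t_order1 receive rows; ds0.t_order1 and ds1.t_order0 stay empty.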

Sharding Algorithm

The precise sharding algorithm introduced above uses PreciseShardingValue. ShardingSphere-JDBC provides a corresponding ShardingValue for each sharding algorithm:

- PreciseShardingValue

- RangeShardingValue

- ComplexKeysShardingValue

- HintShardingValue

Range sharding algorithm

This is used for range queries, such as the following SQL:

select * from t_order where order_id>2 and order_id<9

The two bounds 2 and 9 are saved directly into RangeShardingValue. Since user_id is not specified here for database routing, both databases are accessed;

public class MyRangeSharding implements RangeShardingAlgorithm<Integer> {

@Override

public Collection<String> doSharding(Collection<String> availableTargetNames,

RangeShardingValue<Integer> shardingValue) {

Collection<String> result = new LinkedHashSet<>();

Range<Integer> range = shardingValue.getValueRange();

// Lower and upper bounds of the range

int lower = range.lowerEndpoint();

int upper = range.upperEndpoint();

for (int i = lower; i <= upper; i++) {

Integer index = i % 2;

for (String target : availableTargetNames) {

if (target.endsWith(index + "")) {

result.add(target);

}

}

}

return result;

}

}

As you can see, every value between the lower and upper bounds is taken modulo 2 and checked against the real tables for a match.
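Walking every value in the range gets expensive when the range is wide, yet with two targets the result is complete after at most two consecutive values. The following sketch shows one possible variation that stops as soon as every target has matched. It is not the author's implementation: RangeRoutingSketch is a hypothetical name, and the caller is assumed to have already turned the open interval (2, 9) into the inclusive integer bounds 3 and 8.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.List;

class RangeRoutingSketch {
    // Collects every target whose suffix equals value % targets.size() for some
    // value in [lower, upper]; stops early once all targets have been matched.
    static Collection<String> route(List<String> targets, long lower, long upper) {
        Collection<String> result = new LinkedHashSet<>();
        for (long v = lower; v <= upper && result.size() < targets.size(); v++) {
            String suffix = String.valueOf(Math.floorMod(v, (long) targets.size()));
            for (String t : targets) {
                if (t.endsWith(suffix)) {
                    result.add(t);
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> tables = Arrays.asList("t_order0", "t_order1");
        System.out.println(route(tables, 3, 8)); // both tables, found after two values
        System.out.println(route(tables, 4, 4)); // only t_order0
    }
}
```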

Compound sharding algorithm

Multiple shard keys can be used at the same time, for example, user_id and order_id can be used as shard keys at the same time;

orderTableRuleConfig.setDatabaseShardingStrategyConfig(

new ComplexShardingStrategyConfiguration("order_id,user_id", new MyComplexKeySharding()));

orderTableRuleConfig.setTableShardingStrategyConfig(

new ComplexShardingStrategyConfiguration("order_id,user_id", new MyComplexKeySharding()));

As shown above, two sharding keys separated by a comma are specified when configuring the database and table sharding strategies; the sharding algorithm is as follows:

public class MyComplexKeySharding implements ComplexKeysShardingAlgorithm<Integer> {

@Override

public Collection<String> doSharding(Collection<String> availableTargetNames,

ComplexKeysShardingValue<Integer> shardingValue) {

Map<String, Collection<Integer>> map = shardingValue.getColumnNameAndShardingValuesMap();

Collection<Integer> userMap = map.get("user_id");

Collection<Integer> orderMap = map.get("order_id");

List<String> result = new ArrayList<>();

// Shard using both the user_id and order_id sharding keys

for (Integer userId : userMap) {

for (Integer orderId : orderMap) {

int suffix = (userId+orderId) % 2;

for (String s : availableTargetNames) {

if (s.endsWith(suffix+"")) {

result.add(s);

}

}

}

}

return result;

}

}

Hint sharding algorithm

In some application scenarios, the sharding condition does not exist in the SQL but in external business logic. In that case you can specify the sharding condition programmatically through HintManager, and the condition only takes effect in the current thread;

// Set the database and table sharding strategies

orderTableRuleConfig.setDatabaseShardingStrategyConfig(new HintShardingStrategyConfiguration(new MyHintSharding()));

orderTableRuleConfig.setTableShardingStrategyConfig(new HintShardingStrategyConfiguration(new MyHintSharding()));

// Manually set the sharding conditions

int hitKey1[] = { 2020, 2021, 2022, 2023, 2024 };

int hitKey2[] = { 3020, 3021, 3022, 3023, 3024 };

DataSource dataSource = ShardingDataSourceFactory.createDataSource(dataSourceMap, shardingRuleConfig,

new Properties());

Connection conn = dataSource.getConnection();

for (int i = 1; i <= 5; i++) {

final int index = i;

new Thread(new Runnable() {

@Override

public void run() {

try {

HintManager hintManager = HintManager.getInstance();

String sql = "insert into t_order (user_id,order_id) values (?,?)";

PreparedStatement preparedStatement = conn.prepareStatement(sql);

// Add the database and table sharding values respectively

hintManager.addDatabaseShardingValue("t_order", hitKey1[index - 1]);

hintManager.addTableShardingValue("t_order", hitKey2[index - 1]);

preparedStatement.setInt(1, index);

preparedStatement.setInt(2, 100 + index);

preparedStatement.executeUpdate();

} catch (SQLException e) {

e.printStackTrace();

}

}

}).start();

}

In the example above the sharding conditions are set manually; the sharding algorithm is as follows:

public class MyHintSharding implements HintShardingAlgorithm<Integer> {

@Override

public Collection<String> doSharding(Collection<String> availableTargetNames,

HintShardingValue<Integer> shardingValue) {

List<String> shardingResult = new ArrayList<>();

for (String targetName : availableTargetNames) {

String suffix = targetName.substring(targetName.length() - 1);

Collection<Integer> values = shardingValue.getValues();

for (int value : values) {

if (value % 2 == Integer.parseInt(suffix)) {

shardingResult.add(targetName);

}

}

}

return shardingResult;

}

}
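The statement that a hint "only takes effect in the current thread" is easy to picture with a ThreadLocal. The sketch below is a hypothetical stand-in (SimpleHintHolder is not ShardingSphere's HintManager, and its internals are an assumption made purely for illustration); it shows values set in one thread being invisible to another and being cleared on close.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a hint manager; not ShardingSphere's HintManager.
final class SimpleHintHolder implements AutoCloseable {
    private static final ThreadLocal<List<Integer>> VALUES =
            ThreadLocal.withInitial(ArrayList::new);

    static SimpleHintHolder open() {
        return new SimpleHintHolder();
    }

    void addValue(int value) {
        VALUES.get().add(value);
    }

    // Returns a copy of the hint values visible to the calling thread.
    static List<Integer> current() {
        return new ArrayList<>(VALUES.get());
    }

    @Override
    public void close() {
        VALUES.remove(); // clear the hint so it cannot leak into later work
    }
}

class HintScopeSketch {
    public static void main(String[] args) throws InterruptedException {
        try (SimpleHintHolder hint = SimpleHintHolder.open()) {
            hint.addValue(2020);
            List<Integer> seenByOther = new ArrayList<>();
            Thread other = new Thread(() -> seenByOther.addAll(SimpleHintHolder.current()));
            other.start();
            other.join();
            System.out.println("this thread:  " + SimpleHintHolder.current()); // [2020]
            System.out.println("other thread: " + seenByOther);                // []
        }
        System.out.println("after close:  " + SimpleHintHolder.current());     // []
    }
}
```

This is also why the insert example sets its hint values inside each worker thread's run() method rather than once in the main thread.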

No sharding

Configure NoneShardingStrategyConfiguration :

orderTableRuleConfig.setDatabaseShardingStrategyConfig(new NoneShardingStrategyConfiguration());

orderTableRuleConfig.setTableShardingStrategyConfig(new NoneShardingStrategyConfiguration());

In this way the data is inserted into every table of every database, which can be understood as behaving like a broadcast table.

Distributed primary key

When rows spread across multiple databases and tables need a unique primary key, a distributed primary key is required. The built-in primary key generators are UUID and SNOWFLAKE.

UUID

Keys are generated directly with UUID.randomUUID(), so the primary key follows no particular pattern; the corresponding primary key generation class is UUIDShardingKeyGenerator.

SNOWFLAKE

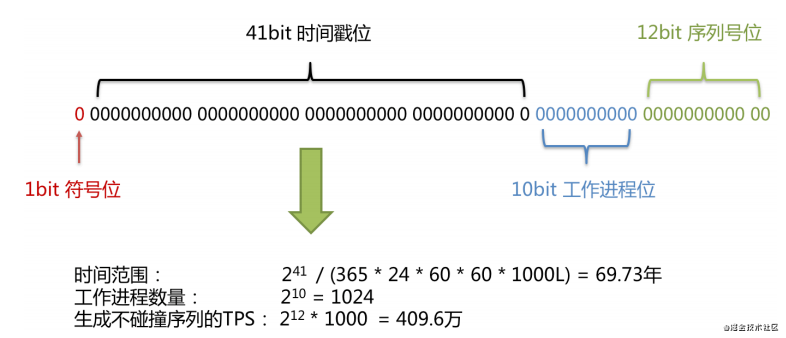

Implementation class: SnowflakeShardingKeyGenerator ; the primary key generated by the snowflake algorithm, the binary representation form contains 4 parts, from high to low sub-table: 1bit sign bit, 41bit timestamp bit, 10bit working process bit and 12bit sequence Number; picture from the official website:
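The 1 + 41 + 10 + 12 bit layout can be illustrated with a few shifts and masks. This is only a sketch of the layout, not SnowflakeShardingKeyGenerator itself: the class SnowflakeLayoutSketch is hypothetical, the timestamp is passed in as an offset in milliseconds from some chosen epoch, and clock handling and sequence rollover are left out.

```java
class SnowflakeLayoutSketch {
    static final int SEQUENCE_BITS = 12;
    static final int WORKER_BITS = 10;
    static final int TIMESTAMP_SHIFT = SEQUENCE_BITS + WORKER_BITS; // 22

    // Packs the three fields into one long. Callers are assumed to pass values
    // that fit their bit widths; the sign bit then stays 0.
    static long compose(long timestampOffsetMillis, long workerId, long sequence) {
        return (timestampOffsetMillis << TIMESTAMP_SHIFT)
                | (workerId << SEQUENCE_BITS)
                | sequence;
    }

    static long timestampOf(long id) {
        return id >>> TIMESTAMP_SHIFT;
    }

    static long workerOf(long id) {
        return (id >>> SEQUENCE_BITS) & ((1L << WORKER_BITS) - 1);
    }

    static long sequenceOf(long id) {
        return id & ((1L << SEQUENCE_BITS) - 1);
    }

    public static void main(String[] args) {
        long id = compose(1000L, 3L, 7L);
        System.out.println("timestamp offset = " + timestampOf(id)); // 1000
        System.out.println("worker id        = " + workerOf(id));    // 3
        System.out.println("sequence         = " + sequenceOf(id));  // 7
    }
}
```

Because the timestamp occupies the highest bits, keys generated later compare greater than earlier ones, which is what makes snowflake keys roughly time-ordered.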

Extension

Implement the ShardingKeyGenerator interface to provide your own primary key generator:

public interface ShardingKeyGenerator extends TypeBasedSPI {

Comparable<?> generateKey();

}

Hands-on

It is also very simple to use, just use KeyGeneratorConfiguration directly to configure the corresponding algorithm type and field name:

orderTableRuleConfig.setKeyGeneratorConfig(new KeyGeneratorConfiguration("SNOWFLAKE", "id"));

The snowflake generator is used here, and the generated column is id; the results are as follows:

mysql> select * from t_order0;
+--------------------+---------+----------+
| id                 | user_id | order_id |
+--------------------+---------+----------+
| 589535589984894976 |       0 |        0 |
| 589535590504988672 |       2 |        2 |
| 589535590718898176 |       4 |        4 |
+--------------------+---------+----------+

Distributed transaction

Using distributed transactions in ShardingSphere-JDBC is no different from using local transactions; distributed transactions are provided transparently. The supported transaction types are local transactions, XA transactions, and flexible (BASE) transactions; the default is local transactions.

public enum TransactionType {

LOCAL, XA, BASE

}

Dependencies

Depending on whether XA transactions or flexible transactions are used, different modules need to be added:

<dependency>
    <groupId>org.apache.shardingsphere</groupId>
    <artifactId>sharding-transaction-xa-core</artifactId>
</dependency>
<dependency>
    <groupId>org.apache.shardingsphere</groupId>
    <artifactId>shardingsphere-transaction-base-seata-at</artifactId>
</dependency>

Implementation

ShardingSphere-JDBC provides a distributed transaction manager ShardingTransactionManager , which includes:

- XAShardingTransactionManager: XA-based distributed transaction manager;

- SeataATShardingTransactionManager: Seata-based distributed transaction manager;

The specific implementation of XA's distributed transaction manager includes: Atomikos, Narayana, Bitronix; the default is Atomikos;

Hands-on

The default transaction type is TransactionType.LOCAL. ShardingSphere-JDBC inherently works with multiple data sources, and the LOCAL mode simply commits the transaction of each data source in a loop, so data consistency cannot be guaranteed; this is why distributed transactions are needed. Usage is also very simple:

// Change the transaction type to XA

TransactionTypeHolder.set(TransactionType.XA);

DataSource dataSource = ShardingDataSourceFactory.createDataSource(dataSourceMap, shardingRuleConfig,

new Properties());

Connection conn = dataSource.getConnection();

try {

// Disable auto-commit

conn.setAutoCommit(false);

String sql = "insert into t_order (user_id,order_id) values (?,?)";

PreparedStatement preparedStatement = conn.prepareStatement(sql);

for (int i = 1; i <= 5; i++) {

preparedStatement.setInt(1, i - 1);

preparedStatement.setInt(2, i - 1);

preparedStatement.executeUpdate();

}

// Commit the transaction

conn.commit();

} catch (Exception e) {

e.printStackTrace();

// Roll back the transaction

conn.rollback();

}

As you can see, it is still very simple to use. ShardingSphere-JDBC decides, according to the current transaction type, whether to commit through local transactions or through the distributed transaction manager ShardingTransactionManager.
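To see why committing each data source in a loop, as the LOCAL mode does, cannot guarantee consistency, consider the toy simulation below. Everything in it is hypothetical (FakeConnection stands in for one data source's connection); it only illustrates the failure mode, not ShardingSphere-JDBC's internals.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class LocalCommitSketch {
    // Hypothetical stand-in for the connection to one data source.
    static final class FakeConnection {
        final String name;
        final boolean failOnCommit;

        FakeConnection(String name, boolean failOnCommit) {
            this.name = name;
            this.failOnCommit = failOnCommit;
        }

        void commit() {
            if (failOnCommit) {
                throw new IllegalStateException(name + " commit failed");
            }
        }
    }

    // Commits each connection in turn and returns the names that did commit.
    static List<String> commitAll(List<FakeConnection> connections) {
        List<String> done = new ArrayList<>();
        try {
            for (FakeConnection c : connections) {
                c.commit();
                done.add(c.name);
            }
        } catch (IllegalStateException e) {
            // Connections committed earlier are already durable; nothing can undo them here.
        }
        return done;
    }

    public static void main(String[] args) {
        List<String> done = commitAll(Arrays.asList(
                new FakeConnection("ds0", false),
                new FakeConnection("ds1", true)));
        System.out.println("committed: " + done); // [ds0], so ds0 and ds1 now disagree
    }
}
```

A two-phase protocol such as XA avoids this state by asking every data source to prepare first and committing only once all of them have agreed.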

Read-write splitting

For an application with a large number of concurrent reads and comparatively few writes, the database is split into a master database and slave databases. The master handles transactional inserts, deletes, and updates, and the slaves handle queries. This effectively avoids row locks caused by data updates and greatly improves the query performance of the whole system.

Master-slave configuration

The sharding rules section above mentioned the master-slave rule configuration, whose purpose is to implement read-write splitting. The core configuration class is MasterSlaveRuleConfiguration:

public final class MasterSlaveRuleConfiguration implements RuleConfiguration {

private final String name;

private final String masterDataSourceName;

private final List<String> slaveDataSourceNames;

private final LoadBalanceStrategyConfiguration loadBalanceStrategyConfiguration;

}

- name: the configuration name; in version 4.1.0, which is used here, it must be the name of the master database;

- masterDataSourceName: the data source name of the master database;

- slaveDataSourceNames: the list of slave data sources; one master with multiple slaves can be configured;

- loadBalanceStrategyConfiguration: with multiple slaves, the load-balancing algorithm selects which one to read from;

Master-slave load-balancing algorithm: MasterSlaveLoadBalanceAlgorithm; the implementations are round-robin and random:

- ROUND_ROBIN: implementation class RoundRobinMasterSlaveLoadBalanceAlgorithm

- RANDOM: implementation class RandomMasterSlaveLoadBalanceAlgorithm
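The two selection policies are simple enough to sketch in a few lines. The class SlaveLoadBalanceSketch below is hypothetical and is not how the two implementation classes are actually written; the slave name ds02 is also made up, since the example in this article configures only one slave per master.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ThreadLocalRandom;
import java.util.concurrent.atomic.AtomicInteger;

class SlaveLoadBalanceSketch {
    private final AtomicInteger counter = new AtomicInteger();

    // Round-robin: hand out slaves in order, wrapping around at the end.
    String roundRobin(List<String> slaves) {
        int index = Math.floorMod(counter.getAndIncrement(), slaves.size());
        return slaves.get(index);
    }

    // Random: pick any slave with equal probability.
    static String random(List<String> slaves) {
        return slaves.get(ThreadLocalRandom.current().nextInt(slaves.size()));
    }

    public static void main(String[] args) {
        List<String> slaves = Arrays.asList("ds01", "ds02"); // ds02 is hypothetical
        SlaveLoadBalanceSketch lb = new SlaveLoadBalanceSketch();
        for (int i = 0; i < 4; i++) {
            System.out.println("round robin -> " + lb.roundRobin(slaves));
        }
        System.out.println("random      -> " + random(slaves));
    }
}
```

The atomic counter keeps round-robin fair under concurrent reads, and floorMod keeps the index valid even after the counter overflows.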

Hands-on

Prepare slave databases ds01 and ds11 for ds0 and ds1 respectively, and configure master-slave replication. The read-write splitting configuration is as follows:

List<String> slaveDataSourceNames0 = new ArrayList<String>();

slaveDataSourceNames0.add("ds01");

MasterSlaveRuleConfiguration masterSlaveRuleConfiguration0 = new MasterSlaveRuleConfiguration("ds0", "ds0",

slaveDataSourceNames0);

shardingRuleConfig.getMasterSlaveRuleConfigs().add(masterSlaveRuleConfiguration0);

List<String> slaveDataSourceNames1 = new ArrayList<String>();

slaveDataSourceNames1.add("ds11");

MasterSlaveRuleConfiguration masterSlaveRuleConfiguration1 = new MasterSlaveRuleConfiguration("ds1", "ds1",

slaveDataSourceNames1);

shardingRuleConfig.getMasterSlaveRuleConfigs().add(masterSlaveRuleConfiguration1);

In this way, query operations are automatically routed to the slave databases, which achieves read-write splitting.

Summary

This article focused on the data sharding function of ShardingSphere-JDBC, which is also the core function of any database middleware. Functions such as distributed primary keys, distributed transactions, and read-write splitting are of course essential too. ShardingSphere also introduces elastic scaling, which is a very eye-catching feature. Database sharding is inherently stateful: we fix the number of databases and tables at the start of a project and then route to each of them through the sharding algorithm, but business growth often exceeds our expectations, and expanding the databases and tables at that point is very troublesome. According to the official website, elastic scaling is currently in the alpha stage, and I am very much looking forward to this feature.

Reference

https://shardingsphere.apache.org/document/current/cn/overview/
