1. What is MLOps?

Machine learning operations (MLOps) builds on the principles and practices of DevOps, such as continuous integration, continuous delivery, and continuous deployment. MLOps applies these principles to the machine learning process, with the following goals:

- Experiment and develop models faster

- Deploy models to production environments faster

- Assure quality

As the name suggests, MLOps is the DevOps of the machine learning era. Its main role is to connect the model-building team with the business and operations teams, and to establish a standardized process for model development, deployment, and operations, so that organizations can make better use of machine learning to drive business growth.

To give a simple example: a few years ago, our impression of machine learning was mainly that you took a pile of Excel/CSV data, ran some model experiments in notebooks, and eventually produced a prediction. However, often nobody had a clear idea of how to use that prediction or what impact it would have on the business. This easily left machine learning projects stuck in the laboratory stage, producing one proof of concept (POC) after another without ever successfully "landing" in production.

In recent years, more attention has been paid to putting machine learning projects into production. Understanding of the business and of the model application process has improved, and more and more models have been deployed in real business scenarios. But once the business actually starts using a model, all kinds of feedback and new requirements arrive, and algorithm engineers need to iterate and redeploy frequently. As the business grows, the number of model application scenarios grows with it, and managing and maintaining so many model systems becomes a real challenge.

Looking back on this development, does it feel familiar? Twenty years ago, the software industry encountered similar challenges on its path of digital evolution. In going from deploying a single web service to deploying dozens or even hundreds of different applications, and under the challenges of delivery at scale, DevOps was born. Many best practices in software engineering, such as virtualization, cloud computing, continuous integration and release, and automated testing, are closely related to this direction. In the near future, intelligent models may be as common as today's software systems. An enterprise needs many business systems to digitize its processes, and it will likewise need many models to make data-driven, intelligent decisions. This in turn gives rise to more enterprise-level requirements around model development and operations, permissions, privacy, security, and auditing.

Therefore, MLOps has gradually become a hot topic in recent years. With good MLOps practice, algorithm engineers can focus more on the model building they are good at and spend less attention on deployment and maintenance. At the same time, it makes the direction of model development and iteration clearer and more practical, so that it generates value for the business. Today's software engineers rarely need to pay attention to details such as the runtime environment, test integration, or the release process, yet they achieve agile and efficient releases several times a day. In the future, algorithm engineers should likewise be able to focus on obtaining insights from data, while releasing a model becomes a fast, automated process they barely notice.

2. The steps of MLOps

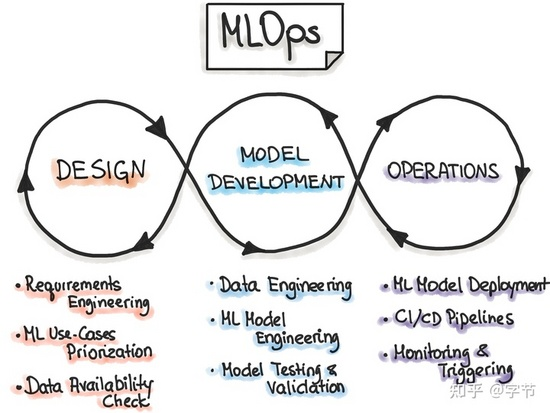

From a broad perspective, MLOps is divided into 3 steps:

- Project design, including requirement collection, scenario design, data availability check, etc.

- Model development, including data engineering, model engineering, and evaluation and verification.

- Model operations, including model deployment, CI/CD/CT workflows, monitoring, scheduling and triggering, etc.

DevOps iterates on software products faster by shortening development and deployment time, so that the company's business keeps evolving. MLOps follows the same logic: through similar automation and iteration, it speeds up the path from data to insights to value.

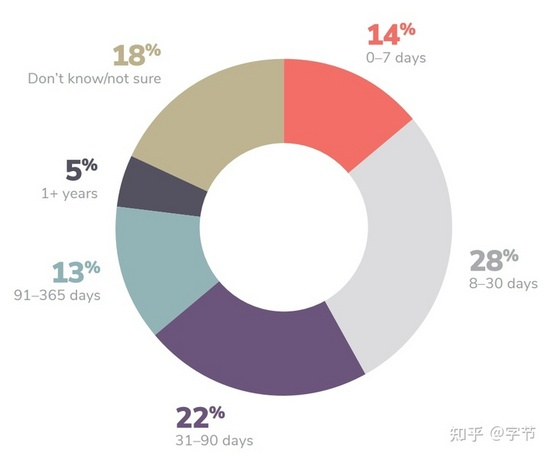

One of the core problems MLOps sets out to solve is shortening the iteration cycle of model development and deployment, that is, the demand for efficiency. The 2020 Algorithmia report shows that a large number of companies need 31-90 days to launch a model, and 18% of them need more than 90 days. Among small and medium-sized companies, algorithm engineers spend significantly more of their time on model deployment. MLOps aims to improve the overall efficiency of model delivery through more standardized and automated processes and infrastructure support.

On the other hand, MLOps also aims to provide a platform for seamless collaboration between the various roles in the enterprise, so that business, data, algorithm, operations, and other roles can work together more efficiently and increase business value. This is the demand for transparency. We will keep returning to these two core demands, efficiency and transparency, in the detailed discussion later.

3. Principles of MLOps

Automation

We should automate every step in the workflow that can be automated, from data access to final deployment. Google's classic MLOps guide proposes three levels of automation that are well worth learning from; we will introduce them in detail later.

Continuous

When it comes to DevOps, CI/CD is the first thing that comes to mind, which in itself confirms the importance of this principle. On top of continuous integration, continuous deployment, and continuous monitoring, MLOps adds the concept of continuous training: the model can be retrained and updated automatically while it is running online. When designing and developing a machine learning system, we must keep thinking about how each component supports this continuity, including the artifacts used in the process, their version management, and their orchestration.

Versioning

Version management is also one of the important best practices of DevOps. In MLOps, in addition to versioning the pipeline code, versioning data and models is a newly emerging requirement, and it poses new challenges for the underlying infrastructure.

Experiment Tracking

Experiment tracking can be understood as an enhanced form of the commit message in version control. For each code change related to model building, we should be able to record the corresponding data, the code version, and the resulting model artifacts, as an important basis for analyzing models later and for choosing which version to put online.
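
As a rough illustration of such a record, the sketch below bundles a code version, a content hash of the data, hyperparameters, metrics, and the model artifact location into one entry. The field names, the commit id, the metric value, and the file path are all made up for illustration; dedicated tools store far richer information.

```python
import hashlib
import json
import time


def fingerprint(data: bytes) -> str:
    """Return a short content hash that identifies an exact dataset snapshot."""
    return hashlib.sha256(data).hexdigest()[:12]


def make_run_record(code_version, data_bytes, params, metrics, model_path):
    """Bundle everything needed to trace a model back to its inputs."""
    return {
        "timestamp": time.time(),
        "code_version": code_version,          # e.g. a git commit hash
        "data_version": fingerprint(data_bytes),
        "params": params,
        "metrics": metrics,
        "model_artifact": model_path,
    }


record = make_run_record(
    code_version="abc1234",                    # hypothetical commit id
    data_bytes=b"user_id,label\n1,0\n2,1\n",   # tiny stand-in for a dataset
    params={"learning_rate": 0.1, "max_depth": 3},
    metrics={"auc": 0.87},                     # made-up value for illustration
    model_path="models/run-001.pkl",           # hypothetical path
)
print(json.dumps(record, indent=2))
```

Because the data version is derived from the content itself, two runs on byte-identical data share the same `data_version`, while any upstream change produces a new one.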

Testing

A machine learning system mainly involves three different pipelines: the data pipeline, the model pipeline, and the application pipeline (that is, the integration of the model with the application system). For these three pipelines we need to build corresponding data and feature tests, model tests, and application infrastructure tests, to ensure that the output of the overall system is consistent with the expected business goals and that data insights are actually turned into business value. Google's ML Test Score is a good reference here.

Monitoring

Monitoring is also a traditional best practice in software engineering, and part of the ML Test Score mentioned above relates to it. In addition to traditional system monitoring, such as logs and system resources, machine learning systems also need to monitor input data and model predictions to ensure prediction quality, and to automatically trigger response mechanisms when anomalies occur, such as downgrading the data or the model, or retraining and redeploying the model.

Reproducibility

Unlike the deterministic behavior of traditional software systems, machine learning contains many sources of randomness, which makes it harder to investigate problems, roll back versions, and guarantee consistent output. Therefore, during development we need to keep reproducibility in mind at all times and design corresponding best practices, such as setting random seeds and versioning dependencies such as the runtime environment.
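
The effect of fixing a random seed can be seen in a small sketch using only Python's standard library. The function `noisy_training_run` is a hypothetical stand-in for a training job; real frameworks usually have their own seed settings that must be fixed as well.

```python
import random


def noisy_training_run(seed: int) -> list:
    """Stand-in for a training job whose result depends on random draws."""
    rng = random.Random(seed)  # a private generator avoids hidden global state
    return [round(rng.gauss(0.0, 1.0), 6) for _ in range(3)]


first = noisy_training_run(seed=42)
second = noisy_training_run(seed=42)
other = noisy_training_run(seed=7)

print(first == second)  # same seed: the two runs can be compared directly
print(first == other)   # different seed: results diverge
```

Recording the seed alongside the other run metadata is what makes it possible to replay a run later when investigating a problem.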

4. MLOps process in detail

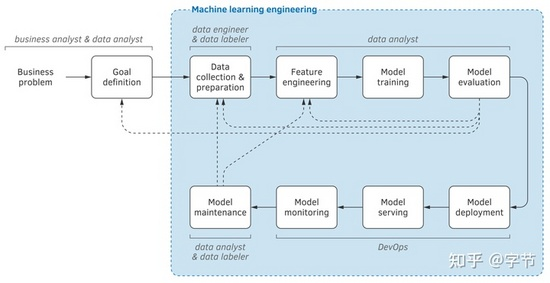

Let's walk through the stages of a concrete machine learning project and look in detail at the support MLOps needs to provide in each one.

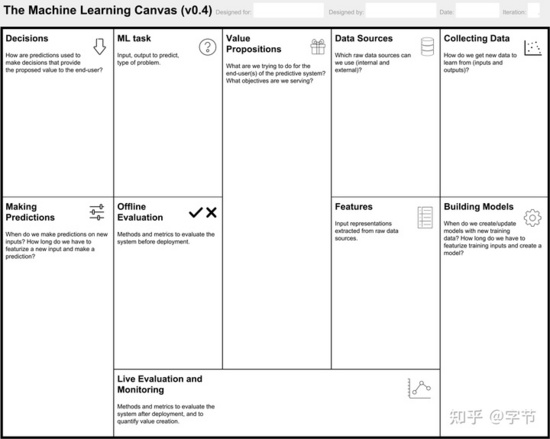

Project Design

The importance of project design is beyond doubt. We also devoted considerable space to it in our introduction to the Full Stack Deep Learning course. In MLOps, we should design a set of standards and documents for this part of the work as well. There is plenty of industry material to draw on, such as the Machine Learning Canvas and the Data Landscape.

Data access

For data access, we generally rely on mature data platforms, such as data warehouses, data lakes, or real-time data sources. For storing data once it is on the platform, priority can be given to components with data versioning support, such as Delta Lake. Alternatively, DVC or self-maintained metadata can be used to manage ML-related data assets.

Data analysis

After data access, various kinds of exploratory data analysis (EDA) are generally required. The traditional approach is interactive analysis in notebooks. However, native notebooks still fall short in many respects: saving and managing analysis results, sharing and collaboration, automatic refresh after data updates, and advanced interactive analysis. Some research projects and products have made improvements in this direction, such as Polynote, Facets, and Wrattler.

Data check

Raw data that is ingested usually suffers from various quality problems, or from changes in data type, meaning, or distribution. A machine learning pipeline will often still run successfully when the data changes, which causes unexpected "silent failures" that are very hard to troubleshoot and consume a lot of algorithm engineers' time and energy. It is therefore particularly important to set up automated data checks. TensorFlow Data Validation is a well-known library for this purpose.
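
To make the idea of an automated data check concrete, here is a minimal hand-rolled sketch in plain Python. It does not use TensorFlow Data Validation; the schema format, the `check_rows` helper, and the 10% missing-value threshold are assumptions chosen for illustration.

```python
def check_rows(rows, schema, max_null_ratio=0.1):
    """Return a list of human-readable problems found in `rows`.

    `schema` maps column name -> expected Python type.
    """
    problems = []
    for column, expected_type in schema.items():
        values = [row.get(column) for row in rows]
        nulls = sum(v is None for v in values)
        if rows and nulls / len(rows) > max_null_ratio:
            problems.append(f"{column}: {nulls}/{len(rows)} values are missing")
        wrong = [v for v in values
                 if v is not None and not isinstance(v, expected_type)]
        if wrong:
            problems.append(
                f"{column}: expected {expected_type.__name__}, got {wrong[0]!r}")
    return problems


schema = {"age": int, "country": str}
rows = [
    {"age": 31, "country": "DE"},
    {"age": "31", "country": "FR"},   # type changed upstream: str instead of int
    {"age": None, "country": "US"},   # missing value
]
for problem in check_rows(rows, schema):
    print(problem)
```

Running such a check at the start of the pipeline turns a silent failure into an explicit one: the job can stop or alert before a model is trained on the changed data.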

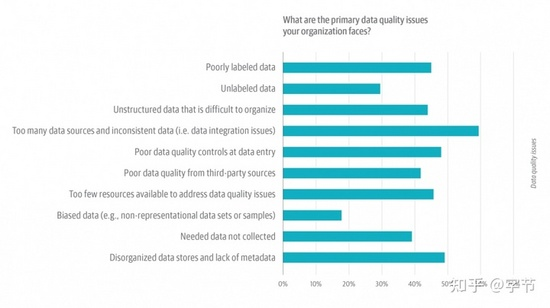

O'Reilly conducted a survey on data quality in 2020 and found that the main data problems in enterprises are as follows:

In addition to the problems above, model applications also need to detect various kinds of drift, such as changes in the distribution of the input data (data drift) or changes in the relationship between the input data and the prediction target (concept drift). To deal with these data quality problems, we need to design data quality check templates for different business domains, and run attribute-based, statistical, or even model-based checks depending on the situation.
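
One simple statistical check for data drift is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in production. PSI is a technique chosen here for illustration and is not named in the text above; the bin count and the synthetic samples are assumptions. A commonly quoted rule of thumb treats values above roughly 0.25 as a significant shift.

```python
import math


def psi(expected, actual, bins=5):
    """Population Stability Index between two numeric samples."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def histogram(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = histogram(expected), histogram(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training = [float(i % 10) for i in range(1000)]      # evenly spread over 0..9
same = [float(i % 10) for i in range(500)]           # same distribution
shifted = [float(i % 10) + 4.0 for i in range(500)]  # distribution moved right

print(round(psi(training, same), 4))
print(round(psi(training, shifted), 4))
```

Note that PSI only looks at one feature's marginal distribution, so it can flag data drift but says nothing about concept drift, which requires comparing predictions against actual outcomes.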

Data engineering

This part of the work includes data cleaning, data transformation, and feature engineering. Its share of the overall effort varies with the business, but in general it takes up a relatively large part of the model development process and brings relatively large challenges, including:

- Managing a large amount of data processing logic, including scheduling, execution, and operations.

- Managing and using data versions.

- Managing complex data dependencies, such as data lineage.

- Compatibility and logical consistency across different forms of data sources, such as handling batch and real-time sources with a lambda architecture.

- Meeting the needs of both offline and online data serving, such as offline batch prediction and online model services.

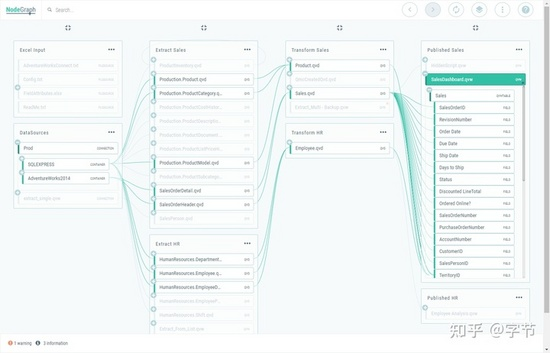

Take data lineage as an example. A common scenario is that we discover a problem in downstream data; with the lineage graph we can quickly locate its upstream dependencies and investigate each of them. After the problem is fixed, lineage also tells us which downstream nodes are affected, so that they can all be re-run and verified with the relevant tests.
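
Both lookups in this scenario are graph traversals. The sketch below uses a small hypothetical lineage graph (the dataset names are invented) and a breadth-first search to find what a dataset depends on and what it affects.

```python
from collections import deque

# Hypothetical lineage graph: each key feeds the datasets listed as its value.
LINEAGE = {
    "raw_orders": ["clean_orders"],
    "raw_users": ["clean_users"],
    "clean_orders": ["order_features"],
    "clean_users": ["user_features"],
    "order_features": ["training_set"],
    "user_features": ["training_set"],
    "training_set": [],
}


def downstream(graph, start):
    """Return every node affected by `start`, in breadth-first order."""
    seen, queue, order = {start}, deque([start]), []
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


def upstream(graph, target):
    """Return every node that `target` depends on, directly or indirectly."""
    parents = {}
    for node, children in graph.items():
        for child in children:
            parents.setdefault(child, []).append(node)
    return downstream(parents, target)


print(upstream(LINEAGE, "order_features"))   # where to look for the root cause
print(downstream(LINEAGE, "raw_orders"))     # what to re-run after the fix
```

A real scheduler would additionally re-run the affected nodes in topological order, so that each node only starts after all of its inputs have been refreshed.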

In modeling applications, many data processing and feature engineering operations are more complicated still, for example:

- A model may be needed to generate features, such as embeddings learned through representation learning.

- The trade-off between the practical cost of computing a feature and the improvement it brings to the model must be considered.

- Features need to be shared and used across organizations.

Under these challenges, the concept of the feature store has gradually emerged.

Feature stores are another big topic, so we will not expand on them here. One basic characteristic visible in the figure above is that different storage systems are chosen for feature data according to the different online and offline access patterns. In addition, downstream consumers should take feature version information into account to keep the entire process stable and reproducible.

Model building

Model building is generally the part that receives the most attention and discussion. There are many mature machine learning frameworks to help users train models and evaluate their performance. The support MLOps needs to provide here includes:

- Evaluation and analysis of results during model development, including metric and error analysis, model interpretation tools, visualization, etc.

- Management of the model's metadata: experiment information, result records (metrics, detailed data, charts), documentation (model cards), etc.

- Version management for model training, covering dependent libraries, training code, data, and the final generated model.

- Support for online model updates, offline retraining, and incremental training.

- Integration of modeling strategies, such as extracting and saving embeddings, support for stratified/ensemble models, and transfer-learning-style incremental training.

- AutoML-style automatic model search and model selection.

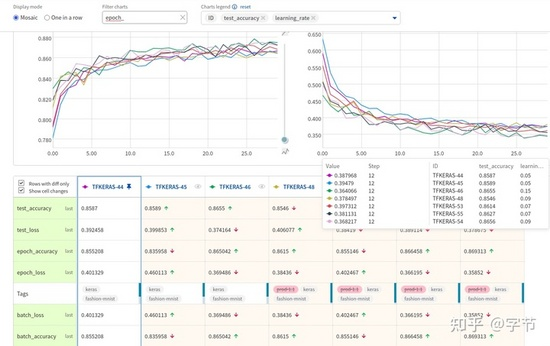

For model experiment management, products worth referring to include MLflow, neptune.ai, and Weights & Biases.

From a model-centric point of view, and analogous to the feature store, we further need a model repository that records experiment results and links them to model deployment status, online monitoring feedback, and other information. Operations related to running and maintaining models can all be supported by this component. Among open-source implementations, ModelDB is worth a look.

Integration Testing

After building the data and model pipelines, we need to run a series of tests to evaluate whether the new model can be deployed online. These include:

- Model prediction tests, such as accuracy, prediction stability, and regression tests on specific cases.

- Pipeline execution efficiency tests, such as overall execution time and computing resource overhead.

- Tests of integration with business logic, such as whether the format of the model output meets the requirements of downstream consumers.

Google's classic ML Test Score is a useful reference. It includes the following types of tests:

- Data validation tests. In addition to data quality checks on the raw input, the processing of data features inside the machine learning pipeline also needs tests to verify that it meets expectations.

- Feature importance tests. For each constructed feature, we need to confirm its contribution to the model to avoid wasting computing resources and feature storage. Useless features should be cleaned up promptly to control the overall complexity of the pipeline.

- Tests for privacy audits and related requirements.

- Model training tests. The model should be able to train effectively on the data, for example with the loss trending downward during training, and the prediction objective should correspond to an improvement in the business objective.

- Model staleness tests. Compare against older versions of the model, measure how quickly model metrics decay, and use this to design the retraining cycle.

- Model cost tests. Ensure that the extra training time of a complex model is justified by a significant improvement over simple rules and baseline models.

- Model metric tests. Ensure that the model passes validation on the test set or on specific regression cases.

- Model fairness tests. For sensitive attributes such as gender and age, the model should show fair prediction probabilities across the different groups.

- Model perturbation tests. Apply a slight perturbation to the model's input data; the range of variation in its output should meet expectations.

- Model version comparison tests. For models that have not undergone major changes, such as a routinely triggered retrain, the outputs of the two model versions should not differ too much.

- Model training framework tests. For example, running the same training twice should give stable, reproducible results.

- Model API tests, to verify the input and output of the model service.

- Integration tests, which run and verify the entire pipeline to ensure that all steps are connected correctly.

- Online tests. After the model is deployed but before it serves external traffic, the same series of verification tests as in the offline environment is required to ensure that the results are correct.
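
Two of the tests in this list, the perturbation test and the version comparison test, are easy to sketch in code. The two toy "models" below are hand-written linear scorers, and the thresholds are arbitrary; in a real project the models come from the training pipeline and the tolerances from business requirements.

```python
def predict_v1(features):
    """Toy linear scorer standing in for the current production model."""
    return 0.3 * features["age"] / 100 + 0.7 * features["income"] / 100_000


def predict_v2(features):
    """Toy retrained model with slightly different weights."""
    return 0.32 * features["age"] / 100 + 0.68 * features["income"] / 100_000


def max_perturbation_shift(predict, sample, column, delta):
    """Largest change in output when one input column is nudged by `delta`."""
    shifts = []
    for row in sample:
        nudged = dict(row, **{column: row[column] + delta})
        shifts.append(abs(predict(nudged) - predict(row)))
    return max(shifts)


def max_version_gap(old, new, sample):
    """Largest disagreement between two model versions on the same inputs."""
    return max(abs(old(row) - new(row)) for row in sample)


sample = [
    {"age": 25, "income": 40_000},
    {"age": 40, "income": 85_000},
    {"age": 63, "income": 52_000},
]

# Illustrative thresholds only; a failing assert would block the release.
assert max_perturbation_shift(predict_v2, sample, "age", delta=1) < 0.01
assert max_version_gap(predict_v1, predict_v2, sample) < 0.05
```

Written as plain assertions like this, the checks can run in the same CI job as ordinary unit tests whenever a retrained model is proposed for deployment.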

Model deployment

After passing the tests, we can deploy the model online. Deployment takes many different forms depending on the business, and different release methods need to be considered, for example:

- Batch prediction pipeline

- Real-time model service

- Edge device deployment, such as mobile apps, browsers, etc.

In addition to the model itself, the deployed assets should also include end-to-end test cases, test data, and the corresponding evaluation results. The test cases can be executed again after the model is deployed to ensure that the results in the development and deployment environments are consistent.

For models whose output is more critical, model governance requirements also need to be considered. For example, manual reviews may be required before the model is deployed, with a corresponding sign-off mechanism. Responsible AI has also received more and more attention in recent years, and every step of MLOps needs to support the corresponding functions.

Model Service

During model serving, many checks and policies are also needed to ensure the reliability and soundness of the overall output. The logic of these checks can borrow from the tests described earlier.

Model services also vary widely in form:

As a result, many topics are involved. For example, real-time model services need to consider model serialization, use of heterogeneous hardware, inference performance optimization, dynamic batching, deployment form (container, serverless), serving caches, model streaming, etc. If online updates are involved, the implementation of online learning must also be considered.

For edge deployment, we need to consider different ways of packaging the model, model compression, and so on. Hybrid serving or federated learning are also possible. For example, on a smart speaker a simple model can be deployed on the device to detect the wake-up command, while subsequent complex questions are sent to a more complex model in the cloud.

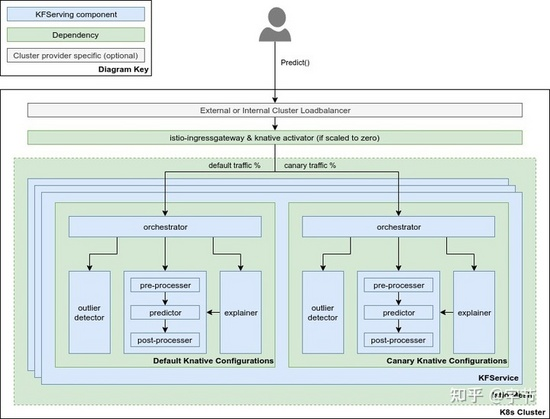

Completing the deployment steps above is generally not yet considered a formal release. Usually some strategy is used to gradually replace the old model with the new one, such as shadow models, canary deployment, A/B testing, or even automatic model selection with multi-armed bandits (MAB).
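
As one example of gradual rollout, a canary deployment sends a small, stable share of traffic to the new model. The sketch below shows one possible routing rule based on hashing a request or user id; the `route` function and the id format are assumptions for illustration, not the API of any serving framework.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Deterministically assign a request to the 'canary' or 'stable' model.

    Hashing the id keeps each caller on the same model across requests.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return "canary" if bucket < canary_percent else "stable"


assignments = [route(f"user-{i}", canary_percent=10) for i in range(10_000)]
share = assignments.count("canary") / len(assignments)
print(f"canary share: {share:.3f}")
```

Raising `canary_percent` step by step while watching the monitoring metrics gives a controlled path from the old model to the new one, and setting it back to 0 acts as a rollback.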

In the cloud-native era, Kubeflow offers a powerful set of capabilities in serverless serving, elastic scaling, traffic management, and additional components (anomaly detection, model interpretation), and is well worth studying.

Model monitoring

Finally, we need to continuously monitor the models running online, including:

- Monitoring of model dependent components, such as data version, upstream system, etc.

- Monitoring of model input data to ensure that its schema and distribution stay consistent

- Consistency monitoring between offline and online feature construction, for example by sampling some records and comparing online and offline results, or by monitoring distribution statistics

- Monitoring of the model's numerical stability, recording NaN and Inf values, etc.

- Monitoring of model computing resource overhead

- Model metric monitoring

- Monitoring of the model update cycle to ensure that no model goes without an update for a long time

- Monitoring of the amount of downstream consumption to ensure that no model is left in an "abandoned" state

- Log records useful for troubleshooting

- Information records useful for improving the model

- External attack prevention and monitoring

All of the monitoring above must be paired with corresponding automatic or manual response mechanisms.
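
Two of the checks in the list, numerical stability and the model update cycle, can be sketched in a few lines of plain Python. The function names and the 30-day limit are assumptions; a production setup would feed such values into its alerting system rather than print them.

```python
import math


def check_predictions(predictions):
    """Count non-finite outputs so they can be logged and alerted on."""
    nan_count = sum(1 for p in predictions if math.isnan(p))
    inf_count = sum(1 for p in predictions if math.isinf(p))
    return {"total": len(predictions), "nan": nan_count, "inf": inf_count}


def is_stale(last_trained_ts, now_ts, max_age_days=30):
    """Flag a model that has not been retrained within `max_age_days`."""
    return (now_ts - last_trained_ts) > max_age_days * 86_400


report = check_predictions([0.2, float("nan"), 0.9, float("inf"), 0.4])
print(report)
print(is_stale(last_trained_ts=0, now_ts=45 * 86_400))
```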

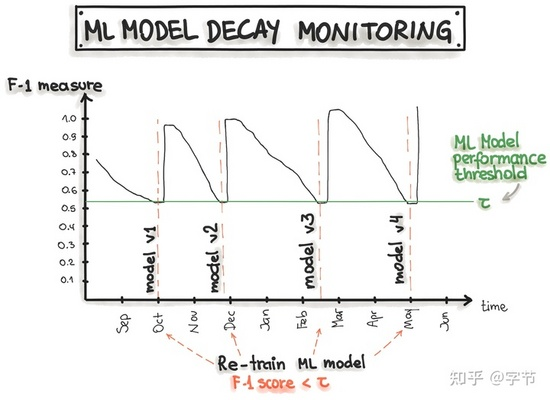

Take monitoring of model performance as an example. When performance declines, we need to step in promptly to investigate and fix the problem, or trigger retraining. For retraining, we need to weigh changes in model performance, data update frequency, training overhead, deployment overhead, and the improvement retraining is expected to bring, and choose an appropriate point in time to trigger it. Although many models also support real-time online updates, standard practices for controlling their stability and testing them automatically are still lacking. In most cases, retraining tends to give better results and stability than online updates.
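
A retraining trigger can be as simple as a rule that combines the observed drop in a metric with a minimum interval between training runs, the interval being a crude proxy for training and deployment cost. The sketch below is one such rule; the thresholds and the function name are illustrative assumptions, not recommended values.

```python
def should_retrain(baseline_metric, current_metric, days_since_last_train,
                   max_drop=0.03, min_interval_days=7):
    """Trigger retraining when quality has dropped enough and the last
    training run is not too recent (to bound training and deployment cost)."""
    dropped = (baseline_metric - current_metric) > max_drop
    cooled_down = days_since_last_train >= min_interval_days
    return dropped and cooled_down


print(should_retrain(0.90, 0.85, days_since_last_train=10))  # large drop, old model
print(should_retrain(0.90, 0.89, days_since_last_train=10))  # drop within tolerance
print(should_retrain(0.90, 0.80, days_since_last_train=2))   # retrained too recently
```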

If the problem lies in the data the model depends on, we can also design a series of degradation strategies, such as using the most recent version of known-good historical data, or dropping some non-core features and falling back to a more basic model or strategy to make predictions.
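
The feature-dropping variant of such a degradation strategy might look like the following sketch. The feature names and both toy models are invented for illustration; the point is only the order of the fallbacks.

```python
ALL_FEATURES = ["recent_clicks", "avg_basket", "days_since_signup"]
CORE_FEATURES = ["days_since_signup"]


def full_model(f):
    """Toy stand-in for the main model, which needs every feature."""
    return 0.5 * f["recent_clicks"] + 0.01 * f["avg_basket"] - 0.001 * f["days_since_signup"]


def basic_model(f):
    """Toy stand-in for a simpler model that only needs the core features."""
    return 1.0 - 0.001 * f["days_since_signup"]


def predict_with_fallback(features):
    """Use the full model when every input is present, else degrade gracefully."""
    present = {k: v for k, v in features.items() if v is not None}
    if all(name in present for name in ALL_FEATURES):
        return full_model(present), "full"
    if all(name in present for name in CORE_FEATURES):
        return basic_model(present), "basic"
    return None, "unavailable"  # the caller decides how to handle this case


healthy = {"recent_clicks": 3, "avg_basket": 40.0, "days_since_signup": 100}
degraded = {"recent_clicks": None, "avg_basket": 40.0, "days_since_signup": 100}
print(predict_with_fallback(healthy))
print(predict_with_fallback(degraded))
```

Returning the name of the model that produced the prediction makes it easy to monitor how often the system is running in degraded mode.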

There is also an interesting trade-off here. If the environment changes quickly and the cost of retraining is high, it can be worth considering a simpler model strategy, which is often less sensitive to environmental changes, at the price of some loss in performance.

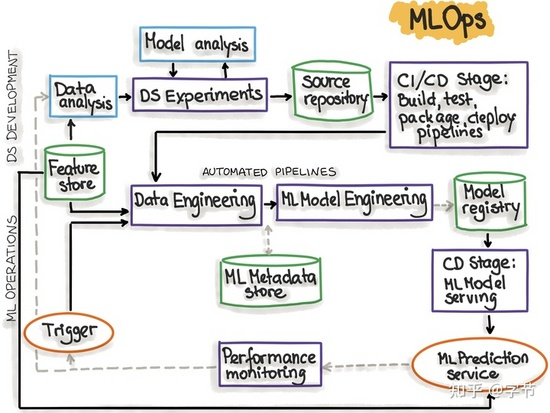

Process integration

Google's MLOps guide proposes three levels of MLOps process automation, in which the automatable parts of the stages above are chained together; it can be considered one of the best practices of MLOps. Two of the key automation enhancements are pipeline automation and CI/CD/CT automation. Another core idea is that deploying a model does not just mean deploying an API that serves the model to the outside world, but packaging and deploying the entire pipeline. Another methodology worth referring to is Martin Fowler's CD4ML, which also contains many specific suggestions for component selection.

When chaining the overall process together, some common dependencies are:

- A version control system, covering data, code, and the various machine learning artifacts.

- A test and build system, which automatically runs the corresponding tests after a version is updated and, once they pass, packages the components into images for pipeline execution.

- A deployment system, which deploys the pipeline as a whole to the target environment, including online services and clients.

- A model center, which stores trained models; this is especially important when training takes a long time.

- A feature store, which stores features and serves both batch consumption in offline scenarios and real-time queries in online scenarios.

- An ML metadata store, which stores the data generated during experiments and training, including experiment name, data used, code version, hyperparameters, and data and charts related to model predictions.

- A pipeline framework, which executes a series of workflow steps and handles scheduling, resuming from checkpoints, automatic parallelism, and so on.

Many of these components are new requirements in MLOps, and corresponding products have begun to appear in the industry, such as Michelangelo, FBLearner, BigHead, MLflow, Kubeflow, TFX, and Feast. However, these components still seem far from the level of standardization and maturity that continuous integration has reached in web development. For example, for the selection of workflow/pipeline components, you can refer to this survey. For CI/CD, traditional tools such as Jenkins, GoCD, CircleCI, and Spinnaker can basically meet the needs. You can also consider CML, from the team behind DVC, which is more tailored to machine learning scenarios. Arize AI's introduction to the overall ML infrastructure has a more comprehensive scope and can serve as a reference for selecting MLOps components. For corresponding open-source resources, see awesome production ML.

Finally, in the process of designing and selecting, you can think and plan based on the following canvas.

For developing and evolving the entire process, agile iteration is recommended. That is, first build a basic pipeline that runs end to end, using the most basic data and simple models. Then use business feedback to discover the most important improvements across the process, and deliver them iteratively.

5. Summary

Done well, MLOps brings many rewards. Personally, I see two as the most valuable. First, engineering best practices improve the efficiency with which the team develops and delivers models. Second, by reducing the cost of operating and maintaining projects, they give us the opportunity to greatly scale up machine learning applications, for example by launching thousands of models in the enterprise to generate value for various business scenarios.
