Foreword:

Goals of this Java concurrent programming learning and sharing series:

How to use the tools commonly used in Java concurrent programming;

Implementation principles and design ideas of Java concurrent programming tools;

Common problems encountered in concurrent programming and their solutions;

Choosing the most suitable tool for the actual situation to produce an efficient design.

Learning sharing team:

Xueersi Peiyou-Operation R&D Team

Java Concurrent Programming Sharing Group:

@沈健@曹伟伟@张俊勇@田新文@张晨

Sharer of this chapter: @张晨

Learning sharing outline:

01 A first look at concurrency

What is concurrency, and what is parallelism?

The JVM offers a convenient example. While the garbage collector performs concurrent marking, the JVM marks garbage and keeps serving the application's own work during the same period. That is concurrency. When multiple JVM threads carry out the collection at the same time, that is parallelism.

02 Why learn concurrent programming

Immediate reasons

1) It is a hard requirement in job descriptions (JDs)

With the rapid development of the Internet industry, concurrent programming has become a very popular field, and it is a required skill for server-side positions at major companies.

2) It is the only path from junior developer to expert

The architect is a very important role in a software development team, and becoming one is the goal of many engineers. One measure of an architect's ability is whether they can design a system that handles high concurrency, which shows how important high-concurrency technology is, and concurrent programming is its foundation. Whether in games or the wider Internet industry, in software development or large-scale websites, there is strong demand for engineers with high-concurrency skills. Learning these techniques is therefore a direct way to improve yourself for work.

3) It is an easy place to stumble in interviews

To assess a candidate's grasp of concurrent programming, interviewers often ask about concurrency safety and thread interaction: for example, how to implement a thread-safe singleton, or how to make two threads take turns executing.

The following uses double-checked locking to implement a thread-safe lazy singleton. An enum or an eagerly initialized singleton can also be used.

private static volatile Singleton singleton;

private Singleton(){};

public static Singleton getSingleton(){

if(null == singleton){

synchronized(Singleton.class){

if(null == singleton){

singleton = new Singleton();

}

}

}

return singleton;

}

The first null check avoids acquiring the lock once the instance exists, which keeps lock contention low. The field is declared volatile because the JVM may reorder the instructions behind new Singleton(), so another thread could see a non-null reference to an object whose constructor has not finished. volatile forbids that reordering and establishes a happens-before relationship between the write and later reads, so no thread observes a partially constructed instance. The thread-safe singleton leads naturally to many follow-up topics, such as the implementation principles of volatile and synchronized, the JMM, the MESI protocol, and instruction reordering. The figure below illustrates the JMM in more detail.

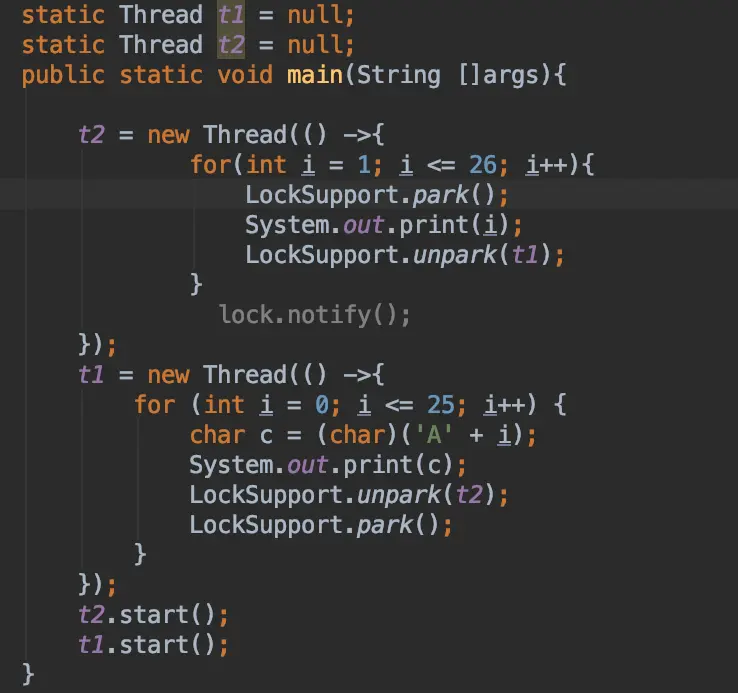
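The enum alternative mentioned earlier can be sketched as follows. The names ConfigRegistry and nextId are hypothetical and exist only for illustration. The JVM instantiates an enum constant exactly once, during class initialization, so this form needs neither explicit locking nor volatile.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example of an enum-based singleton.
public enum ConfigRegistry {
    INSTANCE;

    // State owned by the single instance
    private final AtomicInteger counter = new AtomicInteger();

    // Returns a new id on every call; safe to call from any thread
    public int nextId() {
        return counter.incrementAndGet();
    }
}
```

Callers use ConfigRegistry.INSTANCE.nextId(). The trade-off compared with the lazy version is that the instance is created when the enum class is first initialized, not on the first explicit request.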

Beyond thread safety, another common question is to use two threads to print A1B2C3...Z26 alternately.

The point of the question is not simply to implement the function but to probe how broadly the candidate understands concurrency. There are several possible solutions: AtomicInteger, ReentrantLock, and CountDownLatch can all be used. LockSupport is another way to control the alternation. Internally, LockSupport uses Unsafe to manage a per-thread permit that switches between 0 and 1, which is enough to make two threads take turns.
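A minimal sketch of the LockSupport approach, under a few assumptions: the class name AlternatePrinter and the letterTurn flag are illustrative, and the output is collected in a buffer so it can be checked. The flag is re-tested in a loop because park() is allowed to return spuriously.

```java
import java.util.concurrent.locks.LockSupport;

public class AlternatePrinter {
    // true when it is the letter thread's turn; volatile so both threads see updates
    private static volatile boolean letterTurn = true;

    public static String print() throws InterruptedException {
        letterTurn = true;
        StringBuffer out = new StringBuffer();   // thread-safe buffer shared by both threads
        Thread[] threads = new Thread[2];

        threads[0] = new Thread(() -> {
            for (char c = 'A'; c <= 'Z'; c++) {
                while (!letterTurn) {
                    LockSupport.park();          // may wake spuriously, so re-check the flag
                }
                out.append(c);
                letterTurn = false;              // hand the turn to the number thread
                LockSupport.unpark(threads[1]);
            }
        });
        threads[1] = new Thread(() -> {
            for (int n = 1; n <= 26; n++) {
                while (letterTurn) {
                    LockSupport.park();
                }
                out.append(n);
                letterTurn = true;               // hand the turn back to the letter thread
                LockSupport.unpark(threads[0]);
            }
        });

        threads[0].start();
        threads[1].start();
        threads[0].join();
        threads[1].join();
        return out.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(print());
    }
}
```

Because unpark() grants a permit even when the target has not parked yet, a wakeup sent just before the other thread calls park() is not lost.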

4) Concurrency is everywhere in the frameworks we use at work: Tomcat, Netty, the JVM, Disruptor

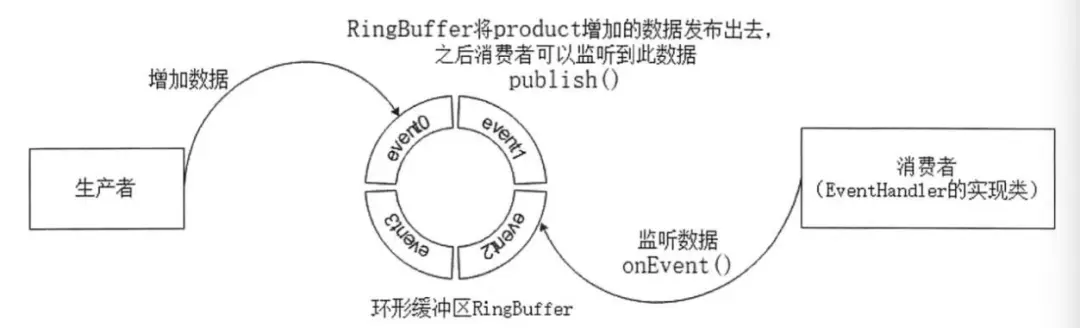

Familiarity with the basics of Java concurrent programming is the cornerstone for understanding the internals of these frameworks. Here is a brief outline of how the high-concurrency framework Disruptor works:

In an article on LMAX, Martin Fowler describes this high-performance asynchronous processing framework as handling up to 6 million orders per second on a single thread

Disruptor core concepts

Disruptor features

- Event-driven

- Built on the observer pattern and the producer-consumer model

- Queue operations without locks

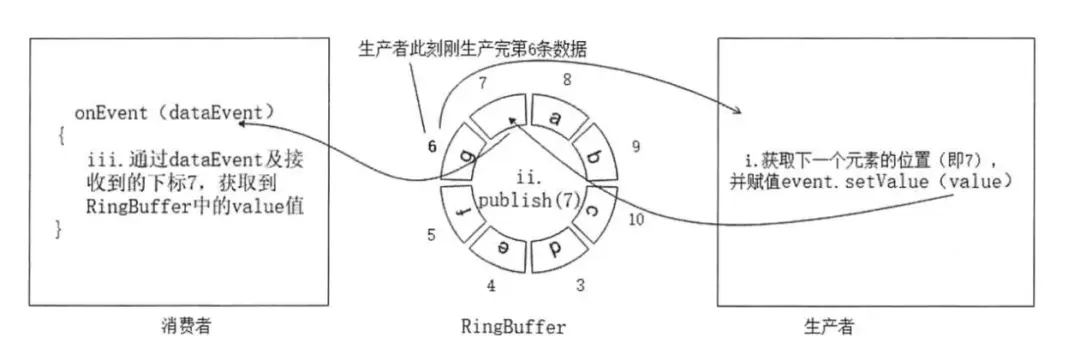

RingBuffer execution process

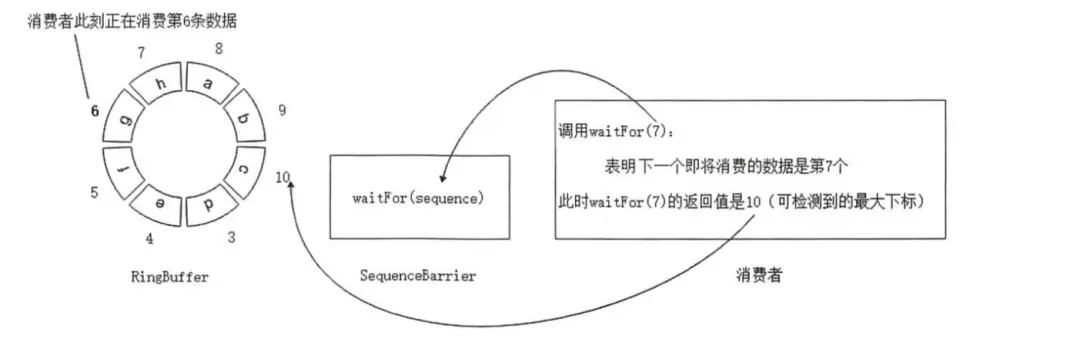

Two components underlie the Disruptor and are closely tied to the RingBuffer: SequenceBarrier and Sequencer.

SequenceBarrier is the bridge between consumers and the RingBuffer. In the Disruptor, consumers access the SequenceBarrier directly, and the SequenceBarrier reduces contention on the RingBuffer's queue.

When consumers run faster than producers, a consumer calls the SequenceBarrier's waitFor method to give the producer time to catch up, which coordinates the speed of the two sides.

Sequencer is the bridge between producers and the RingBuffer. A producer asks the Sequencer for a slot in the RingBuffer, writes its data, and calls publish, which notifies consumers through the WaitStrategy. The WaitStrategy defines how a consumer waits when there is no data to consume. Each producer or consumer thread first claims the position of an element in the array and then writes or reads directly at that position. The whole process relies on CAS operations on atomic variables for thread safety. This is the Disruptor's lock-free design.
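The idea of claiming a slot with CAS instead of a lock can be sketched as below. This is a simplified illustration and not the Disruptor API: SlotClaimer, next, and indexOf are assumed names, and the sketch leaves out the check that stops a producer from overwriting slots consumers have not read yet.

```java
import java.util.concurrent.atomic.AtomicLong;

// Simplified sketch, not the Disruptor API: producers claim ring-buffer slots with CAS.
public class SlotClaimer {
    private final AtomicLong cursor = new AtomicLong(-1); // last claimed sequence
    private final int bufferSize;                          // assumed to be a power of two

    public SlotClaimer(int bufferSize) {
        if (Integer.bitCount(bufferSize) != 1) {
            throw new IllegalArgumentException("bufferSize must be a power of two");
        }
        this.bufferSize = bufferSize;
    }

    // Claim the next sequence number without taking a lock.
    public long next() {
        while (true) {
            long current = cursor.get();
            long next = current + 1;
            if (cursor.compareAndSet(current, next)) {
                return next;           // this thread now owns the slot for `next`
            }
            // another thread won the race; read the cursor again and retry
        }
    }

    // Map a sequence number to an array index in the ring.
    public int indexOf(long sequence) {
        return (int) (sequence & (bufferSize - 1));
    }
}
```

Each caller of next() receives a distinct sequence, so two producers never write to the same slot at the same time, and no thread ever blocks while holding a lock.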

Here are four common waiting strategies:

- BlockingWaitStrategy: The Disruptor's default strategy. Internally it uses a lock and a condition to control thread wakeup. It is the slowest strategy, but it uses the least CPU and gives the most consistent behavior across different deployment environments.

- SleepingWaitStrategy: Performance and CPU usage are similar to BlockingWaitStrategy, but it has the least impact on the producer thread. It waits in a loop that calls LockSupport.parkNanos(1).

- YieldingWaitStrategy: One of the strategies suitable for low-latency systems. It spins until the sequence reaches the required value, calling Thread.yield() inside the loop so that other queued threads can run. It is recommended when very high performance is required and the number of event-handler threads is smaller than the number of logical CPU cores, for example when hyper-threading is enabled.

- BusySpinWaitStrategy: The best performance, suitable for low-latency systems. It is recommended when very high performance is required and the number of event-handler threads is smaller than the number of physical CPU cores, for example when hyper-threading is disabled.
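The difference between the non-blocking strategies comes down to what the consumer does inside its wait loop. The sketch below is a hypothetical illustration, not the Disruptor's WaitStrategy interface: WaitStyles and its method names are assumptions, the cursor is modeled as a plain AtomicLong, and Thread.onSpinWait() requires Java 9 or later. The lock-and-condition variant is left out for brevity.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.locks.LockSupport;

// Hypothetical sketch of three waiting styles; each returns the cursor value it observed.
public class WaitStyles {
    // Busy spin: lowest latency, occupies a core for the whole wait
    public static long busySpin(AtomicLong cursor, long target) {
        long seen;
        while ((seen = cursor.get()) < target) {
            Thread.onSpinWait();          // hint to the CPU that this is a spin loop
        }
        return seen;
    }

    // Yielding: still spins, but offers the core to other runnable threads
    public static long yielding(AtomicLong cursor, long target) {
        long seen;
        while ((seen = cursor.get()) < target) {
            Thread.yield();
        }
        return seen;
    }

    // Sleeping: parks briefly between checks, trading latency for lower CPU usage
    public static long sleeping(AtomicLong cursor, long target) {
        long seen;
        while ((seen = cursor.get()) < target) {
            LockSupport.parkNanos(1L);
        }
        return seen;
    }
}
```

All three loops return as soon as the cursor has reached the requested sequence. They differ only in how much CPU they consume while the producer is behind.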

Many well-known projects, including Apache Storm, Camel, and Log4j2, use the Disruptor to achieve high performance.

5) JUC is the signature work of concurrency master Doug Lea and can be called a model of its kind (as the first mainstream attempt of its type, it raised the level of abstraction beyond threads, locks, and events to something more approachable: concurrent collections, fork/join, and so on)

Studying the design thinking behind concurrent programming lets you take advantage of what multithreading offers:

- Exploiting the power of multiple processors

- Simpler modeling

- Simplified handling of asynchronous events

- More responsive user interfaces

So what problems arise if you do not learn the basics of concurrent programming?

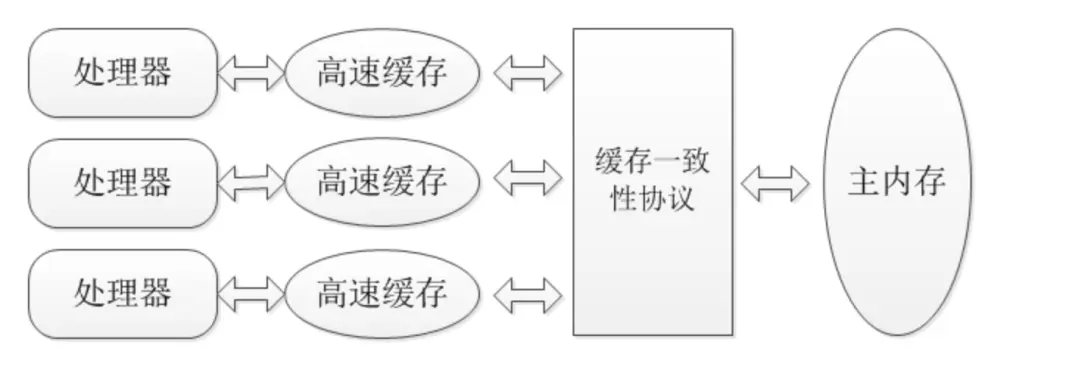

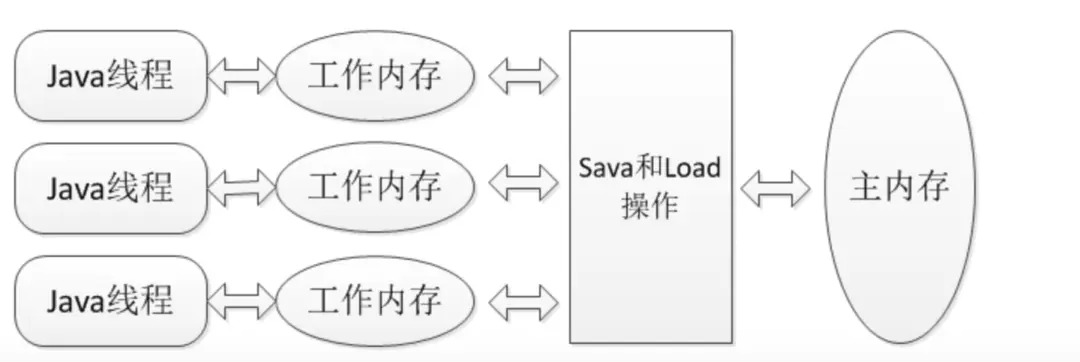

1) Multithreading is used everywhere in daily development (the JVM, Tomcat, Netty), and learning Java concurrent programming is the prerequisite for understanding and mastering such tools and frameworks in depth. Because CPU speed and memory I/O speed differ by several orders of magnitude, modern computers add a cache whose speed is as close as possible to the processor's to act as a buffer: the data needed for an operation is first copied from memory into the cache, and the result is synchronized back to memory when the operation completes.

Because the JVM must run across hardware platforms, it defines its own memory model. That model is ultimately mapped onto the hardware, so the JVM memory model closely resembles the hardware model:

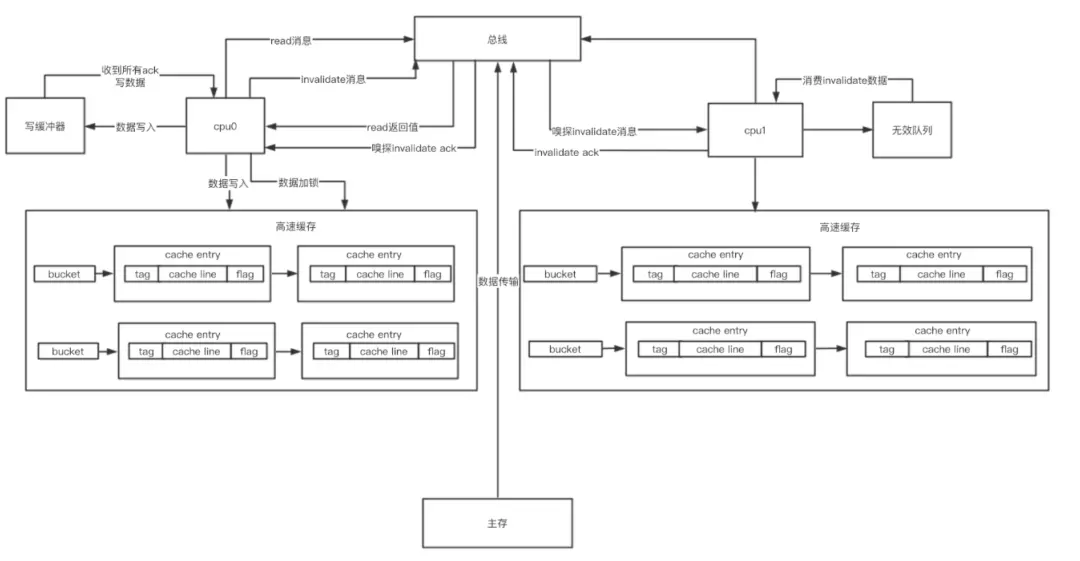

At the underlying level, the cache belonging to each CPU is structured as a bucketed linked list of entries, where the tag represents the address in main memory, the cache line is the offset, and the flag holds one of the states of the MESI cache coherence protocol.

The MESI cache coherence states are:

M: Modified

E: Exclusive

S: Shared

I: Invalid

The following is the flow of a data write on CPU0:

When CPU0 executes a load, a read, and then a write, the flag is S before the write, so CPU0 first sends an invalidate message on the bus;

The other CPUs snoop the bus, change the flag of their corresponding cache entry from S to I, and send an invalidate ack on the bus;

After CPU0 receives the invalidate ack from every other CPU, it changes the flag to E, performs the write, and changes the state to M, much like acquiring a lock.

Waiting for every ack makes writes too slow, so the write buffer (store buffer) and the invalidate queue were introduced. Every modification is first written to the write buffer. Other CPUs place an incoming invalidate message in their invalidate queue, return the ack immediately, and process the queued message asynchronously. This introduces ordering problems: the flag of some entry may still be S when it should already be I, so reads of that data can be stale. volatile is used to solve the ordering problems caused by instruction reordering. volatile is a keyword at the JVM level, while MESI operates at the CPU level, several layers below.
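The write flow described above can be traced with a toy model. This is only an illustration under strong simplifications: MesiToy and its members are assumed names, a single cache line is tracked, every CPU starts with the line in the Shared state, and the store buffer and invalidate queue are not modeled.

```java
import java.util.Arrays;

// Toy model of one cache line under MESI; real hardware is far more involved.
public class MesiToy {
    public enum State { MODIFIED, EXCLUSIVE, SHARED, INVALID }

    private final State[] lineState;   // state of the same line in each CPU's cache

    public MesiToy(int cpuCount) {
        lineState = new State[cpuCount];
        Arrays.fill(lineState, State.SHARED);   // assume every CPU has loaded the line
    }

    // CPU `writer` writes the line: invalidate the other copies, then write.
    public void write(int writer) {
        for (int cpu = 0; cpu < lineState.length; cpu++) {
            if (cpu != writer) {
                // the other CPU sees the invalidate message and acknowledges it
                lineState[cpu] = State.INVALID;
            }
        }
        lineState[writer] = State.EXCLUSIVE;    // all acks received: sole owner
        lineState[writer] = State.MODIFIED;     // data written, line differs from memory
    }

    public State stateOf(int cpu) {
        return lineState[cpu];
    }
}
```

After write(0) on a three-CPU model, CPU0 holds the line as MODIFIED and the other two hold it as INVALID, which matches the final state of the flow in the text.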

2) Performance falls short of the target and no solution can be found.

3) You may write thread-unsafe methods at work

The following is a step-by-step optimization of printing times from multiple threads

new Thread(new Runnable() {

@Override

public void run() {

System.out.println(new ThreadLocalDemo01().date(10));

}

}).start();

new Thread(new Runnable() {

@Override

public void run() {

System.out.println(new ThreadLocalDemo01().date(1007));

}

}).start();

Optimization 1: reuse threads through a thread pool

for(int i = 0; i < 1000; i++){

int finalI = i;

executorService.submit(new Runnable() {

@Override

public void run() {

System.out.println(new ThreadLocalDemo01().date2(finalI));

}

});

}

executorService.shutdown();

public String date2(int seconds){

Date date = new Date(1000 * seconds);

String s = null;

// synchronized (ThreadLocalDemo01.class){

// s = simpleDateFormat.format(date);

// }

s = simpleDateFormat.format(date);

return s;

}

Optimization 2: combine the thread pool with ThreadLocal

public String date2(int seconds){

Date date = new Date(1000 * seconds);

SimpleDateFormat simpleDateFormat = ThreadSafeFormatter.dateFormatThreadLocal.get();

return simpleDateFormat.format(date);

}

When multiple threads share one SimpleDateFormat, thread-safety problems occur, and the output can contain the same time printed for different inputs. Optimization 2 combines the thread pool with ThreadLocal so that each thread has its own formatter, which isolates the resource and makes the code thread-safe.
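The ThreadSafeFormatter class used in optimization 2 is not shown in the article. One plausible shape for it is sketched below. The date pattern, the UTC time zone, and the date helper method are assumptions chosen to make the example deterministic.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;

// Sketch of a per-thread formatter holder; pattern and time zone are assumptions.
public class ThreadSafeFormatter {
    // Each thread lazily creates and then reuses its own SimpleDateFormat
    public static final ThreadLocal<SimpleDateFormat> dateFormatThreadLocal =
            ThreadLocal.withInitial(() -> {
                SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
                format.setTimeZone(TimeZone.getTimeZone("UTC"));
                return format;
            });

    // Formats the instant `seconds` after the epoch using the calling thread's formatter
    public static String date(int seconds) {
        // 1000L forces long arithmetic so large values of seconds do not overflow
        return dateFormatThreadLocal.get().format(new Date(1000L * seconds));
    }
}
```

With a pool of N threads, at most N formatter instances are created no matter how many tasks are submitted. On Java 8 and later, the immutable java.time.format.DateTimeFormatter is another option that needs no ThreadLocal at all.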

4) Many problems cannot be located correctly

A pitfall we hit: scheduled tasks in the CRM simulation environment blocked and could not continue

Problem: the CRM simulation environment uses scheduled tasks configured with @Scheduled, and after a certain point in time none of the scheduled tasks ran any more

Cause: the scheduled-task configuration. If no thread pool is configured for tasks declared with @Scheduled, then enabling scheduling with @EnableScheduling in the startup class uses a single thread by default. With several scheduled tasks configured, this causes problems. Suppose there are two scheduled tasks, A and B. If A holds the thread and never releases it, B stays waiting until A finishes, and only then starts. To avoid the problems this causes with multiple tasks, use the following configuration:

@Bean

public ThreadPoolTaskScheduler taskScheduler(){

ThreadPoolTaskScheduler scheduler = new ThreadPoolTaskScheduler();

scheduler.setPoolSize(10);

return scheduler;

}

The CRM service has few scheduled tasks configured, and when resources are sufficient the tasks run fairly quickly, so no thread pool had been configured for them.

So how did code inside a scheduled task keep the thread from being released and cause the blocking?

Investigation showed that a CountDownLatch never reached zero, which left the main thread hanging without ever being released.

When a thread calls await, it waits until the count reaches 0 before continuing. The general principle is as follows:

Attention therefore shifts to the await method, which makes the current thread wait until the latch has counted down to zero, unless the thread is interrupted or the specified waiting time elapses. If the current count is zero, the method returns true immediately. If the current count is greater than zero, the current thread is disabled for thread-scheduling purposes and lies dormant until one of three things happens: the count reaches zero through calls to countDown(); some other thread interrupts the current thread; or the specified waiting time elapses.

Executors.newFixedThreadPool creates a pool with a fixed number of active threads. When more tasks are submitted than there are active threads, the extra tasks wait in a blocking queue. To speed up processing in CRM, this scheduled task used multiple threads. The count set on the CountDownLatch was larger than the number of fixed active threads in the ThreadPoolExecutor. As a result the tasks stayed in a waiting state, the count never reached zero, and the main thread was never released. The scheduled tasks of the simulation service were paralyzed.

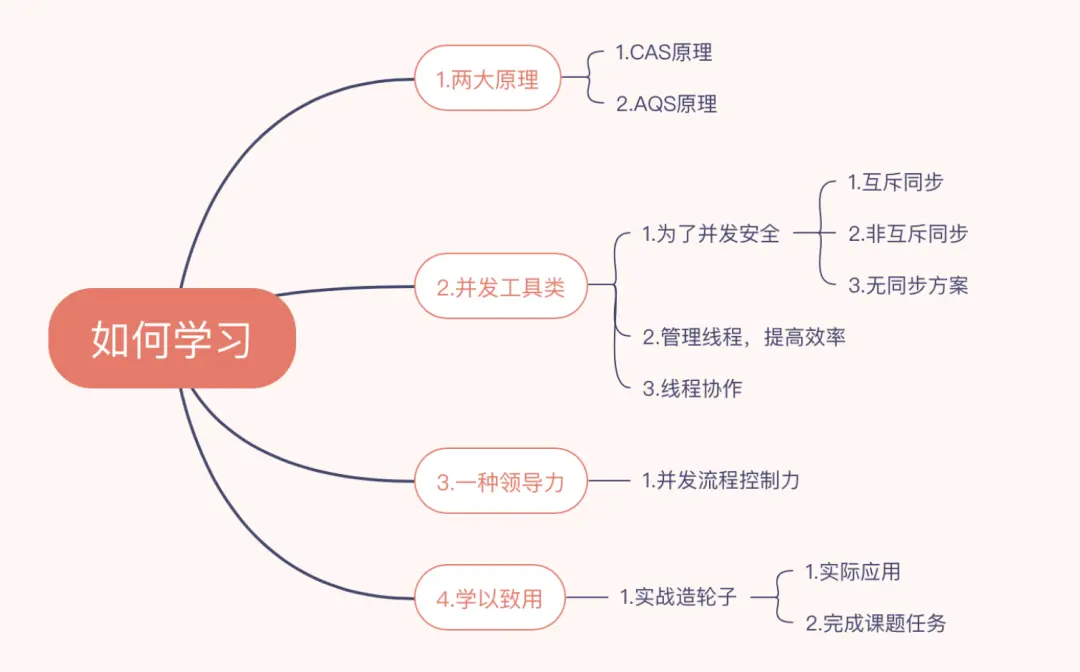

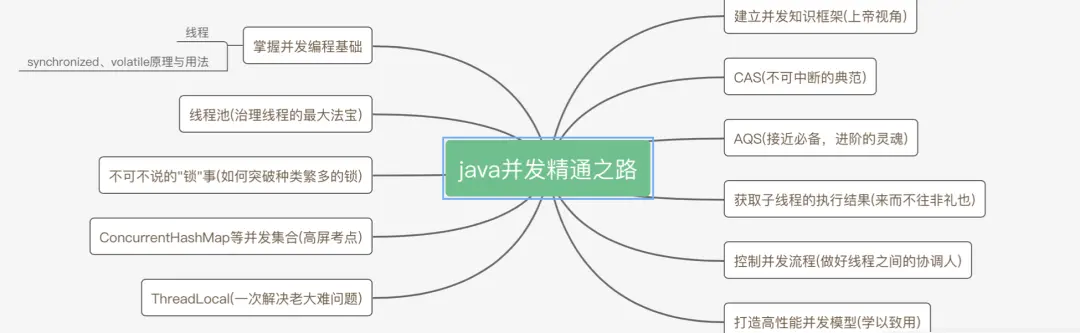
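One defensive way to combine a fixed thread pool with a CountDownLatch is sketched below. It is not the CRM code: LatchedBatch and runAll are hypothetical names and the task body is a placeholder. The sketch rests on three habits: the latch count equals the number of tasks actually submitted, countDown() sits in a finally block, and await is given a timeout.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class LatchedBatch {
    // Runs taskCount tasks on a fixed pool and waits for them, but never forever.
    public static boolean runAll(int taskCount, int poolSize, long timeoutSeconds)
            throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        CountDownLatch latch = new CountDownLatch(taskCount); // one count per submitted task
        try {
            for (int i = 0; i < taskCount; i++) {
                pool.submit(() -> {
                    try {
                        // placeholder: the real work would go here
                    } finally {
                        latch.countDown();   // always count down, even if the work throws
                    }
                });
            }
            // Bounded wait: returns false instead of hanging if the count never reaches zero
            return latch.await(timeoutSeconds, TimeUnit.SECONDS);
        } finally {
            pool.shutdown();                 // let the pool threads exit once the queue drains
        }
    }
}
```

With 20 tasks on a pool of 4 threads, the extra tasks simply queue and the latch still reaches zero. A false return value signals that something went wrong while leaving the scheduler thread free to run the next task.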

03 How to learn Java concurrent programming

To learn the basics of concurrent programming, we first need a bird's-eye view of the whole field, and then master the necessary knowledge points one by one in increasing depth. The following two mind maps offer a step-by-step path.

Essential knowledge points

04 Threads

The cases listed so far all revolve around threads, so we need a deeper grasp of what a thread is, how it works, and how threads communicate with one another.

The following explains the difference between processes and threads in more detail

Process: A process is a running program and is the smallest unit of resource allocation. The number of processes executing at the same instant cannot exceed the number of cores. Can a single-core CPU run multiple processes? Yes, but not at the same instant: it switches between processes extremely quickly. Many processes on a computer need to be open "simultaneously", and the CPU's rapid switching between them gives the effect of running multiple programs at once. Switching processes requires saving the state of the process being switched out so that it can resume when switched back in. A process therefore has its own address space, global variables, file descriptors, hardware resources, and so on. The operating system schedules the CPU and handles saving, restoring, and switching processes.

Thread: A thread is the basic unit of independent execution and scheduling (it is threads that actually run on the CPU). Threads are different execution paths within one process.

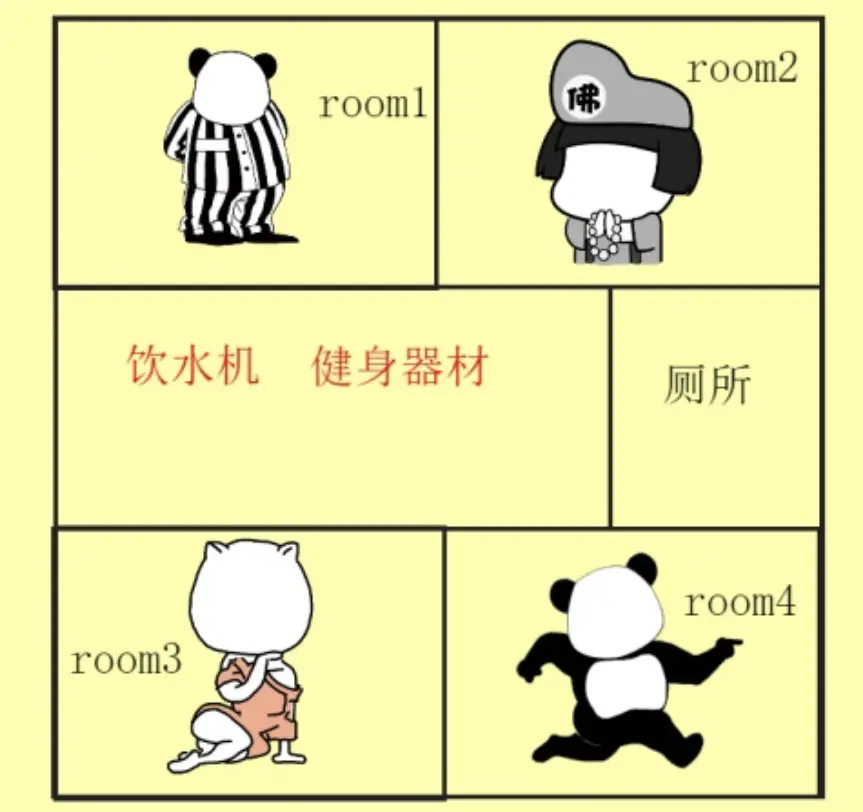

Single-threaded: Picture a house called a "process" in which only you live. You can do anything at any time in this house. Whether you watch TV or use the computer is entirely up to you.

Multithreading: Now suppose you live in a shared house whose residents are called "threads": thread 1, thread 2, thread 3, thread 4. The situation changes.

Multithreaded programming has the concept of a "lock", and your house has locks too. If your wife is using the toilet and has locked the door, she has exclusive use of the shared resource "toilet" in this "house (process)". If the house has only one toilet, you, as another thread, can only wait.

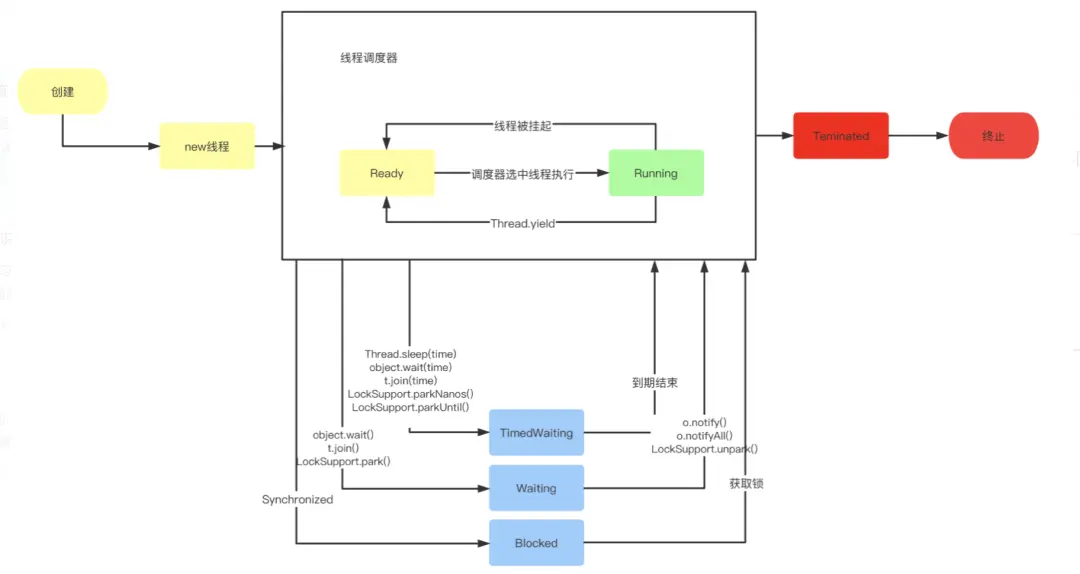

The most important and most troublesome aspect of threads is communication between them. The following figure shows how thread states change:

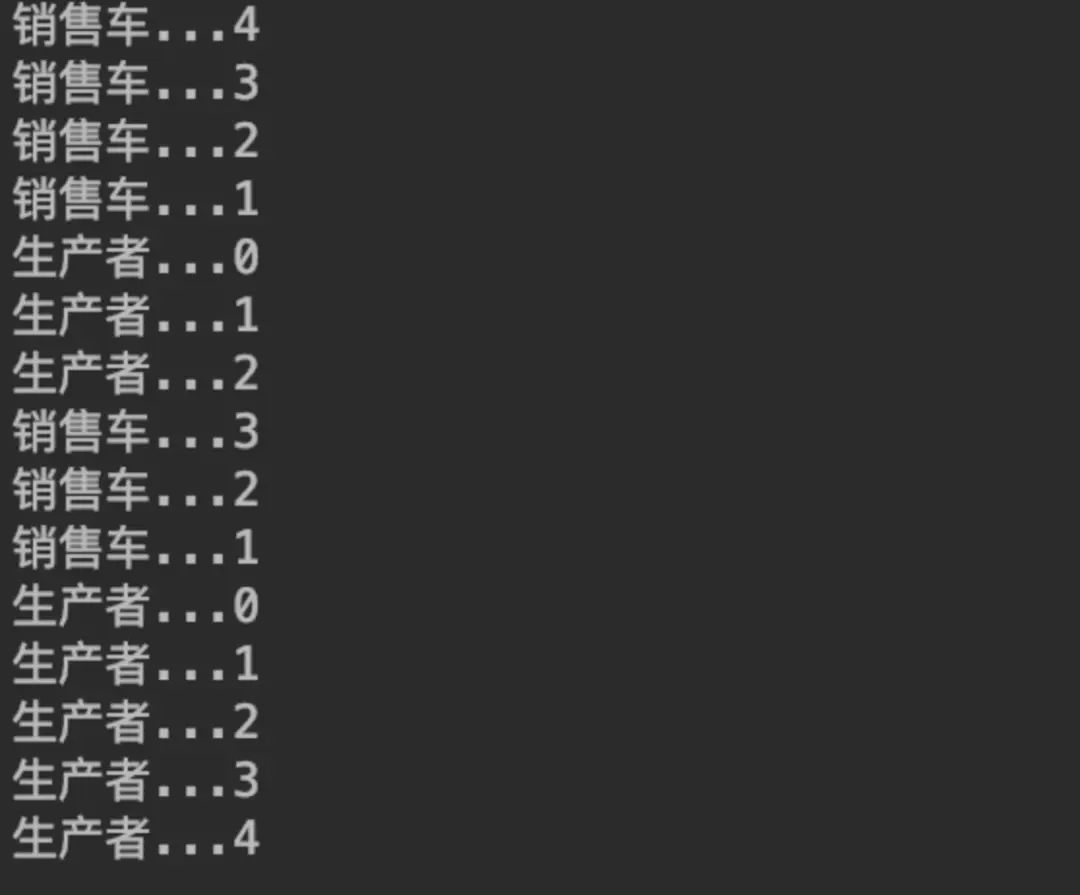

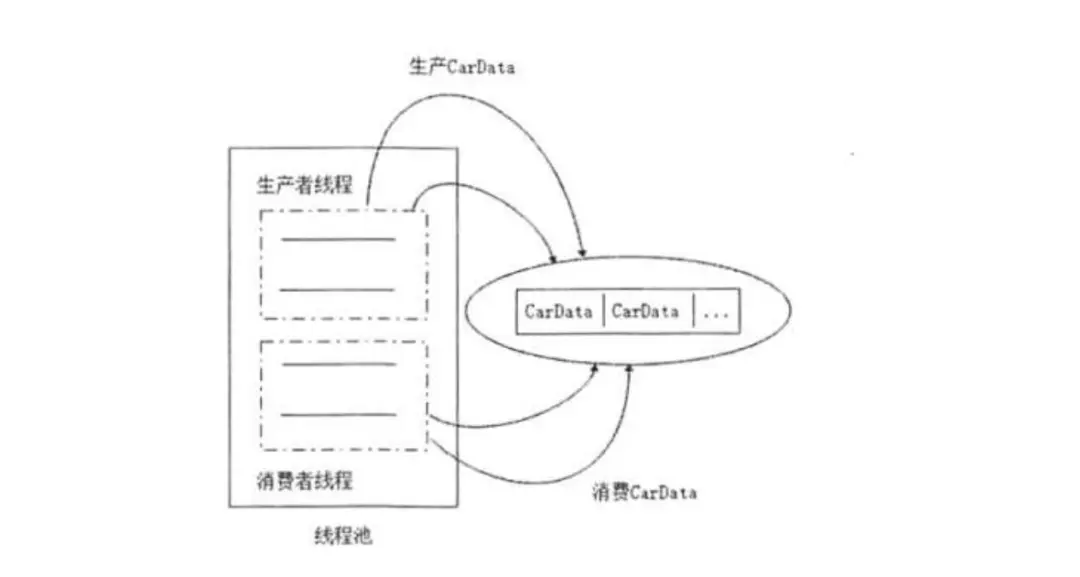

To illustrate communication between threads, here is a simple simulation of the producer-consumer model:

Producer

CarStock carStock;

public CarProducter(CarStock carStock){

this.carStock = carStock;

}

@Override

public void run() {

while (true){

carStock.produceCar();

}

}

public synchronized void produceCar(){

try {

if(cars < 20){

System.out.println("Producer..." + cars);

Thread.sleep(100);

cars++;

notifyAll();

}else {

wait();

}

} catch (InterruptedException e) {

e.printStackTrace();

}

}

Consumer

CarStock carStock;

public CarConsumer(CarStock carStock){

this.carStock = carStock;

}

@Override

public void run() {

while (true){

carStock.consumeCar();

}

}

public synchronized void consumeCar(){

try {

if(cars > 0){

System.out.println("Selling car..." + cars);

Thread.sleep(100);

cars--;

notifyAll();

}else {

wait();

}

} catch (InterruptedException e) {

e.printStackTrace();

}

}

Consumption process

Communication process

This simple producer-consumer model can be improved with queues, thread pools, and similar techniques, for example by using a BlockingQueue to share data between the two sides and simplify the consumption process.
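A minimal sketch of the BlockingQueue variant follows. CarQueueDemo and run are hypothetical names, and a bounded ArrayBlockingQueue stands in for the car stock. The queue's put and take methods do the waiting and notifying internally, so the explicit synchronized, wait, and notifyAll calls are no longer needed.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

public class CarQueueDemo {
    // Produces `total` cars on one thread, consumes them on another, returns the number sold.
    public static int run(int total, int capacity) throws InterruptedException {
        BlockingQueue<Integer> stock = new ArrayBlockingQueue<>(capacity);
        int[] sold = new int[1];             // written only by the consumer thread

        Thread producer = new Thread(() -> {
            try {
                for (int car = 1; car <= total; car++) {
                    stock.put(car);          // blocks while the stock is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < total; i++) {
                    stock.take();            // blocks while the stock is empty
                    sold[0]++;
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        producer.join();
        consumer.join();                     // join() makes the consumer's writes visible here
        return sold[0];
    }
}
```

The capacity argument plays the role of the limit of 20 cars in the hand-written version: the producer pauses automatically whenever the stock is full.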

05 Three properties of concurrent programming

Most concurrent programming mechanisms revolve around three properties: atomicity, visibility, and ordering

1) Atomicity issues

for(int i = 0; i < 20; i++){

Thread thread = new Thread(() -> {

for (int j = 0; j < 10000; j++) {

res++;

normal++;

atomicInteger.incrementAndGet();

}

});

thread.start();

}

Result:

volatile: 170797

atomicInteger:200000

normal:182406

This is the atomicity problem. Atomicity means that the CPU cannot be suspended partway through an operation and rescheduled: the operation is not interrupted, so it either executes completely or does not execute at all.

If an operation is atomic, no other thread can interleave with it partway through, so concurrent updates by multiple threads are not lost.
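A complete, runnable version of the experiment could look like the sketch below. The class name CounterDemo and the field declarations are assumptions, since the snippet in the article does not show them. The join calls are added so that the counters are read only after every thread has finished.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class CounterDemo {
    static volatile int res = 0;                            // visible, but ++ is not atomic
    static int normal = 0;                                  // neither visible nor atomic
    static final AtomicInteger atomicInteger = new AtomicInteger();

    // Returns { res, normal, atomicInteger } after 20 threads each add 10000.
    public static int[] run() throws InterruptedException {
        res = 0;
        normal = 0;
        atomicInteger.set(0);
        Thread[] threads = new Thread[20];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> {
                for (int j = 0; j < 10000; j++) {
                    res++;                                  // read, add, write: updates can be lost
                    normal++;
                    atomicInteger.incrementAndGet();        // one atomic CAS-based step
                }
            });
            threads[i].start();
        }
        for (Thread thread : threads) {
            thread.join();                                  // wait before reading the totals
        }
        return new int[] { res, normal, atomicInteger.get() };
    }

    public static void main(String[] args) throws InterruptedException {
        int[] totals = run();
        System.out.println("volatile: " + totals[0]);
        System.out.println("normal: " + totals[1]);
        System.out.println("atomicInteger: " + totals[2]);
    }
}
```

Only the AtomicInteger total is guaranteed to be 200000. The other two vary from run to run and are usually lower, which shows that volatile alone does not make an increment atomic.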

2) Visibility issues

class MyThread extends Thread{

public int index = 0;

@Override

public void run() {

System.out.println("MyThread Start");

while (true) {

if (index == -1) {

break;

}

}

System.out.println("MyThread End");

}

}

The main thread sets index to -1, but the change is not visible to the myThread thread. This is a thread-safety problem caused by visibility. Visibility means that when one thread modifies a shared variable, other threads learn of the modification immediately. The Java memory model achieves visibility by synchronizing the new value back to main memory after a variable is modified and refreshing the value from main memory before the variable is read, using main memory as the transfer medium. This holds for ordinary and volatile variables alike. The difference is that the special rules for volatile guarantee that a new value is synchronized to main memory immediately and that the value is refreshed from main memory immediately before each use. We can therefore say that volatile guarantees the visibility of a variable across threads, while ordinary variables do not.
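Declaring the field volatile is one way to fix the loop above. The sketch below is a variation on MyThread under assumed names (VisibleFlag, stopsPromptly), with a helper that plays the role of the main thread so the behavior can be checked.

```java
public class VisibleFlag extends Thread {
    // volatile guarantees that a write from another thread becomes visible to run()
    public volatile int index = 0;

    @Override
    public void run() {
        while (index != -1) {
            // spin until another thread sets index to -1
        }
    }

    // Starts the worker, changes the flag from the calling thread, and reports whether it stopped.
    public static boolean stopsPromptly() throws InterruptedException {
        VisibleFlag worker = new VisibleFlag();
        worker.start();
        Thread.sleep(50);          // give the worker time to enter its loop
        worker.index = -1;         // volatile write, visible to the worker's next read
        worker.join(2000);         // wait up to two seconds for the loop to exit
        return !worker.isAlive();
    }
}
```

Without volatile, the JIT compiler is free to hoist the read of index out of the loop, and the worker may never observe the change.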

3) Ordering issues

Double-checked locking lazy singleton

Ordering: The natural ordering of programs under the Java memory model can be summed up in one sentence: observed from within a thread, all operations are in order; observed from another thread, all operations are out of order. The first half of the sentence refers to "within-thread as-if-serial semantics", and the second half refers to "instruction reordering" and "the delay in synchronizing working memory with main memory".

06 Thinking Questions

Sometimes, to release resources as early as possible and avoid pointless work, a function should finish early, as in many quota-grabbing scenarios. Here is an exercise for you to think about and implement:

Question: Eight runners compete in a 100-meter race. Once the top three have crossed the finish line, the race must stop. Design an implementation of this problem.

Reference materials:

1. 100 million-level traffic Java high concurrency and actual network programming

2. LMAX article (http://ifeve.com/lmax/)

Upcoming in the next chapter:

- The volatile and synchronized keywords

- The volatile keyword

- The synchronized keyword

