Preface

There are many streaming media protocols, and even more audio and video encoding formats, so playing them properly in a browser is not easy. Apart from protocols the browser already supports, such as WebRTC, protocols like HLS, FLV, RTSP, RTMP and DASH all require preprocessing before playback. The process is roughly as follows:

- Obtain data through HTTP, WebSocket, etc.;

- Process the data: parse the protocol, assemble frames, etc. to obtain the media information and data;

- Remux it into media segments, or decode it into individual picture frames;

- Play through video or canvas (WebGL), etc.

There are also some front-end decoding solutions, such as using WASM to call a C decoding library, or using the browser's WebCodecs API directly for encoding and decoding. But both have limitations. WebCodecs is still an experimental feature. The WASM approach gets around the browser's codec limits (it can play formats the browser does not support, such as H265), but its decoding still lags behind the browser's native decoding, and because it can only decode in software, multi-stream performance is a bottleneck that is hard to overcome. Therefore, the more common approach is a different one: protocol parsing + remuxing + the protagonist of this article, Media Source Extensions (hereinafter referred to as MSE).

Getting Started

The HTML5 specification allows us to embed videos directly in web pages:

<video src="demo.mp4"></video>

But the resource address specified by src must be a complete media file. How can streaming media be played on the Web? MSE makes this possible. First, look at MDN's description:

The Media Source Extensions API (MSE) provides functionality enabling plugin-free, web-based streaming media. Using MSE, media streams can be created via JavaScript and played using <audio> and <video> elements.

As mentioned above, MSE allows us to create media resources through JS, and it is easy to use:

const mediaSource = new MediaSource();

const video = document.querySelector('video');

video.src = URL.createObjectURL(mediaSource);

After the media source object is created, the next step is to feed it video data (segments). The code looks like this:

mediaSource.addEventListener('sourceopen', () => {

const mime = 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"';

const sourceBuffer = mediaSource.addSourceBuffer(mime);

const data = new ArrayBuffer([...]); // video data

sourceBuffer.appendBuffer(data);

});

At this point, the video can play normally. To achieve streaming, you only need to keep calling appendBuffer to feed audio and video data... But that raises two questions: what does the string 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"' mean, and where does the audio and video data come from? 🤔
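One detail matters when feeding data continuously: appendBuffer is asynchronous, and calling it again while the SourceBuffer's updating flag is still true throws an error. A minimal sketch of one way to handle this is to queue segments and append the next one on each updateend event. AppendTarget and AppendQueue are hypothetical names introduced here for illustration; they are not part of MSE.

```typescript
// Minimal shape of the SourceBuffer members used here (an assumption for
// this sketch); a real SourceBuffer provides all three.
interface AppendTarget {
  readonly updating: boolean;
  appendBuffer(data: ArrayBuffer): void;
  addEventListener(type: 'updateend', listener: () => void): void;
}

// Hypothetical helper: queues segments and appends them one at a time.
class AppendQueue {
  private pending: ArrayBuffer[] = [];
  private target: AppendTarget;

  constructor(target: AppendTarget) {
    this.target = target;
    // Each finished append triggers the next one in the queue.
    target.addEventListener('updateend', () => this.flush());
  }

  // Enqueue a segment; it is appended as soon as the target is idle.
  push(data: ArrayBuffer): void {
    this.pending.push(data);
    this.flush();
  }

  private flush(): void {
    if (this.target.updating) return; // an append is still in progress
    const next = this.pending.shift();
    if (next !== undefined) this.target.appendBuffer(next);
  }
}
```

Inside the sourceopen handler, this could be used as const queue = new AppendQueue(sourceBuffer), followed by queue.push(segment) for every segment that arrives.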

MIME TYPE

// webm MIME-type

'video/webm;codecs="vp8,vorbis"'

// fmp4 MIME-type

'video/mp4;codecs="avc1.42E01E,mp4a.40.2"'

This string describes the relevant parameters of the video, such as the container format, the audio/video encoding formats, and other important information. Taking the mp4 string above as an example, it is split by ; into two parts:

- video/mp4, the first part, indicates that this is a video in mp4 format;
- codecs, the second part, describes the codec information of the video. It consists of one or more values separated by a comma (,), and each value consists of one or more elements separated by a dot (.):
  - avc1 indicates that the video uses AVC (i.e. H264) encoding;
  - 42E01E consists of three bytes (written in hexadecimal) that describe the video:
    - 0x42 (AVCProfileIndication) is the profile of the video; common ones include Baseline/Extended/Main/High profile, etc.;
    - 0xE0 (profile_compatibility) holds the constraint flags of the profile;
    - 0x1E (AVCLevelIndication) is the H264 level, which bounds the maximum supported resolution, frame rate, bit rate, etc.;
  - mp4a indicates some kind of MPEG-4 audio;
  - 40 is the ObjectTypeIndication (OTI) specified by the MP4 Registration Authority; 0x40 corresponds to the Audio ISO/IEC 14496-3 (d) standard;
  - 2 is the audio object type; mp4a.40.2 means AAC LC.
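The breakdown above can be sketched in code. This is a minimal illustration, not a full parser: parseMimeType and parseAvc1 are hypothetical helper names, and the sketch assumes the codecs parameter is written the way it is in this article.

```typescript
interface Avc1Info {
  profile: number;     // AVCProfileIndication
  constraints: number; // profile_compatibility
  level: number;       // AVCLevelIndication
}

// Split 'video/mp4; codecs="a, b"' into the container type and codec list.
function parseMimeType(mime: string): { container: string; codecs: string[] } {
  const [container, ...params] = mime.split(';').map((s) => s.trim());
  const codecsParam = params.find((p) => p.toLowerCase().startsWith('codecs='));
  const codecs = codecsParam
    ? codecsParam
        .slice('codecs='.length)
        .replace(/"/g, '')
        .split(',')
        .map((c) => c.trim())
    : [];
  return { container, codecs };
}

// Decode the three hexadecimal bytes that follow 'avc1.'.
function parseAvc1(codec: string): Avc1Info | null {
  const match = /^avc1\.([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(codec);
  if (!match) return null; // not an avc1 codec string
  return {
    profile: parseInt(match[1], 16),
    constraints: parseInt(match[2], 16),
    level: parseInt(match[3], 16),
  };
}
```

For 'avc1.42E01E' this yields profile 0x42 (66), constraints 0xE0 (224) and level 0x1E (30).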

However, there are many audio and video formats. Is there a way to get a video's MIME type directly on the front end?

For the mp4 format, you can use 🌟🌟🌟 mp4box 🌟🌟🌟. The MIME type can be obtained as follows:

// utils.ts

// install the library

// yarn add mp4box

import MP4Box from 'mp4box';

export function getMimeType (buffer: ArrayBuffer) {

return new Promise<string>((resolve, reject) => {

const mp4boxfile = MP4Box.createFile();

mp4boxfile.onReady = (info: any) => resolve(info.mime);

mp4boxfile.onError = (e: string) => reject(new Error(e));

(buffer as any).fileStart = 0;

mp4boxfile.appendBuffer(buffer);

});

}

Once the MIME type is obtained, the static method MediaSource.isTypeSupported() can be used to check whether the current browser supports the media format.

import { getMimeType } from './utils';

...

const mime = await getMimeType(buffer);

if (!MediaSource.isTypeSupported(mime)) {

throw new Error('mimetype not supported');

}

Media Segment

SourceBuffer.appendBuffer(source) appends the media segment data source to the SourceBuffer object. See MDN's description of source:

A BufferSource object (ArrayBufferView or ArrayBuffer) that stores the media segment data you want to add to the SourceBuffer.

So source is a chunk of binary data, but of course not just any chunk. What conditions does a media segment need to meet?

- It conforms to a MIME type defined in the MSE Byte Stream Format Registry

- It is either an Initialization Segment or a Media Segment

For the first condition, MSE supports relatively few container and codec formats; the common ones are fmp4 (h264+aac) and webm (vp8/vorbis). What fmp4 and webm are is not discussed in this article.

For the second condition, an Initialization Segment contains the information needed to decode Media Segments, such as the resolution, duration and bit rate of the media. A Media Segment is a timestamped chunk of audio and video data, and it is associated with the most recently appended Initialization Segment. Generally, multiple media segments are appended after one initialization segment.

For fmp4, the initialization segment and the media segment are both made of MP4 boxes, just of different types. For webm, the initialization segment is the EBML Header plus the content before the first Cluster element (including media, track and other information), and a media segment is a Cluster element.

With the theory covered, what about practice? How do we generate the media segments described above from an existing media file?

Here we use 🌟🌟🌟 FFmpeg 🌟🌟🌟. It is very convenient: a single command is enough:

ffmpeg -i xxx -c copy -f dash index.mpd

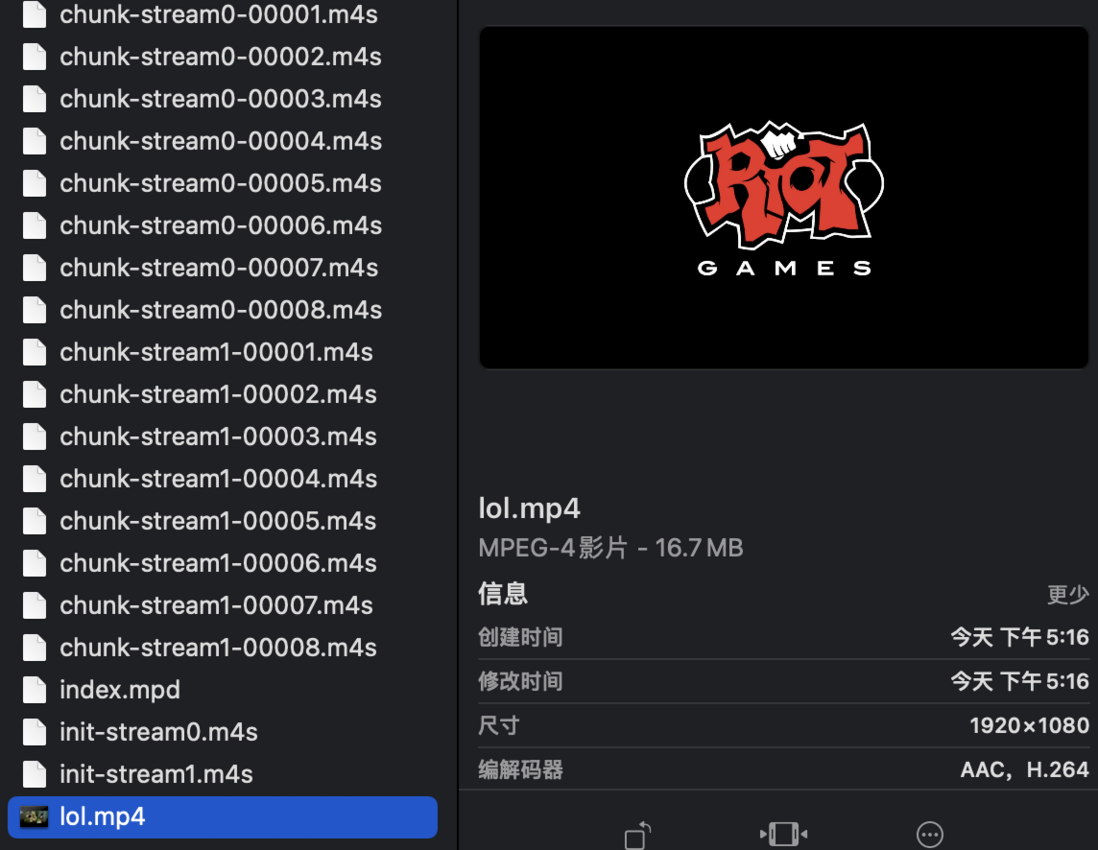

xxx is your local media file. I use lol.mp4 and big-buck-bunny.webm for testing:

👉 ffmpeg -i lol.mp4 -c copy -f dash index.mpd

👇

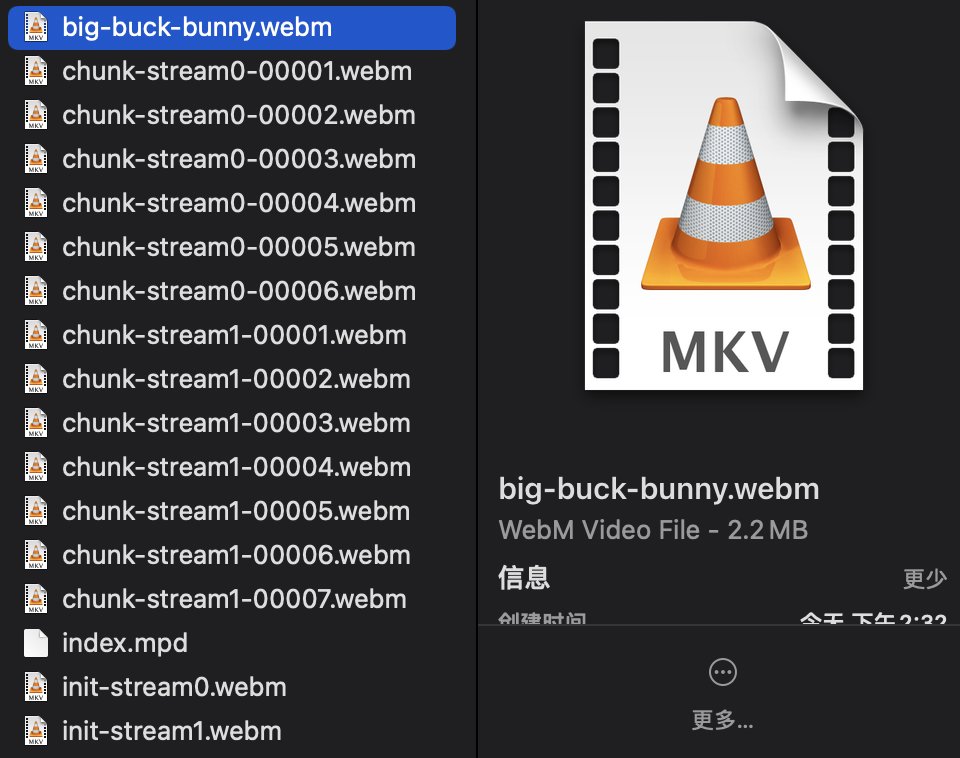

👉 ffmpeg -i big-buck-bunny.webm -c copy -f dash index.mpd

👇

The test results show that files named init-xxx.xx and chunk-xxx-xxx.xx are generated.

Clearly, init-xxx.xx is the initialization segment and chunk-xxx-xxx.xx is a media segment, while stream0 and stream1 are the video and audio tracks, respectively.
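To feed these files to a SourceBuffer, they have to be requested in order: the initialization segment first, then the chunks. A minimal sketch of building that ordered list is below. It assumes FFmpeg's default DASH naming (init-stream<N>.<ext> and chunk-stream<N>-<5-digit number>.<ext>, numbered from 1); if the templates were changed on the command line, the names will differ. segmentNames is a hypothetical helper name.

```typescript
// Build the ordered file list for one stream: init segment, then chunks.
// Assumption: FFmpeg's default DASH templates are in use, i.e.
//   init-stream<N>.<ext> and chunk-stream<N>-<00001...>.<ext>
function segmentNames(streamId: number, chunkCount: number, ext: string): string[] {
  const names = [`init-stream${streamId}.${ext}`];
  for (let n = 1; n <= chunkCount; n++) {
    const number = String(n).padStart(5, '0');
    names.push(`chunk-stream${streamId}-${number}.${ext}`);
  }
  return names;
}
```

For example, segmentNames(0, 2, 'm4s') lists the initialization segment of stream0 followed by its first two chunks.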

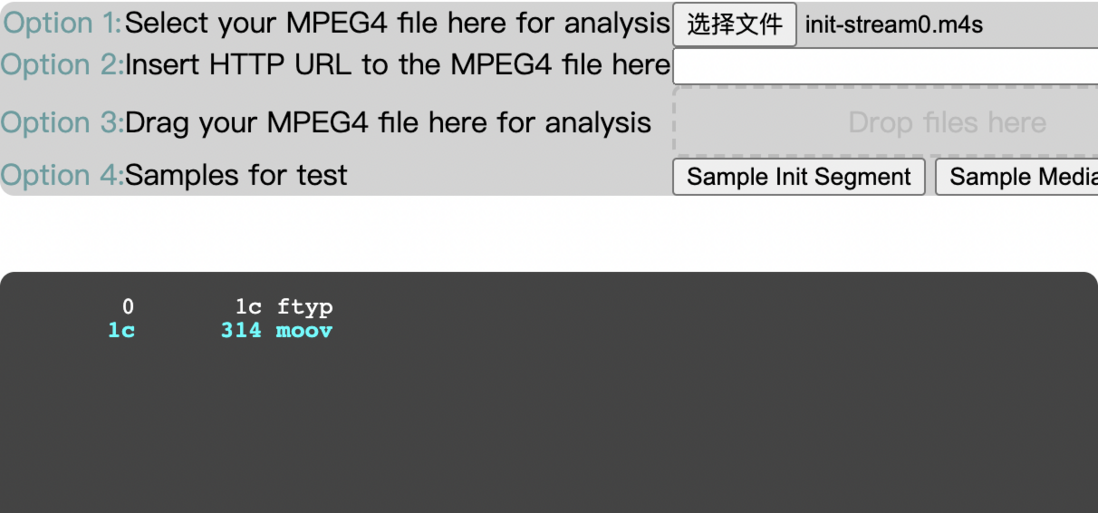

With the help of an online mp4 box parsing tool, we can look at the internal structure of the fmp4 initialization segment and media segment.

Consistent with the ISO BMFF description, the initialization segment consists of an ftyp box + a moov box, and a media segment consists of a styp box, a sidx box, a moof box and an mdat box. If you want to know what each box does, it is worth studying the MP4 format in more depth.
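The same inspection can be sketched in code. Every ISO BMFF box starts with a 4-byte big-endian size followed by a 4-character type, so the top-level boxes can be listed by walking the buffer. The function names below are hypothetical, and the rule of classifying by the presence of moov or moof is a simplification based on the structure described above.

```typescript
interface BoxHeader {
  type: string;
  offset: number;
  size: number;
}

// Walk the buffer and collect the header of each top-level box.
function listTopLevelBoxes(buffer: ArrayBuffer): BoxHeader[] {
  const view = new DataView(buffer);
  const boxes: BoxHeader[] = [];
  let offset = 0;
  while (offset + 8 <= view.byteLength) {
    let size = view.getUint32(offset); // DataView reads big-endian by default
    const type = String.fromCharCode(
      view.getUint8(offset + 4),
      view.getUint8(offset + 5),
      view.getUint8(offset + 6),
      view.getUint8(offset + 7),
    );
    if (size === 1) {
      // size 1 means a 64-bit "largesize" follows the type field
      if (offset + 16 > view.byteLength) break;
      size = Number(view.getBigUint64(offset + 8));
    } else if (size === 0) {
      size = view.byteLength - offset; // box extends to the end of the data
    }
    if (size < 8) break; // malformed header; stop instead of looping forever
    boxes.push({ type, offset, size });
    offset += size;
  }
  return boxes;
}

type SegmentKind = 'initialization' | 'media' | 'unknown';

// Simplified rule: moov marks an initialization segment, moof a media segment.
function classifySegment(buffer: ArrayBuffer): SegmentKind {
  const types = listTopLevelBoxes(buffer).map((box) => box.type);
  if (types.includes('moov')) return 'initialization';
  if (types.includes('moof')) return 'media';
  return 'unknown';
}
```

Running classifySegment on the bytes of an init-xxx.xx file and of a chunk-xxx-xxx.xx file is a quick sanity check before handing the data to appendBuffer.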

EXAMPLE

👇
