How do you build an application system that is highly available, high-performance, scalable, extensible and secure? This question troubles countless developers. Here we take a website as an example to discuss how to approach the architecture design of a large-scale application system.

Architecture evolution and development history

The technical challenges of large websites come mainly from a huge user base, highly concurrent access and massive amounts of data.

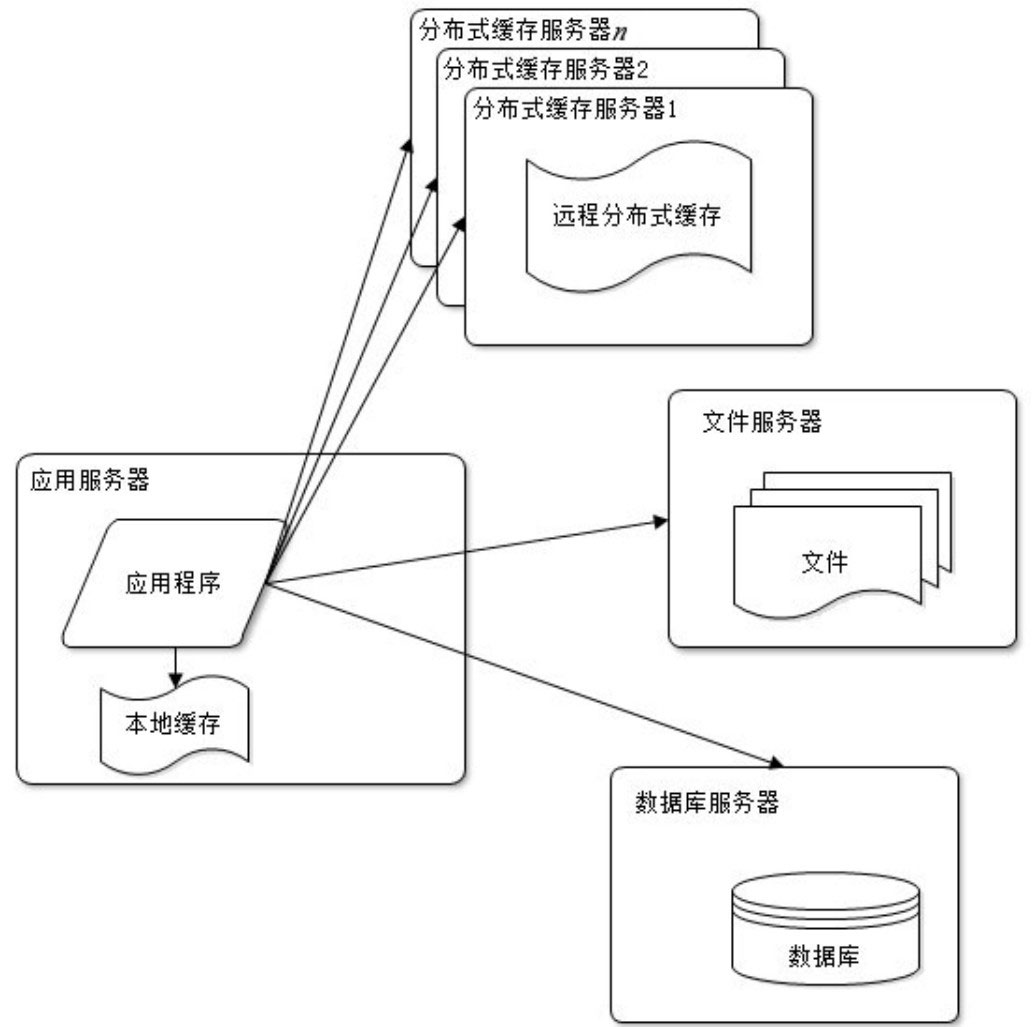

Initial stage

Large websites grow out of small ones. At the beginning, a small website has few visitors, and a single server is more than enough. At this stage, the website structure is as shown in the figure.

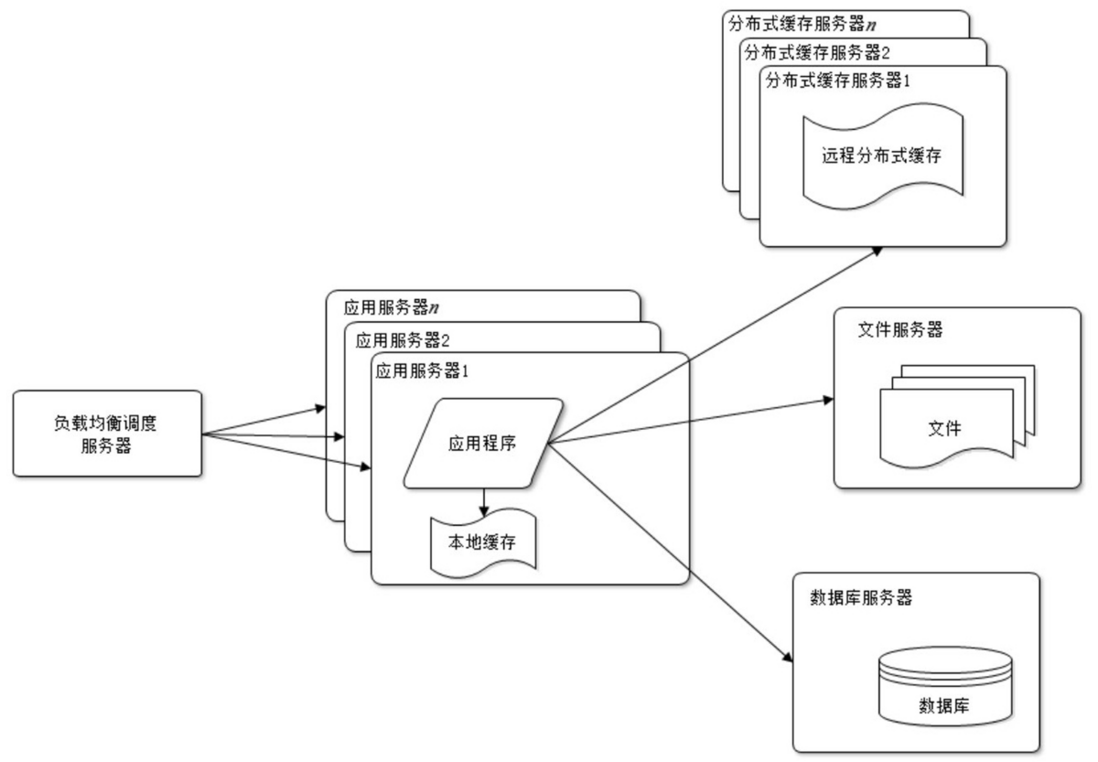

Application and data separation

As the business grows, a single server gradually becomes insufficient: more and more user traffic degrades performance, and more and more data exhausts the storage space. At this point, the application and the data need to be separated.

After the application and data are separated, the entire website uses three servers: application server, file server and database server, as shown in the figure.

These three servers have different hardware requirements. The application server processes a lot of business logic, so it needs a faster, more powerful CPU; the database server needs fast disk retrieval and data caching, so it needs faster disks and more memory; the file server stores a large number of files uploaded by users, so it needs larger disks.

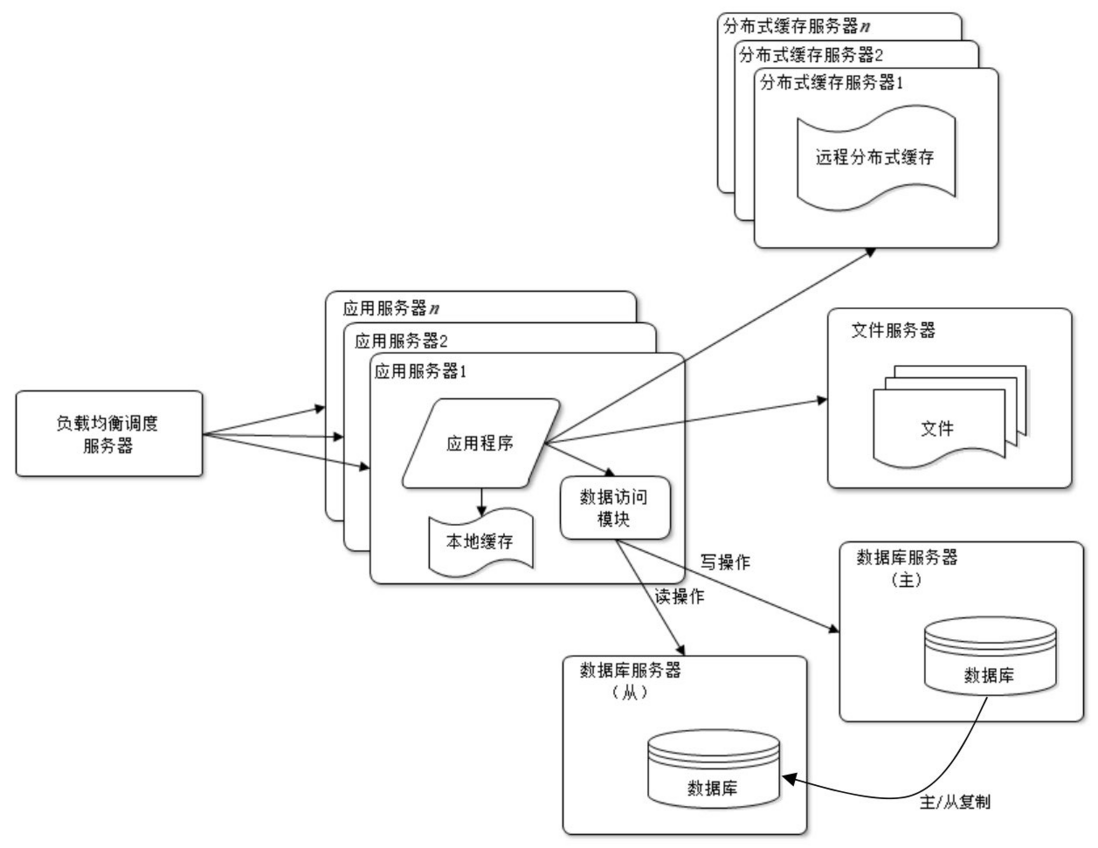

Use cache

As the number of users keeps growing, the website faces another challenge: excessive pressure on the database causes access delays, which in turn slow down the whole website and hurt the user experience.

Website traffic follows the 80/20 rule (the Pareto principle): 80% of business accesses are concentrated on 20% of the data. Since most access is concentrated on this small portion of the data, caching it in memory can reduce the pressure on the database, speed up data access across the whole website, and, because the database no longer has to serve those reads, improve its write performance.
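A minimal sketch of the cache-aside read path under the 80/20 skew described above. It assumes a plain dict stands in for the cache and a hypothetical `fake_db_query` function stands in for the database; both names are illustrative only.

```python
from __future__ import annotations

import random

db_calls = 0  # counts how often the (simulated) database is hit


def fake_db_query(key: int) -> str:
    """Hypothetical stand-in for a slow database lookup."""
    global db_calls
    db_calls += 1
    return f"row-{key}"


cache: dict[int, str] = {}  # unbounded dict standing in for a real cache


def get(key: int) -> str:
    """Cache-aside read: serve from the cache, fall back to the DB on a miss."""
    if key in cache:
        return cache[key]
    value = fake_db_query(key)
    cache[key] = value  # populate so later reads of this key skip the DB
    return value


def skewed_key(rng: random.Random, n_keys: int = 1000) -> int:
    """About 80% of requests go to the hottest 20% of keys."""
    hot = n_keys // 5
    if rng.random() < 0.8:
        return rng.randrange(hot)
    return rng.randrange(hot, n_keys)


rng = random.Random(42)
for _ in range(10_000):
    get(skewed_key(rng))
print(f"10000 requests, {db_calls} database calls")
```

Because there are only 1,000 distinct keys, at most 1,000 of the 10,000 requests can reach the database; after its first miss, each key is served from memory. A real cache would also bound its size and expire entries, which this sketch deliberately leaves out.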

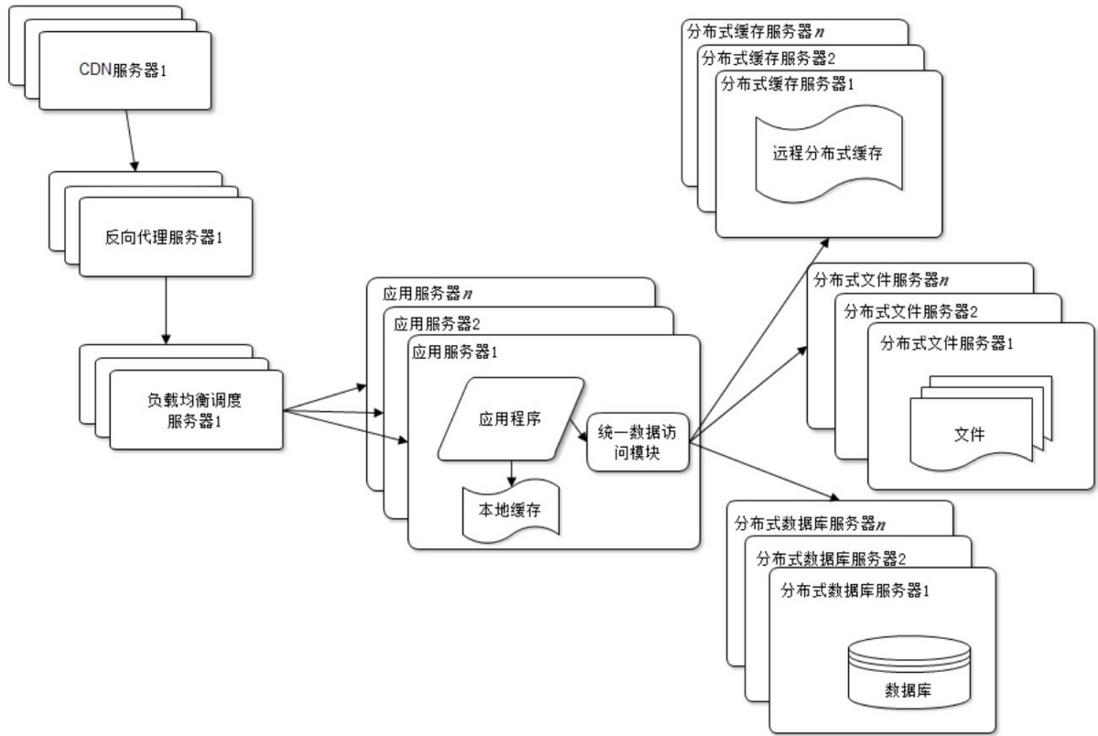

Websites use two kinds of caches: a local cache on the application server itself, and a remote cache on dedicated distributed cache servers. A local cache is faster to access, but it is limited by the application server's memory, so the amount of data it can hold is limited and it competes with the application for memory. A remote distributed cache can be deployed as a cluster of large-memory servers dedicated to caching, which in theory frees the cache service from any single machine's memory limit, as shown in the figure.
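To illustrate why a local cache must live within the application server's memory, here is a sketch of a bounded in-process cache with least-recently-used (LRU) eviction. LRU is an assumption chosen for illustration; the article does not prescribe an eviction policy, and a remote distributed cache is not shown.

```python
from collections import OrderedDict


class LocalLRUCache:
    """A bounded in-process cache. Once `capacity` entries are stored, the
    least recently used entry is evicted, so the cache never grows past a
    fixed share of the application server's memory."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: OrderedDict = OrderedDict()

    def get(self, key):
        """Return the cached value, or None on a miss."""
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used


cache = LocalLRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # "a" is now the most recently used
cache.put("c", 3)   # over capacity: evicts "b"
print(cache.get("b"), cache.get("a"), cache.get("c"))  # None 1 3
```

Returning `None` on a miss keeps the sketch short but cannot distinguish a cached `None` value; a production cache would use a sentinel or raise.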

Use application server cluster

After caching is used, data access pressure is effectively alleviated, but the request connections that a single application server can handle are limited. During the peak period of website access, the application server becomes the bottleneck of the entire website.

Clustering is a common way to deal with high concurrency and massive data. When one server's processing power or storage space is insufficient, do not try to replace it with a more powerful server: for a large website, no single server, however powerful, can keep up with continuous business growth. It is more appropriate to add servers that share the access and storage load of the original one.

As long as adding a server relieves the load, you can keep adding servers in the same way to keep improving system performance, which is what makes the system scalable. An application server cluster is a relatively simple and mature scalable cluster architecture, as shown in the figure.

A load balancing server distributes requests from users' browsers to any server in the application server cluster. As the number of users grows, more application servers are added to the cluster, so that application server load no longer becomes the bottleneck of the whole website.
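Round-robin is one of the simplest scheduling policies a load balancer can use; the sketch below assumes it for illustration (real load balancers also offer weighted, least-connections and hash-based policies). Server names are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Sends each request to the next application server in turn."""

    def __init__(self, servers):
        self.servers = list(servers)
        self._cycle = itertools.cycle(self.servers)

    def add_server(self, server) -> None:
        # Scale out: rebuild the rotation so the new server starts receiving traffic.
        self.servers.append(server)
        self._cycle = itertools.cycle(self.servers)

    def pick(self):
        return next(self._cycle)


lb = RoundRobinBalancer(["app1", "app2"])
print([lb.pick() for _ in range(4)])  # ['app1', 'app2', 'app1', 'app2']
lb.add_server("app3")
print([lb.pick() for _ in range(3)])  # ['app1', 'app2', 'app3']
```

Adding capacity is just `add_server`: nothing about the clients or the existing servers changes, which is what makes this layer scale out easily.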

Read and write separation

With caching in place, most read operations no longer need to touch the database, but some reads and all writes still do. Once the website's user base reaches a certain scale, the database comes under heavy load again and becomes the website's bottleneck.

Most mainstream databases today provide master-slave hot backup: by configuring a master-slave relationship between two database servers, data updates on one server are synchronized to the other. Websites use this feature to separate database reads from writes and so relieve the load on the database, as shown in the figure.

The application server writes data to the master database, which synchronizes the updates to the slave database through master-slave replication; when the application server reads data, it can read from the slave database. To make the read-write separation transparent to the application, a dedicated data access module is usually used on the application server side.
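A sketch of what such a data access module might do internally, assuming statements are routed by their leading SQL keyword. `ReadWriteRouter` and the host names are hypothetical; a real module would return connections rather than names.

```python
import itertools


class ReadWriteRouter:
    """Hypothetical data access module that hides read-write separation:
    writes go to the master, reads rotate across the slave replicas."""

    def __init__(self, master: str, slaves: list) -> None:
        self.master = master
        self._slaves = itertools.cycle(slaves)

    def route(self, sql: str) -> str:
        # Crude classification by the first SQL keyword; a real module would
        # also send SELECT ... FOR UPDATE and reads inside transactions to the master.
        verb = sql.lstrip().split(None, 1)[0].upper()
        if verb == "SELECT":
            return next(self._slaves)
        return self.master


router = ReadWriteRouter("db-master", ["db-slave-1", "db-slave-2"])
print(router.route("INSERT INTO users VALUES (1, 'alice')"))  # db-master
print(router.route("SELECT * FROM users"))                    # db-slave-1
print(router.route("select * from users"))                    # db-slave-2
```

One design caveat: replication is usually asynchronous, so a read sent to a slave right after a write may not see that write yet. Reads that must be fresh are often pinned to the master.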

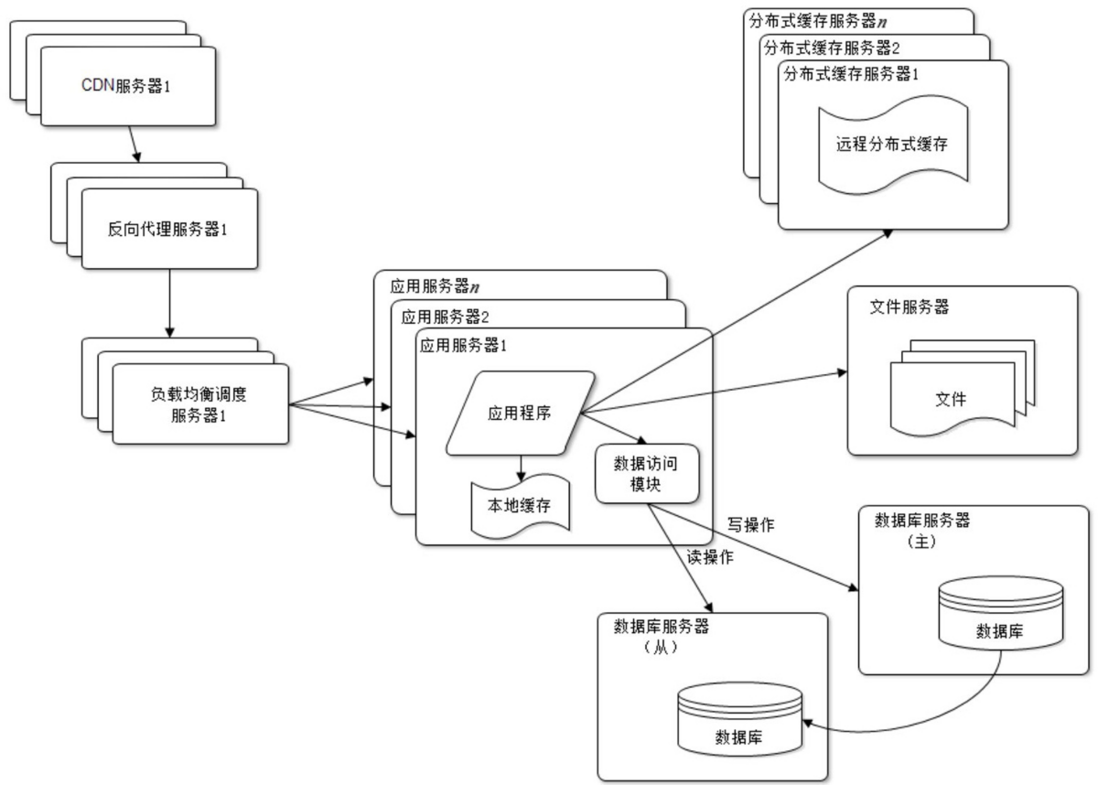

Reverse proxy and CDN

As the business keeps growing, the user base gets larger and larger, and access speed differs widely between users in different regions. To provide a better user experience, the website needs to speed up access. The main methods are CDNs and reverse proxies, as shown in the figure.

CDNs and reverse proxies both work by caching. The difference is that a CDN is deployed in the network provider's data centers, so that users get data from the provider's data center closest to them, while a reverse proxy is deployed in the website's central data center, where it is the first server a user request reaches. If the requested resource is cached on the reverse proxy, it is returned to the user directly.

Use distributed file system and distributed database system

Read-write separation splits the database from one server into two. As the business keeps growing, even this cannot meet demand, and a distributed database is needed. The same goes for the file system: a distributed file system is needed, as shown in the figure.

A distributed database is the last resort for splitting a website's database and is used only when a single table becomes very large. The more common way for websites to split databases is by business (business sub-databases): the databases of different businesses are deployed on different physical servers.
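The two splitting strategies can be contrasted in a few lines. This is a sketch under stated assumptions: the business names and database host names are hypothetical, and CRC32-modulo sharding is one simple partitioning scheme swapped in for illustration, not necessarily what a given distributed database uses.

```python
import zlib

# Hypothetical mapping: each business line owns its own database server.
BUSINESS_DATABASES = {
    "user": "db-user",
    "order": "db-order",
    "product": "db-product",
}

# Hypothetical shards for one very large table (e.g. orders).
ORDER_SHARDS = ["db-order-0", "db-order-1", "db-order-2", "db-order-3"]


def database_for_business(business: str) -> str:
    """Business sub-database: pick the server that owns this business's data."""
    return BUSINESS_DATABASES[business]


def shard_for_order(user_id: str) -> str:
    """Split one huge table across shards by a stable hash of the key."""
    # crc32 is stable across processes, unlike Python's built-in hash() for str.
    index = zlib.crc32(user_id.encode("utf-8")) % len(ORDER_SHARDS)
    return ORDER_SHARDS[index]


print(database_for_business("product"))  # db-product
print(shard_for_order("u42"))            # one of ORDER_SHARDS, always the same one
```

Business splitting needs only a lookup table, which is why it is tried first; sharding a single table forces every query on that table to carry the shard key, which is part of why it is kept as a last resort.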

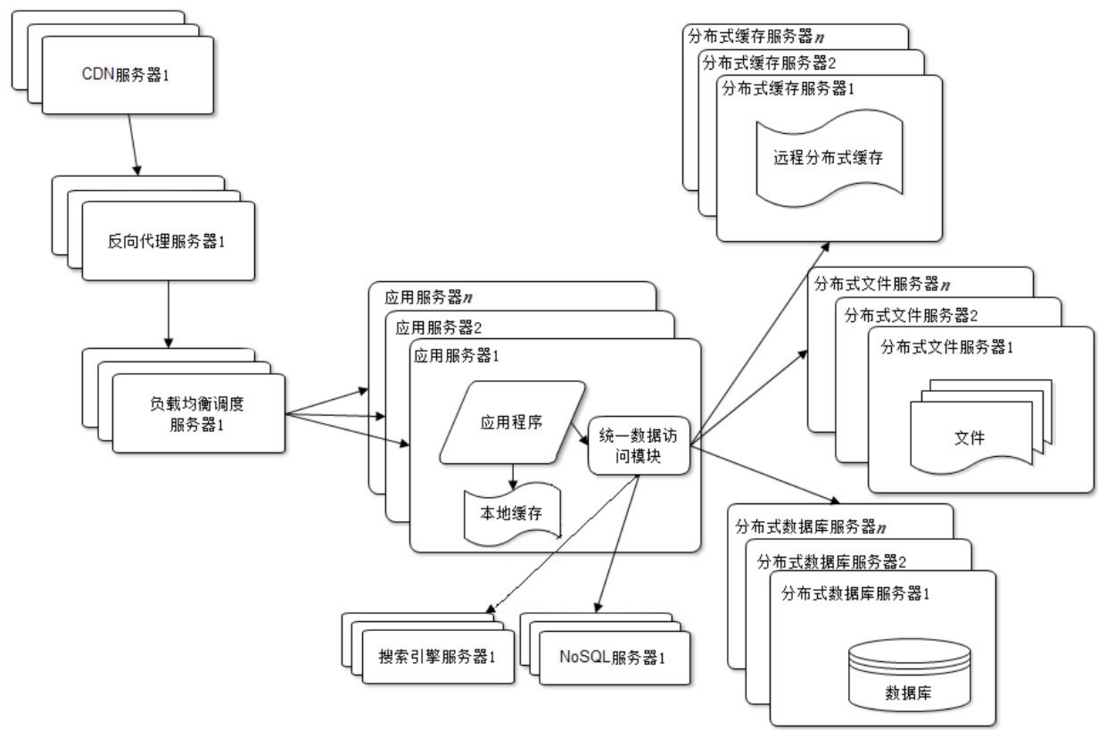

Use NoSQL and search engines

As the website business becomes more and more complex, the requirements for data storage and retrieval are becoming more and more complicated. The website needs to adopt some non-relational database technologies such as NoSQL and non-database query technologies such as search engines, as shown in the figure.

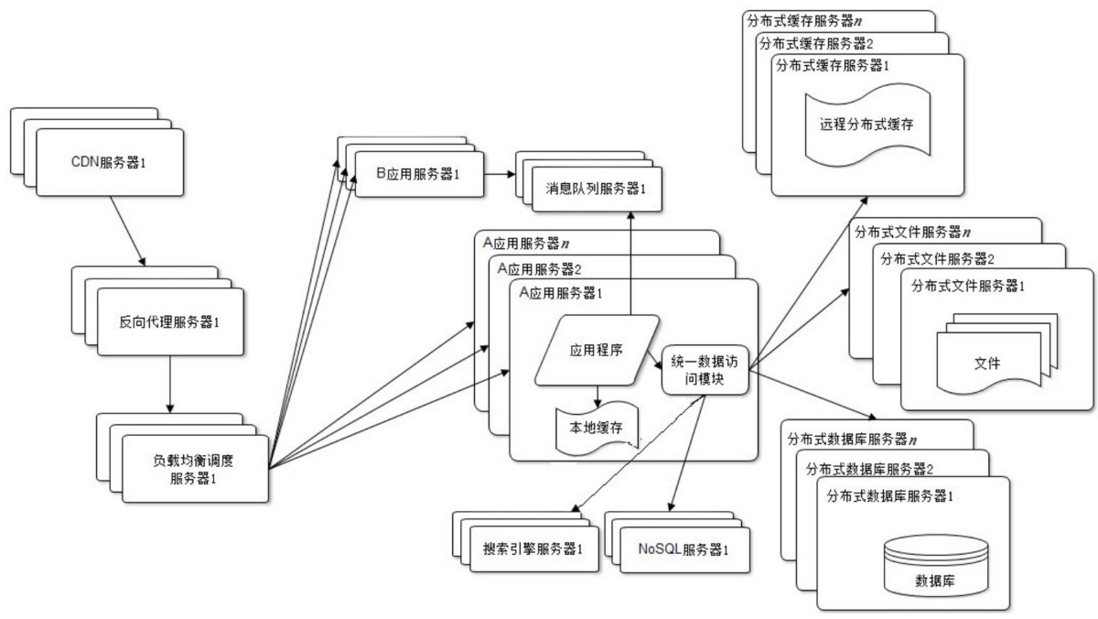

Business split

To cope with increasingly complex business scenarios, large websites divide and conquer, splitting the whole website business into separate product lines. Technically, the website is split into many different applications, each deployed and maintained independently. Applications can be linked through hyperlinks (the navigation links on the home page each point to a different application address), can distribute data through message queues, and, most commonly, can access the same data storage system, so that together they form a complete website system.

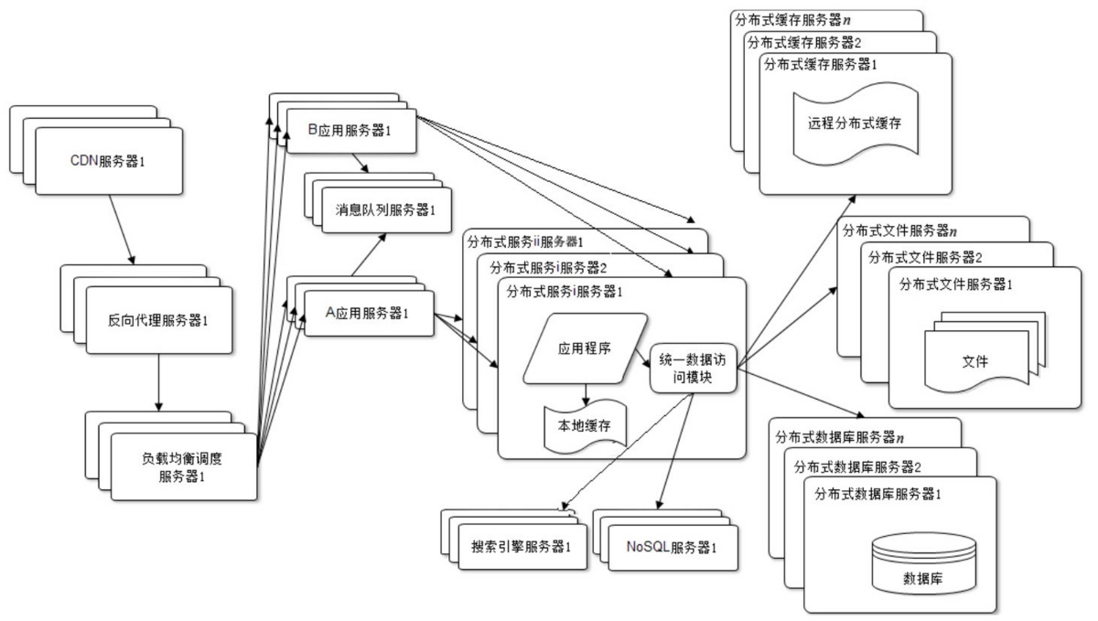

Distributed service

As business splits become smaller and smaller, storage systems become larger and larger, and the overall complexity of application systems increases exponentially, making deployment and maintenance more and more difficult.

Since every application system performs many of the same business operations, such as user management and product management, these shared services can be extracted and deployed independently. These reusable services connect to the database and provide common business services, while each application system only manages its user interface and calls the common business services through distributed service calls to complete specific business operations, as shown in the figure.

At this point in the evolution of large-website architecture, most of the technical problems have basically been solved.

Architecture patterns

To solve the problems and challenges faced by application systems, such as highly concurrent access, massive data processing and highly reliable operation, large Internet companies have proposed many solutions in practice to achieve technical architecture goals such as high performance, high availability, scalability, extensibility and security. These solutions have been reused by more and more companies and have gradually become architecture patterns.

Layering

Layering is one of the most common architecture patterns in enterprise application systems. The system is divided horizontally into several layers, each responsible for a relatively single concern, and the layers form a complete system through upper layers depending on and calling lower layers.

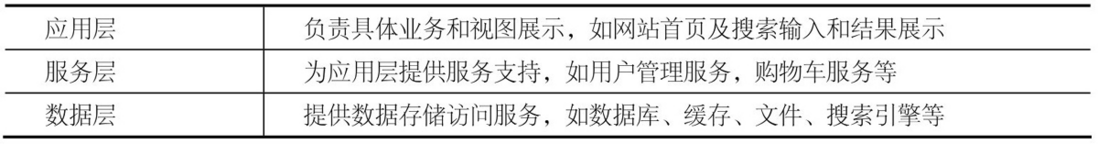

In the website architecture, the application system is usually divided into application layer, service layer, and data layer, as shown in the following figure.

Layering makes it easier to divide a huge software system into separate parts, which simplifies division of labor, collaborative development and maintenance. Each layer is fairly independent: as long as its calling interface stays unchanged, each layer can evolve on its own according to its specific needs without requiring other layers to adjust.

However, layered architecture also brings challenges: layer boundaries and interfaces must be planned carefully, and during development the constraints of the layered architecture must be strictly followed, with cross-layer calls (skipping the layer directly below) and reverse calls (a lower layer calling an upper one) prohibited. In practice, each layer of a large layered system can itself be divided into further layers.
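A toy sketch of the application, service and data layers, assuming a hypothetical user lookup. Each class holds a reference only to the layer directly below it, so there are no cross-layer or reverse calls, and the data layer could be swapped for a real database without touching the upper layers as long as `find_name` keeps its signature.

```python
class UserRepository:
    """Data layer: the only code that touches storage (a dict here)."""

    def __init__(self) -> None:
        self._rows = {1: "alice"}

    def find_name(self, user_id: int):
        return self._rows.get(user_id)


class UserService:
    """Service layer: business rules; depends only on the data layer."""

    def __init__(self, repo: UserRepository) -> None:
        self._repo = repo

    def display_name(self, user_id: int) -> str:
        name = self._repo.find_name(user_id)
        return name.title() if name else "Unknown user"


class UserController:
    """Application layer: formats responses; calls only the service layer."""

    def __init__(self, service: UserService) -> None:
        self._service = service

    def handle(self, user_id: int) -> str:
        return f"<h1>{self._service.display_name(user_id)}</h1>"


app = UserController(UserService(UserRepository()))
print(app.handle(1))  # <h1>Alice</h1>
```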

Layered architecture is logical: all three layers can be deployed on the same physical machine. However, as the website business grows, the layered modules must be deployed separately so that the website has more computing resources for its ever-growing traffic.

Segmentation

Layering is to segment the software in the horizontal direction, and segmentation is to segment the software in the vertical direction.

The larger the website, the more complex its functions and the more kinds of services and data processing it has. Separating these functions and services and packaging them into highly cohesive, loosely coupled modules helps software development and maintenance on the one hand; on the other, it makes it easier to deploy different modules in a distributed way, improving the website's concurrent processing capacity and its ability to add features.

Large websites may be segmented at a very fine granularity. At the application layer, for instance, different businesses such as shopping, forums, search and advertising are split into separate applications, each owned by an independent team and deployed on separate servers.

Distribution

For large websites, one purpose of layering and segmentation is to enable distributed deployment of the resulting modules: different modules are deployed on different servers and work together through remote calls. Distribution means more resources can be used to deliver the same function, so the website can handle more concurrent access and more data.

However, while distribution solves the problem of high concurrency, it also brings other problems. Typical ones include:

- Distribution means that service calls must go over the network, which can seriously hurt performance.

- The more servers there are, the greater the probability that one goes down. The resulting service unavailability may make many applications inaccessible and reduce the website's availability.

- Maintaining data consistency in a distributed environment is very difficult, and distributed transactions are also hard to guarantee.

- Dependencies between systems become intricate, making development, management and maintenance more difficult.

Therefore, the distributed design should be based on the actual situation. Commonly used distributed solutions include: distributed services, distributed databases, distributed computing, distributed configurations, distributed locks, and distributed file systems.

Clustering

Distribution deploys the layered and segmented modules independently, but modules that users access heavily must also have their servers clustered: multiple servers deploy the same application to form a cluster, and together they serve external requests through a load balancer.

Because the server cluster has more servers providing the same service, it can provide better concurrency. When more users access it, you only need to add new machines to the cluster. At the same time, when a server fails, the load balancing device or the system's failover mechanism will forward the request to other servers in the cluster to improve the availability of the system.
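The failover behavior can be sketched as a balancer that tries cluster members in order and skips any that refuse the connection. Treating `ConnectionError` as "server down" and using plain functions as servers are assumptions for illustration; this simple priority order also does not spread load, so real balancers combine failover with a scheduling policy and health checks.

```python
class FailoverBalancer:
    """Tries servers in order and skips any that fail, forwarding the
    request to the next member of the cluster."""

    def __init__(self, servers):
        # servers: mapping of server name -> callable that handles a request
        self.servers = servers

    def handle(self, request):
        errors = []
        for name, handler in self.servers.items():
            try:
                return name, handler(request)
            except ConnectionError as exc:
                errors.append(f"{name}: {exc}")  # node down: try the next one
        raise RuntimeError("all servers failed: " + "; ".join(errors))


def healthy(request):
    return f"ok:{request}"


def down(request):
    raise ConnectionError("connection refused")


lb = FailoverBalancer({"app1": down, "app2": healthy})
print(lb.handle("GET /"))  # ('app2', 'ok:GET /')
```

Retrying on another server is safe for idempotent requests such as reads; retrying a write that may already have been applied needs more care.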

Cache

Caching means storing data in the location closest to where it is computed in order to speed up processing. Caching is the first resort for improving software performance, and in complex software designs caches are almost everywhere: reverse proxies, Redis (without persistence), CDNs and so on.

There are two prerequisites for using a cache. First, data access hotspots must be uneven: some data is accessed much more frequently, and that data belongs in the cache. Second, the data must remain valid for some time rather than expiring quickly; otherwise the cached copy becomes stale (dirty) and results become incorrect.
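The second prerequisite is commonly handled by giving each cached entry a time-to-live (TTL). The sketch below assumes a fixed TTL per cache and accepts an injectable clock so expiry can be demonstrated without sleeping; both are illustrative choices, not something the article specifies.

```python
import time


class TTLCache:
    """Each entry is valid for `ttl` seconds; an expired entry is treated as
    a miss so stale data is not returned."""

    def __init__(self, ttl: float, clock=time.monotonic) -> None:
        self.ttl = ttl
        self._clock = clock
        self._data = {}

    def put(self, key, value) -> None:
        self._data[key] = (value, self._clock() + self.ttl)

    def get(self, key):
        item = self._data.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self._clock() >= expires_at:
            del self._data[key]  # expired: drop it and report a miss
            return None
        return value


now = [0.0]  # fake clock for the example
prices = TTLCache(ttl=60, clock=lambda: now[0])
prices.put("sku-1", 10)
print(prices.get("sku-1"))  # 10
now[0] = 61.0
print(prices.get("sku-1"))  # None
```

The TTL should be shorter than the period over which the data actually changes; if data changes faster than any useful TTL, it fails the second prerequisite and caching it will mostly serve stale results.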

Besides speeding up data access, caching also reduces the load on back-end applications and data storage. In fact, website databases are almost always sized for load on the assumption that a cache sits in front of them.

Asynchronous processing

An important goal of application system design is reducing coupling. Besides the layering, segmentation and distribution described above, another important means of decoupling is asynchrony: businesses do not pass messages through synchronous calls; instead, a business operation is split into several stages that cooperate by sharing data and executing asynchronously.

An asynchronous architecture is a typical producer-consumer model: producers and consumers do not call each other directly, and as long as the shared data structure stays unchanged, each side can change its own functionality freely without affecting the other, which makes it very easy to extend the website with new features. Using asynchronous message queues has the following advantages:

- Improves system availability. If the consumer server fails, data accumulates in the message queue server while the producer server keeps processing business requests, so the system as a whole keeps running normally. Once the consumer server recovers, it resumes processing the data in the queue.

- Speeds up website responses. The producer server at the front of the business flow writes data into the message queue after handling a request and can return immediately without waiting for the consumer server to process it, which reduces response latency.

- Smooths out concurrent access peaks. Users visit the website randomly, so traffic has peaks and valleys. A message queue absorbs sudden bursts of requests and lets the consumer server work through them in turn, so the burst does not put excessive pressure on the whole website.
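The producer-consumer model and the peak-smoothing effect can be sketched in-process with Python's `queue.Queue` and a worker thread. This is only an analogy: an in-memory queue stands in for a message queue server and does not survive a crash, so it illustrates the faster responses and peak smoothing but not the availability benefit.

```python
import queue
import threading
import time


def consumer(q: "queue.Queue", processed: list) -> None:
    """Drain the queue at its own pace; a None item means shut down."""
    while True:
        item = q.get()
        if item is None:
            q.task_done()
            break
        time.sleep(0.001)  # simulate slow back-end work
        processed.append(item)
        q.task_done()


q: "queue.Queue" = queue.Queue()
processed: list = []
worker = threading.Thread(target=consumer, args=(q, processed))
worker.start()

# Producer: a burst of 100 requests is enqueued at once; the producer
# returns immediately instead of waiting for each item to be processed.
start = time.perf_counter()
for order_id in range(100):
    q.put(order_id)
enqueue_time = time.perf_counter() - start

q.put(None)  # ask the consumer to stop once the backlog is drained
worker.join()
print(f"enqueued in {enqueue_time:.4f}s, processed {len(processed)} items")
```

Enqueuing the burst takes far less time than processing it; the consumer works through the backlog at its own rate, which is the peak-smoothing effect described above.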

However, it should be noted that using asynchronous methods to process business may have an impact on user experience and business processes, and requires support in website product design.

Redundancy

A website needs to run 7×24, but any server may fail at any time; at large scale, some server going down is inevitable. To keep the website serving without losing data when a server goes down, a degree of server redundancy and redundant data backups are required, so that when one server fails, its services and data access can be moved to other machines.

Even services with low traffic and load must be deployed on at least two servers forming a cluster, to achieve high availability through redundancy. Besides regular archiving and cold backups, the database also needs master-slave replication, synchronized in real time, to provide a hot backup.

Automation and Security

At present, automation in application system architecture focuses mainly on release and operations, including automated release, code management, testing, security monitoring, deployment, monitoring, alerting, failover and recovery, degradation, and resource allocation.

Many patterns have also accumulated in security architecture: identity authentication through passwords and SMS verification codes; encrypted network communication for operations such as login and transactions, and encryption of sensitive data stored on the website's servers, such as user information; CAPTCHAs to stop bots from abusing network resources to attack the website; encoding and escaping to counter common attacks such as XSS and SQL injection; filtering of spam and sensitive information; and risk control for important operations such as transactions and transfers, based on transaction patterns and transaction details.
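Two of these measures can be shown with the Python standard library alone: storing passwords as salted, slow hashes (PBKDF2-HMAC-SHA256 here), and binding user input as query parameters so SQL injection cannot alter the statement. The table layout and the iteration count are illustrative assumptions; check current guidance for appropriate work factors.

```python
import hashlib
import hmac
import os
import sqlite3
from typing import Optional, Tuple


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Derive a salted PBKDF2-HMAC-SHA256 hash; the plain password is never stored."""
    salt = salt or os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 200_000)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)  # constant-time comparison


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, salt BLOB, pw BLOB)")
salt, pw = hash_password("s3cret")
conn.execute("INSERT INTO users VALUES (?, ?, ?)", ("alice", salt, pw))


def find_user(name: str):
    # Parameterized query: input is bound as data, never spliced into the SQL,
    # so a payload like "alice' OR '1'='1" cannot change the query's meaning.
    return conn.execute("SELECT salt, pw FROM users WHERE name = ?", (name,)).fetchone()


row = find_user("alice")
print(verify_password("s3cret", row[0], row[1]))  # True
```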

Architecture core elements

Regarding what is an architecture, Wikipedia defines it like this: "an abstract description of the overall structure and components of the software, used to guide the design of various aspects of large-scale software systems."

In general, besides functional requirements, software architecture must address five elements: performance, availability, scalability, extensibility and security.

Performance

Performance is an important indicator for a website, and every software architecture design must consider possible performance issues. Because performance problems can arise almost anywhere, there are many ways to optimize website performance. The main ones can be summarized as follows:

- Browser side: browser caching, page compression, sensible page layout, fewer cookies sent, etc.

- CDN and reverse proxy

- Local cache and distributed cache

- Asynchronous message queues

- Application layer: server clusters

- Code layer: multithreading, better memory management, etc.

- Data layer: indexes, caching, SQL optimization, etc., plus appropriate use of NoSQL databases

Availability

The main means of achieving high availability is redundancy. Applications are deployed on multiple servers that serve requests simultaneously, and data is stored on multiple servers that back each other up, so that the failure of any one server neither affects the overall availability of the application nor causes data loss.

For application servers, multiple servers form a cluster behind a load balancer. If any server goes down, requests simply need to be switched to another server, provided that the application servers do not store request session state locally.
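A sketch of the "no local session state" prerequisite: sessions live in a store shared by all application servers, so any server can handle any request. `SharedSessionStore` is a hypothetical in-memory stand-in for an external store such as a distributed cache; in a real deployment it would be a separate, itself redundant, service.

```python
import uuid


class SharedSessionStore:
    """Hypothetical stand-in for a central session store that every
    application server can reach."""

    def __init__(self) -> None:
        self._sessions = {}

    def create(self, data: dict) -> str:
        session_id = uuid.uuid4().hex
        self._sessions[session_id] = dict(data)
        return session_id

    def load(self, session_id: str):
        return self._sessions.get(session_id)


class AppServer:
    """Stateless: keeps no session data itself, only looks it up per request."""

    def __init__(self, name: str, store: SharedSessionStore) -> None:
        self.name = name
        self.store = store

    def whoami(self, session_id: str) -> str:
        session = self.store.load(session_id)
        return session["user"] if session else "anonymous"


store = SharedSessionStore()
app1, app2 = AppServer("app1", store), AppServer("app2", store)
sid = store.create({"user": "alice"})
# If app1 goes down, the load balancer can send the next request to app2,
# which still finds the session because it lives outside either server.
print(app2.whoami(sid))  # alice
```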

For storage servers, data needs to be backed up in real time. When the server is down, data access needs to be transferred to an available server, and data recovery is performed to ensure that the data is still available even when the server is down.

In addition to the operating environment, the high availability of the website also requires the quality assurance of the software development process. Through pre-release verification, automated testing, automated release, gray release and other means, reduce the possibility of introducing faults into the online environment.

Scalability

The main criteria for measuring the scalability of an architecture are: whether a cluster can be built from multiple servers, whether it is easy to add new servers to the cluster, whether a new server can provide service indistinguishable from the original ones, and whether there is a limit to the total number of servers the cluster can hold.

For application server clusters, servers can be added continuously by using appropriate load balancing equipment. For cache server clusters, an efficient cache routing algorithm is needed so that adding a server does not make a large share of cached keys route to a different server and miss. Relational databases are hard to scale into large clusters, so the cluster scaling solution for relational databases has to be implemented outside the database, combining multiple database servers into a cluster by means such as routing and partitioning. Most NoSQL database products, having been designed for massive data, usually support scalability very well.
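The article does not name a cache routing algorithm; consistent hashing is the widely used choice and is swapped in here for illustration. The sketch compares it with naive `hash % N` routing when a fourth cache server is added. Server names, the number of virtual nodes, and the use of MD5 (for key distribution only, not security) are assumptions.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Maps keys to cache servers on a hash ring; adding a server only
    remaps the keys that fall on its new arcs of the ring."""

    def __init__(self, servers=(), replicas: int = 100) -> None:
        self.replicas = replicas  # virtual nodes per server, for balance
        self._ring = []           # sorted hash positions
        self._owner = {}          # hash position -> server name
        for server in servers:
            self.add_server(server)

    @staticmethod
    def _hash(value: str) -> int:
        return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)

    def add_server(self, server: str) -> None:
        for i in range(self.replicas):
            pos = self._hash(f"{server}#{i}")
            bisect.insort(self._ring, pos)
            self._owner[pos] = server

    def get_server(self, key: str) -> str:
        pos = self._hash(key)
        idx = bisect.bisect(self._ring, pos) % len(self._ring)  # wrap around
        return self._owner[self._ring[idx]]


def modulo_server(key: str, servers: list) -> str:
    """Naive routing for comparison: hash(key) mod number of servers."""
    return servers[ConsistentHashRing._hash(key) % len(servers)]


keys = [f"user:{i}" for i in range(10_000)]
ring = ConsistentHashRing(["cache1", "cache2", "cache3"])
before = {k: ring.get_server(k) for k in keys}
ring.add_server("cache4")
moved = sum(before[k] != ring.get_server(k) for k in keys) / len(keys)

three, four = ["cache1", "cache2", "cache3"], ["cache1", "cache2", "cache3", "cache4"]
moved_mod = sum(modulo_server(k, three) != modulo_server(k, four) for k in keys) / len(keys)
print(f"consistent hashing remapped {moved:.0%}, modulo remapped {moved_mod:.0%}")
```

With consistent hashing, roughly the new server's share of keys (about a quarter here) moves, and every moved key moves to the new server; with modulo routing, about three quarters of keys change servers, which would turn most of the cache into misses at once.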

Extensibility

The main criterion for measuring the extensibility of an architecture is whether coupling between different products is low: when a new business product is added to the website, can it be added transparently, without affecting existing products, so that it launches with no or few changes to existing business functions?

The main means of building an extensible website architecture are event-driven architecture and distributed services.

Event-driven architecture is usually implemented in a website by using a message queue, which constructs user requests and other business events into messages and publishes them to the message queue, and the message processor as a consumer obtains the message from the message queue for processing. In this way, message generation and message processing are separated, and new message producer tasks or new message consumer tasks can be added transparently.

Distributed services separate business logic from reusable services, which are called through a distributed service framework. New products implement their own business logic by calling the reusable services, with no impact on existing products. When a reusable service is upgraded or changed, it can offer multiple versions side by side so that applications upgrade transparently, without being forced to change in step.

Security

The security architecture of a website protects it from malicious access and attacks and protects its important data from theft. A website's security architecture is measured by whether it has reliable countermeasures against the various existing and potential methods of attack and theft.
