Kubernetes is a powerful tool for building highly scalable systems. As a result, many companies have adopted it, or plan to, for orchestrating their production services. Unfortunately, like most powerful technologies, Kubernetes is also complex. We have compiled the following list of best practices to help you run Kubernetes in production.

Best Practices for Containers

Kubernetes provides a way to orchestrate containerized services, so if your containers are not in order, your cluster will be in poor shape from the start. Follow the tips below to get started.

1. Use minimal base images

what: A container is an application stack built into a system image: everything from the business logic down to the operating system is packaged together. Minimal images strip out as much of the OS as possible and force you to explicitly add back any components you need.

why: Including only the software you actually use in your container has both performance and security benefits: fewer bytes on disk, less network traffic when copying the image, and fewer tools available to potential attackers.

how: Alpine Linux is a popular choice and has extensive support.

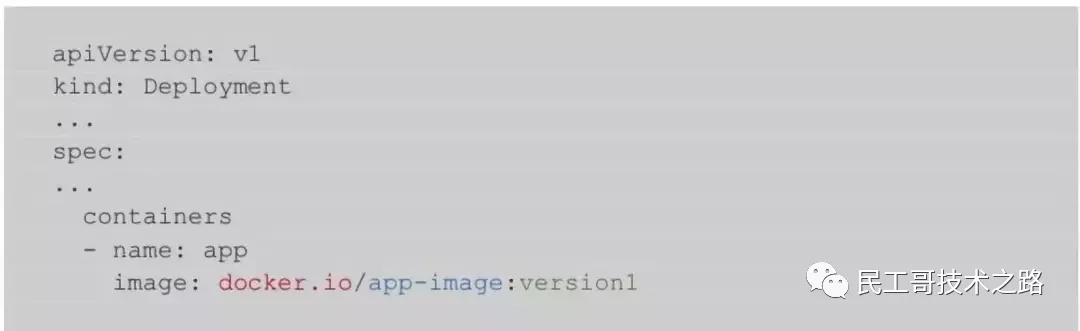

2. Use a registry that offers the best uptime

what: A registry is a repository of images that makes them available for download and launch. In your deployment configuration, you specify where to pull each image from, in the form `<registry>/<remote name>:<tag>`.

why: Your cluster needs images in order to run anything.

how: Most cloud providers offer private image registry services: Google offers Google Container Registry, AWS offers Amazon ECR, and Microsoft offers Azure Container Registry.

Do your homework and choose the private registry that offers the best uptime. Because your cluster relies on the registry to launch newer versions of your software, any registry downtime will block updates to running services.
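To make the `<registry>/<remote name>:<tag>` form concrete, here is a minimal Python sketch that splits an image reference into its three parts. It is deliberately simplified: it assumes Docker Hub (`docker.io`) as the default registry and `latest` as the default tag, and it ignores digests (`@sha256:...`) and Docker Hub's implicit `library/` prefix. The image names in the example are hypothetical.

```python
from dataclasses import dataclass

DEFAULT_REGISTRY = "docker.io"  # assumption: Docker Hub, as the Docker CLI defaults to
DEFAULT_TAG = "latest"          # assumption: the tag used when none is given


@dataclass(frozen=True)
class ImageRef:
    registry: str
    name: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split '<registry>/<remote name>:<tag>' into parts (simplified: no digests)."""
    # The tag is whatever follows the last ':' that comes after the last '/'
    # (a ':' before the last '/' belongs to a registry port instead).
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        path, tag = ref[:colon], ref[colon + 1:]
    else:
        path, tag = ref, DEFAULT_TAG
    # The first path component is a registry host only if it looks like one.
    first, sep, rest = path.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return ImageRef(first, rest, tag)
    return ImageRef(DEFAULT_REGISTRY, path, tag)


print(parse_image_ref("gcr.io/my-project/api:1.4.2"))
print(parse_image_ref("localhost:5000/app"))
print(parse_image_ref("alpine:3.19"))
```

The registry part is what determines which service's uptime your deployments depend on.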

3. Use ImagePullSecrets to authenticate with your registry

what: ImagePullSecrets are Kubernetes objects that let your cluster authenticate with your registry, so the registry can control who is able to download your images.

why: If your registry is exposed enough for your cluster to pull images from it, it is exposed enough to need authentication.

how: The Kubernetes website has a good walkthrough on configuring ImagePullSecrets, which uses Docker as the example registry.
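As a rough illustration of what that walkthrough produces, here is a minimal Python sketch that builds a `kubernetes.io/dockerconfigjson` Secret and a Pod that references it through `imagePullSecrets`. The objects are plain dicts printed as JSON, which `kubectl apply -f -` accepts. The registry host, credentials, and object names are hypothetical placeholders; in practice, credentials should come from a secure source rather than being hard-coded.

```python
import base64
import json


def b64(text: str) -> str:
    return base64.b64encode(text.encode()).decode()


def registry_secret(name: str, registry: str, username: str, password: str) -> dict:
    """Build a kubernetes.io/dockerconfigjson Secret for pulling from `registry`."""
    docker_config = {
        "auths": {
            registry: {
                "username": username,
                "password": password,
                # "auth" is base64("username:password"), as in ~/.docker/config.json
                "auth": b64(f"{username}:{password}"),
            }
        }
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/dockerconfigjson",
        # Values under "data" must themselves be base64-encoded.
        "data": {".dockerconfigjson": b64(json.dumps(docker_config))},
    }


def pod_using_secret(pod_name: str, image: str, secret_name: str) -> dict:
    """Build a Pod that tells the kubelet which Secret to use when pulling `image`."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{"name": pod_name, "image": image}],
            "imagePullSecrets": [{"name": secret_name}],
        },
    }


if __name__ == "__main__":
    secret = registry_secret("regcred", "registry.example.com", "ci-bot", "s3cr3t")
    pod = pod_using_secret("api", "registry.example.com/team/api:1.0", "regcred")
    # e.g. python make_manifests.py | kubectl apply -f -
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [secret, pod]}, indent=2))
```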

Manage your cluster

Microservices are messy by nature. Many of their benefits come from enforcing a separation of duties at the service level, which effectively creates abstractions for the various components of your backend. Good examples include running databases separately from business logic, running separate development and production versions of the software, or separating out horizontally scalable processes.

The downside of having different services handle different responsibilities is that they cannot all be treated the same way. Fortunately, Kubernetes gives you many tools for dealing with this.

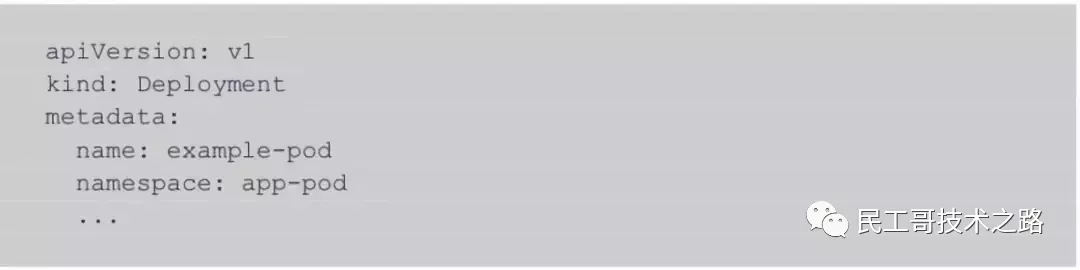

4. Use namespaces to isolate environments

what: Namespaces are the most basic and most powerful grouping mechanism in Kubernetes. They work almost like virtual clusters. By default, most objects in Kubernetes are limited to affecting one namespace at a time.

why: Most objects are namespace-scoped, so you will be using namespaces regardless. Because they isolate objects from one another, they are well suited to separating environments with different purposes, such as a production environment that serves users and one used strictly for testing. They are also useful for separating the different service stacks that support a single application, such as keeping a security solution's workloads apart from your own application. A good rule of thumb is to divide namespaces by resource allocation: if two groups of microservices will require different resource pools, put them in separate namespaces.

how: The namespace is part of the metadata of most object types:

Always create your own namespaces rather than relying on the "default" namespace. Kubernetes defaults are typically optimized for minimal developer friction, which often means forgoing even the most basic security measures.

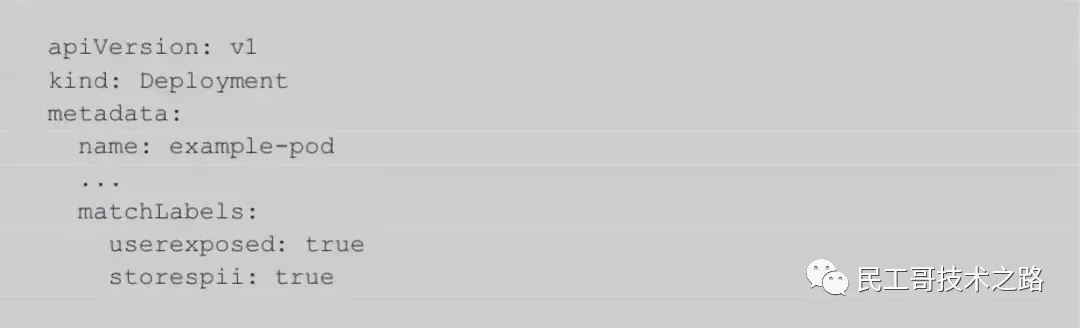
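As a text counterpart to the namespace example, here is a minimal Python sketch that creates a Namespace object and places a namespaced object in it by setting `metadata.namespace`. The output is JSON for `kubectl apply -f -`. The `staging` namespace and the ConfigMap are hypothetical examples.

```python
import json


def namespace(name: str) -> dict:
    """A Namespace object; Namespaces themselves are cluster-scoped."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


def in_namespace(obj: dict, ns: str) -> dict:
    """Return a copy of a namespaced object with metadata.namespace set to `ns`."""
    metadata = {**obj.get("metadata", {}), "namespace": ns}
    return {**obj, "metadata": metadata}


config = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "app-config"},
    "data": {"LOG_LEVEL": "info"},
}
manifests = [namespace("staging"), in_namespace(config, "staging")]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

Once applied, objects in that namespace are listed with, for example, `kubectl get configmaps --namespace staging`.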

5. Organize your cluster with labels

what: Labels are the most basic and extensible way to organize your cluster. They let you create arbitrary key:value pairs that distinguish Kubernetes objects from one another. For example, you could create a label key that separates services handling sensitive information from services that do not.

why: As mentioned earlier, Kubernetes uses labels for organization, but more specifically, it uses them for selection. Whenever you want a Kubernetes object to refer to a set of objects in a namespace (for example, telling a network policy which services are allowed to communicate with each other), you do it through their labels. Because labels are so open-ended, do your best to keep things simple and create labels only where you actually need to select on them.

how: Labels are a simple metadata field that you can add to your YAML files:

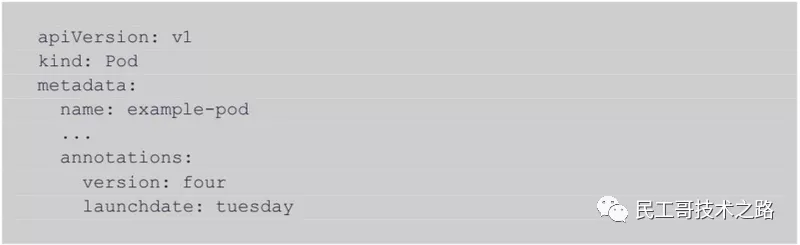
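To show what "used for selection" means, here is a minimal Python sketch of equality-based label matching, the semantics of a `matchLabels` selector: an object is selected when it carries every key=value pair in the selector. It is an illustration, not the Kubernetes implementation, and it omits set-based expressions. The `data-sensitivity` label and the Pod names are hypothetical.

```python
# Hypothetical Pod metadata; "data-sensitivity" is an illustrative label, not a convention.
pods = [
    {"metadata": {"name": "payments-api", "labels": {"app": "payments", "data-sensitivity": "high"}}},
    {"metadata": {"name": "catalog-api", "labels": {"app": "catalog", "data-sensitivity": "low"}}},
    {"metadata": {"name": "debug-shell"}},  # no labels at all
]


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Every key=value pair in the selector must be present in the labels.
    An empty selector matches everything, like an empty podSelector in a NetworkPolicy."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(objects: list[dict], selector: dict[str, str]) -> list[str]:
    """Names of the objects whose labels satisfy the selector."""
    return [
        obj["metadata"]["name"]
        for obj in objects
        if matches(selector, obj["metadata"].get("labels", {}))
    ]


print(select(pods, {"data-sensitivity": "high"}))  # ['payments-api']
```

The same query against a live cluster would be `kubectl get pods -l data-sensitivity=high`.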

6. Use annotations to track important system changes

what: Annotations are arbitrary key-value metadata that you can attach to a pod (or most other objects), much like labels. However, Kubernetes does not use annotations to identify or select objects, so the rules about what you can and cannot put in them are fairly loose, and they cannot be used for selection.

why: They help you keep track of important characteristics of a containerized application, such as its version number or the date and time it was first launched. Within Kubernetes itself, annotations are an inert construct, but when used to track important system changes, they can become an asset for development and operations teams.

how: Annotations are a metadata field similar to labels.
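A minimal Python sketch of that idea adds annotations recording a version number and a first-launch timestamp to an object's metadata. It shows only a metadata fragment. The `example.com/...` keys, the `api` name, and the values are hypothetical. Annotation values must be strings, which is why the timestamp is serialized with `isoformat()`.

```python
import json
from datetime import datetime, timezone


def annotate(obj: dict, key: str, value: str) -> dict:
    """Return a copy of `obj` with one more annotation (values must be strings)."""
    if not isinstance(value, str):
        raise TypeError("annotation values must be strings")
    metadata = dict(obj.get("metadata", {}))
    metadata["annotations"] = {**metadata.get("annotations", {}), key: value}
    return {**obj, "metadata": metadata}


# Metadata-only fragment of a hypothetical Deployment named "api".
deployment = {"metadata": {"name": "api", "labels": {"app": "api"}}}
deployment = annotate(deployment, "example.com/version", "1.4.2")
deployment = annotate(
    deployment,
    "example.com/first-launched-at",
    datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc).isoformat(),
)
print(json.dumps(deployment["metadata"], indent=2))
```

On a running object, the equivalent one-off change is `kubectl annotate deployment api example.com/version=1.4.2`.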

Make your cluster more secure

So, you have set up your cluster and organized it the way you want. What now? The next step is to add some security. You could spend a lifetime studying and still not discover every way someone could break into your system. A blog post has far less room than a lifetime, so you will have to settle for a few strong recommendations.

7. Use RBAC to implement access control

what: RBAC (Role-Based Access Control) allows you to control who can view or modify different aspects of the cluster.

why: If you want to follow the principle of least privilege, you need to set up RBAC to limit the operations that cluster users and deployments can perform.

how: If you are setting up your own cluster (that is, not using a hosted Kubernetes service), make sure you start kube-apiserver with "--authorization-mode=Node,RBAC". If you are using a hosted Kubernetes instance, you can check whether RBAC is enabled by querying the command used to start kube-apiserver. The only generic way to check is to look for "--authorization-mode..." in the output of kubectl cluster-info dump.

Once RBAC is turned on, you will need to change the default permissions to suit your needs. The Kubernetes project site provides a walkthrough on setting up Roles and RoleBindings here. Managed Kubernetes services require custom steps to enable RBAC; see Google's guide for GKE or Microsoft's guide for AKS.
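As a small, hedged example of least privilege, this Python sketch builds a namespaced Role that can only read the listed resources, and a RoleBinding that grants it to one user. The output is JSON for `kubectl apply -f -`. The role name, the user `jane`, and the `staging` namespace are hypothetical.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def read_only_role(name: str, namespace: str, resources: list[str]) -> dict:
    """A Role allowing only read verbs on the given core-group resources."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group (pods, services, ...)
            "resources": resources,
            "verbs": ["get", "list", "watch"],  # read-only: no create/update/delete
        }],
    }


def bind_role_to_user(role: str, user: str, namespace: str) -> dict:
    """A RoleBinding granting `role` to `user`, effective only in `namespace`."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role}-{user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role, "apiGroup": RBAC_GROUP},
    }


items = [
    read_only_role("pod-reader", "staging", ["pods"]),
    bind_role_to_user("pod-reader", "jane", "staging"),
]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Starting from narrow Roles like this and widening them only on demand is easier than trimming broad defaults later.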

8. Use Pod Security Policies to prevent risky behaviors

what: A PodSecurityPolicy is a resource, much like a Deployment or a Role, that you create and update with kubectl in the same way. Each one holds a set of flags you can use to prevent specific unsafe behaviors in your cluster.

why: If the people who created Kubernetes thought restricting these behaviors was important enough to create a special object for it, then it is important.

how: Getting them to work can be frustrating. I recommend getting RBAC up and running first, and then checking out the Kubernetes project's guide here. In my opinion, the most important uses are preventing privileged containers and write access to the host file system, because these are some of the leakier parts of the container abstraction. Note that PodSecurityPolicy was deprecated in Kubernetes 1.21 and removed in 1.25; on current clusters, the built-in Pod Security Admission controller fills this role.
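PodSecurityPolicy objects are removed in Kubernetes 1.25 and later, so a PSP manifest would date quickly. As an alternative, here is a minimal Python sketch of the check itself. It flags the two behaviors singled out above (privileged containers and writable hostPath mounts) in a Pod spec, which you might run as a pre-deployment lint. This is a hypothetical helper, not a Kubernetes API, and for brevity it inspects only regular `containers`.

```python
def risky_settings(pod_spec: dict) -> list[str]:
    """Flag privileged containers and writable hostPath mounts in a Pod spec."""
    problems = []
    # Names of volumes that map a path from the host node into the Pod.
    host_volumes = {v["name"] for v in pod_spec.get("volumes", []) if "hostPath" in v}
    # Simplification: initContainers and ephemeral containers are not checked.
    for container in pod_spec.get("containers", []):
        name = container["name"]
        if container.get("securityContext", {}).get("privileged", False):
            problems.append(f"{name}: privileged container")
        for mount in container.get("volumeMounts", []):
            if mount["name"] in host_volumes and not mount.get("readOnly", False):
                problems.append(f"{name}: writable hostPath mount at {mount['mountPath']}")
    return problems


# Hypothetical Pod spec that violates both rules.
spec = {
    "volumes": [{"name": "host-logs", "hostPath": {"path": "/var/log"}}],
    "containers": [{
        "name": "agent",
        "image": "registry.example.com/agent:1.0",
        "securityContext": {"privileged": True},
        "volumeMounts": [{"name": "host-logs", "mountPath": "/host/var/log"}],
    }],
}
print(risky_settings(spec))
```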

9. Use network policies to implement network controls and firewalls

what: A network policy is an object that lets you explicitly declare which traffic is permitted; Kubernetes then blocks all other traffic to the selected pods.

why: Limiting network traffic in your cluster is a basic and important security measure. By default, Kubernetes allows open communication between all services. Leaving this "open by default" configuration in place means an Internet-facing service is only one hop away from a database that stores sensitive information.

how: There is a well-written article on this; see here for details.
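A common starting point, sketched here in Python, is a default-deny ingress policy for a namespace plus narrow allow rules. The example lets only pods labeled `app: api` reach pods labeled `app: db` on TCP 5432. The labels, port, and `prod` namespace are hypothetical. Egress is left unrestricted. These policies are only enforced if your cluster's network plugin supports NetworkPolicy.

```python
import json

NETPOL_VERSION = "networking.k8s.io/v1"


def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no ingress traffic to any of them."""
    return {
        "apiVersion": NETPOL_VERSION,
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},  # {} selects all pods
    }


def allow_ingress(namespace: str, name: str, target: dict, source: dict, port: int) -> dict:
    """Allow TCP traffic on `port` to pods matching `target` from pods matching `source`."""
    return {
        "apiVersion": NETPOL_VERSION,
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": source}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


items = [
    default_deny_ingress("prod"),
    allow_ingress("prod", "api-to-db", {"app": "db"}, {"app": "api"}, 5432),
]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```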

10. Use Secrets to store and manage necessary sensitive information

what: Secrets are how you store sensitive data in Kubernetes, including passwords, certificates, and tokens.

why: Whether you are implementing TLS or restricting access, your services will likely need to authenticate with each other, with third-party services, or with your users.

how: The Kubernetes project provides guidance here. One key recommendation: avoid loading secrets as environment variables, because having confidential data in your environment is generally unsafe. Instead, mount the secret into your container as a read-only volume; you can find an example in the "Using Secrets" documentation.
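Here is a minimal Python sketch of the read-only-volume approach. It builds an Opaque Secret and a Pod that mounts it at a path instead of exposing it through environment variables. Each key in the Secret appears as a file under the mount path. The names, image, and mount path are hypothetical. Note that base64 in the `data` field is an encoding, not encryption.

```python
import base64
import json


def opaque_secret(name: str, namespace: str, values: dict[str, str]) -> dict:
    """An Opaque Secret; every value is base64-encoded as the API requires."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {k: base64.b64encode(v.encode()).decode() for k, v in values.items()},
    }


def pod_with_secret_volume(name: str, namespace: str, image: str,
                           secret_name: str, mount_path: str) -> dict:
    """A Pod that mounts the Secret as a read-only volume (no env vars involved)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "volumeMounts": [{"name": "secret-vol", "mountPath": mount_path, "readOnly": True}],
            }],
            "volumes": [{"name": "secret-vol", "secret": {"secretName": secret_name}}],
        },
    }


items = [
    opaque_secret("db-creds", "prod", {"password": "s3cr3t"}),
    pod_with_secret_volume("api", "prod", "registry.example.com/api:1.0", "db-creds", "/etc/db-creds"),
]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

The application would then read `/etc/db-creds/password` at startup.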

11. Use image scanning to identify and fix image vulnerabilities

what: Scanners inspect the components installed in your images, everything from the operating system to the application stack. They are extremely useful for finding out which vulnerabilities exist in the software versions your images contain.

why: Vulnerabilities are discovered in popular open-source packages all the time. Some famous examples are Heartbleed and Shellshock. You will want to know where such vulnerabilities exist in your system so that you know which images need updating.

how: Scanners are a fairly common piece of infrastructure, and most cloud providers offer one. If you want to host something yourself, the open-source Clair project is a popular choice.
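At its core, the matching step a scanner performs compares each installed package version with the first version that fixes a known advisory. The following toy Python sketch shows only that comparison. It assumes plain dotted numeric versions. The package inventory and advisory IDs are entirely hypothetical. Real scanners such as Clair also extract the inventory from image layers and handle distribution-specific version schemes.

```python
from typing import NamedTuple


class Advisory(NamedTuple):
    package: str
    fixed_in: tuple[int, ...]  # first version that contains the fix
    advisory_id: str


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted numeric version like '1.2.3' into (1, 2, 3) for comparison."""
    return tuple(int(part) for part in version.split("."))


def scan(installed: dict[str, str], advisories: list[Advisory]) -> list[str]:
    """Report every installed package older than the version that fixes an advisory."""
    findings = []
    for adv in advisories:
        version = installed.get(adv.package)
        if version is not None and parse_version(version) < adv.fixed_in:
            findings.append(f"{adv.package} {version}: {adv.advisory_id}")
    return findings


# Hypothetical image inventory and advisory feed, for illustration only.
installed = {"libexample": "1.2.3", "toolkit": "4.0.1", "zlib-lite": "2.1"}
advisories = [
    Advisory("libexample", (1, 2, 5), "HYPO-2024-0001"),
    Advisory("toolkit", (3, 9, 0), "HYPO-2024-0002"),
]
print(scan(installed, advisories))
```

A finding tells you which image to rebuild on a patched base.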

Keep the cluster stable

Kubernetes represents a very tall technology stack. Your applications run on kernels inside VMs (or, in some cases, on bare metal), sharing the hardware with Kubernetes' own services. With all these layers, many things can go wrong in both the physical and the virtual realm, so it is very important to de-risk your development cycle as much as possible. The ecosystem around Kubernetes has developed a set of best practices to keep things as consistent as possible.

12. Follow the CI/CD method

what: Continuous integration/continuous deployment (CI/CD) is a process philosophy. It holds that every change to the codebase should add incremental value and be ready for production. So whenever something in the codebase changes, you probably want to launch a new version of your service, if only to run tests against it.

why: Following CI/CD helps your engineering team keep quality in mind in their daily work. If something breaks, fixing it becomes the whole team's top priority, because every subsequent change that builds on the broken commit will also be broken.

how: Thanks to the rise of cloud-deployed software, CI/CD has become increasingly popular, so you can choose from many great hosted and self-hosted products. If your team is relatively small, I recommend the hosted route, because the time and effort you save is well worth the extra cost.

13. Use canary releases for updates

what: Canarying is a way to bring service changes from a commit in your codebase to your users. You start a new instance running the latest version and migrate users to it gradually, gaining confidence in the update over time rather than switching everyone over at once.

why: No matter how extensive your unit and integration tests are, they can never fully simulate running in production; there is always a chance that something will not work as expected. Canarying limits your users' exposure to these issues.

how: The extensibility of Kubernetes offers many ways to roll out service updates gradually. The most straightforward approach is to create a separate deployment that shares a load balancer with the currently running instances. You then scale up the new deployment while scaling down the old one until all running instances are on the new version.
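The scale-up/scale-down approach can be planned as a simple schedule. This minimal Python sketch computes (stable, canary) replica counts per stage and prints the matching `kubectl scale` commands. It assumes two hypothetical Deployments, `api-stable` and `api-canary`, whose pods both carry the label the shared Service selects on. With that setup, traffic is spread across pods from both Deployments, so the canary's share is roughly its fraction of the replicas.

```python
def canary_schedule(total: int, step: int) -> list[tuple[int, int]]:
    """(stable, canary) replica counts per stage, moving `step` replicas at a time."""
    if total < 1 or step < 1:
        raise ValueError("total and step must be positive")
    stages = []
    canary = 0
    while canary < total:
        canary = min(total, canary + step)
        stages.append((total - canary, canary))
    return stages


# Hypothetical Deployments "api-stable" and "api-canary" behind one Service.
for stable, canary in canary_schedule(total=10, step=3):
    # Scale the canary up first so total capacity never drops during a stage.
    print(f"kubectl scale deployment/api-canary --replicas={canary}")
    print(f"kubectl scale deployment/api-stable --replicas={stable}")
    # In practice, pause here and check error rates before the next stage.
```

For 10 replicas moved 3 at a time, the stages are (7, 3), (4, 6), (1, 9), and (0, 10).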

14. Implement monitoring and integrate it with a SIEM

what: Monitoring means tracking and recording what your service is doing.

why: Let's face it: no matter how good your developers are, and no matter how much ingenuity your security experts apply, things will go wrong. When they do, you will want to know what happened so you can make sure the same mistake does not happen twice.

how: There are two steps to monitoring a service successfully: the code needs to be instrumented, and the output of that instrumentation needs to be fed somewhere for storage, retrieval, and analysis. How you instrument depends largely on your toolchain, but a quick web search should point you in the right direction. As for storing the output, unless you have specialized expertise or needs, I recommend a hosted SIEM (such as Splunk or Sumo Logic); in my experience, doing it yourself always takes 10 times the expected time and effort, as with anything storage-related.
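As one small example of the instrumentation step, this sketch assumes a Python service whose stdout is collected by the cluster's log pipeline and forwarded to a SIEM. It emits one JSON object per line, a shape most log shippers can parse without custom rules. It uses only the standard `logging` module. The logger name and the extra fields are hypothetical.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed as logger.info(..., extra={"fields": {...}})
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


def make_logger(name: str, stream=sys.stdout) -> logging.Logger:
    """A logger that writes JSON lines to `stream` (stdout by default)."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


if __name__ == "__main__":
    log = make_logger("payments-api")
    log.warning("login failed", extra={"fields": {"user_id": "u-123", "source_ip": "203.0.113.7"}})
```

Keeping fields such as `user_id` as separate JSON keys, rather than interpolating them into the message, is what makes them searchable in the SIEM.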

In-depth advice

Once your cluster reaches a certain size, you will find that enforcing all of these best practices manually is no longer feasible, which puts the security and stability of your system at risk. Once you cross that threshold, consider the following topics:

15. Use a service mesh to manage communication between services

what: A service mesh is a way to manage communication between your services, effectively creating a virtual network that your services use to talk to each other.

why: A service mesh can relieve some of the more tedious aspects of managing a cluster, such as ensuring that communications are properly encrypted.

how: Depending on which service mesh you choose, the complexity of getting it up and running can vary widely. Istio seems to be gaining momentum as the most commonly used service mesh, and your configuration process will depend heavily on your workloads.

One caveat: if you will need a service mesh, adopt it sooner rather than later; gradually changing how services communicate within a cluster can be very painful.

16. Use admission controllers to unlock advanced features in Kubernetes

what: Admission controllers are a great general-purpose tool for managing everything that happens in your cluster. They let you set up webhooks that Kubernetes consults before an object, such as a deployment, is admitted to the cluster. They come in two forms: mutating and validating. A mutating admission controller alters a deployment's configuration before it launches. A validating admission controller consults your webhook to decide whether a given deployment is allowed to launch.

why: Their use cases are broad and numerous; they provide a great way to iteratively improve your cluster's stability with home-grown logic and restrictions.

how: Check out the guide on how to start using Admission Controllers.
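To give a feel for the validating flavor, here is a minimal Python sketch of the decision logic a webhook might run on an `admission.k8s.io/v1` AdmissionReview. It rejects any object that lacks a required label. The `team` label rule is a hypothetical house policy. A real webhook also needs an HTTPS server and a ValidatingWebhookConfiguration registering it, which are omitted here. The response must echo the request's `uid`.

```python
import json

REQUIRED_LABEL = "team"  # hypothetical house rule: every object must name its owning team


def validate(admission_review: dict) -> dict:
    """Build the AdmissionReview response for one validating-webhook request."""
    request = admission_review["request"]
    labels = request.get("object", {}).get("metadata", {}).get("labels") or {}
    allowed = REQUIRED_LABEL in labels
    response = {"uid": request["uid"], "allowed": allowed}  # uid must match the request
    if not allowed:
        response["status"] = {"code": 403, "message": f'missing required label "{REQUIRED_LABEL}"'}
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview", "response": response}


# Hypothetical incoming review for a Deployment without a "team" label.
sample = {
    "apiVersion": "admission.k8s.io/v1",
    "kind": "AdmissionReview",
    "request": {
        "uid": "705ab4f5-6393-11e8-b7cc-42010a800002",
        "object": {"metadata": {"name": "api", "labels": {"app": "api"}}},
    },
}
print(json.dumps(validate(sample), indent=2))
```

Because the logic is a pure function of the request, it can be unit-tested before the webhook is ever registered with the cluster.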

Source: Open Source Linux
