The introduction of the Mesh mode is a key path to realize the application of cloud native, Ant Group has achieved large-scale implementation internally. With the sinking of more middleware capabilities such as Message, DB, Cache Mesh, etc., the application runtime evolved from Mesh will be the future form of middleware technology. The application runtime aims to help developers quickly build cloud-native applications and further decouple the application and infrastructure. The core of the application runtime is the API standard, and the community is expected to build it together.

Ant Group Mesh Introduction

Ant is a technology and innovation-driven company. From a payment application in Taobao to the current service

For a large company with 1.2 billion users worldwide, the evolution of Ant's technical architecture will probably be divided into the following stages:

Before 2006, the earliest Alipay was a centralized monolithic application with modular development for different businesses.

In 2007, with the promotion of payment in more scenarios, we started to split applications and data, and made some transformations to SOA.

After 2010, fast payment, mobile payment, support for double eleven, and Yu'e Bao and other phenomenal products were launched. The number of users has reached the level of 100 million, and the number of applications of Ant has also increased by orders of magnitude. Ant has developed many complete sets of micro Service middleware to support Ant's business;

In 2014, the emergence of business forms such as borrowing flowers, offline payments, more scenarios, and higher requirements for the availability and stability of ants. Ants supported the LDC unitization of microservice middleware. Support business activities in different places, and elastic expansion and contraction on the hybrid cloud in order to support the ultra-large traffic on Double Eleven.

In 2018, Ant’s business is not only digital finance, but also the emergence of some new strategies such as digital life and internationalization, prompting us to have a more efficient technical architecture to make the business run faster and more stable, so Ant combined with the industry to compare The popular cloud native concept has been implemented internally in the direction of Service Mesh, Serverless, and Trusted Native.

It can be seen that Ant’s technical architecture is also evolving with the company’s business innovation. The previous process from centralized to SOA to microservices, I believe students who have engaged in microservices will have a deep understanding, and from microservices to cloud-native The practice of ant has been explored by itself in recent years.

Why introduce Service Mesh

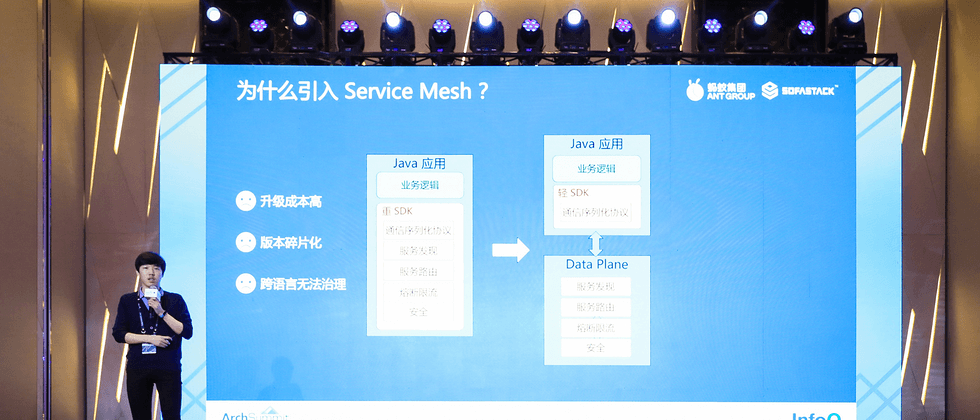

Since Ant has a complete set of microservice governance middleware, why does it need to introduce Service Mesh?

Take the service framework SOFARPC developed by Ant as an example. It is a powerful SDK that includes a series of capabilities such as service discovery, routing, and current limiting. In a basic SOFA (Java) application, the business code is integrated with the SOFARPC SDK, and the two run in the same process. After Ant's large-scale implementation of microservices, we faced the following problems:

upgrade cost is high : SDK needs to be introduced in business code, and each upgrade needs to be released with modified code. Due to the large scale of the application, when some major technical changes or security issues are repaired. Thousands of applications need to be upgraded together each time, which is time consuming and laborious.

version is fragmented : Due to the high upgrade cost and serious fragmentation of the SDK version, we need to be compatible with historical logic when writing code, and the overall technological evolution is difficult.

Cross-language cannot be managed : Most of Ant’s mid- and back-end online applications use Java as the technology stack, but there are many cross-language applications in the foreground, AI, big data and other fields, such as C++/Python/Golang, etc., because there is no corresponding language SDK, their service governance capabilities are actually missing.

We noticed that some concepts of Service Mesh in cloud native began to appear, so we started to explore in this direction. In the concept of Service Mesh, there are two concepts, one is the Control Plane control plane, and the other is the Data Plane data plane. The control plane is not expanded here for the time being. The core idea of the data plane is decoupling, abstracting some complicated business logic (such as service discovery in RPC calls, service routing, fusing current limit, security) into an independent process. . As long as the communication protocols of services and independent processes remain unchanged, the evolution of these capabilities can be independently upgraded following this independent process, and the entire Mesh can achieve unified evolution. For our cross-language applications, as long as the traffic passes through our Data Plane, you can enjoy the various service governance-related capabilities just mentioned. The application is transparent to the underlying infrastructure capabilities and is truly cloud-native.

Ant Mesh landing process

So starting from the end of 2017, Ants began to explore the technical direction of Service Mesh, and proposed a vision of unified infrastructure and no sense of business upgrade. The main milestones are:

At the end of 2017, the pre-research on Service Mesh technology will be started, and it will be determined as the future development direction;

At the beginning of 2018, the Sidecar MOSN was self-developed and open sourced with Golang, mainly supporting the small-scale pilot of RPC on Double Eleven;

In 618 in 2019, the new forms of Message Mesh and DB Mesh will be added to cover several core links and support the 618 promotion;

On Double Eleven in 2019, it covered hundreds of applications on the core links of all major promotions, supporting the Double Eleven major promotions at the time;

On Double Eleven in 2020, more than 80% of the online applications on the entire site will be meshed, and the entire Mesh system also has the ability to complete the entire site upgrade from capability development to completion within two months.

Ant Mesh landing architecture

At present, the scale of Meshization in the landing of ants is about thousands of applications and hundreds of thousands of containers. The landing of this scale is one of the best in the industry. There is no way to learn from the predecessors. Therefore, in the process of landing, the ants also Build a complete R&D operation and maintenance system to support meshing of ants.

The Ant Mesh architecture is roughly as shown in the figure. The bottom is our control plane, in which the service end of the service management center, PaaS, monitoring center and other platforms are deployed, which are some of the existing products. There is also our operation and maintenance system, including R&D platform and PaaS platform. In the middle is our protagonist data plane MOSN, which manages four types of traffic: RPC, messaging, MVC, and tasks, as well as basic capabilities for health checks, monitoring, configuration, security, and technical risks. At the same time, MOSN also blocks business. Some interactions with the basic platform. DBMesh is an independent product in Ant, which is not shown in the picture. Then the top layer is some of our applications, which currently support access to multiple languages such as Java and Nodejs.

For applications, although Mesh can achieve infrastructure decoupling, access still requires an additional upgrade cost. Therefore, in order to promote application access, Ant has completed the entire R&D operation and maintenance process, including in the existing framework Do the simplest access in the above, and control risks and progress through batch advancement, and let new applications access Mesh by default and other things.

At the same time, with the increase in sinking capabilities, each capability has also faced some problems in R&D collaboration before, and even issues that affect each other's performance and stability. Therefore, we have also done a modular isolation for the research and development efficiency of Mesh itself. , New capabilities, dynamic plug-in, automatic return and other improvements. At present, a sinking capability can be completed within 2 months from development to site-wide promotion.

Exploration on the runtime of cloud native applications

New problems and thoughts after the large-scale landing

After the large-scale landing of Ant Mesh, we are currently encountering some new problems:

The maintenance cost of cross-language SDK is high: Take RPC as an example, most of the logic has been sunk into MOSN, but there is still a part of the logic of the communication codec protocol in a lightweight SDK of Java, this SDK still has a certain degree In terms of maintenance costs, there are as many lightweight SDKs as there are languages. It is impossible for a team to be proficient in the development of all languages, so the code quality of this lightweight SDK is a problem.

New scenarios where the business is compatible with different environments: part of Ant's applications are both deployed inside Ant and exported to financial institutions. When they are deployed to ants, they are docked with the ant's control surface. When they are docked with the bank, they are docked with the bank's existing control surface. The current practice of most applications is to encapsulate a layer in the code by themselves, and temporarily support docking when encountering unsupported components.

From Service Mesh to Multi-Mesh: The earliest scenario of ants is Service Mesh. MOSN intercepts traffic through a network connection proxy, and other middleware interacts with the server through the original SDK. And now MOSN is not just Service Mesh, but Multi-Mesh, because in addition to RPC, we also support the Mesh implementation of more middleware, including messaging, configuration, caching, and so on. It can be seen that for every sinking middleware, there is almost a corresponding lightweight SDK on the application side. This combined with the first problem just now, it is found that there are a lot of lightweight SDKs that need to be maintained. In order to keep the functions from affecting each other, they open different ports for each function and call MOSN through different protocols. For example, the RPC protocol for RPC, the MQ protocol for messages, and the Redis protocol for caching. Then the current MOSN is actually not only for traffic. For example, the configuration is to expose a bit of API for business code to use.

In order to solve the problems and scenarios just now, we are thinking about the following points:

1. Can SDKs of different middleware and different languages be unified in style?

2. Can the interaction protocol of each sinking capability be unified?

3. Is our middleware sinking component-oriented or capability-oriented?

4. Can the underlying implementation be replaced?

Ant cloud native application runtime architecture

Beginning in March last year, after multiple rounds of internal discussions and research on some new concepts in the industry, we proposed a concept of "cloud native application runtime" (hereinafter referred to as runtime). As the name implies, we hope that this runtime can contain all the distributed capabilities that applications care about, help developers quickly build cloud-native applications, and help further decouple applications and infrastructure!

The core points in the runtime design of cloud native applications are as follows:

first . With the experience of MOSN's large-scale landing and supporting operation and maintenance system, we decided to develop our cloud native application runtime based on the MOSN kernel.

second capability-oriented, not component-oriented, and uniformly define the runtime API capabilities.

third , the interaction between the service code and Runtime API adopts a unified gRPC protocol, in this case, the service side can directly generate a client through the proto file in reverse, and call it directly.

fourth , the corresponding component implementation behind the capability can be replaced, for example, the provider of the registration service can be SOFARegistry, or Nacos or Zookeeper.

Run-time capability abstraction

In order to abstract some of the capabilities most needed by cloud-native applications, we first set a few principles:

1. Focus on the APIs and scenarios required by distributed applications instead of components;

2. API conforms to intuition, is used out of the box, convention is better than configuration;

3. The API does not bind to the implementation, and realizes the differentiated use of extended fields.

With the principles in place, we abstracted out three sets of APIs, namely the application calling mosn.proto at runtime, the appcallback.proto calling the application at runtime, and the actuator.proto related to runtime operation and maintenance. For example, RPC call, message sending, read cache, read configuration are all applied to the runtime, while RPC receiving requests, receiving messages, and receiving task scheduling belong to the runtime application. Other monitoring inspections, component management, flow control, etc. It is related to runtime operation and maintenance.

Examples of these three protos can be seen in the following figure:

runtime component control

On the other hand, in order to realize the replacement of the runtime implementation, we also proposed two concepts at MOSN. We call each distributed capability a Service, and then there are different components to implement this Service, and a Service can have multiple The component implements it, and one component can implement multiple Services. For example, in the example in the figure, there is "MQ-pub", the message service has two components, SOFAMQ and Kafka, and Kafka Component implements two services, message sending and health check.

When the business actually initiates a request through the client generated by gRPC, the data will be sent to the Runtime through the gRPC protocol and distributed to a specific implementation later. In this case, the application only needs to use the same set of APIs, and connect to different implementations through the parameters in the request or runtime configuration.

runtime and Mesh

In summary, a simple comparison between the runtime of the cloud native application and the Mesh just now is as follows:

Cloud native application runtime landing scene

The research and development started in the middle of last year, and the following scenes are currently implemented in Ant during operation.

heterogeneous technology stack access

In Ant, in addition to the requirements of RPC service management, messaging, etc., applications in different languages also hope to use Ant's unified middleware and other infrastructure capabilities. Java and Nodejs have corresponding SDKs, but other languages do not. The corresponding SDK. With the application runtime, these heterogeneous languages can directly call the runtime through the gRPC Client and connect to the ant infrastructure.

manufacturer

As mentioned earlier, Ant's blockchain, risk control, intelligent customer service, financial middle office, and other services are deployed at the main site, but also deployed in Alibaba Cloud or Proprietary Cloud. With the runtime, the application can create a mirror image of a set of code and runtime together, and determine which low-level implementation to call through configuration, not tied to the specific implementation. For example, products such as SOFARegistry and SOFAMQ are docked inside Ant, while products such as Nacos and RocketMQ are docked on the cloud, and Zookeeper, Kafka, etc. are docked on the proprietary cloud. We are in the process of landing this scene. Of course, this can also be used for legacy system management, such as upgrading from SOFAMQ 1.0 to SOFAMQ 2.0, and there is no need to upgrade the applications that are connected to the runtime.

FaaS cold start preheating pool

FaaS cold-start preheating pool is also a scenario we are exploring recently. Everyone knows that when a function in FaaS is cold-started, it needs to start from creating a Pod to downloading the function and then starting, this process will be relatively long. With the runtime, we can create the Pod in advance and start the runtime. When the application starts, the application logic is already very simple. After testing, it is found that it can be shortened from 5s by 80% to 1s. We will continue to explore this direction.

Planning and outlook

API co-built

The most important part of the runtime is the definition of API. In order to implement the internal, we already have a relatively complete API, but we also see that many products in the industry have similar requirements, such as dapr, envoy, and so on. So the next thing we will do is to unite with various communities to launch a set of cloud native application APIs that everyone recognizes.

Continue to open source

In addition, we will gradually develop internal runtime practices in the near future. It is expected that version 0.1 will be released in May and June, and we will maintain a monthly release of a small version, and strive to release version 1.0 before the end of the year.

to sum up

finally make a summary:

1. The introduction of the Service Mesh mode is the key path to realize the original Yunsheng application;

2. Any middleware can also be Meshed, but the problem of R&D efficiency still partially exists;

3. The large-scale implementation of Mesh is an engineering matter and requires a complete supporting system;

4. Cloud native application runtime will be the future form of basic technologies such as middleware, further decoupling applications and distributed capabilities;

5. The core of cloud-native application runtime is API, and it is expected that the community will build a standard.

Further reading

- takes you into cloud native technology: exploration and practice of cloud native open operation and maintenance system

- Accumulate a Thousand Miles: A Summary of the Landing of the QUIC Agreement in the Ant Group

- Rust's Emerging Fields: Confidential Computing

- Protocol Extension Base On Wasm-Protocol Extension

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。