In April 2020, after seven months of work, the Zhanmeiye front-end team completed the design and migration of the Meiye PC architecture from a single SPA to a micro-front-end architecture. The slides were ready in June last year, and I am now turning them into an article to share with you.

Contents

Part 01 Micro Front Ends, Informally

Why we did this, and how it unfolded

What is a micro front end?

Giving a definition outright is not hard, but readers who have never encountered micro front ends might not understand it. So let's introduce the background first, which makes the definition easier to follow.

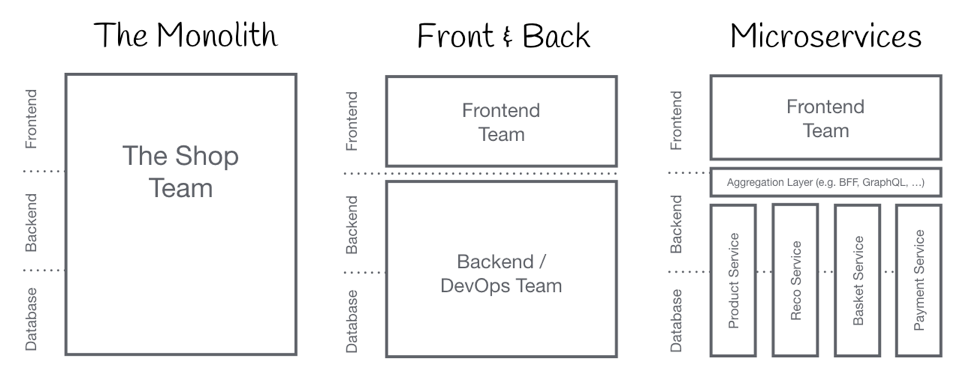

This figure shows three phases in how the division of labor in software development has evolved since front-end development became a separate role:

- Monolithic application: in the early days of software development, and in some small websites, front-end, back-end and database engineers sit in the same team, and all of their code lives in the same physical place. As the project grows, the codebase eventually turns into a boulder.

- Front-end and back-end separation: the front-end and back-end teams split up, and so does the software architecture. The two sides collaborate through agreed interfaces, and each side's production materials become physically isolated.

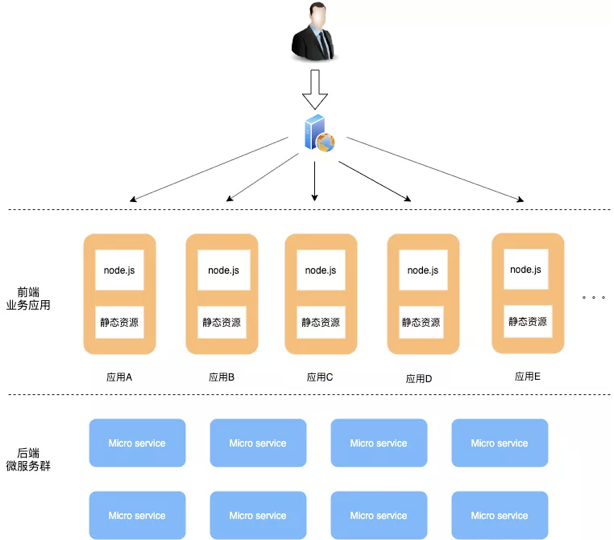

- Microservices: the back-end team splits the system vertically by business domain. The complexity of each back-end system is divided and conquered, and back-end services interact through remote calls. At this stage, the front end needs an extra API gateway or BFF (Backend for Frontend) layer to call back-end services.

The R&D teams of many Internet companies now work in roughly this way, splitting the whole product into multiple amoeba-style business groups.

Under this R&D process and organizational model, microservices have made the back end splittable and adjustable. If the front end remains a single application, its architecture alone has already become a bottleneck for collaboration, never mind anything else.

In addition, with the advent of the Web 3.0 era, front-end applications keep getting heavier. As the business iterates and code accumulates, they grow into behemoths under the pressure of continuous production. People can only keep track of limited complexity, roughly 5 to 8 dimensions at a time. A monolith aggregates too much material and too many sources of complexity, which easily leads to more problems. In short, the traditional SPA can no longer withstand the strain that rapid business growth puts on the underlying technology.

Our product and its front-end project ran into exactly this problem. How could we solve it?

In fact, back-end development had already provided a model to learn from. The micro-front-end architecture, modeled on microservices and microkernels, emerged at just the right time.

While looking for a solution, we came across the micro-front-end architecture, almost as if by the law of attraction, and verified that it did solve the problem for us.

Here is our definition of a micro front end:

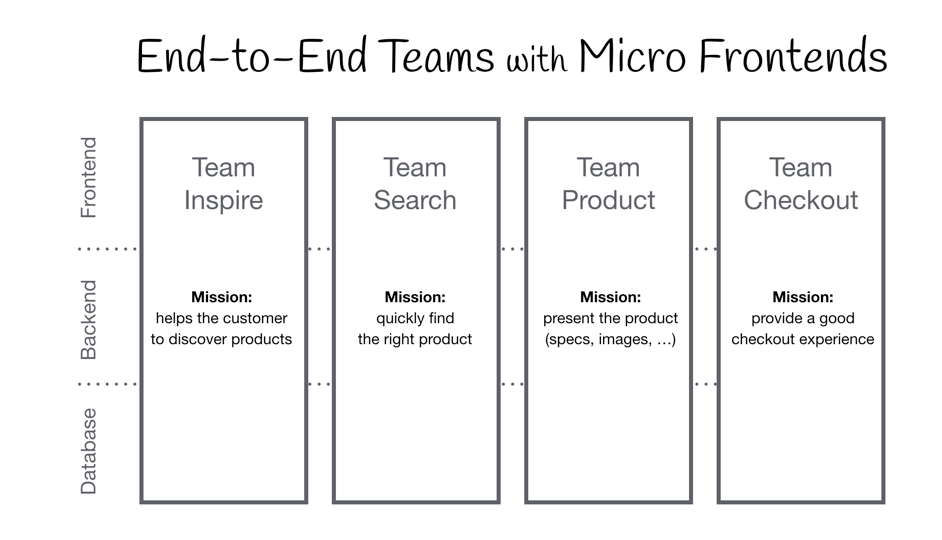

A micro front end is an architecture similar to a microkernel that applies the concept of microservices to the browser. A web application is transformed from a single monolithic application into an aggregate of multiple small front-end applications, and each of these can also be developed, deployed and run independently.

Background

- As a single application, Meiye PC has gone through four years of iterative development. Its code and dependencies are huge: by our count, it contains more than 600,000 lines of pure business code.

- In terms of engineering, builds and deployments are extremely slow, and local debugging is a poor, inefficient experience for developers. A simple build plus release takes 7 + 8 = 15 minutes or more.

- In terms of code, the business code is heavily coupled and the impact of changes is hard to contain, which has repeatedly caused "butterfly effect" bugs and outages in production.

- In terms of technology, upgrading general-purpose dependencies carries a huge cost in changes and regression testing. This makes it almost impossible to push forward everyday requirements and technical upgrades that involve dependency packages such as Zent components and middle-platform components.

- In terms of testing, one application has to absorb releases from many people and many projects, so releases are numerous and very frequent. Every integration test carries the risk of merge conflicts and of exposing new issues.

- In terms of organization, a single application does not match how the business teams are organized. Boundaries and responsibilities are unclear, and work on one module easily interferes with another.

- In terms of architecture, the front end cannot form domain-aligned applications that correspond to the back end. This hinders sinking business logic into shared layers, and it cannot support service-oriented front-end capabilities or the evolution of the technology stack.

In short, the bloated monolith had become unbearable for developers and severely limited how quickly we could support the business. It also left us with no confidence that we could handle the business's continued growth. Re-architecting Meiye PC was both urgent and valuable.

Goals

- At the business architecture level, based on Meiye PC's business form, project architecture and direction of development, treat a front-end application built by many collaborating teams as a combination of features produced by several independent teams.

- At the technical architecture level, decouple the large front-end application and split it into a base application, a micro-front-end kernel, a registry, and several independently developed and deployed sub-applications. Together these form a distributed system under centralized management.

- In terms of software engineering, migrate gradually and keep both the old and the new applications running normally.

Value delivered

Business value

- Turn front-end features into atomic product units: when a new business needs an existing capability, it can quickly integrate the corresponding sub-application.

- Split by business domain, so that multi-person collaboration stays clearer and cheaper when the organization is restructured, and the project adapts to those restructurings.

- Slow the growth of entropy in the system and pave the way for business development.

Engineering value

- Business sub-applications are now developed and deployed independently, and the wait for build and deployment has dropped from 15 minutes to 1.5 minutes.

- A progressive architecture: sub-applications do not depend on each other and can be upgraded individually. Their technology stacks are allowed to differ, which leaves more room for technical iteration.

- Front-end capabilities can be exposed as services

- Flexible architecture: new businesses can grow freely without adding to the cognitive load of developers who work on existing ones

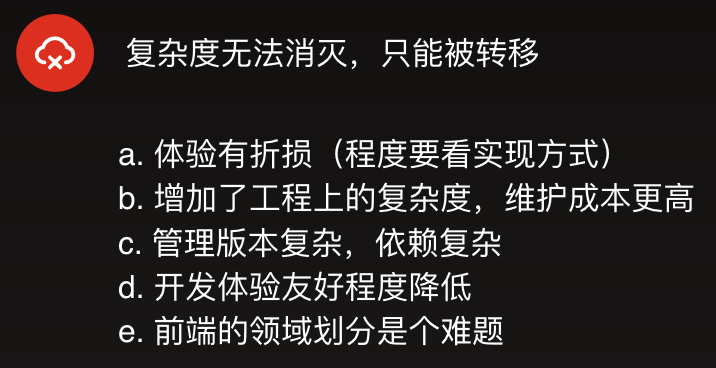

Drawbacks

Designing an architecture always means making trade-offs for the system as a whole. Besides its value and advantages, an architecture also brings costs that need to be considered.

Part 02 Architecture and Engineering

The overall picture of the result

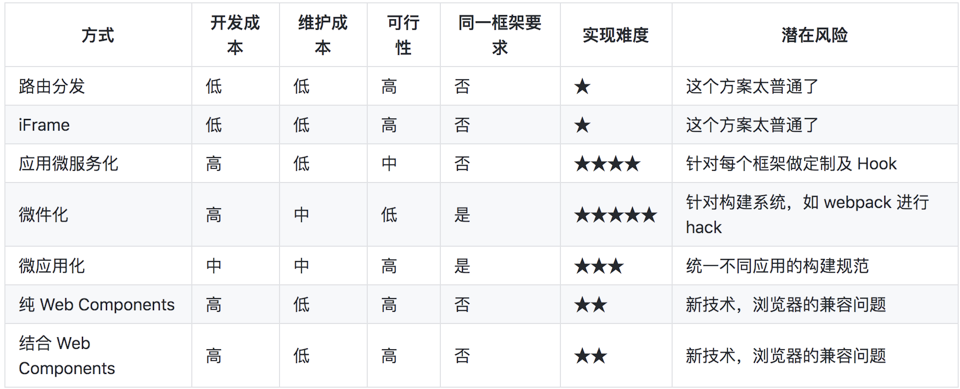

What micro-front-end approaches are there?

- Use HTTP server to reverse proxy to multiple applications

- Design communication and loading mechanisms across different frameworks

- Build a single application by combining multiple independent applications and components

- Use iFrame and custom messaging mechanism

- Use pure Web Components to build applications

- Combine Web Components with other techniques to build applications

Each approach has its own advantages and disadvantages. A sister team uses the most primitive option, gateway forwarding similar to an Nginx reverse-proxy configuration, which composes the system at the access layer. However, every addition or adjustment has to be configured at the operations level.

iframe nesting is the simplest and fastest approach, but the drawbacks of iframes are unavoidable.

The Web Components approach requires a large migration cost.

The combined-application routing-and-dispatch approach has a moderate migration cost, meets most needs, and does not degrade the experience of the front-end applications. At the time, it was also a relatively advanced approach.

Considerations when selecting an architecture design

- How to reduce the complexity of the system?

- How to ensure the maintainability of the system?

- How to ensure the scalability of the system?

- How to ensure the availability of the system?

- How to guarantee the performance of the system?

After a comprehensive evaluation, we chose the combined-application routing-and-dispatch approach. We still had to design an overall blueprint for the architecture and its engineering implementation.

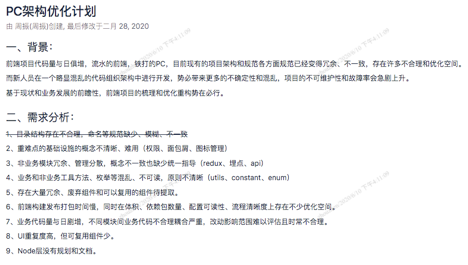

Requirements analysis

- Independent operation/deployment of sub-applications

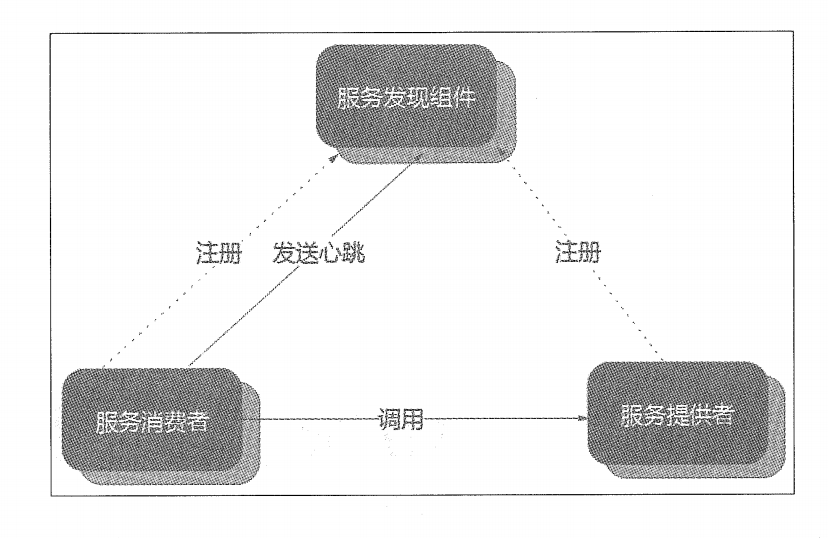

- Central control loading (service discovery/service registration)

- Reuse of common parts across sub-applications

- Standardized onboarding of sub-applications

- Base application routing and container management

- Supporting infrastructure

Design Principles

- Support gradual migration and a smooth transition

- Apply a unified splitting principle, dividing by domain wherever possible to decouple

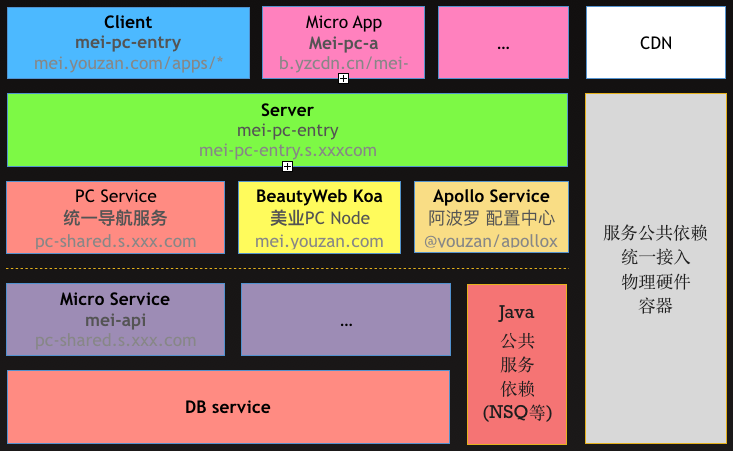

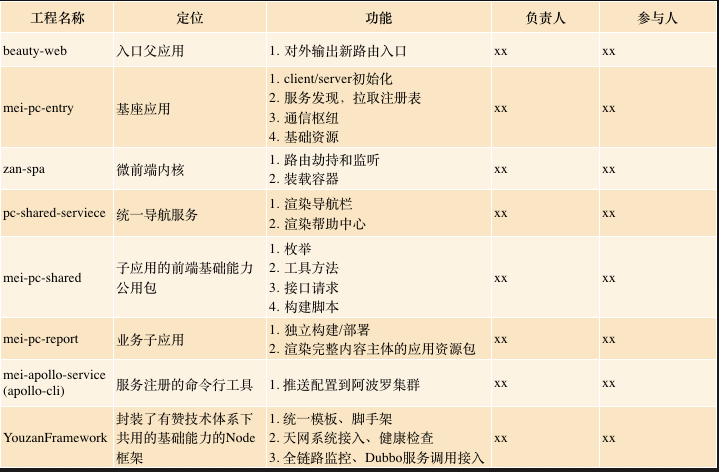

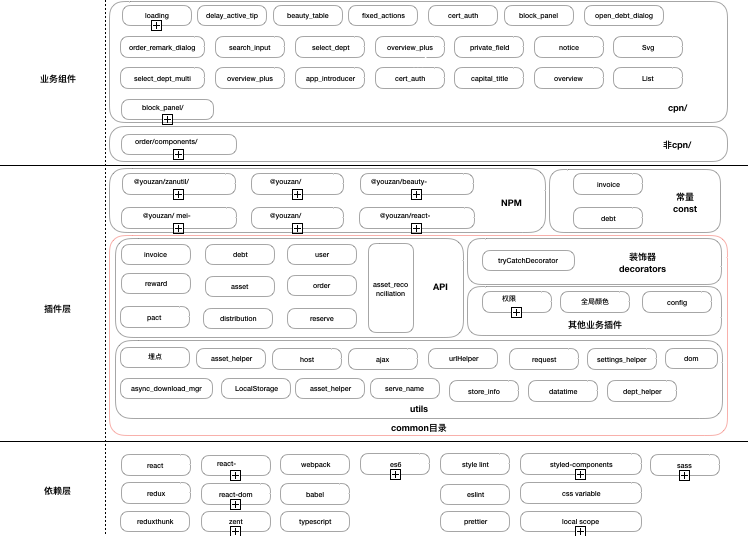

Application architecture diagram

System split

The split needs three points of explanation:

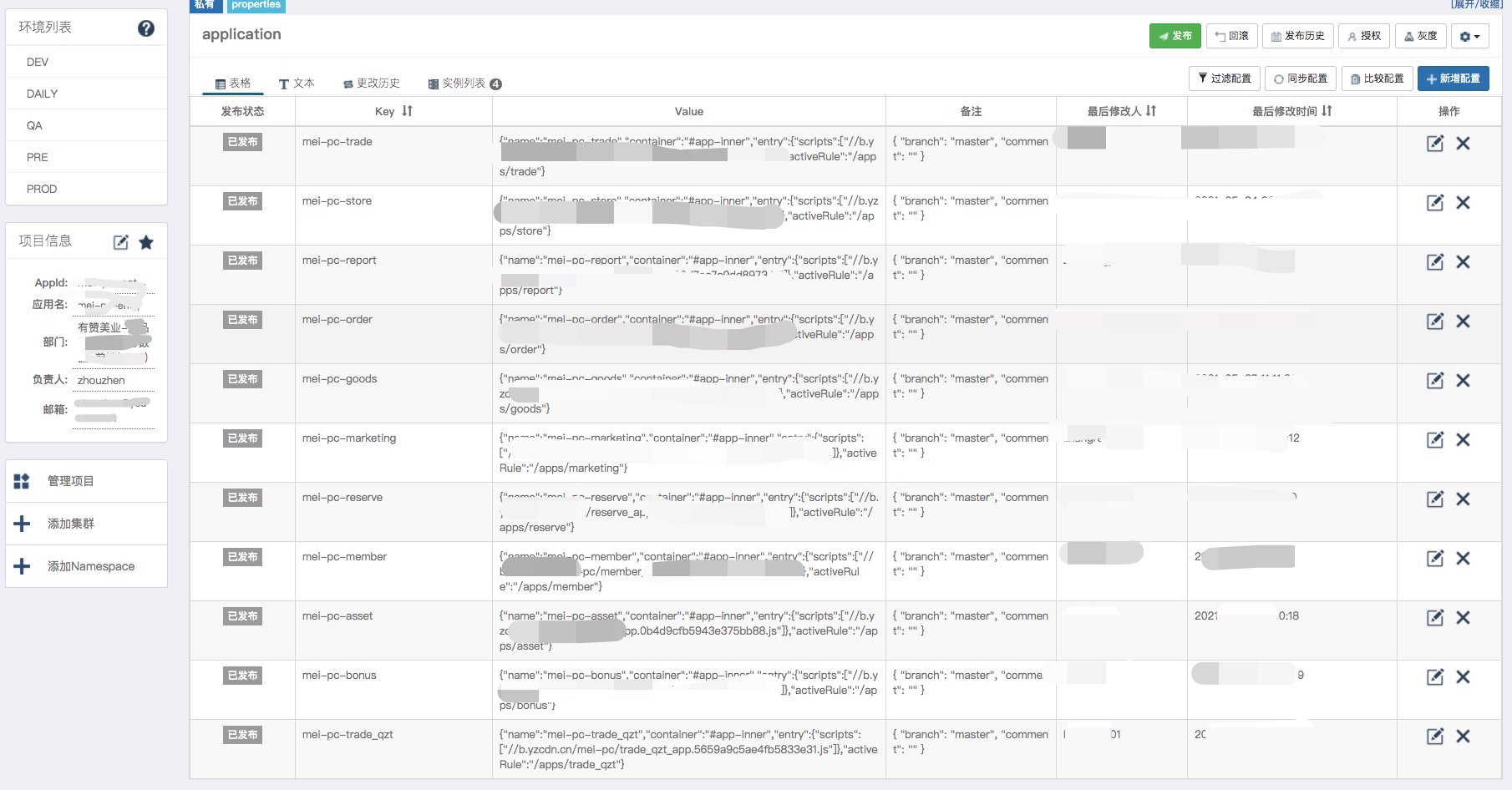

- Independent deployment (service registration): uploading the application's resource package (the files generated by the build) to the Apollo configuration platform is the finishing touch.

- Distributing sub-applications as npm plugin packages would require integrating them through the parent application's build. This service-style approach does not: sub-applications do not depend on each other and are released independently, which is more flexible and reliable.

- Apollo also serves as the registry, so sub-applications do not need a web server of their own, which simplifies the architecture.

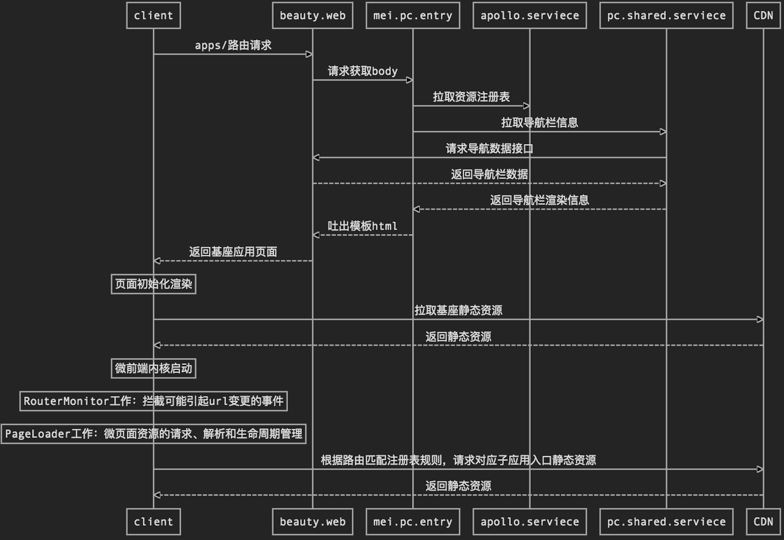

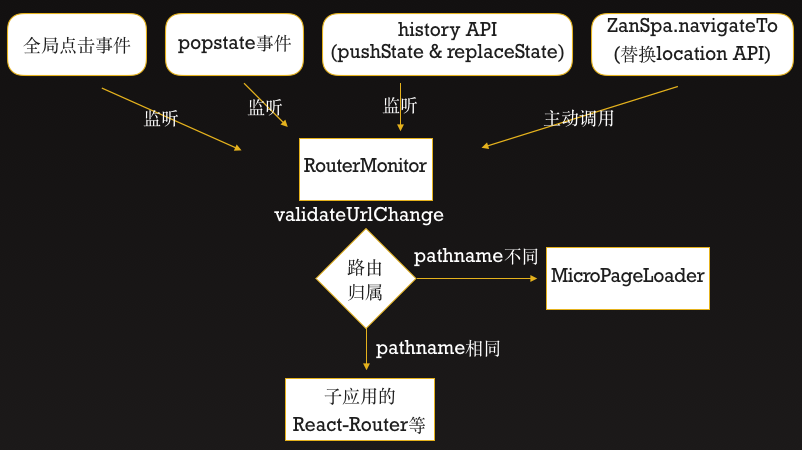

Sequence diagram

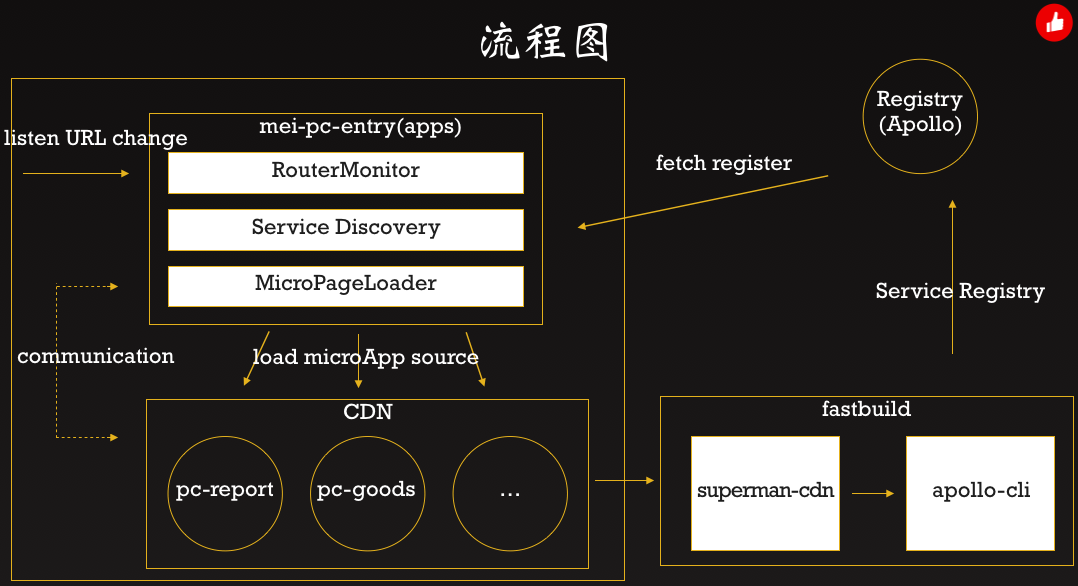

Front-end flow chart

Part 03 Key Technologies

Technical details worth mentioning from the rollout

List of key technologies

We organize this part into four chapters: architecture core, registry, sub-applications, and code reuse.

They cover the following technical points:

- Apollo

- Apollo Cli

- Version Manage

- Sandbox

- RouterMonitor

- MicroPageLoader

- Shared Menu

- Shared Common

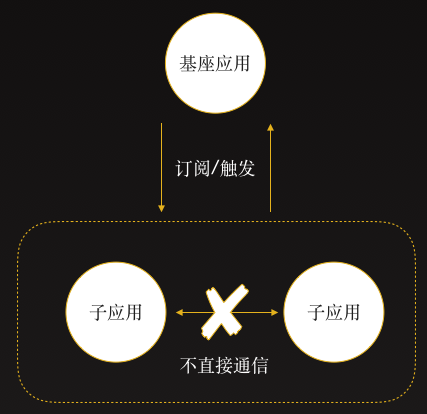

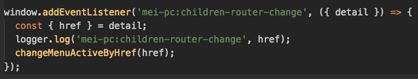

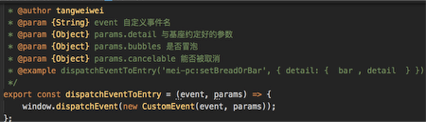

[Architecture Core] Message Communication

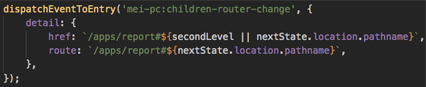

[Architecture Core] Route Dispatching

When the browser path changes, the base's router is the first to receive the change. All route changes are controlled by RouterMonitor in the base router, which hijacks every operation that changes the URL so that it can capture the moment of each route switch. If the part of the URL before the # (that is, apps/xxx/) changes, the base only intercepts the change, preventing the browser from issuing a new page request, and does not forward it. If the part before the # is unchanged, the change is forwarded to the sub-application, and the sub-application's own router takes over.
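Below is a minimal TypeScript sketch of the dispatch rule described above. It rests on several assumptions: sub-applications are mounted under a hypothetical `/apps/<name>/` path and use hash routing, and the base wraps a history-like object's `pushState`/`replaceState` to observe URL changes. `RouterMonitor`, `decide` and the URL layout are illustrative names, not the kernel's actual API. In a browser the wrapped object would be `window.history`; here a stub stands in for it so the sketch runs anywhere.

```typescript
// Hypothetical URL layout: /apps/<appName>/#<route inside the sub-application>
type Decision =
  | { handler: "base"; app: string | null } // path before "#" changed: the base switches sub-apps
  | { handler: "subApp"; app: string | null; hash: string }; // only the hash changed: forward it

interface HistoryLike {
  pushState(data: unknown, unused: string, url?: string | null): void;
  replaceState(data: unknown, unused: string, url?: string | null): void;
}

// Split a URL into the part before "#" and the hash (including "#").
function splitUrl(url: string): { path: string; hash: string } {
  const i = url.indexOf("#");
  return i === -1 ? { path: url, hash: "" } : { path: url.slice(0, i), hash: url.slice(i) };
}

// Extract <appName> from /apps/<appName>/..., or null for non-app paths.
function appNameOf(path: string): string | null {
  const m = /^\/apps\/([^/]+)/.exec(path);
  return m?.[1] ?? null;
}

// Decide who handles the transition from prevUrl to nextUrl.
function decide(prevUrl: string, nextUrl: string): Decision {
  const prev = splitUrl(prevUrl);
  const next = splitUrl(nextUrl);
  const app = appNameOf(next.path);
  return prev.path !== next.path
    ? { handler: "base", app }
    : { handler: "subApp", app, hash: next.hash };
}

class RouterMonitor {
  private current: string;
  private readonly onChange: (d: Decision) => void;

  constructor(history: HistoryLike, initialUrl: string, onChange: (d: Decision) => void) {
    this.current = initialUrl;
    this.onChange = onChange;
    // Wrap both methods so every programmatic URL change passes through decide().
    for (const method of ["pushState", "replaceState"] as const) {
      const original = history[method].bind(history);
      history[method] = (data, unused, url) => {
        original(data, unused, url);
        if (url != null) this.notify(url);
      };
    }
  }

  private notify(nextUrl: string): void {
    const decision = decide(this.current, nextUrl);
    this.current = nextUrl;
    this.onChange(decision);
  }
}
```

A real monitor would also listen for `popstate` and `hashchange` (back/forward navigation). It would also intercept link clicks with `preventDefault()`, which is how the base stops the browser from issuing a new page request. Those parts are omitted here.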

[Architecture Core] Application Isolation

Isolation is divided into JavaScript execution-environment isolation and CSS style isolation.

JavaScript execution-environment isolation: when a sub-application's JavaScript is loaded and run, what it essentially does is modify the global window object and register global events. For example, after jQuery runs it mounts a window.$ object, and other libraries such as React and Vue are no exception. We want to eliminate these conflicts and side effects as far as possible while each sub-application is loaded and unloaded. The most common method is a sandbox (SandBox).

The core of a sandbox is to keep access to and modification of external objects within a controllable range while a piece of JavaScript runs. Whatever the code does internally, it does not affect external objects. On the Node.js side the vm module can generally be used. In the browser, a sandbox can be implemented by combining the with statement with a Proxy object (window.Proxy).
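Here is a minimal sketch of the `with` + `Proxy` idea; it is not the kernel's actual implementation. `ProxySandbox`, `run` and `clear` are hypothetical names. The real global is passed in as a plain object so the example runs outside a browser; in a browser it would be `window`. Writes go to a private map, and reads fall back to the real global.

```typescript
// Minimal Proxy + `with` sandbox: writes from sub-application code land in a
// private map instead of the real global object.
class ProxySandbox {
  private readonly writes = new Map<PropertyKey, unknown>();
  readonly proxy: object;

  constructor(realGlobal: object) {
    const writes = this.writes;
    const proxy: object = new Proxy(Object.create(null) as object, {
      // Claim every identifier so lookups inside `with` never reach the outer scope.
      has: () => true,
      get(_target, key) {
        // `with` consults Symbol.unscopables; undefined means nothing is excluded.
        if (key === Symbol.unscopables) return undefined;
        // Make `window.x = ...` in sub-app code hit the sandbox, not the real global.
        if (key === "window" || key === "self" || key === "globalThis") return proxy;
        if (writes.has(key)) return writes.get(key);
        return Reflect.get(realGlobal, key); // fall back to the real global for reads
      },
      set(_target, key, value) {
        writes.set(key, value); // never touches realGlobal
        return true;
      },
    });
    this.proxy = proxy;
  }

  // Runs a script string with the proxy as both its scope and its `this`.
  run(code: string): void {
    // Functions built with `new Function` are sloppy-mode, so `with` is allowed here.
    const fn = new Function("sandbox", `with (sandbox) { ${code} }`);
    fn.call(this.proxy, this.proxy);
  }

  // On unmount: discard everything the sub-application wrote.
  clear(): void {
    this.writes.clear();
  }
}
```

Production sandboxes also have to bind native window functions (such as `setTimeout`) to the real window. They must cope with Content Security Policies that forbid `new Function`, and block escape routes such as indirect `eval`. This sketch ignores all of that.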

CSS style isolation: when the base application and sub-applications render on the same screen, their styles may pollute each other. To isolate CSS completely, you can use CSS Modules, or a namespace that gives each sub-application a specific prefix, so that they cannot interfere with each other. A PostCSS plugin in the webpack build can add the prefix at packaging time.
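The following naive TypeScript sketch shows what namespace prefixing does to selectors. It rewrites a selector list so that every rule is scoped under a sub-application's root class. The `.app-report` namespace is hypothetical. A real build would apply this inside a PostCSS plugin that operates on the parsed stylesheet rather than on raw strings.

```typescript
// Naive selector prefixer illustrating namespace-based CSS isolation.
function prefixSelector(selectorList: string, namespace: string): string {
  return selectorList
    .split(",") // naive: breaks on commas inside :is()/:not() argument lists
    .map((s) => s.trim())
    .filter((s) => s.length > 0)
    .map((s) => {
      // Page-level selectors are mapped onto the sub-app's root element instead.
      if (s === "html" || s === "body" || s === ":root") return namespace;
      // Already-prefixed selectors are left alone so the transform is idempotent.
      if (s === namespace || s.startsWith(namespace + " ")) return s;
      return `${namespace} ${s}`;
    })
    .join(", ");
}
```

Mapping `html`, `body` and `:root` onto the namespace keeps a sub-application's page-level rules from styling the base. The comma split is deliberately naive and would mis-handle selectors such as `:is(.a, .b)`.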

CSS isolation between sub-applications is simpler. Whenever an application is loaded, all of its link and style elements are marked, and when it is unloaded, the marked link and style elements are removed from the page.
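Here is a sketch of the mark-and-remove idea. It assumes the base can patch `appendChild` on the document head while a sub-application is loading. `trackAppStyles`, the `microApp` data attribute and the stub types are hypothetical. In a browser, cleanup would remove the marked elements with something like `querySelectorAll('[data-micro-app="report"]')` rather than filtering an array.

```typescript
// Minimal stand-ins for DOM nodes so the sketch runs outside a browser.
interface NodeLike {
  tagName: string;
  dataset: Record<string, string | undefined>;
}
interface HeadLike {
  children: NodeLike[];
  appendChild(node: NodeLike): NodeLike;
}

// Start tracking: link/style nodes appended while `appName` is active are tagged
// with its name. Returns a cleanup function to call when the app unmounts.
function trackAppStyles(head: HeadLike, appName: string): () => void {
  const original = head.appendChild;
  head.appendChild = (node) => {
    const tag = node.tagName.toUpperCase();
    if (tag === "LINK" || tag === "STYLE") node.dataset.microApp = appName;
    return original.call(head, node);
  };
  return () => {
    head.appendChild = original; // stop tagging
    // Remove only the nodes this app added; base styles and other apps' styles stay.
    head.children = head.children.filter((n) => n.dataset.microApp !== appName);
  };
}
```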

[Architecture Core] Core Flow Chart

We packaged route dispatch, application isolation, application loading and common business logic into a second-party package, the micro-front-end kernel. Every business line reuses it, and it serves as a unified internal convention.

[Registry] Apollo

In fact, most companies do not have a registry when they implement micro front ends. Why does our micro front end have one, both as a concept and in practice? The decision mainly follows from our back-end microservice architecture.

Why introduce a registry and add complexity to the overall structure?

Two reasons:

- Our sub-applications do not need to communicate with each other. However, the base application needs resource information for all of them, so that it can maintain a mapping to each sub-application's resource addresses. Most companies hard-code these addresses into the base, so whenever a sub-application is added, removed or changed, the base has to be redeployed, which defeats our original goal of decoupling. The registry moves this mapping out of the base, making the architecture more decoupled and flexible.

- The build output of each sub-application is a hashed JS entry file uploaded to the CDN, and publishing a sub-application must not require republishing the base application. There are two options. One is to give each sub-application its own web server, so that the latest static resources can always be fetched from a fixed HTTP address. The other is to add a registry: publishing a sub-application means pushing its new JS address to the registry, which keeps the sub-application's structure thinner.
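The sketch below illustrates why the mapping no longer needs to live in the base. It builds a lookup table from key/value items delivered by the configuration center and resolves a sub-application's entry URL. The record shape (one key per sub-application, with a JSON string value holding `entry`) and the CDN URL are assumptions for illustration, not the actual Apollo namespace layout.

```typescript
// Hypothetical registry record for one sub-application, as the base might
// receive it from the configuration center (values arrive as JSON strings).
interface AppRecord {
  name: string;
  entry: string; // hashed JS entry on the CDN
  version?: string;
}

// Turn raw key/value config items into a lookup table. Malformed records are
// skipped so that one bad entry does not take down the whole base.
function buildRegistry(items: Record<string, string>): Map<string, AppRecord> {
  const registry = new Map<string, AppRecord>();
  for (const [key, raw] of Object.entries(items)) {
    try {
      const rec = JSON.parse(raw) as Partial<AppRecord>;
      if (typeof rec.entry === "string" && rec.entry.length > 0) {
        registry.set(key, { name: rec.name ?? key, entry: rec.entry, version: rec.version });
      }
    } catch {
      // Malformed JSON (or a null value): ignore this record.
    }
  }
  return registry;
}

// The base asks the registry, not its own source code, where a sub-app lives.
function resolveEntry(registry: Map<string, AppRecord>, app: string): string {
  const rec = registry.get(app);
  if (!rec) throw new Error(`sub-application "${app}" is not registered`);
  return rec.entry;
}
```

With this arrangement, adding or updating a sub-application changes only configuration. The base code stays the same and needs no redeploy.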

If a registry is needed, there are again two options. One is to build a registry dedicated to our micro front ends, which could fit our needs more closely. However, a registry must be highly available, so mechanisms such as circuit breaking and degradation are indispensable, and developing them is difficult and costly. The other is to adopt a mature open-source project that provides registry capabilities, or to rely on components of the company's existing infrastructure.

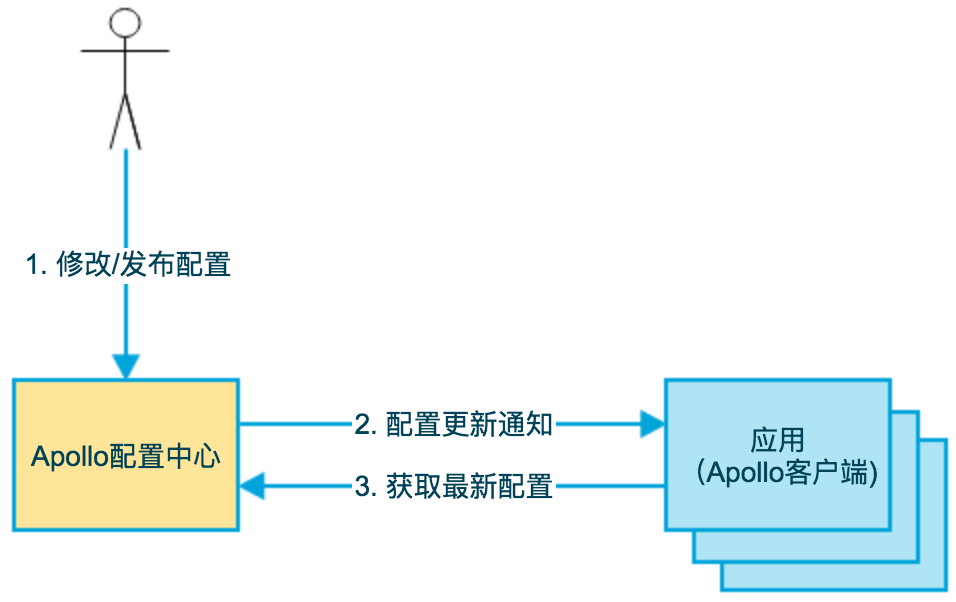

In the end, we chose Apollo, which is part of the company's internal infrastructure. It has two advantages:

- The project is open source and highly mature. It handles multiple environments, real-time updates, version management, gray release, permission management, open APIs and simple deployment well, making it a trustworthy, highly available configuration center.

- The company has customized and deployed a private instance that fits our business better. It runs stably in Java and Node scenarios, and maintainers are on duty.

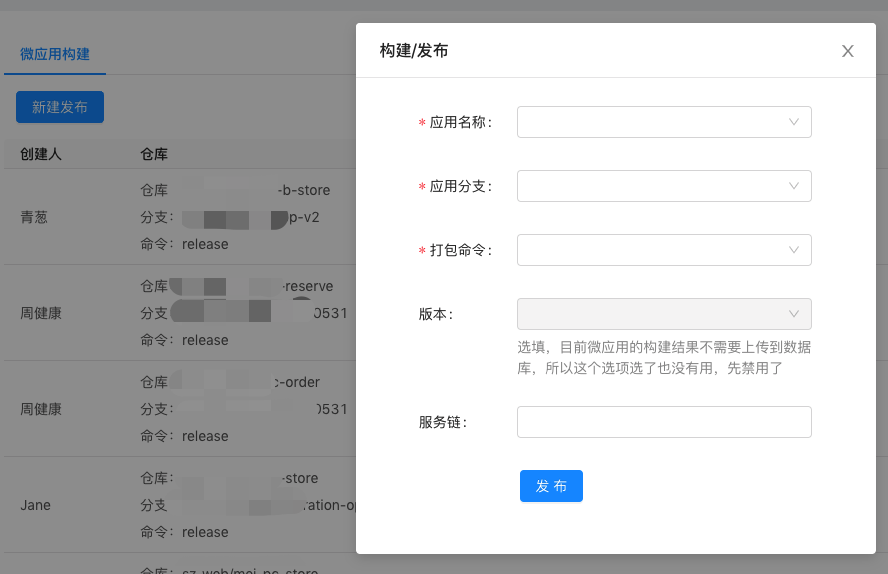

Sub-application packaging and build experience

- Positioning: once built, a sub-application is a hashed static resource waiting to be loaded by the base.

How:

- Package a single-entry static resource that exposes global methods to the base

- Each build generates a hashed entry app.js

- Read the build output and generate the upload configuration

- Upload to Apollo according to the environment parameters
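One way to read "exposes global methods to the base" is as a small lifecycle contract. The sub-application's single entry registers `mount`/`unmount` functions on a shared global, and the base looks them up after loading the hashed script. The global name `__MICRO_APPS__`, the functions `registerMicroApp`/`getMicroApp` and the container shape are hypothetical.

```typescript
// Hypothetical lifecycle contract between a sub-application bundle and the base.
interface MicroAppLifecycle {
  mount(container: { innerHTML: string }, basePath: string): void;
  unmount(container: { innerHTML: string }): void;
}

type MicroAppTable = Record<string, MicroAppLifecycle>;

// Shared global slot; in a browser, globalThis is window.
const g = globalThis as typeof globalThis & { __MICRO_APPS__?: MicroAppTable };

// Called from the sub-application's single entry (app.<hash>.js) when it executes.
function registerMicroApp(name: string, lifecycle: MicroAppLifecycle): void {
  const table: MicroAppTable = g.__MICRO_APPS__ ?? {};
  table[name] = lifecycle;
  g.__MICRO_APPS__ = table;
}

// Called by the base after the entry script has loaded.
function getMicroApp(name: string): MicroAppLifecycle {
  const app = g.__MICRO_APPS__?.[name];
  if (!app) throw new Error(`entry for "${name}" did not register itself`);
  return app;
}
```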

What is the experience like?

Very lightweight: there is no separate release step, just a build.

How does a sub-application push its packaged CDN address to Apollo?

- Read the JSON describing the build output to get the hashed entry file name, plus basic information about the current project.

- Generate the configuration content from this information, then upload it to Apollo through the Apollo platform's open API.
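Here is a sketch of the first step. It assumes the build output is read from a stats object shaped like webpack's `assetsByChunkName`, the entry chunk is named `app`, and the hash is hexadecimal. The environment-to-cluster mapping and the CDN base URL are also hypothetical. The sketch only prepares the key, value and cluster. The actual upload call depends on the company's customized Apollo deployment and is not shown.

```typescript
type Env = "test" | "pre" | "prod";

// Hypothetical mapping from build environment to Apollo cluster name.
const CLUSTER_BY_ENV: Record<Env, string> = { test: "test", pre: "pre", prod: "default" };

interface BuildStats {
  // Shaped like webpack's stats `assetsByChunkName`; a value may be a string or
  // an array depending on the webpack version.
  assetsByChunkName: Record<string, string | string[]>;
}

interface PushPlan { cluster: string; key: string; value: string }

function planPush(
  stats: BuildStats,
  pkg: { name: string; version: string },
  env: Env,
  cdnBase: string,
): PushPlan {
  const assets = stats.assetsByChunkName["app"]; // assumes the entry chunk is named "app"
  const files = Array.isArray(assets) ? assets : assets ? [assets] : [];
  // Pick the hashed JS entry, skipping source maps and other assets.
  const entryFile = files.find((f) => /^app\.[0-9a-f]+\.js$/.test(f));
  if (!entryFile) throw new Error("hashed app.*.js entry not found in build output");
  const value = JSON.stringify({
    name: pkg.name,
    entry: `${cdnBase.replace(/\/$/, "")}/${entryFile}`,
    version: pkg.version,
  });
  return { cluster: CLUSTER_BY_ENV[env], key: pkg.name, value };
}
```

Keeping the plan separate from the upload makes it easy to print and check the payload before anything is pushed to a real environment.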

How do multi-environment publishing and service-chain collaboration work?

- Environments are mainly divided into testing, pre-release and production.

- After packaging, the environment is specified on the micro-front-end build platform.

- When pushing the configuration, specify the corresponding Apollo environment cluster.

- At runtime, the base application reads the registry information from the Apollo cluster for its environment.

[Code Reuse] How to reuse common libraries between sub-applications

1. Add shared as a remote repository

```shell
git remote add shared http://gitlab.xxx-inc.com/xxx/xxx-pc-shared.git
```

2. Add shared to the report project

```shell
git subtree add --prefix=src/shared shared master
```

3. Pull the shared code

```shell
git subtree pull --prefix=src/shared shared master
```

4. Push local changes to shared

```shell
git subtree push --prefix=src/shared shared hotfix/xxx
```

Note: for a newly created sub-application, run steps 1, 2, 3 and 4. To modify shared code from an existing sub-application, run steps 1, 3 and 4.

[Code Reuse] Caveats when using shared

- After modifying a shared component, push the changes to the shared repository

- If one sub-application updates a component in shared frequently, consider moving the component out of shared and into that sub-application

[Sub-application] How to onboard a sub-application

First, it helps to understand how we position sub-applications:

Once built, a sub-application is a hashed static resource waiting to be loaded by the base. It renders its view in the central content area and has its own sub-routes.

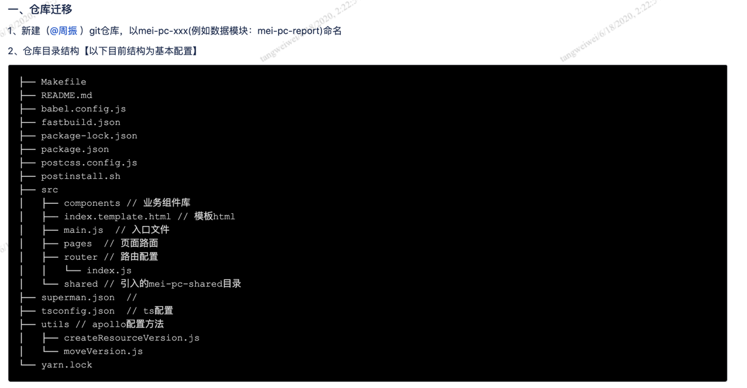

The first step is to create a new repository from our template and move the corresponding sub-application's code into it.

The second step is to add shared and modify a series of configuration files.

The third step is to set up the forwarding configuration needed for development.

The fourth step is to run it, then try packaging and deploying.

[Sub-application] Can a sub-application be debugged on its own? How is joint debugging with the base done?

- Start the base and map the sub-application's ports and resources to your local machine (local debugging mode)

- Zan-proxy

- Local Nginx forwarding

[Sub-application] Sub-application development experience

Part 04 Project Implementation

The winding path from the appearance of a problem to its resolution

1. Our mindset before the project

- I had seen the concept of micro front ends and thought it was just hype: playing with buzzwords and inventing new concepts for their own sake.

- We had a general sense of the project's current problems (something was wrong)

- Starting from the business, we used existing knowledge to think about solutions (found almost none)

- I remembered the micro-front-end concepts and scenarios I had read about and felt they fit our situation (if only life were always like our first meeting)

- Industry solutions proved it viable, so we decided to use micro front ends to shed the bloat (time to break it apart)

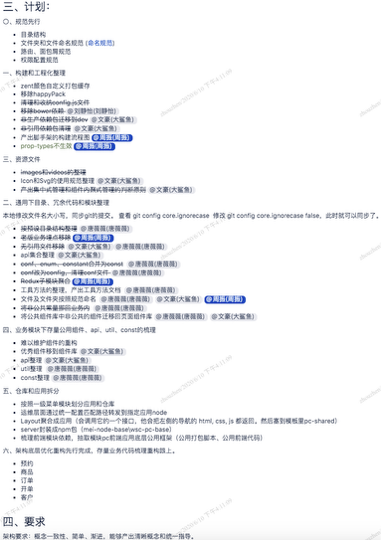

- First, work out an optimization plan for the front-end architecture and produce an architecture diagram (exploring the overall path of the project)

- Next, sort out the dependencies among business modules and see which ones could become sub-applications (sub-application analysis)

- Hold many conversations and discussions with different people to gather information supporting the technology choice (external experts)

- Settle the basic model of the Meiye micro-front-end architecture (basic architecture)

- Produce an outline technical design (making it concrete)

- Define the iteration scope

- Technical review

- Team formation and division of labor

- Kickoff

- But the story has just begun...

2. Micro-front-end reference material

3. PC architecture optimization plan

4. Risks

Foreseen

- Insufficient developer resources

- Technical uncertainty adds schedule risk

- Polishing implementation details takes longer than expected

- Some features are difficult to implement

Unforeseen

- Inaccurate understanding of the project structure

- Task splitting and understanding of boundaries were not thorough enough

- Insufficient tester resources

- Friction in collaboration

5. Iteration project setup

6. Progress

- The PC micro-front-end base (Dock) application is live

- The PC data module has been split out into a sub-application and launched

- Worked with the middle-platform front-end team to extract the Meiye micro-front-end kernel

- Visualization of common utility methods and enumerations

- Together with the Apollo platform, formed a registry for front-end application resources

- Produced sub-application onboarding documentation

- Optimized several parts of the front-end technology system

7. Follow-up plan
