
Foreword

Why Prometheus is said to be born for cloud-native monitoring

- You have probably heard more than once that Prometheus was born for cloud-native monitoring. Have you ever wondered what that actually means?

- In cloud native, containers and k8s serve as the basic runtime environment.

A monolithic architecture is split into many scattered microservices, and those microservices change, scale out, and scale in very frequently, so the set of collection targets changes frequently too. This places the following requirements on a time-series monitoring system:

- It must collect metrics from a massive number of pod containers running on many hosts.

- It must sense changes to those targets promptly.

- It must build a complete k8s monitoring ecosystem.

- Put bluntly, monitoring in a cloud-native environment has become harder and more complicated. What is needed is a system designed for cloud-native monitoring scenarios from the outset, and Prometheus was designed that way.

What adaptations has Prometheus made for k8s?

Which metrics to watch in k8s

The following table briefly lists the four major categories of metrics to watch in k8s:

| Indicator type | Acquisition source | Application examples | discovery type | grafana screenshot |

|---|---|---|---|---|

| Container Basic Resource Metrics | kubelet built-in cadvisor metrics interface | View container cpu, mem utilization, etc. | k8s_sd node level directly accesses node_ip |  |

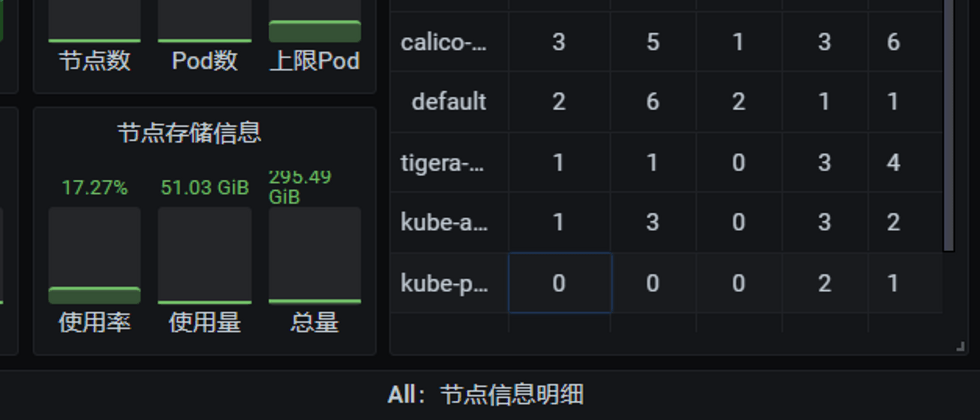

| k8s resource metrics | kube-stats-metrics (referred to as ksm) | For details, see the many components of k8s from container monitoring kube-stats-metrics Look at the pod status, such as the number of reasons for the pod waiting state, such as: check the distribution of node pods by namespace | Access domain names through coredns |  |

| k8s service component metrics | Service component metrics interface | View apiserver, scheduler, etc, coredns request delay, etc. | k8s_sd endpoint level |  |

| Deployed in the pod business buried point indicators | pod metrics interface | Scenarios based on business indicators | k8s_sd pod level, access metricspath of pod ip |

Adaptation 1. SDK + self-exposed metrics + pull model: building the entire k8s monitoring ecosystem

- The table above lists the four major categories of metrics to watch in k8s.

- In practice, k8s users can be split into two roles, cluster administrators and ordinary users, and each role cares about different metrics.

Wouldn't it be exhausting to collect everything yourself?

- With so many needs, a monitoring system that collected everything by itself would, first, be worn out by the work and, second, never manage to build such a complete ecosystem.

The secret

- Prometheus collects with a pull model. Each monitored source only needs to expose its own metrics on a local HTTP port, and Prometheus scrapes that endpoint to collect them.

- The same holds for Prometheus in k8s: components expose their own metrics. For example, the kubelet's built-in cAdvisor metrics, listed above under container basic resource metrics, are exposed at /metrics/cadvisor on port 10250.

- Prometheus discovers these metric sources through k8s service discovery and then scrapes them.
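The pull model can be sketched in a few lines of Python. This is a minimal sketch, not a real exporter: the metric names demo_requests_total and demo_inflight_requests and their values are hypothetical, and the output uses only the basic `name value` lines of the Prometheus text exposition format, without HELP/TYPE metadata. A real component would normally use an official client library (the SDK) instead.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical metrics this toy "monitored source" exposes.
METRICS = {"demo_requests_total": 42, "demo_inflight_requests": 3}


def render_exposition(metrics):
    """Render samples as plain `name value` lines (no HELP/TYPE metadata)."""
    return "".join(f"{name} {value}\n" for name, value in sorted(metrics.items()))


class MetricsHandler(BaseHTTPRequestHandler):
    """Serves the metrics on /metrics; the source just waits to be pulled."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_exposition(METRICS).encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def scrape(url):
    """The Prometheus side of the pull model: a plain HTTP GET on the target."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode()


def serve_in_background():
    """Start the toy source on a free localhost port and return the server."""
    server = HTTPServer(("127.0.0.1", 0), MetricsHandler)  # port 0: pick any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = serve_in_background()
    port = server.server_address[1]
    print(scrape(f"http://127.0.0.1:{port}/metrics"), end="")
    server.shutdown()
    server.server_close()
```

The design point is that the source does no pushing at all: it only answers GETs, so adding a new source never requires changing the monitoring system, only letting it discover the new endpoint.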

Adaptation 2. k8s service discovery

Example

- 1. Service discovery at the endpoint level, e.g. when collecting apiserver, kube-controller-manager, etc.:

kubernetes_sd_configs:
- role: endpoints

- 2. Service discovery at the node level, e.g. when collecting cAdvisor and the kubelet's own metrics:

kubernetes_sd_configs:
- role: node

- 3. Service discovery at the pod level, e.g. when collecting pods' self-instrumented metrics:

kubernetes_sd_configs:
- role: pod
  follow_redirects: true

Interpretation: real-time updates through watch

- Discovering resource changes in real time through watch meets one of the cloud-native monitoring challenges raised at the beginning: changes in collection targets must be sensed promptly.

- In addition, inside the flat "large layer-2" network of k8s, Prometheus can directly reach the endpoints, nodes, and pods it discovers.
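To make the watch idea concrete, here is a toy Python model of pod-level discovery. It is a sketch under simplifying assumptions, not Prometheus's implementation (which builds on client-go informers): pods are plain dicts with hypothetical namespace/name/ip/port/annotations fields, each pod yields exactly one target (the real pod role creates one target per declared container port), and only the ADDED, MODIFIED and DELETED watch event types are handled. Annotation keys become __meta_kubernetes_pod_annotation_* labels by replacing characters that are not allowed in label names with underscores, which is how prometheus.io/scrape turns into ..._prometheus_io_scrape.

```python
import re

META = "__meta_kubernetes_"


def sanitize(name):
    """Replace characters that are not valid in a label name with '_'."""
    return re.sub(r"[^a-zA-Z0-9_]", "_", name)


def pod_to_target(pod):
    """Build the label set of the (single, simplified) target for one pod."""
    labels = {
        "__address__": f"{pod['ip']}:{pod['port']}",
        META + "namespace": pod["namespace"],
        META + "pod_name": pod["name"],
    }
    for key, value in pod.get("annotations", {}).items():
        labels[META + "pod_annotation_" + sanitize(key)] = value
    return labels


class PodTargets:
    """Keeps the current target set in sync with a stream of watch events."""

    def __init__(self):
        self.targets = {}  # (namespace, name) -> label set

    def apply(self, event_type, pod):
        key = (pod["namespace"], pod["name"])
        if event_type in ("ADDED", "MODIFIED"):
            self.targets[key] = pod_to_target(pod)  # new or changed pod: (re)build target
        elif event_type == "DELETED":
            self.targets.pop(key, None)  # pod is gone: stop scraping it
        # other event types (e.g. BOOKMARK) leave the target set unchanged here


if __name__ == "__main__":
    watcher = PodTargets()
    pod = {"namespace": "default", "name": "web-0", "ip": "10.244.1.7",
           "port": 9102, "annotations": {"prometheus.io/scrape": "true"}}
    watcher.apply("ADDED", pod)
    watcher.apply("MODIFIED", dict(pod, ip="10.244.2.9"))  # rescheduled with a new IP
    print(watcher.targets)
    watcher.apply("DELETED", pod)
    print(watcher.targets)  # {}
```

Because each event updates the target map immediately, a rescheduled pod is scraped at its new IP without any periodic re-listing, which is the "sense changes in time" requirement from the foreword.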

Adaptation 3. Scrape authentication: token and certificate

Many k8s interfaces require authentication, some even mutual (two-way) TLS

- Many interfaces in k8s enforce access authentication. For example, directly requesting the kubelet's /metrics/cadvisor endpoint on a k8s node returns Unauthorized, as shown below:

[root@Kubernetes-node01 logs]# curl -k https://localhost:10250/metrics/cadvisor
Unauthorized

- Prometheus faces the same authentication problem when collecting cAdvisor metrics.

Solution

- The Prometheus developers solved this by supporting tokens and certificates in the scrape configuration. For example, the following configuration specifies a token file together with a CA certificate file:

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
tls_config:
  ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  insecure_skip_verify: true

- In k8s, a ServiceAccount takes care of mounting the token and certificate into the pod, and a ClusterRoleBinding grants that ServiceAccount its permissions.

- Prometheus takes advantage of this: the Prometheus pod runs under its own ServiceAccount. An example of the ClusterRole, ServiceAccount, and ClusterRoleBinding configuration is as follows:

apiVersion: rbac.authorization.k8s.io/v1 # API version
kind: ClusterRole # resource kind
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources: # resources
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus # custom name
  namespace: kube-system # namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef: # the role to bind
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin # binds the built-in cluster-admin role, not the prometheus ClusterRole above
subjects: # who the role is granted to
- kind: ServiceAccount
  name: prometheus
  namespace: kube-system

- When creating the Prometheus StatefulSet, set the corresponding serviceAccountName in its YAML (under spec.template.spec):

serviceAccountName: prometheus

- Once this is configured, Kubernetes mounts the corresponding token and certificate files into the pod.

- Exec into the Prometheus pod and you can see the files under /var/run/secrets/kubernetes.io/serviceaccount/, as shown below:

/ # ls /var/run/secrets/kubernetes.io/serviceaccount/ -l
total 0
lrwxrwxrwx 1 root root 13 Jan 7 20:54 ca.crt -> ..data/ca.crt
lrwxrwxrwx 1 root root 16 Jan 7 20:54 namespace -> ..data/namespace
lrwxrwxrwx 1 root root 12 Jan 7 20:54 token -> ..data/token
/ # df -h |grep service
tmpfs 7.8G 12.0K 7.8G 0% /var/run/secrets/kubernetes.io/serviceaccount

- When collecting etcd, you need to create a Secret holding the relevant certificates:

kubectl create secret generic etcd-certs --from-file=/etc/kubernetes/pki/etcd/healthcheck-client.crt --from-file=/etc/kubernetes/pki/etcd/healthcheck-client.key --from-file=/etc/kubernetes/pki/etcd/ca.crt -n kube-system
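What bearer_token_file and tls_config do on the client side can be sketched with Python's standard library. This is an illustration under assumptions, not Prometheus's code: build_request, build_tls_context and scrape are hypothetical helper names, the default paths are the ServiceAccount mount point shown in the listing above, and the optional client certificate/key pair stands in for the etcd healthcheck-client files once the Secret is mounted into the pod (the mount path is up to you).

```python
import ssl
import urllib.request
from pathlib import Path

# Default ServiceAccount mount point inside the pod (see the listing above).
SA_DIR = Path("/var/run/secrets/kubernetes.io/serviceaccount")


def build_request(url, token_file=SA_DIR / "token"):
    """Like bearer_token_file: read the token and send it as a Bearer header."""
    token = Path(token_file).read_text().strip()
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def build_tls_context(ca_file=None, cert_file=None, key_file=None,
                      insecure_skip_verify=False):
    """Rough analogue of tls_config.

    ca_file verifies the server certificate; cert_file/key_file add a client
    certificate for mutual TLS (as etcd requires); insecure_skip_verify turns
    server verification off entirely.
    """
    ctx = ssl.create_default_context(cafile=ca_file)
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if insecure_skip_verify:
        ctx.check_hostname = False  # must be disabled before setting CERT_NONE
        ctx.verify_mode = ssl.CERT_NONE
    return ctx


def scrape(url, token_file=SA_DIR / "token", ca_file=SA_DIR / "ca.crt"):
    """Authenticated scrape, e.g. of https://<node_ip>:10250/metrics/cadvisor."""
    request = build_request(url, token_file)
    context = build_tls_context(ca_file=str(ca_file))
    with urllib.request.urlopen(request, context=context, timeout=10) as resp:
        return resp.read().decode()
```

The token answers "who is calling" (the kubelet then checks RBAC for that ServiceAccount), while the TLS context answers "is this really the server I meant to talk to" and, with a client certificate, lets etcd authenticate the caller as well.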

Adaptation 4. Powerful relabeling for label trimming, transformation, and static sharding

Prometheus relabel description

- Documentation: https://prometheus.io/docs/prometheus/latest/configuration/configuration/#relabel_config

Application 1: labelmap trims the names of service discovery labels when collecting cAdvisor metrics

- When collecting cAdvisor, you can see that service discovery has added many labels whose names start with __meta_kubernetes_node_label_

- These label names are too long and need shortening, so we use the following relabel configuration:

relabel_configs:
- separator: ;
  regex: __meta_kubernetes_node_label_(.+)
  replacement: $1
  action: labelmap

- Take the label __meta_kubernetes_node_label_kubernetes_io_os="linux" as an example. The configuration above matches label names beginning with __meta_kubernetes_node_label_ and keeps only the part after that prefix, so the final label is kubernetes_io_os="linux".

- labelmap copies the value of each matching label to a new label whose name is given by replacement (here $1, the captured suffix).
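The labelmap step can be modelled in Python to check what a rule will produce. This is a simplified sketch, not Prometheus's relabel code: Python's re stands in for Go's RE2 (equivalent for simple patterns like this one), the regex is anchored at both ends as Prometheus does, and finalize only mimics two post-relabel steps, dropping labels that start with __ and defaulting instance to __address__. The function names and the node address are hypothetical.

```python
import re


def expand(template, match):
    """Substitute $1 / ${1} group references; unknown groups expand to ''."""
    def group(ref):
        n = int(ref.group(1) or ref.group(2))
        return (match.group(n) if n <= match.re.groups else None) or ""
    return re.sub(r"\$(?:\{(\d+)\}|(\d+))", group, template)


def labelmap(labels, regex, replacement="$1"):
    """Copy each label whose *name* fully matches regex to the expanded name.

    labelmap looks at every label name; source_labels is not used.
    """
    pattern = re.compile(regex)
    out = dict(labels)
    for name, value in labels.items():
        match = pattern.fullmatch(name)
        if match:
            out[expand(replacement, match)] = value
    return out


def finalize(labels):
    """Drop internal __ labels; instance defaults to __address__."""
    out = {k: v for k, v in labels.items() if not k.startswith("__")}
    out.setdefault("instance", labels.get("__address__", ""))
    return out


if __name__ == "__main__":
    discovered = {
        "__address__": "192.168.0.11:10250",
        "__meta_kubernetes_node_label_kubernetes_io_os": "linux",
    }
    mapped = labelmap(discovered, r"__meta_kubernetes_node_label_(.+)")
    print(finalize(mapped))
    # {'kubernetes_io_os': 'linux', 'instance': '192.168.0.11:10250'}
```

Note that the long __meta_ label does not have to be deleted explicitly: it disappears on its own because labels starting with __ are dropped once relabeling is finished.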

Application 2: replace assigns label values when collecting pods' custom metrics

To use pod custom metrics, we define three annotations prefixed with prometheus.io under spec.template.metadata.annotations in the pod's YAML. They indicate, respectively, whether Prometheus should scrape the pod, the port on which metrics are exposed, and the HTTP path of the metrics. These annotations carry no built-in meaning; they only take effect through the relabel rules that follow. The detailed configuration is as follows:

spec:
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/port: '9102'
        prometheus.io/path: 'metrics'

When collecting pod custom metrics, the following relabel configuration is used:

relabel_configs:
- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
  separator: ;
  regex: "true"
  replacement: $1
  action: keep
- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
  separator: ;
  regex: (.+)
  target_label: __metrics_path__
  replacement: $1
  action: replace
- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
  separator: ;
  regex: ([^:]+)(?::\d+)?;(\d+)
  target_label: __address__
  replacement: $1:$2
  action: replace

- The first rule keeps only pods annotated with prometheus.io/scrape: 'true'.

- The second rule assigns the value of __meta_kubernetes_pod_annotation_prometheus_io_path to __metrics_path__.

- The third rule assigns the value of __meta_kubernetes_pod_annotation_prometheus_io_port as the port of __address__, keeping the host and dropping any existing port.
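The three rules above can be replayed against a hand-written label set to see how the pod's address and metrics path are rewritten. This is a minimal Python sketch of the keep and replace actions only, assuming Python's re behaves like RE2 for these patterns; the pod IP and container port are made-up values, and relabel() and apply_rules() are hypothetical helpers, not a Prometheus API. Defaults follow the documented relabel_config defaults (separator ";", regex "(.*)", replacement "$1", action replace).

```python
import re


def expand(template, match):
    """Substitute $1 / ${1} references; unknown groups expand to ''."""
    def group(ref):
        n = int(ref.group(1) or ref.group(2))
        return (match.group(n) if n <= match.re.groups else None) or ""
    return re.sub(r"\$(?:\{(\d+)\}|(\d+))", group, template)


def relabel(labels, rule):
    """Apply one keep/replace rule; return None when the target is dropped."""
    sep = rule.get("separator", ";")
    value = sep.join(labels.get(name, "") for name in rule.get("source_labels", []))
    match = re.fullmatch(rule.get("regex", "(.*)"), value)  # fully anchored match
    action = rule.get("action", "replace")
    if action == "keep":
        return dict(labels) if match else None
    if action == "replace":
        out = dict(labels)
        if match:  # no match: labels stay untouched
            result = expand(rule.get("replacement", "$1"), match)
            if result:
                out[rule["target_label"]] = result
            else:
                out.pop(rule["target_label"], None)  # empty result deletes the label
        return out
    raise ValueError(f"action not covered by this sketch: {action}")


RULES = [
    {"source_labels": ["__meta_kubernetes_pod_annotation_prometheus_io_scrape"],
     "regex": "true", "action": "keep"},
    {"source_labels": ["__meta_kubernetes_pod_annotation_prometheus_io_path"],
     "regex": "(.+)", "target_label": "__metrics_path__", "action": "replace"},
    {"source_labels": ["__address__", "__meta_kubernetes_pod_annotation_prometheus_io_port"],
     "regex": r"([^:]+)(?::\d+)?;(\d+)", "target_label": "__address__",
     "replacement": "$1:$2", "action": "replace"},
]


def apply_rules(labels, rules=RULES):
    """Run the rules in order, stopping as soon as a keep drops the target."""
    for rule in rules:
        labels = relabel(labels, rule)
        if labels is None:
            return None
    return labels


if __name__ == "__main__":
    pod = {
        "__address__": "10.244.1.7:8080",
        "__meta_kubernetes_pod_annotation_prometheus_io_scrape": "true",
        "__meta_kubernetes_pod_annotation_prometheus_io_port": "9102",
        "__meta_kubernetes_pod_annotation_prometheus_io_path": "metrics",
    }
    result = apply_rules(pod)
    print(result["__address__"], result["__metrics_path__"])  # 10.244.1.7:9102 metrics
```

In the third rule the joined value is "10.244.1.7:8080;9102": $1 captures the host, the optional (?::\d+) swallows the old port, and $2 captures the annotated port. If the port annotation is missing, the regex does not match and __address__ is left as discovered.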

Application 3: keep filters targets when collecting service component endpoints

- An Endpoints resource lists the IP addresses and ports that back a Service.

- Endpoint-level k8s service discovery finds a large number of endpoints, so we need a filter that selects only the apiserver:

kubernetes_sd_configs:
- role: endpoints

- The filter keeps a target only when __meta_kubernetes_namespace matches default, __meta_kubernetes_service_name matches kubernetes, and __meta_kubernetes_endpoint_port_name matches https, using keep:

relabel_configs:
- source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
  separator: ;
  regex: default;kubernetes;https
  replacement: $1
  action: keep

- k8s creates the apiserver Service, named kubernetes, in the default namespace:

$ kubectl get svc -A |grep 443
default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 9d

- The discovered endpoint is turned into the following scrape URL:

https://masterip:6443/metrics
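The apiserver filter and the final URL assembly can be checked the same way. The sketch below assumes the targets have already been discovered (both endpoint addresses are made up) and that the job sets scheme to https; __scheme__ and __metrics_path__ otherwise fall back to the scrape config defaults http and /metrics. keep_target and scrape_url are hypothetical helpers, and the URL assembly is simplified (query parameters are ignored).

```python
import re


def keep_target(labels, source_labels, regex, separator=";"):
    """keep action: retain the target only if the joined values fully match."""
    value = separator.join(labels.get(name, "") for name in source_labels)
    return re.fullmatch(regex, value) is not None


def scrape_url(labels):
    """Assemble the URL Prometheus will pull from a target's final labels."""
    scheme = labels.get("__scheme__", "http")
    path = labels.get("__metrics_path__", "/metrics")
    return f"{scheme}://{labels['__address__']}{path}"


SOURCE_LABELS = [
    "__meta_kubernetes_namespace",
    "__meta_kubernetes_service_name",
    "__meta_kubernetes_endpoint_port_name",
]

# Hypothetical endpoint-level discovery results.
DISCOVERED = [
    {"__address__": "192.168.0.10:6443", "__scheme__": "https",
     "__meta_kubernetes_namespace": "default",
     "__meta_kubernetes_service_name": "kubernetes",
     "__meta_kubernetes_endpoint_port_name": "https"},
    {"__address__": "10.244.0.3:9153", "__scheme__": "https",
     "__meta_kubernetes_namespace": "kube-system",
     "__meta_kubernetes_service_name": "kube-dns",
     "__meta_kubernetes_endpoint_port_name": "metrics"},
]

if __name__ == "__main__":
    kept = [t for t in DISCOVERED
            if keep_target(t, SOURCE_LABELS, "default;kubernetes;https")]
    for target in kept:
        print(scrape_url(target))  # https://192.168.0.10:6443/metrics
```

Because the endpoint carries the apiserver's real address rather than the Service's ClusterIP, the URL ends up on port 6443 even though the kubernetes Service listens on 443.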

The adaptations Prometheus made for k8s monitoring

| Adaptation | Description | Example |
|---|---|---|
| Self-exposed metrics for each component | Every component exposes its own metrics on its service port, and Prometheus pulls them | apiserver:6443/metrics |
| k8s service discovery | Discovers resource changes instantly through watch | kubernetes_sd_configs: - role: node |
| Authentication | k8s component interfaces all require authentication, so a k8s collector must support configuring authentication | Supports configuring a token and TLS certificates |
| Relabeling | Filters and transforms service discovery targets | labelmap removes the long prefix from service discovery labels; replace rewrites label values; hashmod performs static sharding |
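The hashmod entry in the last row is the only relabel action not walked through above, so here is a hedged Python sketch of static sharding. It assumes the hashing used by Prometheus's relabel code as I understand it (MD5 of the joined source label values, last 8 bytes read as a big-endian unsigned integer, modulo the configured modulus); treat that exact detail as an assumption. The usual pattern is a hashmod rule writing to a temporary label such as __tmp_hash, followed by a keep rule on that label, so each Prometheus replica scrapes only its own shard. hashmod and shard are hypothetical helper names.

```python
import hashlib


def hashmod(labels, source_labels, modulus, separator=";"):
    """hashmod action: hash the joined source values, then take it modulo `modulus`."""
    value = separator.join(labels.get(name, "") for name in source_labels)
    digest = hashlib.md5(value.encode("utf-8")).digest()
    # Assumption: only the last 8 bytes, read big-endian, feed the modulo.
    return int.from_bytes(digest[8:], "big") % modulus


def shard(targets, modulus, index, source_labels=("__address__",)):
    """Same effect as hashmod -> __tmp_hash followed by keep with regex `index`."""
    return [t for t in targets
            if hashmod(t, source_labels, modulus) == index]


if __name__ == "__main__":
    targets = [{"__address__": f"10.0.0.{i}:9100"} for i in range(1, 11)]
    for replica in range(3):
        # Each of 3 hypothetical Prometheus replicas scrapes only its own shard.
        print(replica, [t["__address__"] for t in shard(targets, 3, replica)])
```

The split is "static" because it depends only on the hash of the target's labels and the fixed modulus: every replica computes the same assignment independently, without coordinating, but changing the modulus reshuffles most targets.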
