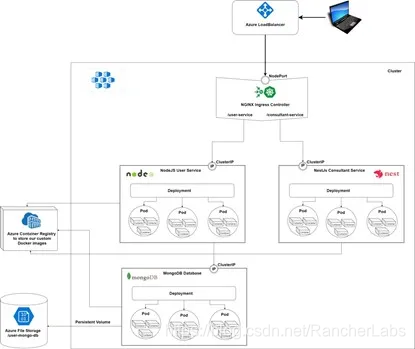

This article is one of a series of articles that introduces the basic concepts of Kubernetes. In the first article, we briefly introduced Persistent Volumes. In this article, we will learn how to set up data persistence and will write a Kubernetes script to connect our Pod to a persistent volume. In this example, Azure File Storage will be used to store data from our MongoDB database, but you can use any type of volume to achieve the same result (such as Azure Disk, GCE Persistent Disk, or AWS Elastic Block Store).

If you want to fully understand the other Kubernetes concepts, you can first check the previously published articles in this series.

Please note: the scripts provided in this article are not tied to a particular platform, so you can use another cloud provider or a local cluster running K3s to practice this tutorial. This article recommends K3s because it is very lightweight: all of its dependencies are packaged in a single binary smaller than 100 MB. It is also a highly available, certified Kubernetes distribution designed for production workloads in resource-constrained environments. For more information, please check the official K3s documentation.

Prerequisites

Before starting this tutorial, make sure Docker is installed. You will also need kubectl; if it is not installed yet, follow the instructions at:

https://kubernetes.io/docs/tasks/tools/#install-kubectl-on-windows

All the kubectl commands used in this tutorial can be found in the kubectl Cheat Sheet:

https://kubernetes.io/docs/reference/kubectl/cheatsheet/

In this tutorial, we will use Visual Studio Code, but you can also use other editors.

What problems can Kubernetes persistent volumes solve?

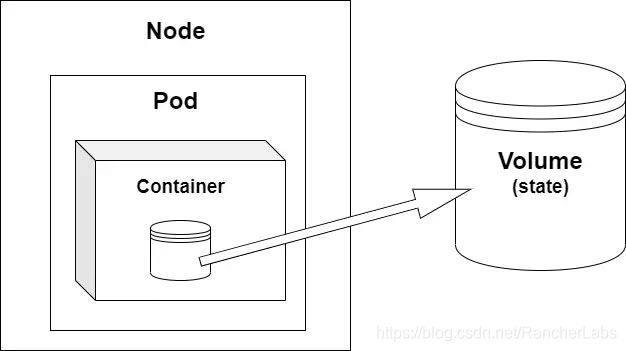

Remember that we have a node (a physical or virtual machine), inside the node we have one or more Pods, and inside each Pod we have containers. Pods are ephemeral: they come and go, being deleted, rescheduled, and so on. If you want the data in a Pod to survive after the Pod is deleted, you need to move that data outside the Pod so that it can exist independently of any Pod. This external location is called a volume, which is an abstraction of a storage system. With volumes, you can keep persistent state across multiple Pods.

When to use persistent volumes

When containers began to be widely used, they were designed to support stateless workloads, and their persistent data was stored elsewhere. Since then, many efforts have been made to support stateful applications in the container ecosystem.

Every project needs some kind of data persistence, so you usually need a database to store the data. But in a clean design you don't want to depend on a specific implementation; you want to write an application that is as reusable and platform-independent as possible.

Hiding the details of the storage implementation from the application has always been desirable. But in the era of cloud-native applications, cloud providers have created environments in which applications or users that want to access data must integrate with specific storage systems. For example, many applications directly use specific storage systems such as Amazon S3, Azure Files, or block storage, which creates unhealthy dependencies. Kubernetes tries to change this with an abstraction called Persistent Volumes, which lets cloud-native applications connect to various cloud storage systems without establishing explicit dependencies on them. This can make consuming cloud storage more seamless and reduce integration costs. It also makes it easier to migrate between clouds and to adopt a multi-cloud strategy.

Even when constraints such as money, time, or manpower force you to compromise and couple your application directly to a specific platform or provider, you should avoid as many direct dependencies as possible. One way to decouple the application from the actual database implementation (there are other solutions, but they are more complex) is to use containers, together with persistent volumes to prevent data loss. This way, your application relies on abstractions rather than on concrete implementations.

The real question now is: should we always run databases in containers with persistent volumes, or are there types of storage systems that should not be run in containers?

There is no universal golden rule for when to use persistent volumes, but as a starting point you should consider scalability and how the system handles the loss of a node in the cluster.

In terms of scalability, there are two types of storage systems:

- Vertically scaled: traditional RDBMS solutions such as MySQL, PostgreSQL, and SQL Server

- Horizontally scaled: "NoSQL" solutions such as Elasticsearch or Hadoop-based solutions

Vertically scalable solutions such as MySQL, PostgreSQL, and Microsoft SQL Server should not be containerized. These database platforms require high I/O, shared disks, block storage, and so on, and they cannot gracefully handle the loss of a node in the cluster, which is common in container-based ecosystems.

For horizontally scalable applications (Elasticsearch, Cassandra, Kafka, etc.), you should use containers, because they can withstand the loss of nodes in the database cluster and the database application can rebalance independently.

Generally, you can and should containerize distributed databases that use redundant storage technology and can withstand the loss of nodes in the database cluster (Elasticsearch is a very good example).

Types of persistent volumes in Kubernetes

We can classify Kubernetes volumes according to their life cycle and to how they are provisioned.

By life cycle, volumes can be divided into:

- Temporary (ephemeral) volumes, which are tightly coupled with the life cycle of the Pod or node (such as emptyDir or hostPath). If the node goes down, the volume is lost.

- Persistent volumes, that is, long-term storage that is independent of the Pod or node life cycle. These can be cloud volumes (such as gcePersistentDisk, awsElasticBlockStore, azureFile, or azureDisk), NFS (Network File System), or Persistent Volume Claims (a series of abstractions for connecting to storage volumes provided by the underlying cloud).
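To make the temporary case concrete, here is a minimal sketch of a Pod that mounts an emptyDir volume. The Pod name `scratch-demo` and the `busybox:1.36` image are assumptions chosen for illustration only. Whatever the container writes to `/cache` disappears once the Pod is removed from its node, which is exactly the problem the persistent options in the second bullet address.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: scratch-demo              # hypothetical name, for illustration only
spec:
  containers:
    - name: app
      image: busybox:1.36         # assumed image; any small image with a shell works
      command: ["sh", "-c", "echo hello > /cache/note.txt && sleep 3600"]
      volumeMounts:
        - name: cache
          mountPath: /cache       # the file written here lives only as long as the Pod
  volumes:
    - name: cache
      emptyDir: {}                # temporary volume: deleted together with the Pod
```

Replacing `emptyDir: {}` with a persistent source, such as a `persistentVolumeClaim`, is what keeps the data beyond the Pod's lifetime.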

By how the volume is provisioned, we can divide them into:

- Direct access

- Static provisioning

- Dynamic provisioning

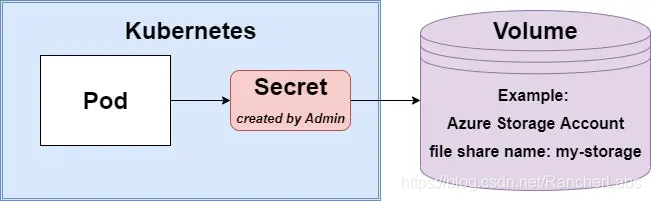

Direct access to persistent volumes

In this case, the Pod is directly coupled with the volume, so it is aware of the storage system (for example, the Pod is coupled with an Azure storage account). This solution is not cloud-agnostic: it depends on a concrete implementation rather than an abstraction, so try to avoid it if possible. Its only advantage is that it is fast to set up: you create a Secret, then reference it in the Pod spec together with the exact storage type to use.

The Secret script is as follows:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: static-persistence-secret
type: Opaque
data:                                                  # both values must be Base64-encoded
  azurestorageaccountname: "base64StorageAccountName"
  azurestorageaccountkey: "base64StorageAccountKey"
```

In any Kubernetes script, line 2 specifies the type of resource; here it is a Secret. Line 4 gives it a name (we call it static because it is created manually by an administrator rather than generated automatically). From Kubernetes' point of view, the Opaque type means that the content (data) of the Secret is unstructured: it can contain arbitrary key-value pairs. To learn more about Kubernetes Secrets, see the Secrets design document and the Kubernetes Secrets documentation:

https://github.com/kubernetes/community/blob/master/contributors/design-proposals/auth/secrets.md

https://kubernetes.io/docs/concepts/configuration/secret/

In the data section, we must specify the account name (in Azure, the name of the storage account) and the access key (in the Azure portal, open the storage account and select Settings > Access keys). Don't forget that both values must be Base64-encoded.
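If you would rather not Base64-encode the values by hand, a Secret can also be written with the `stringData` field, which accepts plain strings; Kubernetes encodes them into `data` when the object is stored, so reading the Secret back shows the Base64 form. A minimal sketch of the same Secret in that form, where the angle-bracket values are placeholders you must replace with your own account name and key:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: static-persistence-secret
type: Opaque
stringData:                                          # plain text; stored Base64-encoded under data
  azurestorageaccountname: "<storage-account-name>"  # placeholder
  azurestorageaccountkey: "<storage-account-key>"    # placeholder
```

Because the name is unchanged, the Deployment that follows can reference this Secret exactly as it would the `data` version.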

The next step is to modify our Deployment script to use the volume (in this case, the volume is Azure File Storage).

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: user-db-deployment
spec:
  selector:
    matchLabels:
      app: user-db-app
  replicas: 1
  template:
    metadata:
      labels:
        app: user-db-app
    spec:
      containers:
        - name: mongo
          image: mongo:3.6.4
          command:
            - mongod
            - "--bind_ip_all"
            - "--directoryperdb"
          ports:
            - containerPort: 27017
          volumeMounts:
            - name: data            # must match the volume name below
              mountPath: /data/db   # MongoDB's default data directory
          resources:
            limits:
              memory: "256Mi"
              cpu: "500m"
      volumes:
        - name: data
          azureFile:
            secretName: static-persistence-secret
            shareName: user-mongo-db
            readOnly: false
```

The key part starts at line 32, where we specify the volume to use: we give it a name and spell out the exact details of the underlying storage system. secretName must match the name of the Secret created earlier.

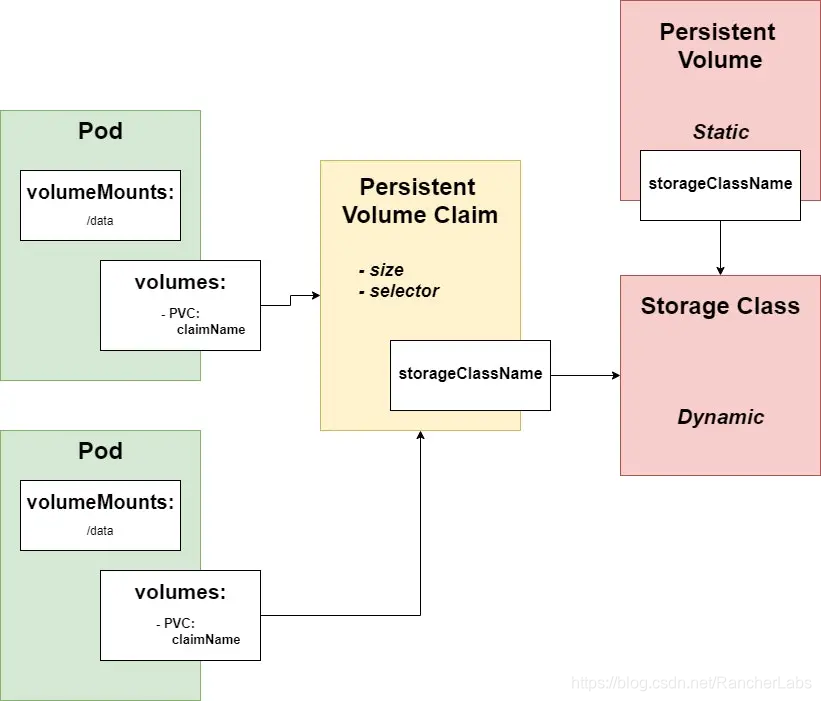

Kubernetes storage class

To understand static and dynamic provisioning, we must first understand the Kubernetes StorageClass.

A StorageClass lets administrators describe the profiles, or "classes", of storage they offer. Different classes might map to quality-of-service levels, to backup policies, or to arbitrary policies determined by the cluster administrators.

For example, you could have one profile that stores data on HDDs, named slow storage, and another that stores data on SSDs, named fast storage. The kind of storage is determined by the provisioner. For Azure there are two provisioners, AzureFile and AzureDisk. The difference is that AzureFile supports the ReadWriteMany access mode, while AzureDisk supports only ReadWriteOnce, which can be a drawback when several Pods need to use the volume at the same time. You can learn about the different types of StorageClass here:

https://kubernetes.io/docs/concepts/storage/storage-classes/

The StorageClass script is as follows:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: azurefilestorage
provisioner: kubernetes.io/azure-file
parameters:
  storageAccount: storageaccountname   # name of your Azure storage account
reclaimPolicy: Retain                  # keep the underlying storage after the PVC and PV are deleted
allowVolumeExpansion: true
```

The provisioner value is predefined by Kubernetes (see the storage classes documentation linked above). The Retain reclaim policy means that after we delete the PVC and the PV, the underlying storage medium is not cleaned up. If we set it to Delete instead, deleting the PVC also triggers deletion of the corresponding PV and of the underlying storage medium (here, Azure File Storage).
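Following the slow/fast example above, an SSD-backed "fast storage" class using the Delete reclaim policy might look like the sketch below. It assumes the in-tree `kubernetes.io/azure-disk` provisioner with the `storageaccounttype` and `kind` parameters described on the storage classes page; newer clusters route in-tree Azure provisioners to the Azure CSI drivers, which may expect different parameters, so check the documentation for your version. The class name `fast-storage` is hypothetical.

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: fast-storage                # hypothetical name
provisioner: kubernetes.io/azure-disk
parameters:
  storageaccounttype: Premium_LRS   # premium (SSD-backed) disk tier
  kind: managed                     # use Azure managed disks
reclaimPolicy: Delete               # deleting the PVC also removes the PV and the disk
allowVolumeExpansion: true
```

Since this class is backed by AzureDisk, claims against it can only use the ReadWriteOnce access mode.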

Persistent Volume and Persistent Volume Claim

Kubernetes has a matching primitive for each traditional storage operation (provisioning, configuring, and attaching): the Persistent Volume handles provisioning, the StorageClass handles configuring, and the Persistent Volume Claim handles attaching.

From the official documentation:

A Persistent Volume (PV) is a piece of storage in the cluster that has been provisioned by an administrator or dynamically provisioned using Storage Classes.

A Persistent Volume Claim (PVC) is a request for storage by a user. It is similar to a Pod: Pods consume node resources and PVCs consume PV resources. Pods can request specific levels of resources (CPU and memory). Claims can request a specific size and access modes (for example, they can be mounted read/write once or read-only many times).

This means that the administrator creates a persistent volume that specifies the storage size, access mode, and storage type that Pods can use. The developer creates a Persistent Volume Claim that asks for a volume, access rights, and a storage type. This way there is a clear separation between the "development side" and the "operations side": developers are responsible for requesting the volume they need (the PVC), and operations staff are responsible for provisioning and configuring it (the PV).

The difference between static and dynamic provisioning is that if there are no persistent volumes and Secrets created manually by the administrator, Kubernetes tries to create these resources automatically.

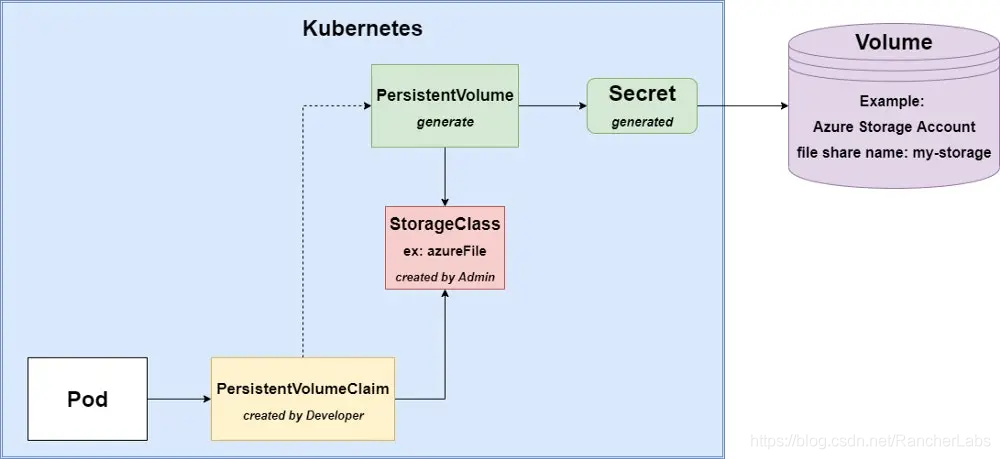

Dynamic provisioning

In this case there are no manually created persistent volumes or Secrets, so Kubernetes tries to generate them. A StorageClass is required; we will use the one created earlier in this article.

The PersistentVolumeClaim script is as follows:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: persistent-volume-claim-mongo
spec:
  accessModes:
    - ReadWriteMany                     # supported by Azure File storage
  resources:
    requests:
      storage: 1Gi
  storageClassName: azurefilestorage    # the StorageClass defined earlier
```

And here is our updated Deployment script:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: user-db-deployment
spec:
  selector:
    matchLabels:
      app: user-db-app
  replicas: 1
  template:
    metadata:
      labels:
        app: user-db-app
    spec:
      containers:
        - name: mongo
          image: mongo:3.6.4
          command:
            - mongod
            - "--bind_ip_all"
            - "--directoryperdb"
          ports:
            - containerPort: 27017
          volumeMounts:
            - name: data
              mountPath: /data/db
          resources:
            limits:
              memory: "256Mi"
              cpu: "500m"
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: persistent-volume-claim-mongo   # the PVC created above
```

As you can see, line 34 refers to the previously created PVC by name. In this case we did not manually create a persistent volume or Secret for it, so they are created automatically.

The most important advantage of this approach is that you don't have to create the PV and Secret manually, and the Deployment is cloud-agnostic: the low-level details of the storage are absent from the Pod's spec. But there are some disadvantages: you cannot configure the storage account or file share, because they are generated automatically, and you cannot reuse the PV or Secret, because they are regenerated for each new claim.
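The cloud-agnostic point can be tried on K3s, as suggested at the start. A stock K3s installation ships a local-path provisioner with a default StorageClass named `local-path`, so an equivalent claim could look like the sketch below (assuming a default K3s setup). Local-path volumes live on a single node's disk and do not support ReadWriteMany, so the access mode changes too. Keeping the claim name means the Deployment above works unchanged, because it only references the claim by name. In a default installation this class binds on first use, so the claim may show as Pending until the Deployment's Pod is scheduled.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: persistent-volume-claim-mongo   # same name, so the Deployment needs no change
spec:
  accessModes:
    - ReadWriteOnce                     # local-path does not support ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: local-path          # default StorageClass in a stock K3s install
```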

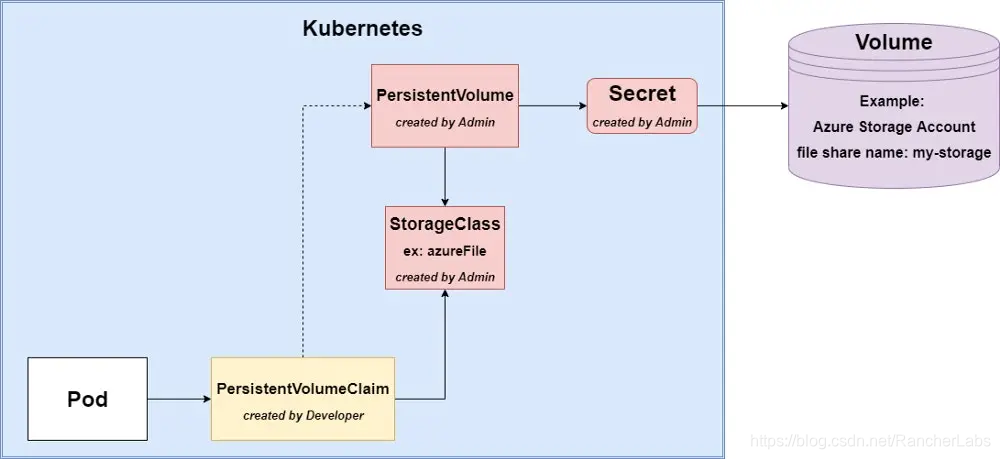

Static provisioning

The only difference between static and dynamic provisioning is that in static provisioning we create the persistent volumes and Secrets manually. This gives us complete control over the resources created in the cluster.

The persistent volume script is as follows:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: static-persistent-volume-mongo
  labels:
    storage: azurefile
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  storageClassName: azurefilestorage           # StorageClass referenced by name
  azureFile:
    secretName: static-persistence-secret      # Secret created manually earlier
    shareName: user-mongo-db
    readOnly: false
```

The important part is that line 12 refers to the StorageClass by name, and line 14 references the Secret used to access the underlying storage system.
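The PersistentVolume is only half of static provisioning; a developer still needs a claim that binds to it. A minimal sketch, assuming we want the claim to pick exactly this PV: it requests the same class, access mode, and size, and uses a label selector on the `storage: azurefile` label set in the PV's metadata. The claim name `static-persistent-volume-claim-mongo` is hypothetical; the Deployment would then reference it in `claimName`, just as in the dynamic example.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: static-persistent-volume-claim-mongo   # hypothetical name
spec:
  accessModes:
    - ReadWriteMany                            # must be offered by the PV
  resources:
    requests:
      storage: 1Gi                             # must not exceed the PV's capacity
  storageClassName: azurefilestorage           # must match the PV's storageClassName
  selector:
    matchLabels:
      storage: azurefile                       # matches the label on the static PV
```

As an alternative to the selector, the claim's `spec.volumeName` field can name the PV (`static-persistent-volume-mongo`) directly.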

This article recommends this approach: even though it requires more work, it is cloud-agnostic. It also lets you apply separation of concerns between roles (cluster administrators and developers) and gives you control over how resources are named and created.

Summary

In this article, we learned how to use volumes to persist data and state, presented three different ways to set up the system (direct access, dynamic provisioning, and static provisioning), and discussed the advantages and disadvantages of each.

About the Author

Czako Zoltan, an experienced full-stack developer, has extensive experience in multiple fields including front-end, back-end, DevOps, Internet of Things and artificial intelligence.
