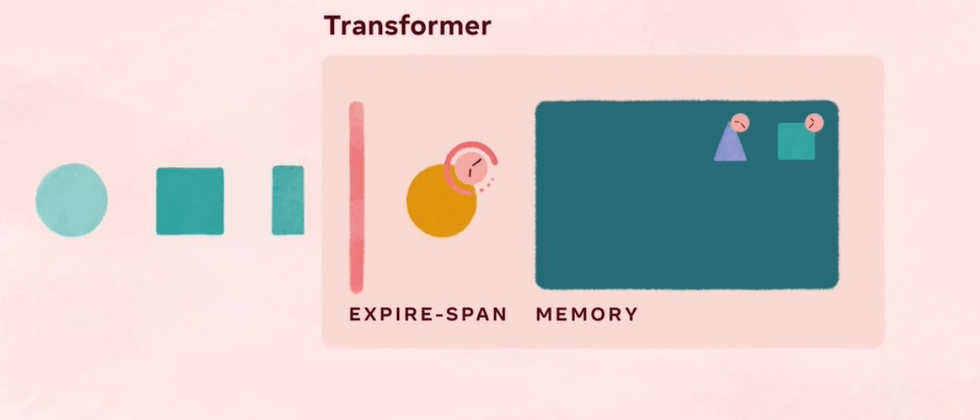

Facebook AI Research (FAIR) has open-sourced Expire-Span, a deep learning technique that learns which items in an input sequence should be remembered, reducing the memory and compute requirements of AI models. FAIR showed that Transformer models incorporating Expire-Span can scale to sequences of tens of thousands of items with improved performance compared with previous models.

The research team described the technique and several experiments in a paper to be presented at the upcoming International Conference on Machine Learning (ICML). Expire-Span allows sequential AI models to "forget" events that are no longer relevant. When incorporated into self-attention models such as the Transformer, Expire-Span reduces the amount of memory required, allowing the model to process longer sequences, which is key to improving performance on many tasks, such as natural language processing (NLP). Using Expire-Span, the team trained models that can handle sequences of length up to 128k, an order of magnitude longer than previous models, with better accuracy and efficiency than the baselines. Research scientists and paper co-authors Angela Fan and Sainbayar Sukhbaatar wrote on FAIR's blog:

As the next step in our research toward more human-like AI systems, we are studying how to incorporate different types of memory into neural networks. In the long run, this can bring AI closer to human-like memory, with the ability to learn much faster than current systems. We believe Expire-Span is an important and exciting advance toward this kind of future AI-driven innovation.
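To make the "forgetting" idea concrete, here is a minimal sketch in plain Python. It assumes a formulation in which each stored memory receives a predicted lifetime (its span), and attention to that memory fades linearly to zero once the lifetime has passed. The function names, the `max_span` and `ramp` parameters, and the hand-picked `scores` are all illustrative assumptions and are not taken from the released code; in a trained model the score for each memory would be produced by a learned layer.

```python
import math


def expire_span_mask(scores, t, max_span, ramp):
    """Soft mask over memories 0..t for a query at time step t.

    scores[i] is a hypothetical learned scalar for memory i; here it is
    supplied by hand purely for illustration.
    """
    mask = []
    for i in range(t + 1):
        # Squash the score into a lifetime between 0 and max_span.
        span = max_span / (1.0 + math.exp(-scores[i]))
        # Time left before memory i expires, as seen from step t.
        remaining = span - (t - i)
        # 1 while alive, then a linear ramp of width `ramp` down to 0.
        mask.append(min(1.0, max(0.0, 1.0 + remaining / ramp)))
    return mask


def masked_attention(logits, mask):
    """Softmax over logits, reweighted by the mask and renormalized."""
    peak = max(logits)
    weights = [m * math.exp(x - peak) for x, m in zip(logits, mask)]
    total = sum(weights)
    if total == 0.0:
        return [0.0] * len(weights)
    return [w / total for w in weights]


# Four memories; the second gets a long lifetime, the first and third short ones.
scores = [-4.0, 4.0, -4.0, 0.0]
mask = expire_span_mask(scores, t=3, max_span=8.0, ramp=2.0)
attention = masked_attention([0.0, 0.0, 0.0, 0.0], mask)
```

In this toy run the oldest memory is fully expired (its mask is 0), the third is partway through the ramp, and the other two are still fully visible. Because the remaining lifetime only shrinks as `t` grows, a memory whose mask has reached 0 stays at 0 and can be dropped from storage, which is where the memory savings in such a scheme would come from.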

To evaluate the performance of Expire-Span, the team selected three baseline Transformer models (Transformer-XL, Compressive Transformer, and Adaptive-Span) and compared model accuracy as well as GPU memory use and training speed. The models were evaluated on several reinforcement learning (RL) and NLP tasks. Expire-Span outperformed the baselines in most experiments; for example, on a sequence-copy task, Expire-Span scaled to a sequence length of 128k and reached 52.1% accuracy, while Transformer-XL reached only 26.7% accuracy at a sequence length of 2k.

Expire-Span project on GitHub: https://github.com/facebookresearch/transformer-sequential
