[Recommended in this issue] Is our self-control too weak, or are e-commerce platforms too good at reading our minds? Let's look at it from a technical perspective.

Abstract: In a live room with tens of millions of people online at any moment, letting everyone buy at the same time and on equal terms while keeping the system stable is a severe test. What technology makes this possible, and what challenges came up along the way? Let's find out.

This article is shared from the Huawei Cloud Community post "618 Technical Special (3): The King of Livestream Sales. Why Is There a Series of Technical Challenges Behind 'OMG, Buy It'?", original author: Technical Torchbearer.

"OMG, buy it, buy it!" The live room of a popular streamer is buzzing. Millions of people are online at the same time, right thumbs poised like arrows on a drawn bowstring, waiting for the streamer's signal, "3, 2, 1, link's up!", to place their orders as fast as they can.

That's right: during this year's 618 shopping festival, livestream selling has become a form of shopping that avid online shoppers love. As of 2020, 265 million of China's 904 million internet users were e-commerce livestream viewers.

In a live room with tens of millions of people online at once, a product link reaches consumers within seconds of going up. Letting everyone see the link at the same moment and buy on equal terms, while keeping the system stable, is a severe test. What technology makes this possible, and what challenges came up along the way? Let's find out.

Traditional live streaming: stutter and delay, always one step behind in the rush to buy

Its immediacy and interactivity have made live streaming a new medium for getting information and interacting with others, one that recreates face-to-face interaction as faithfully as possible. But is the real-time interaction in a live stream really good enough?

The end-to-end pipeline of Internet live streaming can be divided into seven steps: capture, encoding, sending, distribution, receiving, decoding, and rendering. In the sending and distribution stages, uncontrollable factors such as network jitter make the delay hard to control.

- Online education: a student asks a question, but because of the stream delay it only reaches the teacher after the class has moved on to the next topic, so the teacher has to go back and answer it.

- E-commerce live streaming: viewers ask about a product, but because of the delay, by the time they hear the streamer announce that it is on sale, they can no longer grab it.

- Sports live streaming: you only find out that a goal was scored from other people's cheers.

Many streamers now broadcast across platforms and regions, which relies on stream pushing and pulling. Pushing is the process of transmitting the content packaged in the capture stage to the server; pulling is the process of fetching live content that already exists on the server from a specified address.

Currently, the industry generally uses RTMP as the push protocol and RTMP or HTTP-FLV as the pull protocol, with a delay of about 3-5 seconds. On H5 pages, HLS is more common, with a delay of more than 10 seconds. In other words, when the streamer runs a flash sale, by the time you hear "Three, two, one, link's up!", several seconds have already passed in the studio.
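One reason the HLS figure is so much higher: HLS delivers video as a playlist of segments, and RFC 8216 advises clients not to start playback closer than three target durations from the end of the playlist. The sketch below only computes that floor. The function name and the sample segment lengths are illustrative assumptions, and real deployments add encoding, packaging, and CDN delay on top.

```python
def hls_min_live_offset_s(target_duration_s: int, segments_behind_live: int = 3) -> int:
    """Rough lower bound, in seconds, on how far behind live an HLS player starts.

    segments_behind_live defaults to 3, following the RFC 8216 guidance;
    the function itself is a hypothetical helper for illustration.
    """
    if target_duration_s <= 0 or segments_behind_live <= 0:
        raise ValueError("durations and segment counts must be positive")
    return target_duration_s * segments_behind_live


# Sample target durations (assumed values, not taken from the article).
for seconds in (2, 4, 6):
    print(f"{seconds} s segments -> at least {hls_min_live_offset_s(seconds)} s behind live")
```

With segments of 4 seconds or longer, the floor alone already exceeds 10 seconds, which is consistent with the HLS delay quoted above.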

In addition to the delay inherent in the protocols, traditional live streaming also adds delay through its architecture. The traditional architecture has three layers: single-line CDN edge nodes, multi-line CDN central nodes, and an origin that hosts value-added services. Across the whole path from push to pull, the scheduling system typically assigns the streamer to the most suitable edge node; that edge node receives the stream and forwards it through a CDN central node to the live origin. Viewers, meanwhile, connect to an edge node that pulls the stream, and that node goes back to the live origin through a CDN central node along a statically planned path.

Judging from overall experience, however, three aspects of this design are ill-suited to low-latency scenarios. First, the largest share of the delay arises in the last mile between the viewer and the CDN edge node that serves the stream, where TCP is a poor fit for low latency. Second, distribution relies on a static tree architecture, which is also not ideal from a cost standpoint. Third, transcoding at the live origin currently adds a relatively large delay, generally around 500 ms. This too is a problem that low-latency live streaming has to solve.

The hard-core technology behind "3, 2, 1, link's up": ultra-low latency at large scale

Traditional live-streaming technology hits a bottleneck when it must deliver high concurrency and low latency at once. This holds back live streaming in some scenarios and can no longer satisfy those with higher interactivity requirements. Low-latency live streaming, the industry's next upgrade, is emerging and is expected to become the new focus of live-streaming technology.

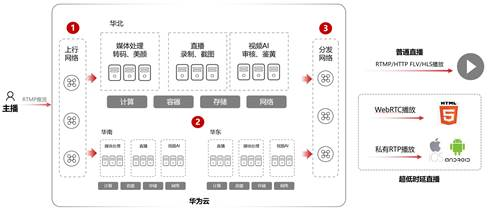

Like the Eight Immortals crossing the sea, each showing their own powers, different players achieve low-latency live streaming in different ways. Huawei Cloud's audio and video R&D team combines CDN transport protocol optimization, dynamic optimization of internal links, ultra-low-latency transcoding, and other techniques to cut the 3-5 s delay of traditional live streaming to within 800 ms, with transcoding delay held within 150 ms. At the same time, ultra-low-latency live streaming is fully compatible with traditional live streaming, which limits the impact on the existing architecture and lowers the cost of optimizing it.

Architecture analysis

Beyond choosing a lower-latency protocol, we can also optimize architectural details to reduce latency further and give users a better viewing experience.

The architecture above remains compatible with the traditional live-streaming architecture: the streamer can keep pushing over RTMP, and on the live origin, media transcoding notifications and feature message callbacks continue to work seamlessly. On top of the original live-streaming protocols, H5 clients gain support for playback over the standard RTC protocol, while iOS and Android clients support playback over a private RTC protocol.

Huawei Cloud's ultra-low-latency optimization rests on three core points:

First, the last mile is moved from TCP to a UDP-based scheme and Huawei Cloud's own algorithms are introduced, which brings the delay of traditional live streaming down to the millisecond range while maintaining good packet-loss resistance and smooth playback;

Second, the traditional static, tree-shaped planning and scheduling architecture is replaced with an intelligent, dynamic mesh architecture, so that back-to-origin paths inside the CDN are planned dynamically instead of following statically planned links;

Third, ultra-low-latency transcoding brings the transcoding delay down from roughly 500 ms to a stable 150 ms or less.
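To see why the transcoding step matters for an 800 ms target, here is a minimal latency-budget sketch. Only the 800 ms target and the 500 ms and 150 ms transcoding figures come from the article; every other per-stage number and all of the names are hypothetical placeholders chosen for illustration.

```python
from typing import Mapping

TARGET_MS = 800  # end-to-end target quoted in the article


def total_latency_ms(stages: Mapping[str, int]) -> int:
    """Sum the per-stage delays of one pipeline."""
    return sum(stages.values())


def within_budget(stages: Mapping[str, int], target_ms: int = TARGET_MS) -> bool:
    """True when the pipeline fits inside the end-to-end target."""
    return total_latency_ms(stages) <= target_ms


# Hypothetical stage budget; only the "transcode" values are from the article.
optimized = {
    "capture_and_encode": 80,
    "push_to_edge": 50,
    "transcode": 150,  # article: within 150 ms
    "cdn_distribution": 100,
    "last_mile_and_jitter_buffer": 300,
    "decode_and_render": 40,
}
# Same pipeline, but with the roughly 500 ms transcoder of the traditional setup.
with_old_transcoder = {**optimized, "transcode": 500}

for name, stages in (("optimized", optimized), ("old transcoder", with_old_transcoder)):
    print(name, total_latency_ms(stages), "ms, within budget:", within_budget(stages))
```

Under these assumed numbers, a 500 ms transcoder alone would push an otherwise optimized pipeline past the 800 ms target, which is why transcoding is treated as a separate optimization point.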

Intelligent dynamic mesh architecture

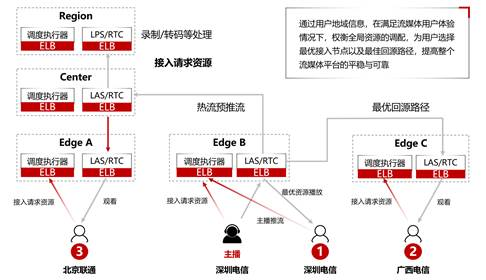

Why is it called an intelligent dynamic mesh architecture? Let's look at the access paths of three viewers. Suppose the streamer is a Shenzhen Telecom user. Under the original architecture, the streamer connects to a nearby Shenzhen Telecom edge node, and the stream is pushed to the central node and then on to the origin; viewers fetch it through a fixed, static central node. However near or far the streamer and the viewer are, the stream travels up through edge, center, and origin and then back down, passing through five layers of nodes.

With the current architecture, if a viewer is user 1 on Shenzhen Telecom, the dynamic scheduling assigns him in real time to the same node the streamer pushes to. His access path runs from the streamer to node B and then to the viewer, passing through just one node, which greatly improves both the quality and the cost of the whole link. For user 2 on Guangxi Telecom, scheduling him directly to the push node would make network quality unpredictable, so the dynamic intelligent mesh architecture connects him to the nearest node, C. Because the network between B and C is comparatively reliable, the scheduling system judges the access quality of this segment to be acceptable, so user 2's access path is 2 → C → B, passing through two nodes. For user 3 on Beijing Unicom, the streamer and the viewer are at opposite ends of the country, one in the south and one in the north. Here a shortcut through nearby nodes could hurt quality more, so the stream is still pulled the original way to ensure high-quality access.
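The three access paths above can be reproduced with a small shortest-path sketch over link-quality costs. This is an illustration under stated assumptions, not Huawei Cloud's actual scheduler: the node names other than B and C, the cost values, and the choice of Dijkstra's algorithm are all hypothetical.

```python
import heapq

# Hypothetical link costs (lower is better), e.g. a blend of latency and loss.
# B is the push node, C the Guangxi edge node, D an assumed Beijing edge node.
LINKS = {
    ("B", "C"): 10,               # reliable link between nearby edge nodes
    ("B", "center_south"): 15,
    ("C", "center_south"): 15,
    ("center_south", "origin"): 10,
    ("origin", "center_north"): 10,
    ("center_north", "D"): 15,
    ("B", "D"): 200,              # poor direct north-south link
}


def build_graph(links):
    """Turn the undirected link table into an adjacency map."""
    graph = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, {})[b] = cost
        graph.setdefault(b, {})[a] = cost
    return graph


def best_path(graph, source, target):
    """Dijkstra's algorithm: return (total cost, node list) of the cheapest path."""
    queue = [(0, source, [source])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for neighbor, link_cost in graph[node].items():
            if neighbor not in settled:
                heapq.heappush(queue, (cost + link_cost, neighbor, path + [neighbor]))
    raise ValueError(f"no path from {source} to {target}")


graph = build_graph(LINKS)
# Each viewer is served from an access node; the stream enters the CDN at node B.
for user, access_node in (("user 1", "B"), ("user 2", "C"), ("user 3", "D")):
    print(user, best_path(graph, "B", access_node))
```

With these assumed costs, user 1 is served by B alone, user 2 through B and C, and user 3 falls back to the five-node path through both central nodes and the origin, because the direct north-south link is too costly.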

Intelligent scheduling: the streaming media brain

The example above shows the end result, the path from the streamer to each viewer, but behind it lies an intelligent scheduling system driven by stream information. Its architecture centers on an intelligent-scheduling streaming media brain built at the origin, made up of four core modules:

- Content management center: the eyes of the streaming system. Edge nodes report stream information to it in real time, such as when a streamer goes online or offline, so it knows precisely when each streamer is live and each time a viewer connects, and exactly which node in the network each stream has been pushed to.

- Quality map: models the real-time state of the whole CDN network, both between nodes and from users to nodes. Scheduling tasks are issued to probe agents on edge nodes; the agents run periodic probes and report the results to the big-data platform, which analyzes coverage quality across the network in real time.

- Scheduling controller: holds the basic platform data that scheduling depends on, such as node traffic, scheduling plans, and some user-side data.

- Scheduling decision center: takes the output of the first three modules as input. As the final scheduling brain, it generates network-wide scheduling strategies in real time, covering both user access and back-to-origin routing between nodes, and sends them to the scheduling executors. Huawei initially deployed the executors in the cloud; later, to achieve end-to-end low latency, they were moved down to the CDN edge nodes to minimize the scheduling delay seen by users.
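How the first three modules might feed a decision can be sketched as follows. This is a simplified illustration, not the actual decision logic: the `NodeState` type, the field names, the 0.8 load threshold, and the cost values are all assumptions introduced here.

```python
from dataclasses import dataclass
from typing import List, Mapping


@dataclass
class NodeState:
    name: str
    load: float        # share of capacity in use (scheduling controller data)
    has_stream: bool   # whether the stream is already on this node (content management)


def pick_access_node(
    nodes: List[NodeState],
    user_cost: Mapping[str, int],      # user-to-node cost from the quality map
    to_source_cost: Mapping[str, int], # node-to-push-node cost from the quality map
    max_load: float = 0.8,             # hypothetical overload threshold
) -> str:
    """Pick the node with the lowest user cost plus back-to-source cost."""
    candidates = [n for n in nodes if n.load < max_load and n.name in user_cost]
    if not candidates:
        raise LookupError("no node can serve this user")

    def total_cost(node: NodeState) -> int:
        hop = 0 if node.has_stream else to_source_cost[node.name]
        return user_cost[node.name] + hop

    return min(candidates, key=total_cost).name


nodes = [
    NodeState("B", load=0.50, has_stream=True),
    NodeState("C", load=0.40, has_stream=False),
    NodeState("E", load=0.95, has_stream=False),  # overloaded, so it is skipped
]
to_source = {"C": 10, "E": 10}
print(pick_access_node(nodes, {"B": 8, "C": 40}, to_source))           # a Shenzhen viewer
print(pick_access_node(nodes, {"B": 60, "C": 10, "E": 5}, to_source))  # a Guangxi viewer
```

The design choice worth noting is that the nearest node is not always chosen: node E is closest to the second viewer but is excluded because of its load, so the decision combines all three inputs rather than any single one.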

Ultra-low latency transcoding technology

Any discussion of low video latency has to cover video transcoding. Because end users' network conditions vary, transcoding has become a standard feature of the major live-streaming platforms, and achieving low end-to-end latency requires cutting transcoding latency further. Huawei's transcoding technology keeps the delay stably within 150 ms. Low-latency transcoding also supports high-definition, low-bitrate encoding: at the same image quality, the bitrate at the playback end drops by more than 30%, which saves bandwidth cost across the whole platform. For scenarios that demand high image quality, low-latency transcoding also supports image quality enhancement and ROI (region of interest) enhancement, as well as precise, targeted optimization of image detail and texture.
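The bandwidth effect of a 30% bitrate reduction is simple arithmetic, sketched below. The viewer count and the 2000 kbps starting bitrate are hypothetical; only the 30% figure comes from the article, which states it as a lower bound.

```python
def egress_gbps(viewers: int, bitrate_kbps: int) -> float:
    """Total playback bandwidth in Gbps when every viewer pulls one stream."""
    return viewers * bitrate_kbps / 1_000_000


def reduced_bitrate_kbps(bitrate_kbps: int, saving_percent: int) -> int:
    """Bitrate after the given percentage saving (integer arithmetic)."""
    return bitrate_kbps * (100 - saving_percent) // 100


viewers = 1_000_000      # hypothetical concurrent viewers
original_kbps = 2000     # hypothetical playback bitrate
optimized_kbps = reduced_bitrate_kbps(original_kbps, 30)

before = egress_gbps(viewers, original_kbps)
after = egress_gbps(viewers, optimized_kbps)
print(f"{before:.0f} Gbps -> {after:.0f} Gbps, saving {before - after:.0f} Gbps")
```

Under these assumptions, a single live room with one million viewers would need 600 Gbps less egress bandwidth at the same image quality.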

With the rollout and adoption of 5G, the "live streaming +" model is spreading faster into vertical industries, and online live-streaming applications are growing explosively. The shift from traditional to ultra-low-latency live streaming begins this year, and it will be rolled out first in four industry scenarios: online education, e-commerce live streaming, event live streaming, and show live streaming.

2021 will be the year traditional live streaming begins its transition to ultra-low latency. By the end of this year, 20% of Internet live streaming is expected to have fully upgraded to ultra-low-latency live streaming. Over the next 2-3 years, ultra-low-latency live streaming will fully replace traditional live streaming and ultimately drive a new round of business models and growth in the industry.

Business innovation has driven the vigorous growth of e-commerce live streaming, but what sustains these bustling scenes and sales miracles is the tremendous power of technology. I believe that as new technology matures, consumers' individual needs, shopping environment, and attitudes toward consumption can all be upgraded further.

618 Technical Special (1): Three times over budget before you knew it. Why can't you stop buying? Want to understand why our wallets get emptied at every big sale? Is our self-control too weak, or are e-commerce platforms too good at reading our minds? Let's look at it from a technical angle.

618 Technical Special (2): Why are flash sales with millions of simultaneous orders getting easier and easier? Once consumers have been captured by the e-commerce recommendation system, how does the platform ensure that you can buy the products you want anytime, anywhere during a big promotion, and how do hundreds of millions of transaction records flow in an orderly way so that you both win the item and receive it on time? This article deciphers it all, one piece at a time.

