This article is about "how to keep the UI smooth under a single-threaded model". The discussion is grounded in Flutter's performance principles, but Dart shares many concepts and mechanisms with JavaScript, so I won't cover those shared basics in detail. JavaScript is also single-threaded and resembles Dart in how it handles UI display and IO, so the two are discussed side by side. The comparison should make the topic easier to grasp and help extend the knowledge across platforms.

We first analyze the event loop and event queue model from the front-end (browser) point of view. We then move to the Flutter layer and look at how the event queue on the Dart side relates to synchronous and asynchronous tasks.

1. Design of the single-threaded model

1. The simplest case: a single thread processing a fixed set of tasks

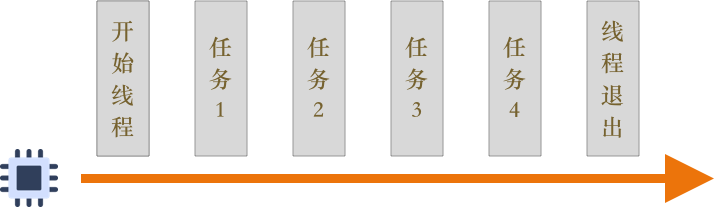

Suppose there are several tasks:

- Task 1: "Name: " + "Hangcheng Xiao Liu"

- Task 2: "Birthday: " + "1995" + "02" + "20"

- Task 3: "Age: " + (2021 - 1995 + 1)

- Task 4: Print the results of tasks 1, 2, and 3

Executed on a single thread, the code might look like this:

    // C++
    #include <cstdio>
    #include <string>

    void mainThread() {
        // Start from std::string: two string literals cannot be joined with +
        std::string name = std::string("Name: ") + "Hangcheng Xiao Liu";
        std::string birthday = std::string("Birthday: ") + "1995" + "02" + "20";
        int age = 2021 - 1995 + 1;
        printf("Personal info: %s, %s, Age: %d\n", name.c_str(), birthday.c_str(), age);
    }

The thread starts executing the tasks. A single thread executes each task in turn, and once they are all done the thread exits immediately.

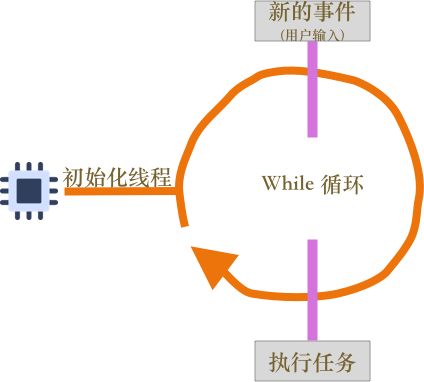

2. How to handle new tasks that arrive while the thread is running?

The threading model in section 1 is too simple and idealized. The n tasks are rarely all known at the start; in most cases m new tasks arrive later. The design in section 1 cannot meet this requirement.

To accept and execute new tasks while it is running, the thread needs an event loop mechanism. The most basic event loop can be implemented with a plain loop.

    // C++
    #include <cstdio>
    #include <iostream>
    using namespace std;

    // Blocks until the user enters a number.
    int getInput() {
        int input = 0;
        cout << "Please enter a number: ";
        cin >> input;
        return input;
    }

    void mainThread() {
        while (true) {
            int input1 = getInput();
            int input2 = getInput();
            int sum = input1 + input2;
            printf("The sum of the two numbers is: %d\n", sum);
        }
    }

Compared with the first version of the thread design, this version makes the following improvements:

- A loop is introduced, so the thread no longer exits right after finishing its work.

- Events are introduced. At first the thread waits for user input, and while it waits it is suspended. Once the user has finished typing, the thread receives the input and is woken up. It performs the addition and prints the result, then goes back to waiting for input, and so on.

3. Processing tasks from other threads

The threading model in a real environment is far from this simple. In a browser, for example, the thread may be painting when it receives an event from a user's mouse click, an event signalling that a CSS resource has finished loading over the network, and so on. The second version of the threading model does introduce an event loop that can accept new tasks, but notice that those tasks all originate inside the thread itself. This design cannot accept tasks from other threads.

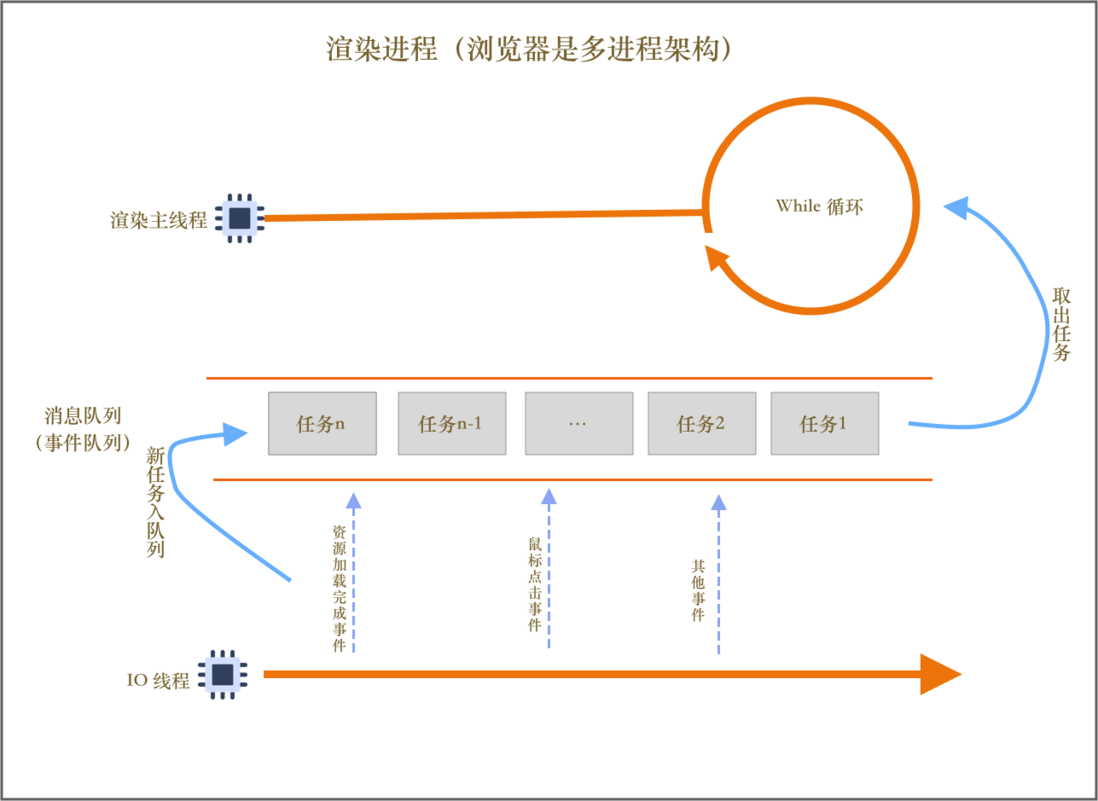

As the figure above shows, the main rendering thread frequently receives event tasks from the IO thread. When it receives a message that a resource has finished loading, the rendering thread starts DOM parsing; when it receives a mouse-click message, it runs the bound click handler script (JS) to handle the event.

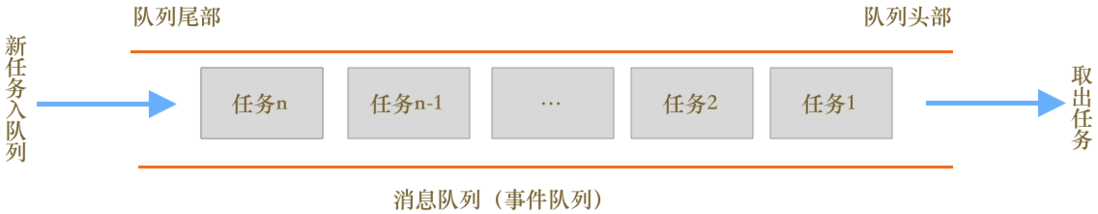

So we need a suitable data structure to store and retrieve the messages sent by other threads.

The answer is a message queue. In GUI systems, an event queue is the general solution.

A message queue (event queue) fits this need well: a task waiting to be executed is added at the tail of the queue, and the next task to execute is taken from the head.

With a message queue, the threading model is upgraded. The change takes 3 steps:

- Build a message queue

- New tasks generated by the IO thread will be added to the end of the message queue

- The main rendering thread will cyclically read tasks from the head of the message queue and execute tasks

Pseudocode. First, the queue interface:

    class TaskQueue {
    public:
        Task fetchTask();         // take one task from the head of the queue
        void addTask(Task task);  // insert a task at the tail of the queue
    };

Then the main thread:

    TaskQueue taskQueue;
    void processTask(Task task);

    void mainThread() {
        while (true) {
            Task task = taskQueue.fetchTask();
            processTask(task);
        }
    }

And the IO thread:

    void handleIOTask() {
        Task clickTask;
        taskQueue.addTask(clickTask);
    }

Tip: the event queue is accessed by multiple threads, so access to it must be protected by a lock.
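To make the locking tip concrete, here is a minimal sketch of one way such a queue could be guarded, assuming a `std::mutex` plus a `std::condition_variable` so that the consumer sleeps while the queue is empty. The `Task` alias and the `runQueueDemo` helper are hypothetical names introduced for this illustration; a real browser's queue is considerably more involved.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <string>
#include <thread>
#include <vector>

// Hypothetical task type for this sketch: any callable taking no arguments.
using Task = std::function<void()>;

class TaskQueue {
public:
    // Called by producer threads: append to the tail, then wake the consumer.
    void addTask(Task task) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            tasks_.push(std::move(task));
        }
        notEmpty_.notify_one();
    }

    // Called by the consuming thread: block until a task exists, then pop the head.
    Task fetchTask() {
        std::unique_lock<std::mutex> lock(mutex_);
        notEmpty_.wait(lock, [this] { return !tasks_.empty(); });
        Task task = std::move(tasks_.front());
        tasks_.pop();
        return task;
    }

private:
    std::mutex mutex_;
    std::condition_variable notEmpty_;
    std::queue<Task> tasks_;
};

// An "IO" thread posts three tasks; the calling thread plays the main thread.
std::vector<std::string> runQueueDemo() {
    TaskQueue queue;
    std::vector<std::string> log;

    std::thread ioThread([&queue, &log] {
        for (int i = 1; i <= 3; ++i) {
            queue.addTask([&log, i] { log.push_back("task " + std::to_string(i)); });
        }
    });

    for (int handled = 0; handled < 3; ++handled) {
        Task task = queue.fetchTask();
        task();  // tasks run here, so only this thread ever touches log
    }
    ioThread.join();
    assert(log.size() == 3);
    return log;
}
```

Calling `runQueueDemo()` returns the three entries in the order they were posted, since the queue is first-in, first-out. The lock is held only while the queue itself is touched, never while a task runs, so a slow task does not stop the IO thread from posting new work.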

4. Handling tasks from other processes

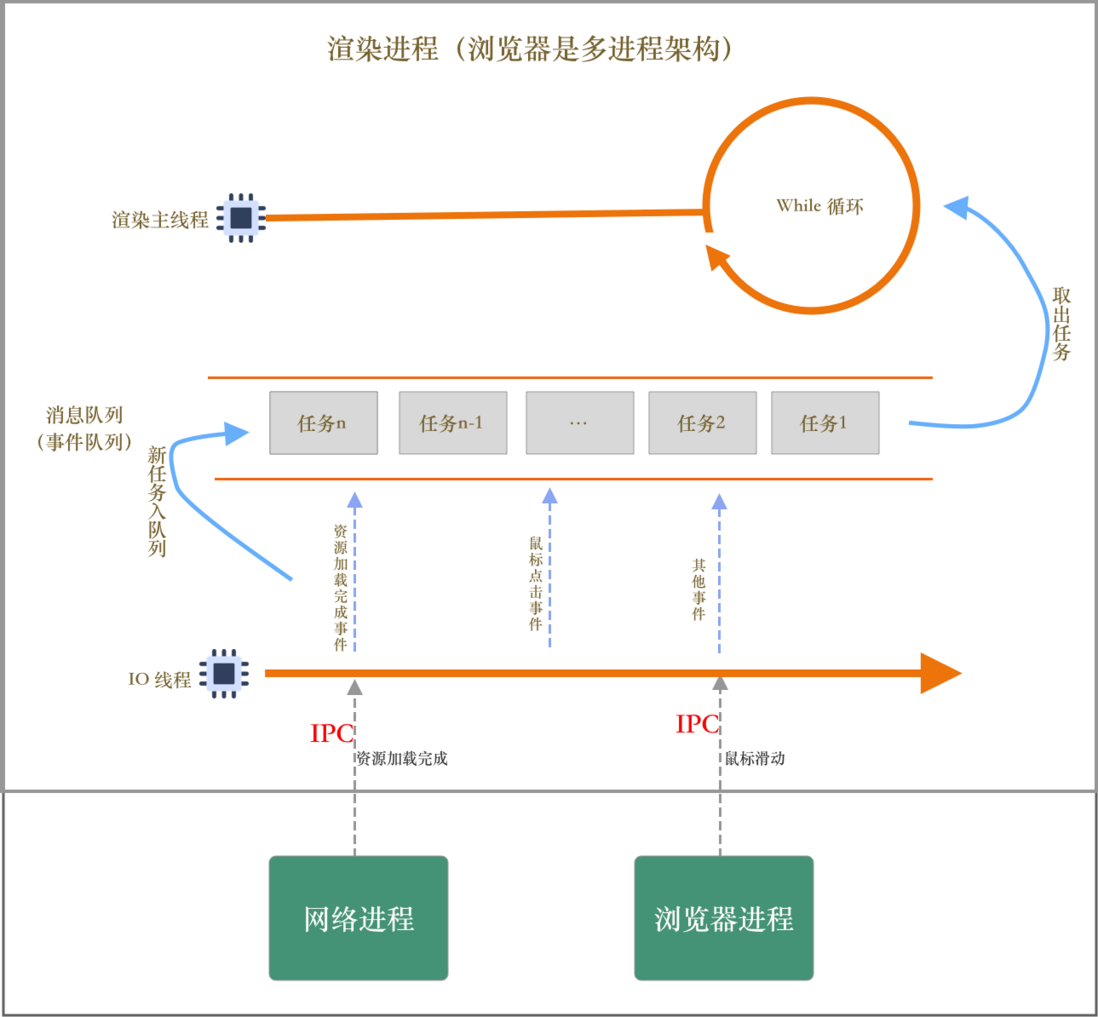

In a browser, the rendering process often receives tasks from other processes, and the IO thread is dedicated to receiving those messages. IPC (inter-process communication) is the mechanism used to pass messages across processes.

5. Types of tasks in the message queue

The message queue holds many types of messages. Internal messages include mouse scrolling, clicking and moving, macro tasks, microtasks, file reads and writes, timers, and so on.

The message queue also holds a large number of page-related events, such as JS execution, DOM parsing, style calculation, layout calculation, and CSS animation.

All of these events run on the main rendering thread, so take care when coding to keep the time each of them takes as short as possible.

6. How to exit safely

In Chrome's design, when the current page is about to be closed, the page's main thread sets an exit flag, and the flag is checked after each task is executed. If it is set, the loop is interrupted and the thread exits.
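The exit-flag idea can be sketched as follows. This is an illustration under simplifying assumptions, not Chrome's actual code: `EventLoop`, `requestExit`, and `runExitDemo` are hypothetical names, everything runs on one thread, and the loop also stops when the queue is empty (a real loop would wait for more work instead).

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <queue>
#include <string>
#include <vector>

// Hypothetical single-threaded event loop used only for this illustration.
class EventLoop {
public:
    void post(std::function<void()> task) { tasks_.push(std::move(task)); }

    // Any task running on this loop can call this to request shutdown.
    void requestExit() { keepRunning_ = false; }

    // Runs tasks in FIFO order and re-checks the flag after every task.
    // Returns how many tasks were left unexecuted.
    std::size_t run() {
        keepRunning_ = true;
        while (keepRunning_ && !tasks_.empty()) {
            std::function<void()> task = std::move(tasks_.front());
            tasks_.pop();
            task();
        }
        return tasks_.size();
    }

private:
    bool keepRunning_ = true;
    std::queue<std::function<void()>> tasks_;
};

std::vector<std::string> runExitDemo() {
    EventLoop loop;
    std::vector<std::string> log;
    loop.post([&] { log.push_back("render frame"); });
    loop.post([&] { log.push_back("close page"); loop.requestExit(); });
    loop.post([&] { log.push_back("never runs"); });

    std::size_t skipped = loop.run();
    assert(skipped == 1);  // the task posted after the exit request stays in the queue
    log.push_back("loop exited");
    return log;
}
```

Because the flag is only examined between tasks, the task that requests the exit still runs to completion; only the tasks queued behind it are dropped.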

7. Disadvantages of the single-threaded model

The event queue is first-in, first-out. A task that arrives late may be held up because an earlier task runs too long: it has to wait for the earlier task to finish before it can execute. This raises two problems.

How to handle high-priority tasks

Suppose you want to observe changes to DOM nodes (insertion, deletion, changes to innerHTML) and trigger some logic in response. The most basic approach is to design a set of listener interfaces that the rendering engine calls synchronously whenever the DOM changes. The big problem with this is that the DOM changes frequently. If every DOM change triggers the corresponding JS interface, the current task takes much longer to finish, which lowers execution efficiency.

If instead these DOM changes are posted to the message queue as asynchronous messages, the message for the current DOM change may not be handled in time because earlier tasks are still executing, which hurts real-time responsiveness.

How can efficiency and real-time responsiveness be balanced? Microtasks solve this type of problem.

Usually, we call the tasks in the message queue macro tasks. Each macro task contains a microtask queue. While a macro task is executing, if the DOM changes, the change is added to that macro task's microtask queue, which solves the efficiency problem.

When the main function of the macro task has finished, the rendering engine executes the microtasks in its microtask queue, which solves the real-time problem.
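The macro task / microtask relationship described above can be modelled in a few lines. This is a simplified sketch, not how a rendering engine is implemented: `MacroTask`, `Callback`, and `runMicrotaskDemo` are hypothetical names, and the "DOM changes" are just log entries.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <string>
#include <vector>

using Callback = std::function<void()>;

// Hypothetical model: a macro task's body receives the microtask queue that
// belongs to it, and may add work to that queue while it runs.
struct MacroTask {
    std::function<void(std::queue<Callback>& microtasks)> body;
};

std::vector<std::string> runMicrotaskDemo() {
    std::vector<std::string> log;
    std::queue<MacroTask> messageQueue;

    // Macro task 1 changes the DOM three times. Each change only records a
    // microtask instead of calling the listener synchronously.
    messageQueue.push(MacroTask{[&log](std::queue<Callback>& microtasks) {
        for (int i = 1; i <= 3; ++i) {
            log.push_back("macro 1: DOM change " + std::to_string(i));
            microtasks.push([&log, i] {
                log.push_back("micro: handle change " + std::to_string(i));
            });
        }
    }});
    messageQueue.push(MacroTask{[&log](std::queue<Callback>&) {
        log.push_back("macro 2");
    }});

    while (!messageQueue.empty()) {
        MacroTask task = std::move(messageQueue.front());
        messageQueue.pop();

        std::queue<Callback> microtasks;  // fresh microtask queue per macro task
        task.body(microtasks);

        // Drain the microtasks before the next macro task gets a turn.
        while (!microtasks.empty()) {
            Callback micro = std::move(microtasks.front());
            microtasks.pop();
            micro();
        }
        assert(microtasks.empty());
    }
    return log;
}
```

In the resulting log, the three "DOM change" entries come first, then the three "handle change" entries, and only then "macro 2". The listeners do not interrupt the macro task that caused the changes (efficiency), yet they run before any later macro task (real-time behavior).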

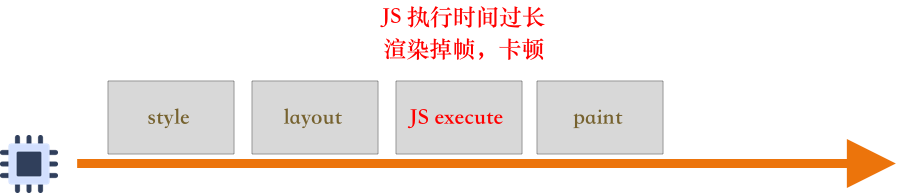

How to deal with a single task that takes too long

As the figure shows, if a JS computation overruns and makes the animation paint miss its deadline, the page stutters. To avoid this, the browser uses a callback design, that is, it defers the execution of the JS task.

2. The single-threaded model in Flutter

1. Event loop mechanism

Dart is single-threaded, which means code is executed in order. At the same time, as the development language of Flutter, a GUI framework, Dart must support asynchrony.

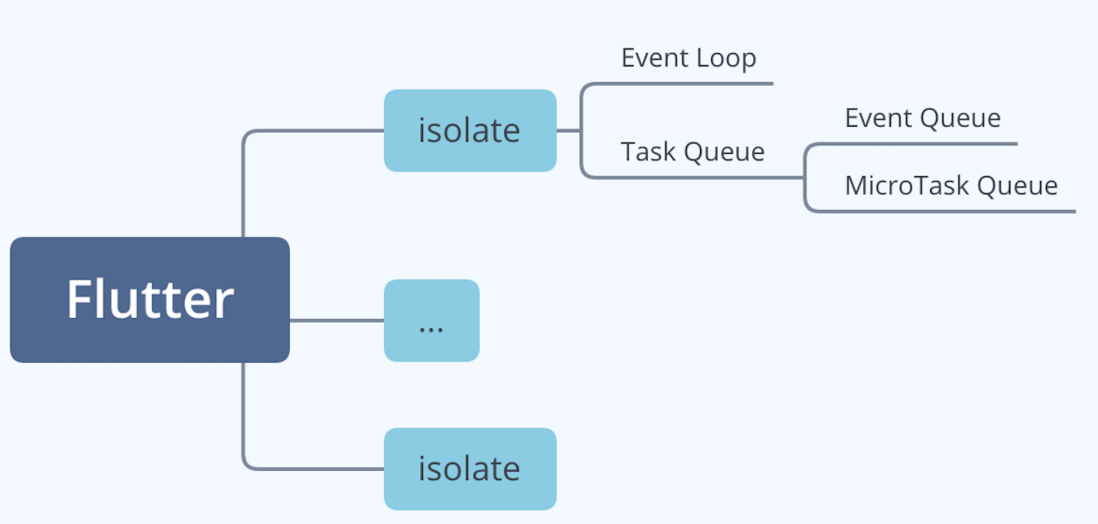

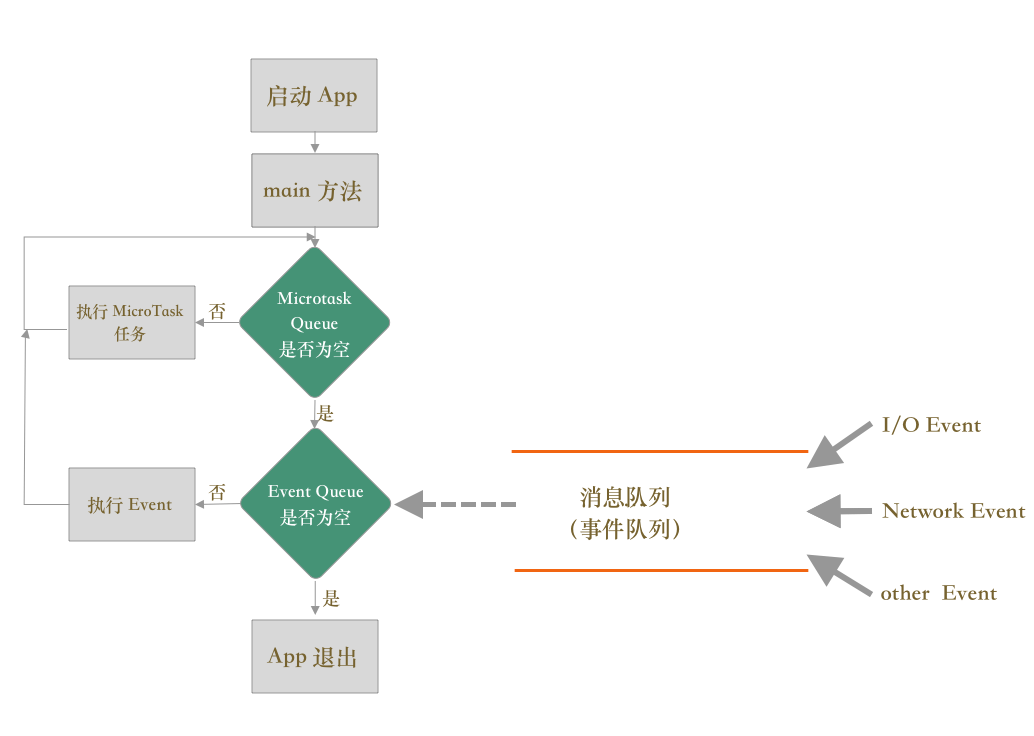

A Flutter application contains one or more isolates, and by default code runs in the main isolate. An isolate contains one event loop and one task queue, and the task queue in turn consists of one event queue and one microtask queue.

Why asynchrony? Because in most scenarios the application is not computing all the time. For example, it waits for the user's input and only computes once the input has arrived. This is an IO scenario. A single thread can therefore do other things while it waits, and come back to the computation when it is actually needed. So although there is only one thread, it feels as if many things are being done at the same time (the thread does other work whenever it is idle).

If a task involves IO or asynchrony, the main thread first does the other work that needs computing. This behavior is driven by the event loop. As in JS, the component that stores event tasks in Dart is the event queue.

The event queue stores the task events that need to be executed, such as reading from a database.

Dart has two queues: a microtask queue and an event queue.

The event loop polls continuously. It first checks whether the microtask queue is empty; if it is not, it takes the next task from the head of that queue and executes it. If the microtask queue is empty, it checks the event queue. If the event queue is not empty, it takes an event (keyboard, IO, network events, and so on) from the head and executes the event's callback on the main thread.
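The polling order just described can be modelled in C++ as a rough sketch. This is not how the Dart VM is implemented: `DartLikeLoop`, `postEvent`, and `runTwoQueueDemo` are hypothetical names, and the loop simply stops when both queues are empty instead of waiting for new events.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <string>
#include <vector>

using Callback = std::function<void()>;

// Hypothetical stand-in for one isolate's loop: a microtask queue and an event queue.
class DartLikeLoop {
public:
    void scheduleMicrotask(Callback cb) { microtasks_.push(std::move(cb)); }
    void postEvent(Callback cb) { events_.push(std::move(cb)); }

    void run() {
        while (!microtasks_.empty() || !events_.empty()) {
            // The microtask queue is always checked first; an event is taken
            // only when no microtask is pending.
            if (!microtasks_.empty()) {
                runFront(microtasks_);
            } else {
                runFront(events_);
            }
        }
    }

private:
    // Pops the head before running it, so a callback may safely enqueue more work.
    static void runFront(std::queue<Callback>& queue) {
        assert(!queue.empty());
        Callback cb = std::move(queue.front());
        queue.pop();
        cb();
    }

    std::queue<Callback> microtasks_;
    std::queue<Callback> events_;
};

std::vector<std::string> runTwoQueueDemo() {
    DartLikeLoop loop;
    std::vector<std::string> log;

    loop.postEvent([&] {
        log.push_back("event 1");
        loop.scheduleMicrotask([&] { log.push_back("microtask from event 1"); });
    });
    loop.postEvent([&] { log.push_back("event 2"); });
    loop.scheduleMicrotask([&] { log.push_back("microtask 1"); });

    log.push_back("synchronous code");  // runs before the loop starts polling
    loop.run();
    return log;
}
```

The log comes out as "synchronous code", "microtask 1", "event 1", "microtask from event 1", "event 2": the microtask scheduled inside event 1 jumps ahead of event 2 even though event 2 was queued earlier.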

2. Asynchronous tasks

Microtasks are asynchronous tasks that can be completed in a short time. Microtasks have the highest priority in the event loop: as long as the microtask queue is not empty, the event loop keeps executing microtasks, and the tasks in the event queue keep waiting. A microtask can be created with scheduleMicrotask.

In general, microtasks have relatively few usage scenarios. Flutter itself uses microtasks internally in scenarios that need high-priority execution, such as gesture recognition, text input, scrolling views, and page effects.

So for ordinary asynchronous work we use the event queue, which has lower priority. IO, drawing, timers, and so on are all executed on the main thread, driven by the event queue.

Dart wraps the tasks of the event queue in an abstraction called Future. Putting a function body into a Future turns a synchronous task into an asynchronous one (similar to submitting a task to a queue synchronously or asynchronously via GCD on iOS). Futures can be chained, so other tasks (functions) can be executed after the asynchronous execution completes.

Here is a concrete example:

    void main() {
      print('normal task 1');
      Future(() => print('Task1 Future 1'));
      print('normal task 2');
      Future(() => print('Task1 Future 2'))
          .then((value) => print("subTask 1"))
          .then((value) => print("subTask 2"));
    }

Output:

    $ dart index.dart
    normal task 1
    normal task 2
    Task1 Future 1
    Task1 Future 2
    subTask 1
    subTask 2

In main, a normal synchronous task runs first, and then an asynchronous task is created with Future. Dart adds the asynchronous task to the event queue and returns immediately, so the code that follows keeps running synchronously. A second synchronous task runs, and then another Future adds a second asynchronous task to the event queue. At this point the event queue holds two asynchronous tasks. Once main has finished, Dart takes tasks from the head of the event queue one at a time (first in, first out) and runs each of them synchronously. After the body of the second Future completes, its then callbacks are executed.

A Future and its then callbacks are handled in the same turn of the event loop. If there are several then callbacks, they run in the order in which they were chained.

Example 2:

    void main() {
      Future(() => print('Task1 Future 1'));
      Future(() => print('Task1 Future 2'));
      Future(() => print('Task1 Future 3'))
          .then((_) => print('subTask 1 in Future 3'));
      Future(() => null).then((_) => print('subTask 1 in empty Future'));
    }

Output:

    $ dart index.dart
    Task1 Future 1
    Task1 Future 2
    Task1 Future 3
    subTask 1 in Future 3
    subTask 1 in empty Future

In main, the first three Futures each wrap one print task, and Dart adds them to the event queue in order. subTask 1 is chained onto Future 3 with then, so it runs in the same event-loop turn as Task1 Future 3. The body of the fourth Future is empty (it returns null), so when that Future's turn comes, its then callback runs as soon as the Future completes.

Comprehensive example

    import 'dart:async'; // needed for scheduleMicrotask

    void main() {
      Future(() => print('Task1 Future 1'));
      Future fx = Future(() => null);
      Future(() => print("Task1 Future 3")).then((value) {
        print("subTask 1 Future 3");
        scheduleMicrotask(() => print("Microtask 1"));
      }).then((value) => print("subTask 3 Future 3"));
      Future(() => print("Task1 Future 4"))
          .then((value) => Future(() => print("sub subTask 1 Future 4")))
          .then((value) => print("sub subTask 2 Future 4"));
      Future(() => print("Task1 Future 5"));
      fx.then((value) => print("Task1 Future 2"));
      scheduleMicrotask(() => print("Microtask 2"));
      print("normal Task");
    }

Output:

    $ dart index.dart
    normal Task
    Microtask 2
    Task1 Future 1
    Task1 Future 2
    Task1 Future 3
    subTask 1 Future 3
    subTask 3 Future 3
    Microtask 1
    Task1 Future 4
    Task1 Future 5
    sub subTask 1 Future 4
    sub subTask 2 Future 4

Explanation:

- The event loop first runs the synchronous code in main, then the microtasks, and finally the asynchronous tasks in the event queue. So normal Task is printed first.

- Microtask 2 is printed next for the same reason: microtasks run before anything in the event queue.

- The event queue is FIFO, so Task1 Future 1 is executed next.

- fx was the second Future created, so its empty body is next in the event queue. When it completes, the then callback registered on it runs right away and prints Task1 Future 2.

- Next, Task1 Future 3 is executed. It has two then callbacks. The first prints subTask 1 Future 3 and schedules Microtask 1, which is placed on the microtask queue. The second then prints subTask 3 Future 3. Microtask 1 runs after that, before the next event is taken from the event queue.

- Next, Task1 Future 4 is executed. Its first then wraps a task in a new Future, which is added to the tail of the event queue. The second then has to wait for that inner Future to complete.

- Next, Task1 Future 5 is executed. This round of the event loop ends.

- When the inner Future reaches the head of the event queue, sub subTask 1 Future 4 is printed, followed by sub subTask 2 Future 4.

3. Asynchronous functions

The result of an asynchronous function arrives at some point in the future, so the function returns a Future object for the caller to use. The caller decides either to register a then on the Future and handle the result asynchronously once the Future's body has finished, or to wait until the Future completes. To wait for a Future, add await at the call site, and mark the function that contains the await with the async keyword.

await is not a synchronous wait but an asynchronous one. The event loop treats the function containing the await as an asynchronous function and adds the context of the waiting statement to the event queue as a whole. Once the awaited result is available, the event loop takes that context back out of the event queue and the waiting code continues to execute.

await blocks the code that follows it in the current context. It cannot block the code that follows in the upper layers of the call stack.

    void main() {
      Future(() => print('Task1 Future 1'))
          .then((_) async => await Future(() => print("subTask 1 Future 2")))
          .then((_) => print("subTask 2 Future 2"));
      Future(() => print('Task1 Future 2'));
    }

Output:

    $ dart index.dart
    Task1 Future 1
    Task1 Future 2
    subTask 1 Future 2
    subTask 2 Future 2

Analysis:

- Task1 Future 1 is added to the event queue. Its first then contains an asynchronous task wrapped in a Future, so when that then runs, Future(() => print("subTask 1 Future 2")) is added to the event queue and the awaiting function is suspended until it completes. The second then has to wait for it as well.

- Task1 Future 2 in the second Future is not blocked by the await, because await is an asynchronous wait. So Task1 Future 2 is executed. Then subTask 1 Future 2 is executed, after which the awaiting code resumes and subTask 2 Future 2 is executed.

4. Isolate

To make use of multi-core CPUs, Dart provides its own multithreading mechanism for CPU-intensive computation: the Isolate. Each isolate's resources are isolated, and it has its own event loop, event queue, and microtask queue. Isolates do not share resources directly; they communicate through message passing, much like processes.

Using one is simple: pass the entry function and one argument when creating it.

    import 'dart:isolate';

    void coding(String language) {
      print("hello " + language);
    }

    void main() {
      Isolate.spawn(coding, "Dart");
    }

Output:

    $ dart index.dart
    hello Dart

In most cases, concurrent execution alone is not enough. The main Isolate often needs to be told the result once another Isolate has finished its work. This message passing is done through the Isolate's pipe (SendPort). The main Isolate passes the pipe to the child Isolate as an argument, and when the child Isolate has finished, it sends the result back to the main Isolate through this pipe.

    import 'dart:isolate';

    void coding(SendPort port) {
      const sum = 1 + 2;
      // Send the result back to the caller
      port.send(sum);
    }

    void main() {
      testIsolate();
    }

    Future<void> testIsolate() async {
      ReceivePort receivePort = ReceivePort(); // create the pipe
      // Create the Isolate and pass the send port as its argument
      Isolate? isolate = await Isolate.spawn(coding, receivePort.sendPort);
      // Listen for messages
      receivePort.listen((message) {
        print("data: $message");
        receivePort.close();
        isolate?.kill(priority: Isolate.immediate);
        isolate = null;
      });
    }

Output:

    $ dart index.dart
    data: 3

In addition, Flutter provides a shortcut for running concurrent computation: the compute function. It wraps the creation of the Isolate and the two-way communication.

In practice, few scenarios in business development call for compute. One example is encoding and decoding JSON.
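For readers who know C++ better than Dart, the idea of handing a CPU-heavy function to a worker and getting a future back can be sketched with `std::async`. This is only a loose analogy: C++ threads share memory, whereas isolates do not, and `computeOnWorker` and `countPrimes` are hypothetical names for this illustration, not part of Flutter or Dart.

```cpp
#include <cassert>
#include <future>

// Deliberately naive CPU-bound work: count the primes below `limit`.
int countPrimes(int limit) {
    int count = 0;
    for (int n = 2; n < limit; ++n) {
        bool isPrime = true;
        for (int d = 2; d * d <= n; ++d) {
            if (n % d == 0) {
                isPrime = false;
                break;
            }
        }
        if (isPrime) ++count;
    }
    return count;
}

// Hypothetical helper, loosely analogous in shape to compute(callback, message):
// run work(argument) on another thread and return a future for the result.
std::future<int> computeOnWorker(int (*work)(int), int argument) {
    assert(work != nullptr);
    return std::async(std::launch::async, work, argument);
}
```

A caller would write something like `int primes = computeOnWorker(countPrimes, 100).get();`. The call to `get()` blocks the calling thread, so a UI thread would normally keep the future and poll it, or be notified through its event queue, rather than wait on it directly.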

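Calculate the factorial:

    import 'package:flutter/foundation.dart';

    Future<int> testCompute() async {
      // 20! is the largest factorial that fits in a 64-bit int on the Dart VM
      return await compute(syncCalcuateFactorial, 20);
    }

    int syncCalcuateFactorial(int upperBounds) => upperBounds < 2
        ? upperBounds
        : upperBounds * syncCalcuateFactorial(upperBounds - 1);

To sum up: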

- Dart is single-threaded, but asynchrony is achieved through the event loop.

- Future is the encapsulation of an asynchronous task. With await and async, we can wait for a result in synchronous-looking code without blocking, again thanks to the event loop.

- An Isolate is Dart's form of multithreading. It achieves concurrency, has its own event loop and queues, and owns its resources exclusively. Isolates communicate through one-way message passing, and the messages drive asynchronous processing on the receiving side through the receiver's event loop.

- Flutter provides the compute function for CPU-intensive operations. It wraps the creation of the Isolate and the communication between Isolates.

- The concepts of event queue and event loop are very important in GUI systems. They exist in almost all of them: the web front end, Flutter, iOS, Android, and even Node.js.
