Prometheus is an open source monitoring and alerting system built on a time series database. Any history of Prometheus has to mention SoundCloud, an online music-sharing platform (roughly what YouTube is for video). As SoundCloud moved further toward a microservice architecture, the number of its services grew into the hundreds, and the traditional monitoring systems it relied on, StatsD and Graphite, showed many limitations.

So in 2012 they began developing a new monitoring system. The original author of Prometheus is Matt T. Proud, who also joined SoundCloud in 2012 after previously working at Google. Drawing inspiration from Google's cluster manager Borg and its monitoring system Borgmon, he developed the open source monitoring system Prometheus. Like many Google projects, it is written in Go.

As a monitoring solution for microservice architectures, Prometheus is also closely tied to cloud computing. As early as August 9, 2006, Eric Schmidt first proposed the concept of cloud computing at a search engine conference, and over the following decade cloud computing developed at an unstoppable pace.

In 2013, Matt Stine of Pivotal put forward the concept of Cloud Native. Cloud Native combines microservice architecture, DevOps, and agile infrastructure represented by containers to help organizations deliver software.

To unify cloud computing interfaces and related standards, the Cloud Native Computing Foundation (CNCF) was founded under the Linux Foundation in July 2015. The first project to join the CNCF was Google's Kubernetes; Prometheus was the second, in 2016.

Today, Prometheus is widely used to monitor Kubernetes clusters. Readers interested in the history of Prometheus can watch the talk given by SoundCloud engineer Tobias Schmidt at PromCon 2016: The History of Prometheus at SoundCloud.

1. Prometheus overview

The official SoundCloud blog post Prometheus: Monitoring at SoundCloud explains why they needed to develop a new monitoring system. In short, the system they needed had to have the following four characteristics:

- Multi-dimensional data model

- Convenient deployment and maintenance

- Flexible data collection

- Powerful query language

In fact, a multi-dimensional data model and a powerful query language are exactly what a time series database needs, so Prometheus is not only a monitoring system but also a time series database. Why, then, doesn't Prometheus simply use an existing time series database as its back-end storage? Because SoundCloud wanted their monitoring system not only to have the characteristics of a time series database, but also to be very easy to deploy and maintain.

Looking at the more popular time series databases (see the appendix below), they either have too many components or heavy external dependencies. Druid, for example, has a whole set of components (Historical, MiddleManager, Broker, Coordinator, Overlord, and Router) and also depends on ZooKeeper, deep storage (HDFS, S3, etc.), and a metadata store (PostgreSQL or MySQL), so its deployment and maintenance costs are very high. Prometheus, by contrast, uses a decentralized architecture: a server can be deployed on its own and does not depend on external distributed storage, so you can set up a monitoring system in a few minutes.

Prometheus's data collection is also very flexible. To collect monitoring data from a target, you first install a data collection component on the target, called an Exporter. It gathers monitoring data on the target and exposes it over an HTTP interface for Prometheus to query. Prometheus then collects the data by pulling it, which differs from the traditional push model.
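To make the pull model concrete, here is a minimal sketch of an Exporter written with only the Python standard library (real exporters normally use an official client library). It serves a hypothetical counter, demo_requests_total, in the Prometheus text exposition format on /metrics; the metric name, the state dictionary, and the port are assumptions for illustration.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical application state that this toy exporter exposes.
REQUEST_COUNTS = {"200": 0, "500": 0}


def render_metrics(counts):
    """Render per-status counters in the Prometheus text exposition format."""
    lines = [
        "# HELP demo_requests_total Total requests handled, by status code.",
        "# TYPE demo_requests_total counter",
    ]
    for code in sorted(counts):
        lines.append(f'demo_requests_total{{code="{code}"}} {counts[code]}')
    return "\n".join(lines) + "\n"  # the last line must end with a line feed


class MetricsHandler(BaseHTTPRequestHandler):
    """Answer Prometheus's pull requests on /metrics."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(REQUEST_COUNTS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Block forever, serving http://<host>:<port>/metrics for Prometheus to scrape."""
    HTTPServer(("", port), MetricsHandler).serve_forever()
```

Calling serve(8000) and adding localhost:8000 as a scrape target would let Prometheus pull these counters on every scrape interval; the exporter never sends anything on its own.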

That said, Prometheus also supports the push model: you can push your data to the Pushgateway, and Prometheus then pulls the data from the Pushgateway. Existing exporters already cover most third-party systems, such as Docker, HAProxy, StatsD, and JMX; the official website maintains a list of exporters.
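The push path can be sketched as follows. The Pushgateway accepts text-format metrics at a URL of the form /metrics/job/<job>/<label>/<value>...; with POST it replaces metrics of the same name in that group, with PUT the whole group. The gateway address (9091 is the Pushgateway's default port), job name, grouping label, and metric are hypothetical, and the request is only built here, not sent.

```python
import urllib.parse
import urllib.request


def build_push_request(gateway, job, grouping, body, method="POST"):
    """Build (but do not send) a request that pushes metrics to a Pushgateway."""
    path = "/metrics/job/" + urllib.parse.quote(job, safe="")
    # Each extra grouping label becomes another /<name>/<value> path segment pair.
    for name, value in grouping.items():
        path += "/{}/{}".format(urllib.parse.quote(name, safe=""),
                                urllib.parse.quote(value, safe=""))
    return urllib.request.Request(
        gateway.rstrip("/") + path,
        data=body.encode("utf-8"),
        method=method,
        headers={"Content-Type": "text/plain; version=0.0.4"},
    )


# Hypothetical metric from a short-lived batch job that might finish before Prometheus scrapes it.
PAYLOAD = (
    "# TYPE backup_last_success_timestamp_seconds gauge\n"
    "backup_last_success_timestamp_seconds 1539648000\n"
)
req = build_push_request("http://localhost:9091", "nightly_backup", {"instance": "db-01"}, PAYLOAD)
# urllib.request.urlopen(req) would send it; Prometheus then scrapes the Pushgateway like any target.
```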

Beyond these four features, Prometheus has steadily added more advanced ones, such as service discovery, richer graphing, external storage, powerful alerting rules, and a variety of notification methods. The following figure shows the overall architecture of Prometheus:

As the figure above shows, the Prometheus ecosystem contains several key components, such as the Prometheus server, Pushgateway, Alertmanager, and the web UI, but most of them are optional. The core component is of course the Prometheus server, which collects and stores metric data, supports expression queries, and generates alerts. Next, we will install the Prometheus server.

2. Install Prometheus server

Prometheus supports a variety of installation methods, including Docker, Ansible, Chef, Puppet, and SaltStack. The two simplest are described below: running the precompiled binary directly, out of the box, or using a Docker image.

2.1 Out of the box

First, find the latest Prometheus version and its download address on the download page of the official website. At the time of writing (October 2018), the latest version is 2.4.3; run the following commands to download and extract it:

$ wget https://github.com/prometheus/prometheus/releases/download/v2.4.3/prometheus-2.4.3.linux-amd64.tar.gz

$ tar xvfz prometheus-2.4.3.linux-amd64.tar.gz

Then switch to the unzipped directory and check the Prometheus version:

$ cd prometheus-2.4.3.linux-amd64

$ ./prometheus --version

prometheus, version 2.4.3 (branch: HEAD, revision: 167a4b4e73a8eca8df648d2d2043e21bdb9a7449)

build user: root@1e42b46043e9

build date: 20181004-08:42:02

go version: go1.11.1

Run Prometheus server:

$ ./prometheus --config.file=prometheus.yml

2.2 Use Docker image

It is easier to install Prometheus using Docker, just run the following command:

$ sudo docker run -d -p 9090:9090 prom/prometheus

Under normal circumstances, we will also specify the location of the configuration file:

$ sudo docker run -d -p 9090:9090 \
  -v ~/docker/prometheus/:/etc/prometheus/ \
  prom/prometheus

We keep the configuration file locally at ~/docker/prometheus/prometheus.yml, which makes it easy to edit and inspect. The -v parameter mounts the local directory at /etc/prometheus/ in the container, which is where Prometheus in the container loads its configuration file from by default. If we are not sure where the default configuration file is, we can first run the command above without the -v parameter, then use docker inspect <container ID or name> to see the container's default runtime arguments (the Args field below):

$ sudo docker inspect 0c

[...]

"Id": "0c4c2d0eed938395bcecf1e8bb4b6b87091fc4e6385ce5b404b6bb7419010f46",

"Created": "2018-10-15T22:27:34.56050369Z",

"Path": "/bin/prometheus",

"Args": [

"--config.file=/etc/prometheus/prometheus.yml",

"--storage.tsdb.path=/prometheus",

"--web.console.libraries=/usr/share/prometheus/console_libraries",

"--web.console.templates=/usr/share/prometheus/consoles"

],

[...]

2.3 Configure Prometheus

As seen in the above two sections, Prometheus has a configuration file, which is specified by the parameter --config.file, and the configuration file format is YAML. We can open the default configuration file prometheus.yml to see the contents:

/etc/prometheus $ cat prometheus.yml
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
  - static_configs:
    - targets:
      # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: 'prometheus'

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    static_configs:
    - targets: ['localhost:9090']

The default Prometheus configuration file is divided into four major blocks:

- global block: global Prometheus settings; for example, scrape_interval sets how often Prometheus scrapes its targets, and evaluation_interval sets how often rules, including alerting rules, are evaluated;

- alerting block: configures Alertmanager; we will look at it later;

- rule_files block: lists the rule files, such as alerting rules; we will also look at this later;

- scrape_configs block: defines the targets Prometheus scrapes. A job named prometheus is configured by default, because Prometheus exposes its own metrics over HTTP when it starts, so it effectively monitors itself. Although this is of little use in practice, it is a handy example for learning Prometheus; visit http://localhost:9090/metrics to see which metrics Prometheus exposes.
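As a mental model for the scrape_configs block (not Prometheus's actual implementation), the sketch below mirrors the default configuration as a Python dictionary, since the standard library has no YAML parser, and derives for each static target the URL Prometheus would request and the job and instance labels it attaches. The defaults it applies (http, /metrics, a 1-minute global interval) are the ones stated in the configuration comments above.

```python
# The default prometheus.yml above, mirrored as a Python dict for illustration.
CONFIG = {
    "global": {"scrape_interval": "15s", "evaluation_interval": "15s"},
    "scrape_configs": [
        {"job_name": "prometheus", "static_configs": [{"targets": ["localhost:9090"]}]},
    ],
}


def scrape_targets(config):
    """Yield (url, target labels, scrape interval) for every statically configured target."""
    global_cfg = config.get("global", {})
    for job in config.get("scrape_configs", []):
        scheme = job.get("scheme", "http")          # scheme defaults to 'http'
        path = job.get("metrics_path", "/metrics")  # metrics_path defaults to '/metrics'
        # A job may override the global interval; the global default is 1 minute.
        interval = job.get("scrape_interval", global_cfg.get("scrape_interval", "1m"))
        for static in job.get("static_configs", []):
            for target in static.get("targets", []):
                # Series scraped from this target get job=<job_name> and instance=<host:port>.
                labels = {"job": job["job_name"], "instance": target}
                yield f"{scheme}://{target}{path}", labels, interval
```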

3. Learn PromQL

With Prometheus installed, we can start exploring it. Prometheus provides a web UI for convenience; just visit http://localhost:9090/ and it redirects to the Graph page by default:

The first time you visit this page, you may not know where to start, so let's first look at the other menus. For example, Alerts shows all defined alerting rules, and Status shows various Prometheus status information, including Runtime & Build Information, Command-Line Flags, Configuration, Rules, Targets, Service Discovery, etc.

In fact, the Graph page is the most powerful feature of Prometheus. Here we can query monitoring data with Prometheus's own expression language, PromQL (Prometheus Query Language). Besides the Graph page, you can also run PromQL queries through the HTTP API provided by Prometheus. Query results can be displayed in two forms, a list or a graph, corresponding to the Console and Graph tabs in the figure above.

As mentioned above, Prometheus also exposes many metrics about itself, which you can query on the Graph page. Expand the drop-down box next to the Execute button to see many metric names and pick one, for example promhttp_metric_handler_requests_total, which counts requests to the /metrics page (the page Prometheus scrapes to collect its own monitoring data). The query result in the Console tab looks like this:

When we looked at the Prometheus configuration file above, we saw that scrape_interval is 15s, meaning Prometheus requests the /metrics page every 15 seconds. So if we refresh the page after 15 seconds, we can see the metric value increase on its own. This is even clearer in the Graph tab:

3.1 Data model

To learn PromQL, we first need to understand the Prometheus data model. A Prometheus sample consists of a metric name and N labels (N >= 0), as in the following example:

promhttp_metric_handler_requests_total{code="200",instance="192.168.0.107:9090",job="prometheus"} 106

The metric name of this sample is promhttp_metric_handler_requests_total, and it carries three labels: code, instance, and job. Its value is 106. As mentioned above, Prometheus is a time series database: samples with the same metric name and the same set of labels form one time series. In terms of a traditional database, you can think of the metric name as the table name, the labels as columns, the timestamp as the primary key, and one float64 column holding the value (all values in Prometheus are stored as float64).

This data model is similar to that of OpenTSDB. For details, see the Data model page of the official documentation.
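The sample line shown above uses Prometheus's text exposition format. As a minimal sketch (it leaves escaped characters inside label values unprocessed and does not handle # HELP or # TYPE lines), the following Python splits such a line into metric name, labels, and float value, and builds a key that identifies its time series regardless of label order.

```python
import re

# One sample line: metric name, optional {labels}, value, optional integer timestamp.
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)'
    r'(?:\{(?P<labels>.*)\})?'
    r'\s+(?P<value>\S+)'
    r'(?:\s+(?P<ts>-?\d+))?$'
)
# name="value" pairs; escape sequences inside values are kept as-is.
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_sample(line):
    """Split a sample line into (metric name, label dict, float value)."""
    m = SAMPLE_RE.match(line.strip())
    if m is None:
        raise ValueError(f"not a sample line: {line!r}")
    labels = dict(LABEL_RE.findall(m.group("labels") or ""))
    # Every value is a float64, which is exactly what Python's float is.
    return m.group("name"), labels, float(m.group("value"))


def series_key(name, labels):
    """Same metric name plus same label set means same time series."""
    return (name, tuple(sorted(labels.items())))
```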

Although every value Prometheus stores is a float64, metrics can be divided by type into four categories:

- Counter

- Gauge

- Histogram

- Summary

A Counter counts things, such as the number of requests, completed tasks, or errors. Its value only increases and never decreases, except that it resets to zero when the process exposing it restarts. A Gauge is an arbitrary value that can go up or down, such as temperature or memory usage. A Histogram is often used to track the size distribution of events, such as request durations or response sizes.

What makes a Histogram special is that it sorts observations into configurable buckets and also provides their count and sum. A Summary is very similar to a Histogram and also tracks the size of events; the difference is that it directly provides quantiles, computed on the client side. For example, a quantile of 0.95 is the value below which 95% of the sampled observations fall.

The four types are distinguished only by the metric provider, i.e. the Exporter mentioned above. If you need to write your own Exporter, or expose metrics for Prometheus to scrape from an existing system, you can use the Prometheus client libraries, and then you need to think about the type of each metric. If you only use ready-made exporters and look at their metrics in Prometheus, you don't need to pay much attention to this, but understanding Prometheus's data types still helps you write correct and sensible PromQL.
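To see what a Histogram actually exposes, here is a toy Python version (not an official client library) with three hypothetical latency buckets. It keeps cumulative le ("less than or equal") buckets plus _sum and _count, and estimates a quantile by linear interpolation inside the bucket containing the target rank, which is similar in spirit to PromQL's histogram_quantile(). It assumes non-negative observations. A Summary would instead compute quantiles in the client from the raw observations.

```python
import bisect
import math


class Histogram:
    """Toy histogram: cumulative le buckets plus _sum and _count."""

    def __init__(self, name, bounds):
        self.name = name
        self.bounds = sorted(bounds) + [math.inf]  # the implicit +Inf bucket catches everything
        self.counts = [0] * len(self.bounds)       # per-bucket (non-cumulative) counts
        self.sum = 0.0
        self.count = 0

    def observe(self, value):
        # First bucket whose upper bound is >= value.
        self.counts[bisect.bisect_left(self.bounds, value)] += 1
        self.sum += value
        self.count += 1

    def cumulative(self):
        total, out = 0, []
        for bound, n in zip(self.bounds, self.counts):
            total += n
            out.append((bound, total))
        return out

    def render(self):
        lines = []
        for bound, total in self.cumulative():
            le = "+Inf" if math.isinf(bound) else format(bound, "g")
            lines.append(f'{self.name}_bucket{{le="{le}"}} {total}')
        lines.append(f"{self.name}_sum {self.sum}")
        lines.append(f"{self.name}_count {self.count}")
        return lines


def estimate_quantile(q, cumulative):
    """Interpolate linearly inside the bucket that holds rank q * count."""
    if not 0 < q < 1:
        raise ValueError("q must be in (0, 1)")
    total = cumulative[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    prev_bound, prev_count = 0.0, 0  # lower edge of the first bucket taken as 0
    for bound, count in cumulative:
        if count >= rank:
            if math.isinf(bound):
                return prev_bound  # cannot interpolate into +Inf; use the highest finite bound
            return prev_bound + (bound - prev_bound) * (rank - prev_count) / (count - prev_count)
        prev_bound, prev_count = bound, count
```

With observations 0.05, 0.2, 0.3, 0.7, and 2.0 against buckets 0.1, 0.5, and 1.0, the estimated median is 0.4 even though no observation equals 0.4: bucket boundaries limit the precision, which is the trade-off Histogram makes for being cheap to aggregate on the server.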

3.2 Getting started with PromQL

We start to learn PromQL from some examples. The simplest PromQL is to directly enter the indicator name, such as:

# Whether Prometheus could scrape the target's metrics; used as a health check for the target
up

This query returns the current status of every target scraped by Prometheus, for example:

up{instance="192.168.0.107:9090",job="prometheus"} 1

up{instance="192.168.0.108:9090",job="prometheus"} 1

up{instance="192.168.0.107:9100",job="server"} 1

up{instance="192.168.0.108:9104",job="mysql"} 0

You can also specify a label to query:

up{job="prometheus"}

This form is called an instant vector selector. Besides =, you can also use !=, =~, and !~, for example:

up{job!="prometheus"}

up{job=~"server|mysql"}

up{instance=~"192\\.168\\.0\\.107.+"}
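The matching rules behind these selectors can be modeled with a short Python sketch. It is an illustration, not Prometheus's implementation: Python's re module stands in for RE2 (they agree on simple patterns like these but are not identical), and the series list reuses the up samples shown earlier. Two PromQL behaviors are reproduced: regex matchers are fully anchored, and a missing label is treated as an empty string.

```python
import re


def label_matches(labels, name, op, pattern):
    """Evaluate one PromQL-style label matcher against a series' labels."""
    value = labels.get(name, "")  # a missing label behaves like an empty string
    if op == "=":
        return value == pattern
    if op == "!=":
        return value != pattern
    if op == "=~":
        return re.fullmatch(pattern, value) is not None  # anchored at both ends
    if op == "!~":
        return re.fullmatch(pattern, value) is None
    raise ValueError(f"unknown matcher operator {op!r}")


def select(series, matchers):
    """Keep the series whose labels satisfy every (name, op, pattern) matcher."""
    return [s for s in series if all(label_matches(s, *m) for m in matchers)]


SERIES = [
    {"__name__": "up", "instance": "192.168.0.107:9090", "job": "prometheus"},
    {"__name__": "up", "instance": "192.168.0.108:9090", "job": "prometheus"},
    {"__name__": "up", "instance": "192.168.0.107:9100", "job": "server"},
    {"__name__": "up", "instance": "192.168.0.108:9104", "job": "mysql"},
]
```

For example, select(SERIES, [("job", "=~", "serv")]) returns nothing, because the anchored pattern must match the whole value "server".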

Here =~ and !~ match against regular expressions, which must use RE2 syntax. Corresponding to instant vector selectors, there are also range vector selectors, which select all samples within a period of time:

http_requests_total[5m]

This returns all http_requests_total samples scraped during the last 5 minutes. Note that the result is a range vector, which cannot be plotted directly on the Graph page. Range vectors are generally used with Counter metrics together with the rate() or irate() functions (note the difference between rate and irate):

# per-second average rate of increase, for slow-moving counters
rate(http_requests_total[5m])

# per-second instant rate of increase, for volatile and fast-moving counters
irate(http_requests_total[5m])

In addition, PromQL supports aggregation operators such as count, sum, min, max, and topk, and many built-in functions such as rate, abs, ceil, and floor; see the official documentation for more examples. If you are interested, you can also compare PromQL with SQL: you will find that PromQL has a more concise syntax and higher query performance.
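The difference between rate() and irate() is easiest to see on concrete numbers. This deliberately simplified Python sketch handles counter resets the way both functions do (a drop means the counter restarted from zero), but unlike Prometheus's rate() it does not extrapolate to the edges of the window. The sample values are hypothetical.

```python
def counter_increase(samples):
    """Total increase across (timestamp, value) samples, treating any drop as a counter reset."""
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        # After a reset the counter restarts at 0, so the new value itself is the increase.
        increase += cur - prev if cur >= prev else cur
    return increase


def simple_rate(samples):
    """Average per-second increase over the whole window (no edge extrapolation)."""
    if len(samples) < 2:
        return None
    return counter_increase(samples) / (samples[-1][0] - samples[0][0])


def simple_irate(samples):
    """Per-second increase using only the last two samples, as irate() does."""
    if len(samples) < 2:
        return None
    (t1, v1), (t2, v2) = samples[-2], samples[-1]
    delta = v2 - v1 if v2 >= v1 else v2
    return delta / (t2 - t1)


# Hypothetical http_requests_total samples scraped every 15s; the drop at t=45 is a restart.
WINDOW = [(0, 100), (15, 130), (30, 160), (45, 10), (60, 40)]
```

Here the rate-style average is 100 requests over 60 seconds, about 1.67 per second, while the irate-style value is 2.0 because it only looks at the last 15 seconds. That sensitivity to the latest change is why irate suits volatile counters and rate suits slow trends.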

3.3 HTTP API

Besides running PromQL on the Graph page, Prometheus provides an HTTP API that makes it easier to integrate PromQL into other systems. For example, Grafana, described below, queries metric data through Prometheus's HTTP API. In fact, the Graph page of Prometheus itself also queries data through the HTTP API.
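As a small sketch of using the API from code, the following Python builds a URL for the instant-query endpoint /api/v1/query and turns a successful vector response into a dictionary keyed by label set. The response shape (status, data.resultType, and result entries with metric and value) follows the official API documentation; the sample body is illustrative, not captured from a real server.

```python
import json
import urllib.parse


def instant_query_url(base, expr, eval_time=None):
    """Build a GET URL for the instant-query endpoint /api/v1/query."""
    params = {"query": expr}
    if eval_time is not None:
        params["time"] = str(eval_time)  # Unix timestamp or RFC 3339; the server uses "now" if omitted
    return base.rstrip("/") + "/api/v1/query?" + urllib.parse.urlencode(params)


def parse_vector(body):
    """Map each series' label set to its value in a successful instant-vector response."""
    doc = json.loads(body)
    if doc.get("status") != "success":
        raise RuntimeError(doc.get("error", "query failed"))
    data = doc["data"]
    if data["resultType"] != "vector":
        raise ValueError("expected a vector result, got " + data["resultType"])
    # Each result is {"metric": {label: value}, "value": [unix_time, "value as a string"]}.
    return {frozenset(r["metric"].items()): float(r["value"][1]) for r in data["result"]}


# Illustrative response body with the documented shape.
SAMPLE_RESPONSE = """
{"status": "success",
 "data": {"resultType": "vector",
          "result": [{"metric": {"__name__": "up", "job": "prometheus",
                                 "instance": "192.168.0.107:9090"},
                      "value": [1539648000.781, "1"]}]}}
"""
```

Against a running server, parse_vector(urllib.request.urlopen(instant_query_url("http://localhost:9090", "up")).read()) (with urllib.request imported) would return the current up value of every target.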

The official Prometheus HTTP API documentation lists the following endpoints:

GET /api/v1/query

GET /api/v1/query_range

GET /api/v1/series

GET /api/v1/label/<label_name>/values

GET /api/v1/targets

GET /api/v1/rules

GET /api/v1/alerts

GET /api/v1/targets/metadata

GET /api/v1/alertmanagers

GET /api/v1/status/config

GET /api/v1/status/flags

Starting with Prometheus v2.1, several new endpoints for managing the TSDB have been added:

POST /api/v1/admin/tsdb/snapshot

POST /api/v1/admin/tsdb/delete_series

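POST /api/v1/admin/tsdb/clean_tombstones

These admin endpoints are disabled by default and are only available when Prometheus is started with the --web.enable-admin-api flag.

4. Install Grafana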

Although the web UI provided by Prometheus can display individual metrics, it is very basic and only suitable for debugging. A capable monitoring system also needs customizable panels that display different metrics in different ways (graphs, pie charts, heat maps, TopN, etc.). This is what dashboards provide.

Prometheus therefore developed its own dashboard system, PromDash, but it was soon abandoned, and the project began recommending Grafana for visualizing Prometheus metrics, not only because Grafana is very powerful but also because it integrates seamlessly with Prometheus.

Grafana is an open source system for visualizing large-scale measurement data. It is very powerful and has a polished interface. You can use it to build custom dashboards, configuring which data each panel shows and how. It supports many data sources, such as Graphite, InfluxDB, OpenTSDB, Elasticsearch, and Prometheus, as well as many plugins.

Let's experience the use of Grafana to display Prometheus indicator data. First, let's install Grafana, we use the simplest Docker installation method:

$ docker run -d -p 3000:3000 grafana/grafana

Run the docker command above and Grafana is installed! You can also use other installation methods; see the official installation documentation. Once installed, visit http://localhost:3000/ to open the Grafana login page and enter the default username and password (admin/admin).

The first step in using Grafana is, of course, configuring a data source, which tells Grafana where to get data from. Click Add data source to open the data source configuration page:

Fill in the following fields:

- Name: prometheus

- Type: Prometheus

- URL: http://localhost:9090

- Access: Browser

Note that Access refers to how Grafana accesses the data source, and there are two modes: Browser and Proxy. In Browser mode, when a user opens a Grafana dashboard, the browser accesses the data source URL directly. In Proxy mode, the browser first calls a proxy endpoint on Grafana (at /api/datasources/proxy/), and the Grafana server then accesses the data source URL. Proxy mode is very useful when the data source is deployed on an intranet that users' browsers cannot reach directly.
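The same data source can also be registered without the UI. The sketch below builds a request for Grafana's HTTP API (POST /api/datasources) using the values from the form above. It assumes Grafana's default admin/admin credentials, and that the API's access field takes "direct" for Browser mode and "proxy" for Proxy mode; the request is only constructed, not sent.

```python
import base64
import json
import urllib.request


def datasource_request(grafana, user, password, name, prom_url, access="proxy"):
    """Build (not send) a Grafana API request that registers a Prometheus data source."""
    if access not in ("proxy", "direct"):  # "direct" corresponds to Browser mode in the UI
        raise ValueError("access must be 'proxy' or 'direct'")
    payload = {"name": name, "type": "prometheus", "url": prom_url, "access": access}
    token = base64.b64encode(f"{user}:{password}".encode()).decode()  # HTTP basic auth
    return urllib.request.Request(
        grafana.rstrip("/") + "/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": "Basic " + token},
    )


# Same values as the form above, using Browser access.
req = datasource_request("http://localhost:3000", "admin", "admin",
                         "prometheus", "http://localhost:9090", access="direct")
```

Sending it with urllib.request.urlopen(req) against a fresh Grafana would register the data source; access="proxy" is the better choice when Prometheus is reachable only from the Grafana server.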

Once the data source is configured, Grafana offers several preconfigured dashboards for it. As shown in the figure below, three dashboards are provided by default: Prometheus Stats, Prometheus 2.0 Stats, and Grafana metrics. Click Import to import and use a dashboard.

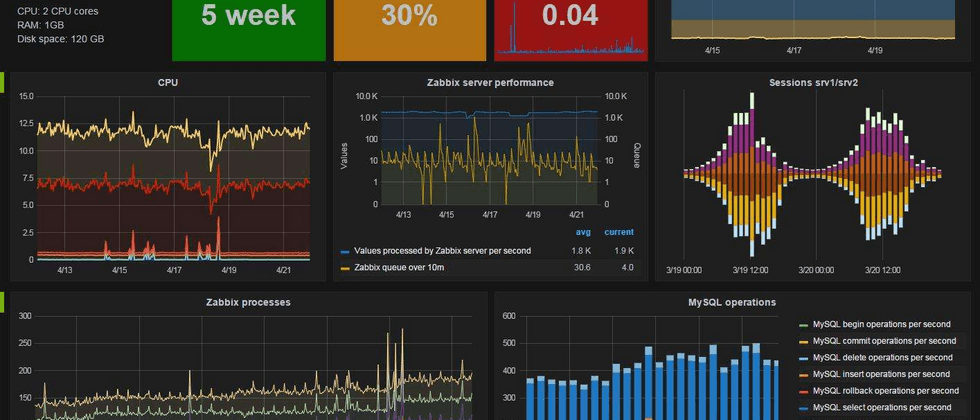

Import the Prometheus 2.0 Stats dashboard, and you will see the monitoring panel below. If your company can provide one, you could put up a large wall-mounted screen showing this dashboard to watch the state of your online systems in real time, which looks pretty cool.

5. Use Exporter to collect metrics

So far, the metrics we have seen have no real practical use. To actually use Prometheus in production, we usually need to watch many kinds of metrics, such as the server's CPU load, memory usage, I/O overhead, and incoming and outgoing network traffic.

As mentioned above, Prometheus pulls metric data. For Prometheus to get data from a target, you must first install a metric collection program on the target that exposes an HTTP interface for Prometheus to query; this program is called an Exporter. Different metrics require different exporters, and a large number are already available, covering almost all commonly used systems and software.

The official website lists commonly used exporters. To avoid port conflicts, exporters follow a port convention: default ports are allocated sequentially starting from 9100, and there is a complete list of exporter ports. Also note that some software and systems do not need an Exporter at all, because they expose metrics in Prometheus format themselves, such as Kubernetes, Grafana, etcd, and Ceph.

In this section, let us collect some useful data.

5.1 Collect server metrics

First, let's collect server metrics, which requires installing node_exporter. This exporter collects metrics from *NIX systems; if your server runs Windows, you can use the WMI exporter instead.

Like Prometheus server, node_exporter is also available out of the box:

$ wget https://github.com/prometheus/node_exporter/releases/download/v0.16.0/node_exporter-0.16.0.linux-amd64.tar.gz

$ tar xvfz node_exporter-0.16.0.linux-amd64.tar.gz

$ cd node_exporter-0.16.0.linux-amd64

$ ./node_exporter

After node_exporter starts, visit its /metrics endpoint to check that server metrics are returned correctly:

$ curl http://localhost:9100/metrics

If everything is OK, modify the Prometheus configuration file and add the server to scrape_configs:

scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['192.168.0.107:9090']
  - job_name: 'server'
    static_configs:
      - targets: ['192.168.0.107:9100']

After modifying the configuration, you need to restart the Prometheus service, or send a HUP signal to make Prometheus reload the configuration:

$ killall -HUP prometheus

In the Status -> Targets of the Prometheus Web UI, you can see the newly added server:

In the metric drop-down box on the Graph page, you can see many metrics whose names start with node. For example, enter node_load1 to observe the server load:

To view server metrics in Grafana, search for node exporter on Grafana's Dashboards page; many dashboard templates can be used directly, such as Node Exporter Server Metrics or Node Exporter Full. Open Grafana's Import dashboard page and enter the dashboard URL (https://grafana.com/dashboards/405) or its ID (405).

Precautions

In general, node_exporter runs directly on the server whose metrics are being collected, and the project officially does not recommend running it in Docker. If you must run it in Docker, be careful: Docker's file system and network have their own namespaces, so the collected data would not reflect the host's real metrics. You can work around this, for example by adding the following parameters when running the container:

docker run -d \
  --net="host" \
  --pid="host" \
  -v "/:/host:ro,rslave" \
  quay.io/prometheus/node-exporter \
  --path.rootfs /host

For more information about node_exporter, see the node_exporter documentation and the official Prometheus guide Monitoring Linux host metrics with the Node Exporter.

5.2 Collect MySQL Metrics

mysqld_exporter is an exporter officially provided by Prometheus. We first download the latest version and decompress it (out of the box):

$ wget https://github.com/prometheus/mysqld_exporter/releases/download/v0.11.0/mysqld_exporter-0.11.0.linux-amd64.tar.gz

$ tar xvfz mysqld_exporter-0.11.0.linux-amd64.tar.gz

$ cd mysqld_exporter-0.11.0.linux-amd64/

mysqld_exporter needs to connect to mysqld to collect its metrics, and there are two ways to set the mysqld data source. The first is the environment variable DATA_SOURCE_NAME, i.e. the DSN (data source name), which must follow the DSN format; a typical DSN looks like this: user:password@(host:port)/.

$ export DATA_SOURCE_NAME='root:123456@(192.168.0.107:3306)/'

$ ./mysqld_exporter

The other way is a configuration file. The default configuration file is ~/.my.cnf, or you can specify one with the --config.my-cnf parameter:

$ ./mysqld_exporter --config.my-cnf=".my.cnf"

The format of the configuration file is as follows:

$ cat .my.cnf

[client]

host=localhost

port=3306

user=root

password=123456

If you want to view MySQL metrics in Grafana, you can refer to these dashboard JSON files.

Precautions

For simplicity, mysqld_exporter connects to the database as root here. In a real environment, create a dedicated user for mysqld_exporter with restricted privileges (PROCESS, REPLICATION CLIENT, SELECT), and preferably also limit its maximum number of connections (MAX_USER_CONNECTIONS).

CREATE USER 'exporter'@'localhost' IDENTIFIED BY 'password' WITH MAX_USER_CONNECTIONS 3;

GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'exporter'@'localhost';

5.3 Collect Nginx metrics

There are two official methods for collecting Nginx metrics.

- The first is the Nginx metric library, a Lua script (prometheus.lua). Nginx needs Lua support enabled (the libnginx-mod-http-lua module). For convenience, you can also install it with OpenResty's OPM (OpenResty Package Manager) or luarocks (the Lua package manager).

- The second is the Nginx VTS exporter. It is much more powerful than the first method, simpler to install, and supports a richer set of metrics. It relies on the nginx-module-vts module, which provides a large amount of Nginx metric data that can be viewed as JSON, HTML, etc. The Nginx VTS exporter converts vts data into Prometheus format by scraping the /status/format/json endpoint.

However, the latest version of nginx-module-vts adds a new endpoint, /status/format/prometheus, which returns data directly in Prometheus format. This also shows how influential Prometheus has become; the Nginx VTS exporter may well be retired soon (not yet verified).

There are also many other ways to collect Nginx metrics. For example, nginx_exporter obtains some simple metrics by scraping Nginx's built-in statistics page /nginx_status (which requires the ngx_http_stub_status_module module); nginx_request_exporter receives Nginx access logs over the syslog protocol and parses them to compute metrics about HTTP requests; nginx-prometheus-shiny-exporter is similar to nginx_request_exporter and also collects access logs over syslog, but it is written in Crystal. There is also vovolie/lua-nginx-prometheus, which builds on OpenResty, Prometheus, Consul, and Grafana to provide traffic statistics at the domain and endpoint level.

Readers who need them or are curious can try them out by following their instructions, so I won't go through them one by one here.

5.4 Collect JMX metrics

Finally, let's look at how to collect metrics from Java applications. These are generally obtained through JMX (Java Management Extensions), which, as the name suggests, is a management extension for Java that makes it easy to manage and monitor Java programs.

JMX Exporter collects JMX metrics, and many Java-based systems, such as Kafka and Cassandra, can use it. First, download JMX Exporter:

$ wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar

JMX Exporter is a Java agent, loaded via the -javaagent parameter when starting the Java program:

$ java -javaagent:jmx_prometheus_javaagent-0.3.1.jar=9404:config.yml -jar spring-boot-sample-1.0-SNAPSHOT.jar

Here 9404 is the port on which JMX Exporter exposes metrics, and config.yml is the JMX Exporter configuration file; see the JMX Exporter configuration instructions for its contents. Then check whether the metrics are exposed correctly:

$ curl http://localhost:9404/metrics

6. Alerts and notifications

At this point we can collect a large amount of metric data and display it on powerful, attractive dashboards. However, the most important job of a monitoring system is to detect problems promptly and notify whoever is responsible for the system. This is alerting.

Prometheus alerting is split into two parts. The first lives in the Prometheus server: alerting rules are configured and evaluated there, and the resulting alerts are sent to Alertmanager. The second is Alertmanager itself, which manages these alerts: it removes duplicates, groups them, and routes them to the corresponding receivers, which send out the notifications. Common receivers include email, PagerDuty, HipChat, Slack, OpsGenie, and webhooks.
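Alertmanager's deduplication and grouping can be pictured with the following Python sketch. It is a simplification, not Alertmanager's code: it ignores timing settings, nested routes, silences, and inhibition, and the alert list is made up for illustration. Grouping by alertname mirrors a common group_by setting in Alertmanager's route configuration.

```python
from collections import defaultdict


def group_alerts(alerts, group_by):
    """Deduplicate alerts by full label set, then bucket them by the group_by labels.

    Each resulting group would become one notification sent to the route's receiver.
    """
    unique = {}
    for alert in alerts:
        # Identical label sets are the same alert, e.g. re-sent by Prometheus on every evaluation.
        unique[frozenset(alert["labels"].items())] = alert
    groups = defaultdict(list)
    for alert in unique.values():
        key = tuple((name, alert["labels"].get(name, "")) for name in group_by)
        groups[key].append(alert)
    return dict(groups)


ALERTS = [
    {"labels": {"alertname": "InstanceDown", "instance": "192.168.0.107:9100", "job": "server"}},
    {"labels": {"alertname": "InstanceDown", "instance": "192.168.0.107:9100", "job": "server"}},  # duplicate
    {"labels": {"alertname": "InstanceDown", "instance": "192.168.0.108:9104", "job": "mysql"}},
    {"labels": {"alertname": "APIHighRequestLatency", "instance": "192.168.0.107:9090", "job": "prometheus"}},
]
```

Grouping these four alerts by alertname yields two notifications instead of four: one listing both down instances, and one for the latency alert.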

6.1 Configure alerting rules

When we introduced the Prometheus configuration file above, we saw that the default prometheus.yml has four blocks: global, alerting, rule_files, and scrape_configs. The rule_files block configures the alerting rules, and the alerting block configures Alertmanager, which we will look at in the next section. Now let's add an alerting rule file to the rule_files block:

rule_files:
  - "alert.rules"

Then refer to the official documentation to create an alert rule file alert.rules:

groups:
- name: example
  rules:

  # Alert for any instance that is unreachable for >5 minutes.
  - alert: InstanceDown
    expr: up == 0
    for: 5m
    labels:
      severity: page
    annotations:
      summary: "Instance {{ $labels.instance }} down"
      description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 5 minutes."

  # Alert for any instance that has a median request latency >1s.
  - alert: APIHighRequestLatency
    expr: api_http_request_latencies_second{quantile="0.5"} > 1
    for: 10m
    annotations:
      summary: "High request latency on {{ $labels.instance }}"
      description: "{{ $labels.instance }} has a median request latency above 1s (current value: {{ $value }}s)"

This rule file contains two alerting rules: InstanceDown and APIHighRequestLatency. As the names suggest, InstanceDown fires when an instance is down (up == 0), and APIHighRequestLatency fires when the median API request latency exceeds 1s (api_http_request_latencies_second{quantile="0.5"} > 1).

After configuration, you need to restart the Prometheus server, and then visit http://localhost:9090/rules to see the rules just configured:

Visit http://localhost:9090/alerts to see the alerts generated from the configured rules:

Now stop one instance, and you will see an alert in the PENDING state. This means the rule's expression is already true but the alert has not fired yet: the for parameter is set to 5m here, so the condition must hold for 5 minutes before the alert fires. Wait 5 minutes, and the alert's state changes to FIRING.
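The PENDING and FIRING states follow from how the for clause is evaluated. Below is a simplified Python sketch of that state machine for a single series, not Prometheus's code; it assumes the 15-second evaluation interval from the configuration above and ignores how resolved alerts are reported to Alertmanager.

```python
INACTIVE, PENDING, FIRING = "inactive", "pending", "firing"


class AlertRule:
    """Track one series' alert state across rule evaluations, honoring a `for` duration."""

    def __init__(self, for_seconds):
        self.for_seconds = for_seconds
        self.active_since = None  # evaluation time at which the expression first became true

    def evaluate(self, now, condition_true):
        if not condition_true:
            self.active_since = None  # condition cleared: back to inactive
            return INACTIVE
        if self.active_since is None:
            self.active_since = now
        # Fire only once the condition has held for at least the `for` duration.
        if now - self.active_since >= self.for_seconds:
            return FIRING
        return PENDING


# up == 0 for one instance, evaluated every 15s, with for: 5m (300 seconds).
rule = AlertRule(for_seconds=300)
states = [rule.evaluate(t, condition_true=True) for t in range(0, 315, 15)]
```

The first 20 evaluations (t = 0 to 285 seconds) report PENDING and the one at t = 300 reports FIRING; if the instance recovers in between, the timer starts over.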

6.2 Use Alertmanager to send alert notifications

Although all alerts are visible on Prometheus's /alerts page, one final step remains: sending notifications automatically when an alert fires. This is Alertmanager's job. First, download and install Alertmanager; like the other Prometheus components, it works out of the box:

$ wget https://github.com/prometheus/alertmanager/releases/download/v0.15.2/alertmanager-0.15.2.linux-amd64.tar.gz
$ tar xvfz alertmanager-0.15.2.linux-amd64.tar.gz
$ cd alertmanager-0.15.2.linux-amd64
$ ./alertmanager

Once started, Alertmanager is reachable at http://localhost:9093 by default. For now it shows no alerts, because Prometheus has not yet been configured to use Alertmanager. Go back to the Prometheus configuration file prometheus.yml and add the following lines:

alerting:
  alertmanagers:
  - scheme: http
    static_configs:
    - targets:
      - "192.168.0.107:9093"

This configuration tells Prometheus to send alerts to the Alertmanager at http://192.168.0.107:9093. In Prometheus 1.x you could also specify Alertmanager on the command line (this flag was removed in Prometheus 2.0, where Alertmanager must be configured in the alerting block):

$ ./prometheus -alertmanager.url=http://192.168.0.107:9093

Visit Alertmanager again, and you can see that it has received the alert.
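Under the hood, Prometheus delivers alerts to Alertmanager as a JSON array posted over HTTP. Posting a hand-made alert the same way is a handy way to test notification settings without taking an instance down. The sketch below assumes the alerts endpoint paths: Alertmanager 0.15.x (the version downloaded above) only offers /api/v1/alerts, while newer releases use /api/v2/alerts. The alert's labels and instance are hypothetical.

```python
import json
import urllib.request
from datetime import datetime, timezone

def build_test_alert(alertname, instance, summary):
    """Build a JSON-ready list with one alert, the shape Alertmanager's alerts endpoint accepts."""
    return [{
        "labels": {"alertname": alertname, "instance": instance, "severity": "page"},
        "annotations": {"summary": summary},
        # RFC 3339 timestamp; if omitted, Alertmanager uses the time it receives the alert.
        "startsAt": datetime.now(timezone.utc).isoformat(),
    }]

def post_alerts(base_url, alerts, path="/api/v2/alerts"):
    """POST alerts to Alertmanager. Alertmanager 0.15.x only has path="/api/v1/alerts"."""
    body = json.dumps(alerts).encode("utf-8")
    req = urllib.request.Request(base_url.rstrip("/") + path, data=body, method="POST",
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status

# Usage (hypothetical instance), against the Alertmanager 0.15.2 started above:
# post_alerts("http://192.168.0.107:9093",
#             build_test_alert("InstanceDown", "192.168.0.108:9100",
#                              "Instance 192.168.0.108:9100 down"),
#             path="/api/v1/alerts")
```

The alert then shows up in the Alertmanager UI just like one sent by Prometheus and goes through the same routing.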

The next question is how to get Alertmanager to send the alerts to us. Open its default configuration file, alertmanager.yml:

global:
  resolve_timeout: 5m

route:
  group_by: ['alertname']
  group_wait: 10s
  group_interval: 10s
  repeat_interval: 1h
  receiver: 'web.hook'
receivers:
- name: 'web.hook'
  webhook_configs:
  - url: 'http://127.0.0.1:5001/'
inhibit_rules:
  - source_match:
      severity: 'critical'
    target_match:
      severity: 'warning'
    equal: ['alertname', 'dev', 'instance']

The global block holds global settings. The route block defines notification routing, which can send alerts to different receivers according to their labels. No child routes are configured here, so every alert goes to the web.hook receiver defined below. The inhibit_rules block mutes warning-level alerts while a critical alert with the same alertname, dev and instance labels is firing. To configure multiple routes, add a routes list under the route block, as in this example:

routes:
- receiver: 'database-pager'
  group_wait: 10s
  match_re:
    service: mysql|cassandra
- receiver: 'frontend-pager'
  group_by: [product, environment]
  match:
    team: frontend

Next, the receivers block defines how alert notifications are delivered. Each receiver has a name and an xxx_configs entry, and each kind of configuration corresponds to a different delivery method. Alertmanager has the following built-in receiver types:

email_configs
hipchat_configs
pagerduty_configs
pushover_configs
slack_configs
opsgenie_configs
victorops_configs
wechat_configs
webhook_configs

Although there are many receiver types, most of them are rarely used in China; the most common ones are email_configs and webhook_configs. In addition, wechat_configs supports sending alerts through WeChat, which suits local needs well.
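Returning to the route block of alertmanager.yml and the routes example: the following Python sketch is a simplified model of how one level of child routes picks a receiver and how group_by buckets alerts into notifications. It assumes that the first matching child route wins, that match_re regexes are anchored (so `mysql|cassandra` must match the whole label value), and that child routes inherit group_by from their parent. It ignores nested routes, the `continue` option and all timing: in the real system group_wait (10s above) is how long a new group waits to collect alerts before its first notification, group_interval how long to wait before notifying about alerts added to an existing group, and repeat_interval how long before an already-sent notification is repeated.

```python
import re
from collections import defaultdict

def route_matches(route, labels):
    """Check a child route's `match` (exact) and `match_re` (regex) conditions."""
    for name, value in route.get("match", {}).items():
        if labels.get(name, "") != value:
            return False
    for name, pattern in route.get("match_re", {}).items():
        # Alertmanager anchors these regexes, so use fullmatch rather than search.
        if re.fullmatch(pattern, labels.get(name, "")) is None:
            return False
    return True

def pick_route(root, labels):
    """Return the first matching child route, falling back to the root route."""
    for child in root.get("routes", []):
        if route_matches(child, labels):
            return child
    return root

def group_alerts(root, alerts):
    """Bucket alerts by route and by the values of that route's group_by labels.

    The receiver name stands in for the route here, since every route in this
    example has a distinct receiver.
    """
    groups = defaultdict(list)
    for labels in alerts:
        route = pick_route(root, labels)
        # Child routes inherit group_by from the parent unless they set their own.
        group_by = route.get("group_by", root.get("group_by", []))
        key = (route["receiver"],) + tuple(labels.get(name, "") for name in group_by)
        groups[key].append(labels)
    return dict(groups)

# The route block from alertmanager.yml plus the routes example, as plain dicts.
root = {
    "receiver": "web.hook",
    "group_by": ["alertname"],
    "routes": [
        {"receiver": "database-pager", "match_re": {"service": "mysql|cassandra"}},
        {"receiver": "frontend-pager", "group_by": ["product", "environment"],
         "match": {"team": "frontend"}},
    ],
}

# Hypothetical alerts, given as their label sets.
alerts = [
    {"alertname": "InstanceDown", "instance": "a:9100"},
    {"alertname": "InstanceDown", "instance": "b:9100"},
    {"alertname": "SlowQueries", "service": "mysql"},
    {"alertname": "SlowQueries", "service": "mysql-proxy"},
]

for key, members in group_alerts(root, alerts).items():
    print(key, len(members))
```

The two InstanceDown alerts share one notification to web.hook, the mysql alert goes to database-pager, and `mysql-proxy` falls through to the default receiver because the anchored regex does not match it.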

In practice, no tool can support every notification channel: there are countless messaging applications, and the popular ones differ from country to country. Alertmanager has therefore decided not to add new receivers and instead recommends using webhooks to integrate custom notification methods. See the existing integration examples, such as connecting DingTalk to Prometheus AlertManager WebHook.
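A custom webhook receiver is simply an HTTP endpoint that accepts the JSON Alertmanager posts to it. Below is a minimal sketch using only the Python standard library, meant to listen on 127.0.0.1:5001 to match the web.hook receiver in the default alertmanager.yml. It relies on the payload's alerts list, where each alert carries status, labels and annotations; printing each line is a placeholder for forwarding it to DingTalk or another messaging tool, which is left as an assumption here.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

def format_alerts(payload):
    """Turn one Alertmanager webhook payload into one text line per alert."""
    lines = []
    for alert in payload.get("alerts", []):
        labels = alert.get("labels", {})
        annotations = alert.get("annotations", {})
        status = alert.get("status", payload.get("status", "unknown"))
        lines.append("[{}] {} {}: {}".format(
            status.upper(),
            labels.get("alertname", "?"),
            labels.get("instance", "?"),
            annotations.get("summary", ""),
        ))
    return lines

class AlertHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        for line in format_alerts(payload):
            # Placeholder: forward `line` to your messaging tool here instead of printing it.
            print(line)
        self.send_response(200)
        self.end_headers()

def serve(host="127.0.0.1", port=5001):
    """Listen where the default web.hook receiver (http://127.0.0.1:5001/) sends alerts."""
    HTTPServer((host, port), AlertHandler).serve_forever()
```

Calling serve() starts the receiver; keeping the formatting in a separate pure function makes it easy to test without a running Alertmanager.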

Seven, learn more

So far we have covered most of Prometheus's features. Combining Prometheus, Grafana and Alertmanager yields a fairly complete monitoring system, but real-world use raises further questions.

7.1 Service discovery

Because Prometheus pulls monitoring data from its targets, the list of targets has to be specified by hand. As the number of monitored nodes grows, editing the configuration file every time a node is added becomes very tedious. This problem is solved by the service discovery (SD) mechanism.

Prometheus supports many service discovery mechanisms that obtain the targets to scrape automatically, including azure, consul, dns, ec2, openstack, file, gce, kubernetes, marathon, triton and zookeeper (Nerve, Serverset); see the Configuration page of the manual for how to configure each one. The set of SD mechanisms is already very rich, but because development resources are limited, no new SD mechanisms are being developed, and other integrations are expected to go through the file-based SD mechanism.

There are many tutorials on service discovery online. For example, the article Advanced Service Discovery in Prometheus 0.14.0 on the official Prometheus blog gives a systematic introduction, covering relabeling configuration in detail as well as how to use DNS-SRV, Consul and files for service discovery.

The official website also provides an introductory example of file-based service discovery, and the introductory Prometheus workshop tutorial by Julius Volz uses DNS-SRV for service discovery.
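File-based SD works by having Prometheus watch target files that list {"targets": [...], "labels": {...}} groups, so any script or tool can feed it targets. The following Python sketch writes such a JSON file atomically. The job name, file path and addresses are hypothetical; the matching prometheus.yml fragment is shown as a comment.

```python
import json
import os
import tempfile

# Prometheus side (prometheus.yml), assuming a hypothetical job named 'node':
#   scrape_configs:
#     - job_name: 'node'
#       file_sd_configs:
#         - files: ['targets/*.json']

def write_file_sd(path, groups):
    """Atomically write a file_sd target file: a JSON list of {"targets": [...], "labels": {...}}."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file's .tmp suffix keeps it from matching the '*.json' pattern above.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(groups, f, indent=2)
    # Renaming over the old file means Prometheus never sees a half-written file.
    os.replace(tmp_path, path)

# Hypothetical targets; a real tool would read them from an inventory or cloud API.
node_groups = [
    {"targets": ["192.168.0.107:9100", "192.168.0.108:9100"], "labels": {"env": "test"}},
]
# Usage: write_file_sd("targets/node.json", node_groups)
```

Adding a node then only means rewriting this file; the labels in each group are attached to every target in it.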

7.2 Alert configuration management

Neither Prometheus nor Alertmanager provides an API for modifying its configuration dynamically. Yet a very common requirement is to build an alert system on top of Prometheus with customizable rules, where users can create, modify or delete alert rules, or change notification methods and contacts, from a web page. This user's question in the Prometheus Google Group asks exactly that: How to dynamically add alerts rules in rules.conf and prometheus yml file via API or something?

Unfortunately, Simon Pasquier replied in that thread that no such API exists and none is planned, because this job belongs to configuration management systems such as Puppet, Chef, Ansible and Salt.

7.3 Use Pushgateway

Pushgateway is mainly used to collect metrics from short-lived jobs. Because such jobs exist only briefly, they may finish before Prometheus gets a chance to pull from them; instead, they push their metrics to the Pushgateway, which Prometheus then scrapes. The official documentation explains well when to use Pushgateway.
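A short-lived job pushes its metrics over plain HTTP, in the Prometheus text exposition format, to the Pushgateway's /metrics/job/<job> path. The sketch below builds such a request with the Python standard library. It assumes a Pushgateway on its default port 9091; the job name and metric name are hypothetical, and only gauge values are handled.

```python
import urllib.parse
import urllib.request

def build_push(base_url, job, metrics):
    """Build the URL and text-format body for pushing gauge values to a Pushgateway."""
    url = base_url.rstrip("/") + "/metrics/job/" + urllib.parse.quote(job, safe="")
    lines = []
    for name, value in metrics.items():
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"{name} {value}")
    # The text exposition format requires the body to end with a newline.
    body = "\n".join(lines) + "\n"
    return url, body.encode("utf-8")

def push(base_url, job, metrics):
    """PUT replaces every metric in this job's group; POST would replace only same-named ones."""
    url, body = build_push(base_url, job, metrics)
    req = urllib.request.Request(url, data=body, method="PUT",
                                 headers={"Content-Type": "text/plain; version=0.0.4"})
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status

# Usage (hypothetical batch job reporting when it last succeeded):
# import time
# push("http://localhost:9091", "nightly_backup",
#      {"backup_last_success_timestamp_seconds": int(time.time())})
```

Prometheus then scrapes the Pushgateway like any other target, so the value survives after the batch job has exited.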

To sum up

Over the past two years Prometheus has developed very rapidly, its community is very active, and more and more people in China are studying it. As concepts such as microservices, DevOps, cloud computing and cloud native spread, more and more companies are building their systems and applications on Docker and Kubernetes, and traditional monitoring systems such as Nagios and Cacti will become less and less applicable. I believe Prometheus will eventually become the monitoring system best suited to cloud environments.

Source: aneasystone.com/archives/2018/11/prometheus-in-action.html
