A bit of self-mockery

It has been three months since I last wrote anything. Even my wife couldn't help saying: "I feel like you're not very motivated this year." I replied: "Be confident and drop the 'I feel like'."

April was busy with weddings, May was spent in the mountains, and June was a little quieter, just in time for the NBA playoffs and the European Championship. There was no time to study; I was simply lying flat.

In fact, ever since I entered the industry I have been working with Nginx on and off: learning to install it, to configure it, and to use it to solve front-end problems that the front end itself cannot control. I have looked up a lot on Baidu but never remembered any of it, so ....

About Nginx

If you have no idea what Nginx is yet, I recommend reading the nginx entry on Baidu Encyclopedia first.

Remember one sentence: Nginx is a powerful, high-performance web server and reverse proxy server with many excellent features.

High-performance web services

A web service here really means a static resource service. Since the front end and back end were separated, front-end output has tended to take the form of static resources. What is a static resource? It is the output we usually build with webpack, such as:

To make these files accessible on the Internet, the front end still relies on a static resource service; the most common choices are a Node service and an Nginx service.

The most common Node service is webpack-dev-server, which is often used in front-end development and joint debugging. After it starts, we can request a file such as http://localhost:8907/bundle.05a01f6e.js. Another Node library worth knowing is serve, which can also start a static resource service quickly.

In production, however, we generally use Nginx. It is not that Node is bad, but that Nginx is good enough. A large front-end organization usually has many front-end projects. We put their build output in a folder on one server (usually a backup machine) and install Nginx there to serve all of the front-end resources. For example, with four front-end projects A, B, C, and D under the folder static, we configure nginx as follows:

    server {
        listen 80;
        listen [::]:80;
        server_name static.closertb.site;

        # Load configuration files for the default server block.
        include /etc/nginx/default.d/*.conf;

        location / {
            alias /home/static/;
            autoindex on;

            if ($request_uri ~ .*\.(css|js|png|jpg)$) {
                #etag on;
                expires 365d;
                #add_header Cache-Control max-age=31536000;
            }
        }
    }

We can then reach project A at http://static.closertb.site/A/index.html and project C at http://static.closertb.site/C/index.html, so one server feeds many projects ("one chicken, many dishes"). This setup is especially common in the era of HTTPS and HTTP2.

The above is the simple usage of Nginx as a web service. Next, let’s take a look at the reverse proxy service.

Reverse proxy service

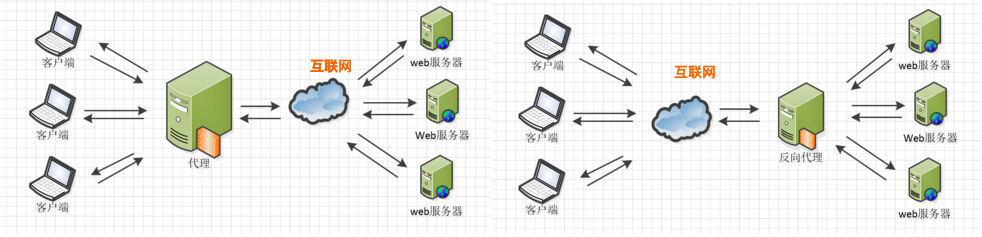

As a developer you often hear about proxies, but many people do not know the difference between a forward proxy and a reverse proxy:

As shown on the left of the figure above, a forward proxy is something the user sets up deliberately, as when we get over the firewall to visit Google. The reverse proxy on the right is set up by the server we are visiting; as users we can neither control it nor need to care about it.

Reverse proxying is a very common technique in service deployment, used for load balancing, disaster tolerance, caching, and so on.
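To make the load-balancing case concrete, here is a minimal sketch. The upstream name api_backend and the 10.0.0.x addresses are hypothetical placeholders, not part of the setup described in this article.

```nginx
# Sketch only: goes inside the http { } context.
# "api_backend" and the 10.0.0.x addresses are hypothetical placeholders.
upstream api_backend {
    server 10.0.0.11:8080;          # first instance
    server 10.0.0.12:8080;          # second instance, round-robin by default
    server 10.0.0.13:8080 backup;   # only used when the others are unavailable
}

server {
    listen 80;
    server_name server.closertb.site;

    location / {
        # Requests are distributed across the servers of the upstream group.
        proxy_pass http://api_backend;
    }
}
```

The backup server is one simple way to get a bit of disaster tolerance out of the same block.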

In front-end development, a reverse proxy is mostly used to forward requests and so work around cross-origin problems. When the front-end static resource service is bound to one domain name (static.closertb.site) and the server-side API lives on another (server.closertb.site), requests become cross-origin. If the server side will not cooperate, the front end can still handle this easily through nginx. To the previous configuration we add:

    server {
        listen 80;
        listen [::]:80;
        server_name static.closertb.site;

        location /api/ {
            proxy_pass http://server.closertb.site;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }

        location / {
            ....
        }
    }

So when the page requests http://static.closertb.site/api/user/get, the request actually goes to http://server.closertb.site/api/user/get, which gives us a simple service proxy. The principle is similar to the proxy of

Those are the two typical uses of Nginx in front-end development: web service and proxy service. Next, let's go through some scattered but useful details.

Nginx configuration refinement

Knowledge source: http://nginx.org/en/docs/http/ngx_http_core_module.html

root vs alias

The main difference between root and alias is how nginx interprets the URI matched by location, that is, how each maps the request path to a file on the server. For example:

    location ^~ /static {
        root /home/static;
    }

A request for http://static.closertb.site/static/a/logo.png returns the file /home/static/static/a/logo.png on the server, that is, '/home/static' + '/static/a/logo.png'. With root, the appended part is the full request path, including the matched prefix.

And for alias:

    location ^~ /static {
        alias /home/static;
    }

A request for http://static.closertb.site/static/a/logo.png returns the file /home/static/a/logo.png on the server, that is, '/home/static' + '/a/logo.png'. With alias, the appended part is only what follows the matched prefix.
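The two mappings can be put side by side in a short sketch. The /assets/ and /files/ prefixes are hypothetical and chosen only so that both locations can live in the same server block.

```nginx
# Sketch only: both locations belong inside a server { } block.
# The /assets/ and /files/ prefixes are hypothetical.

# root: the full request path is appended to the directory.
# /assets/a/logo.png  ->  /home/static/assets/a/logo.png
location /assets/ {
    root /home/static;
}

# alias: the matched prefix (/files/) is replaced by the directory.
# /files/a/logo.png  ->  /home/static/a/logo.png
location /files/ {
    alias /home/static/;
}
```

When the location prefix ends with a slash, it is safest to end the alias path with a slash as well, so that the replacement lines up cleanly.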

location with '/' and without '/'

You may have seen configuration A:

    location /api {
        proxy_pass http://server.closertb.site;
    }

You may also have seen configuration B:

    location /api/ {
        proxy_pass http://server.closertb.site;
    }

What's the difference?

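The situation resembles root and alias, but the trailing slash only gets special treatment in one case, which the nginx documentation describes as follows:

If a location is defined by a prefix string that ends with the slash character, and requests are processed by one of proxy_pass, fastcgi_pass, uwsgi_pass, scgi_pass, memcached_pass, or grpc_pass, then the special processing is performed. In response to a request with URI equal to this string, but without the trailing slash, a permanent redirect with the code 301 will be returned to the requested URI with the slash appended.

So for a request to http://static.closertb.site/api/user/get, both A and B proxy to http://server.closertb.site/api/user/get, because proxy_pass has no URI part here and the request path is passed on unchanged. The differences are in matching: A also matches /api itself and paths such as /apiv2, while B matches only paths under /api/ and answers a request for exactly /api with a 301 redirect to /api/. The /api prefix is stripped only when proxy_pass itself carries a URI: with proxy_pass http://server.closertb.site/; in configuration B, the same request is proxied to http://server.closertb.site/user/get.

This detail really matters in proxy configuration.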
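How the forwarded path depends on whether proxy_pass carries a URI part can be sketched as follows. The /keep/ and /strip/ prefixes are hypothetical names used only for illustration.

```nginx
# Sketch only: both locations belong inside a server { } block.
# The /keep/ and /strip/ prefixes are hypothetical.

# proxy_pass without a URI part: the request path is forwarded unchanged.
# /keep/user/get   ->  http://server.closertb.site/keep/user/get
location /keep/ {
    proxy_pass http://server.closertb.site;
}

# proxy_pass with a URI part ("/"): the matched prefix is replaced by that URI.
# /strip/user/get  ->  http://server.closertb.site/user/get
location /strip/ {
    proxy_pass http://server.closertb.site/;
}
```

A single trailing slash on the proxy_pass target is therefore enough to change which path the upstream server sees.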

Request redirection

When we take a front-end service offline, or a user visits a page that does not exist, we do not want the user to see a 404 but to land on a roughly suitable page. Here the rewrite directive helps; one small configuration sends that traffic straight to the site's home page:

    location /404.html {
        rewrite ^.*$ / redirect;
    }

Another common case is enabling https on a site, where all http requests need to be redirected to https:

    server {
        listen 80 default_server;
        server_name closertb.site;
        include /etc/nginx/default.d/*.conf;
        rewrite ^(.*)$ https://$host$1 permanent;
    }

Both examples use rewrite, but with different flags: redirect answers with a temporary 302, while permanent answers with a 301. The difference matters, because browsers may cache a 301, which makes it hard to take back.
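As an alternative sketch (not the configuration used above), the same http to https redirect can be written with the return directive, which avoids the regular expression entirely:

```nginx
# Sketch only: the http -> https redirect written with return instead of rewrite.
server {
    listen 80 default_server;
    server_name closertb.site;

    # $request_uri is the original path plus query string,
    # so no regex capture is needed.
    return 301 https://$host$request_uri;
}
```

Using 302 instead of 301 here is a reasonable choice while testing, since a temporary redirect is easier to undo.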

Helping optimize web performance

Web performance optimization is core front-end knowledge. Fast static resource loading significantly improves the user experience. As the most widely used static resource server, nginx offers several ways to speed that loading up. Put simply, you can configure three things:

- Enable resource caching: all our resources except .html files now carry a hash in their file names, so everything other than html can be cached for a long time, and resources load instantly when the user refreshes the page;

    if ($request_uri ~ .*\.(css|js|png|jpg|gif)$) {
        expires 365d;
        add_header Cache-Control max-age=31536000;
    }

Either expires or max-age is enough to tell the browser that the resource expires in one year; expires already makes nginx send a Cache-Control: max-age header, so setting both is redundant. For more about http caching, see this article ;
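The same caching rule can also be sketched with regex locations instead of an if block. This is an illustration under assumptions: root /home/static is assumed to be the folder holding the build output, and the no-cache rule for html files is an addition that is not part of the original configuration.

```nginx
# Sketch only: goes inside the server { } block.
# root /home/static is an assumed location of the build output.

# Hashed assets: a regex location replaces the if ($request_uri ...) test.
location ~* \.(css|js|png|jpg|gif)$ {
    root /home/static;
    expires 365d;          # sends Expires plus Cache-Control: max-age=31536000
}

# html entry files are not hashed, so ask the browser to revalidate them.
# (This rule is an assumption added for illustration.)
location ~* \.html$ {
    root /home/static;
    add_header Cache-Control no-cache;
}
```

Keeping html out of the long-lived cache is what lets a new deployment take effect: the fresh html points at the newly hashed asset names.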

- Enable http2: HTTP2 keeps maturing, and its multiplexing can greatly speed up the first screen of a page that depends on many resources. Most of the work of enabling http2 is actually enabling https, after which you just add the http2 flag. There are many articles on this online; here is one I found: nginx configure ssl to achieve https access ;

    # listen 443 ssl default_server;
    listen 443 ssl http2 default_server;

- Enable gzip: http2 handles many requests at once and resource caching speeds up repeat loads, while gzip reduces the size of what is transmitted over the network in the first place. Enable it directly at the http level:

    http {
        gzip on;
        gzip_disable "msie6";
        gzip_min_length 10k;
        gzip_vary on;
        # gzip_proxied any;
        gzip_comp_level 3;
        gzip_buffers 16 8k;
        gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;
    }

Closing ramblings

I originally planned to publish this article at the end of June, for the Party's centenary, but procrastination and football defeated me again. After a week of writing it is finally done. The material is simple and a bit fragmented; I hope it is useful to you.

Last night a great Italian left-back of the new generation got injured, which is a pity.
