Abstract: You have had no contact with your EX for ages. You can't remember what your junior high school classmates look like. Then there are former colleagues, and even the person you least want to see: your BOSS. How did all of these people end up among the recommended users in your social apps? The key technology behind this is link prediction in knowledge bases, also known as knowledge graph completion.

I searched the crowd for them a thousand times; suddenly I turned around, and there they were, on the recommended list.

One thing social apps do best is mining user relationships in depth. You may have blocked someone's phone number, WeChat, and every other social account, yet that person still shows up, without fail, under "People You May Know". These people include the EX you no longer see, junior high school classmates whose faces you can't recall, former colleagues, and even the person you least want to see: your BOSS.

▲ Douyin: "Discover Friends"

So how did these people appear on your list?

The key technology behind this is link prediction in knowledge bases, also known as knowledge graph completion.

What is a knowledge graph? One picture explains it.

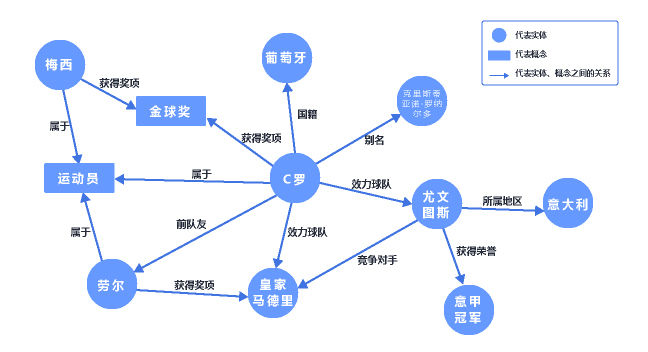

A knowledge graph is a multi-relational graph that records knowledge as structured triples built from entities, concepts, and relations.

Entities are things in the real world, such as people, places, and institutions. Concepts are collections of entities that share the same characteristics, such as "athletes" and "Golden Globes" in the figure. Relations express a connection between different entities.

A knowledge graph links entities through relations into a graph. This graph intuitively models all kinds of real-world scenarios. In essence, building a knowledge graph is a process of establishing cognition and understanding the world.

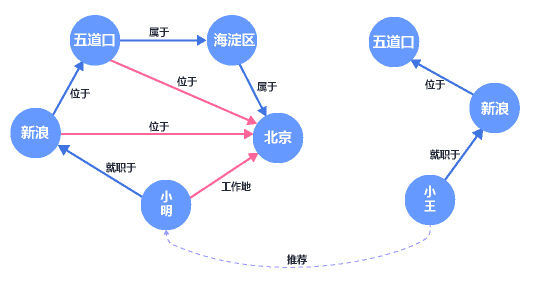

How to complete the knowledge graph

Take Xiaoming as an example. Xiaoming works for Sina in Wudaokou, so the system can infer that Xiaoming works in Beijing. It then recommends Xiao Wang, who also works at Sina in Beijing, to him. In the figure below, blue arrows indicate relations that already exist, and red arrows indicate relations added by knowledge graph completion.
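We can illustrate the inference in this example with a toy, rule-based sketch. This is purely an assumption for illustration. The relation names (`works_for`, `located_in`, `part_of`, `works_in`) and the hand-written rule are hypothetical. Systems based on knowledge representation learning learn such patterns from embeddings instead of hard-coding them.

```python
# Toy knowledge graph stored as (head, relation, tail) triples.
# All relation names are hypothetical, chosen to mirror the Xiaoming example.
triples = {
    ("Xiaoming", "works_for", "Sina"),
    ("Xiao Wang", "works_for", "Sina"),
    ("Sina", "located_in", "Wudaokou"),
    ("Wudaokou", "part_of", "Beijing"),
}


def infer_works_in(kg):
    """Derive (person, works_in, city) with a hand-written rule:
    works_for(p, org) and located_in(org, place) and part_of(place, city)."""
    inferred = set()
    for p, r1, org in kg:
        if r1 != "works_for":
            continue
        for o2, r2, place in kg:
            if o2 != org or r2 != "located_in":
                continue
            for pl, r3, city in kg:
                if pl == place and r3 == "part_of":
                    inferred.add((p, "works_in", city))
    return inferred


def recommend(person, kg):
    """Recommend other people who (after completion) work in the same city."""
    cities = {t for h, r, t in kg if h == person and r == "works_in"}
    return sorted({h for h, r, t in kg
                   if r == "works_in" and t in cities and h != person})


# "Complete" the graph by adding the inferred triples (the red arrows).
completed = triples | infer_works_in(triples)
print(recommend("Xiaoming", completed))  # ['Xiao Wang']
```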

The relationship between knowledge graph and knowledge representation learning

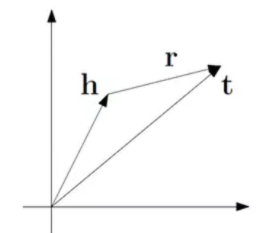

A knowledge graph consists of entities and relations, usually expressed as triples. Each triple has a head (the head entity), a relation (the relation between the entities), and a tail (the tail entity), abbreviated as (h, r, t). The task of knowledge representation learning is to learn distributed representations of h, r, and t, also known as knowledge graph embeddings. These embeddings are what make AI applications built on knowledge graphs possible.
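To make the (h, r, t) notation concrete, here is a minimal sketch of one common way to store knowledge graph embeddings. Each entity and each relation gets an integer id, and each id gets one row in a matrix. Several details are assumptions for illustration: the triples, the dimension of 4, and the random initialization. A real model would adjust these values during training.

```python
import numpy as np

triples = [
    ("Xiaoming", "works_for", "Sina"),
    ("Sina", "located_in", "Wudaokou"),
    ("Wudaokou", "part_of", "Beijing"),
]

# Assign each distinct entity and relation an integer id.
entities = sorted({h for h, _, _ in triples} | {t for _, _, t in triples})
relations = sorted({r for _, r, _ in triples})
ent_id = {e: i for i, e in enumerate(entities)}
rel_id = {r: i for i, r in enumerate(relations)}

dim = 4  # embedding dimension (an arbitrary choice for this sketch)
rng = np.random.default_rng(0)
# One row per entity / relation; training would update these values.
entity_emb = rng.normal(scale=0.1, size=(len(entities), dim))
relation_emb = rng.normal(scale=0.1, size=(len(relations), dim))


def embed(triple):
    """Look up the vectors (h, r, t) for a triple of names."""
    h, r, t = triple
    return entity_emb[ent_id[h]], relation_emb[rel_id[r]], entity_emb[ent_id[t]]


h_vec, r_vec, t_vec = embed(triples[0])
print(entity_emb.shape, relation_emb.shape)  # (4, 4) (3, 4)
```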

How to understand Embedding?

Simply put, an embedding describes an object (a word, character, sentence, article, ...) along multiple dimensions. In effect, it models the object as data.

For example, the RGB color representation we often use in Photoshop is an atypical embedding. Here a color is split into three feature dimensions: R (red intensity, 0-255), G (green intensity, 0-255), and B (blue intensity, 0-255). RGB(0,0,0) is black, and RGB(41,36,33) is ivory black. In this way, we can describe colors with numbers.
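Once colors are vectors, we can compare them with ordinary arithmetic. Below is a minimal sketch using Euclidean distance. That metric is our own choice for illustration; the article does not specify one here. White is added as an extra color for contrast.

```python
import math

# Each color is a 3-dimensional vector (R, G, B), each component in 0-255.
colors = {
    "black": (0, 0, 0),
    "ivory black": (41, 36, 33),
    "white": (255, 255, 255),
}


def color_distance(a, b):
    """Euclidean distance between two RGB vectors (math.dist needs Python 3.8+)."""
    return math.dist(colors[a], colors[b])


print(round(color_distance("black", "ivory black"), 1))  # 63.8
print(round(color_distance("black", "white"), 1))        # 441.7
```

Ivory black ends up much closer to black than white does, which matches intuition. This is the same idea embeddings use to compare entities.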

What are the methods of knowledge representation learning?

The key to knowledge representation learning is designing a reasonable scoring function. When a given fact triple is true, we want its score to be as high as possible. For distance-based models, this means its distance should be as small as possible. By how they are realized, these models fall into two categories:

Structure-based approach

The basic idea of this type of model is to learn representations of the entities and relations of a knowledge graph from the structure of the triples. The most classic algorithm is TransE. Its idea is to make the sum of the head vector h and the relation vector r as close as possible to the tail vector t, that is, h + r ≈ t. Here "close" can be measured with the L1 or L2 norm. The schematic diagram is as follows:

Such knowledge representation learning models also include: TransH, TransR, TransD, TransA, etc.
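The TransE idea h + r ≈ t can be sketched in a few lines. Below is a minimal sketch, assuming NumPy vectors. It computes the distance ||h + r - t|| and the margin-based ranking loss that TransE was originally trained with. The 2-dimensional vectors are made up. The "corrupted" tail stands in for what negative sampling would produce by replacing the true tail.

```python
import numpy as np


def transe_distance(h, r, t, norm=1):
    """TransE dissimilarity ||h + r - t|| under the L1 (norm=1) or L2 (norm=2) norm.
    Smaller means the triple (h, r, t) is more plausible."""
    return np.linalg.norm(h + r - t, ord=norm)


def margin_ranking_loss(pos, neg, margin=1.0, norm=1):
    """Hinge loss max(0, margin + d(pos) - d(neg)): a true triple should be
    closer than a corrupted one by at least `margin`."""
    return max(0.0, margin + transe_distance(*pos, norm=norm)
               - transe_distance(*neg, norm=norm))


h = np.array([1.0, 0.0])
r = np.array([0.0, 1.0])
t = np.array([1.0, 1.0])       # h + r == t exactly: a "true" triple
t_bad = np.array([-1.0, 0.0])  # corrupted tail, as negative sampling would produce

print(transe_distance(h, r, t))                       # 0.0
print(transe_distance(h, r, t_bad))                   # 3.0
print(margin_ranking_loss((h, r, t), (h, r, t_bad)))  # 0.0
```

The loss is zero here because the corrupted triple is already more than `margin` farther away than the true one. During training, gradients flow only from pairs that violate the margin.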

Semantic-based approach

This type of model learns representations of KG entities and relations by matching their latent semantics. It typically scores a triple with a similarity-based function rather than a translation distance. Such methods mainly include LFM, DistMult, ComplEx, ANALOGY, ConvE, etc.
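To show what "similarity-based scoring" means, here is a minimal sketch of the score functions of two of the models listed, DistMult and ComplEx, as they are usually formulated. Higher scores mean more plausible triples. The vectors are made up. The example is meant to show one design difference between the two models, not how they are trained.

```python
import numpy as np


def distmult_score(h, r, t):
    """DistMult: trilinear product sum_i h_i * r_i * t_i (real-valued vectors)."""
    return float(np.sum(h * r * t))


def complex_score(h, r, t):
    """ComplEx: Re(sum_i h_i * r_i * conj(t_i)) with complex-valued vectors."""
    return float(np.real(np.sum(h * r * np.conj(t))))


# DistMult is symmetric: swapping head and tail gives the same score.
h, r, t = np.array([1.0, 2.0]), np.array([0.5, 1.0]), np.array([3.0, 1.0])
print(distmult_score(h, r, t), distmult_score(t, r, h))  # 3.5 3.5

# ComplEx can tell (h, r, t) apart from (t, r, h) thanks to the conjugate.
hc, rc, tc = np.array([1 + 0j]), np.array([1j]), np.array([1j])
print(complex_score(hc, rc, tc), complex_score(tc, rc, hc))  # 1.0 -1.0
```

This symmetry is why DistMult struggles with asymmetric relations such as "works for", and it is the motivation for ComplEx's complex-valued embeddings.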

Application of knowledge representation learning

Knowledge representation learning turns the entities and relations of a knowledge graph into vectors, so downstream tasks can compute with them easily. Typical applications include:

1) Similarity calculation: Using the distributed representation of entities, we can quickly calculate the semantic similarity between entities, which is of great significance for many tasks in natural language processing and information retrieval.

How is similarity calculated? Here is an example.

Suppose the embedding of the word "Li Bai" has 5 dimensions with the values [0.3, 0.5, 0.7, 0.03, 0.02]. Each dimension represents relatedness to some attribute, and the five dimensions stand for [poet, man of letters, writer, freelancer, knight-errant].

Likewise, "Wang Wei" = [0.3, 0.55, 0.7, 0.03, 0.02] and "Newton" = [0.01, 0.02, 0.06, 0.4, 0.01]. We can measure how far apart these words are with cosine distance. In geometry, the cosine of the angle between two vectors measures how much their directions differ; machine learning uses the same idea to measure the difference between sample vectors. Clearly Li Bai and Wang Wei are close, while Newton is far from both. So we can conclude that "Li Bai" and "Wang Wei" are more similar.
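The comparison above can be checked with a few lines of NumPy. Below is a minimal sketch using the toy 5-dimensional vectors from the example. Cosine distance is taken here as 1 minus cosine similarity, which is a common convention.

```python
import numpy as np

# Toy 5-dimensional embeddings from the example (dimension labels as in the text).
emb = {
    "Li Bai":   np.array([0.3, 0.5, 0.7, 0.03, 0.02]),
    "Wang Wei": np.array([0.3, 0.55, 0.7, 0.03, 0.02]),
    "Newton":   np.array([0.01, 0.02, 0.06, 0.4, 0.01]),
}


def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1 means same direction."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def cosine_distance(a, b):
    """One common definition: 1 - cosine similarity (smaller = more similar)."""
    return 1.0 - cosine_similarity(a, b)


for other in ("Wang Wei", "Newton"):
    print(other, round(cosine_similarity(emb["Li Bai"], emb[other]), 3))
# Wang Wei 0.999
# Newton 0.182
```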

2) Knowledge graph completion. Building a large-scale knowledge graph requires constantly adding relations between entities. A knowledge representation learning model can predict the relation between two entities. This is usually called link prediction in the knowledge base, also known as knowledge graph completion. The "Xiaoming in Wudaokou" example above illustrates it well.
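A link prediction query such as (Xiaoming, works_in, ?) comes down to scoring every candidate tail entity and ranking the candidates. Below is a minimal sketch using the TransE distance. Several details are assumptions. The relation name `works_in` is hypothetical. The embeddings are random stand-ins rather than a trained model, and we deliberately place Beijing where a trained TransE model would aim to put it, at roughly h + r.

```python
import numpy as np

rng = np.random.default_rng(42)
dim = 8
candidates = ["Beijing", "Shanghai", "Shenzhen", "Hangzhou", "Wudaokou"]

# Stand-in embeddings (hypothetical, not trained): random vectors, except that
# Beijing is planted at xiaoming + works_in plus a little noise.
entity_emb = {e: rng.normal(size=dim) for e in candidates}
xiaoming = rng.normal(size=dim)
works_in = rng.normal(size=dim)
entity_emb["Beijing"] = xiaoming + works_in + rng.normal(scale=0.01, size=dim)


def rank_tails(h, r, cands, norm=1):
    """Rank candidate tails for the query (h, r, ?) by TransE distance, best first."""
    dist = {name: np.linalg.norm(h + r - vec, ord=norm) for name, vec in cands.items()}
    return sorted(dist, key=dist.get)


print(rank_tails(xiaoming, works_in, entity_emb)[0])  # Beijing
```

In practice, the top-ranked tails become candidate new triples, that is, the "red arrows" of the completed graph.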

3) Other applications. Knowledge representation learning has been widely used in tasks such as relation extraction, automatic question answering, entity linking, etc., showing great application potential.

Automatic question answering is a major application deeply integrated with knowledge representation learning. The back end of an intelligent question answering product is generally designed in three layers: an input layer, a representation layer, and an output layer. In short, the input layer is a question library that gathers all the questions users may ask. The representation layer performs knowledge extraction, and the output layer returns the final result.

Typical intelligent question answering products include Apple's Siri, Microsoft XiaoIce (Xiaobing), Baidu, and Alibaba's AliMe (Ali Xiaomi). A major feature of these products is that they make search results more precise. Instead of returning a pile of similar pages for you to sift through yourself, they aim for "what you ask is what you get." For example, if you search for "Wang Sicong's net worth", the result is a specific number.
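The "what you ask is what you get" behavior can be caricatured with a toy three-layer pipeline. This is a minimal sketch under heavy assumptions. The mini knowledge base and the keyword-to-relation table are hypothetical. The keyword matching stands in for the learned representations a real product would use to map a question to an (entity, relation) pair.

```python
# Hypothetical mini knowledge base: (entity, relation) -> answer.
kb = {
    ("Li Bai", "dynasty"): "Tang",
    ("Li Bai", "occupation"): "poet",
    ("Newton", "occupation"): "physicist",
}

# Hypothetical keyword -> relation table (a crude stand-in for learned representations).
relation_keywords = {"dynasty": "dynasty", "job": "occupation", "occupation": "occupation"}


def answer(question):
    """Input layer: the raw question. Middle layer: map it to (entity, relation).
    Output layer: return the single matching fact rather than a list of pages."""
    q = question.lower()
    entity = next((e for e, _ in kb if e.lower() in q), None)
    relation = next((rel for kw, rel in relation_keywords.items() if kw in q), None)
    if entity is None or relation is None:
        return None  # no confident match: a real system might fall back to search
    return kb.get((entity, relation))


print(answer("Which dynasty was Li Bai from?"))  # Tang
print(answer("What was Newton's job?"))          # physicist
```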

To sum up

In short, friend recommendation in social products relies on knowledge graph completion. It uses representations of entities and relations to predict missing triples: given a known head entity and a relation, it predicts the tail entity. In other words, friends are recommended based on user profiles. If you don't want those "old acquaintances" to appear in your recommendation list, turn off location services in your social apps and disclose as little personal information as possible.
