On May 15, Agora Senior Architect Gao Chun took part in "WebAssembly Meetup", the first offline event organized by the WebAssembly community, and shared Agora's practical experience with real-time video portrait segmentation on the Web. The following is an edited write-up of the talk.

The RTC industry has grown rapidly in recent years, and scenarios such as online education and video conferencing are booming. As these scenarios develop, they place higher demands on the technology. As a result, machine learning is increasingly applied to real-time audio and video, for example in super-resolution, beauty filters, and real-time voice beautification. The same demand exists on the Web, and it is a challenge for every audio and video developer. Fortunately, WebAssembly makes high-performance computing on the Web possible. Portrait segmentation was the first such application we explored and put into practice on the Web.

Where is video portrait segmentation used?

When it comes to portrait segmentation, the first application that comes to mind is green-screen matting in film and television production. The footage is shot in front of a green screen, and in post-production the background is replaced with a computer-generated scene.

You have probably seen another application as well. On Bilibili, the bullet comments (danmaku) on some videos do not cover the people on screen; the text passes behind them instead. This is also based on portrait segmentation.

Both of the examples above are implemented on the server and are not real-time audio and video scenarios.

The portrait segmentation built by Agora targets real-time audio and video scenarios such as video conferencing and online teaching, where it can be used to blur or replace the video background.

Why do these real-time scenarios need this technology?

A recent study found that in an average 38-minute conference call, a full 13 minutes were wasted dealing with interruptions and distractions. Online interviews, presentations, employee training, brainstorming sessions, sales pitches, IT assistance, customer support and webinars all face the same problem. Blurring the background, or choosing one of the custom or preset virtual backgrounds, can greatly reduce such distractions.

Another survey showed that 23% of American employees said video conferencing makes them uncomfortable, and 75% said they still prefer voice conferencing to video conferencing. The reason is that people do not want to expose their living environment and privacy to others. Replacing the video background solves this problem.

At present, most portrait segmentation and virtual background features in real-time audio and video run in native clients. On the Web, only Google Meet has used WebAssembly to implement portrait segmentation in real-time video. Agora's implementation combines machine learning, WebAssembly, WebGL and other technologies.

Implementing a real-time video virtual background on the Web

Technical components and real-time processing flow of portrait segmentation

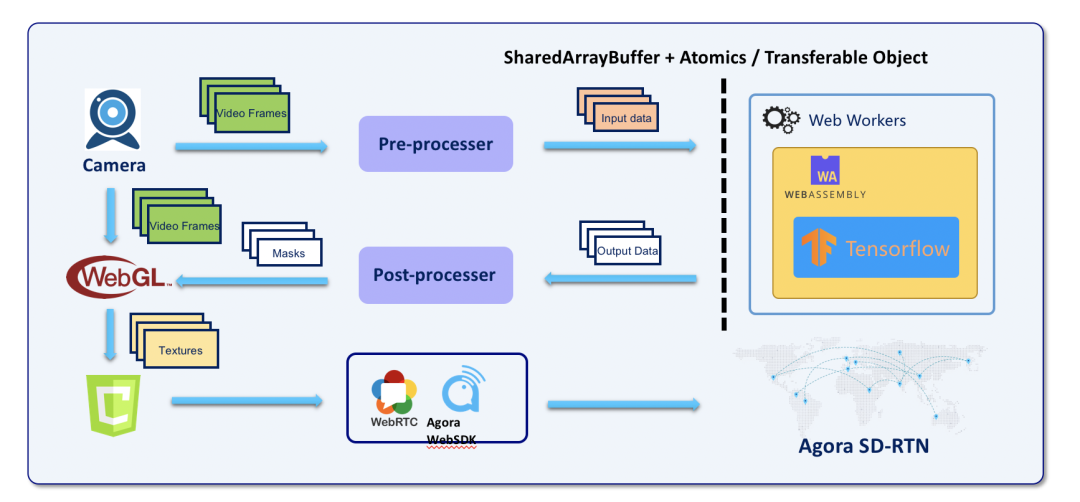

For portrait segmentation on the Web, we use the following components:

- WebRTC: Captures and transmits audio and video.

- TensorFlow: Serves as the framework for the portrait segmentation model.

- WebAssembly: Runs the portrait segmentation algorithm.

- WebGL: Runs image processing algorithms written in GLSL on the frames after portrait segmentation.

- Canvas: Renders the final video and image results.

- Agora Web SDK: Transmits audio and video in real time.

The real-time processing flow of portrait segmentation works as follows. First, we capture video with the W3C MediaStream API. The frames are then handed to the WebAssembly engine for inference. Because machine learning is computationally expensive, the input cannot be too large, so each video image has to be scaled and normalized before it is fed into the machine learning framework. The output from WebAssembly needs some post-processing and is then passed to WebGL, which combines it with the original video for filtering, compositing and other processing to produce the final result. The result is drawn to a Canvas and then transmitted in real time through the Agora Web SDK.
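The normalization step in this flow can be sketched as follows. This is only an illustration, not Agora's implementation: the function name `rgbaToNormalizedRgb`, the dropped alpha channel and the [0, 1] value range are assumptions, and a real model may expect a different layout or range.

```typescript
// Hypothetical helper (not from the talk): converts RGBA bytes, as returned
// by getImageData(), into a Float32Array of RGB values scaled to [0, 1].
function rgbaToNormalizedRgb(
  rgba: Uint8ClampedArray,
  width: number,
  height: number
): Float32Array {
  if (rgba.length !== width * height * 4) {
    throw new RangeError("rgba length must equal width * height * 4");
  }
  const out = new Float32Array(width * height * 3);
  for (let i = 0, j = 0; i < rgba.length; i += 4, j += 3) {
    out[j] = rgba[i] / 255;         // R
    out[j + 1] = rgba[i + 1] / 255; // G
    out[j + 2] = rgba[i + 2] / 255; // B
    // rgba[i + 3] (alpha) is dropped: assumed to be unused by the model.
  }
  return out;
}
```

The resulting array is what would be copied into the Wasm module's memory as the model input; how that copy is done depends on the framework port and is not shown here.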

Machine learning framework

Before building portrait segmentation ourselves, we naturally checked whether an off-the-shelf machine learning framework would do. The available options include ONNX.js, TensorFlow.js, Keras.js and MIL WebDNN, all of which use WebGL or WebAssembly as their computing backend. When we tried them, however, we ran into several problems:

1. Model files lack the necessary protection. The browser usually loads the model from the server at runtime, which exposes the model directly on the client. This makes it hard to protect intellectual property.

2. The I/O design of general-purpose JS frameworks does not take real scenarios into account. For example, the input of TensorFlow.js is a general-purpose array. At runtime the content is wrapped into an InputTensor and then either handed to WebAssembly or uploaded as a WebGL texture for processing. This path is relatively complex, and performance cannot be guaranteed for video data with strict real-time requirements.

3. Operator support is incomplete. General-purpose frameworks are usually missing some of the operators needed to process video data.

Our strategy for solving these problems is as follows:

1. Port a native machine learning framework to Wasm.

2. Fill in the operators that are not yet implemented with custom implementations.

3. Optimize performance with SIMD (single instruction, multiple data) and multithreading.

Video data preprocessing

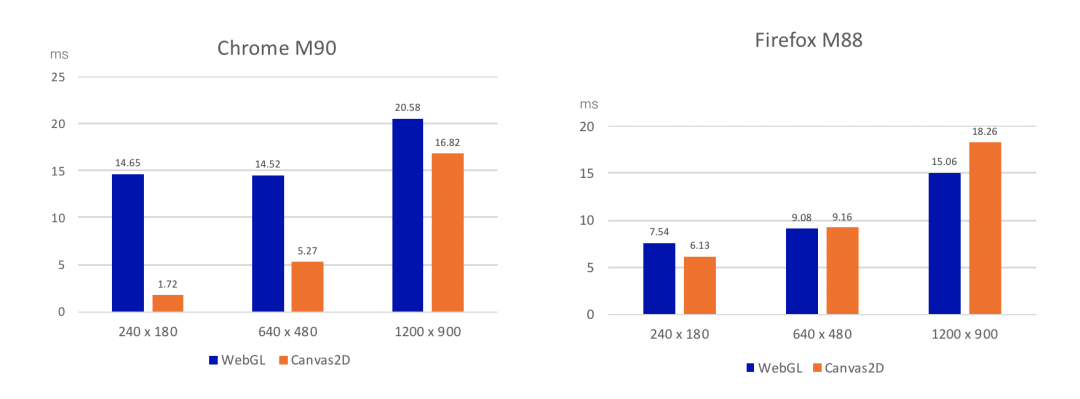

The preprocessing of the data requires scaling of the image. There are generally two ways to do it on the front end: one is to use Canvas2D, and the other is to use WebGL.

Use Canvas2D.drawImage() to draw the content of the Video element onto a Canvas at the target size, then use Canvas2D.getImageData() to read back the scaled image data.
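A minimal sketch of the Canvas2D route is shown here. The helper name `grabScaledFrame` and the two small interfaces are assumptions introduced for illustration; the interfaces only describe the two real `CanvasRenderingContext2D` methods being used, so the sketch can also be type-checked and exercised outside a browser.

```typescript
// Minimal structural types (assumptions for this sketch); a real
// CanvasRenderingContext2D and ImageData satisfy them.
interface PixelData {
  data: Uint8ClampedArray;
  width: number;
  height: number;
}
interface Context2DLike {
  drawImage(image: unknown, dx: number, dy: number, dw: number, dh: number): void;
  getImageData(sx: number, sy: number, sw: number, sh: number): PixelData;
}

// Hypothetical helper: draws the current video frame scaled to the target
// size, then reads the scaled RGBA pixels back.
function grabScaledFrame(
  ctx: Context2DLike,
  video: unknown,
  targetWidth: number,
  targetHeight: number
): PixelData {
  ctx.drawImage(video, 0, 0, targetWidth, targetHeight);
  return ctx.getImageData(0, 0, targetWidth, targetHeight);
}

// In a browser (not executed here), ctx would come from
// canvas.getContext("2d") and video would be the HTMLVideoElement;
// the canvas must be at least targetWidth x targetHeight.
```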

With WebGL, the Video element itself can be passed as the source when uploading a texture, and the scaled video data can then be read back from the FrameBuffer.

We tested the performance of both methods. As shown in the figure below, on an x86_64 Windows 10 machine and in two browsers, we measured the preprocessing time overhead of Canvas2D and WebGL for video at three resolutions. From these results you can decide which method to use for a given video resolution.

Web Workers and multithreading issues

Wasm inference is computationally expensive and can block the JS main thread. Some situations make this worse: for example, in a coffee shop with no power outlet nearby, the device runs in low-power mode, the CPU lowers its frequency, and video processing may start to drop frames.

We therefore need to improve performance, and for this we use Web Workers. Running the machine learning inference in a Web Worker effectively reduces blocking of the JS main thread.

Using one is relatively simple. The main thread creates a Web Worker, which runs on another thread. The main thread then sends it messages through worker.postMessage, and the worker receives them in its message handler.
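The message exchange described above might look like the sketch below. The message shapes `FrameRequest` and `MaskResponse`, the handler name `handleFrameRequest`, and the `segment` callback that stands in for the Wasm inference call are all assumptions made for illustration; the browser wiring is shown only in comments because it needs a separate worker script.

```typescript
// Message shapes are assumptions for this sketch.
interface FrameRequest {
  id: number;
  width: number;
  height: number;
  pixels: Uint8ClampedArray; // scaled RGBA frame
}
interface MaskResponse {
  id: number;
  mask: Uint8Array; // one value per pixel
}
type Segmenter = (pixels: Uint8ClampedArray, width: number, height: number) => Uint8Array;

// Worker side: turns one request into one response. `segment` stands in
// for the Wasm inference call, which is not shown here.
function handleFrameRequest(req: FrameRequest, segment: Segmenter): MaskResponse {
  return { id: req.id, mask: segment(req.pixels, req.width, req.height) };
}

// Browser wiring (not executed here; "worker.js" and drawMask are hypothetical):
//
//   // inside the worker script
//   self.onmessage = (e: MessageEvent<FrameRequest>) =>
//     self.postMessage(handleFrameRequest(e.data, segment));
//
//   // on the main thread
//   const worker = new Worker("worker.js");
//   worker.onmessage = (e: MessageEvent<MaskResponse>) => drawMask(e.data.mask);
//   worker.postMessage({ id: 1, width, height, pixels });
```

Carrying an `id` in both messages is one simple way to match a mask to the frame it was computed from when results arrive asynchronously.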

But this may also introduce some new problems:

- The structured clone overhead of passing data through postMessage

- The resource contention and browser engine compatibility issues introduced by shared memory

In response to these two issues, we have also done some analysis.

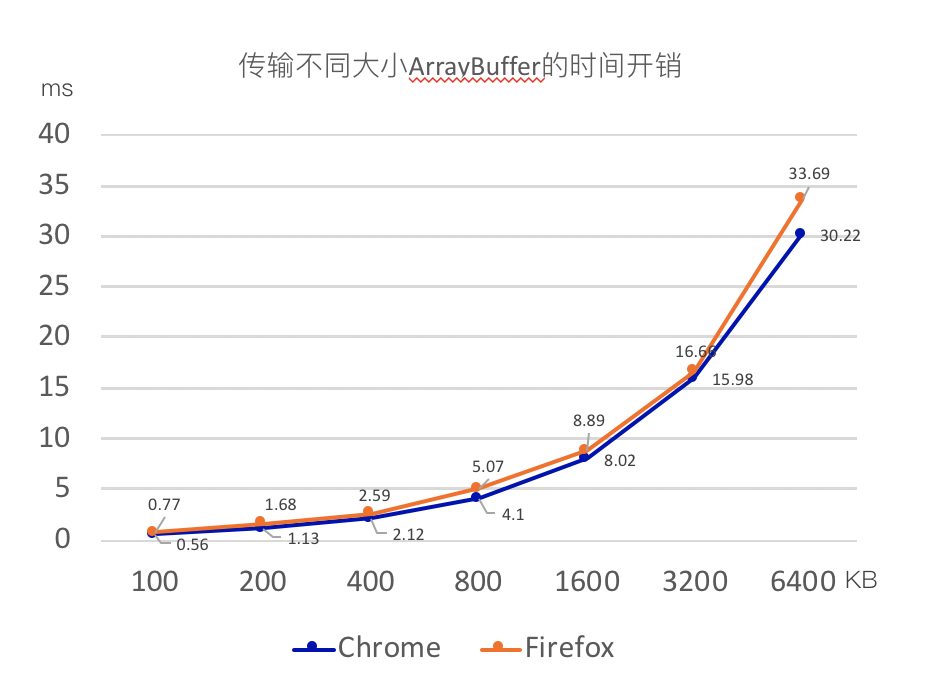

When the data being transferred is a JS primitive type, an ArrayBuffer, ArrayBufferView, ImageData, File / FileList / Blob, or a Boolean / String / Object / Map / Set, postMessage uses the structured clone algorithm to make a deep copy.
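The effect of that deep copy can be observed with the global `structuredClone()` function, which applies the same structured clone algorithm without needing a worker. The `Frame` type and the function name `simulatePostMessageCopy` are assumptions for this sketch, which also assumes a runtime that provides `structuredClone` (current browsers and recent Node.js versions do).

```typescript
// Frame shape is an assumption for this sketch.
interface Frame {
  width: number;
  height: number;
  pixels: Uint8ClampedArray;
}

// Returns what the receiving side of postMessage would see: a deep copy
// made by the structured clone algorithm.
function simulatePostMessageCopy(frame: Frame): Frame {
  return structuredClone(frame);
}

const original: Frame = {
  width: 2,
  height: 1,
  pixels: new Uint8ClampedArray([1, 2, 3, 4, 5, 6, 7, 8]),
};
const received = simulatePostMessageCopy(original);
received.pixels[0] = 99; // only the copy changes

console.log(original.pixels[0], received.pixels[0]); // prints "1 99"
```

Because the pixel buffer is duplicated on every message, the cost grows with the frame size, which is why the transfer overhead is worth measuring.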

We ran data transfer performance tests between the JS main thread and Web Workers, and between different pages. The test environment was an x86_64 Windows 10 computer, and the results are shown in the figure below:

Our preprocessed data is about 200 KB or less, so as the figure above shows, the time overhead stays below 1.68 ms, which is almost negligible.

If you want to avoid the structured clone, you can use SharedArrayBuffer. As the name implies, SharedArrayBuffer lets the main thread and a Worker share one memory region and access its data at the same time.

But like all shared-memory approaches (including native ones), SharedArrayBuffer is subject to resource contention, so JS needs an additional mechanism to handle it. The Atomics object in JavaScript was introduced to solve this problem.
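As a rough illustration of how Atomics can guard a SharedArrayBuffer, the sketch below uses one Int32 as a "frame ready" flag in front of the pixel data. The layout and the names `createSharedFrame`, `publishFrame` and `tryConsumeFrame` are assumptions for this example, and the protocol is only meant for one producer and one consumer. It runs on a single thread here; in practice the buffer would be sent to the Worker with postMessage and each side would create its own views over it.

```typescript
const HEADER_BYTES = 4; // one Int32 used as the "frame ready" flag

interface SharedFrame {
  sab: SharedArrayBuffer;
  flag: Int32Array;   // 0 = empty, 1 = frame ready
  pixels: Uint8Array; // frame bytes stored after the header
}

function createSharedFrame(byteLength: number): SharedFrame {
  const sab = new SharedArrayBuffer(HEADER_BYTES + byteLength);
  return {
    sab,
    flag: new Int32Array(sab, 0, 1),
    pixels: new Uint8Array(sab, HEADER_BYTES, byteLength),
  };
}

// Producer: writes a frame only if the previous one has been consumed.
function publishFrame(shared: SharedFrame, data: Uint8Array): boolean {
  if (Atomics.load(shared.flag, 0) !== 0) return false;
  shared.pixels.set(data);
  Atomics.store(shared.flag, 0, 1); // publish after the pixel writes
  return true;
}

// Consumer: copies the frame out if one is ready, then frees the slot.
function tryConsumeFrame(shared: SharedFrame): Uint8Array | null {
  if (Atomics.load(shared.flag, 0) !== 1) return null;
  const copy = shared.pixels.slice();
  Atomics.store(shared.flag, 0, 0);
  return copy;
}
```

Even this small protocol has to reason about who may write and when, which gives a sense of the extra care shared memory demands compared with message passing.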

Agora also tried SharedArrayBuffer for portrait segmentation and found that it brings problems of its own. The first is compatibility: at present, SharedArrayBuffer can only be used in Chrome 67 and above.

Before 2018, both Chrome and Firefox supported SharedArrayBuffer. In 2018, however, two serious CPU vulnerabilities, Meltdown and Spectre, were disclosed. They could break data isolation between processes, so both browsers disabled SharedArrayBuffer. It was not until Chrome 67 introduced site isolation that SharedArrayBuffer was allowed again.

The second problem is development difficulty. Introducing the Atomics object to handle resource contention makes front-end development as hard as multithreaded programming in native languages.

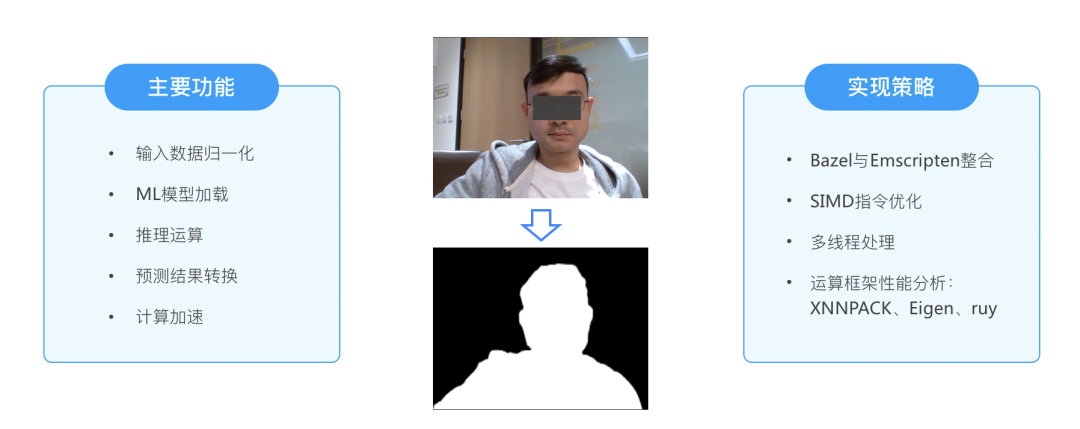

WebAssembly module function and implementation strategy

WebAssembly is mainly responsible for portrait segmentation. The main functions and implementation strategies we want to achieve are as follows:

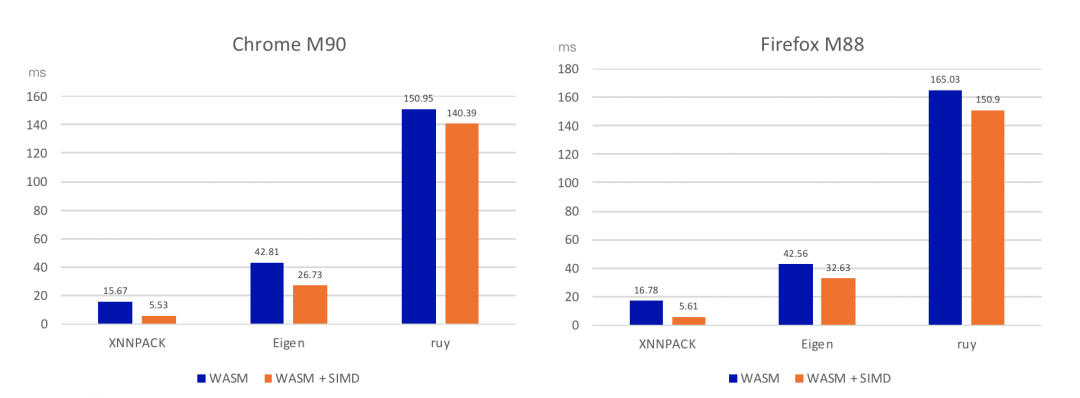

The machine learning framework can rely on different vector and matrix computation backends. TensorFlow, for example, has three: XNNPACK, Eigen, and ruy. Their performance differs from platform to platform, and we tested this as well. The results on x86_64 Windows 10 are as follows. XNNPACK clearly performs best in our scenario, because it is a computation library optimized for floating-point operations.

These are only the results on x86 and do not represent the final results on all platforms. Because ruy is TensorFlow's default computation backend on mobile platforms, it is better optimized for the ARM architecture. We also tested on other platforms, but I won't go through them one by one here.

WASM multithreading

With WASM multithreading enabled, pthreads are mapped to Web Workers and pthread mutex operations to Atomics operations. In the portrait segmentation scenario, the performance gain from multithreading peaked at 4 threads, where it reached 26%. More threads are not always better, because thread scheduling has its own overhead.

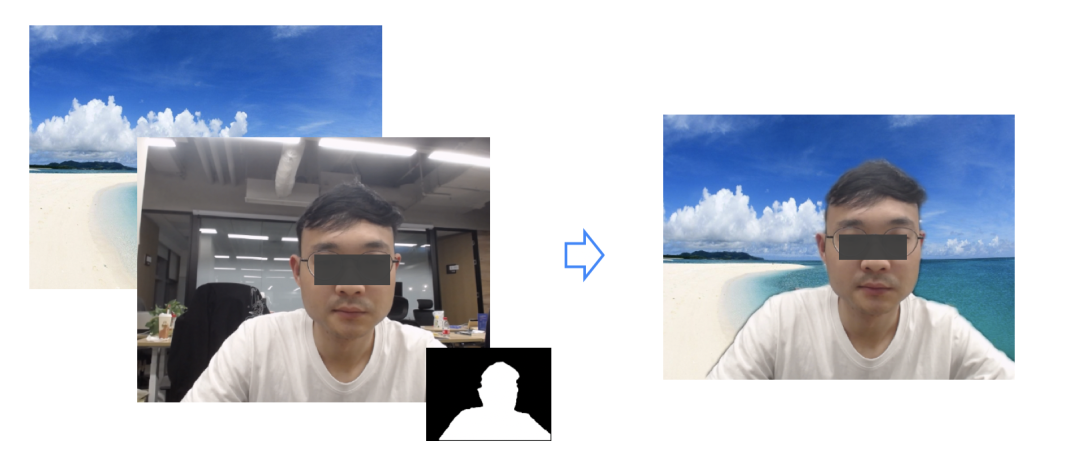

Finally, after portrait segmentation, we use WebGL for image filtering, jitter removal and picture compositing, which produces the effect shown in the following figure.
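The compositing step boils down to a per-pixel blend of the original frame and the replacement background, weighted by the segmentation mask. The sketch below is a CPU reference of that blend only, written to make the arithmetic explicit; it is not the WebGL implementation from the talk, and the function name `compositeWithMask` and the 0 to 255 mask range are assumptions.

```typescript
// CPU reference of the blend: out = a * frame + (1 - a) * background,
// where a = mask / 255 is the per-pixel foreground weight.
function compositeWithMask(
  frame: Uint8ClampedArray,      // RGBA camera frame
  background: Uint8ClampedArray, // RGBA replacement background
  mask: Uint8Array               // one value per pixel, 255 = person
): Uint8ClampedArray {
  if (frame.length !== background.length || frame.length !== mask.length * 4) {
    throw new RangeError("frame, background and mask sizes do not match");
  }
  const out = new Uint8ClampedArray(frame.length);
  for (let p = 0; p < mask.length; p++) {
    const a = mask[p] / 255;
    const o = p * 4;
    for (let c = 0; c < 3; c++) {
      out[o + c] = a * frame[o + c] + (1 - a) * background[o + c];
    }
    out[o + 3] = 255; // output is fully opaque
  }
  return out;
}
```

In a fragment shader the same blend is a single call to GLSL's built-in `mix(background, frame, a)`, which is one reason this stage suits the GPU.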

Summary

There are still some pain points in the process of using WebAssembly.

First of all, as mentioned above, we optimize computational efficiency with SIMD instructions. Currently, WebAssembly SIMD only supports a 128-bit data width. Many people in the community have therefore suggested that supporting 256-bit AVX2 and 512-bit AVX512 instructions would further improve parallel computing performance.

Second, WebAssembly currently cannot access the GPU directly. If it could provide more direct OpenGL ES calling capabilities, the performance overhead of the JS bridge from OpenGL ES to WebGL could be avoided.

Third, WebAssembly currently cannot access audio and video data directly. Data captured from the camera and microphone has to go through additional processing steps before it reaches Wasm.

Finally, for the web-side portrait segmentation, we summarized a few points:

- WebAssembly is one of the right ways to apply machine learning on the Web platform.

- In certain circumstances, enabling SIMD and multithreading brings significant performance improvements.

- When the baseline computing performance or algorithm design is poor, the gains from SIMD and multithreading mean little.

- When using WebGL for video processing and rendering, WebAssembly output data needs to be compatible with the format of WebGL texture sampling.

- When using WebAssembly for real-time video processing, it is necessary to consider the key overhead in the entire Web processing flow, and make appropriate optimizations to improve the overall performance.

If you want to know more about our practical experience with portrait segmentation on the Web, please visit rtcdeveloper.com and post to get in touch with us. For more hands-on technical articles, please visit agora.io/cn/community.
