Author: Michael Yuan, WasmEdge Maintainer

Vercel is the leading platform for developing and hosting Jamstack applications. Unlike traditional web applications, which generate the UI dynamically on the server at runtime, a Jamstack application consists of a static UI (HTML and JavaScript) and a set of serverless functions that support dynamic UI elements through JavaScript.

The Jamstack approach has many benefits, and one of the most important is performance. Since the UI is no longer generated at runtime on a central server, the load on the server is much lower, and the UI can be deployed through an edge network such as a CDN.

However, the edge CDN only solves the problem of distributing static UI files. The serverless functions on the backend may still be slow. In fact, the current popular serverless platforms have well-known performance problems, such as slow cold start, especially for interactive applications. In this regard, WebAssembly has a lot to offer.

With WasmEdge, a cloud-native WebAssembly runtime hosted by the CNCF, developers can write high-performance serverless functions and deploy them on the public cloud or on edge computing nodes. In this article, we will explore how to use WasmEdge functions written in Rust to power the backend of a Vercel application.

Why use WebAssembly in Vercel Serverless?

The Vercel platform already has a very easy-to-use serverless framework for deploying functions hosted on Vercel. As discussed above, the reason to use WebAssembly and WasmEdge is to further improve performance. High-performance functions written in C/C++, Rust, and Swift can be easily compiled into WebAssembly. These WebAssembly functions are much faster than the JavaScript or Python commonly used in serverless functions.

So the question is: if raw performance is the only goal, why not compile these functions directly into native machine executables? The reason is that the WebAssembly "container" still provides many valuable services.

First, WebAssembly isolates functions at the runtime level. Errors in the code or memory safety issues do not spread outside the WebAssembly runtime. As software supply chains become more and more complex, it is very important to run code in a container so that dependency libraries cannot access your data without authorization.

Second, WebAssembly bytecode is portable. Developers only need to build it once, and there is no need to worry about future changes or updates to the underlying Vercel serverless container (operating system and hardware). It also allows developers to reuse the same WebAssembly functions in similar hosting environments, such as Tencent's Serverless Functions in the public cloud, or in data streaming frameworks.

Finally, the WasmEdge Tensorflow API provides the most idiomatic way to execute Tensorflow models from Rust. WasmEdge installs the correct combination of Tensorflow dependency libraries and provides developers with a unified API.

That is enough concepts and explanations. Let's take a look at the sample applications!

Prerequisites

Since our demo WebAssembly functions are written in Rust, you need to install the Rust compiler. Make sure to add the wasm32-wasi compiler target as follows, so that the compiler can generate WebAssembly bytecode.

$ rustup target add wasm32-wasi

The front end of the demo application is written with Next.js and deployed on Vercel. We assume that you already have basic knowledge of using Vercel.

Example 1: Image processing

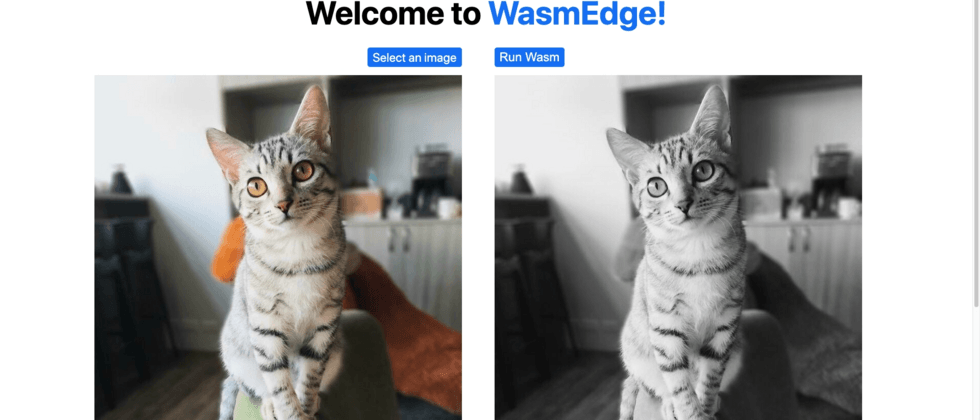

Our first demo application lets users upload an image and then calls a serverless function to turn it into a black-and-white image. Before starting, you can try this demo deployed on Vercel.

First, fork the demo application's GitHub repo. To deploy the application on Vercel, just import the GitHub repo from the Vercel for GitHub page.

This GitHub repo contains a standard Next.js application for the Vercel platform. The back-end serverless function is in the api/functions/image-grayscale folder. The src/main.rs file contains the source code of the Rust program. The Rust program reads image data from STDIN and then outputs the black-and-white image to STDOUT.

use std::io::{self, Read, Write};
use image::{ImageFormat, ImageOutputFormat};

fn main() {
    // Read the uploaded image bytes from STDIN.
    let mut buf = Vec::new();
    io::stdin().read_to_end(&mut buf).unwrap();

    // Detect the input format, then decode the image and convert it to grayscale.
    let image_format_detected: ImageFormat = image::guess_format(&buf).unwrap();
    let img = image::load_from_memory(&buf).unwrap();
    let filtered = img.grayscale();

    // Encode the result: GIF input stays GIF, everything else becomes PNG.
    let mut buf = vec![];
    match image_format_detected {
        ImageFormat::Gif => {
            filtered.write_to(&mut buf, ImageOutputFormat::Gif).unwrap();
        },
        _ => {
            filtered.write_to(&mut buf, ImageOutputFormat::Png).unwrap();
        },
    };

    // Write the encoded image to STDOUT.
    io::stdout().write_all(&buf).unwrap();
    io::stdout().flush().unwrap();
}
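The img.grayscale() call in the program above hides the actual pixel math. To make the idea concrete, here is a minimal sketch of a grayscale conversion that uses only the standard library. It assumes the widely used Rec. 709 luma weights and a flat RGB8 buffer; the image crate's exact coefficients and rounding may differ, and the function names luma and grayscale_rgb8 are hypothetical.

```rust
/// Convert one RGB pixel to a single gray level.
/// Assumption: Rec. 709 luma weights; the image crate may use other values.
fn luma(r: u8, g: u8, b: u8) -> u8 {
    let y = 0.2126 * r as f32 + 0.7152 * g as f32 + 0.0722 * b as f32;
    // Clamp to the u8 range before converting back to an integer.
    y.round().min(255.0) as u8
}

/// Convert a flat RGB8 buffer (r, g, b, r, g, b, ...) to one gray byte per pixel.
/// Trailing bytes that do not form a full pixel are ignored.
fn grayscale_rgb8(rgb: &[u8]) -> Vec<u8> {
    rgb.chunks_exact(3)
        .map(|p| luma(p[0], p[1], p[2]))
        .collect()
}

fn main() {
    // Three pixels: pure white, pure black, pure red.
    let rgb = [255, 255, 255, 0, 0, 0, 255, 0, 0];
    let gray = grayscale_rgb8(&rgb);
    println!("{:?}", gray);
}
```

In the real function, the image crate also handles decoding and encoding, which is why the demo relies on it rather than on hand-written pixel loops.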

Use Rust's cargo tool to build the Rust program into WebAssembly bytecode or native code.

$ cd api/functions/image-grayscale/

$ cargo build --release --target wasm32-wasi

Copy the build artifacts to the api folder.

$ cp target/wasm32-wasi/release/grayscale.wasm ../../

Vercel runs api/pre.sh when setting up the serverless environment. At this point, the WasmEdge runtime is installed, and the WebAssembly bytecode program is compiled into a native so library for faster execution.

The api/hello.js file conforms to Vercel's serverless specification. It loads the WasmEdge runtime, starts the compiled WebAssembly program in WasmEdge, and passes the uploaded image data through STDIN. Note that api/hello.js runs the grayscale.so file compiled by api/pre.sh to get better performance.

const { spawn } = require('child_process');
const path = require('path');

module.exports = (req, res) => {
  // Start WasmEdge with the natively compiled grayscale.so program.
  const wasmedge = spawn(
    path.join(__dirname, 'WasmEdge-0.8.1-Linux/bin/wasmedge'),
    [path.join(__dirname, 'grayscale.so')]);

  // Collect the chunks that the program writes to STDOUT.
  let d = [];
  wasmedge.stdout.on('data', (data) => {
    d.push(data);
  });

  // When the program exits, return the processed image to the client.
  wasmedge.on('close', (code) => {
    let buf = Buffer.concat(d);
    res.setHeader('Content-Type', req.headers['image-type']);
    res.send(buf);
  });

  // Pass the uploaded image to the program through STDIN.
  wasmedge.stdin.write(req.body);
  wasmedge.stdin.end('');
}

That's it. Next, deploy the repo to Vercel to get a Jamstack application with a high-performance serverless backend based on Rust and WebAssembly.

Example 2: AI inference

The second demo application lets users upload an image and then calls a serverless function to identify the main object in the image.

It is in the same GitHub repo as the previous example, but in the tensorflow branch. Note: when you import the GitHub repo on the Vercel website, Vercel creates a preview URL for each branch. The tensorflow branch will have its own deployment URL.

The back-end serverless function for image classification is located in the api/functions/image-classification folder in the tensorflow branch. The src/main.rs file contains the source code of the Rust program. The Rust program reads image data from STDIN and then outputs text to STDOUT. It uses the WasmEdge Tensorflow API to run AI inference.

use std::io::{self, Read};

pub fn main() {
    // Step 1: Load the TFLite model
    let model_data: &[u8] = include_bytes!("models/mobilenet_v1_1.0_224/mobilenet_v1_1.0_224_quant.tflite");
    let labels = include_str!("models/mobilenet_v1_1.0_224/labels_mobilenet_quant_v1_224.txt");

    // Step 2: Read image from STDIN
    let mut buf = Vec::new();
    io::stdin().read_to_end(&mut buf).unwrap();

    // Step 3: Resize the input image for the tensorflow model
    let flat_img = wasmedge_tensorflow_interface::load_jpg_image_to_rgb8(&buf, 224, 224);

    // Step 4: AI inference
    let mut session = wasmedge_tensorflow_interface::Session::new(&model_data, wasmedge_tensorflow_interface::ModelType::TensorFlowLite);
    session.add_input("input", &flat_img, &[1, 224, 224, 3])
           .run();
    let res_vec: Vec<u8> = session.get_output("MobilenetV1/Predictions/Reshape_1");

    // Step 5: Find the food label that corresponds to the highest probability in res_vec
    // ... ...
    let mut label_lines = labels.lines();
    for _i in 0..max_index {
        label_lines.next();
    }

    // Step 6: Generate the output text
    let class_name = label_lines.next().unwrap().to_string();
    if max_value > 50 {
        println!("It {} a <a href='https://www.google.com/search?q={}'>{}</a> in the picture", confidence.to_string(), class_name, class_name);
    } else {
        println!("It does not appears to be any food item in the picture.");
    }
}

Use the cargo tool to build the Rust program into WebAssembly bytecode or native code.

$ cd api/functions/image-classification/

$ cargo build --release --target wasm32-wasi

Copy the build artifacts to the api folder.

$ cp target/wasm32-wasi/release/classify.wasm ../../

Similarly, the api/pre.sh script installs the WasmEdge runtime and its Tensorflow dependencies for this application. It also compiles the classify.wasm bytecode program into the classify.so native shared library during deployment.

The api/hello.js file conforms to the Vercel serverless specification. It loads the WasmEdge runtime, starts the compiled WebAssembly program in WasmEdge, and passes the uploaded image data through STDIN. Note that api/hello.js runs the classify.so file compiled by api/pre.sh to achieve better performance.

const { spawn } = require('child_process');
const path = require('path');

module.exports = (req, res) => {
  // Start the Tensorflow Lite build of WasmEdge with the compiled classify.so program.
  const wasmedge = spawn(
    path.join(__dirname, 'wasmedge-tensorflow-lite'),
    [path.join(__dirname, 'classify.so')],
    {env: {'LD_LIBRARY_PATH': __dirname}}
  );

  // Collect the chunks that the program writes to STDOUT.
  let d = [];
  wasmedge.stdout.on('data', (data) => {
    d.push(data);
  });

  // When the program exits, return the classification text to the client.
  wasmedge.on('close', (code) => {
    res.setHeader('Content-Type', `text/plain`);
    res.send(d.join(''));
  });

  // Pass the uploaded image to the program through STDIN.
  wasmedge.stdin.write(req.body);
  wasmedge.stdin.end('');
}

Now you can deploy the forked repo to Vercel, and you will get a Jamstack application that recognizes objects.
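Step 5 of the Rust listing for this example is elided in this article. As a rough illustration only, the following sketch shows one way such a step could find the highest score and map it to a phrase. It assumes that res_vec holds one quantized score (0 to 255) per label line; the helper names argmax and confidence_phrase, the thresholds, and the sample labels are all hypothetical and are not taken from the demo repo.

```rust
/// Return the index and value of the highest score, or None for an empty slice.
fn argmax(scores: &[u8]) -> Option<(usize, u8)> {
    let mut best: Option<(usize, u8)> = None;
    for (i, &score) in scores.iter().enumerate() {
        // Strictly greater, so the first of several equal maxima wins.
        if best.map_or(true, |(_, b)| score > b) {
            best = Some((i, score));
        }
    }
    best
}

/// Map a quantized score to a phrase. The thresholds are hypothetical.
fn confidence_phrase(score: u8) -> &'static str {
    if score > 200 {
        "is very likely"
    } else if score > 125 {
        "is likely"
    } else {
        "could be"
    }
}

fn main() {
    // Made-up scores and labels, standing in for the model output and labels file.
    let res_vec: Vec<u8> = vec![3, 12, 240, 7];
    let labels = "background\ntench\ngoldfish\nshark";

    if let Some((max_index, max_value)) = argmax(&res_vec) {
        // The label on line max_index corresponds to the winning score.
        let class_name = labels.lines().nth(max_index).unwrap_or("unknown");
        println!("It {} a {} in the picture", confidence_phrase(max_value), class_name);
    }
}
```

Using lines().nth(max_index) is equivalent to the loop in the listing that skips max_index label lines before reading the next one.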

Just change the Rust function in the template, and you can deploy your own high-performance Jamstack application!
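Both examples follow the same contract: the Rust program reads the request body from STDIN and writes the response to STDOUT. A minimal sketch of a custom function under that contract might look like the following. The process function and its uppercase transformation are placeholders invented for illustration; replace them with your own logic.

```rust
use std::io::{self, Read, Write};

/// Hypothetical transformation: uppercase ASCII letters in the input.
/// Replace this with your own logic.
fn process(input: &[u8]) -> Vec<u8> {
    input.to_ascii_uppercase()
}

fn main() -> io::Result<()> {
    // Read the whole request body from STDIN.
    let mut input = Vec::new();
    io::stdin().read_to_end(&mut input)?;

    // Transform the data and write the response to STDOUT.
    let output = process(&input);
    let mut stdout = io::stdout();
    stdout.write_all(&output)?;
    stdout.flush()
}
```

Keeping the transformation in its own function makes it easy to unit test natively before compiling to the wasm32-wasi target.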

Outlook

Running WasmEdge from Vercel's current serverless container is an easy way to add high-performance functions to Vercel applications.

If you use WasmEdge to develop interesting Vercel functions or applications, you can add WeChat h0923xw to receive a small gift.

Looking to the future, a better way is to use WasmEdge itself as the container, instead of starting WasmEdge from Docker and Node.js as we do today. In this way, we can run serverless functions more efficiently. WasmEdge is already compatible with Docker tools. If you are interested in joining WasmEdge and CNCF for this exciting work, please join our channel.
