The problem

After a service is deployed, interface call timeouts often occur for a period of time, especially when traffic is relatively high. Take one of our services as an example. The whole service exposes only one interface. The function is computationally intensive and passes through a series of complex processing steps. The full data set is cached in Redis when an instance starts, so there is little reading or writing of the underlying database at runtime, and a Guava local cache is added on top. As a result the service is very fast: response time is stable at 1-3 ms on eight 2-core, 4 GB machines spread across two clusters in different locations, and the usual QPS stays at around 1300. This seemingly perfect service later became a headache. Every release deployment caused "jitter": response time soared to the level of seconds, while a non-primary-link service like this one has strict response time requirements, generally no more than 50-100 ms.

Troubleshooting process

Here is a brief description of how the problem was solved; the specific steps are not covered in detail.

1. First attempt

The initial guess was that some initialization and caching work takes place for a while after the service starts, or that the service and the serializer need to build serialization models for the request and response, either of which would slow requests down. So we tried warming up the service before traffic was switched in.

2. Second attempt

The monitoring metrics show that when the service is deployed the CPU soars, memory stays normal, and the number of active threads soars. It is not hard to see that the CPU spike is due to the surge in HTTP threads. At this point we set aside the question of what the CPU was actually busy with and tried to fix the problem by upgrading the machine configuration.

3. Third attempt

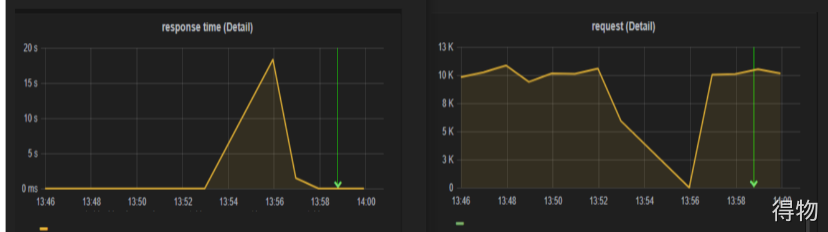

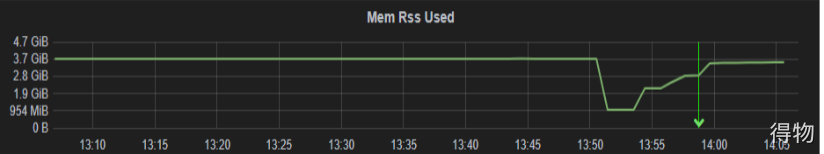

Getting this far means the previous attempts all ended in failure. With no leads left, I went through the monitoring metrics carefully and found one more abnormal metric, as shown in the following figure:

The JVM's just-in-time compilation time reached 40 s+, which drove the CPU up and increased the thread count, so it is not hard to understand why interface timeouts finally occurred. With the cause found, we analyze it in three steps: what is it, why does it happen, and how do we fix it?

What is a just-in-time compiler

1. Overview
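The cumulative JIT compilation time can also be read from inside a running JVM through the standard `java.lang.management` API. The following is a minimal sketch, not the monitoring system used for the figures in this article; the class name `JitTimeProbe` is made up for illustration.

```java
import java.lang.management.CompilationMXBean;
import java.lang.management.ManagementFactory;

public class JitTimeProbe {

    /**
     * Returns the approximate accumulated JIT compilation time in milliseconds,
     * or -1 if this JVM has no compiler or does not monitor compilation time.
     */
    static long totalJitMillis() {
        CompilationMXBean bean = ManagementFactory.getCompilationMXBean();
        if (bean == null || !bean.isCompilationTimeMonitoringSupported()) {
            return -1L;
        }
        return bean.getTotalCompilationTime();
    }

    public static void main(String[] args) {
        System.out.println("JIT compile time so far (ms): " + totalJitMillis());
    }
}
```

Sampling this value shortly after startup and again a few minutes later gives a rough picture of how much compilation work the first wave of traffic triggers.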

Programming languages are divided into high-level and low-level languages. Machine language and assembly language are low-level languages, while humans generally write in high-level languages such as C, C++, and Java. Machine language is the lowest level and can be executed directly by the computer. Assembly language is translated into machine code by an assembler and then executed. High-level languages are executed in one of three ways: compiled execution, interpreted execution, or a mixed mode of compilation and interpretation. Java was originally defined as an interpreted language, but mainstream virtual machines later added just-in-time compilers, so Java runs in a mixed mode. The source code is first compiled into .class bytecode files by javac; the bytecode is then either interpreted by the Java interpreter or compiled into machine code by the just-in-time compiler (hereafter JIT) and executed.

JIT is not a required part of the JVM. The JVM specification neither requires a JIT to exist nor says how to implement one. Its main purpose is to improve execution performance. According to the 80/20 rule, 20% of the code takes up 80% of the resources, and we call this 20% "hot spot code" (hot code). Hot code is compiled by the JIT into machine code for the local platform and optimized at various levels; the next time it runs, it is not compiled again and the compiled machine code is executed directly. Code that is not hot is executed by the comparatively slow interpreter, which translates the code into binary instructions the machine can recognize one statement at a time, executing each statement as it is translated.

2. Interpreter and Compiler Comparison

- Interpreter: Executes while interpreting, which saves compilation time. It generates no intermediate files, does not need to compile all the code, and can start executing immediately.

- Compiler: Compilation takes more time and the compiled code can grow to several times the original size, but the compiled code runs faster and can be reused.

When we say the JIT is faster than the interpreter, this applies only to hot code: compiled hot code executes faster than interpreted code. For code that is executed only once, the JIT is slower than the interpreter. Each has its strengths. The interpreter has the advantage when a service must restart quickly or memory is tightly limited. Once the program has been running for a while, the compiler gradually takes over, compiling more and more hot code into native code to improve efficiency. The interpreter also serves as an "escape hatch" for compiler optimizations: when an assumption behind an aggressive optimization turns out not to hold, execution can fall back to the interpreter through deoptimization. The two cooperate and complement each other.

3. Types of just-in-time compilers

The HotSpot virtual machine has two built-in JIT compilers, the Client Compiler and the Server Compiler, referred to as C1 and C2. As mentioned earlier, the JVM runs in a mixed mode of interpretation and compilation. Which compiler the program uses depends on the operating mode of the virtual machine, which the virtual machine selects automatically according to its own version and the hardware of the host. Users can also use the "-client" or "-server" parameter to specify which compiler to use.

C1 compiler: A simple and fast compiler that focuses mainly on local optimizations. Its optimizations are relatively simple, so compilation time is short. It suits programs that run briefly or need good startup performance, such as GUI applications.

C2 compiler: A compiler tuned for long-running server-side applications. Its optimizations are relatively complex, so compilation takes longer, but the generated code executes more efficiently. It suits programs that run for a long time or need peak performance.

Java 7 introduced tiered compilation, which combines the startup performance of C1 with the peak performance of C2. Tiered compilation divides JVM execution into 5 levels:

Level 0: The program is interpreted. Profiling is enabled by default; if it is not enabled, level 1 compilation can be triggered.

Level 1: C1 compilation. Bytecode is compiled into native code with simple, reliable optimizations, and profiling is not enabled.

Level 2: Also C1 compilation, with limited profiling: only method invocation counts and loop back-edge execution counts are collected.

Level 3: Also C1 compilation, with full profiling.

Level 4: C2 compilation. Bytecode is also compiled into native code, but with optimizations that take longer to compile, including aggressive optimizations based on profiling information whose assumptions may not always hold.

Tiered compilation (-XX:+TieredCompilation) is enabled by default in Java 8, and the -client and -server settings no longer take effect.

If you only want to use C1, keep tiered compilation enabled and add the parameter "-XX:TieredStopAtLevel=1".

If you only want to use C2, use the parameter "-XX:-TieredCompilation" to turn off tiered compilation.

If you only want interpreter mode, use "-Xint"; the JIT then takes no part in execution at all.

If you only want JIT compilation mode, use "-Xcomp"; the interpreter then serves only as the compiler's "escape hatch".

You can check the mode the current system uses in the output of the java -version command, which reports it as, for example, "mixed mode".
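The same information is available from inside the process. The sketch below reads the standard `java.vm.info` system property (on HotSpot it typically contains text such as "mixed mode") and lists any compilation-related flags passed at startup. The class name and the choice of which flags to filter are illustrative assumptions.

```java
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.stream.Collectors;

public class CompilationModeProbe {

    /** Execution mode reported by the VM, e.g. "mixed mode" on HotSpot. */
    static String vmInfo() {
        return System.getProperty("java.vm.info", "unknown");
    }

    /** Startup arguments that influence interpreter/JIT selection. */
    static List<String> compilationFlags() {
        return ManagementFactory.getRuntimeMXBean().getInputArguments().stream()
                .filter(a -> a.contains("TieredCompilation")
                        || a.contains("TieredStopAtLevel")
                        || a.equals("-Xint")
                        || a.equals("-Xcomp"))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println("VM info: " + vmInfo());
        System.out.println("Compilation flags: " + compilationFlags());
    }
}
```

Running it once with no flags and once with, say, `-Xint` shows how the reported mode changes.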

4. Hot spot detection

The process by which the just-in-time compiler decides whether a piece of code is hot code is called hot spot detection. There are two approaches: sampling-based and counter-based.

Sampling-based hot spot detection: The virtual machine periodically checks the top of each thread's stack. If a method shows up there frequently, it is hot code. This approach is simple and efficient and makes it easy to obtain the call relationships between methods, but it cannot measure a method's hotness precisely and is easily disturbed by thread blocking or other external factors.

Counter-based hot spot detection: The virtual machine keeps a counter for each method or code block and counts how many times the code executes. Code whose count exceeds a certain threshold is considered hot. This approach is more troublesome to implement, needs a counter maintained for every method, and cannot directly obtain the call relationships between methods, but it measures hotness accurately.

The HotSpot virtual machine uses counter-based hot spot detection by default. Hot code falls into two categories, methods that are called many times and loop bodies that are executed many times, so there are two kinds of counters: method call counters and back-edge counters. Note that in both cases the unit that is finally compiled is the whole method. For loops, although the loop body is what triggers compilation, the compiler still compiles the entire method. Because this compilation happens while the method is still executing, it is called On-Stack Replacement (OSR) compilation.

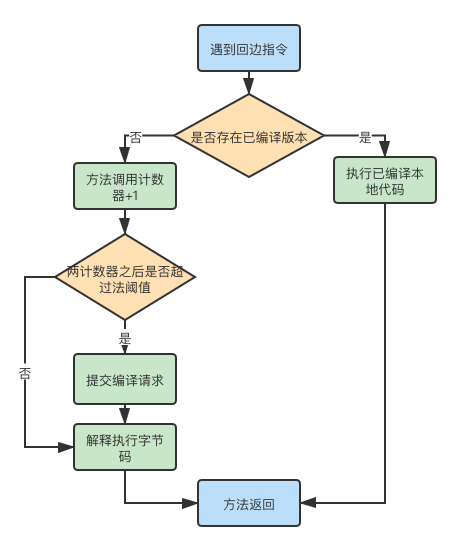

Method call counter: Counts the number of times a method is called. The default threshold is 1500 invocations in C1 mode and 10000 in C2 mode, and it can be set with -XX:CompileThreshold. With tiered compilation, the threshold specified by -XX:CompileThreshold is ignored, and the threshold is adjusted dynamically according to the number of methods waiting to be compiled and the number of compiler threads. When the sum of the method call counter and the back-edge counter exceeds the method call counter threshold, a JIT compilation request is triggered. The execution engine does not wait synchronously for compilation to finish; it keeps executing the bytecode in the interpreter. Once the compilation request completes, the system switches the method's call entry to the new address, and the next call uses the compiled machine code directly.

Unless configured otherwise, the method call counter does not record an absolute count but a relative execution frequency, that is, the number of calls within a period of time. If that period elapses and the count still has not reached the threshold, the method's count is halved. This behavior is called counter decay, and the period is called the counter half-life time of the method. You can turn off decay with the virtual machine parameter -XX:-UseCounterDecay, or set the half-life period with -XX:CounterHalfLifeTime. Note that decay is carried out as a side task while the virtual machine performs garbage collection.
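To watch methods cross the threshold, a small program with one obviously hot method is enough. The sketch below is illustrative only (the class and method names are made up). Running it with the HotSpot diagnostic flag `-XX:+PrintCompilation` prints a line each time a method is compiled, and the long-running loop in `sumOfSquares` is a candidate for OSR compilation.

```java
public class HotMethodDemo {

    // Tiny method that is called often enough to become hot.
    static int square(int x) {
        return x * x;
    }

    // The loop's back edges and the repeated calls to square() both add heat.
    static long sumOfSquares(int n) {
        long total = 0;
        for (int i = 0; i < n; i++) {
            total += square(i % 100);
        }
        return total;
    }

    public static void main(String[] args) {
        // Run as: java -XX:+PrintCompilation HotMethodDemo
        System.out.println(sumOfSquares(1_000_000)); // prints 3283500000
    }
}
```

Which compile events appear, and at which level, depends on the JVM version and flags.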
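The interaction between the threshold and decay can be pictured with a toy model. This is a deliberately simplified sketch of the idea described above, not HotSpot's actual implementation; the class `DecayingCounterSketch` and its methods are hypothetical.

```java
public class DecayingCounterSketch {
    private final int threshold;
    private int count;

    DecayingCounterSketch(int threshold) {
        this.threshold = threshold;
    }

    /** Records one call; returns true once the count exceeds the threshold. */
    boolean recordCall() {
        count++;
        return count > threshold;
    }

    /** Models one half-life period passing without the threshold being reached. */
    void decay() {
        count /= 2;
    }

    int count() {
        return count;
    }

    public static void main(String[] args) {
        DecayingCounterSketch counter = new DecayingCounterSketch(1500);
        for (int i = 0; i < 1000; i++) {
            counter.recordCall();
        }
        counter.decay(); // the method was not hot enough in this period
        System.out.println("count after decay: " + counter.count()); // prints 500
    }
}
```

In this model a method called steadily but slowly never reaches the threshold, because each decay wipes out half of the accumulated count.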

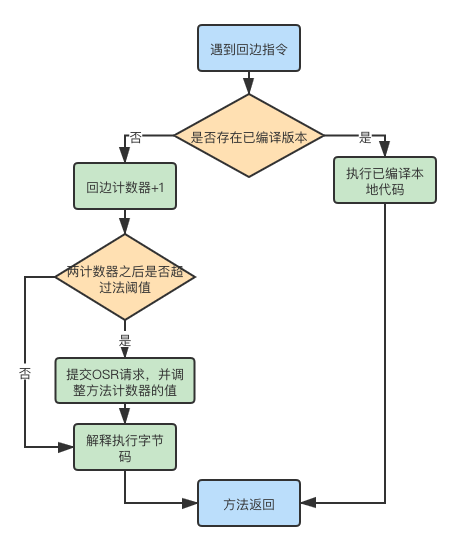

Back-edge counter: Counts the number of times the loop body code in a method executes. A bytecode instruction that makes control flow jump backward is called a back edge. Unlike the method call counter, the back-edge counter has no decay; it records the absolute number of loop executions in the method. When the counter overflows, the method call counter is also set to the overflow state, so that compilation is triggered the next time the method is entered. Without tiered compilation, the default threshold is 13995 for C1 and 10700 for C2. HotSpot provides -XX:BackEdgeThreshold for setting the back-edge counter threshold, but current virtual machines actually use -XX:OnStackReplacePercentage to adjust it indirectly.

In Client mode: threshold = method call counter threshold (CompileThreshold) * OSR ratio (OnStackReplacePercentage) / 100. By default CompileThreshold is 1500 and OnStackReplacePercentage is 933, so the back-edge counter threshold is 13995.

In Server mode: threshold = method call counter threshold (CompileThreshold) * (OSR ratio (OnStackReplacePercentage) - interpreter profiling ratio (InterpreterProfilePercentage)) / 100. By default CompileThreshold is 10000, OnStackReplacePercentage is 140, and InterpreterProfilePercentage is 33, so the back-edge counter threshold is 10700.

With tiered compilation, the threshold specified by -XX:OnStackReplacePercentage is also ignored, and the threshold is adjusted dynamically according to the number of methods waiting to be compiled and the number of compiler threads.
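The two formulas above can be checked with a few lines of arithmetic. The sketch below simply evaluates them with the default values quoted in the text; the class and method names are illustrative.

```java
public class BackEdgeThresholds {

    /** Client mode: CompileThreshold * OnStackReplacePercentage / 100. */
    static int clientThreshold(int compileThreshold, int osrPercentage) {
        return compileThreshold * osrPercentage / 100;
    }

    /** Server mode: CompileThreshold * (OnStackReplacePercentage - InterpreterProfilePercentage) / 100. */
    static int serverThreshold(int compileThreshold, int osrPercentage, int interpreterProfilePercentage) {
        return compileThreshold * (osrPercentage - interpreterProfilePercentage) / 100;
    }

    public static void main(String[] args) {
        System.out.println(clientThreshold(1500, 933));      // prints 13995
        System.out.println(serverThreshold(10000, 140, 33)); // prints 10700
    }
}
```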

Why just-in-time compilation is time-consuming

We now know that the CPU spike during deployment happens because JIT compilation is triggered. JIT compilation runs in the background while the user program is running. This is controlled by the BackgroundCompilation parameter, whose default value is true. Setting it to false is very dangerous: business threads then wait for JIT compilation to finish, which can feel like an eternity.

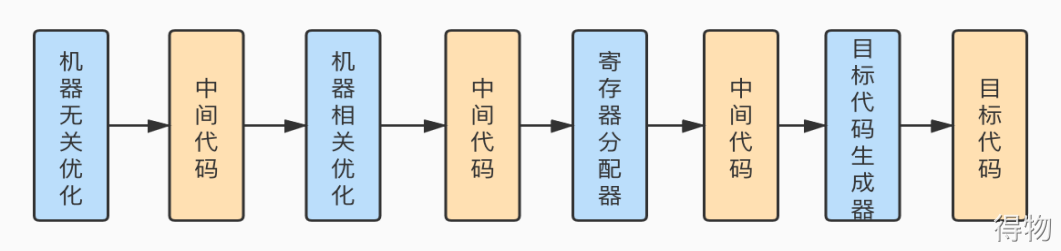

So what does the compiler do while it runs in the background? The process is roughly divided into three stages.

The first stage: A platform-independent front end builds a high-level intermediate representation (HIR) from the bytecode. HIR represents values in static single assignment (SSA) form, which makes some optimizations performed during and after HIR construction easier to implement. Before this, the compiler completes some basic optimizations on the bytecode, such as method inlining and constant propagation.

The second stage: A platform-dependent back end generates a low-level intermediate representation (LIR) from the HIR. Before this, further optimizations are completed on the HIR, such as null check elimination and range check elimination.

The third stage: The platform-dependent back end allocates registers on the LIR using the linear scan algorithm (Linear Scan Register Allocation), performs peephole optimization on the LIR, and then generates machine code.

The stages above describe the general process of the C1 compiler. The C2 compiler performs all the classic optimizations, such as dead code elimination, loop unrolling, and escape analysis.

How to solve the problem

Knowing the root cause, we only need to apply the right remedy. Our ultimate goal is for deployment not to affect upstream callers, so JIT compilation must not overlap with the handling of user traffic. We can let hot code trigger JIT compilation before upstream traffic is switched in, which is what we call warm-up. The implementation is relatively simple and uses the method provided by Spring.

If most of the service is hot code, you can add "-XX:-TieredCompilation -server" to the JVM startup script to turn off tiered compilation and enable the C2 compiler, then call all interfaces from the warm-up code so that JIT compilation happens before traffic arrives. Note that not all code is JIT-compiled: the memory for storing machine code is limited, and if a method is too large, compilation is not triggered even when its counters reach the threshold.

Most of our services follow the 80/20 rule, so we chose to keep tiered compilation. The warm-up method then simulates calls to the hot code. This requires working out the threshold that triggers JIT compilation in tiered mode, and the threshold can also be adjusted through parameters.
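The warm-up idea can be sketched in plain Java. This is a minimal illustration under stated assumptions, not the Spring-based implementation used in the service: `handle` is a hypothetical stand-in for the real interface, the `ready` flag stands in for whatever mechanism tells the load balancer to send traffic, and the iteration count of 20,000 is an arbitrary guess rather than a computed threshold.

```java
public class WarmUpSketch {

    // Written on every call so the JIT cannot discard the work as dead code.
    static volatile int sink;

    // Hypothetical readiness flag; traffic should only arrive once it is true.
    static volatile boolean ready = false;

    // Hypothetical stand-in for the service's compute-heavy interface.
    static int handle(int request) {
        int acc = request;
        for (int i = 0; i < 50; i++) {
            acc = acc * 31 + i;
        }
        return acc;
    }

    /** Calls the handler with synthetic requests; returns the number of calls made. */
    static int warmUp(int iterations) {
        int calls = 0;
        for (int i = 0; i < iterations; i++) {
            sink = handle(i);
            calls++;
        }
        return calls;
    }

    public static void main(String[] args) {
        int calls = warmUp(20_000); // assumed count; tune against the real thresholds
        ready = true;               // only now accept upstream traffic
        System.out.println("warm-up calls: " + calls + ", ready: " + ready);
    }
}
```

In a real service the synthetic requests should exercise the same code paths as production traffic; otherwise the methods that matter stay cold and the first real requests still pay the compilation cost.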

Text|Shane

Pay attention to Dewu Technology, and work hand in hand to the cloud of technology
