This article is based on a talk given by Chen Shiyu (Senior Data Engineer at Zilliz) at "ECUG Meetup Phase 1 | 2021 Audio and Video Technology Best Practices · Hangzhou Station" on June 26, 2021. The full text is more than 4,200 characters long and is structured as follows:

- Unstructured data and AI

- The difference between vectors and data

- Analyzing the Milvus vector database, with audio as a case study

- Analyzing the similarity search process, with "search by image" as an example

- Audio similarity retrieval process

- Video similarity retrieval process

- Three cases of audio and video similarity retrieval

To get the full version of the speaker's slides, add the ECUG assistant on WeChat (WeChat ID: ECUGCON) with the note "ECUG PPT". Talks by the other speakers will also be released, so stay tuned.

The following is the text of the talk:

Good afternoon, everyone! I am very happy to take part in the ECUG Meetup hosted by ECUG and Qiniu Cloud and to discuss best practices in audio and video technology with you. Today I will talk about the technical implementation of audio and video similarity retrieval.

First, let me introduce myself. My name is Chen Shiyu. I am a data engineer at Zilliz, working mainly on data processing and model applications.

A few words about our company:

- Zilliz is short for "Zillion of Zillions". It is a technology startup based in Shanghai that focuses on developing data science software built on heterogeneous computing.

- Open source is a key part of our strategy; we hope to grow our software business through open source.

- Our vision is to redefine data science, provide data-related technologies for new fields, new scenarios, and new needs, and help people better discover the value contained in data.

- Our company's main project is Milvus, an open-source vector database.

Unstructured data and AI

All of you are experts in the audio and video field, so let's briefly review some characteristics of audio and video. A video is essentially a sequence of frames: like a comic strip, it is made up of pictures, frame by frame.

Another important property of video is the frame rate, which is the frequency at which consecutive frames are shown on the display, measured in frames per second. Then there is audio, which refers to any sound or noise within the range of human hearing. Sound is characterized by its amplitude and frequency, and audio is usually stored in formats such as WAV and MP3. Video formats that carry audio include MP4, RMVB, AVI, and so on.

The pictures, audio, and video mentioned here are all unstructured data. Data is generally divided into three categories:

- The first category is structured data, including numbers, dates, strings, and so on.

- The second category is semi-structured data, including text with a certain format, such as various system logs.

- The third category is unstructured data, such as pictures, videos, speech, and natural language, which computers cannot easily understand.

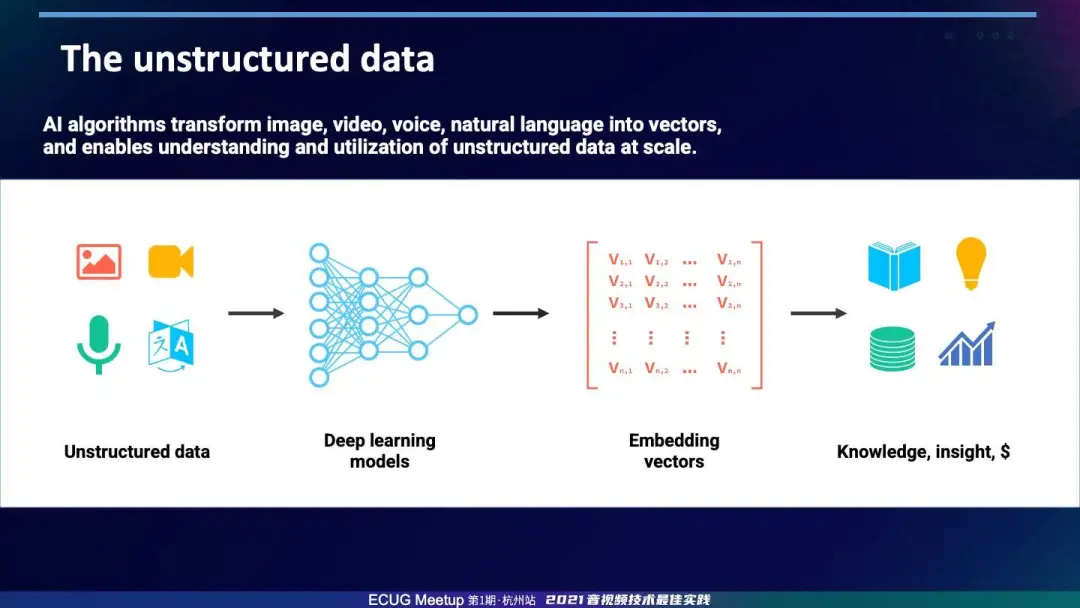

Relational databases and traditional big data technologies are designed for structured data, while semi-structured data is handled by text-based search engines. Unstructured data, however, is generated every day and accounts for 80% of all data, yet it long lacked effective analysis methods. Unstructured data has only been put to use with the rise of artificial intelligence and deep learning in recent years.

The appeal of deep learning models is that they can convert unstructured information, which computers find hard to process, into feature information that machines can easily understand. Deep learning models extract feature vectors from unstructured data such as pictures, videos, speech, and natural language. Computing on these feature vectors then makes it possible to analyze the unstructured data.

The difference between vectors and data

AI technology lets us convert unstructured data into vectors, but how should we then compute on those vectors? Can a traditional relational database do it? After all, a vector looks like a number; more precisely, a vector is a group of numbers.

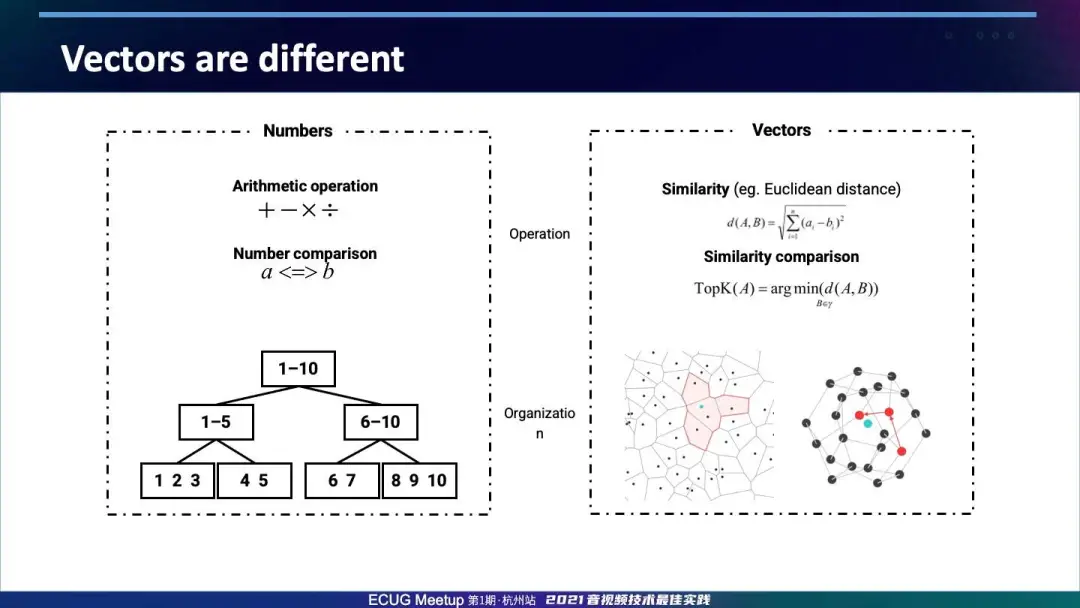

So how does a vector differ from a number? I think there are two main differences:

First, the common operations differ. The common operations on numbers are addition, subtraction, multiplication, and division. The most common operation on vectors is computing similarity, for example the Euclidean distance between two vectors. The Euclidean distance formula shown on the slide makes it clear that computing on vectors is much more complex than ordinary arithmetic on numbers.
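For two n-dimensional vectors x and y, the Euclidean distance is d(x, y) = sqrt((x_1 - y_1)^2 + ... + (x_n - y_n)^2). The minimal Python sketch below implements this formula. It also includes a brute-force nearest-neighbor search that compares a query against every stored vector. The sketch shows why vector comparison is costly: every comparison touches every dimension, and a naive search touches every vector. The function names are our own illustration.

```python
import math
from typing import List, Sequence, Tuple


def euclidean_distance(x: Sequence[float], y: Sequence[float]) -> float:
    """d(x, y) = sqrt(sum_i (x_i - y_i)^2); both vectors must have the same dimension."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same dimension")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def brute_force_top_k(
    query: Sequence[float], vectors: List[Sequence[float]], k: int
) -> List[Tuple[int, float]]:
    """Return (index, distance) pairs for the k stored vectors closest to the query."""
    scored = [(i, euclidean_distance(query, v)) for i, v in enumerate(vectors)]
    scored.sort(key=lambda pair: pair[1])  # smaller distance = more similar
    return scored[:k]


if __name__ == "__main__":
    library = [[0.0, 0.0], [3.0, 4.0], [1.0, 1.0]]
    print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
    print(brute_force_top_k([0.9, 1.2], library, k=2))
```

The brute-force scan is exact but grows linearly with the size of the collection. The ANN indexes discussed next avoid this linear growth.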

Second, data and vectors are indexed differently. Two numbers can be compared by value, so a B-tree index can be built over numeric values. Two vectors, however, cannot be compared by value; we can only compute the similarity between them. Vector indexes are therefore usually based on approximate nearest neighbor (ANN) algorithms. The slide shows two kinds of ANN index: cluster-based indexes and graph-based indexes.
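Here is a toy sketch of a cluster-based ANN index, in the spirit of an inverted-file (IVF) index. Vectors are grouped around centroids with a few rounds of k-means. A query then scans only the `nprobe` nearest clusters instead of the whole collection, which is why the result is approximate. This is our simplified illustration, not Milvus's implementation. The class name, the naive initialization (the first `nlist` vectors serve as starting centroids), and the parameter names `nlist` and `nprobe` are assumptions chosen for clarity. Graph-based indexes take a different approach and are not shown.

```python
import math
from typing import Dict, List, Sequence, Tuple


def l2(x: Sequence[float], y: Sequence[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def mean(points: List[Sequence[float]]) -> List[float]:
    dim = len(points[0])
    return [sum(p[d] for p in points) / len(points) for d in range(dim)]


class ToyIVFIndex:
    """Cluster-based ANN index: partition vectors by nearest centroid, probe a few partitions."""

    def __init__(self, nlist: int, iterations: int = 10):
        self.nlist = nlist  # number of clusters (partitions)
        self.iterations = iterations  # k-means refinement rounds
        self.centroids: List[List[float]] = []
        self.lists: Dict[int, List[Tuple[int, Sequence[float]]]] = {}

    def _nearest_centroids(self, v: Sequence[float], n: int) -> List[int]:
        order = sorted(range(len(self.centroids)), key=lambda c: l2(v, self.centroids[c]))
        return order[:n]

    def build(self, vectors: List[Sequence[float]]) -> None:
        # Naive initialization: the first nlist vectors become the starting centroids.
        self.centroids = [list(v) for v in vectors[: self.nlist]]
        for _ in range(self.iterations):
            groups: Dict[int, List[Sequence[float]]] = {c: [] for c in range(len(self.centroids))}
            for v in vectors:
                groups[self._nearest_centroids(v, 1)[0]].append(v)
            # Move each centroid to the mean of its members (keep it if the cluster is empty).
            self.centroids = [mean(groups[c]) if groups[c] else self.centroids[c] for c in groups]
        # Final assignment: each cluster keeps an inverted list of (id, vector) pairs.
        self.lists = {c: [] for c in range(len(self.centroids))}
        for vid, v in enumerate(vectors):
            self.lists[self._nearest_centroids(v, 1)[0]].append((vid, v))

    def search(self, query: Sequence[float], k: int, nprobe: int = 1) -> List[Tuple[int, float]]:
        # Only the nprobe closest clusters are scanned, so the result is approximate.
        candidates = [
            (vid, l2(query, v))
            for c in self._nearest_centroids(query, nprobe)
            for vid, v in self.lists[c]
        ]
        candidates.sort(key=lambda pair: pair[1])
        return candidates[:k]


if __name__ == "__main__":
    data = [[0.0, 0.0], [10.0, 10.0], [0.5, 0.2], [9.5, 10.3], [0.1, 0.4], [10.2, 9.8]]
    index = ToyIVFIndex(nlist=2)
    index.build(data)
    print(index.search([9.9, 10.0], k=2, nprobe=1))
```

Raising `nprobe` scans more clusters. This trades speed for accuracy, and with `nprobe` equal to the number of clusters the search becomes exhaustive.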

Because of these significant differences, traditional databases and big data technologies struggle to meet the requirements of vector analysis. The algorithms they support and the scenarios they are designed for are different.

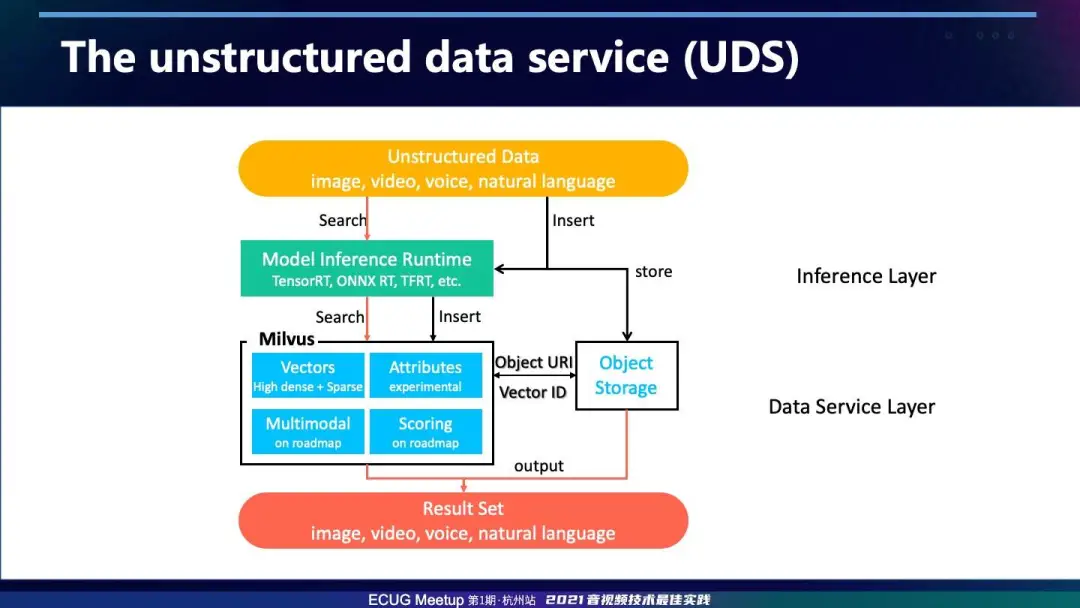

Therefore, we need a dedicated unstructured data service.

As the figure shows, both the input at the top layer and the output at the bottom layer are unstructured data. AI technology mainly operates in the two middle layers: model inference (green) and data services (blue).

The task of model inference is to turn unstructured data into feature vectors. Model training is in fact very costly, but the good news is that the industry already has mature inference projects, such as NVIDIA TensorRT and Microsoft ONNX Runtime.

For the data service layer, however, there is currently no comprehensive solution. Some people store vectors in traditional relational databases. Others store them in HDFS and analyze them with Spark, and still others use ANN algorithm libraries; everyone in this field is still experimenting. The main challenge is managing and analyzing vectors efficiently, because high-speed analysis of massive amounts of unstructured data is still very costly.

Analyzing the Milvus vector database, with audio as a case study

Our answer to this challenge is an unstructured data service powered by the Milvus vector database. It consists of four parts:

- The first part is embedding similarity search. It covers both high-dimensional dense vectors from deep learning scenarios and sparse vectors from traditional machine learning scenarios.

- The second part is attribute (scalar) data, such as labels described with structured data like strings. Combining attributes with vectors enables hybrid queries.

- The third part is support for multiple models. In practice many models are in use, each producing different vectors. An entity concept is therefore needed to tie together the vectors that different models produce.

- The fourth part is a fused scoring mechanism. For example, multimodal search introduces several models, and their results must be merged into a new scoring mechanism to analyze unstructured data comprehensively.
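The sketch below shows roughly how the four parts fit together. It is purely illustrative and does not use the Milvus API. Each entity carries a scalar attribute (`label`) and one vector per model (here `image_vec` and `audio_vec`). A hybrid (mixed) query first filters on the attribute, then scores each remaining entity per vector field, and finally fuses the per-field scores with a weighted sum. The field names, the use of cosine similarity, and the weighted-sum fusion rule are our assumptions. The talk does not specify how Milvus fuses scores.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


def cosine(x: Sequence[float], y: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm else 0.0


@dataclass
class Entity:
    """One object (e.g. a video) with a scalar attribute and one vector per model."""
    entity_id: int
    label: str  # attribute / scalar data
    vectors: Dict[str, List[float]]  # field name -> embedding produced by one model


def hybrid_search(
    entities: List[Entity],
    label: str,
    queries: Dict[str, List[float]],
    weights: Dict[str, float],
    k: int,
) -> List[Tuple[int, float]]:
    """Filter by attribute, score each vector field, then fuse the scores with a weighted sum."""
    results = []
    for e in entities:
        if e.label != label:  # attribute filter: the hybrid (mixed) query part
            continue
        fused = sum(weights[f] * cosine(q, e.vectors[f]) for f, q in queries.items())
        results.append((e.entity_id, fused))
    results.sort(key=lambda pair: pair[1], reverse=True)  # higher fused score = better
    return results[:k]


if __name__ == "__main__":
    entities = [
        Entity(1, "sports", {"image_vec": [1.0, 0.0], "audio_vec": [0.0, 1.0]}),
        Entity(2, "sports", {"image_vec": [0.0, 1.0], "audio_vec": [0.0, 1.0]}),
        Entity(3, "music", {"image_vec": [1.0, 0.0], "audio_vec": [0.0, 1.0]}),
    ]
    query = {"image_vec": [1.0, 0.0], "audio_vec": [0.0, 1.0]}
    print(hybrid_search(entities, "sports", query, {"image_vec": 0.7, "audio_vec": 0.3}, k=2))
```

Keeping one vector per model on the same entity is what makes the fusion step possible. The vectors are never concatenated; only their scores are combined.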

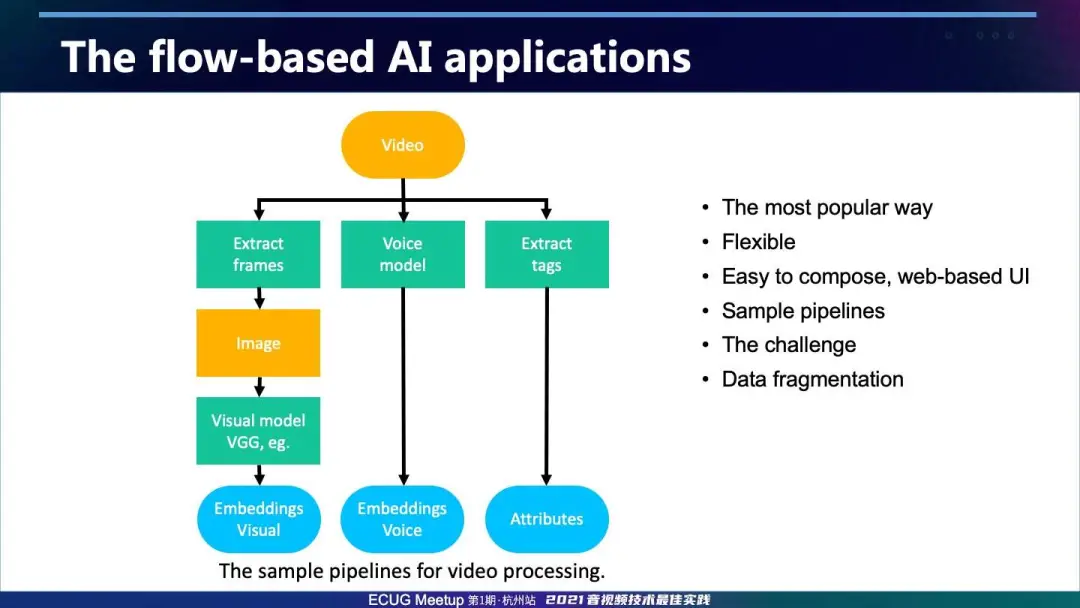

Next, here is an example of audio and video processing, which is also a very typical stream-based AI application. To analyze a video, for example, we can build several processing flows, usually called pipelines:

- The pipeline on the left captures video frames and extracts image features from them, here using VGG, a popular model in computer vision. The result is a feature vector for each frame, which enables analysis of the images in the video.

- The middle pipeline processes the sound in the video and generates audio feature vectors.

- The pipeline on the right automatically tags the video with attributes. If you have other requirements, you can create a new pipeline for them.

For other scenarios, I can add more pipelines. AI applications built this way are very flexible: new capabilities are added by extending the set of pipelines. They are also easy to deploy, and pipelines can even be added and modified through a web-based interface. Because the work is split into separate pipelines, each pipeline also stays relatively simple.
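The pipeline idea can be sketched as a registry of named pipelines. Each pipeline is an ordered list of operators, so adding a scenario means registering another pipeline rather than changing existing ones. The registry class and all operators below are hypothetical stand-ins. The operators return placeholder values instead of running VGG or an audio model, so the sketch runs without any model dependencies.

```python
from typing import Any, Callable, Dict, List

Operator = Callable[[Any], Any]


class PipelineRegistry:
    """Named pipelines; each pipeline is an ordered list of operators."""

    def __init__(self) -> None:
        self._pipelines: Dict[str, List[Operator]] = {}

    def register(self, name: str, operators: List[Operator]) -> None:
        self._pipelines[name] = operators

    def run(self, name: str, data: Any) -> Any:
        for op in self._pipelines[name]:
            data = op(data)  # the output of one step feeds the next
        return data

    def run_all(self, data: Any) -> Dict[str, Any]:
        # Every registered pipeline processes the same input independently.
        return {name: self.run(name, data) for name in self._pipelines}


# Hypothetical placeholder operators; real ones would decode media and run models.
def extract_frames(video: Dict[str, Any]) -> List[str]:
    return [f"{video['name']}#frame{i}" for i in range(video["num_frames"])]


def embed_frames(frames: List[str]) -> List[List[float]]:
    # Placeholder "embedding": not a real image feature.
    return [[float(len(f)), float(i)] for i, f in enumerate(frames)]


def extract_audio(video: Dict[str, Any]) -> str:
    return f"{video['name']}#audio"


def embed_audio(track: str) -> List[float]:
    return [float(len(track))]  # placeholder "embedding"


def tag_video(video: Dict[str, Any]) -> List[str]:
    return ["long"] if video["num_frames"] > 100 else ["short"]


registry = PipelineRegistry()
registry.register("image", [extract_frames, embed_frames])
registry.register("audio", [extract_audio, embed_audio])
registry.register("tags", [tag_video])

if __name__ == "__main__":
    print(registry.run_all({"name": "clip", "num_frames": 3}))
```

Supporting a new scenario is one more `registry.register(...)` call, which mirrors the point that each pipeline stays small and independent.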

Analyzing the similarity search process, with "search by image" as an example

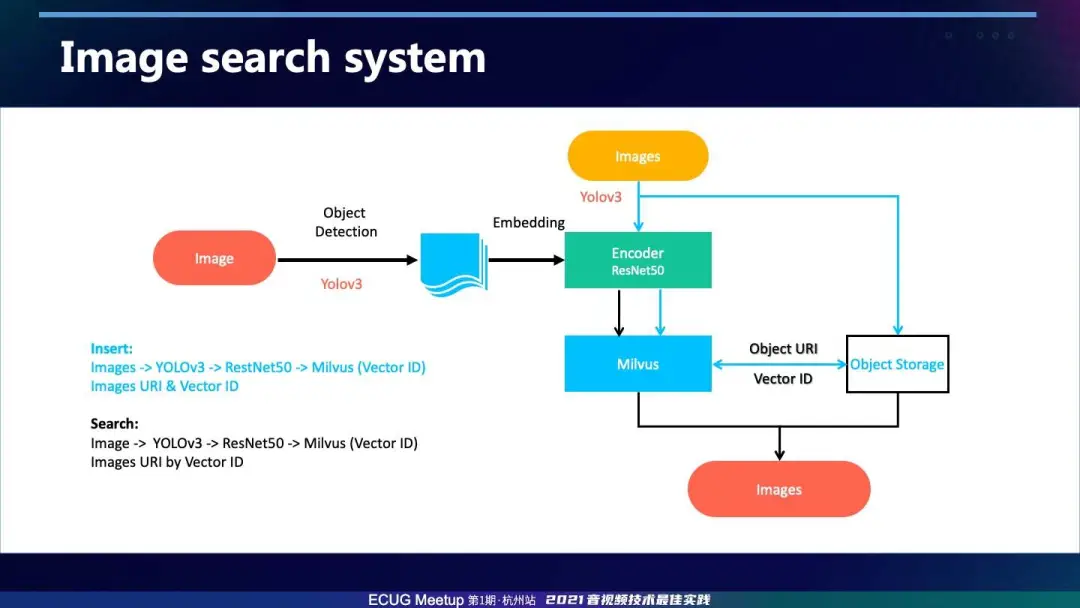

Let's look at how a pipeline is implemented, taking an image search system (also called "search by image") as an example. It consists of two main processes:

- Data insertion

- Data search

The blue line in the figure shows insertion. The image is first passed through a YOLO model to detect the target objects in it. A ResNet50 model then extracts a feature vector from the image of each target object. The extracted feature vectors are inserted into Milvus, which returns an ID for each vector. The mapping between each vector ID and the image path is then stored.

The search process follows the black line. The query image also goes through the YOLO and ResNet50 models, and the resulting vector is searched against the library in Milvus. Milvus returns the IDs of the search results, and the corresponding pictures are then found by ID.

This completes the search-by-image process.
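The insertion and search flows described above can be sketched end to end. To keep the example self-contained, an in-memory class stands in for Milvus: it assigns an ID on insert and returns IDs on search, mirroring the behavior described above. `detect_and_embed` is a hypothetical placeholder for the YOLO + ResNet50 step. None of these names are Milvus or bootcamp APIs.

```python
import math
from typing import Dict, List, Sequence


class InMemoryVectorStore:
    """Stand-in for Milvus: returns an ID per inserted vector and IDs per search hit."""

    def __init__(self) -> None:
        self._vectors: Dict[int, List[float]] = {}
        self._next_id = 0

    def insert(self, vector: List[float]) -> int:
        vid = self._next_id
        self._vectors[vid] = vector
        self._next_id += 1
        return vid

    def search(self, query: Sequence[float], k: int) -> List[int]:
        ranked = sorted(self._vectors, key=lambda vid: math.dist(query, self._vectors[vid]))
        return ranked[:k]


def detect_and_embed(image: Dict[str, List[List[float]]]) -> List[List[float]]:
    """Hypothetical placeholder for YOLO detection + ResNet50 embedding:
    each 'image' here already carries one precomputed vector per detected object."""
    return image["objects"]


store = InMemoryVectorStore()
id_to_path: Dict[int, str] = {}  # in a real system this mapping lives in a database


def insert_image(path: str, image: Dict[str, List[List[float]]]) -> None:
    for vector in detect_and_embed(image):  # one vector per detected object
        id_to_path[store.insert(vector)] = path


def search_image(image: Dict[str, List[List[float]]], k: int) -> List[str]:
    paths: List[str] = []
    for vector in detect_and_embed(image):
        for vid in store.search(vector, k):
            if id_to_path[vid] not in paths:  # several objects may map to one picture
                paths.append(id_to_path[vid])
    return paths


if __name__ == "__main__":
    insert_image("cat.jpg", {"objects": [[1.0, 0.0]]})
    insert_image("street.jpg", {"objects": [[0.0, 1.0], [0.9, 0.1]]})
    print(search_image({"objects": [[1.0, 0.05]]}, k=2))
```

Storing only the ID-to-path mapping, rather than the images themselves, keeps the vector store small. The pictures can then be served from wherever they already live.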

The search-by-image project mentioned here is open source on GitHub; if you are interested, take a look at the link below.

Reverse image search GitHub link:

https://github.com/milvus-io/bootcamp/tree/master/solutions/reverse_image_search

As mentioned earlier, our company's main product, Milvus, is also open source, and we hope to grow the community and maintain the project together with everyone. Our system is already used by customers in production. E-commerce companies use it to search for products, and design teams use it to search for design drawings.

This is the front-end interface of the image search system. Unlike the traditional approach of labeling pictures by hand, AI technology can classify these pictures automatically.

Audio similarity retrieval process

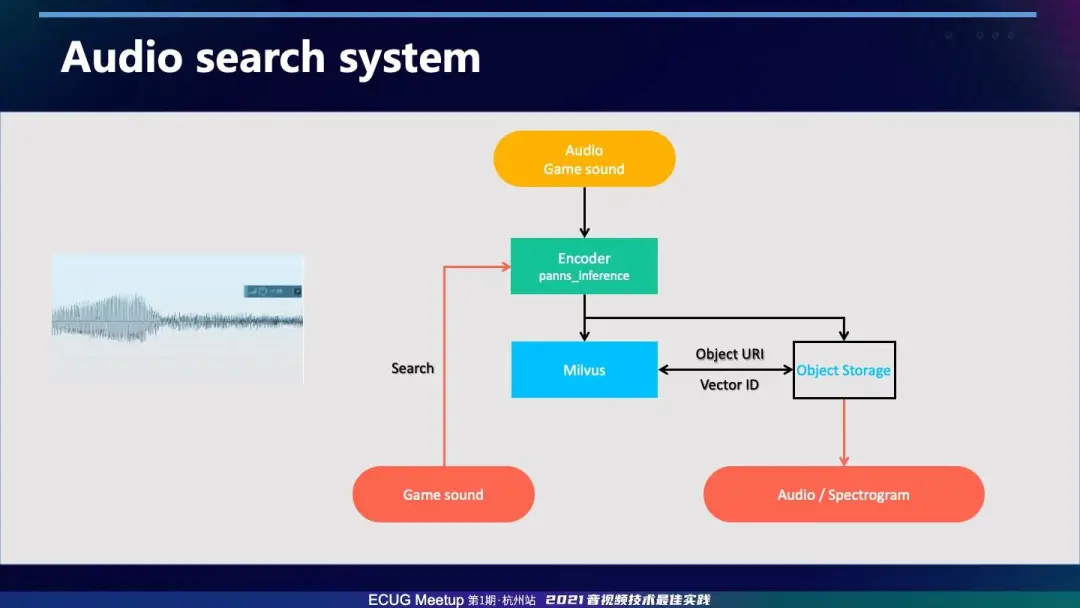

So, how to deal with audio data?

As mentioned earlier, unstructured data can be turned into feature vectors with an AI model. Here we use the PANNS_INFERENCE model, a model for sound classification. The extracted feature vectors are stored in Milvus, which is then searched to return similar sounds. The audio search project is also open source on GitHub.

Audio search GitHub link:

https://github.com/milvus-io/bootcamp/tree/master/solutions/audio_similarity_search
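The audio flow has the same shape as image search: embed each clip, store the vectors, and rank stored clips by similarity to the query. In the sketch below, a crude hand-made feature stands in for the PANNs embedding: per-frame RMS energy and zero-crossing rate, averaged over the clip. It is used only so that the example runs with the standard library. This feature is our assumption, not what the bootcamp project does; real systems use learned embeddings, and the synthetic tones are test data only.

```python
import math
from typing import Dict, List


def sine(freq_hz: float, seconds: float = 0.5, rate: int = 8000, amp: float = 0.5) -> List[float]:
    """Synthesize a test tone so the example needs no audio files."""
    return [amp * math.sin(2 * math.pi * freq_hz * n / rate) for n in range(int(seconds * rate))]


def toy_embedding(samples: List[float], frame_len: int = 400) -> List[float]:
    """Stand-in for a learned audio embedding: mean RMS energy and mean zero-crossing rate."""
    rms_values, zcr_values = [], []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms_values.append(math.sqrt(sum(s * s for s in frame) / frame_len))
        crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
        zcr_values.append(crossings / frame_len)
    return [sum(rms_values) / len(rms_values), sum(zcr_values) / len(zcr_values)]


def most_similar(query: List[float], library: Dict[str, List[float]]) -> List[str]:
    """Rank stored clips by Euclidean distance between embeddings (closest first)."""
    return sorted(library, key=lambda name: math.dist(query, library[name]))


if __name__ == "__main__":
    clips = {"low_hum": 110, "beep": 1000, "whistle": 2500}
    library = {name: toy_embedding(sine(freq)) for name, freq in clips.items()}
    print(most_similar(toy_embedding(sine(1050)), library))
```

Swapping `toy_embedding` for a real model changes only the feature-extraction step; the store-and-rank logic stays the same.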

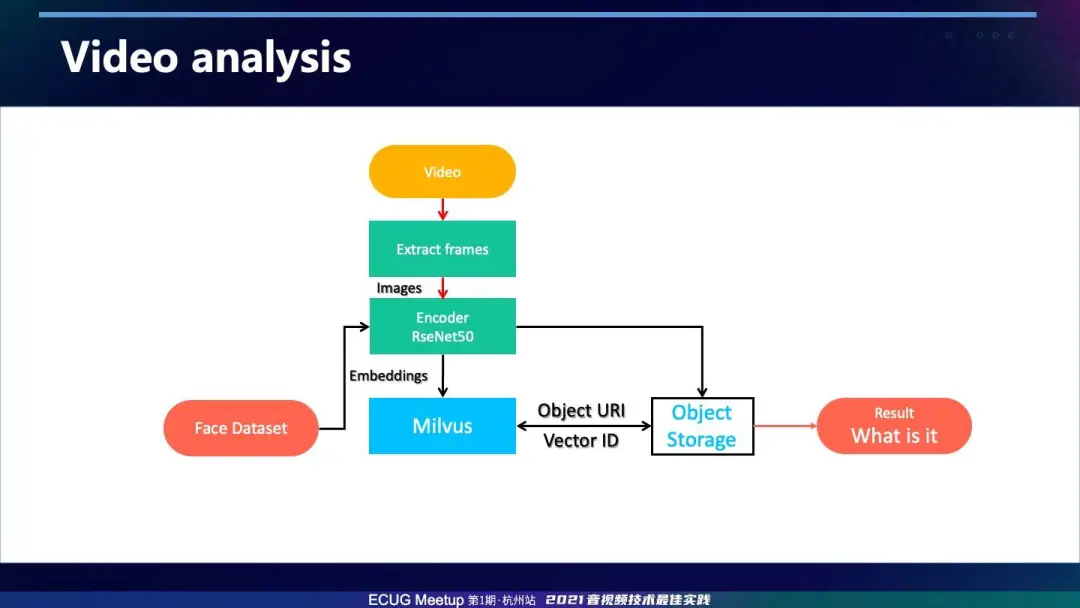

Video similarity retrieval process

The next example is video analysis. The video is cut into frames, image features are extracted from the resulting frames, and the features are stored and analyzed in Milvus.
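The frame-cutting step usually does not keep every frame. A common approach is to keep about one frame per second; this is our assumption, since the talk does not give a sampling rate. Given the video's frame rate and frame count, the indices of the frames to keep can be computed as below. Decoding the frames themselves would be done by a tool such as OpenCV or FFmpeg and is omitted here.

```python
from typing import List


def sample_frame_indices(total_frames: int, fps: float, frames_per_second: float = 1.0) -> List[int]:
    """Indices of frames to keep when sampling `frames_per_second` frames per second of video."""
    if fps <= 0 or frames_per_second <= 0:
        raise ValueError("fps and frames_per_second must be positive")
    step = fps / frames_per_second  # number of source frames between two kept frames
    indices: List[int] = []
    t = 0.0
    while round(t) < total_frames:
        idx = round(t)
        if not indices or idx != indices[-1]:  # avoid duplicates when step < 1
            indices.append(idx)
        t += step
    return indices


if __name__ == "__main__":
    print(sample_frame_indices(250, 25.0))  # a 10-second clip at 25 fps
    print(sample_frame_indices(100, 29.97))  # NTSC-style fractional frame rate
```

Working from the frame rate rather than a fixed frame stride keeps the sampling density the same in seconds across videos with different frame rates.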

The demo video below shows the video analysis process: we recognize the objects in the video and display them on the right. The inspiration for this demo came from video advertising on YouTube, which shows ads for products that appear in a video while the video is playing.

Three cases of audio and video similarity retrieval

Next, I will introduce some customer cases from the audio and video field.

The first case is a sports platform that analyzes faces in photos of sporting events:

1. First, photos must be uploaded in real time. Once a competition starts, photographers shoot continuously. A solution therefore had to be designed that gets photos into the library promptly and reliably, and cloud storage is used for this.

2. Facial features must be extracted quickly. An in-house face recognition algorithm is used here, which compresses each face image into a 128-dimensional feature vector while maintaining high accuracy.

3. Once a large number of face features have been extracted, a vector database is needed to store and retrieve them. Each face feature vector is stored in Milvus. A modified version of the Leaf algorithm generates the ID for each image, so that each ID maps to an entry in the Milvus feature library. Parsing an ID then quickly reveals the other information associated with it, so the photo can be looked up quickly in the business database.
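The talk does not describe the customer's modified Leaf algorithm. As a rough illustration only, here is the Snowflake-style layout that Leaf's snowflake mode builds on. A 64-bit integer packs a millisecond timestamp, a worker ID, and a per-millisecond sequence number. Because the result is a plain positive 63-bit integer, it fits in an int64 vector ID, and parsing it recovers the embedded fields. The 41/10/12-bit field widths and the custom epoch are assumptions. Clock rollback handling is also omitted, which a production generator would need.

```python
import threading
import time
from typing import Dict

EPOCH_MS = 1_600_000_000_000  # assumed custom epoch (September 2020); any fixed past time works
WORKER_BITS = 10
SEQUENCE_BITS = 12
MAX_WORKER = (1 << WORKER_BITS) - 1
MAX_SEQUENCE = (1 << SEQUENCE_BITS) - 1


class SnowflakeIdGenerator:
    """64-bit IDs laid out as 41-bit timestamp | 10-bit worker | 12-bit sequence."""

    def __init__(self, worker_id: int) -> None:
        if not 0 <= worker_id <= MAX_WORKER:
            raise ValueError("worker_id out of range")
        self.worker_id = worker_id
        self.last_ms = -1
        self.sequence = 0
        self._lock = threading.Lock()

    def next_id(self) -> int:
        with self._lock:
            ms = int(time.time() * 1000)
            if ms == self.last_ms:
                self.sequence = (self.sequence + 1) & MAX_SEQUENCE
                if self.sequence == 0:  # sequence exhausted: wait for the next millisecond
                    while ms <= self.last_ms:
                        ms = int(time.time() * 1000)
            else:
                self.sequence = 0  # note: a clock moving backwards is not handled here
            self.last_ms = ms
            return (
                ((ms - EPOCH_MS) << (WORKER_BITS + SEQUENCE_BITS))
                | (self.worker_id << SEQUENCE_BITS)
                | self.sequence
            )


def parse_id(snowflake_id: int) -> Dict[str, int]:
    """Recover the packed fields, e.g. to find when and on which worker a photo was ingested."""
    return {
        "timestamp_ms": (snowflake_id >> (WORKER_BITS + SEQUENCE_BITS)) + EPOCH_MS,
        "worker_id": (snowflake_id >> SEQUENCE_BITS) & MAX_WORKER,
        "sequence": snowflake_id & MAX_SEQUENCE,
    }


if __name__ == "__main__":
    gen = SnowflakeIdGenerator(worker_id=7)
    new_id = gen.next_id()
    print(new_id, parse_id(new_id))
```

The parsed fields (time and worker) narrow down where to look in the business database. The full photo record would still be fetched from that database by ID.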

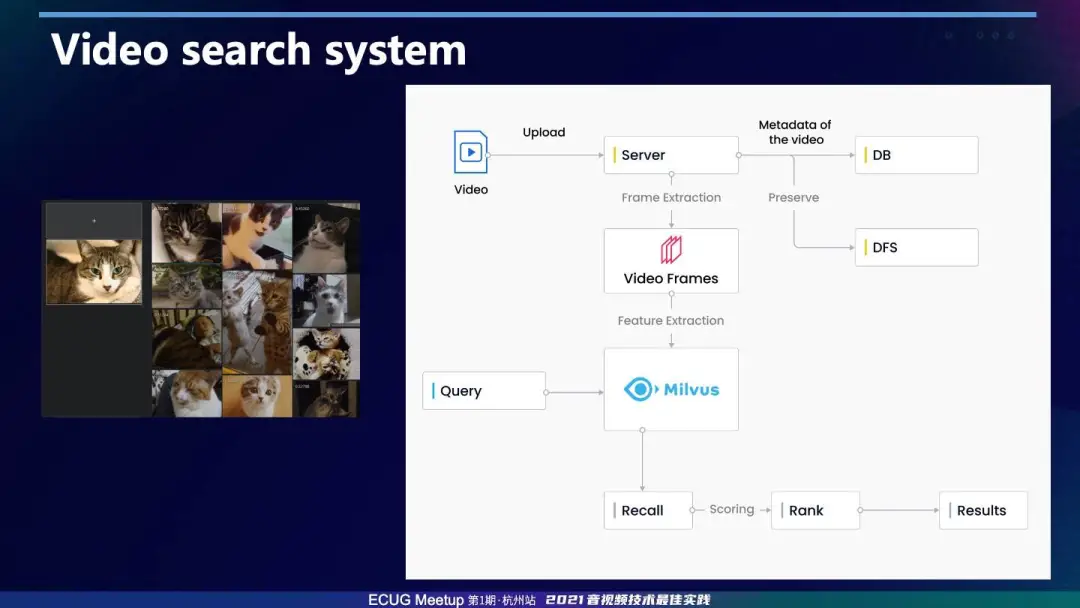

The second case is a video search system:

1. First, videos are uploaded to the server and their metadata is saved. When a video processing task is triggered, the video is preprocessed and split into frames. Each frame is then converted into a feature vector and imported into Milvus.

2. The video to be checked (or its video ID) is uploaded, and the video is converted into multiple feature vectors.

3. Similar vectors are searched for in Milvus, and the top-K results are obtained by computing the similarity between sets of frame images.
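The talk does not say exactly how frame-level similarities are turned into a video-level ranking. One simple option, shown here as an assumption, works as follows. For every query frame, find its best-matching frame in each candidate video. Then average those best-match similarities per video and return the top-K videos. A real system would get the per-frame candidates from Milvus instead of the brute-force loop used here.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(x: Sequence[float], y: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm else 0.0


def rank_videos(
    query_frames: List[List[float]],
    library: Dict[str, List[List[float]]],
    k: int,
) -> List[Tuple[str, float]]:
    """Score each stored video by the mean best-match similarity of the query's frames."""
    scores = []
    for video_id, frames in library.items():
        best_matches = [max(cosine(q, f) for f in frames) for q in query_frames]
        scores.append((video_id, sum(best_matches) / len(best_matches)))
    scores.sort(key=lambda pair: pair[1], reverse=True)  # most similar video first
    return scores[:k]


if __name__ == "__main__":
    library = {
        "match_highlights": [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]],
        "cooking_show": [[0.0, 1.0, 0.0], [0.0, 0.9, 0.1]],
        "concert": [[0.0, 0.0, 1.0]],
    }
    query = [[0.95, 0.05, 0.0], [1.0, 0.0, 0.0]]
    print(rank_videos(query, library, k=2))
```

Averaging best matches rewards videos that contain a match for most of the query's frames. A single coincidentally similar frame therefore carries less weight than it would under a plain maximum.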

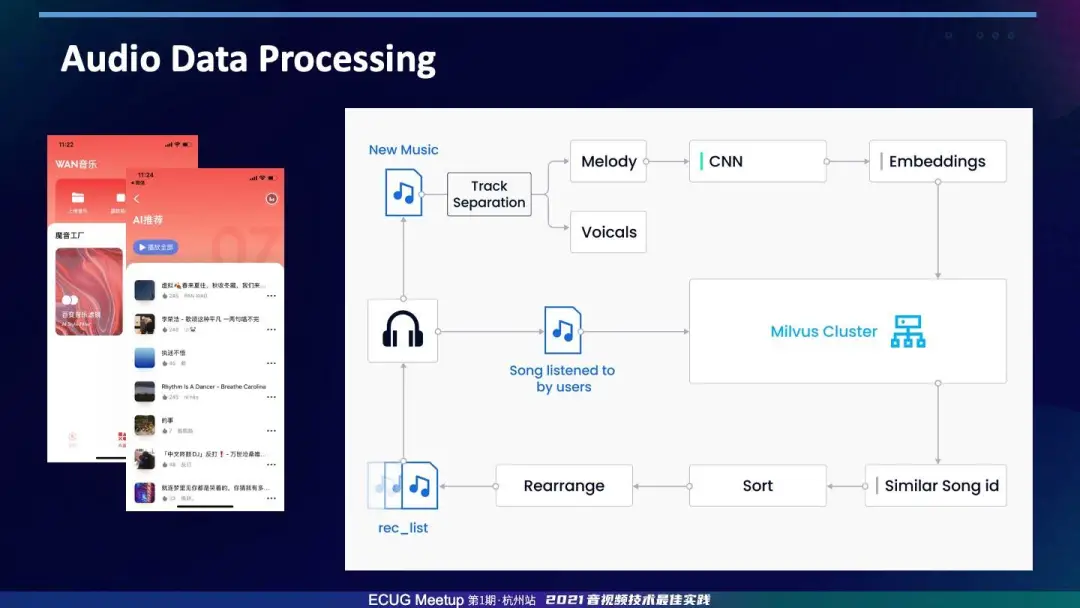

The third case is a music recommendation application that recommends songs users may like in a music app.

1. First, uploaded songs are separated into tracks: the vocals are split from the background music (BGM), and feature vectors are extracted from the BGM. Separating the tracks helps distinguish original recordings from cover versions.

2. Next, these feature vectors are stored in Milvus, and similar songs are searched for based on the songs the user has listened to.

3. Finally, the retrieved songs are sorted and reranked to generate music recommendations.
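Steps 2 and 3 can be sketched as follows. For each song in the user's listening history, retrieve similar songs, then merge the candidates and drop songs the user has already heard. Finally, rank the remaining songs by accumulated similarity. `similar_songs` is a hypothetical brute-force stand-in for a Milvus search over BGM vectors. Summing similarities across history songs is our assumed ranking rule; the talk does not specify how its reranking works.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def cosine(x: Sequence[float], y: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm else 0.0


def similar_songs(query: Sequence[float], catalog: Dict[str, List[float]], k: int) -> List[Tuple[str, float]]:
    """Hypothetical stand-in for a top-k vector search over BGM feature vectors."""
    scored = [(song, cosine(query, vec)) for song, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def recommend(history: List[str], catalog: Dict[str, List[float]], k_per_song: int, n: int) -> List[str]:
    heard = set(history)
    totals: Dict[str, float] = defaultdict(float)
    for song in history:
        # Ask for extra results because already-heard songs will be filtered out.
        for candidate, score in similar_songs(catalog[song], catalog, k_per_song + len(heard)):
            if candidate not in heard:  # never recommend something the user already played
                totals[candidate] += score  # songs similar to several history items rise
    ranked = sorted(totals.items(), key=lambda pair: pair[1], reverse=True)
    return [song for song, _ in ranked[:n]]


if __name__ == "__main__":
    catalog = {
        "rock_a": [1.0, 0.1, 0.0],
        "rock_b": [0.9, 0.2, 0.0],
        "rock_c": [0.95, 0.0, 0.1],
        "jazz_a": [0.0, 1.0, 0.1],
        "jazz_b": [0.1, 0.9, 0.0],
    }
    print(recommend(["rock_a", "rock_b"], catalog, k_per_song=2, n=2))
```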

That concludes my talk. The Milvus website and other links are listed below. You are welcome to follow the project and help us build its open-source community.

https://milvus.io

https://github.com/milvus-io/milvus

https://twitter.com/milvusio

https://medium.com/unstructured-data-service

https://zhuanlan.zhihu.com/ai-search

https://milvusio.slack.com/join/shared_invite/zt-e0u4qu3k-bI2GDNys3ZqX1YCJ9OM~GQ

Q & A

Question: I'm particularly interested in your audio retrieval, because audio falls into three categories: vocals, music, and waveform sounds. For retrieval, what metric do you use to measure the quality of the extracted features?

Chen Shiyu: For feature extraction, we usually use trained models. As you mentioned, we have dealt with voiceprints, speech, and music before, and we use a different model for each of these three types. For data like yours, I think you would probably need to train a model.

First, you need data. Once you have it, you train on it, and the extracted feature vectors will be better. As for how to measure quality, one way is through the model itself, using an algorithmic metric.

Second, human perception is also a measure. In search by image, for example, the pictures the machine returns may be similar by the machine's measure yet look different to the human eye. So to measure quality, you can look at the model's metrics or make some judgments yourself.
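On the algorithmic side, one common way to evaluate feature quality for retrieval is recall@K on a labeled set. This is our suggestion; the talk does not name a specific metric. For each query, compute the fraction of the items that a person marked as relevant which appear in the top-K results. The metric also connects the two measures mentioned in the answer, because the relevance labels come from human judgment. The function name and input format are illustrative.

```python
from typing import Dict, List, Set


def recall_at_k(results: Dict[str, List[str]], relevant: Dict[str, Set[str]], k: int) -> float:
    """Mean over queries of |top-k results ∩ relevant| / |relevant|.

    Queries with no relevant items are skipped; returns 0.0 if no query can be scored.
    """
    per_query = []
    for query, rel in relevant.items():
        if not rel:
            continue
        hits = len(set(results.get(query, [])[:k]) & rel)
        per_query.append(hits / len(rel))
    return sum(per_query) / len(per_query) if per_query else 0.0


if __name__ == "__main__":
    results = {"q1": ["a", "b", "c"], "q2": ["x", "y", "z"]}  # ranked search output
    relevant = {"q1": {"a", "c"}, "q2": {"w"}}  # human relevance labels
    print(recall_at_k(results, relevant, 2), recall_at_k(results, relevant, 3))
```

Comparing recall@K for two feature extractors on the same labeled queries gives a simple, repeatable way to tell which one produces better features.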

Question: You mentioned some multimodal search strategies. What methods do you use here, and how do you extract audio and video features for search?

Chen Shiyu: For multimodality, we recently released Milvus 2.0, which supports multiple vector columns.

Question: Your main focus is search. After audio, video, and image vectors have been extracted, how are they fused?

Chen Shiyu: We handle them separately. The fusion you mention does not actually combine the vectors. They stay separate, with a different vector in each column. At the end, a fusion scoring mechanism combines the scores from those models, which can give a better overall evaluation.

About Qiniu Cloud, ECUG and ECUG Meetup

Qiniu Cloud: Founded in 2011, Qiniu Cloud is a well-known Chinese cloud computing and data service provider. It continues to invest heavily in core technologies for massive file storage, CDN content delivery, video on demand, and interactive live streaming. It also invests in the intelligent analysis and processing of large-scale heterogeneous data. Qiniu Cloud is committed to driving the digital future with data technology and helping every industry enter the data age.

ECUG: Short for Effective Cloud User Group, ECUG (formerly CN Erlounge II) was founded in 2007 on the initiative of Xu Shiwei. It has become an indispensable group at the frontier of technology. As a window onto the industry's technical progress, ECUG brings together many technologists. It follows current hot technologies and cutting-edge practices and helps lead technological change in the industry.

ECUG Meetup: A series of tech-sharing events jointly run by ECUG and Qiniu Cloud, positioned as offline gatherings for developers and technical practitioners. The goal is to build a high-quality learning and social platform for developers. We hope every participant will co-create and share knowledge with other developers. This exchange generates new knowledge that advances understanding and technology and drives the whole industry forward. It also creates a better space for communication and growth for developers and technical practitioners.
