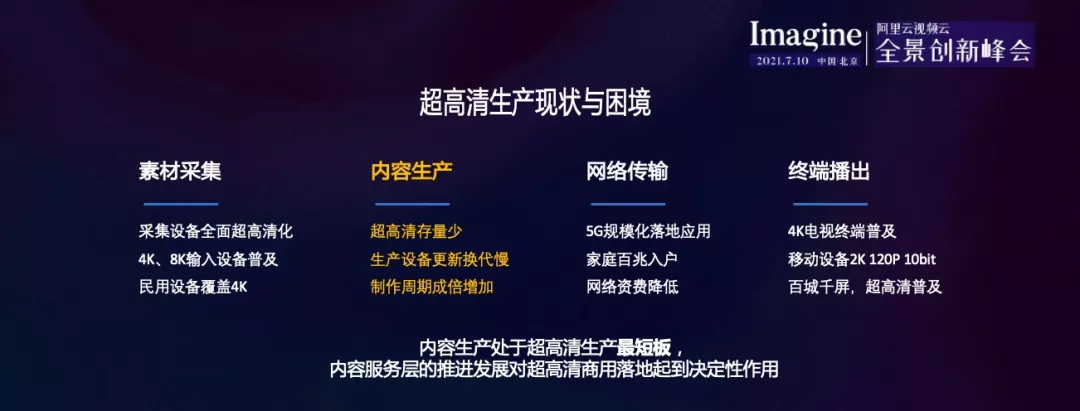

In 2021, ultra-high-definition (UHD) video is entering the "8K" era. UHD video brings a new audiovisual experience, but at the content-production level UHD production faces a dilemma: little UHD content in stock, slow replacement of production equipment, and production cycles that have doubled. At the Imagine Alibaba Cloud Video Cloud Panorama Innovation Summit on July 10, Xie Xuansong, a senior algorithm expert at Alibaba DAMO Academy, delivered a keynote titled "AI Technology Drives the Ultra HD 'View' World". Starting from the state of the industry, he analyzed in depth how AI technology drives audiovisual upgrades and shared DAMO Academy's practical experience in UHD production. The following is the content of the speech.

The development status of the ultra-high-definition industry

Vision is a physiological term. Through vision, humans and animals perceive the size, brightness, color, and motion or stillness of external objects, and obtain information that is important to their survival. As a physical phenomenon, vision is the retina's response to light, covering its brightness, its fine detail, and how it changes over time. Of all media, video delivers the strongest visual impact.

What role can AI play in video?

The role of AI in video falls into two parts. The first and most basic is understanding: AI's understanding of video and images shows up in common tasks such as classification, tagging, detection, and segmentation. This mirrors people, who must first understand the world around them; likewise, the first step for AI to play its role is to understand and learn.

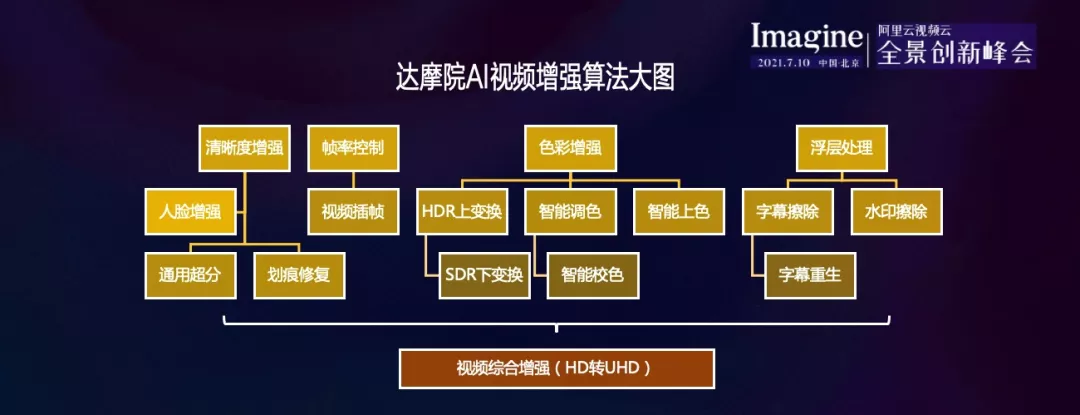

The second part concerns production: creating, editing, processing, erasing, inserting, and so on, where AI's role lies in enhancing low-level vision. So how does AI play its role in low-level vision?

Vision is the most important human sense, so the video experience is the central problem. Experience involves many factors, and people constantly pursue a better one. The first is the pursuit of clarity: going from 4K to 8K, each frame carries more information and richer detail. By 2021, 4K UHD video has become widespread, and the industry is entering the 8K era.

The second is color: more vivid color is another key factor in the viewing experience. The third is a more immersive experience.

So what role can AI play, and can it be applied across industries?

The first application is UHD video. China announced its 4K strategy in 2014. Seven years later, 4K is already moving toward 8K. Throughout this process, content has always lagged behind while infrastructure has been ahead. For example, 4K is now the most basic configuration for TVs that consumers buy, and the signal infrastructure is already moving toward 8K and 5G.

The first 8K live broadcast took place during last year's Spring Festival. The Tokyo Olympics and the Beijing Winter Olympics will also be broadcast live in 8K, and 8K live broadcasts will become increasingly common.

But 8K live broadcasting faces many difficulties, because video involves a complete content-production process with many links. Material capture, for example, is now consumer-grade: a mobile phone can capture 1080p and 4K footage. But what does content production actually mean? Does shooting a video count as production?

In fact, shooting is only the most basic and simplest form of production. Content production has two parts. The first is existing stock content, from old black-and-white footage to later color content at very low resolution. At the same time, the demands on technology and on viewing experience keep rising, so the cycle and requirements of subsequent content production keep increasing.

Technology matters for content production because it does essentially two things. The first, and most fundamental, is improving efficiency. The second is innovation, which creates new opportunities quickly and at low cost.

Technology therefore plays a very important role here, including in network transmission and terminals; the entire industrial chain needs to improve. Today I am covering only one of these points, but even this one takes a great deal of technology to accomplish.

AI technology drives audiovisual upgrades

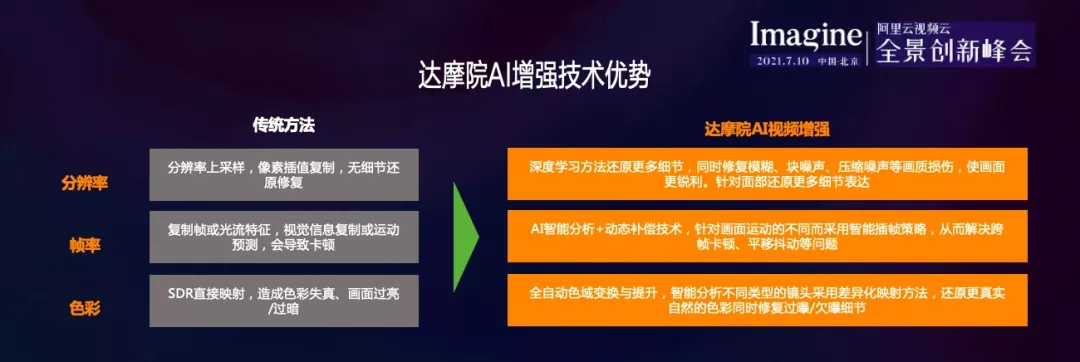

To improve the visual experience, the most basic step is to increase observable detail, and detail is most closely tied to resolution. Resolution, however, must be supported by the display device, so this is the first and most important point.
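To make the resolution jump concrete, the short sketch below counts pixels per frame. It assumes the consumer UHD sizes (3840x2160 for 4K, 7680x4320 for 8K); the names `RESOLUTIONS` and `pixel_count` are illustrative only.

```python
# Frame sizes in pixels (width, height). "4K" and "8K" here are assumed to be
# the consumer UHD sizes, not the cinema DCI sizes.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixel_count(name: str) -> int:
    """Return the number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


if __name__ == "__main__":
    base = pixel_count("1080p")
    for name in RESOLUTIONS:
        n = pixel_count(name)
        print(f"{name}: {n:,} pixels ({n / base:.0f}x 1080p)")
```

Each step quadruples the pixel count: 4K has 8,294,400 pixels (4x 1080p) and 8K has 33,177,600 (16x 1080p), which is why the display device has to keep up.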

The second is a smooth, silky visual experience. Most current displays run at 60 Hz, but there are also 120 Hz, 240 Hz, and even 360 Hz displays. Hertz here is the refresh rate, that is, the number of times per second the image is redrawn on the screen. The higher the refresh rate a screen supports, the more frames it can show per second and the smoother video looks.

Previously, bandwidth was insufficient to carry that many frames, and the frame rate of the video itself was too low for a smooth experience.
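The arithmetic behind refresh rate and bandwidth can be sketched as follows. This is a back-of-the-envelope calculation under stated assumptions: 10-bit samples and 4:2:0 chroma subsampling (1.5 samples per pixel), with no compression. The function names are illustrative.

```python
def frame_period_ms(refresh_hz: float) -> float:
    """How long each frame stays on screen, in milliseconds."""
    return 1000.0 / refresh_hz


def raw_bitrate_gbps(width: int, height: int, fps: float,
                     bit_depth: int = 10, samples_per_pixel: float = 1.5) -> float:
    """Uncompressed video bitrate in Gbit/s.

    samples_per_pixel=1.5 models 4:2:0 chroma subsampling (four luma samples
    plus one Cb and one Cr sample per 2x2 pixel group); use 3.0 for 4:4:4.
    """
    return width * height * fps * bit_depth * samples_per_pixel / 1e9


if __name__ == "__main__":
    for hz in (60, 120, 240, 360):
        print(f"{hz} Hz -> {frame_period_ms(hz):.2f} ms per frame")
    for name, (w, h) in {"4K": (3840, 2160), "8K": (7680, 4320)}.items():
        print(f"{name} @ 60 fps, 10-bit 4:2:0: {raw_bitrate_gbps(w, h, 60):.2f} Gbit/s raw")
```

Under these assumptions, raw 4K at 60 fps needs about 7.46 Gbit/s and raw 8K about 29.86 Gbit/s, and doubling the frame rate doubles those figures. This illustrates why transmission bandwidth has historically limited frame rate.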

Technology can, however, compensate for shortcomings in the video itself and improve its visual experience.

The first concern is detail, the second is fluency, and the third is color. The country has explicit regulations for 4K content: to be called 4K, content must first meet these conditions.
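The three concerns map naturally onto a checklist. The sketch below is hypothetical: the thresholds (3840x2160, at least 50 fps, at least 10-bit, BT.2020 gamut) are assumptions chosen for illustration and are not quoted from the regulation the speaker mentions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VideoSpec:
    width: int
    height: int
    fps: float
    bit_depth: int
    color_gamut: str  # e.g. "BT.709" or "BT.2020"


# Assumed thresholds for illustration only, not the official specification.
MIN_WIDTH, MIN_HEIGHT = 3840, 2160  # detail
MIN_FPS = 50                        # fluency
MIN_BIT_DEPTH = 10                  # color precision
REQUIRED_GAMUT = "BT.2020"          # color range


def check_4k(spec: VideoSpec) -> List[str]:
    """Return the failed checks; an empty list means every check passed."""
    failures = []
    if spec.width < MIN_WIDTH or spec.height < MIN_HEIGHT:
        failures.append("detail: resolution below 3840x2160")
    if spec.fps < MIN_FPS:
        failures.append("fluency: frame rate below 50 fps")
    if spec.bit_depth < MIN_BIT_DEPTH:
        failures.append("color: bit depth below 10 bits")
    if spec.color_gamut != REQUIRED_GAMUT:
        failures.append("color: gamut is not BT.2020")
    return failures
```

For example, a 3840x2160 clip at 25 fps, 8-bit, BT.709 passes on resolution but fails on fluency and both color checks. Upscaling alone therefore does not make content "4K" in this sense.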

Across these three levels, the pursuit of detail can introduce many flaws, because GAN-based techniques are often used here, and uncontrollable factors in the generation process frequently lead to artifacts.

To be honest, I have always wanted to define visual production as controllable visual content production, which both restores detail and keeps defects under control. This requires a core technology, and it is the first challenge.

Second, beyond the algorithm that keeps super-resolution controllable, what is the source of the algorithm? It is data. Data is generally thought of as two types, low resolution and high resolution, because low image quality and high image quality form a pair.

There are many ways to obtain such data. The main method today is manual, collecting both kinds at high cost. Whether highly realistic simulated data pairs can be produced by technical means is a major topic for the future.

Finally, AI technology has to be deployed in practice, which means balancing quality against efficiency; this is a problem in its own right.

The first problem to solve is data scale. AI needs massive amounts of data, but in these scenes the picture quality is poor and many details have been lost: there is a lot of blur and wrong color. AI cannot conjure these details out of thin air. So whether one can design a scheme that uses AI algorithms to obtain real data automatically is currently a very difficult problem.

In the early days, people used a simple approach: degrade a clear image with a blur kernel to make it unclear, creating a data pair, and perhaps add some noise after the blur. Isn't that enough? In practice it is not, because every video is encoded and decoded, and significant losses are incurred during transmission.
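The "simple approach" can be sketched in plain Python. This is a minimal illustration, not DAMO Academy's pipeline: it assumes a box blur as the blur kernel, plain subsampling, and additive Gaussian noise on a grayscale image stored as a list of rows. As the text notes, it does not model codec loss.

```python
import random


def box_blur(img, radius=1):
    """Blur a grayscale image (list of rows) with a (2r+1)x(2r+1) box kernel,
    clamping coordinates at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def downsample(img, factor=2):
    """Keep every `factor`-th pixel in each direction."""
    return [row[::factor] for row in img[::factor]]


def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise and clip to [0, 255]."""
    return [[min(max(v + rng.gauss(0.0, sigma), 0.0), 255.0) for v in row]
            for row in img]


def make_training_pair(hq, factor=2, radius=1, sigma=5.0, seed=0):
    """Synthesize (low_quality, high_quality) from one clean image."""
    rng = random.Random(seed)
    lq = add_gaussian_noise(downsample(box_blur(hq, radius), factor), sigma, rng)
    return lq, hq
```

A super-resolution model is then trained to map `lq` back to `hq`. The weakness the speaker points out is that real footage also carries compression artifacts, which this degradation never produces.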

So how can these losses be simulated? A well-designed algorithm here is also valuable for the codec itself. Taking all of this into account, low-quality/high-quality data pairs can support a great deal. This involves noise analysis and scene analysis, because different scenes have different focuses: cartoons emphasize edges, sports scenes emphasize motion, and complex scenes may emphasize fine detail.
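One classic way to mimic codec loss is to quantize block-DCT coefficients, as JPEG and MPEG-style codecs do. The sketch below is an assumption-level illustration, not the method from the talk: it applies an orthonormal 8x8 DCT-II, rounds every coefficient to a uniform step `q_step` (real codecs use per-frequency quantization tables), and reconstructs.

```python
import math

N = 8  # block size used by JPEG/MPEG-style codecs


def _alpha(k):
    """Orthonormal DCT scaling factor."""
    return math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)


def _basis(pos, freq):
    return math.cos((2 * pos + 1) * freq * math.pi / (2 * N))


def dct2(block):
    """Orthonormal 2D DCT-II of an N x N block."""
    return [[_alpha(u) * _alpha(v) * sum(block[x][y] * _basis(x, u) * _basis(y, v)
                                         for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs):
    """Inverse of dct2 (orthonormal 2D DCT-III)."""
    return [[sum(_alpha(u) * _alpha(v) * coeffs[u][v] * _basis(x, u) * _basis(y, v)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def simulate_compression(block, q_step):
    """Quantize DCT coefficients with a uniform step, then reconstruct.

    A larger q_step discards more high-frequency detail, roughly mimicking a
    lower bitrate. Pixels are level-shifted by 128 as in JPEG.
    """
    coeffs = dct2([[p - 128.0 for p in row] for row in block])
    quantized = [[round(c / q_step) * q_step for c in row] for row in coeffs]
    return [[min(max(p + 128.0, 0.0), 255.0) for p in row] for row in idct2(quantized)]
```

Applying `simulate_compression` block by block after the blur-and-noise step adds blocking and ringing artifacts to the low-quality side of each training pair. The step size could be varied per scene type, in line with the scene analysis described above.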

Therefore, a great deal of data analysis and data production is needed. For a typical algorithm you might have a group of people label data and draw bounding boxes. For this problem, however, the core question is generating data pairs: how to obtain real training data. Beyond being real, this data must also come at scale. DAMO Academy has invested considerable effort in this technology.

Ultra HD production practice

How is content enhanced next? Take portraits: our most important method now is to bring in GAN-based techniques, and DAMO Academy has open-sourced the GPEN high-definition algorithm.

In video news coverage of the 100th anniversary of the founding of the Communist Party of China, many portraits were restored with this DAMO Academy algorithm and played on platforms such as Bilibili, which generated good publicity.

The network first embeds a GAN prior and also adds a generative data generator. The losses fall into three categories: a content-level loss, a feature-level loss, and a GAN-level (adversarial) loss. Together they produce a very good base model for portraits. This is one example.
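The three loss levels can be combined into one generator objective. The sketch below uses generic stand-ins (L1 content loss, mean-squared feature distance, non-saturating adversarial loss) on flat lists of floats; it is not GPEN's code, and the weights `w_content`, `w_feature`, and `w_adv` are placeholders rather than published settings.

```python
import math


def l1_loss(pred, target):
    """Content loss: mean absolute pixel difference."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def feature_loss(pred_feats, target_feats):
    """Feature-level loss: mean squared distance between feature vectors, e.g.
    intermediate activations of a discriminator, averaged over layers."""
    total = 0.0
    for pf, tf in zip(pred_feats, target_feats):
        total += sum((a - b) ** 2 for a, b in zip(pf, tf)) / len(pf)
    return total / len(pred_feats)


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def generator_adv_loss(d_logit_on_fake):
    """Non-saturating GAN loss for the generator: softplus(-D(G(x)))."""
    return softplus(-d_logit_on_fake)


def total_generator_loss(pred, target, pred_feats, target_feats, d_logit,
                         w_content=1.0, w_feature=1.0, w_adv=0.1):
    """Weighted sum of content, feature, and adversarial losses (placeholder weights)."""
    return (w_content * l1_loss(pred, target)
            + w_feature * feature_loss(pred_feats, target_feats)
            + w_adv * generator_adv_loss(d_logit))
```

The content and feature terms keep the output faithful to the input face. The adversarial term pushes it toward realistic texture, and it is also the source of the "uncontrollable" artifacts mentioned earlier, so its weight is a key tuning knob.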

People matter most in the real physical world, but other details such as text and scenery also need algorithms to improve and enhance them.

As for fluency, flaws often appear in many cases, especially when motion changes rapidly. Detecting and compensating for different flaws at different scales, and thereby improving fluency, takes a great deal of work to perfect.
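Why large motion causes flaws can be shown on a single scanline. The sketch below is a toy under a strong assumption: one known global integer motion vector. Real interpolators estimate per-pixel motion and must handle occlusions. Naive blending of two frames produces two half-strength ghosts, while motion-compensated interpolation places the object correctly.

```python
def blend_midframe(f0, f1):
    """Naive interpolation: average the two frames pixel by pixel."""
    return [(a + b) / 2 for a, b in zip(f0, f1)]


def shift(row, offset, fill=0.0):
    """Shift a 1D scanline right by `offset` pixels (left if negative)."""
    n = len(row)
    return [row[i - offset] if 0 <= i - offset < n else fill for i in range(n)]


def motion_compensated_midframe(f0, f1, motion):
    """Interpolate halfway along a known global motion (pixels per frame).

    Warps f0 forward and f1 backward by half the motion, then averages.
    Assumes `motion` is even so that half of it is an integer shift.
    """
    half = motion // 2
    return [(a + b) / 2 for a, b in zip(shift(f0, half), shift(f1, -half))]


if __name__ == "__main__":
    f0 = [0, 0, 255, 0, 0, 0, 0, 0]  # bright object at x=2
    f1 = [0, 0, 0, 0, 0, 0, 255, 0]  # same object at x=6: motion of 4 px
    print(blend_midframe(f0, f1))                  # two half-intensity ghosts
    print(motion_compensated_midframe(f0, f1, 4))  # one object at x=4
```

The faster the motion, the farther apart the ghosts, which is why errors in motion estimation become most visible exactly when motion changes rapidly.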

Beyond detail enhancement, many algorithms play a role: data restoration and generation, defect detection, frame interpolation, and color adjustment. Combining them takes video from low definition to 4K and then 8K, and this evolution is itself a systems-engineering effort.

So across these three dimensions (detail, fluency, and color), there is no longer a divide between traditional and unconventional methods: everyone uses deep learning to enhance resolution, frame rate, color, and detail. The question is how to improve the algorithms for different scenarios.

Even with these algorithms, there is still a long way to go before they become truly usable products and services; that is the job of systems engineering. Starting from the original material, the process adds AI visual production, intelligent production, picture enhancement, and content processing. Once the content is obtained, it can be edited, modified, given a generated cover, and broken into segments. Many technologies can contribute along the way.

The picture above shows AI enhancement: the open-source GPEN algorithm is used for face enhancement, and the enhanced result looks great. There are, however, many difficulties. If the source photo is of very poor quality or badly stained, good enhancement is still very difficult.

Moreover, enhancement is a holistic task. The face may be enhanced, but it cannot be produced in isolation from its surroundings; it must be combined with the background. A very old photo also needs color restoration, including colorizing black-and-white images.

Picture-quality enhancement is naturally more complex for video. In this example, the original picture is dark; after enhancement the colors are more vivid, and after super-resolution the details stand out more. At that point the car's motion is still not very smooth, so frame interpolation is added to make the driving footage smoother. Color, detail, and smoothness, together with scene enhancement, form a complete visual enhancement, which is what video visual-processing technology covers.
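The color, detail, and smoothness chain can be sketched as composed stages. Every stage below is a deliberately simple stand-in chosen for illustration (gamma lift for color, nearest-neighbour 2x for super-resolution, frame averaging for interpolation); a production system would use learned models at each step.

```python
def brighten(frame, gamma=0.8):
    """Color stand-in: gamma < 1 lifts dark tones (values in 0..255)."""
    return [[255.0 * (v / 255.0) ** gamma for v in row] for row in frame]


def upscale2x(frame):
    """Detail stand-in: nearest-neighbour 2x upscale (a learned SR model in practice)."""
    out = []
    for row in frame:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def interpolate(frames):
    """Smoothness stand-in: insert an averaged frame between each neighbouring pair."""
    out = []
    for a, b in zip(frames, frames[1:]):
        out.append(a)
        out.append([[(x + y) / 2 for x, y in zip(ra, rb)] for ra, rb in zip(a, b)])
    out.append(frames[-1])
    return out


def enhance(frames):
    """Apply color, then detail per frame, then smoothness across frames."""
    per_frame = [upscale2x(brighten(f)) for f in frames]
    return interpolate(per_frame)
```

Running per-frame stages before interpolation means the inserted frames are built from already enhanced frames. Other orderings are possible, for instance interpolating at low resolution to save compute.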

https://www.youku.com/video/XNTE5MTkzODIxMg==

DAMO Academy comprehensive video enhancement demo

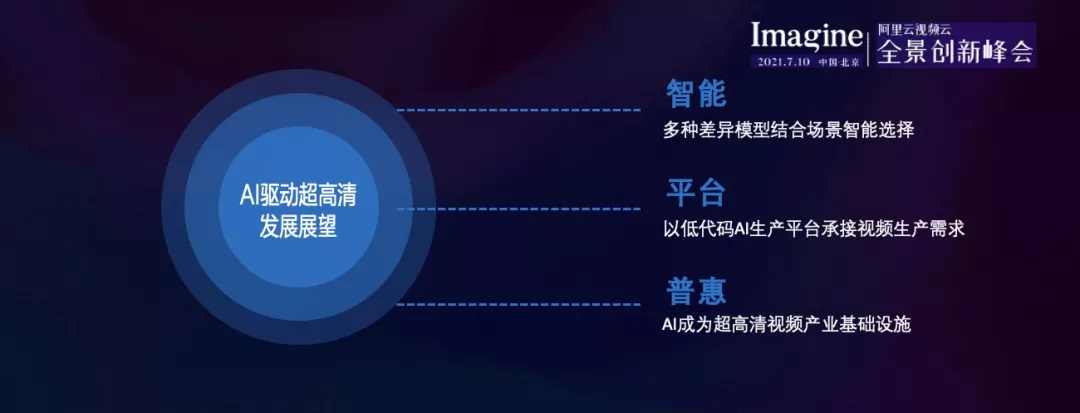

Finally, as AI drives HD forward, intelligence is the most basic requirement. DAMO Academy works on AI technology, so whether it can operate adaptively is very important. Adaptation sounds simple, but in practice AI technology has no so-called universal capability across scenarios.

For cartoons, news footage of people, and documentaries, we want a complete AI system that adapts to each, processing them through a general framework rather than a single model, so that AI can adapt to different scenarios and apply the best-quality algorithm for each.
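One way to realize "a system rather than a single model" is to route each input to a scene-specific enhancer. The sketch below is hypothetical throughout: the enhancer functions, the `ROUTES` table, and the metadata-based `classify_scene` are illustrative placeholders (a real system would use a learned scene classifier and real models).

```python
from typing import Callable, Dict


# Hypothetical per-scene enhancers; each would wrap a model tuned for that content.
def enhance_cartoon(frame):
    return ("cartoon-model", frame)      # would emphasize clean edges


def enhance_sports(frame):
    return ("sports-model", frame)       # would emphasize motion


def enhance_documentary(frame):
    return ("detail-model", frame)       # would emphasize fine detail


def enhance_generic(frame):
    return ("generic-model", frame)      # fallback for unrecognized scenes


ROUTES: Dict[str, Callable] = {
    "cartoon": enhance_cartoon,
    "sports": enhance_sports,
    "documentary": enhance_documentary,
}


def classify_scene(metadata: dict) -> str:
    """Placeholder classifier that reads a genre tag; not a real model."""
    return metadata.get("genre", "unknown")


def adaptive_enhance(frame, metadata: dict):
    """Route the frame to its scene's enhancer, falling back to a generic one."""
    handler = ROUTES.get(classify_scene(metadata), enhance_generic)
    return handler(frame)
```

Keeping routing separate from the models lets new scene types be added by registering another entry, without touching the existing enhancers.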

Self-assessment is another very important and interesting topic: judging whether a video enhancement is subjectively good is itself very difficult. DAMO Academy will also devote a lot of time to completing this part of its video enhancement technology.
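A common starting point for automated assessment is PSNR, a full-reference objective metric; it is shown here as a classic baseline, not as DAMO Academy's assessment method. Its limits illustrate the difficulty described above: it needs a clean reference, which restoration of old footage usually lacks, and it often disagrees with subjective judgment.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized grayscale
    images (lists of rows). Returns math.inf for identical images."""
    diffs = [(r - t) ** 2
             for ref_row, test_row in zip(reference, test)
             for r, t in zip(ref_row, test_row)]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)
```

For instance, an image that is off by 10 gray levels everywhere scores about 28.1 dB. A GAN output with sharper but slightly displaced texture can score lower than a blurrier one that viewers like less, which is why subjective assessment remains an open problem.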

Of course, all of this needs a system to carry it. Video Cloud is that infrastructure platform, making it possible to run many kinds of AI video enhancement tasks at large scale and with high efficiency.

AI is gradually moving in two directions. The first is toward consumers: ordinary people, serving everyone. The second is deep into every industry, improving efficiency and creating opportunities for innovation. Built on the video cloud, AI technology will drive the future of high-definition vision.

"Video Cloud Technology" is the audio and video technology public account worth following: every week it publishes practical technical articles from the front line of Alibaba Cloud and lets readers exchange ideas with first-class engineers in the audio and video field. Reply [Technology] in the account's backend to join the Alibaba Cloud Video Cloud Product Technology Exchange Group, discuss audio and video technology with industry leaders, and get the latest industry news.
