Abstract: relational database data into the lake, there are multiple scenarios, multiple tools, multiple timeliness requirements for entering the lake, etc. This article sorts out the relevant scenarios and the corresponding suggestions.

This article is shared from the HUAWEI cloud community " relational database data into the lake scenario and plan summary ", author: HisonHuang.

Relational database data into the lake, there are multiple scenarios, multiple tools, multiple timeliness requirements for entering the lake, etc. This article sorts out the relevant scenarios and the corresponding suggestions.

First introduce the next two tools for entering the lake: batch data migration tools (such as CDM) and real-time data access tools (such as CDL).

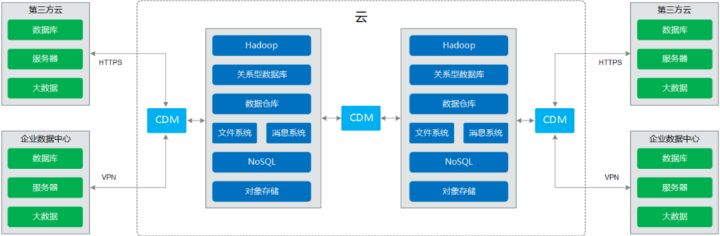

batch data migration tool can migrate data from relational database data to the data lake in a full volume at a time, full volume at a time + batch increment, and often timeliness of entering the lake (from data generation to data entry into the source layer of the data lake) More than 10 minutes or longer, such as about 15 minutes, depends on the time interval of batch incremental migration tasks. The following is the functional architecture diagram of the bulk data migration tool (CDM):

real-time data access tool (such as CDL) , can capture the binlog of relational database in real time, save it in the message queue such as Kafka, and support real-time analysis of binlog log to generate addition, deletion and modification commands to operate the data records of the data lake, and realize the connection of relational database Data enters the lake in real time, and the timeliness of entering the lake is at the second or minute level, generally less than 15 minutes. The following is the functional architecture diagram of the real-time data access tool (CDL):

Scenario 1: The relational database has historical data in stock, but no new data generated in real time

Program:

1. Lake entry tools: batch data migration tools (such as CDM)

2. How to enter the lake: the entire table is migrated, as shown below:

3. The process of entering the lake:

3.1. Use the lake access tool, configure the relational database as the source end, and configure the HDFS/Hive directory as the destination end;

3.2. Start the lake entry operation with the lake entry tool, relational database-"HDFS directory (data lake paste source layer).

Scenario 2: The relational database has no data initially, and the relational database is only open for data writing after the establishment of the lake entry process

Program:

Precondition: The binlog log switch is turned on for the relational database.

1. Lake access tools: real-time data access tools (such as CDL)

2. Way of entering the lake: real-time incremental data entering the lake, as shown below:

3. The process of entering the lake:

3.1. Use the lake tool to configure the relational database as the source and the directory of the Hudi file as the destination;

3.2. Start the operation of the lake access tool;

3.3. Real-time incremental data of relational database enters the lake;

3.3.1. Insert, modify and delete data records to relational database;

3.3.2. The data change log of the relational database is captured to the lake tool in real time;

3.3.3. The lake access tool parses the log and calls the Hudi interface to insert, modify, and delete data records to the Hudi file directory (data lake paste source layer).

Scenario 3: The relational database has historical data in stock, and new data is generated in real time, and the data record has a time identification field

plan 1:

1. Lake entry tools: batch data migration tools (such as CDM)

2. The way to enter the lake: the first historical data entry into the lake + continuous batch incremental data entry into the lake

3. Time to enter the lake: near real-time (depending on the batch scheduling cycle)

4. The process of entering the lake:

4.1. It is assumed that the relational database generates a stable amount of new data in real time;

4.2. Use the lake access tool, configure the relational database as the source end, and configure the HDFS/Hive directory as the destination end;

4.3. Use the tool to enter the lake to start the operation of the stock data entering the lake, where the time identification field of the Where filter condition is from the initial time to the current time;

Note: The operation of stock data into the lake takes a long time, depending on factors such as the amount of historical data in the stock, network bandwidth, and the throughput of the operation into the lake. During this period, the relational database continuously accepts new writes, accumulating a larger amount of new data.

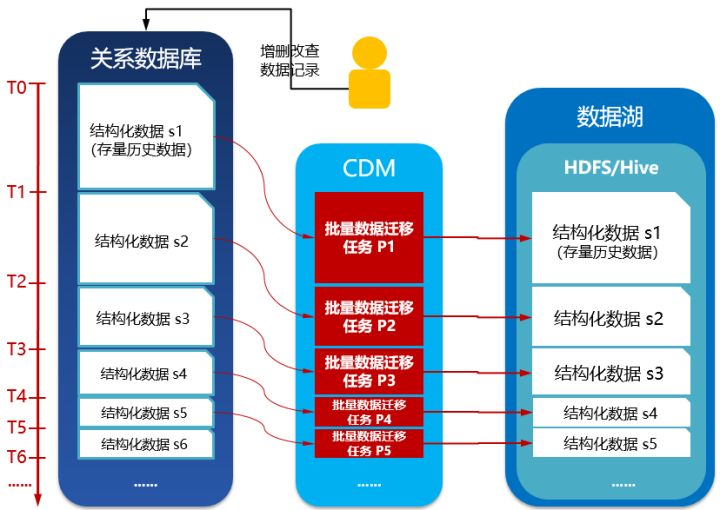

4.4. Continuous batches start incremental data entry operations, where the time identification field of the Where filter condition is from the deadline of the previous batch of operations to the current time; the amount of data migrated in each batch is gradually reduced, and the time between batches The interval gradually decreases and tends to stabilize, and the computing resources occupied by each batch of operations also gradually decreases and tends to stabilize. The schematic is as follows:

Scenario 2:

Precondition: The binlog log switch is turned on for the relational database.

1. Tools for entering the lake: batch data migration tools (such as CDM) + real-time data access tools (such as CDL)

2. The way to enter the lake: the first historical stock data into the lake + continuous batch incremental data into the lake + real-time incremental data into the lake

3. Time limit for entering the lake: the first two stages are not real-time, and the last stage is real-time

4. The process of entering the lake:

4.1. It is assumed that the relational database generates a stable amount of new data in real time;

4.2. Use the batch data migration tool, configure the relational database as the source end, and configure the HDFS/Hive directory as the destination end (Table A, the file format may be CSV);

4.3. Use the batch data migration tool to start the operation of storing data into the lake, where the time identification field of the Where filter condition is from the initial time to the current time;

Note: The operation of stock data into the lake takes a long time, depending on factors such as the amount of historical data in the stock, network bandwidth, and the throughput of the operation into the lake. During this period, the relational database continuously accepts new writes, accumulating a larger amount of new data.

4.4. Continuous batches start incremental data entry operations, where the time identification field of the Where filter condition is from the deadline of the previous batch of operations to the current time; the amount of data migrated in each batch is gradually reduced, and the time between batches The interval gradually decreases and tends to stabilize, and the computing resources occupied by each batch of operations also gradually decreases and tends to stabilize;

4.5. At a certain point in time, Ts suspends data writing to the relational database to ensure that all data before Ts is migrated from batch jobs to the HDFS directory (data lake paste source layer);

4.6. Stop batch operations of the batch data migration tool.

4.7. Use the real-time data access tool, configure the relational database as the source end, and configure the Hudi file directory as the destination end (Table B, the file format is Hudi);

4.8. Start the operation of real-time data access tools;

4.9. At this time, Te relational database is open for data writing;

4.10. Real-time incremental data from relational databases enter the lake;

4.10.1. The data change log of the relational database is captured in real time to the real-time data access tool;

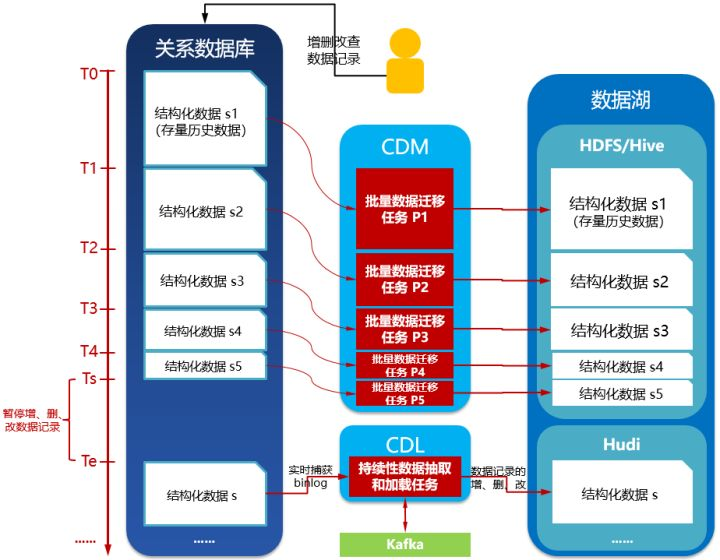

4.10.2. The real-time data access tool parses the log and calls the Hudi interface to insert, modify, and delete data records to the Hudi file directory (data lake paste source layer). The schematic is as follows:

Scenario 4: The relational database has historical data in stock, and new data is generated in real time, and the data record has no time identification field

Program:

Precondition: The binlog log switch is turned on for the relational database.

1. Tools for entering the lake: batch data migration tools (such as CDM) + real-time data access tools (such as CDL)

2. The way to enter the lake: migration of the entire table + real-time incremental data entry into the lake

3. Time limit for entering the lake: the final stage enters real-time

4. The process of entering the lake:

4.1. Suspend data writing in relational database;

4.2. Use the batch data migration tool, configure the relational database as the source end, and configure the HDFS/Hive directory as the destination end (Table A, the file format may be CSV);

4.3. Use the batch data migration tool to start the lake entry operation, the relational database-"HDFS directory (data lake paste source layer);

4.4. After the above stock data migration is completed, stop the batch operation of the batch data migration tool;

4.5. Use the real-time data access tool, configure the relational database as the source end, and configure the Hudi file directory as the destination end (Table B, the file format is Hudi);

4.6. Start the operation of real-time data access tools;

4.7. At this time, Te relational database is open for data writing;

4.8. Real-time incremental data of relational database enters the lake;

4.8.1. The data change log of the relational database is captured in real time to the real-time data access tool;

4.8.2. The real-time data access tool parses the log, and calls the Hudi interface to insert, modify, and delete data records to the Hudi file directory (data lake paste source layer). The schematic is as follows:

Click to follow, and learn about Huawei Cloud's fresh technology for the first time~

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。