On July 31st, Alibaba Cloud Video Cloud was invited to the Global Open Source Technology Summit (GOTC 2021), jointly organized by the Open Atom Open Source Foundation, the Linux Foundation Asia Pacific, and Open Source China. In the conference's audio and video performance optimization session, it shared practical experience with performance acceleration in open-source FFmpeg and with building and optimizing an end-cloud integrated media system.

As is well known, FFmpeg, the Swiss Army knife of open-source audio and video processing, is popular because it is open source, free, powerful, and easy to use. Because audio and video processing is computationally expensive, performance acceleration is a perennial theme of FFmpeg development. Alibaba Cloud Video Cloud's media processing system draws extensively on FFmpeg's performance acceleration experience. At the same time, it designs and optimizes an end-cloud integrated media architecture around its own products to deliver high-performance, high-quality, low-latency real-time media services with end-cloud collaboration.

Li Zhong, senior technical expert at Alibaba Cloud Intelligence Video Cloud, is responsible for Alibaba Cloud Video Cloud's RTC cloud media processing services and for end-cloud integrated media processing performance optimization. He is a maintainer of the official FFmpeg code and a member of its technical committee, and has contributed to several open-source audio and video projects.

The topic of this talk is "From FFmpeg Performance Acceleration to End-Cloud Integrated Media System Optimization", which covers three areas:

1. Common performance acceleration methods in FFmpeg

2. Cloud media processing system

3. End + cloud collaborative media processing system

Common performance acceleration methods in FFmpeg

One of the main challenges audio and video developers face today is the high demand for computing power. This goes beyond optimizing single-task algorithms: it spans hardware, software, scheduling, and the business layer, across many different business scenarios. The complex landscape of devices and cloud, diverse hardware, and diverse networks requires developers to design and optimize at the level of system architecture.

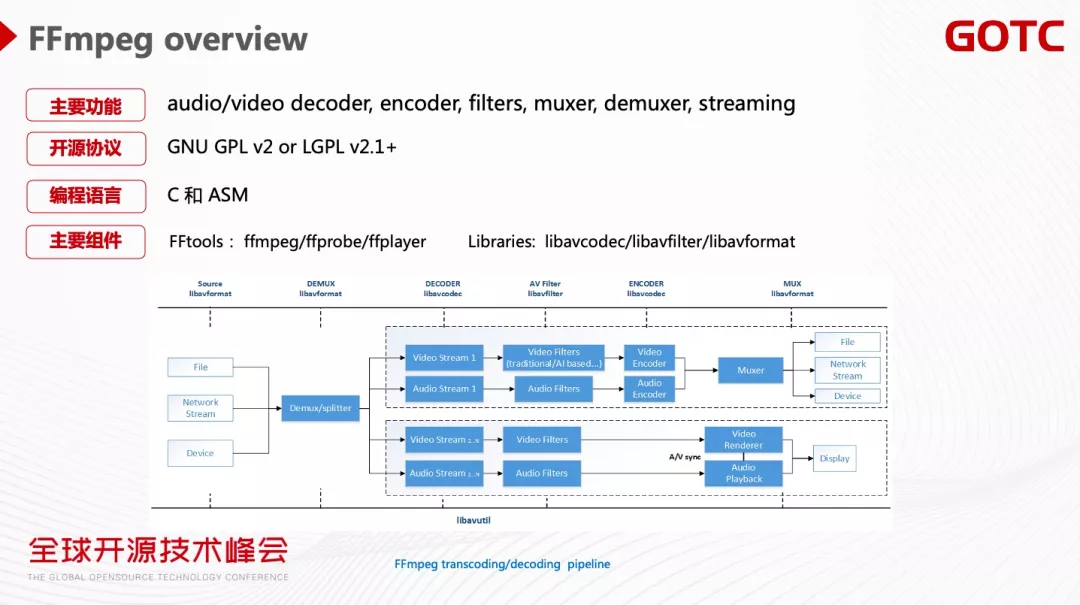

FFmpeg is very powerful software that covers audio and video decoding and encoding, a wide range of audio and video filters, and support for many protocols. As open-source software, FFmpeg is mainly licensed under the GPL or LGPL and is written in C and assembly. It also ships ready-to-use command-line tools, such as ffmpeg for transcoding, ffprobe for audio and video analysis, and ffplay for playback. Its core libraries include libavcodec for encoding and decoding, libavfilter for audio and video filtering, and libavformat for container formats and protocols.

In the FFmpeg development community, audio and video performance optimization is a perennial theme, and its open-source code contains many classic optimization methods. These fall into three main groups: general acceleration, CPU instruction acceleration, and GPU hardware acceleration.

General acceleration

General acceleration consists mainly of algorithm optimization, IO read/write optimization, and multithreading optimization. The goal of algorithm optimization is to improve performance without increasing CPU usage. The most typical examples are the fast search algorithms in codecs, which greatly increase encoding speed at the cost of a small loss in accuracy. Pre-processing filter algorithms use similar techniques, and algorithms can also be combined to eliminate redundant computation.

The figure below shows typical noise-reduction (smoothing) and sharpening convolution templates, each a 3×3 matrix convolution. The sharpening template is similar to the smoothing template: when denoising and sharpening are both needed, the sharpening result can be obtained with a subtraction on top of the smoothing computation, which eliminates redundant calculation and improves performance. Algorithm optimization has its limits, of course: fast codec algorithms lose some accuracy, and some optimizations trade space complexity for time complexity.
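As a minimal sketch of the shared-computation idea, assume a 3×3 mean filter for smoothing and the common sharpening kernel with 9 at the center and -1 elsewhere (the exact templates in the talk's figure are not reproduced here). That sharpening kernel equals 10 times the center pixel minus the 3×3 window sum, so one window sum serves both outputs. Function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Clamp an int to the 0..255 range of an 8-bit pixel. */
static uint8_t clip_u8(int v) { return (uint8_t)(v < 0 ? 0 : v > 255 ? 255 : v); }

/* Produces a 3x3 mean-smoothed plane and a sharpened plane in one pass.
 * Assumed sharpening kernel:  -1 -1 -1 / -1 9 -1 / -1 -1 -1,
 * which equals 10*center - (3x3 window sum), so the window sum S computed
 * for smoothing is reused instead of running a second convolution.
 * Border pixels are copied unchanged to keep the sketch short. */
static void smooth_and_sharpen(const uint8_t *src, uint8_t *smooth, uint8_t *sharp,
                               int w, int h, int stride)
{
    assert(w > 0 && h > 0 && stride >= w);
    for (int y = 0; y < h; y++) {
        for (int x = 0; x < w; x++) {
            const int i = y * stride + x;
            const int c = src[i];
            if (x == 0 || y == 0 || x == w - 1 || y == h - 1) {
                smooth[i] = sharp[i] = (uint8_t)c;   /* border: copy through */
                continue;
            }
            int s = 0;                               /* 3x3 window sum, computed once */
            for (int dy = -1; dy <= 1; dy++)
                for (int dx = -1; dx <= 1; dx++)
                    s += src[i + dy * stride + dx];
            smooth[i] = (uint8_t)((s + 4) / 9);      /* mean filter, rounded */
            sharp[i]  = clip_u8(10 * c - s);         /* = 9*c - (8 neighbours) */
        }
    }
}
```

Compared with two independent convolutions, this saves the nine multiply-adds of the second kernel per pixel.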

The second method is IO read/write optimization. A common approach is to exploit CPU prefetching to reduce cache misses. The figure above shows two ways of reading and writing that produce the same result: traversing by rows is faster than traversing by columns, mainly because the CPU can prefetch when memory is accessed row by row. Adjacent pixels in a row share cache lines, so by the time the next pixel is needed the CPU has already fetched the rest of the row, which greatly speeds up memory access. In addition, memory copies should be minimized, because YUV frames in video processing are large and copying every frame is costly.
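The two traversal orders can be sketched as follows (function names are hypothetical). Both compute the same sum over an 8-bit plane; only the memory access pattern differs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Row-major: the inner loop walks consecutive bytes, so each cache line the
 * CPU fetches (and the lines its prefetcher brings in) serves many pixels. */
static uint64_t sum_rows(const uint8_t *img, int w, int h, ptrdiff_t stride)
{
    assert(w >= 0 && h >= 0 && stride >= w);
    uint64_t s = 0;
    for (int y = 0; y < h; y++)
        for (int x = 0; x < w; x++)
            s += img[y * stride + x];
    return s;
}

/* Column-major: the inner loop jumps `stride` bytes per step, so on wide
 * frames nearly every access touches a different cache line. */
static uint64_t sum_cols(const uint8_t *img, int w, int h, ptrdiff_t stride)
{
    assert(w >= 0 && h >= 0 && stride >= w);
    uint64_t s = 0;
    for (int x = 0; x < w; x++)
        for (int y = 0; y < h; y++)
            s += img[y * stride + x];
    return s;
}
```

Timing both on a large frame (for example 1920×1080) typically shows the row-major version ahead, though the size of the gap depends on the CPU and its cache sizes.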

The third general method, multithreading, uses multiple CPU cores to process work in parallel and can improve performance substantially. The chart in the lower-left corner of the figure above shows performance improving by up to 8x as the number of threads increases. This raises a question: since multithreaded acceleration is so common, are more threads always better?

The answer is no.

First, the limited number of CPU cores and the overhead of thread waiting and scheduling create performance bottlenecks. In the lower-left chart, the speedup with 10 threads is very close to that with 11 threads. Multithreading therefore has diminishing marginal returns, and it also increases latency and memory consumption (especially the commonly used frame-level multithreading).
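The diminishing returns can be illustrated with Amdahl's law, a standard model that the talk itself does not cite. If a fraction $p$ of the work parallelizes perfectly across $N$ threads, the achievable speedup is:

$$
S(N) = \frac{1}{(1 - p) + \dfrac{p}{N}}
$$

With an assumed $p = 0.9$:

$$
S(10) = \frac{1}{0.1 + 0.09} \approx 5.26, \qquad
S(11) = \frac{1}{0.1 + \frac{0.9}{11}} = 5.5, \qquad
\lim_{N \to \infty} S(N) = \frac{1}{1 - p} = 10
$$

Each additional thread buys less, and the model ignores synchronization and scheduling overhead, which in practice flattens the curve even sooner.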

Second, inter-frame (frame-level) multithreading requires a frame buffer pool so that multiple frames can be processed in parallel, which means buffering many frames. For latency-sensitive media processing such as low-latency live streaming and RTC, this has a significant negative effect. FFmpeg therefore also supports intra-frame (slice-level) multithreading, which splits a frame into multiple slices and processes them in parallel, avoiding the buffering delay between frames.
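A minimal sketch of slice-level threading with POSIX threads, assuming a single 8-bit plane and using pixel inversion as a stand-in filter (all names are hypothetical). Every slice belongs to the same frame, so nothing is queued across frames. The row split `h * i / n` is the same start/end formula commonly seen in FFmpeg's slice-threaded filters; for FFmpeg's own codecs, the `thread_type` option (`frame` or `slice`) chooses between the two modes.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>

/* Hypothetical job description: one thread processes rows [start, end). */
typedef struct {
    uint8_t *plane;
    int width, stride;
    int start, end;
} SliceJob;

/* Worker: invert the pixels of its rows (stand-in for any per-pixel filter). */
static void *invert_slice(void *arg)
{
    SliceJob *j = arg;
    for (int y = j->start; y < j->end; y++)
        for (int x = 0; x < j->width; x++)
            j->plane[y * j->stride + x] = (uint8_t)(255 - j->plane[y * j->stride + x]);
    return NULL;
}

#define MAX_SLICES 16

/* Splits one frame into nb_slices horizontal slices and processes them in
 * parallel, then waits for all of them. Returns 0 on success, -1 if a
 * thread could not be created (already started threads are joined). */
static int invert_frame_sliced(uint8_t *plane, int w, int h, int stride, int nb_slices)
{
    pthread_t tid[MAX_SLICES];
    SliceJob job[MAX_SLICES];
    assert(nb_slices >= 1 && nb_slices <= MAX_SLICES);
    for (int i = 0; i < nb_slices; i++) {
        job[i] = (SliceJob){ plane, w, stride,
                             h * i / nb_slices, h * (i + 1) / nb_slices };
        if (pthread_create(&tid[i], NULL, invert_slice, &job[i]) != 0) {
            for (int k = 0; k < i; k++)
                pthread_join(tid[k], NULL);
            return -1;
        }
    }
    for (int i = 0; i < nb_slices; i++)
        pthread_join(tid[i], NULL);
    return 0;
}
```

Creating threads per frame is only for brevity; a real implementation would keep a persistent worker pool. Build with `-pthread`.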

Third, as the number of threads grows, the cost of thread synchronization and scheduling also grows. In the lower-right figure, which shows an FFmpeg filter being accelerated, CPU cost rises markedly with the thread count, and the upward bend at the end of the curve shows this overhead growing faster.

CPU instruction acceleration

CPU instruction acceleration means SIMD (Single Instruction, Multiple Data) acceleration. With traditional general-purpose registers and instructions, one instruction processes one element; a single SIMD instruction processes multiple elements of an array at once, which yields significant speedups.

All mainstream CPU architectures have SIMD instruction sets. On x86 these include MMX, SSE, AVX2, and AVX-512. A single AVX-512 instruction operates on 512 bits, so the speedup can be substantial.

SIMD code in FFmpeg is written as inline assembly or handwritten (standalone) assembly. The FFmpeg community does not accept intrinsics, because intrinsics depend on the compiler version, and different compilers generate different code with inconsistent speedups.
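For readability, the sketch below uses SSE2 intrinsics, which FFmpeg itself would not accept for the reasons just given; the point is only to show one instruction (`_mm_adds_epu8`, the `PADDUSB` instruction) performing 16 saturating byte additions that the scalar loop does one at a time. Function names are hypothetical, and the code falls back to the scalar path on targets without SSE2.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#if defined(__SSE2__)
#include <emmintrin.h>
#endif

/* Scalar reference: one saturating addition per loop iteration. */
static void add_sat_scalar(uint8_t *dst, const uint8_t *a, const uint8_t *b, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        unsigned s = (unsigned)a[i] + b[i];
        dst[i] = s > 255 ? 255 : (uint8_t)s;
    }
}

/* SIMD version: each _mm_adds_epu8 adds 16 pairs of unsigned bytes with
 * saturation. The tail (n not a multiple of 16) uses the scalar loop. */
static void add_sat_simd(uint8_t *dst, const uint8_t *a, const uint8_t *b, size_t n)
{
    assert(n == 0 || (dst && a && b));
    size_t i = 0;
#if defined(__SSE2__)
    for (; i + 16 <= n; i += 16) {
        __m128i va = _mm_loadu_si128((const __m128i *)(a + i));  /* unaligned load */
        __m128i vb = _mm_loadu_si128((const __m128i *)(b + i));
        _mm_storeu_si128((__m128i *)(dst + i), _mm_adds_epu8(va, vb));
    }
#endif
    add_sat_scalar(dst + i, a + i, b + i, n - i);
}
```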

Although the SIMD instruction has a good acceleration effect, it also has certain limitations.

First, many algorithms cannot be parallelized and therefore cannot benefit from SIMD instructions.

Second, programming is harder. Assembly is difficult in itself, and SIMD instructions impose special requirements such as memory alignment: AVX-512 aligned load and store instructions require memory aligned to 64 bytes. Misaligned data may cost performance or even crash the program.
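A minimal sketch of 64-byte aligned frame allocation in plain C11 (helper names are hypothetical). Padding each row's stride to a multiple of 64 keeps every row start aligned, not just the first row. Inside FFmpeg, `av_malloc()` already returns memory aligned suitably for the SIMD extensions the build supports.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define SIMD_ALIGN 64  /* AVX-512 register width in bytes */

/* Round a row width up to a multiple of SIMD_ALIGN so every row start stays
 * 64-byte aligned when the base pointer is. */
static size_t aligned_stride(size_t width)
{
    return (width + SIMD_ALIGN - 1) & ~(size_t)(SIMD_ALIGN - 1);
}

/* Allocate a plane whose base address is 64-byte aligned. C11 aligned_alloc
 * requires the size to be a multiple of the alignment, which holds because
 * the stride is a multiple of 64. Release with free(). */
static uint8_t *alloc_plane(size_t width, size_t height, size_t *stride_out)
{
    assert(width > 0 && height > 0 && stride_out != NULL);
    size_t stride = aligned_stride(width);
    uint8_t *p = aligned_alloc(SIMD_ALIGN, stride * height);
    if (p)
        *stride_out = stride;
    return p;
}
```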

CPU vendors are competing on instruction sets, and supported bit widths keep growing, from SSE (128-bit) to AVX2 (256-bit) to AVX-512 (512-bit). Is wider always better? The figure above shows the speedup of x265 encoding with AVX-512 relative to AVX2. Although AVX-512 is twice as wide as AVX2, the gain is usually far less than 2x, often under 10%, and in some cases AVX-512 is even slower than AVX2.

Why?

First, the data available for a single operation may not fill 512 bits; it may be only 128 or 256 bits.

Second, many complex computation steps (for example, in encoders) cannot be parallelized with SIMD instructions.

Third, the high power consumption of AVX-512 causes the CPU to lower its clock frequency, reducing overall processing speed. In the x265 ultrafast encoding shown above, AVX-512 encoding is slower than AVX2. (For details, see: https://networkbuilders.intel.com/docs/accelerating-x265-the-hevc-encoder-with-intel-advanced-vector-extensions-512.pdf)

Hardware acceleration

FFmpeg's mainstream form of hardware acceleration is GPU acceleration. Hardware acceleration interfaces fall into two groups. The first are interfaces provided by hardware vendors: Intel provides QSV and VAAPI, NVIDIA provides NVENC, CUVID, NVDEC, and VDPAU, and AMD provides AMF and VAAPI. The second are interfaces and solutions provided by OS vendors, such as DXVA2 on Windows, MediaCodec on Android, and VideoToolbox on Apple platforms.

Hardware acceleration can significantly improve the performance of media processing, but it also brings some problems.

First, hardware encoding quality is limited by hardware design and cost, and is often worse than software encoding. However, hardware encoding has a clear performance advantage, and that performance can be traded for quality. In the example in the figure below, the hardware encoder's motion search window is relatively small, which lowers coding quality. The solution is HME (hierarchical motion estimation): when the search window is small, first downscale the picture to a much smaller one and run the motion search there, then scale back up and refine the search level by level. This significantly extends the effective motion search range, finds better-matching blocks, and improves coding quality.
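A simplified two-level HME sketch, assuming an 8-bit luma plane with stride equal to width, SAD as the matching cost, and a 2:1 averaging downscale (real encoders use more levels and better filters; all names are hypothetical). A ±r search on the half-size picture covers about ±2r at full resolution, and a ±1 refinement recovers the full-resolution vector.

```c
#include <assert.h>
#include <limits.h>
#include <stdint.h>
#include <stdlib.h>

typedef struct { int x, y; } MV;

/* SAD between the bs x bs block at (bx,by) in cur and the block displaced by mv in ref. */
static int sad(const uint8_t *cur, const uint8_t *ref, int stride,
               int bx, int by, int bs, MV mv)
{
    int s = 0;
    for (int y = 0; y < bs; y++)
        for (int x = 0; x < bs; x++)
            s += abs(cur[(by + y) * stride + bx + x] -
                     ref[(by + y + mv.y) * stride + bx + x + mv.x]);
    return s;
}

/* Exhaustive search over center +/- r, skipping candidates outside the w x h picture. */
static MV full_search(const uint8_t *cur, const uint8_t *ref, int w, int h,
                      int bx, int by, int bs, MV center, int r)
{
    MV best = center;
    int best_sad = INT_MAX;
    for (int dy = -r; dy <= r; dy++)
        for (int dx = -r; dx <= r; dx++) {
            MV mv = { center.x + dx, center.y + dy };
            if (bx + mv.x < 0 || by + mv.y < 0 || bx + mv.x + bs > w || by + mv.y + bs > h)
                continue;
            int s = sad(cur, ref, w, bx, by, bs, mv);
            if (s < best_sad) { best_sad = s; best = mv; }
        }
    return best;
}

/* 2:1 downscale by averaging each 2x2 block (w and h assumed even). */
static void downscale2(const uint8_t *src, uint8_t *dst, int w, int h)
{
    for (int y = 0; y < h / 2; y++)
        for (int x = 0; x < w / 2; x++) {
            const uint8_t *p = src + 2 * y * w + 2 * x;
            dst[y * (w / 2) + x] = (uint8_t)((p[0] + p[1] + p[w] + p[w + 1] + 2) / 4);
        }
}

#define MAX_DIM 256

/* Coarse search +/- r on the half-size pictures, then refine +/- 1 at full
 * resolution around the doubled coarse vector. Static buffers make this
 * non-reentrant; a real encoder would pass in its own pyramid buffers. */
static MV hme_search(const uint8_t *cur, const uint8_t *ref, int w, int h,
                     int bx, int by, int bs, int r)
{
    static uint8_t cur_s[MAX_DIM * MAX_DIM / 4], ref_s[MAX_DIM * MAX_DIM / 4];
    assert(w <= MAX_DIM && h <= MAX_DIM && w % 2 == 0 && h % 2 == 0 && bs % 2 == 0);
    downscale2(cur, cur_s, w, h);
    downscale2(ref, ref_s, w, h);
    MV zero = { 0, 0 };
    MV coarse = full_search(cur_s, ref_s, w / 2, h / 2, bx / 2, by / 2, bs / 2, zero, r);
    MV center = { coarse.x * 2, coarse.y * 2 };
    return full_search(cur, ref, w, h, bx, by, bs, center, 1);
}
```

With r = 3, a direct full-resolution search cannot reach a true motion of (6, -4), while the two-level search can, at a similar number of SAD evaluations.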

Second, memory copies between the CPU and GPU in hardware-accelerated pipelines degrade performance.

Data exchange between the CPU and GPU is not a simple data move; the pixel format must also be converted. For example, the CPU side typically uses the linear I420 format, while the GPU, which is good at matrix operations, uses the tiled NV12 format.
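The linear part of that conversion can be sketched as follows, assuming even dimensions and tightly packed planes (the function name is hypothetical, and the GPU tiling step is deliberately left out). Even this linear repacking touches every byte of every frame, which is why the conversion costs performance at high resolutions.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Converts a linear I420 frame (Y plane, then separate quarter-size U and V
 * planes) into linear NV12 (the same Y plane, then one plane of interleaved
 * U,V pairs). The tiled layout used by some GPUs is not modeled here. */
static void i420_to_nv12(const uint8_t *y, const uint8_t *u, const uint8_t *v,
                         uint8_t *dst_y, uint8_t *dst_uv, int w, int h)
{
    assert(w > 0 && h > 0 && w % 2 == 0 && h % 2 == 0);
    memcpy(dst_y, y, (size_t)w * h);                 /* luma is copied as-is */
    for (int i = 0; i < (w / 2) * (h / 2); i++) {   /* chroma is interleaved */
        dst_uv[2 * i]     = u[i];
        dst_uv[2 * i + 1] = v[i];
    }
}
```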

These memory format conversions cause significant performance loss. Building a pure hardware pipeline avoids CPU/GPU memory exchange altogether. When the CPU and GPU must exchange data, fast memory copy can be used, with the GPU performing the copy to speed up the process.

The figure below summarizes performance optimization. Beyond the methods above, media processing on the client has some special characteristics. For example, mobile phone CPUs use a big/little core architecture: if a thread is scheduled on a little core, its performance is significantly worse than on a big core, which makes performance unstable.

In addition, some algorithms cannot run smoothly on certain device models no matter how they are optimized. In that case, optimization must happen at the business-strategy level, for example by maintaining allowlists and denylists so that the algorithm is not enabled on devices outside the supported list.
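A minimal sketch of such a policy check; the device model strings and list contents are hypothetical placeholders, not real test results. Here the algorithm runs only on validated models, and the denylist overrides the allowlist.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Hypothetical models known to drop frames with the algorithm enabled. */
static const char *const kDenylist[] = { "model-a100", "model-b200" };

/* Hypothetical models on which the algorithm has been validated. */
static const char *const kAllowlist[] = { "model-x10", "model-y20", "model-b200" };

static bool in_list(const char *model, const char *const *list, size_t n)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(model, list[i]) == 0)
            return true;
    return false;
}

/* Enable only on allowlisted models, unless the model is also denylisted. */
static bool algorithm_enabled(const char *model)
{
    assert(model != NULL);
    if (in_list(model, kDenylist, sizeof kDenylist / sizeof kDenylist[0]))
        return false;
    return in_list(model, kAllowlist, sizeof kAllowlist / sizeof kAllowlist[0]);
}
```

In practice such lists are usually delivered as remote configuration so they can change without an SDK release.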

Cloud media processing system optimization

Media processing faces two major challenges: cost optimization in the cloud, and device adaptation and compatibility on the client. The figure below shows a typical cloud media processing system, consisting of a stand-alone (single-machine) layer, a cluster scheduling layer, and a business layer.

The stand-alone layer includes the FFmpeg pipeline processing framework, codecs, and the hardware layer. Taking the cloud transcoding system as an example, its core technical metrics are image quality, processing speed, latency, and cost. For image quality, Alibaba Cloud Video Cloud has developed its own Narrowband HD technology and S265 encoder, which significantly improve encoded image quality. For processing speed and latency, we draw extensively on FFmpeg's acceleration methods, such as SIMD instructions, multithreading, and heterogeneous computing. Cost is a more complex, system-level problem spanning the scheduling, stand-alone, and business layers: it requires fast, flexible scaling out and in, accurate profiling of stand-alone resources, and lower per-task computing cost.

Cloud cost optimization

Cloud cost optimization revolves around three curves: the actual resource consumption of a single task, the estimated resource allocation for a single task, and the total resource pool. The optimization process faces four practical problems:

First, video cloud businesses are becoming increasingly diverse, including video on demand, live streaming, RTC, AI Editorial Department, and cloud editing. The challenge this diversity brings is how multiple businesses can share one resource pool in mixed deployment.

Second, large-granularity tasks are increasing. A few years ago mainstream video was 480p; now 720p and 1080p tasks dominate, and 4K, 8K, VR, and other media processing will foreseeably keep growing. The resulting challenge is an ever-greater demand for single-machine performance.

Third, the scheduling layer must estimate each task's resource consumption, but actual consumption on a machine depends on many factors: the complexity of the video content, algorithm parameters, and multi-process switching all affect it.

Fourth, encoding involves more and more pre-processing, and a single transcoding task often needs multi-bitrate or multi-resolution output. Pre-processing steps (image quality enhancement, ROI detection, frame rate up-conversion, super-resolution, etc.) greatly increase processing cost.

Let's look at how to optimize these three curves as a whole.

Actual task resource consumption is reduced mainly through per-task performance optimization, algorithm performance optimization, and pipeline architecture optimization.

The core goal of resource allocation is to keep the yellow curve in the figure above close to the black curve and reduce wasted allocation. When the allocation is insufficient, the algorithm can be automatically downgraded to avoid stalls in online tasks, for example by switching the encoder preset from medium to fast.
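A minimal sketch of that downgrade logic, stepping through x264's preset names toward faster presets until the estimated cost fits the allocation. The relative cost table and function name are hypothetical placeholders, not measured values or an Alibaba Cloud API.

```c
#include <assert.h>
#include <stddef.h>

/* x264 preset names, fastest first (placebo omitted). */
static const char *const kPresets[] = {
    "ultrafast", "superfast", "veryfast", "faster", "fast",
    "medium", "slow", "slower", "veryslow"
};

/* Hypothetical CPU cost of each preset relative to "medium" (= 1.0).
 * Illustrative placeholders only, not measurements. */
static const double kRelCost[] = { 0.15, 0.2, 0.3, 0.45, 0.7, 1.0, 1.6, 2.5, 4.0 };

_Static_assert(sizeof kRelCost / sizeof kRelCost[0] == sizeof kPresets / sizeof kPresets[0],
               "one cost entry per preset");

/* Starting from the requested preset index, step toward faster presets until
 * the estimated cost (relative cost x cores needed at "medium") fits within
 * the allocated cores. Returns the chosen index; 0 (ultrafast) if none fits. */
static size_t choose_preset(size_t requested, double medium_cost_cores, double allocated_cores)
{
    assert(requested < sizeof kPresets / sizeof kPresets[0]);
    size_t i = requested;
    while (i > 0 && kRelCost[i] * medium_cost_cores > allocated_cores)
        i--;
    return i;
}
```

For example, a task that needs 4 cores at medium but is allocated only 3 would be moved to fast under this table.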

For the total resource pool, note first that the yellow curve has peaks and troughs. When on-demand tasks are in a trough, live streaming tasks can be scheduled into the pool, so more tasks run without raising the pool's overall peak. Second, the total pool must be quickly elastic, releasing resources within a given time window to reduce pool consumption; this is another core cost consideration for scheduling.

Let's talk about some optimization methods.

CPU model optimization

The main goals of CPU model optimization are to increase the throughput of a single CPU and reduce CPU fragmentation. The figure above shows the advantages of many-core CPUs. However, many-core CPUs may also involve memory access across multiple NUMA nodes, which degrades performance.

Accurate profiling of stand-alone resources

The goal of accurate stand-alone resource profiling is to know precisely how many resources each task needs. It is a systematic toolchain that requires image quality evaluation tools, computing resource statistics tools, and a diverse library of complex, multi-scene video sets. It must also support iterative feedback across computing resources, to correct cost estimates and guide adaptive adjustment of algorithms.

1-N architecture optimization

A transcoding task may output several resolutions and bitrates. The traditional approach starts N independent one-to-one processes. This architecture has obvious problems, such as redundant decoding and redundant pre-encoding processing. One optimization is to merge these tasks, moving from N one-to-one transcodes to a single one-to-N transcode. Decoding and pre-encoding processing then need to be done only once, which reduces cost.
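The saving can be sketched with a simple cost model in which decoding and pre-processing are paid once per process, and each output adds its own scaling and encoding cost. The structure and units are hypothetical; with N outputs, 1-N transcoding saves (N - 1) × (decode + pre-processing).

```c
#include <assert.h>

/* Hypothetical shared costs, in arbitrary CPU units. */
typedef struct {
    double decode;      /* decoding the source once */
    double preprocess;  /* pre-encoding processing done before scaling */
} SharedCost;

/* N independent 1-to-1 processes: each repeats decode and pre-processing.
 * encode[i] is the per-output scaling + encoding cost. */
static double cost_n_to_n(SharedCost c, const double *encode, int n)
{
    assert(n >= 1);
    double total = 0.0;
    for (int i = 0; i < n; i++)
        total += c.decode + c.preprocess + encode[i];
    return total;
}

/* One 1-to-N process: decode and pre-process once, then fan out to N encoders. */
static double cost_1_to_n(SharedCost c, const double *encode, int n)
{
    assert(n >= 1);
    double total = c.decode + c.preprocess;
    for (int i = 0; i < n; i++)
        total += encode[i];
    return total;
}
```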

1-N transcoding also brings new challenges. First, FFmpeg's transcoding tool supports 1-N transcoding, but each module is processed serially, so a single 1-N task runs slower than a single 1-1 task. Second, scheduling becomes harder: the resource granularity of a single task grows, and the resources it needs become harder to estimate. Third, algorithm results may differ: for some videos, pre-processing originally ran after scaling, whereas in the 1-N architecture it is moved before scaling. This change in processing order can change an algorithm's output (usually the difference is small, because processing before scaling incurs no image quality loss, and processing before scaling is actually preferable).

End + cloud collaborative media processing system

The advantage of on-device media processing is that the computing power available on the phone can be used to reduce costs. Ideally, all on-device computing power is fully used, every algorithm is well optimized and adapted to each device, and processing runs at zero cloud cost on every client.

But reality falls well short of the ideal, and there are four major practical problems:

First, device-side adaptation is difficult, requiring adaptation to a large number of OS versions and hardware models.

Second, algorithm deployment is difficult. In practice it is impossible to optimize every algorithm for every device, so device-side performance bottlenecks make some algorithms hard to ship.

Third, experience optimization is difficult. Customers use different SDKs, and Alibaba Cloud's own SDK also has multiple versions. This SDK fragmentation makes some solutions hard to roll out. For example, some H.264 encoders are non-standard, and rolling out H.265 encoding and decoding has also been challenging because some devices do not support H.265.

Fourth, reaching users is difficult: customers take a long time to upgrade or replace their SDKs.

Given these realities, we propose a cloud-end collaborative media processing solution. The main idea is to deliver a better user experience through cloud processing plus device-side rendering.

The main types of cloud-end collaborative media processing are transcoding and preview. Transcoding is a one-way data flow. Preview requires pushing streams to the cloud first, applying effects there, and pulling the result back so the streamer can check whether the effect meets expectations; finally, the stream is distributed via CDN to the audience.

This approach also has challenges. First, cloud processing increases computing cost (there are many ways to optimize this, and the client does not perceive it directly). Second, latency increases, because processing in the cloud adds delay to the link.

As RTC technology matures, its low-latency transport protocols, combined with cost optimization in the cloud, make low-cost, low-latency media processing in the cloud feasible, enabling cloud + end real-time media processing services. RMS, the RTC real-time media processing service built by Alibaba Cloud Video Cloud, delivers high-performance, low-cost, high-quality, low-latency, and more intelligent cloud-end collaborative media processing.

The left figure above shows the overall RMS architecture, divided into the pipeline layer, the module layer, the hardware adaptation layer, and the hardware layer. The pipeline layer assembles modules for different business scenarios. The module layer is the core of audio and video processing and implements various AI, low-latency, and high-quality effects.

Cloud-end collaborative media processing: empowering RTC+

Take cloud rendering for editing as an example. With traditional editing solutions, it is hard to guarantee a consistent experience across terminals and smooth performance. Our approach is to only issue instructions on the device, while video compositing and rendering happen in the cloud. This supports many special effects and keeps the results consistent across devices.

Now look at the cloud rendering pipeline for editing. The cloud rendering web page delivers signaling. Editing instructions are forwarded through the scheduling layer to the RMS media processing engine, which renders the media in the cloud. After compositing, the result is encoded and streamed through the SFU, and finally the editing result is viewed on the editing web page. As the demo above shows, the web page combines many tracks into one. Compositing a 4×4 grid on the device would struggle on low-end phones, whereas the cloud produces this effect easily.
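The compositing step in that demo can be sketched as a grid tiling of pre-scaled luma tiles into one output plane. This is a hypothetical illustration of the layout arithmetic, not the RMS engine's implementation; scaling, chroma planes, and encoding are omitted.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Copies cols*rows equally sized, tightly packed tiles (tile_w x tile_h each)
 * into one output plane laid out as a grid, row by row: tile t lands in grid
 * column t % cols and grid row t / cols. */
static void compose_grid(const uint8_t *const *tiles, int cols, int rows,
                         int tile_w, int tile_h, uint8_t *out)
{
    assert(cols > 0 && rows > 0 && tile_w > 0 && tile_h > 0);
    const int out_stride = cols * tile_w;
    for (int t = 0; t < cols * rows; t++) {
        const int ox = (t % cols) * tile_w;
        const int oy = (t / cols) * tile_h;
        for (int y = 0; y < tile_h; y++)
            memcpy(out + (size_t)(oy + y) * out_stride + ox,
                   tiles[t] + (size_t)y * tile_w, (size_t)tile_w);
    }
}
```

Called with cols = rows = 4, this yields the 4×4 layout; in the cloud the work scales with output size rather than with the capabilities of the viewer's phone.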

After a high-bitrate, low-resolution video is streamed to the cloud, Alibaba Cloud Video Cloud's Narrowband HD technology can process it to achieve higher resolution at a lower bitrate. In the demo below, enabling Narrowband HD noticeably improves the video's clarity (while also noticeably reducing the bitrate).

Combining RTC's low latency with AI effects processing also enables many interesting scenarios. A live camera stream of a real person is pushed to the cloud, the cloud converts the person into a cartoon portrait, and the cartoon output is used for real-time communication, so participants see each other as cartoon portraits.

Cloud matting makes it easy to build virtual education and virtual meeting room scenes. For example, in a virtual classroom, the presenter's portrait can be inserted into the PPT to make the presentation more immersive. In a virtual meeting room, participants are cut out and arranged into a shared virtual meeting room scene.
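Once a matting model has produced a per-pixel alpha matte, placing the cut-out person into a PPT page or meeting background is a standard alpha blend. A minimal sketch for one 8-bit plane, assuming the matte is already available (the matting model itself is not shown, and the function name is hypothetical):

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Composites a cut-out foreground onto a background using an alpha matte
 * (0 = background, 255 = foreground):
 *   out = (fg*a + bg*(255 - a) + 127) / 255, rounded to nearest. */
static void alpha_blend(const uint8_t *fg, const uint8_t *bg, const uint8_t *alpha,
                        uint8_t *out, size_t n)
{
    assert(n == 0 || (fg && bg && alpha && out));
    for (size_t i = 0; i < n; i++) {
        unsigned a = alpha[i];
        out[i] = (uint8_t)((fg[i] * a + bg[i] * (255u - a) + 127u) / 255u);
    }
}
```

Soft matte values at the hair and body edges produce the gradual transition that makes the cut-out look natural in the scene.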

Follow the "Video Cloud Technology" official account and reply "GOTC" to get the full slide deck.

