In recent years, the author has seen many AI-related challenges in CTF competitions in China and abroad. Some require players to implement an AI themselves to automate certain operations; others provide a target AI model that players must break. This article focuses on the latter: in a CTF competition, how do we deceive the AI that the challenge gives us?

AI-deception challenges in CTF generally fall into two categories: those based on neural networks and those based on statistical models. If the challenge asks players to fool a neural network, it usually provides a white-box model (typically for an image classification task); if it asks players to fool a statistical learning model, some challenges provide the white-box model parameters, while others provide the training data set.

Let's start with a very simple challenge that involves fooling a statistical learning model, to get a feel for the overall workflow of this type of problem.

Fooling kNN: [Xihu Lunjian 2020] Calling a deer a horse

Mission objective

The challenge runs an AI model and asks players to upload a picture that differs only slightly from deer.png but that the AI classifies as a horse. The challenge's source code is as follows:

```python
import numpy as np
from PIL import Image
import math
import operator
import os
import time
import base64
import random

def load_horse():
    data = []
    p = Image.open('./horse.png').convert('L')
    p = np.array(p).reshape(-1)
    p = np.append(p,0)
    data.append(p)
    return np.array(data)

def load_deer():
    data = []
    p = Image.open('./deer.png').convert('L')
    p = np.array(p).reshape(-1)
    p = np.append(p,1)
    data.append(p)
    return np.array(data)

def load_test(pic):
    data = []
    p = Image.open(pic).convert('L')
    p = np.array(p).reshape(-1)
    p = np.append(p,1)
    data.append(p)
    return np.array(data)

def euclideanDistance(instance1, instance2, length):
    distance = 0
    for x in range(length):
        distance += pow((instance1[x] - instance2[x]), 2)
    return math.sqrt(distance)

def getNeighbors(trainingSet, testInstance, k):
    distances = []
    length = len(testInstance) - 1
    for x in range(len(trainingSet)):
        dist = euclideanDistance(testInstance, trainingSet[x], length)
        distances.append((trainingSet[x], dist))
    distances.sort(key=operator.itemgetter(1))
    neighbors = []
    for x in range(k):
        neighbors.append(distances[x][0])
    return neighbors

def getResponse(neighbors):
    classVotes = {}
    for x in range(len(neighbors)):
        response = neighbors[x][-1]
        if response in classVotes:
            classVotes[response] += 1
        else:
            classVotes[response] = 1
    sortedVotes = sorted(classVotes.items(), key=operator.itemgetter(1), reverse=True)
    return sortedVotes[0][0]

def getAccuracy(testSet, predictions):
    correct = 0
    for x in range(len(testSet)):
        if testSet[x][-1] == predictions[x]:
            correct += 1
    return (correct / float(len(testSet))) * 100.0

def check(pic):
    source_p = Image.open('deer.png')
    try:
        c_p = Image.open(pic)
    except:
        print("Please upload right picture.")
        exit()
    diff_pixel = 0
    a, b = source_p.size
    if c_p.size[0] != a and c_p.size[1] != b:
        print("Please upload right picture size("+str(a)+','+str(b)+')')
        exit()
    for y in range(b):
        for x in range(a):
            diff_pixel += abs(source_p.getpixel((x, y)) - c_p.getpixel((x, y)))
    return diff_pixel

def main():
    while 1:
        print('-' * 134)
        print(''' ____ __ _ _ _ _ _ _ _
| __ \ / _| | | | | | | | | | | | | | |
| |__) |___| |_ ___ _ __ | |_ ___ | |_| |__ ___ __| | ___ ___ _ __ __ _ ___ | |_| |__ ___ | |__ ___ _ __ ___ ___
| _ // _ \ _/ _ \ '__| | __/ _ \ | __| '_ \ / _ \ / _` |/ _ \/ _ \ '__| / _` / __| | __| '_ \ / _ \ | '_ \ / _ \| '__/ __|/ _ \\
| | \ \ __/ || __/ | | || (_) | | |_| | | | __/ | (_| | __/ __/ | | (_| \__ \ | |_| | | | __/ | | | | (_) | | \__ \ __/
|_| \_\___|_| \___|_| \__\___/ \__|_| |_|\___| \__,_|\___|\___|_| \__,_|___/ \__|_| |_|\___| |_| |_|\___/|_| |___/\___|
''')
        print('-'*134)
        print('\t1.show source code')
        print('\t2.give me the source pictures')
        print('\t3.upload picture')
        print('\t4.exit')
        choose = input('>')
        if choose == '1':
            w = open('run.py','r')
            print(w.read())
            continue
        elif choose == '2':
            print('this is horse`s picture:')
            h = base64.b64encode(open('horse.png','rb').read())
            print(h.decode())
            print('-'*134)
            print('this is deer`s picture:')
            d = base64.b64encode(open('deer.png', 'rb').read())
            print(d.decode())
            continue
        elif choose == '4':
            break
        elif choose == '3':
            print('Please input your deer picture`s base64(Preferably in png format)')
            pic = input('>')
            try:
                pic = base64.b64decode(pic)
            except:
                exit()
            if b"<?php" in pic or b'eval' in pic:
                print("Hacker!!This is not WEB,It`s Just a misc!!!")
                exit()
            salt = str(random.getrandbits(15))
            pic_name = 'tmp_'+salt+'.png'
            tmp_pic = open(pic_name,'wb')
            tmp_pic.write(pic)
            tmp_pic.close()
            if check(pic_name)>=100000:
                print('Don`t give me the horse source picture!!!')
                os.remove(pic_name)
                break
            ma = load_horse()
            lu = load_deer()
            k = 1
            trainingSet = np.append(ma, lu).reshape(2, 5185)
            testSet = load_test(pic_name)
            neighbors = getNeighbors(trainingSet, testSet[0], k)
            result = getResponse(neighbors)
            if repr(result) == '0':
                os.system('clear')
                print('Yes,I want this horse like deer,here is your flag encoded by base64')
                flag = base64.b64encode(open('flag','rb').read())
                print(flag.decode())
                os.remove(pic_name)
                break
            else:
                print('I want horse but not deer!!!')
                os.remove(pic_name)
                break
        else:
            print('wrong choose!!!')
            break
    exit()

if __name__=='__main__':
    main()
```

Reading the code carefully, we find that the AI model is k-nearest neighbors (kNN) with k=1, and the training set contains only one picture each for the deer and the horse. The challenge reads the player's picture and does the following:

- Check whether the pixel difference between the uploaded picture and the deer is less than 100000; if it is not, report an error and exit.

- Compute the Euclidean distance from the player's picture to the deer and to the horse; the picture is assigned the category of whichever is closer.

- If the player's picture is classified as a horse, the player gets the flag.
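To experiment locally without querying the server, the three checks above can be reproduced with vectorized NumPy. This is a sketch under our own assumptions: `deer`, `horse` and `candidate` are same-shape grayscale uint8 arrays, and the helper name `server_verdict` is hypothetical, not part of the challenge.

```python
import numpy as np

def server_verdict(candidate, deer, horse, budget=100000):
    """Mimic the challenge: L1 budget check, then a 1-nearest-neighbour decision."""
    # widen to int64 so subtracting uint8 pixels cannot wrap around
    y = candidate.astype(np.int64).ravel()
    d = deer.astype(np.int64).ravel()
    h = horse.astype(np.int64).ravel()
    l1_diff = int(np.abs(y - d).sum())  # what check() accumulates pixel by pixel
    if l1_diff >= budget:
        return "rejected", l1_diff
    # comparing squared Euclidean distances gives the same ordering as the distances
    to_deer_sq = int(((y - d) ** 2).sum())
    to_horse_sq = int(((y - h) ** 2).sum())
    # k = 1: the closer training picture decides; the horse is first in the training
    # set and Python's sort is stable, so an exact tie also goes to the horse
    label = "horse" if to_horse_sq <= to_deer_sq else "deer"
    return label, l1_diff
```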

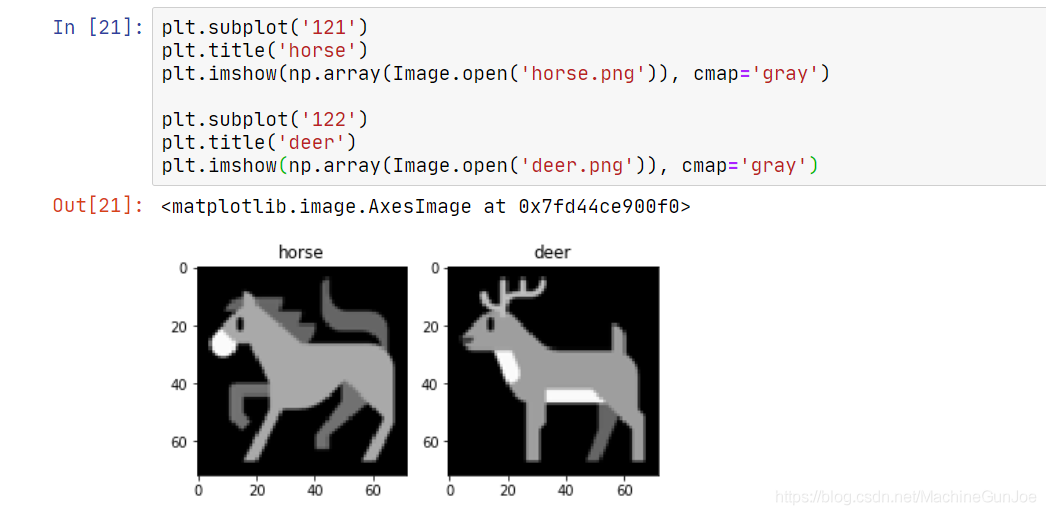

Both deer and horse are grayscale images, as follows:

When working on machine-learning CTF challenges, the author recommends using Jupyter Notebook or JupyterLab and making good use of matplotlib to visualize intermediate results. This greatly improves efficiency.

Our goal is to make small modifications to the deer picture so that its Euclidean distance to the horse becomes smaller than its Euclidean distance to the deer.
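Stated as a constraint problem (our notation: d, h and y are the deer, horse and submitted pictures, each flattened into a 5184-dimensional vector of gray levels), the goal is:

$$
\text{find } y \in \{0,1,\dots,255\}^{5184} \quad \text{such that} \quad \|y-h\|_2 < \|y-d\|_2 \quad \text{and} \quad \|y-d\|_1 = \sum_i |y_i-d_i| < 100000 .
$$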

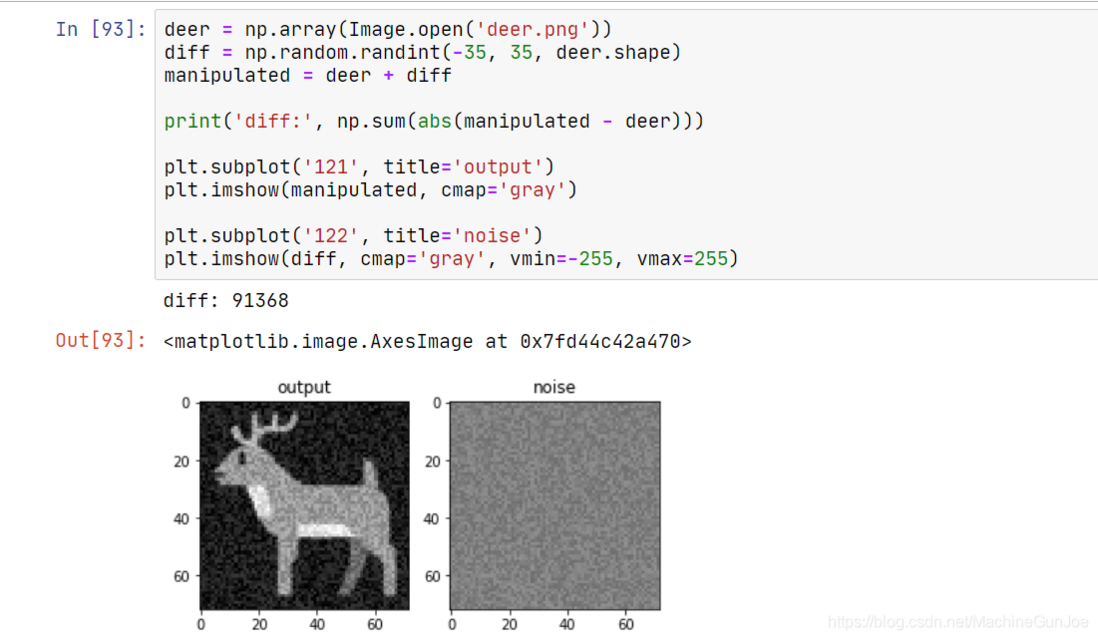

First attempt: random noise

To construct a valid picture, we first need to look at how the challenge measures the size of a modification. The code is as follows:

```python
# excerpt from check()
for y in range(b):
    for x in range(a):
        diff_pixel += abs(source_p.getpixel((x, y)) - c_p.getpixel((x, y)))
return diff_pixel
```

It sums the absolute per-pixel differences between pictures A and B; in other words, it is the Manhattan (L1) distance. In most of the CTF AI-deception challenges I have encountered, the Manhattan distance was used to measure the size of the modification.

The picture has 5184 pixels in total, so on average each pixel may deviate by about 19 gray levels (100000 / 5184 ≈ 19.3). This is actually a very lenient budget. Let's demonstrate a valid modification:
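The demonstration can be approximated as follows. This sketch is our assumption about what such a modification looks like, not the author's code: it moves every pixel of the deer by the same amount, up or down at random, using the largest uniform shift that keeps the total below the limit (99999 // 5184 = 19 for this picture). The name `random_noise` is hypothetical.

```python
import numpy as np

def random_noise(deer, budget=100000, seed=0):
    """Spread the L1 budget evenly: shift every pixel by +/- the same amount."""
    rng = np.random.default_rng(seed)
    d = deer.astype(np.int64)
    # largest uniform shift whose total stays strictly below the budget
    per_pixel = (budget - 1) // d.size
    signs = rng.choice([-1, 1], size=d.shape)
    # clipping to the valid range can only shrink a pixel's change, never grow it
    noisy = np.clip(d + signs * per_pixel, 0, 255)
    return noisy.astype(np.uint8)
```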

The output picture looks like the static on an old-fashioned TV. Can it fool the AI?

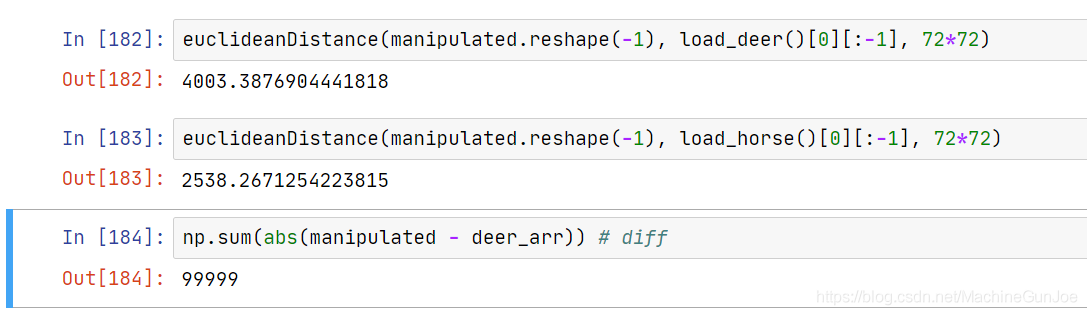

Unfortunately, its Euclidean distance to the deer is still smaller than its distance to the horse. This raises a question: is spreading the 100,000 budget randomly over every pixel really the best strategy?

Solution: modify the pixels with large differences

Consider the problem on a two-dimensional plane. Suppose we want a point as far as possible from (0, 0) in Euclidean distance, while keeping its Manhattan distance from (0, 0) at most 2. Choosing (1, 1) gives a Euclidean distance of √2, while choosing (0, 2) reaches 2, which is the better choice.
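The same comparison scaled up to the whole picture, as a back-of-the-envelope calculation (our notation: budget B = 100000 spread over n = 5184 pixels, ignoring rounding and the fact that only the direction toward the horse helps). Spreading the budget evenly moves each pixel by B/n; concentrating it moves as few pixels as possible by the maximum of 255:

$$
\text{spread: } \|\delta\|_2 = \sqrt{n\left(\tfrac{B}{n}\right)^2} = \frac{B}{\sqrt{n}} = \frac{100000}{72} \approx 1389,
\qquad
\text{concentrated: } \|\delta\|_2 \approx 255\sqrt{\left\lfloor \tfrac{B}{255} \right\rfloor} = 255\sqrt{392} \approx 5049 .
$$

The same L1 budget therefore buys roughly 3.6 times as much Euclidean displacement when concentrated; which direction to move in is a separate question.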

This suggests a guess for our problem: rather than touching every pixel, we should set some pixels directly to the corresponding horse pixels and leave the others unchanged. The pixels worth modifying are those where the deer and horse differ the most.
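A sketch of this greedy idea (our own implementation, not necessarily the author's; `greedy_to_horse` is a hypothetical name): sort pixels by |horse - deer|, copy horse pixels in that order, and spend whatever budget is left on a partial move of the next pixel, so the total difference can reach, but never exceed, 99999 (the server rejects 100000 or more).

```python
import numpy as np

def greedy_to_horse(deer, horse, budget=100000):
    """Copy the horse's value into the pixels where deer and horse differ most."""
    d = deer.astype(np.int64).ravel()
    h = horse.astype(np.int64).ravel()
    diff = np.abs(h - d)
    out = d.copy()
    remaining = budget - 1  # check() rejects totals >= budget
    # visit pixels from the largest deer/horse gap to the smallest
    for idx in np.argsort(-diff, kind="stable"):
        if diff[idx] == 0 or remaining == 0:
            break
        if diff[idx] <= remaining:
            out[idx] = h[idx]  # affordable: copy the horse pixel exactly
            remaining -= diff[idx]
        else:
            # not affordable: move part of the way toward the horse with what is left
            out[idx] = d[idx] + np.sign(h[idx] - d[idx]) * remaining
            remaining = 0
    return out.reshape(deer.shape).astype(np.uint8)
```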

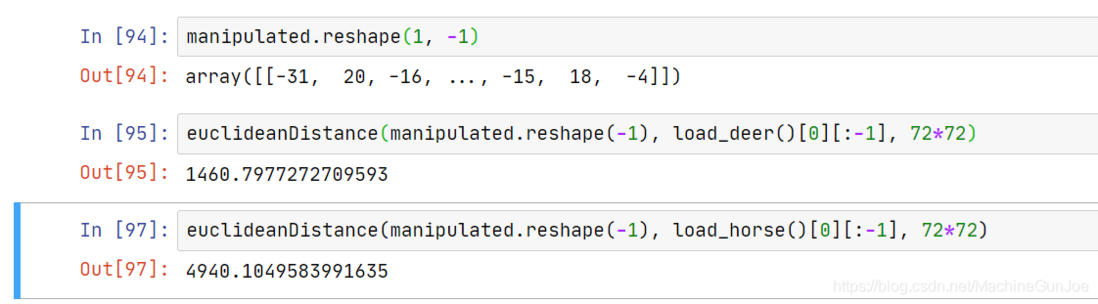

This produces a strange-looking picture. Let's check whether it meets the requirements:
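A possible way to run this check locally, assuming the deer, horse and candidate are uint8 grayscale arrays (the helper names `to_payload` and `measure` are ours): encode the candidate as a base64 PNG, which is what menu option 3 expects, then decode it the way the server does and measure both distances and the L1 difference.

```python
import base64
import io

import numpy as np
from PIL import Image

def to_payload(candidate):
    """Encode a 2-D uint8 array as a base64 PNG string (2-D uint8 maps to mode "L")."""
    buf = io.BytesIO()
    Image.fromarray(candidate.astype(np.uint8)).save(buf, format="PNG")
    return base64.b64encode(buf.getvalue()).decode()

def measure(payload, deer, horse):
    """Decode the payload like the server and report both distances and the L1 diff."""
    img = Image.open(io.BytesIO(base64.b64decode(payload))).convert("L")
    y = np.asarray(img, dtype=np.int64)
    d = deer.astype(np.int64)
    h = horse.astype(np.int64)
    return {
        "to_deer": float(np.sqrt(((y - d) ** 2).sum())),
        "to_horse": float(np.sqrt(((y - h) ** 2).sum())),
        "l1_diff": int(np.abs(y - d).sum()),
    }
```

The server also refuses payloads whose decoded bytes contain `<?php` or `eval`; compressed PNG data could contain those bytes by chance, in which case nudging one pixel and re-encoding should get around it.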

The distance to the deer is 4003 and the distance to the horse is 2538, so the AI is fooled. The pixel difference is 99999, just under the limit, so we have achieved the challenge's goal.

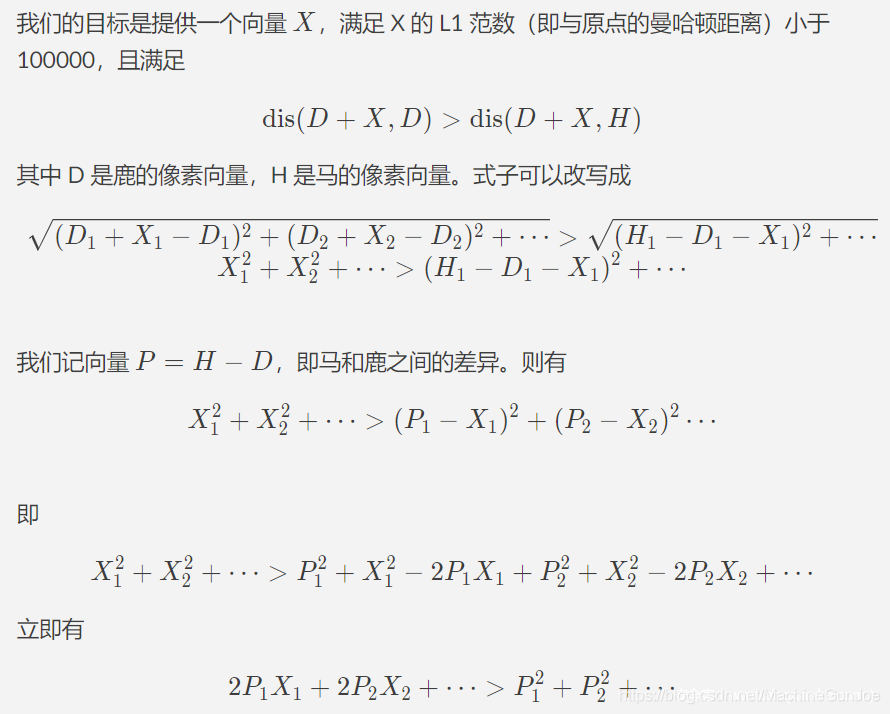

Mathematical proof

We solved the problem based on the guess that "the pixels with larger differences should be modified." Here is a proof of why this works; readers who are not interested in the proof can skip it.
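One way to write the argument (a reconstruction of the reasoning in our own notation, not the author's original derivation): let d, h be the flattened deer and horse pictures, y = d + δ the submitted picture, and B the L1 budget. Expanding the squared distances,

$$
\|y-h\|_2^2 - \|y-d\|_2^2 = \|(d-h)+\delta\|_2^2 - \|\delta\|_2^2 = \|h-d\|_2^2 - 2\,\delta^\top(h-d),
$$

so y is classified as a horse whenever

$$
\sum_i \delta_i\,(h_i-d_i) > \tfrac{1}{2}\,\|h-d\|_2^2 .
$$

The right-hand side does not depend on δ, so we want to maximize the left-hand side subject to

$$
\sum_i |\delta_i| \le B, \qquad 0 \le d_i + \delta_i \le 255 .
$$

Each term satisfies $\delta_i(h_i-d_i) \le |\delta_i|\,|h_i-d_i|$, with equality when pixel i moves toward $h_i$. Every unit of budget spent on pixel i therefore buys exactly $|h_i-d_i|$ of margin, which makes this a fractional knapsack problem: the optimum spends the budget on pixels in decreasing order of $|h_i-d_i|$, pushing each as far toward the horse's side as the range [0, 255] allows.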

This shows mathematically why "the larger the difference, the more a pixel is worth changing." The derivation also yields another conclusion: setting a pixel to the horse's value is not optimal. If a pixel is to be changed, it should be changed all the way, to either 0 or 255, whichever lies on the horse's side. However, the limit of 100,000 in this challenge is very loose, so even our suboptimal algorithm succeeds.
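Following that conclusion, a sketch of the improved attack (hypothetical helper `push_to_bound`, our implementation): visit pixels in decreasing order of |horse - deer| and push each toward the horse's side all the way to 0 or 255 until the budget runs out.

```python
import numpy as np

def push_to_bound(deer, horse, budget=100000):
    """Spend the L1 budget on the largest gaps, moving each pixel to 0 or 255."""
    d = deer.astype(np.int64).ravel()
    h = horse.astype(np.int64).ravel()
    gap = h - d
    direction = np.sign(gap)
    # room to move toward the horse's side before leaving the range [0, 255]
    room = np.where(direction > 0, 255 - d, d)
    room = np.where(direction == 0, 0, room)
    out = d.copy()
    remaining = budget - 1  # check() rejects totals >= budget
    for idx in np.argsort(-np.abs(gap), kind="stable"):
        if remaining == 0 or direction[idx] == 0:
            break
        step = min(room[idx], remaining)
        out[idx] += direction[idx] * step
        remaining -= step
    return out.reshape(deer.shape).astype(np.uint8)
```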

Summary

Looking back, we started from an original picture X and applied a small perturbation to obtain a sample Y on which the AI behaves very differently than on X. Such samples are called "adversarial examples." How to construct high-quality adversarial examples, and how to use them to improve model robustness, are research directions that have received growing attention in machine learning.

Note that attacking statistical learning models often requires some mathematical derivation. Interested readers may want to study classic statistical learning methods such as kNN, k-means, and Gaussian mixture models.

Fooling a white-box neural network

Overview

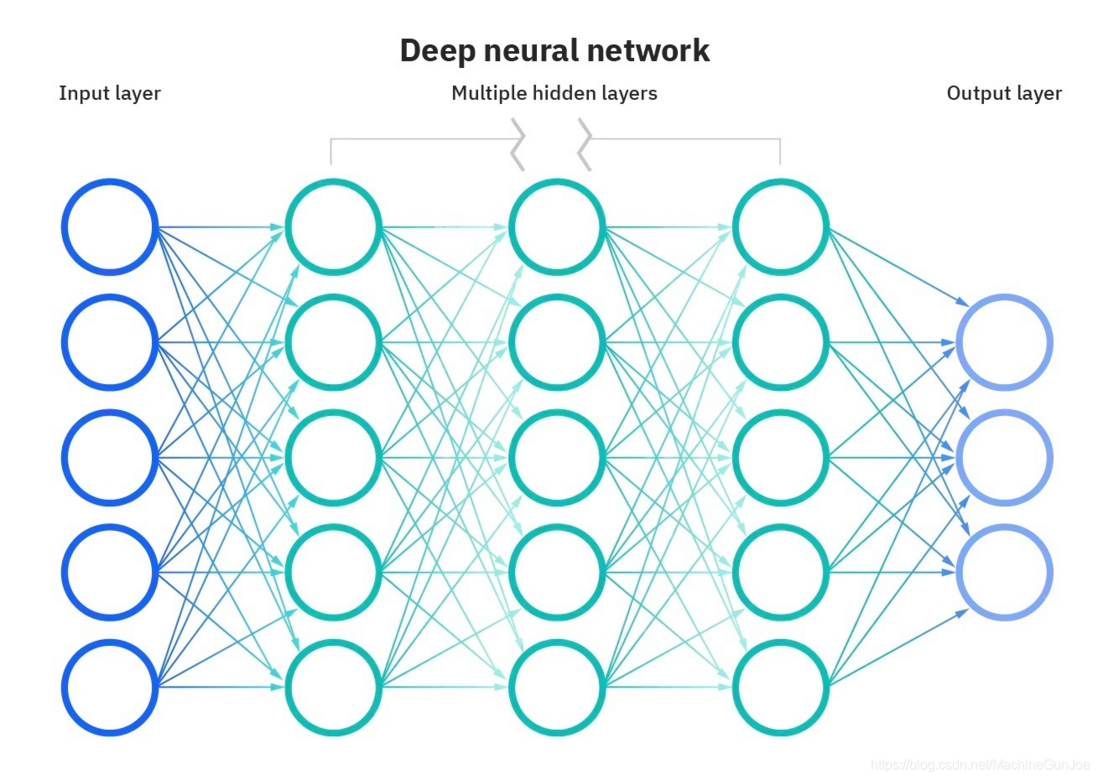

Neural networks can solve a large number of problems that are difficult to solve by traditional models, and have experienced rapid development in recent years. A neural network is generally composed of multiple layers of neurons, and each neuron has its own parameters. The figure below is a simple neural network model (multilayer perceptron):

Picture source IBM. This article assumes that the reader already has some understanding of neural networks; if you start from scratch, I recommend taking a look at 3Blue1Brown's machine learning tutorials and PyTorch's official tutorials.

Take the network in the figure above as an example. In an image classification task, each pixel of the image is fed into the first layer, then passed on to the second layer, the third layer, and so on, up to the last layer. Each neuron in the last layer represents a category, and its output is a score for "whether the image belongs to this category." We generally take the category with the highest score as the classification result.

A typical CTF neural-network deception challenge looks like this: you are given a pre-trained classification model (PyTorch or TensorFlow) and an original image, and must modify the image slightly so that the network misclassifies it as another category.

Attack method

Neural networks are generally trained with gradient descent. Each iteration can be understood as follows: feed in X, run net(X) to get the output, compute the loss from the difference between the network output and the expected output, then backpropagate and update the network's parameters.

How can we attack such a network? First, we again feed the original image X into the network to get the output net(X). Next, we compute the loss between the network's output and the (wrong) result we want it to produce, and backpropagate. The key difference is that we do not modify the network parameters; instead, we subtract the gradient from the image itself. We repeat this for several iterations until the AI is misled.
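The two procedures can be contrasted as update rules (notation ours: θ for the network parameters, η and α for step sizes, y for the true label, t for the label we want the network to output, and L for the loss):

$$
\text{training: } \theta \leftarrow \theta - \eta\, \nabla_\theta\, \mathcal{L}\big(\mathrm{net}_\theta(X),\, y\big)
\qquad\qquad
\text{attack: } X \leftarrow X - \alpha\, \nabla_X\, \mathcal{L}\big(\mathrm{net}_\theta(X),\, t\big), \ \theta \text{ fixed}
$$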

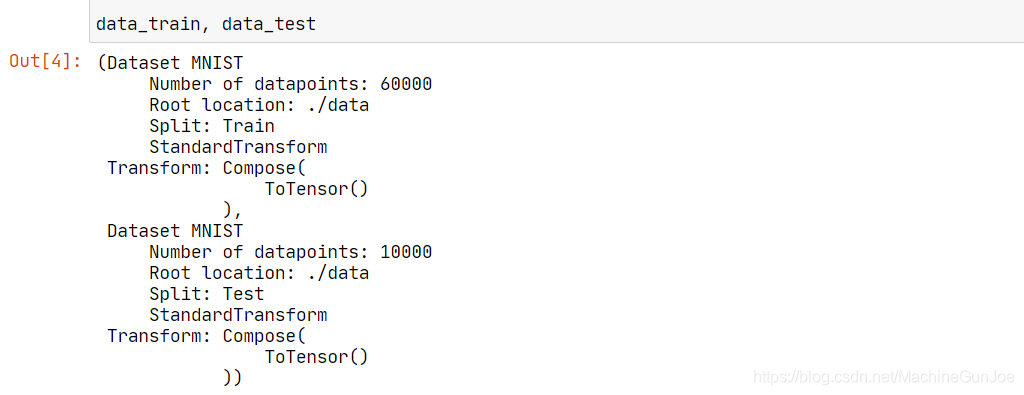

Below, we use handwritten digit recognition (the MNIST data set) as an example: we first train a network, then demonstrate the attack.

Practice: Training a neural network

We implement the neural network in PyTorch. First, load the data set:

```python
import torch
import torchvision
import torch.nn as nn
import torchvision.transforms as transforms
import torch.nn.functional as F
import numpy as np
import matplotlib.pyplot as plt

trans_to_tensor = transforms.Compose([
    transforms.ToTensor()
])

data_train = torchvision.datasets.MNIST(
    './data',
    train=True,
    transform=trans_to_tensor,
    download=True)

data_test = torchvision.datasets.MNIST(
    './data',
    train=False,
    transform=trans_to_tensor,
    download=True)

data_train, data_test  # in a notebook, this displays a summary of both data sets
```

Next, create a DataLoader, which produces shuffled mini-batches for training:

train_loader = torch.utils.data.DataLoader(

data_train,

batch_size=100,

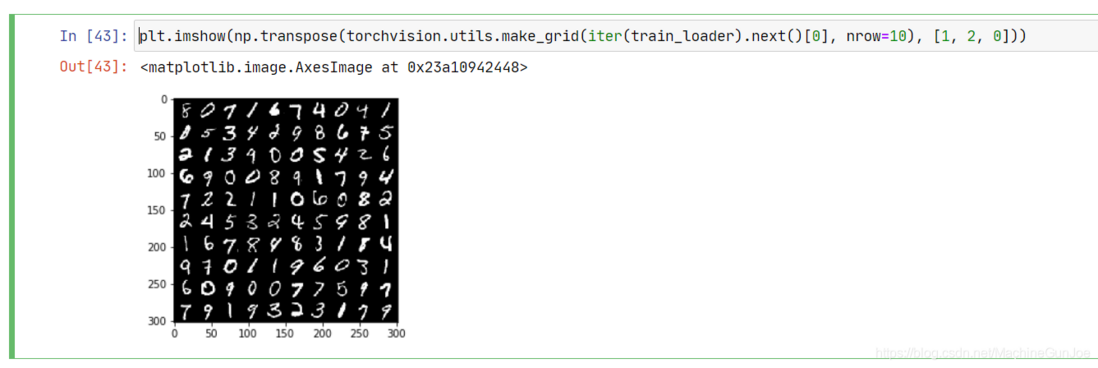

shuffle=True)Let’s take a look at a mini batch.

Next, define the network. We use a very simple model: flatten the 28×28 grayscale input image into a one-dimensional array, pass it through a fully connected layer of 100 neurons with ReLU activation, then through a fully connected layer of 10 neurons with sigmoid activation, whose output is the prediction.

```python
class MyNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(28*28, 100)
        self.fc2 = nn.Linear(100, 10)

    def forward(self, x):
        x = x.view(-1, 28*28)  # flatten each 28x28 image into a 784-dim vector
        x = self.fc1(x)
        x = F.relu(x)
        x = self.fc2(x)
        x = torch.sigmoid(x)
        return x

net = MyNet()
```

If the digit in the image is c, we want only the c-th entry of the 10-dimensional output to be 1 and the rest to be 0. So we use the cross-entropy loss function and the Adam optimizer:

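```python
# nn.CrossEntropyLoss applies log-softmax internally and is normally fed raw logits;
# feeding it the sigmoid outputs, as here, still trains but is not required
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(net.parameters())
```

The next step is to train the network: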

def fit(net, epoch=1):

net.train()

run_loss = 0

for num_epoch in range(epoch):

print(f'epoch {num_epoch}')

for i, data in enumerate(train_loader):

x, y = data[0], data[1]

outputs = net(x)

loss = criterion(outputs, y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

run_loss += loss.item()

if i % 100 == 99:

print(f'[{i+1} / 600] loss={run_loss / 100}')

run_loss = 0

test(net)

def test(net):

net.eval()

test_loader = torch.utils.data.DataLoader(data_train, batch_size=10000, shuffle=False)

test_data = next(iter(test_loader))

with torch.no_grad():

x, y = test_data[0], test_data[1]

outputs = net(x)

pred = torch.max(outputs, 1)[1]

print(f'test acc: {sum(pred == y)} / {y.shape[0]}')

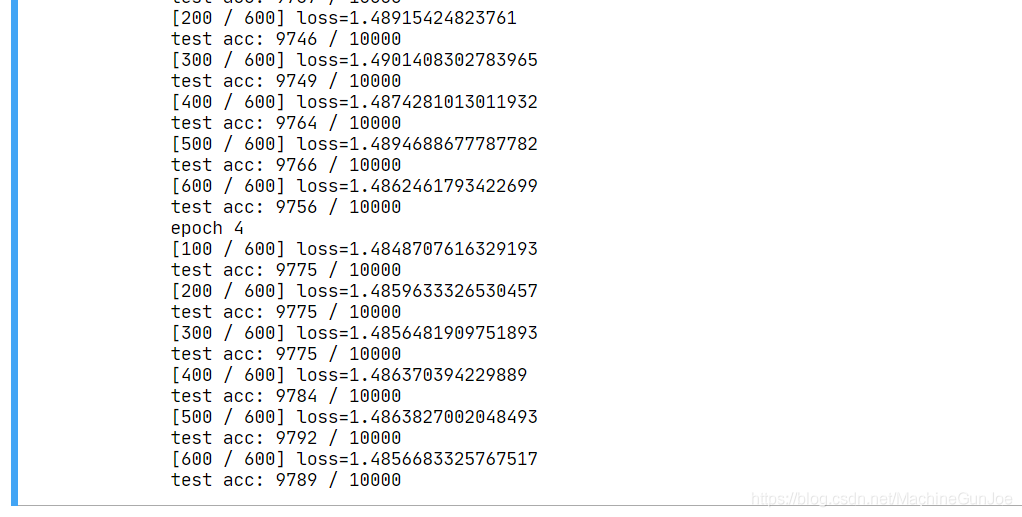

net.train()Look at the results after 5 epochs:

After training, the network reaches an accuracy of 97.89%. Next, let's attack it.

Practice: fooling the white-box multilayer perceptron

We know all the parameters of this network. In a CTF, the challenge typically provides the network's training code together with a pre-trained model exported with torch.save(), and players import the parameters with model.load_state_dict().
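A minimal sketch of that workflow. `TinyNet` is a hypothetical stand-in for whatever network class the challenge's training code defines (for example `MyNet` above), and the file name `model.pth` is made up; the calls themselves are standard PyTorch (`torch.save`, `torch.load`, `load_state_dict`). A state_dict holds only the parameter tensors, so the player must rebuild the same class before loading it.

```python
import os
import tempfile

import torch
import torch.nn as nn

class TinyNet(nn.Module):
    """Hypothetical stand-in for the challenge's network class."""
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return self.fc(x)

# challenge author's side: export only the learned parameters
author_net = TinyNet()
path = os.path.join(tempfile.mkdtemp(), "model.pth")  # hypothetical file name
torch.save(author_net.state_dict(), path)

# player's side: rebuild the architecture from the provided code, then load the weights
net = TinyNet()
net.load_state_dict(torch.load(path, map_location="cpu"))
net.eval()
```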

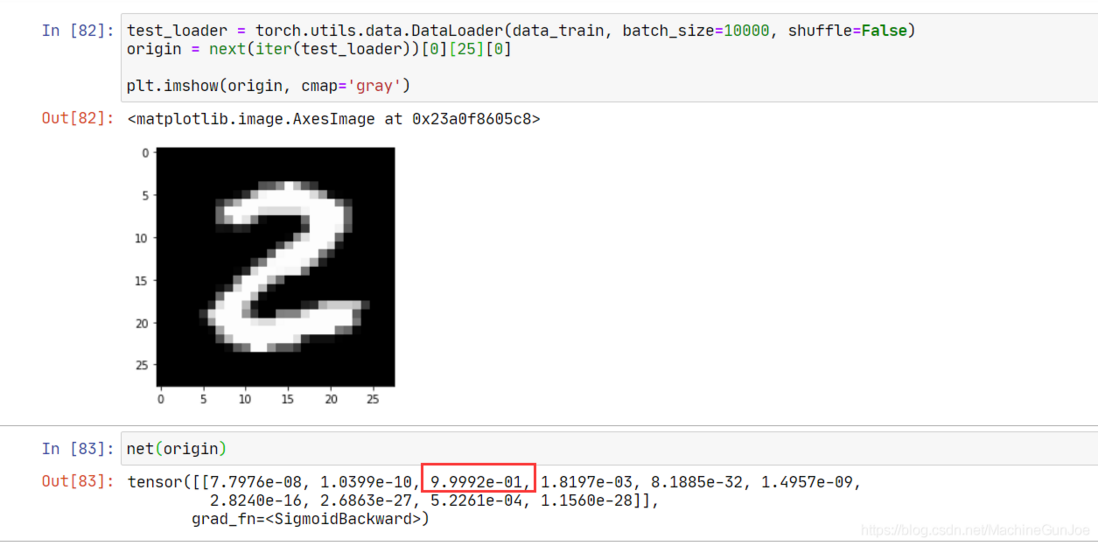

We randomly choose a piece of data as the original picture:

Our model classifies it as 2 with strong confidence. Next, we tampered with the original image so that the network misclassified it as 3. The process is as follows:

- Feed the picture into the network and get the network output.

- Compute the loss between the network output and the desired (misleading) output; cross entropy is used here.

- Subtract alpha times the gradient from the image pixels, leaving the network parameters unchanged.

Repeat the process until the network is misled. The code is as follows:

def play(epoch):

net.requires_grad_(False) # 冻结网络参数

img.requires_grad_(True) # 追踪输入数据的梯度

loss_fn = nn.CrossEntropyLoss() # 交叉熵损失函数

for num_epoch in range(epoch):

output = net(img)

target = torch.tensor([3]) # 误导网络,使之分类为 3

loss = loss_fn(output, target)

loss.backward() # 计算梯度

img.data.sub_(img.grad * .05) # 梯度下降

img.grad.zero_()

if num_epoch % 10 == 9:

print(f'[{num_epoch + 1} / {epoch}] loss: {loss} pred: {torch.max(output, 1)[1].item()}')

if torch.max(output, 1)[1].item() == 3:

print(f'done in round {num_epoch + 1}')

return

img = origin.view(1, 28, 28)

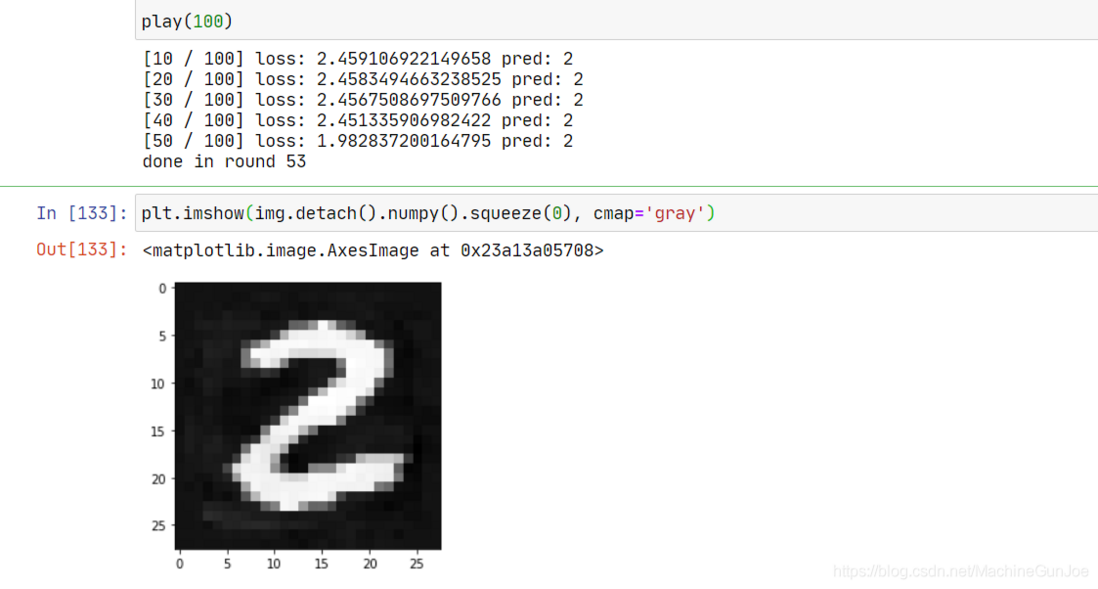

play(100)

We successfully constructed an adversarial sample. We humans obviously still see it as 2, but the model recognizes it as 3. So far, the task has been successfully completed. The comparison chart is as follows:

Summary

The code above can be reused for many CTF neural-network deception challenges. Players do not need to write the training code; they only need to load the pre-trained model. During the iterations, the player should choose an appropriate learning rate alpha (0.05 in the author's code) and add any constraints the challenge imposes (for example, that no pixel may change by more than a given amount). In any case, the core idea of fooling a white-box neural network is usually: "freeze the network parameters and modify the original image by gradient descent."
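A per-pixel limit like the one mentioned above is usually enforced by projection (projected gradient descent): after each gradient step, clip the image back into [x0 - eps, x0 + eps] and into the valid pixel range. So that the sketch runs without PyTorch, it uses a hypothetical linear softmax classifier (logits = W x + b) whose input gradient can be written by hand; the names `targeted_pgd` and `eps` and the toy weights are ours. In the `play()` loop, the two clipping steps would go right after the `img.data.sub_(...)` line.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())  # shift for numerical stability
    return e / e.sum()

def targeted_pgd(x0, W, b, target, eps=0.1, alpha=0.05, steps=200):
    """Targeted gradient descent on the input with a per-pixel (L-infinity) cap.

    Toy model (hypothetical): logits = W @ x + b, loss = cross-entropy to `target`.
    Returns the perturbed input and the number of update steps taken.
    """
    x = x0.copy()
    onehot = np.eye(len(b))[target]
    for step in range(steps):
        p = softmax(W @ x + b)
        if p.argmax() == target:
            return x, step
        grad = W.T @ (p - onehot)  # d(cross-entropy)/dx for a linear softmax model
        x = x - alpha * grad  # same update as img.data.sub_(img.grad * alpha)
        x = np.clip(x, x0 - eps, x0 + eps)  # projection: no pixel moves more than eps
        x = np.clip(x, 0.0, 1.0)  # keep a valid image
    return x, steps
```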

Further discussion

We have fooled a white-box neural network step by step. In practice, however, very few neural networks publish their parameters, which prevents us from using the approach above. In addition, the picture we generated is very unnatural, with a lot of background noise that normal digit images do not have.

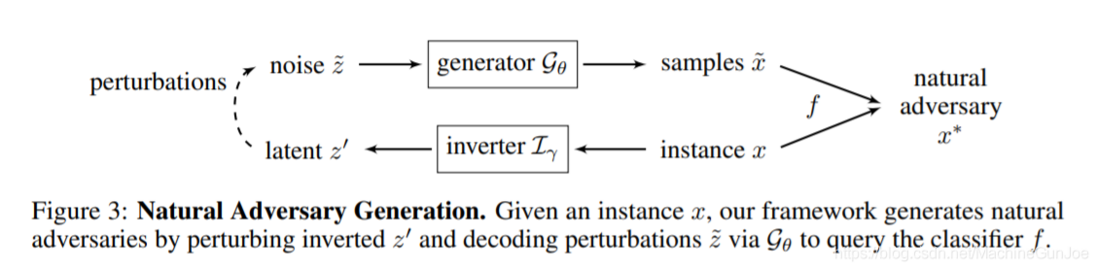

Regarding these issues, an ICLR2018 paper Generating natural adversarial examples can provide some inspiration. The method proposed in this paper does not require to know the network parameters in advance, or even the network model. And this scheme can generate more natural adversarial examples, as shown below:

How does it work? Briefly: first, using an idea similar to CycleGAN, train a generator that maps latent vectors to pictures and an encoder that infers z from a picture. Next, encode the original image into a vector z, randomly sample many vectors near z, generate images from them with the generator, and hand these images to the target model. If any of them misleads the model, the attack succeeds.
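A heavily simplified NumPy sketch of that search step. The `encode`, `generate` and `predict` callables stand in for the trained encoder, the GAN generator and the black-box target model (all hypothetical placeholders here). The growing search radius and the choice of the closest successful latent vector are our assumptions about how such a search is typically organized; the paper's actual procedure may differ in its details.

```python
import numpy as np

def latent_search(x, encode, generate, predict,
                  n_samples=500, radius=0.1, growth=1.5, rounds=5, seed=0):
    """Sample latents around encode(x); return the closest one that flips the label."""
    rng = np.random.default_rng(seed)
    z = encode(x)
    original = predict(x)
    for _ in range(rounds):
        candidates = z + rng.normal(scale=radius, size=(n_samples,) + z.shape)
        hits = [c for c in candidates if predict(generate(c)) != original]
        if hits:
            # prefer the latent vector closest to z, i.e. the smallest semantic change
            best = min(hits, key=lambda c: np.linalg.norm(c - z))
            return generate(best)
        radius *= growth  # nothing found: search a wider neighbourhood
    return None
```

As a toy usage, with identity functions as encoder and generator and a classifier that thresholds the first coordinate, the search returns a point just across the decision boundary.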

The paper's authors demonstrated the method's effectiveness in both computer vision and NLP, including a successful attack on Google Translate. Their code is open source on GitHub.

This is a fairly general approach, but I think it may not suit CTF challenges. Training a GAN is time-consuming and laborious and requires substantial machine learning skill, which is beyond what CTFs usually test. Moreover, because the target model is a black box, success depends heavily on model-training skill, and contestants whose skills differ greatly from the challenge author's are unlikely to succeed.

All in all, adversarial examples are an interesting research direction. This article introduced the general steps for fooling AI in CTF competitions; I hope it helps CTF players.
