HTTP stands for Hypertext Transfer Protocol, the application-layer transfer protocol on which the World Wide Web is built. It was originally used mainly to transfer Hypertext Markup Language (HTML) documents from the web server to the client browser. The original version, HTTP 0.9, appeared around 1990-1991 and was later upgraded to 1.0 in 1996.

With Web 2.0, however, pages became more complicated than simple text and pictures. HTML pages gained CSS and Javascript to enrich their display, and when ajax appeared we gained another way of obtaining data from the server. All of these are still based on the HTTP protocol. In the mobile Internet era, pages also run in mobile browsers, and compared with the PC, mobile network conditions are more complicated, which forces us to understand HTTP in depth and keep optimizing it. So in 1997 HTTP1.1 appeared, and it continued to be revised until 2014.

Then, in 2015, the new HTTP2 protocol was developed on the basis of Google's SPDY project to meet the needs of modern web applications and browsers for fast delivery.

Four years later, around 2019, a new protocol standard built on QUIC, a protocol originally developed by Google, took shape as HTTP3. Its purpose is to improve the speed and security of web and API interactions.

HTTP1.0

The early HTTP1.0 version is a stateless, connectionless application layer protocol.

HTTP1.0 stipulates that the browser and the server maintain only a short-lived connection. Each browser request needs to establish a TCP connection with the server, and the TCP connection is closed as soon as the server has finished processing the request (connectionless). The server does not track each client or record past requests (stateless).

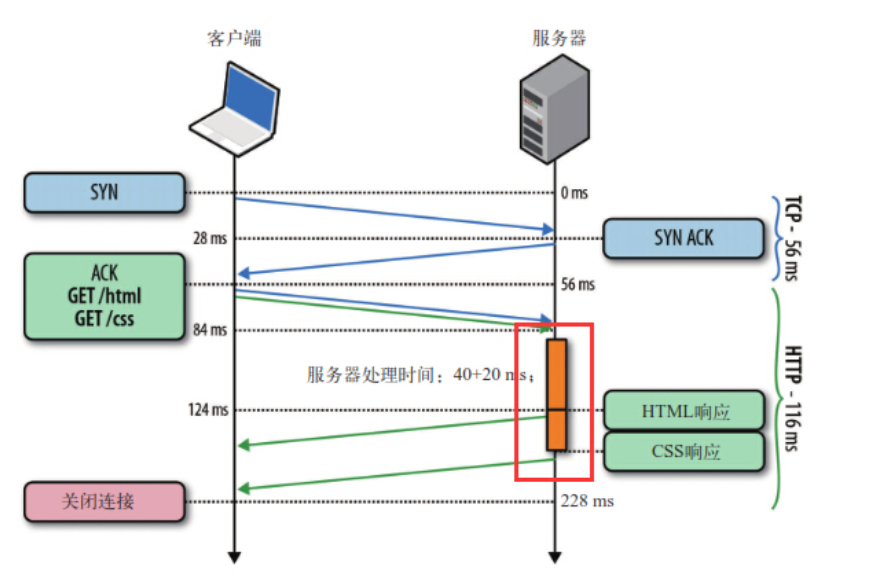

TCP connection establishment time = 1.5 RTT

- First leg (SYN)
- Second leg (SYN + ACK)
- Third leg (ACK)

RTT (Round Trip Time) refers to the time it takes for a message to travel to the peer and back.

Statelessness can be worked around with the cookie/session mechanism for identity authentication and status recording. The following two problems are more troublesome.

There is an important prerequisite for understanding these two problems: the client establishes connections to the server per domain name. Generally, a PC browser opens 6 to 8 connections to a single domain name at the same time, while on mobile phones the number of connections is usually kept at 4~6.

- Connections cannot be reused. Each request has to go through a three-way handshake and slow start. The three-way handshake has a more obvious impact in high-latency scenarios, while slow start has a greater impact on large file requests. A TCP connection "tunes" itself over time: it initially limits the maximum speed of the connection, and if data is transmitted successfully, the transmission speed increases over time. This tuning is called TCP slow start.
- Head-of-line blocking (head of line blocking). HTTP1.0 stipulates that the next request can only be sent after the response to the previous request has arrived. If the response to the previous request has not arrived, the next request is not sent, and all subsequent requests are blocked as well.
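To get a feel for the cost of not reusing connections, here is a rough back-of-the-envelope sketch. It assumes requests are sent strictly one after another, counts 1.5 RTT per connection setup as in the text, charges one RTT per request/response exchange, and ignores slow start and transfer time. The function names are made up for illustration.

```python
def fresh_connection_total(num_requests: int, rtt: float,
                           handshake_rtts: float = 1.5) -> float:
    """HTTP/1.0 style: every request pays for its own TCP handshake."""
    per_request = handshake_rtts * rtt + rtt  # setup + request/response
    return num_requests * per_request


def reused_connection_total(num_requests: int, rtt: float,
                            handshake_rtts: float = 1.5) -> float:
    """Hypothetical reused connection: the handshake is paid only once."""
    return handshake_rtts * rtt + num_requests * rtt


if __name__ == "__main__":
    for rtt in (0.02, 0.2):  # 20 ms (good network) vs 200 ms (poor mobile network)
        print(rtt,
              fresh_connection_total(10, rtt),
              reused_connection_total(10, rtt))
```

The gap between the two totals grows linearly with the RTT, which is why the handshake hurts most in high-latency scenarios.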

In order to solve these problems, HTTP1.1 appeared.

HTTP1.1

HTTP1.1 not only builds on HTTP1.0, but also overcomes many of its performance problems:

- Caching. HTTP1.0 mainly uses the If-Modified-Since and Expires headers as the criteria for cache validation. HTTP1.1 introduces more cache control strategies, such as entity tags (ETag), If-Unmodified-Since, If-Match and If-None-Match, giving more options for controlling the cache.
- Bandwidth optimization and use of network connections. In HTTP1.0 some bandwidth is wasted. For example, the client may need only part of an object, but the server sends the entire object, and resumable transfers are not supported. HTTP1.1 introduces the Range header field in the request header, which allows only a certain part of the resource to be requested; the response code is then 206 (Partial Content). This lets developers make full use of bandwidth and connections.
- Error notification management. HTTP1.1 adds 24 new error status codes. For example, 409 (Conflict) means that the request conflicts with the current state of the resource, and 410 (Gone) means that a resource on the server has been permanently deleted.
- Host header handling. In HTTP1.0 each server is assumed to be bound to a unique IP address, so the URL in the request message does not convey the host name (hostname). With the development of virtual host technology, however, a physical server can host multiple virtual hosts (Multi-homed Web Servers) that share one IP address. HTTP1.1 request and response messages should support the Host header field, and if there is no Host header field in the request message, an error (400 Bad Request) is reported.
- Persistent connections. HTTP1.1 adds a Connection field. Setting Keep-Alive keeps the HTTP connection open, which avoids repeatedly establishing and releasing a TCP connection for every request between client and server and improves network utilization. If the client wants to close the HTTP connection, it can send Connection: close in the request header to tell the server to close it.
- Pipelining. HTTP1.1 makes request pipelining possible. Pipelining allows requests to be transmitted in "parallel". For example, if the response body is an html page that contains many img tags, keep-alive plays a very important role and multiple requests can be sent in parallel.

The "parallel" here is not a true parallel transmission, because the server must send back the corresponding results in the order of the client request to ensure that the client can distinguish the response content of each request.

As shown in the figure, the client sends two requests at the same time to obtain html and css. Even if the server's css resource is ready first, the server will still send html first and then css.

In other words, the css response can only start to be transmitted after the html response has been completely transmitted. Two responses are never allowed to be in flight in parallel.
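The ordering rule can be illustrated with a tiny simulation. This is only a sketch: the tuple layout and the function name are invented for illustration, and time is measured in arbitrary units.

```python
def pipelined_finish_times(responses):
    """Compute when each pipelined response finishes sending.

    `responses` is a list of (name, ready_at, send_duration) tuples given
    in the order the requests were sent. A response may only start once it
    is ready AND every earlier response has been fully sent.
    """
    finished = {}
    wire_free_at = 0.0
    for name, ready_at, send_duration in responses:
        start = max(ready_at, wire_free_at)  # wait for earlier responses
        wire_free_at = start + send_duration
        finished[name] = wire_free_at
    return finished


if __name__ == "__main__":
    # css is ready at t=1, but html (requested first) is only ready at t=5.
    print(pipelined_finish_times([("html", 5.0, 1.0), ("css", 1.0, 1.0)]))
```

Although the css resource is ready long before the html, it cannot leave the server until the html response is done, which is exactly the head-of-line blocking described above.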

In addition, pipelining also has some defects:

- pipelining can only be applied to http1.1. Generally speaking, an http1.1 server is required to support pipelining;
- Only idempotent requests (GET, HEAD) can use pipelining. Non-idempotent requests such as POST cannot, because there may be sequential dependencies between requests;
- Most http proxy servers do not support pipelining;
- Negotiation is problematic with old servers that do not support pipelining;
- It may cause new head-of-line blocking problems.

It can be seen that HTTP1.1 still cannot solve the problem of head-of-line blocking (head of line blocking). At the same time, the "pipelining" technique has various problems, so many browsers either do not support it at all or simply disable it by default, and the conditions for enabling it are very strict. In practice it does not seem to be very useful.

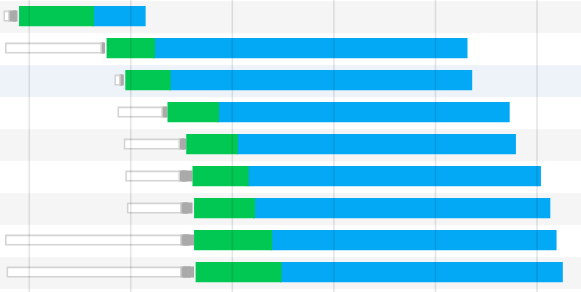

What about the parallel requests we see in the Chrome console?

As shown in the figure, the green part represents the waiting time from sending the request to the server's response, and the blue part represents the download time of the resource. In theory, the resource of the next response can only be downloaded after the resource of the previous response has finished downloading. Yet here the response resources are downloaded in parallel. Why is that?

Although HTTP1.1 supports pipelining, the server must still send back responses one by one, which is a big flaw. In practice, browser vendors have adopted another approach: opening multiple TCP sessions. In other words, the parallelism we see in the figure above actually consists of HTTP requests and responses on different TCP connections. This is the familiar browser limit of loading 6~8 resources in parallel under the same domain. And this is real parallelism!

HTTP2.0

HTTP 2.0 emerged and, compared with HTTP 1.x, greatly improves web performance. Building on HTTP/1.1, it further reduces network latency. For front-end developers, this undoubtedly reduces the amount of front-end optimization work. This article walks through the basic technical points of the HTTP 2.0 protocol, connects them with related knowledge, and explores how HTTP 2.0 improves performance.

Advantages

HTTP/2: the Future of the Internet is an official demonstration built by Akamai to illustrate the significant performance improvement of HTTP/2 over HTTP/1.1. It requests 379 pictures at the same time, and from the Load time we can see the speed advantage of HTTP/2.

If we now open Chrome Developer Tools and look at the Network panel, we can see that network requests under HTTP/2 differ clearly from those under HTTP/1.1.

HTTP/1:

HTTP/2:

Multiplexing

http1.1 initially allowed only two tcp connections to the same domain name, but for performance reasons the rfc was later revised, and 6-8 connections are now used. In addition, http pipelining over keep-alive is in essence a form of multiplexing, but because of head of line blocking problems, major browsers disable pipelining by default; only http2.0 really solves the hol problem.

Multiplexing allows multiple request-response messages to be in flight simultaneously over a single HTTP/2 connection.

As we all know, in the HTTP/1.1 protocol, "browser clients limit the number of simultaneous requests under the same domain name. Requests exceeding the limit will be blocked".

Clients that use persistent connections SHOULD limit the number of simultaneous connections that they maintain to a given server. A single-user client SHOULD NOT maintain more than 2 connections with any server or proxy. A proxy SHOULD use up to 2*N connections to another server or proxy, where N is the number of simultaneously active users. These guidelines are intended to improve HTTP response times and avoid congestion.

The figure summarizes the number of restrictions imposed by different browsers.

This is also one of the reasons why some sites use multiple CDN domain names for static resources. Take Twitter as an example, http://twimg.com: the purpose is to work around the browser's per-domain request limit. HTTP/2 multiplexing (Multiplexing), by contrast, allows multiple request-response messages to be initiated over a single HTTP/2 connection at the same time.

Therefore, HTTP/2 can easily implement multi-stream parallelism without relying on multiple TCP connections. HTTP/2 reduces the basic unit of HTTP protocol communication to a frame, and frames correspond to messages in a logical stream. Messages are exchanged in both directions, in parallel, over the same TCP connection.

Binary framing

Without changing the semantics, methods, status codes, URIs and header fields of HTTP/1.x, how does HTTP/2 manage to "break through the performance limits of HTTP1.1, improve transmission performance, and achieve low latency and high throughput"?

One of the keys is to add a binary framing layer between the application layer (HTTP/2) and the transport layer (TCP or UDP).

In the binary framing layer, HTTP/2 splits all transmitted information into smaller messages and frames (frame) and encodes them in a binary format. The HTTP1.x header information is encapsulated into a HEADER frame, while the corresponding Request Body is encapsulated into a DATA frame.

Here are a few concepts:

- Stream (stream): A bidirectional byte stream on an established connection.
- Message: A complete series of frames corresponding to a logical message.
- Frame (frame): The smallest unit of HTTP2.0 communication. Each frame contains a frame header, which at least identifies the stream to which the frame belongs (stream id).

HTTP/2 communication is completed on a connection, this connection can carry any number of bidirectional data streams.

Each data stream is sent in the form of messages, and a message consists of one or more frames. These frames can be sent out of order and are then reassembled according to the stream identifier (stream id) in the header of each frame.

For example, each request is a data stream. The data stream is sent as a message, and the message is divided into multiple frames. The header of each frame records the stream id to identify the data stream it belongs to. Frames belonging to different streams can be freely interleaved on the connection, and the receiver assigns each frame to its request according to the stream id.
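The following sketch shows how a receiver could sort interleaved frames back into their streams. It uses the 9-byte HTTP/2 frame header layout (24-bit length, 8-bit type, 8-bit flags, one reserved bit plus a 31-bit stream id), but it is only an illustration: the helper names are made up, and real implementations also handle HEADERS, padding, flow control and much more.

```python
import struct
from collections import defaultdict

FRAME_HEADER_LEN = 9
DATA = 0x0  # frame type code for DATA frames


def build_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    """Serialize one frame: 24-bit length, type, flags, 31-bit stream id."""
    length = len(payload)
    header = struct.pack(">BHBBI", length >> 16, length & 0xFFFF,
                         frame_type, flags, stream_id & 0x7FFFFFFF)
    return header + payload


def iter_frames(buf: bytes):
    """Yield (type, flags, stream_id, payload) for each complete frame in buf."""
    offset = 0
    while offset + FRAME_HEADER_LEN <= len(buf):
        hi, lo, frame_type, flags, stream_id = struct.unpack_from(">BHBBI", buf, offset)
        length = (hi << 16) | lo
        stream_id &= 0x7FFFFFFF  # drop the reserved bit
        start = offset + FRAME_HEADER_LEN
        yield frame_type, flags, stream_id, buf[start:start + length]
        offset = start + length


def demultiplex(buf: bytes) -> dict:
    """Reassemble the DATA payload of every stream from interleaved frames."""
    streams = defaultdict(bytes)
    for frame_type, _flags, stream_id, payload in iter_frames(buf):
        if frame_type == DATA:
            streams[stream_id] += payload
    return dict(streams)


if __name__ == "__main__":
    wire = (build_frame(DATA, 0, 1, b"<ht") + build_frame(DATA, 0, 3, b"body{")
            + build_frame(DATA, 0, 1, b"ml>") + build_frame(DATA, 0, 3, b"}"))
    print(demultiplex(wire))
```

Frames of streams 1 and 3 are mixed on the wire, yet each stream's bytes come out in order because the stream id in every frame header says where the payload belongs.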

In addition, multiplexing (connection sharing) may cause critical requests to be blocked. HTTP2.0 can be set with priority and dependency. The data stream with high priority will be processed by the server first and returned to the client. The data stream can also depend on other sub-data streams.

It can be seen that HTTP2.0 realizes true parallel transmission, allowing any number of HTTP requests to share a single TCP connection. And this powerful capability is built on the "binary framing" feature.

summary:

- Single connection with multiple resources, reducing the link pressure on the server side, less memory usage, and greater connection throughput

- Due to the reduced number of TCP connections, network congestion is relieved and less time is spent in slow start, so recovery from congestion and packet loss is faster

Header Compression

HTTP/1.1 does not support HTTP header compression. For this reason, SPDY and HTTP/2 came into being. SPDY uses the general DEFLATE algorithm, while HTTP/2 uses the special HPACK algorithm designed for header compression.

In HTTP1.x , the header metadata is sent in the form of plain text, which usually adds 500 to 800 bytes of load to each request.

Take cookie as an example: by default, the browser attaches the cookie to the header and sends it to the server on every request. (Because a cookie is relatively large and is sent repeatedly on every request, it is generally not used to store information, only for status recording and identity authentication.)

HTTP2.0 uses an encoder to reduce the size of the header that needs to be transmitted. Each side of the communication caches a table of header fields, which both avoids transmitting repeated headers and reduces the size of what has to be transferred. An efficient compression algorithm can shrink the header considerably, reducing the number of packets sent and thus the delay.
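The idea of a header table cached on both sides can be sketched as follows. This is a deliberately simplified toy, not the real HPACK algorithm: there is no static table, no Huffman coding and no table size limit, and the class and function names are invented for illustration.

```python
class HeaderTable:
    """A header field table that both endpoints build up identically."""

    def __init__(self):
        self.entries = []  # index -> (name, value)
        self.index = {}    # (name, value) -> index

    def add(self, field):
        self.index[field] = len(self.entries)
        self.entries.append(field)


def encode(headers, table):
    """Replace fields already in the table by their index; send others literally."""
    out = []
    for field in headers:
        if field in table.index:
            out.append(("idx", table.index[field]))
        else:
            out.append(("lit", field))
            table.add(field)  # remember it for the next request
    return out


def decode(encoded, table):
    """Mirror of encode(): literals are stored, indexes are looked up."""
    headers = []
    for kind, value in encoded:
        if kind == "idx":
            headers.append(table.entries[value])
        else:
            headers.append(value)
            table.add(value)
    return headers


if __name__ == "__main__":
    client, server = HeaderTable(), HeaderTable()
    request = [(":method", "GET"), ("cookie", "sid=abc123")]
    first = encode(request, client)   # all literals
    decode(first, server)
    second = encode(request, client)  # only small indexes
    print(first, second, decode(second, server))
```

The second request carries only two small integers instead of the full header text, which is where the savings for repeated fields such as cookie come from.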

Server Push

Server push is a mechanism for sending data before the client requests it. In HTTP/2, the server can send multiple responses to a single client request. Server Push makes the HTTP1.x-era optimization of inlining resources unnecessary: if a request is made for your homepage, the server is likely to respond with the homepage content, the logo and the style sheets, because it knows that the client will need them. This is equivalent to bundling all the resources into a single HTML document, but compared with that approach, server push has a big advantage: the pushed resources can be cached! It also makes it possible to share cached resources between different pages under the same origin.

HTTP3

HTTP/3 has not yet been officially released, but since 2017 it has gone through 34 drafts. The basic features have been settled, although the packet format may still change in the future.

Therefore, this introduction to HTTP/3 will not involve the packet format, only its characteristics.

The flawed HTTP/2

HTTP/2 greatly improves on HTTP/1.1 through new features such as header compression, binary encoding, multiplexing and server push. The catch is that the HTTP/2 protocol is based on TCP, so it has three defects:

- Head-of-line blocking;
- Handshake delay of TCP and TLS;
- Network migration requires reconnection;

Head-of-line blocking

In HTTP/2, multiple requests run over one TCP connection. When a TCP packet is lost, the whole TCP connection has to wait for the retransmission, so all requests on that TCP connection are blocked.

Because TCP is a byte stream protocol, the TCP layer must ensure that the received bytes are complete and in order. If a TCP segment with a lower sequence number is lost in transit, then even if segments with higher sequence numbers have already been received, the application layer cannot read that part of the data from the kernel. From the HTTP perspective, the request is blocked.

Delay in the handshake between TCP and TLS

When an HTTP request is initiated, it needs to go through the TCP three-way handshake and the TLS four-way handshake (TLS 1.2), so a total of 3 RTT of delay is needed before the request data can be sent.

In addition, TCP has "congestion control", so a TCP connection that has just been established goes through a "slow start" phase, which has a "slowing down" effect on the TCP connection.

Network migration requires reconnection

A TCP connection is identified by a four-tuple (source IP address, source port, destination IP address, destination port). This means that if the IP address changes, the TCP and TLS handshakes have to be performed again, which is unfriendly to scenarios where a mobile device switches networks, such as switching from a 4G network to WIFI.

These problems are inherent in the TCP protocol; no matter how HTTP/2 is designed at the application layer, it cannot escape them. To solve them, the transport layer protocol must be replaced with UDP. HTTP/3 made this bold decision!

Features of QUIC

We know that UDP is a simple and unreliable transport protocol, and that UDP packets are unordered and have no dependencies on one another.

Moreover, UDP is connectionless: there is no handshake to open a connection and no teardown to close it, so it is naturally faster than TCP.

Of course, HTTP/3 does not simply replace the transport protocol with UDP. It also implements the QUIC protocol at the "application layer" on top of UDP. QUIC has TCP-like connection management, congestion window and flow control features, which effectively make the unreliable UDP transport "reliable", so there is no need to worry about packet loss.

The QUIC protocol has many advantages. Here are a few examples:

- No head of line blocking;

- Faster connection establishment;

- Connection migration;

No head of line blocking

The QUIC protocol also has Stream and multiplexing concepts similar to those of HTTP/2. Multiple Streams can be transmitted concurrently on the same connection, and a Stream can be regarded as one HTTP request.

The transport protocol used by QUIC is UDP, which does not care about the order of packets, nor about whether packets are lost.

The QUIC protocol, however, ensures the reliability of data packets, and each packet has a unique sequence number. When a packet of a certain stream is lost, the data of that stream cannot be read by HTTP/3 even if other packets of the stream have arrived. The data is only delivered to HTTP/3 once QUIC has retransmitted the lost packet.

As long as the packets of the other streams have been completely received, HTTP/3 can read their data. This is different from HTTP/2, where a single lost packet in one stream affects all the other streams as well.

Therefore, the Streams on a QUIC connection have no dependencies on each other; they are all independent. If packet loss occurs in one stream, only that stream is affected, and the other streams are not.
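The difference between the two delivery models can be made concrete with a toy simulation. It assumes each packet carries data for exactly one stream and that "lost" packets have simply not arrived yet; the function names and the packet tuples are made up for illustration.

```python
def tcp_deliverable(packets, lost):
    """One ordered byte stream: nothing after the first gap can be read."""
    delivered = []
    for seq, stream_id, chunk in packets:
        if seq in lost:
            break  # everything behind the gap waits for the retransmission
        delivered.append((stream_id, chunk))
    return delivered


def quic_deliverable(packets, lost):
    """Ordering is per stream: a gap only stalls the stream it belongs to."""
    stalled = set()
    delivered = []
    for seq, stream_id, chunk in packets:
        if seq in lost:
            stalled.add(stream_id)
        elif stream_id not in stalled:
            delivered.append((stream_id, chunk))
    return delivered


if __name__ == "__main__":
    # Two requests (streams A and B) multiplexed on one connection.
    packets = [(1, "A", "a1"), (2, "B", "b1"), (3, "A", "a2"), (4, "B", "b2")]
    print(tcp_deliverable(packets, lost={1}))   # both streams blocked
    print(quic_deliverable(packets, lost={1}))  # only stream A blocked
```

With the TCP-style model the loss of packet 1 holds back everything, while in the QUIC-style model stream B is delivered in full and only stream A waits.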

Faster connection establishment

For the HTTP/1 and HTTP/2 protocols, TCP and TLS are separate layers, implemented in the kernel's transport layer and in the openssl library respectively. They are therefore hard to merge and have to shake hands one after the other: first the TCP handshake, then the TLS handshake.

Although HTTP/3 requires a QUIC handshake before transmitting data, this handshake takes only 1 RTT. The purpose of the handshake is to confirm the "connection ID" of both parties, and connection migration is implemented on the basis of the connection ID.

Moreover, the QUIC protocol used by HTTP/3 is not layered on top of TLS. Instead, QUIC contains TLS internally and carries the TLS "records" in its own frames. Since QUIC uses TLS1.3, only 1 RTT is needed to establish the connection and complete key negotiation "simultaneously". On a later connection, application data can even be sent together with the QUIC handshake information (connection information + TLS information), achieving a 0-RTT effect.

As shown in the right part of the figure below, when an HTTP/3 session is resumed, the payload data is sent together with the first packet, which achieves 0-RTT:
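The round-trip counts quoted in this article can be turned into a quick latency comparison. This is only arithmetic on the numbers given in the text (3 RTT for TCP plus TLS 1.2, 1 RTT for a first QUIC connection, 0 RTT on resumption); the dictionary keys and the function name are made up for illustration.

```python
# Round trips spent on handshakes before the first request can be sent.
HANDSHAKE_RTTS = {
    "TCP + TLS 1.2": 1 + 2,      # TCP handshake, then TLS 1.2 handshake
    "QUIC (first connection)": 1,
    "QUIC (resumed, 0-RTT)": 0,
}


def handshake_delay_ms(rtt_ms: float, stack: str) -> float:
    """Delay before request data can leave the client, for a given RTT."""
    return HANDSHAKE_RTTS[stack] * rtt_ms


if __name__ == "__main__":
    for stack in HANDSHAKE_RTTS:
        print(stack, handshake_delay_ms(100, stack), "ms")
```

On a link with a 100 ms round trip, the difference between the first and last row is 300 ms of waiting before any request data is sent.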

Connection migration

As mentioned earlier, HTTP over TCP identifies a TCP connection by a four-tuple (source IP, source port, destination IP, destination port). When a mobile device switches from 4G to WIFI, its IP address changes, so the connection must be torn down and re-established. Establishing a connection involves the delay of the TCP three-way handshake and the TLS four-way handshake, as well as the ramp-up of TCP slow start. To the user it feels as if the network suddenly freezes, so the cost of migrating a connection is very high.

The QUIC protocol does not use a four-tuple to "bind" the connection. Instead it uses a connection ID to mark the two endpoints of the communication, and the client and the server can each choose a set of IDs to identify themselves. So even if the IP address changes after the mobile device switches networks, as long as the context information (such as the connection ID and the TLS key) is still retained, the original connection can be reused "seamlessly". This eliminates the cost of reconnection with no perceptible stutter, and achieves connection migration.
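A minimal sketch of the two lookup strategies on the server side follows. The session dictionaries, the addresses and the connection ID value are all hypothetical; real QUIC additionally validates the new network path before using it.

```python
# Session state keyed by the TCP four-tuple.
tcp_sessions = {
    ("10.0.0.5", 50000, "203.0.113.1", 443): {"tls_key": "k1"},
}

# Session state keyed by a connection ID chosen during the handshake.
quic_sessions = {
    "c0ffee01": {"tls_key": "k1", "peer": ("10.0.0.5", 50000)},
}


def tcp_lookup(src_ip, src_port, dst_ip, dst_port):
    """Any change in the four-tuple means the session is not found."""
    return tcp_sessions.get((src_ip, src_port, dst_ip, dst_port))


def quic_lookup(connection_id, src_addr):
    """The session is found by ID; only the peer address is refreshed."""
    session = quic_sessions.get(connection_id)
    if session is not None:
        session["peer"] = src_addr
    return session


if __name__ == "__main__":
    # The phone moves from 4G (10.0.0.5) to WIFI (192.168.1.20).
    print(tcp_lookup("192.168.1.20", 50000, "203.0.113.1", 443))  # None
    print(quic_lookup("c0ffee01", ("192.168.1.20", 50000)))       # same session
```

After the address change the four-tuple lookup misses, so a TCP client has to reconnect, while the connection ID lookup still finds the existing context and TLS key.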

HTTP/3 protocol

Now that we understand the QUIC protocol, let's look at what changes HTTP/3 makes at the HTTP layer.

HTTP/3 uses the same binary frame structure as HTTP/2. The difference is that HTTP/2 has to define the Stream in its binary frames, while HTTP/3 does not need to define the Stream again and uses the Stream in QUIC directly, so the HTTP/3 frame structure becomes simpler.

As you can see from the figure above, the HTTP/3 frame header has only two fields: type and length.

According to the different frame types, it is roughly divided into two categories: data frame and control frame. HEADERS frame ( HTTP header) and DATA frame ( HTTP packet body) belong to data frames.
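Here is a small sketch of reading frames with just a type and a length field. It assumes both fields use QUIC's variable-length integer encoding (the two highest bits of the first byte give the total length of 1, 2, 4 or 8 bytes) and only names the two frame types mentioned above; the helper names are invented for illustration.

```python
FRAME_NAMES = {0x0: "DATA", 0x1: "HEADERS"}


def encode_varint(value: int) -> bytes:
    """Encode an integer as a QUIC variable-length integer."""
    for prefix, size in ((0, 1), (1, 2), (2, 4), (3, 8)):
        if value < (1 << (8 * size - 2)):
            raw = value.to_bytes(size, "big")
            return bytes([raw[0] | (prefix << 6)]) + raw[1:]
    raise ValueError("value too large for a variable-length integer")


def decode_varint(buf: bytes, offset: int = 0):
    """Return (value, next_offset) for the varint starting at offset."""
    first = buf[offset]
    size = 1 << (first >> 6)  # 1, 2, 4 or 8 bytes
    value = first & 0x3F
    for byte in buf[offset + 1:offset + size]:
        value = (value << 8) | byte
    return value, offset + size


def make_frame(frame_type: int, payload: bytes) -> bytes:
    """Frame = type (varint) + length (varint) + payload."""
    return encode_varint(frame_type) + encode_varint(len(payload)) + payload


def parse_frames(buf: bytes):
    """Split a buffer into (frame name, payload) pairs."""
    frames, offset = [], 0
    while offset < len(buf):
        frame_type, offset = decode_varint(buf, offset)
        length, offset = decode_varint(buf, offset)
        frames.append((FRAME_NAMES.get(frame_type, hex(frame_type)),
                       buf[offset:offset + length]))
        offset += length
    return frames


if __name__ == "__main__":
    wire = make_frame(0x1, b"compressed headers") + make_frame(0x0, b"x" * 70)
    for name, payload in parse_frames(wire):
        print(name, len(payload))
```

Note that there is no stream id in the frame header at all: the frame is simply read from whichever QUIC stream it arrived on.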

HTTP/3 also upgrades the header compression algorithm, to QPACK. Similar to HPACK encoding in HTTP/2, QPACK in HTTP/3 also uses a static table, a dynamic table and Huffman coding.

As for the static table, HPACK in HTTP/2 has only 61 entries, while QPACK in HTTP/3 has expanded it to 99 entries.

The Huffman coding of HTTP/2 and HTTP/3 is not much different, but the encoding and decoding of the dynamic table differ.

As for the dynamic table: after the first request-response, both parties add the Header items that are not in the static table to their own dynamic tables. Subsequent transmissions then use only a single number, and the other party looks up the corresponding data in the dynamic table by that number. There is no need to transmit the long data every time, which greatly improves coding efficiency.

It can be seen that the dynamic table is order-dependent. If a packet of the first request is lost while subsequent requests are received, the other party cannot decode their HPACK headers because it has not yet built the dynamic table, so decoding of the subsequent requests is blocked until the lost packet of the first request has been retransmitted.

How does QPACK in HTTP/3 solve this problem?

QUIC has two special unidirectional streams. On a unidirectional stream only one end can send messages, whereas on a bidirectional stream both ends can. HTTP messages are transmitted over bidirectional streams. The two unidirectional streams are used as follows:

- One is called the QPACK Encoder Stream, which is used to pass a dictionary (key-value) to the other party. For example, when it encounters an HTTP request header that is not in the static table, the client can send the dictionary entry through this Stream;
- The other is the QPACK Decoder Stream, which is used to respond to the other party, telling it that the dictionary entry it just sent has been added to the local dynamic table and that it can use this entry for encoding from now on.

These two special unidirectional streams are used to synchronize the dynamic tables of both parties. The encoder only uses the dynamic table to encode HTTP headers after it has received the decoder's acknowledgment of the update.

Summary

Although HTTP/2 can transmit multiple streams concurrently, its transport layer is the TCP protocol, so it has the following defects:

- Head-of-line blocking. In HTTP/2, multiple requests run over one TCP connection. If a TCP segment with a lower sequence number is lost in transit, then even if segments with higher sequence numbers have been received, the application layer cannot read that part of the data from the kernel. From the HTTP perspective, multiple requests are blocked;
- TCP and TLS handshake delay. The TCP three-way handshake plus the TLS four-way handshake add up to a delay of 3 RTT;
- Connection migration requires reconnection. When a mobile device switches from a 4G network to WIFI, the IP address or port changes. Since a TCP connection is identified by a four-tuple, the TCP connection can only be torn down and re-established, so the cost of switching networks is high;

HTTP/3 replaces TCP with UDP at the transport layer and develops the QUIC protocol on top of UDP to ensure reliable data transmission.

Features of QUIC

- No head-of-line blocking. The multiple Streams on a QUIC connection have no dependencies on each other; they are independent and are not constrained by the underlying protocol. If a packet of one stream is lost, only that stream is affected, and the other streams are not;
- Faster connection establishment. Because QUIC contains TLS1.3 internally, only 1 RTT is needed to establish the connection and complete TLS key negotiation "simultaneously". On a later connection, application data can even be sent together with the QUIC handshake information (connection information + TLS information), achieving a 0-RTT effect;
- Connection migration. The QUIC protocol does not use a four-tuple to "bind" the connection but uses a "connection ID" to mark the two endpoints of the communication, and the client and server can each choose a set of IDs to identify themselves. So even if the mobile device's network changes and its IP address changes with it, as long as the context information (such as the connection ID and the TLS key) is still retained, the original connection can be reused "seamlessly", eliminating the cost of reconnection;

In addition, QPACK in HTTP/3 synchronizes the dynamic tables of both parties through two special unidirectional streams, which solves the head-of-line blocking problem of HPACK in HTTP/2.

The following figure (from another cloud vendor) shows packet loss and its impact under HTTP/2 and HTTP/3:

HTTP/2 multiplexes 2 requests. The response is broken down into multiple packets, once a packet is lost, both requests are blocked

HTTP/3 multiplexes 2 requests. Although the light-colored packets are lost, the dark-colored packets are transmitted well.

Reference:

[HTTP1.0 HTTP1.1 HTTP2.0 main feature comparison](https://segmentfault.com/a/1190000013028798)
