Author: Wang Jianghua Address: https://mp.weixin.qq.com/s/do9bXH9qJzt4kNyxlEOyJg

1. Introduction to Kafka

Kafka is a distributed message queue based on the publish/subscribe model, used mainly for real-time data processing in big data systems. Its main design goals are:

- Message persistence with O(1) time complexity, keeping access performance constant even when the stored data reaches the terabyte scale

- High throughput: even on inexpensive hardware, a single machine can handle 100,000 messages per second

- Partitioning of messages across Kafka servers and distributed consumption, while guaranteeing that messages within each partition are delivered in order, with support for both offline (batch) and real-time data processing

2. Why use a messaging system

Kafka is essentially a message queue (MQ). So what are the benefits of using a message queue?

- Decoupling: the processing on the producing side and on the consuming side of the queue can be modified independently, without affecting each other.

- Redundancy: data processing sometimes fails, and that failure can cause data loss. A message queue persists messages until they have been fully processed, which avoids this risk and keeps your data safely stored until you are done with it.

- Handling traffic peaks: a sudden burst of requests does not bring the system down. The queue absorbs the spike, which smooths out the mismatch between the rate at which messages are produced and the rate at which they are consumed.

- Asynchronous communication: a producer can put a message into the queue and move on without waiting for it to be processed; consumers pick it up and process it later.
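To make the peak-handling point concrete, here is a toy simulation. The tick-based model and all the numbers are illustrative assumptions, not Kafka behavior: a burst of messages is absorbed by the queue and then drained by a consumer that works at its own fixed rate, instead of overwhelming it.

```python
from collections import deque


def simulate_backlog(arrivals, consume_per_tick):
    """Return the queue length after each tick.

    arrivals[i] is how many messages the producer emits during tick i; the
    consumer processes at most consume_per_tick messages per tick.
    """
    queue = deque()
    backlog = []
    next_id = 0
    for produced in arrivals:
        # Producer side: the whole burst is enqueued immediately.
        for _ in range(produced):
            queue.append(next_id)
            next_id += 1
        # Consumer side: drains at its own steady pace.
        for _ in range(min(consume_per_tick, len(queue))):
            queue.popleft()
        backlog.append(len(queue))
    return backlog


if __name__ == "__main__":
    # A spike of 50 messages in tick 1 against a consumer that handles 10 per tick.
    print(simulate_backlog([5, 50, 5, 5, 5, 5, 5, 5], consume_per_tick=10))
    # prints [0, 40, 35, 30, 25, 20, 15, 10]
```

The backlog grows during the spike and shrinks afterwards; the consumer never has to process more than 10 messages in any tick.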

3. Kafka basics

Below are some important Kafka concepts, to give an overall picture of how Kafka is put together.

- Producer: the message producer, i.e. the client that sends messages to a Kafka broker.

- Consumer: the message consumer, i.e. the client that reads messages from a Kafka broker.

- Consumer Group: a set of consumers that share the work of consuming a topic. Each consumer in the group consumes data from different partitions, which increases consumption throughput. Within a group, a partition can be consumed by only one consumer, and different consumer groups do not affect each other.

- Broker: a single Kafka server is a broker. A cluster consists of multiple brokers, and one broker can host multiple topics.

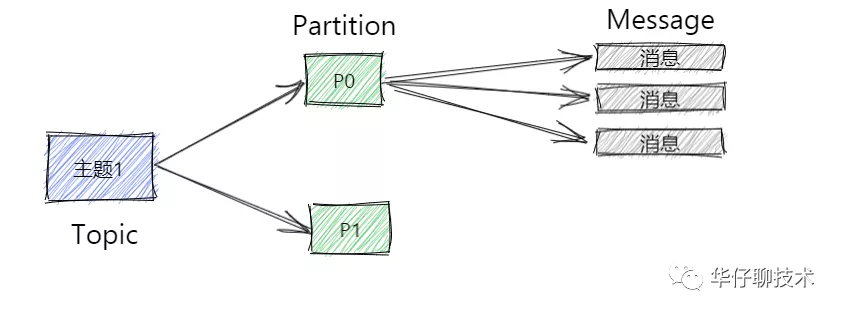

- Topic: can simply be thought of as a queue. Topics categorize messages, and producers and consumers both work with the same topic.

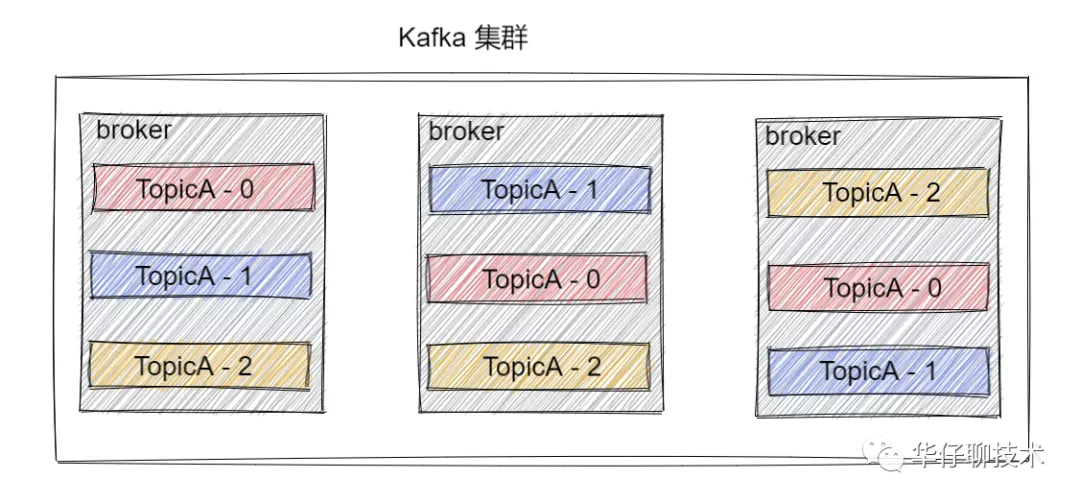

- Partition: to make topics scalable and increase concurrency, a topic is divided into multiple partitions, which can be distributed across multiple brokers so that a very large topic is not limited to a single machine. Each partition is an ordered queue.

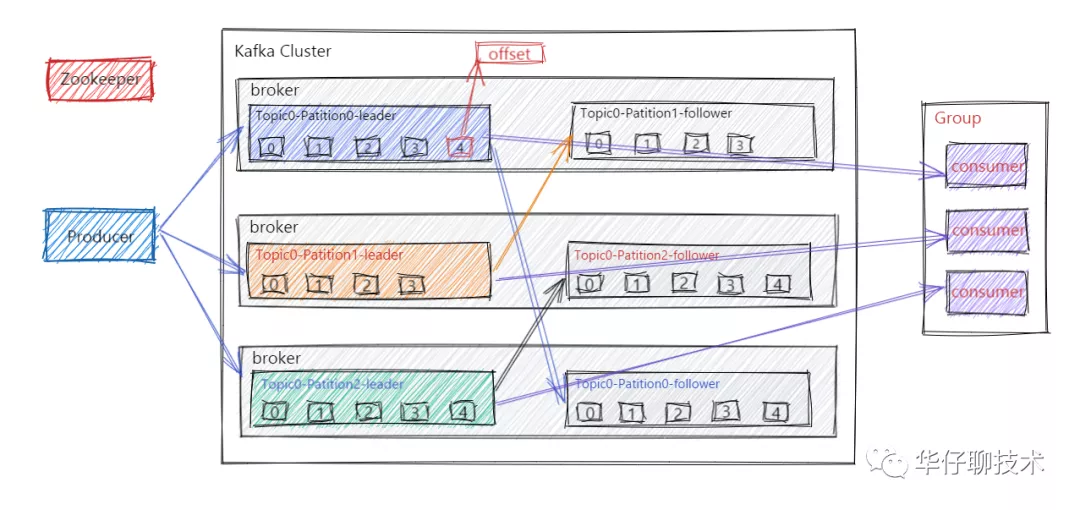

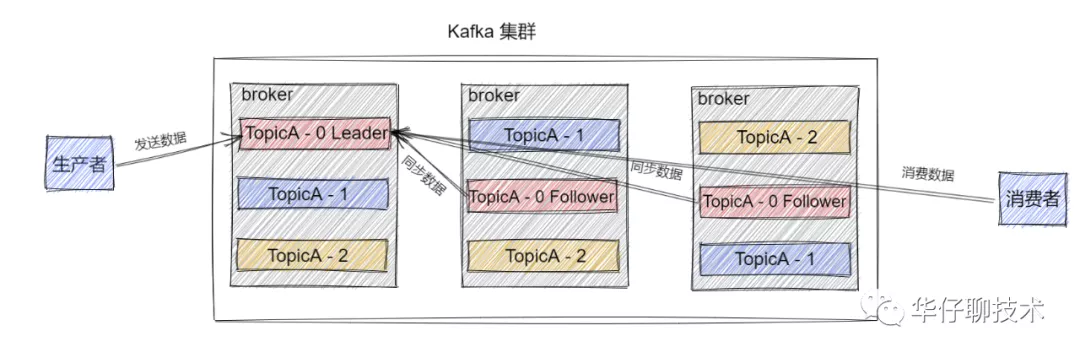

- Replica: replicas provide data backup. To ensure that when a node in the cluster fails, the partition data on that node is not lost and Kafka can keep working, Kafka provides a replication mechanism: each partition of a topic has several replicas, consisting of one leader replica and several follower replicas.

- Leader: the primary replica among a partition's replicas. Producers send data to the leader, and consumers consume data from the leader.

- Follower: a secondary replica of a partition. Followers continuously synchronize data from the leader to stay in sync with it. When the leader fails, one of the followers is elected as the new leader. A follower is never placed on the same broker as its leader, so that a single broker crash cannot take out both copies and the data can still be recovered.

- Offset: the position up to which a consumer has consumed. It tracks how far consumption has progressed, so that when a consumer crashes and later recovers, it can continue consuming from where it left off.

- ZooKeeper service: a Kafka cluster depends on ZooKeeper to work normally; ZooKeeper stores and manages the cluster's metadata. Newer Kafka versions are gradually removing this dependency: in KRaft mode, metadata is managed by a Raft-based controller quorum inside Kafka itself, without ZooKeeper.
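The consumer group rule above (each partition goes to exactly one consumer within a group, and groups are independent) can be sketched as a simple contiguous "range" split. Kafka ships several assignment strategies (range, round-robin, sticky), and which one is used depends on configuration, so treat this as an illustration of the invariant rather than Kafka's exact algorithm. The consumer names are hypothetical.

```python
def assign_partitions(partitions, consumers):
    """Assign each partition to exactly one consumer of a single group.

    Partitions are sorted and handed out in contiguous runs; the first
    len(partitions) % len(consumers) consumers receive one extra partition.
    """
    if not consumers:
        raise ValueError("a group needs at least one consumer")
    consumers = sorted(consumers)
    partitions = sorted(partitions)
    per_consumer, extra = divmod(len(partitions), len(consumers))
    assignment = {}
    start = 0
    for i, consumer in enumerate(consumers):
        count = per_consumer + (1 if i < extra else 0)
        assignment[consumer] = partitions[start:start + count]
        start += count
    return assignment


if __name__ == "__main__":
    partitions = [0, 1, 2]
    # Group A: two consumers share the three partitions.
    print(assign_partitions(partitions, ["a1", "a2"]))  # {'a1': [0, 1], 'a2': [2]}
    # Group B is independent: its single consumer reads every partition.
    print(assign_partitions(partitions, ["b1"]))  # {'b1': [0, 1, 2]}
    # More consumers than partitions: the extra consumer sits idle.
    print(assign_partitions(partitions, ["c1", "c2", "c3", "c4"]))
```

The last case shows why adding consumers beyond the partition count does not increase a group's consumption throughput.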

4. Kafka cluster architecture

4.1 Workflow

Before looking at the Kafka cluster itself, let's first go over Kafka's workflow. A Kafka cluster stores streams of records in topics, and each record consists of a key, a value, and a timestamp.

Messages in Kafka are categorized by topic: producers produce messages to a topic, and consumers consume messages from that same topic. A topic, however, is a logical concept, while a partition is a physical one. Each partition corresponds to a log file, which stores the data produced by producers. Producer data is continuously appended to the end of this log file, and each record is assigned its own offset. Each consumer in a consumer group also keeps track, in real time, of the offset it has consumed up to, so that after recovering from a crash it can continue consuming from the last offset.
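A minimal in-memory model of one partition, assuming nothing is ever deleted (real Kafka removes old segments according to retention settings, so the earliest available offset is not always 0). Records carry the key, value, and timestamp described above, appends go to the end and return the record's offset, and a consumer only needs to remember the next offset to read in order to resume. The class names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Record:
    key: Optional[str]
    value: str
    timestamp: int  # milliseconds since the epoch, supplied by the caller here


@dataclass
class PartitionLog:
    records: List[Record] = field(default_factory=list)

    def append(self, record: Record) -> int:
        """Append to the end of the log and return the record's offset."""
        self.records.append(record)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int) -> List[Record]:
        """Return up to max_records records starting at offset."""
        return self.records[offset:offset + max_records]


if __name__ == "__main__":
    log = PartitionLog()
    for i, value in enumerate(["a", "b", "c", "d"]):
        print(log.append(Record(key=None, value=value, timestamp=1_000 + i)))  # 0, 1, 2, 3

    position = 0  # next offset this consumer will read
    batch = log.read(position, max_records=2)
    position += len(batch)
    # ...the consumer crashes here; on restart it resumes from the saved position.
    print([r.value for r in log.read(position, max_records=2)])  # ['c', 'd']
```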

4.2 Storage mechanism

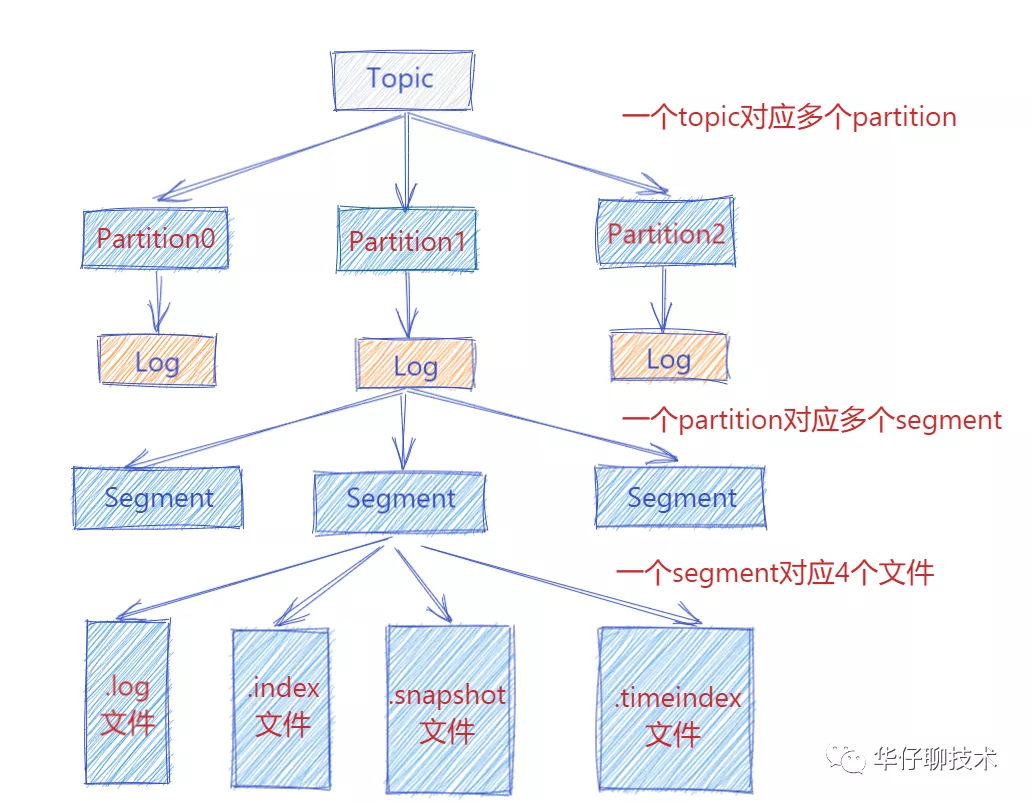

Because producers keep appending messages to the end of the log file, the file grows larger and larger. To keep log files from becoming so large that locating data gets inefficient, Kafka uses a segmentation and indexing mechanism. It divides each partition into multiple segments, and each segment corresponds to 4 files: an ".index" index file, a ".log" data file, a ".snapshot" snapshot file, and a ".timeindex" time index file. These files all live in the same directory, which is named "topic name-partition number". For example, if the topic of a heartbeat-reporting service has three partitions, the corresponding directories are heartbeat-0, heartbeat-1, and heartbeat-2.
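The naming rules can be written down directly. On disk, Kafka names each segment's files after the offset of the first message in the segment, zero-padded to 20 digits (for example `00000000000000000000.log`). The helper function names below are hypothetical, and the base offset 170410 is an illustrative value.

```python
from typing import List

# The four per-segment file types listed above.
SEGMENT_SUFFIXES = (".index", ".log", ".snapshot", ".timeindex")


def partition_dirs(topic: str, num_partitions: int) -> List[str]:
    """Directory names follow the "<topic>-<partition>" rule."""
    return [f"{topic}-{p}" for p in range(num_partitions)]


def segment_files(base_offset: int) -> List[str]:
    """File names for one segment: its first offset, zero-padded to 20 digits."""
    stem = f"{base_offset:020d}"
    return [stem + suffix for suffix in SEGMENT_SUFFIXES]


if __name__ == "__main__":
    print(partition_dirs("heartbeat", 3))  # ['heartbeat-0', 'heartbeat-1', 'heartbeat-2']
    print(segment_files(170410))  # ['00000000000000170410.index', ...]
```

Zero-padding makes a plain lexicographic sort of the file names match numeric offset order.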

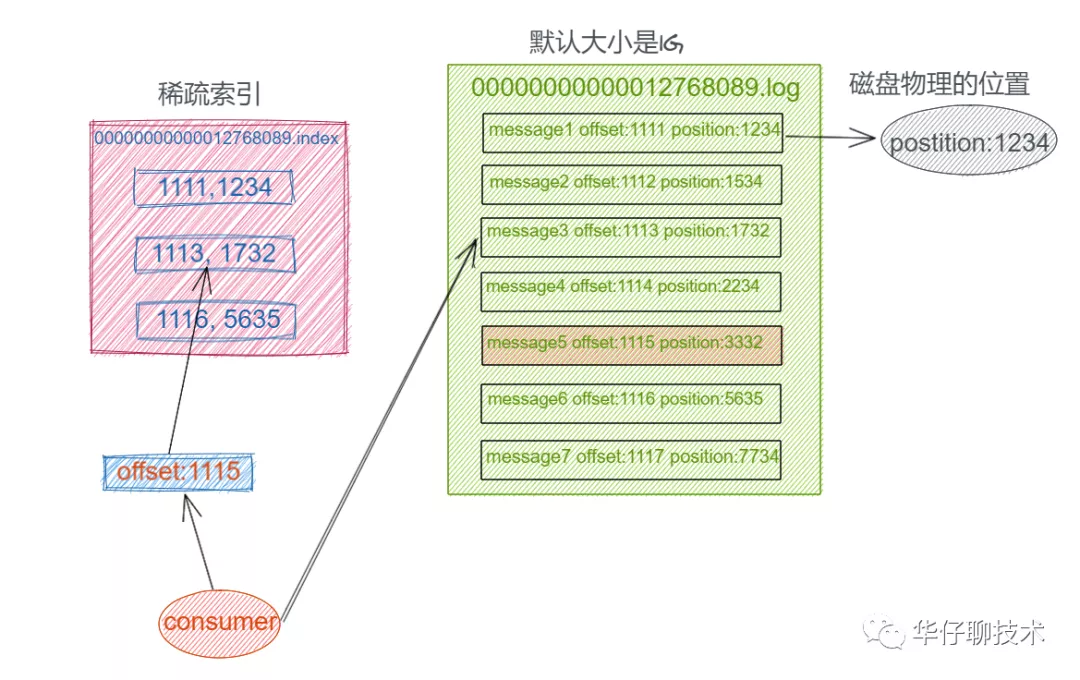

The .index, .log, .snapshot, and .timeindex files of a segment are all named after the offset of the first message in that segment. The ".index" file stores index entries, the ".log" file stores the message data itself, and each entry in the index file points to the physical position of a message in the corresponding data file. The index is sparse: it does not contain an entry for every message.
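Finding a message by offset is therefore a two-step lookup, sketched below. First, binary-search the sorted segment base offsets for the last one that is at or below the target. Second, because the offset index is sparse (Kafka adds roughly one entry per `index.interval.bytes` of log data, 4096 bytes by default), find the last index entry at or before the target and scan the .log file forward from that byte position. Index entries store offsets relative to the segment's base offset. All offsets and positions in the example are made-up values, and the function names are hypothetical.

```python
import bisect
from typing import List, Tuple


def find_segment(base_offsets: List[int], target: int) -> int:
    """Return the base offset of the segment that contains target.

    base_offsets must be sorted; the right segment is the last one whose base
    offset is <= target.
    """
    i = bisect.bisect_right(base_offsets, target) - 1
    if i < 0:
        raise ValueError("offset is older than the first segment")
    return base_offsets[i]


def start_position(sparse_index: List[Tuple[int, int]], relative_offset: int) -> int:
    """Return the byte position at which to start scanning the .log file.

    sparse_index holds sorted (relative_offset, byte_position) pairs. Since not
    every message is indexed, take the last entry at or before the target.
    """
    keys = [rel for rel, _ in sparse_index]
    i = bisect.bisect_right(keys, relative_offset) - 1
    return sparse_index[i][1] if i >= 0 else 0


if __name__ == "__main__":
    segments = [0, 170410, 239430]  # hypothetical segment base offsets
    target = 170417
    base = find_segment(segments, target)  # 170410
    index = [(0, 0), (6, 1407), (12, 2814)]  # hypothetical entries of 00000000000000170410.index
    print(base, start_position(index, target - base))  # 170410 1407
```

Both steps are binary searches, so locating the starting point costs logarithmic time, and only a short forward scan of the .log file remains.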

The following figure shows the structure diagram of index file and log file:

4.3 Replicas

To keep data safe, each partition in Kafka can have multiple replicas. In this example, partitions 0, 1, and 2 are each given 3 replicas (note: two replicas would be more appropriate). The replicas take on different roles: one is chosen as the leader replica and the others become follower replicas. When a producer sends data, it can send it only to the leader partition, and the follower partitions then fetch the data from the leader on their own. When a consumer consumes data, it reads only from the leader replica (this is the default; since Kafka 2.4, consumers can optionally be configured to fetch from a follower replica).
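The leader/follower data flow can be sketched as follows, under simplifying assumptions: the first broker in the list hosts the leader, followers copy data only when `replicate()` is called (real followers fetch continuously), and details such as in-sync replica tracking are deliberately left out. The class names are hypothetical.

```python
from typing import List


class Replica:
    def __init__(self, broker_id: int):
        self.broker_id = broker_id
        self.log: List[str] = []

    def log_end_offset(self) -> int:
        """Offset that the next appended message would get."""
        return len(self.log)


class ReplicatedPartition:
    def __init__(self, broker_ids: List[int]):
        # Replicas of one partition must sit on different brokers.
        if len(set(broker_ids)) != len(broker_ids):
            raise ValueError("replicas of a partition must be on distinct brokers")
        self.leader = Replica(broker_ids[0])
        self.followers = [Replica(b) for b in broker_ids[1:]]

    def produce(self, message: str) -> int:
        """Producers write only to the leader; returns the message's offset."""
        self.leader.log.append(message)
        return self.leader.log_end_offset() - 1

    def replicate(self) -> None:
        """Each follower pulls what it is missing, starting at its own log end offset."""
        for follower in self.followers:
            start = follower.log_end_offset()
            follower.log.extend(self.leader.log[start:])

    def consume(self, offset: int) -> List[str]:
        """Consumers read from the leader (the default behavior)."""
        return self.leader.log[offset:]


if __name__ == "__main__":
    p = ReplicatedPartition([1, 2, 3])
    p.produce("m0")
    p.produce("m1")
    print([f.log for f in p.followers])  # [[], []] until the followers fetch
    p.replicate()
    print([f.log for f in p.followers])  # [['m0', 'm1'], ['m0', 'm1']]
```

Because each follower fetches from its own log end offset, a second `replicate()` call copies only messages produced since the previous one.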

4.4 Controller

The Kafka controller is simply one of the brokers in the Kafka cluster. In addition to an ordinary broker's duties of handling message production, consumption, and replication, it also takes on some extra work, such as electing new partition leaders when brokers fail. Kafka chooses the controller on a first-come, first-served basis: the first broker that successfully creates the ephemeral node /controller in ZooKeeper becomes the controller. Generally, the first broker started in the Kafka cluster becomes the controller and writes its broker ID and other information into the ephemeral /controller node.
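The election rule can be modeled with a hypothetical in-memory store that mimics two ZooKeeper properties: creating a node fails if the path already exists, and an ephemeral node disappears when the session that created it ends. This is not the ZooKeeper client API. Using the broker ID as the session ID and the `{"brokerid": ...}` payload are simplifying assumptions. In real Kafka the surviving brokers watch /controller and try again when it disappears; the sketch makes that retry explicit.

```python
from typing import Dict, Optional, Tuple


class EphemeralStore:
    """Hypothetical in-memory stand-in for ZooKeeper ephemeral nodes."""

    def __init__(self) -> None:
        self._nodes: Dict[str, Tuple[int, dict]] = {}  # path -> (owner session, data)

    def create(self, path: str, data: dict, session: int) -> bool:
        # Only the first creator succeeds; later attempts see the node already exists.
        if path in self._nodes:
            return False
        self._nodes[path] = (session, data)
        return True

    def get(self, path: str) -> Optional[dict]:
        entry = self._nodes.get(path)
        return entry[1] if entry else None

    def close_session(self, session: int) -> None:
        # Ephemeral semantics: every node owned by the session is removed.
        self._nodes = {p: v for p, v in self._nodes.items() if v[0] != session}


def try_become_controller(store: EphemeralStore, broker_id: int) -> bool:
    return store.create("/controller", {"brokerid": broker_id}, session=broker_id)


if __name__ == "__main__":
    zk = EphemeralStore()
    results = [try_become_controller(zk, b) for b in (1, 2, 3)]  # brokers start in order
    print(results, zk.get("/controller"))  # [True, False, False] {'brokerid': 1}

    zk.close_session(1)  # broker 1 dies, so its ephemeral node disappears
    results = [try_become_controller(zk, b) for b in (2, 3)]
    print(results, zk.get("/controller"))  # [True, False] {'brokerid': 2}
```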

4.5 Maintenance of Offset

A consumer may fail during consumption, for example because of a power outage or a crash. After recovering, it needs to resume consuming from the offset it had reached before the failure, so the consumer must record, in real time, which offset it has consumed up to. Before Kafka 0.9, consumers stored offsets in ZooKeeper by default; since version 0.9, consumers store offsets by default in a built-in Kafka topic, __consumer_offsets, which supports high-concurrency reads and writes.
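Conceptually, __consumer_offsets holds the latest committed offset for each (group, topic, partition); the topic is log-compacted, so only the newest value per key is retained. The sketch below models that with a dictionary. By Kafka convention, the committed offset is the offset of the next message to read. When no offset has been committed, real consumers fall back according to the `auto.offset.reset` setting; here the fallback is simply 0. The class, function, and group names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple


class OffsetStore:
    """In-memory sketch: latest committed offset per (group, topic, partition)."""

    def __init__(self) -> None:
        self._committed: Dict[Tuple[str, str, int], int] = {}

    def commit(self, group: str, topic: str, partition: int, next_offset: int) -> None:
        # A later commit for the same key overwrites the earlier one.
        self._committed[(group, topic, partition)] = next_offset

    def fetch(self, group: str, topic: str, partition: int, default: int = 0) -> int:
        return self._committed.get((group, topic, partition), default)


def consume(log: List[str], store: OffsetStore, group: str, topic: str,
            partition: int, max_records: int, handle: Callable[[str], None]) -> int:
    """Resume from the committed offset, process a batch, then commit; returns the new offset."""
    start = store.fetch(group, topic, partition)
    batch = log[start:start + max_records]
    for message in batch:
        handle(message)  # process first...
    # ...then commit, so a crash mid-batch means the batch is read again, not skipped.
    store.commit(group, topic, partition, start + len(batch))
    return start + len(batch)


if __name__ == "__main__":
    log = ["m0", "m1", "m2", "m3", "m4"]
    offsets = OffsetStore()
    processed: List[str] = []
    consume(log, offsets, "billing", "heartbeat", 0, 3, processed.append)
    # The consumer restarts; it picks up at the committed offset 3.
    consume(log, offsets, "billing", "heartbeat", 0, 3, processed.append)
    print(processed)  # ['m0', 'm1', 'm2', 'm3', 'm4']
    print(offsets.fetch("audit", "heartbeat", 0))  # 0: another group has its own offsets
```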

5. Summary

In this article we took an in-depth look at Kafka: an introduction, the basic concepts, and the cluster architecture. The next article will explain Kafka's ingenious design ideas in terms of its "three highs": high performance, high availability, and high concurrency. Stay tuned!
