Author: Wang Jianghua Original: https://mp.weixin.qq.com/s/jSAgh_cPgEo-jRuEwErFQg

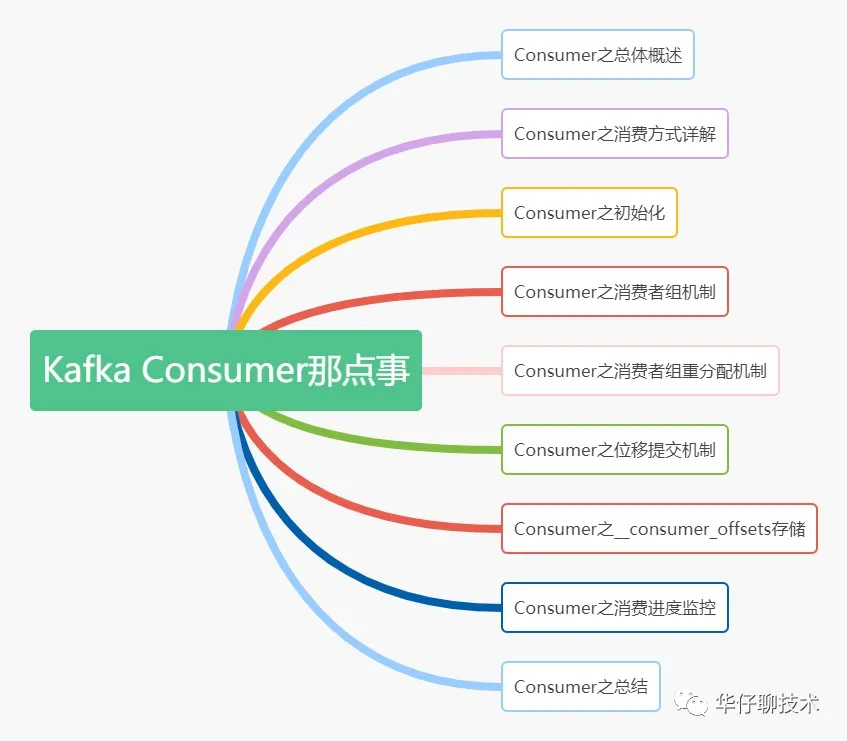

The previous article covered the design and internals of the Kafka Producer in detail. This article turns to the Kafka Consumer: its design ideas and the principles underlying it.

1. General overview of Consumer

In Kafka, the party that consumes messages is called the Consumer, and it is one of Kafka's core components. Its main job is to consume and process the messages produced by the Producer. So how are those messages consumed? What consumption modes are there, what are the partition allocation strategies, how do consumer groups and rebalancing work, how are offsets committed and stored, and how is consumption progress monitored? The following sections go through these questions one by one.

2. Detailed explanation of Consumer's consumption method

Message queues generally deliver messages in one of two ways: (1) Push mode and (2) Pull mode. Which one does the Kafka Consumer use? It uses Pull mode: the Consumer actively pulls data from the Broker. Each mode has its own advantages and disadvantages, so let's analyze them:

1) Why not Push mode? With Push mode, the biggest disadvantage is that the Broker does not know how fast each Consumer can consume, yet the push rate is controlled by the Broker, so messages can easily pile up on the Consumer. If the processing done in the Consumer is time-consuming, the Consumer falls further and further behind, and in serious cases the system may crash.

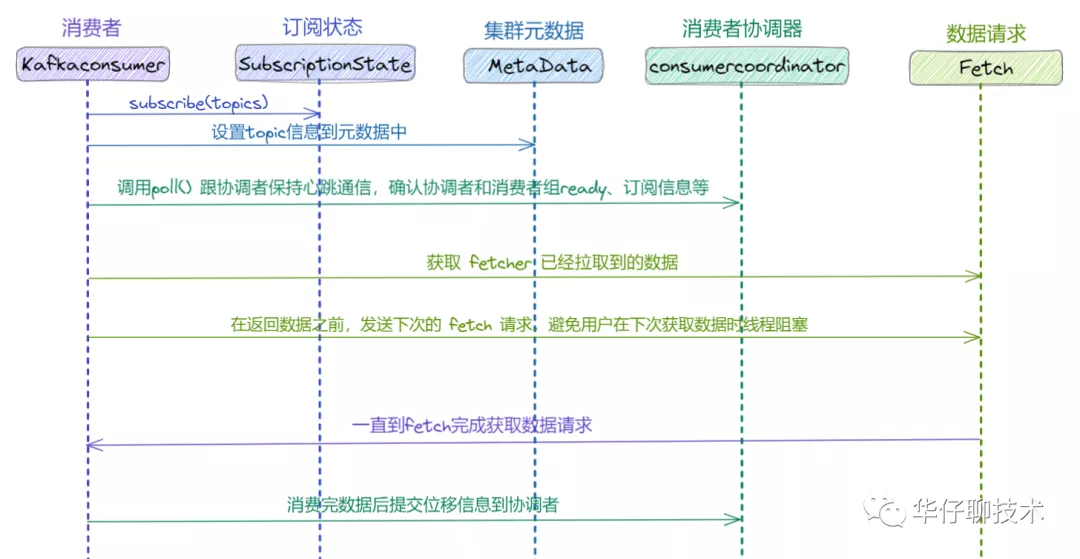

2) Why Pull mode? With Pull mode, the Consumer can pull data at a pace that suits its own state, or delay processing. Pull mode has a shortcoming of its own, though: if the Broker has no messages, the Consumer may keep pulling in a loop and get empty results every time. Kafka addresses this with long polling: each call to poll() takes a timeout parameter, and when no data is available the call blocks until data arrives or the timeout expires.
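The long-polling idea can be illustrated without a broker. The sketch below is not Kafka code: `LongPollSketch` is a hypothetical in-memory stand-in for a partition, and it only shows how a poll with a timeout avoids a busy loop of empty fetches.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical in-memory stand-in for a broker partition; not part of the Kafka API.
class LongPollSketch {
    private final BlockingQueue<String> messages = new LinkedBlockingQueue<>();

    // Producer side: append one message.
    void produce(String message) {
        messages.add(message);
    }

    // Consumer side: wait up to timeoutMs for a message.
    // Returns null if nothing arrived before the timeout expired.
    String poll(long timeoutMs) {
        try {
            return messages.poll(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the caller
            return null;
        }
    }

    public static void main(String[] args) {
        LongPollSketch partition = new LongPollSketch();
        System.out.println(partition.poll(100)); // no data: blocks for the timeout, then null
        partition.produce("hello");
        System.out.println(partition.poll(100)); // data available: returns without waiting
    }
}
```

The consumer thread sleeps inside the call instead of spinning, which is the effect the timeout parameter of poll() is meant to achieve.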

3. Initialization of Consumer

Having covered the consumption modes, their trade-offs, and how Kafka works around the drawbacks, let's look at what Consumer initialization involves.

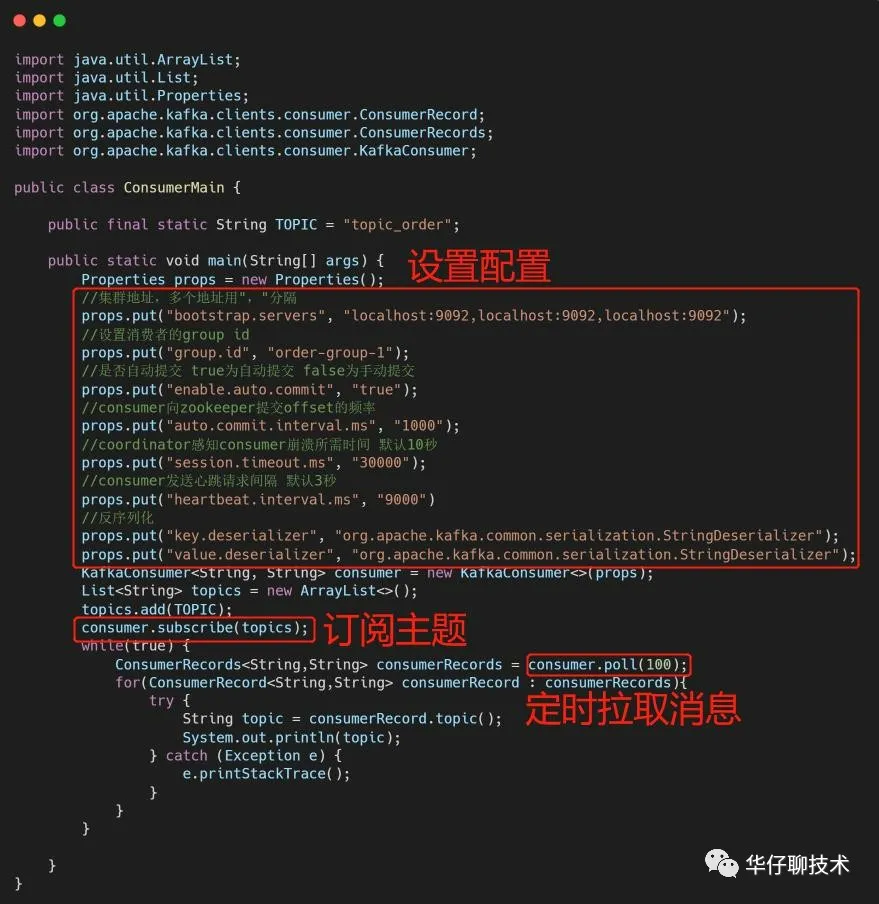

First look at the Kafka consumer initialization code:

It can be seen from the code that there are 4 steps to initialize Consumer:

- 1. Construct a Properties object holding the Consumer-related configuration;

- 2. Create a KafkaConsumer object (the Consumer);

- 3. Subscribe to the corresponding list of Topics;

- 4. Call the Consumer's poll() method to pull messages from the subscribed Topics.
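As a rough illustration of these four steps, the sketch below builds the configuration with plain `java.util.Properties`. The class name `ConsumerConfigSketch`, the topic name, and the chosen values are assumptions for illustration. Steps 2 to 4 need the kafka-clients library and a reachable broker, so they appear only as comments.

```java
import java.util.Properties;

// Illustrative helper; the class name and the chosen values are assumptions.
class ConsumerConfigSketch {

    // Step 1: build the consumer configuration.
    static Properties buildConfig(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("group.id", groupId);
        props.put("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("enable.auto.commit", "true");
        props.put("auto.commit.interval.ms", "5000");
        return props;
    }

    // Steps 2-4 (require kafka-clients on the classpath and a running broker):
    //   KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);   // step 2
    //   consumer.subscribe(Arrays.asList("topic-order"));                      // step 3
    //   ConsumerRecords<String, String> records =
    //           consumer.poll(Duration.ofMillis(1000));                        // step 4

    public static void main(String[] args) {
        System.out.println(buildConfig("localhost:9092", "test-group-1"));
    }
}
```

In a real application the poll() call sits inside a loop, and the consumer is closed when the loop exits.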

The Kafka consumer consumption flow chart is as follows:

4. Consumer group mechanism of Consumer

4.1 Consumer Group mechanism

After covering Consumer initialization, let's turn to the Consumer Group mechanism. Why did Kafka design the Consumer Group; wouldn't a plain Consumer be enough? Kafka is a messaging system built for high throughput, low latency, high concurrency, and high scalability. If a topic holds millions to tens of millions of messages and only a single Consumer process consumes them, the consumption speed will clearly be poor, so a more scalable mechanism is needed to keep consumption on pace. This is where the Consumer Group comes in: it is the scalable, fault-tolerant consumer mechanism provided by Kafka.

The characteristics of Kafka Consumer Group are as follows:

- 1. Each Consumer Group has one or more Consumers

- 2. Each Consumer Group has a public and unique Group ID

- 3. When a Consumer Group consumes a Topic, each Partition of the Topic is assigned to exactly one Consumer in the group. Once a message has been consumed by any one Consumer in the group, it is considered successfully consumed by that Consumer Group.

4.2 Partition allocation strategy mechanism

A Consumer Group contains multiple Consumers and a Topic contains multiple Partitions, so Partitions inevitably have to be allocated: something must decide which Partition is consumed by which Consumer.

The Kafka client provides three partition assignment strategies: RangeAssignor, RoundRobinAssignor and StickyAssignor. The first two assignment schemes are relatively simple. The StickyAssignor assignment scheme is relatively complicated.

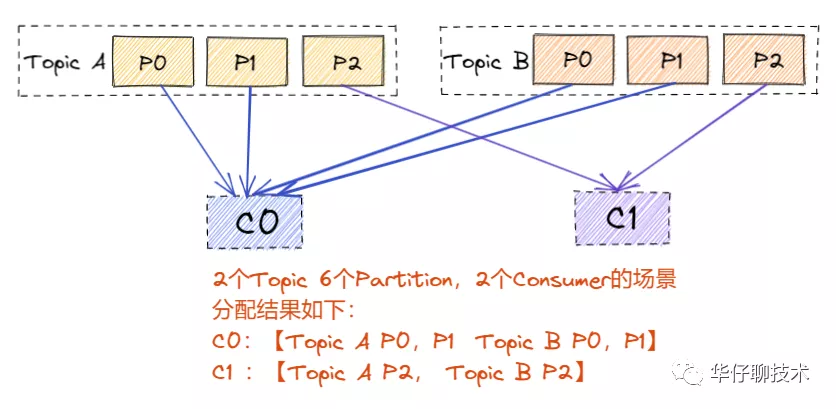

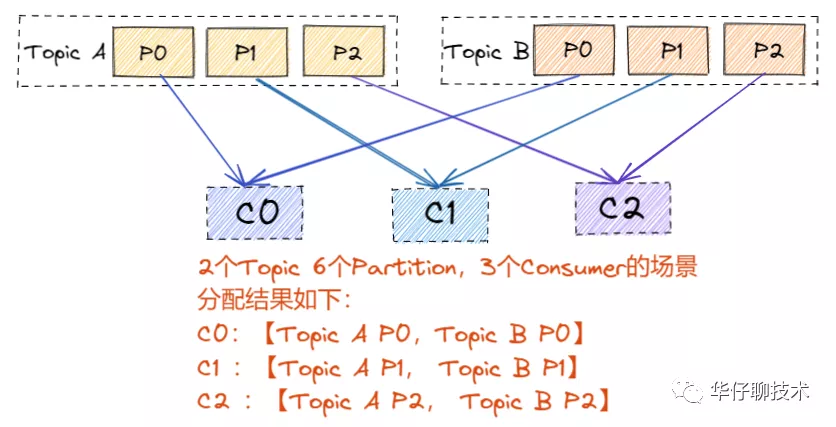

4.2.1 RangeAssignor

RangeAssignor is Kafka's default partition allocation algorithm. It allocates topic by topic. For each topic, it first sorts the Partitions by partition ID, then sorts the Consumers in the Consumer Group that subscribe to the topic, and then assigns contiguous ranges of partitions to the Consumers as evenly as it can. When the number of partitions is not evenly divisible by the number of consumers, the Consumers at the front of the sorted list receive one extra partition each and may therefore be overloaded.

The analysis of the partition allocation scenario is shown in the following figure (multiple consumers under the same consumer group):

Conclusion: The obvious problem with this distribution method is that as the number of topics subscribed by consumers increases, the imbalance problem will become more and more serious.
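The range arithmetic for a single topic can be sketched as follows. This is a simplified illustration rather than Kafka's own implementation: `RangeAssignSketch` and its method are hypothetical names, and partitions are represented by their IDs only.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified, single-topic illustration of range assignment; names are hypothetical.
class RangeAssignSketch {

    // Assigns partitions 0..numPartitions-1 of ONE topic to the sorted consumers.
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        List<String> sorted = new ArrayList<>(consumers);
        Collections.sort(sorted);

        int perConsumer = numPartitions / sorted.size(); // base range size
        int extra = numPartitions % sorted.size();       // first `extra` consumers get one more

        Map<String, List<Integer>> result = new TreeMap<>();
        for (int i = 0; i < sorted.size(); i++) {
            int start = perConsumer * i + Math.min(i, extra);
            int length = perConsumer + (i < extra ? 1 : 0);
            List<Integer> range = new ArrayList<>();
            for (int p = start; p < start + length; p++) {
                range.add(p);
            }
            result.put(sorted.get(i), range);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> group = new ArrayList<>();
        Collections.addAll(group, "C0", "C1", "C2");
        // 7 partitions over 3 consumers: C0 receives the extra partition.
        System.out.println(assign(group, 7)); // {C0=[0, 1, 2], C1=[3, 4], C2=[5, 6]}
    }
}
```

Because the same computation is repeated for every topic, C0 would receive the extra partition of each subscribed topic, which is how the imbalance grows with the number of topics.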

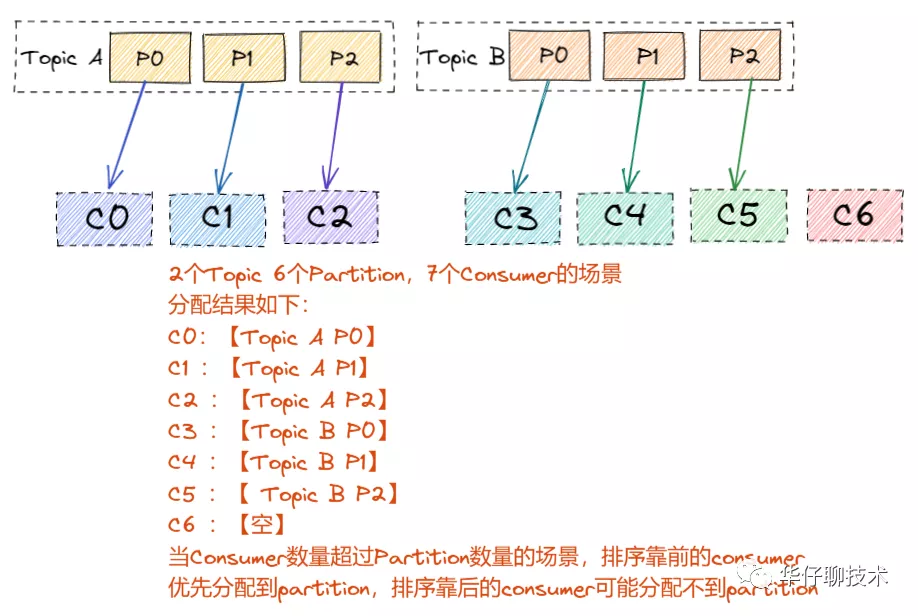

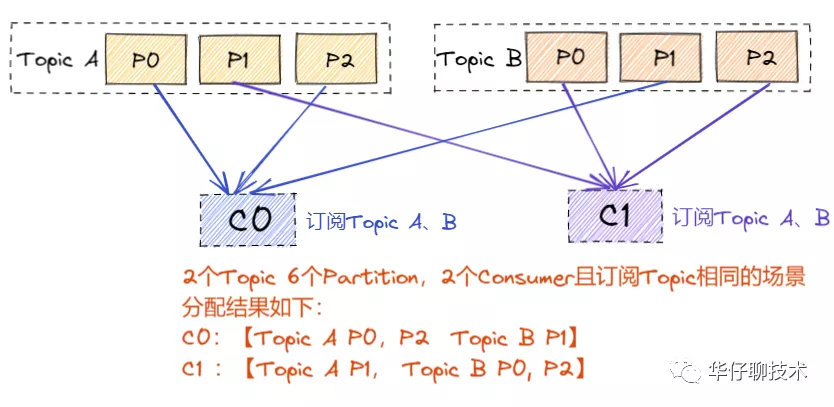

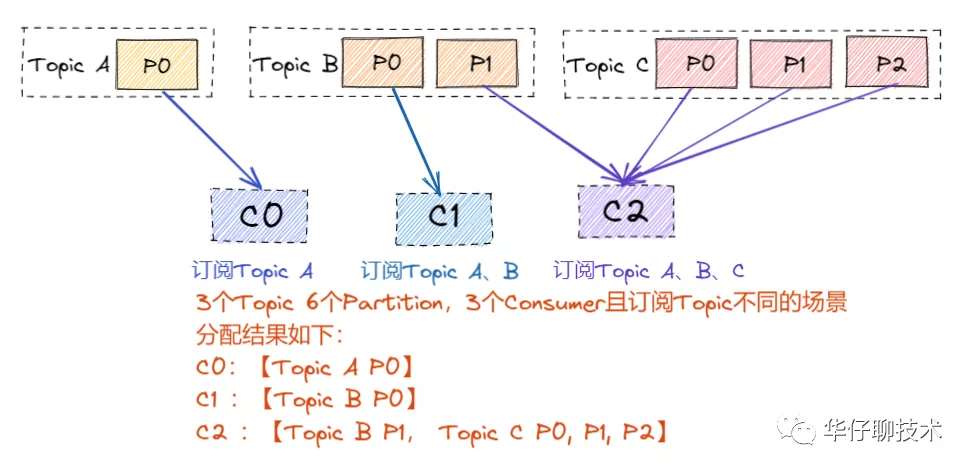

4.2.2 RoundRobinAssignor

RoundRobinAssignor sorts all Topic Partitions and all Consumers subscribed in the Consumer Group, then hands the partitions out one at a time in turn, keeping the result as balanced as possible. If every Consumer in the Consumer Group subscribes to the same topics, the result is balanced. If the subscriptions differ, the result is not guaranteed to be "as balanced as possible", because some Consumers do not take part in the allocation of some topics.
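A minimal sketch of this round-robin walk is shown below. It is an illustration under simplifying assumptions, not Kafka's source: `RoundRobinAssignSketch` is a hypothetical name, partitions are written as `topic-N` strings, and consumers that do not subscribe to a topic are simply skipped when its partitions come up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.TreeSet;

// Simplified illustration of round-robin assignment; names are hypothetical.
class RoundRobinAssignSketch {

    // subscriptions: consumer -> subscribed topics; partitionsPerTopic: topic -> partition count.
    static Map<String, List<String>> assign(Map<String, List<String>> subscriptions,
                                            Map<String, Integer> partitionsPerTopic) {
        List<String> consumers = new ArrayList<>(new TreeSet<>(subscriptions.keySet()));
        Map<String, List<String>> result = new TreeMap<>();
        for (String consumer : consumers) {
            result.put(consumer, new ArrayList<>());
        }

        int next = 0; // index of the consumer whose turn it is
        for (String topic : new TreeSet<>(partitionsPerTopic.keySet())) {
            boolean anySubscriber = false;
            for (List<String> topics : subscriptions.values()) {
                anySubscriber |= topics.contains(topic);
            }
            if (!anySubscriber) {
                continue; // nobody consumes this topic
            }
            for (int p = 0; p < partitionsPerTopic.get(topic); p++) {
                // Skip consumers that are not subscribed to this topic.
                while (!subscriptions.get(consumers.get(next)).contains(topic)) {
                    next = (next + 1) % consumers.size();
                }
                result.get(consumers.get(next)).add(topic + "-" + p);
                next = (next + 1) % consumers.size();
            }
        }
        return result;
    }
}
```

With identical subscriptions the partitions alternate evenly between consumers. With subscriptions such as C0: t0, C1: t0 and t1, C2: t0, t1 and t2 (1, 2 and 3 partitions respectively), this sketch gives C2 four of the six partitions, which is the skew described above.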

The analysis of the partition allocation scenario is shown in the figure below:

1) When the topics subscribed by each Consumer in the group are the same:

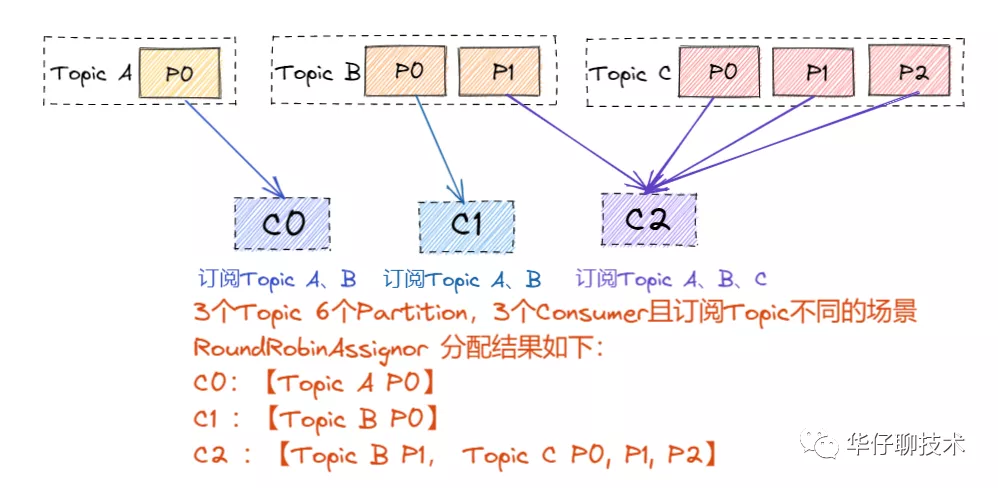

2) When the Consumers in the group subscribe to different topics, the partition allocation may become skewed:

4.2.3 StickyAssignor

The StickyAssignor partition allocation algorithm is the most complex of the allocation strategies provided by the Kafka Java client. It has been available since version 0.11 and is enabled through the partition.assignment.strategy parameter. Its purpose is to make as few changes as possible to the previous allocation when a new allocation is computed. It mainly pursues the following two goals:

1) The distribution of Topic Partition should be as balanced as possible.

2) When Rebalance (redistribution, which will be analyzed in detail later) occurs, try to keep the result consistent with the previous distribution.

Note: When the two goals conflict, the first takes priority, which keeps the distribution more even. All three allocation strategies try to achieve the first goal; the second goal is where the essence of the StickyAssignor algorithm lies.

Let's take an example to talk about the difference between RoundRobinAssignor and StickyAssignor.

The analysis of the partition allocation scenario is shown in the figure below:

1) The Topic subscribed by each Consumer in the group is the same, and the assignment of RoundRobinAssignor is consistent with StickyAssignor:

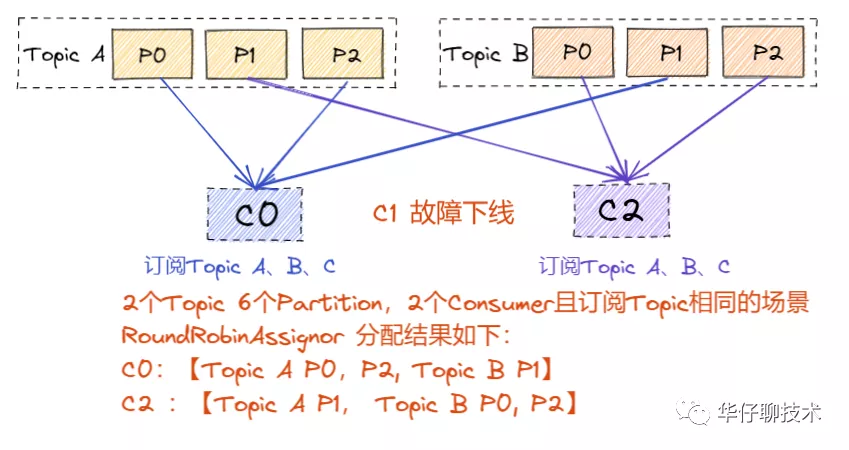

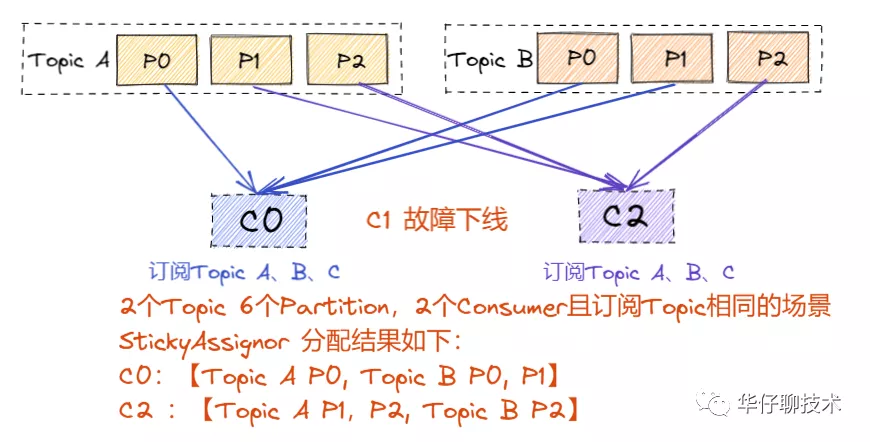

When a Rebalance occurs in the situation above, the two allocations may differ. Suppose C1 fails and goes offline:

RoundRobinAssignor:

And StickyAssignor:

Conclusion: From the results after the Rebalance above, both allocation strategies end up evenly distributed, but RoundRobinAssignor reallocates everything from scratch, while StickyAssignor reaches a balanced state on top of the existing allocation.
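The "keep what you have, move only what is orphaned" idea can be sketched in a few lines. This is deliberately much simpler than Kafka's real StickyAssignor and should not be read as its algorithm: `StickyRebalanceSketch` is a hypothetical name, all consumers are assumed to subscribe to the same topics, and only the case of one member leaving is handled.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Greatly simplified "sticky" reallocation; NOT Kafka's StickyAssignor implementation.
class StickyRebalanceSketch {

    // Survivors keep their partitions; the leaving member's partitions
    // go one by one to whichever survivor currently owns the fewest.
    static Map<String, List<String>> onMemberLeft(Map<String, List<String>> current,
                                                  String leaving) {
        Map<String, List<String>> result = new TreeMap<>();
        for (Map.Entry<String, List<String>> entry : current.entrySet()) {
            if (!entry.getKey().equals(leaving)) {
                result.put(entry.getKey(), new ArrayList<>(entry.getValue()));
            }
        }
        List<String> orphaned = current.getOrDefault(leaving, Collections.emptyList());
        for (String partition : orphaned) {
            String target = null;
            for (String consumer : result.keySet()) {
                if (target == null || result.get(consumer).size() < result.get(target).size()) {
                    target = consumer; // ties go to the first consumer in sorted order
                }
            }
            if (target == null) {
                break; // no surviving members to take over
            }
            result.get(target).add(partition);
        }
        return result;
    }
}
```

Starting from C0=[p0, p3], C1=[p1, p4], C2=[p2, p5], removing C1 leaves C0 and C2 with their original partitions plus one orphan each, so only two partitions change owner.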

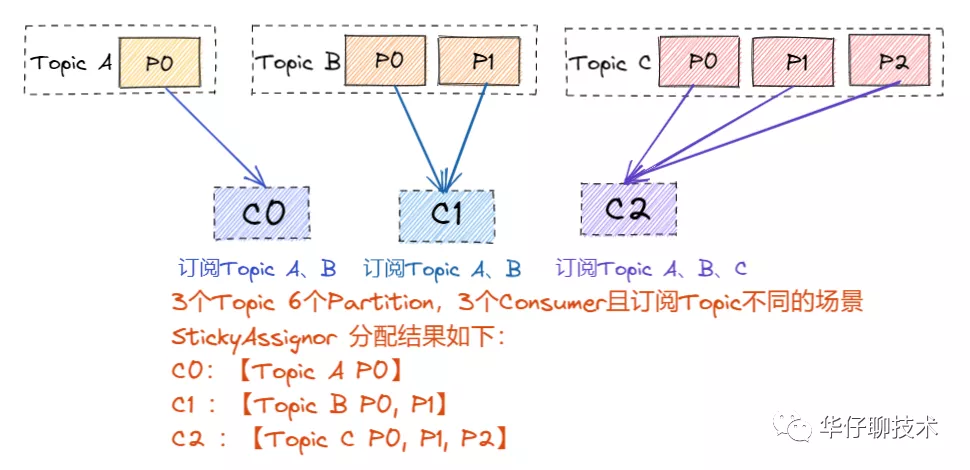

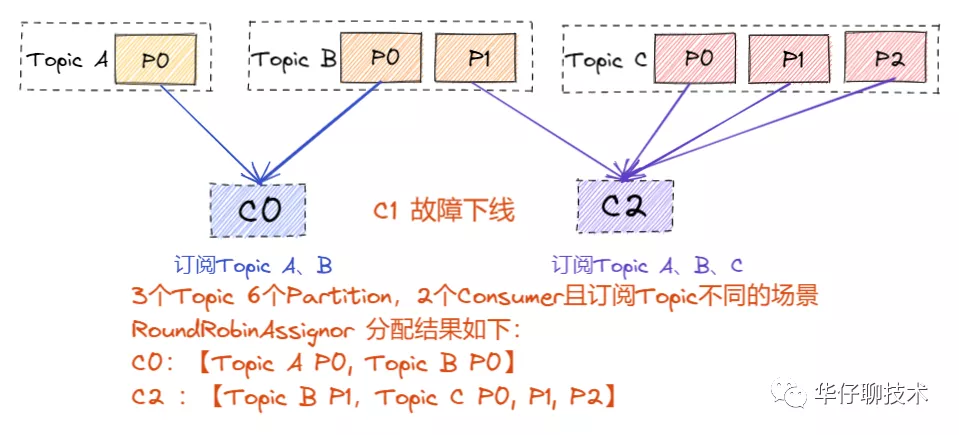

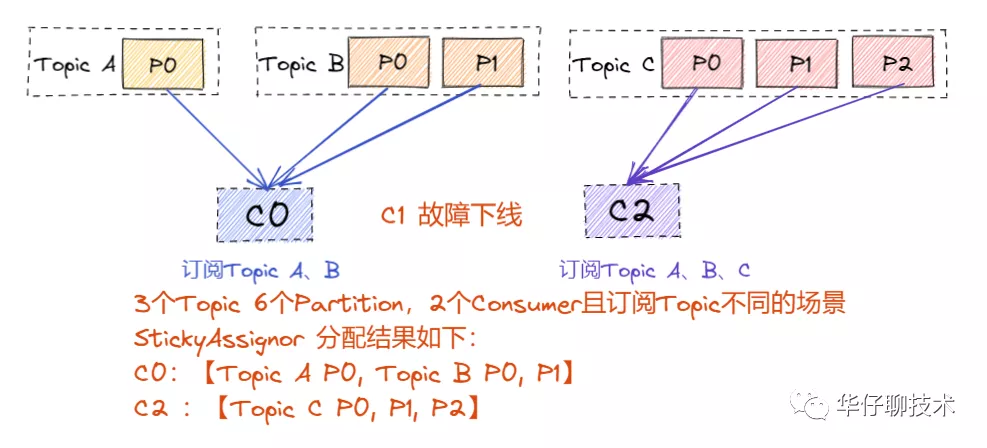

2) When the Topic subscribed by each Consumer in the group is different:

RoundRobinAssignor:

StickyAssignor:

When a Rebalance occurs in the situation above, the two allocations may differ. Suppose C1 fails and goes offline:

RoundRobinAssignor:

StickyAssignor:

From the results above, the RoundRobinAssignor strategy produced a seriously skewed allocation after the Rebalance. So if you want to reduce the overhead of reallocation in a production environment, you can choose the StickyAssignor partition allocation strategy.

5. Consumer's consumer group redistribution mechanism

Having covered consumer groups and partition allocation strategies, let's talk about the Rebalance (redistribution) mechanism within a Consumer Group. Consumers may join or leave a Consumer Group at any time, and any change in the member list inevitably leads to Partitions being redistributed. This redistribution process is called a Consumer Rebalance, and it is carried out with the help of the Coordinator component on the Broker side, which coordinates the partition redistribution for the whole consumer group.

5.1 Rebalance trigger and notification

There are three trigger conditions for Rebalance:

- 1. When the number of members in the Consumer Group changes (a member joins or leaves voluntarily, fails and goes offline, etc.)

- 2. When the number of subscribed topics changes

- 3. When the number of partitions of a subscribed topic changes

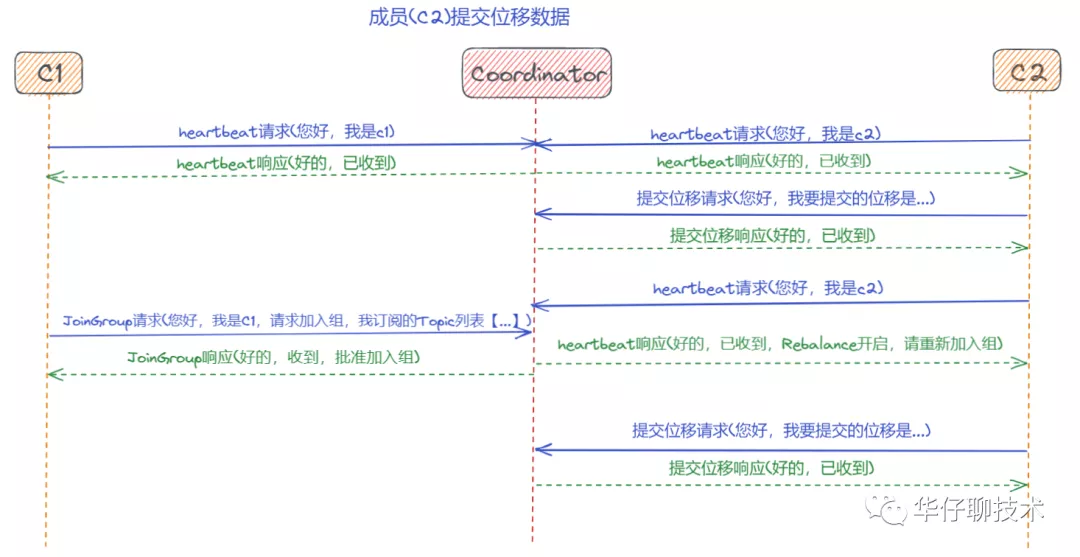

How does Rebalance notify other consumer processes?

The notification mechanism of Rebalance relies on the heartbeat thread on the Consumer side, which periodically sends heartbeat requests to the Coordinator on the Broker side. When the Coordinator decides to start a Rebalance, it puts "REBALANCE_IN_PROGRESS" into the heartbeat response sent back to the Consumer. When the Consumer sees "REBALANCE_IN_PROGRESS" in a heartbeat response, it knows that a Rebalance has started.

5.2 Protocol description

Rebalance is essentially a set of protocols that the Consumer Group and the Coordinator use together to complete a Rebalance of the group. Let's look at these 5 requests and what each one does:

- 1. Heartbeat request: the Consumer periodically sends heartbeats to the Coordinator to prove that it is still alive.

- 2. LeaveGroup request: the Consumer proactively tells the Coordinator that it is leaving the Consumer Group.

- 3. SyncGroup request: the Group Leader Consumer passes the allocation plan to all members of the group.

- 4. JoinGroup request: a member requests to join the group.

- 5. DescribeGroup request: shows all information about the group, including member information, protocol name, allocation plan, and subscription information. This request is usually used by administrators.

The Coordinator mainly uses the first 4 request types during a Rebalance.

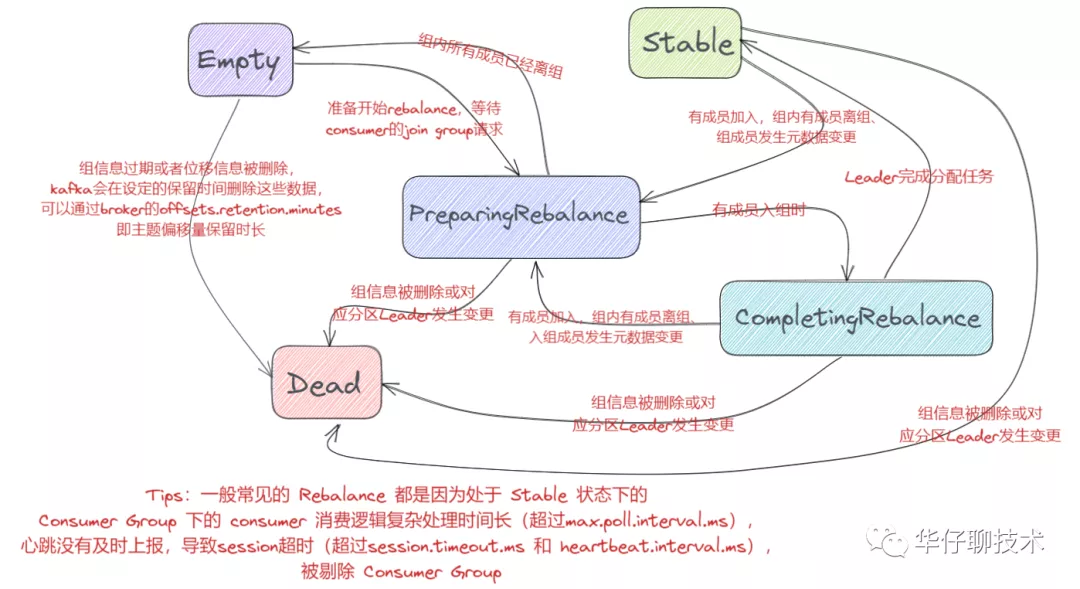

5.3 Consumer Group State Machine

Whenever a Rebalance occurs, the Consumer Group moves through a series of states. Kafka defines a complete state machine that helps the Broker Coordinator carry out the whole rebalancing process. Understanding these state transitions gives a deeper understanding of how the Consumer Group is designed.

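The five states of a Consumer Group are defined as follows:

Empty state

The Empty state means the group currently has no members, but it may still hold offsets committed by the Consumer Group that have not yet expired. In this state the group can only respond to JoinGroup requests.

Dead state

The Dead state means the group has no members and its metadata has been removed by the Broker Coordinator. In this state every request gets the same Response: UNKNOWN_MEMBER_ID.

PreparingRebalance state

The PreparingRebalance state means a new Rebalance is about to start and the Coordinator is waiting for all members to rejoin the group.

CompletingRebalance state

The CompletingRebalance state means all members have joined successfully and are waiting for the allocation plan. Older versions called this state "AwaitingSync".

Stable state

The Stable state means the Rebalance has completed and the Consumers in the group can start consuming.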
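The five states and the transitions between them can be modelled as a small enum. The transition table below reflects my understanding of the broker-side group coordinator and should be treated as an assumption rather than an authoritative copy of Kafka's source. The enum and method names are hypothetical.

```java
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

// Illustrative model of the group states; the transition table is an assumption.
enum GroupState {
    EMPTY, PREPARING_REBALANCE, COMPLETING_REBALANCE, STABLE, DEAD;

    // For each state, the set of states it may be entered FROM.
    private static final Map<GroupState, Set<GroupState>> VALID_PREVIOUS =
            new EnumMap<>(GroupState.class);

    static {
        VALID_PREVIOUS.put(EMPTY, EnumSet.of(PREPARING_REBALANCE));
        VALID_PREVIOUS.put(PREPARING_REBALANCE,
                EnumSet.of(STABLE, COMPLETING_REBALANCE, EMPTY));
        VALID_PREVIOUS.put(COMPLETING_REBALANCE, EnumSet.of(PREPARING_REBALANCE));
        VALID_PREVIOUS.put(STABLE, EnumSet.of(COMPLETING_REBALANCE));
        VALID_PREVIOUS.put(DEAD,
                EnumSet.of(STABLE, PREPARING_REBALANCE, COMPLETING_REBALANCE, EMPTY, DEAD));
    }

    // True if a group in this state is allowed to move to the target state.
    boolean canTransitionTo(GroupState target) {
        return VALID_PREVIOUS.get(target).contains(this);
    }
}
```

A normal Rebalance therefore walks Stable, PreparingRebalance, CompletingRebalance, and back to Stable, while a group whose last member leaves ends up in Empty.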

The flow diagram of the 5 states is as follows:

5.4 Rebalance process analysis

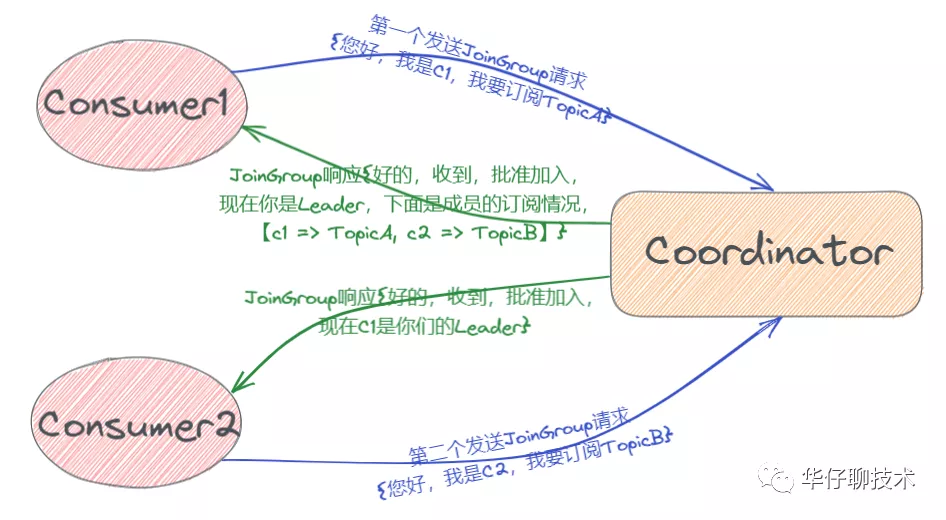

Next, let's take a look at the Rebalance process. From the 5 states above, it can be seen that a Rebalance consists of two main steps: joining the group (the JoinGroup request) and waiting for the Leader Consumer's allocation plan (the SyncGroup request).

1) JoinGroup request: every member of the group sends a JoinGroup request to the Coordinator asking to join, and reports the Topics it subscribes to along the way. The Coordinator thus collects the JoinGroup requests and subscription information of all members and picks one of them as the Leader of the Consumer Group (under normal circumstances, the first Consumer to send a request becomes the Leader). The Leader here is a specific Consumer whose task is to gather all members' Topic subscriptions and work out a concrete partition allocation plan. Once the Leader is chosen, the Coordinator puts the group's Topic subscription information into the JoinGroup Response and sends it to the Leader. The Leader then produces a unified allocation plan and the process moves on to the next step, as shown in the following figure:

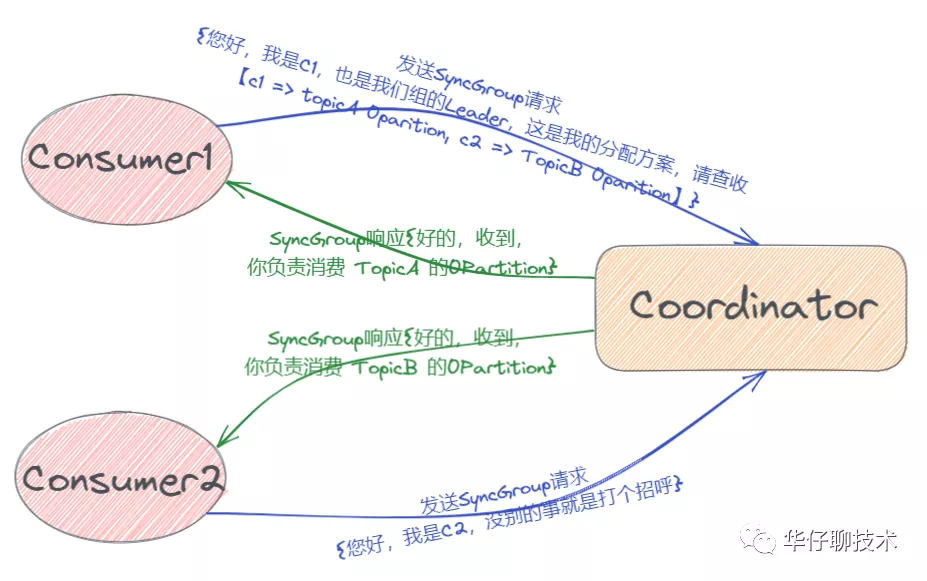

2) SyncGroup request: the Leader works out the allocation plan, that is, which Consumer is responsible for which Partitions of which Topics. Once the allocation is done, the Leader puts the plan into a SyncGroup request and sends it to the Coordinator. The other members also send SyncGroup requests, but with empty content. After receiving the plan, the Coordinator puts it into the SyncGroup Response and sends it to every member of the group, so that each one knows which Partitions to consume, as shown below:
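The two phases can be walked through with a toy coordinator. Everything in this sketch is hypothetical (class name, method names, the round-robin plan computed by the leader), and it ignores timing: a real Coordinator holds the followers' SyncGroup responses until the Leader's plan has arrived, whereas here the Leader is simply assumed to call first.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Toy model of the JoinGroup / SyncGroup exchange; all names are hypothetical.
class RebalanceFlowSketch {
    private final List<String> joined = new ArrayList<>();          // JoinGroup arrival order
    private Map<String, List<Integer>> plan = new HashMap<>();      // plan held by the coordinator

    // Phase 1: a member joins; the first member to join is treated as the leader.
    String joinGroup(String memberId) {
        if (!joined.contains(memberId)) {
            joined.add(memberId);
        }
        return joined.get(0);
    }

    // Member list that the coordinator hands to the leader.
    List<String> members() {
        return new ArrayList<>(joined);
    }

    // Leader side: compute a plan (plain round-robin over partition IDs).
    static Map<String, List<Integer>> leaderAssign(List<String> members, int numPartitions) {
        Map<String, List<Integer>> result = new TreeMap<>();
        for (String member : members) {
            result.put(member, new ArrayList<>());
        }
        for (int p = 0; p < numPartitions; p++) {
            result.get(members.get(p % members.size())).add(p);
        }
        return result;
    }

    // Phase 2: the leader passes the plan, followers pass null; each gets its own share back.
    List<Integer> syncGroup(String memberId, Map<String, List<Integer>> proposedPlan) {
        if (proposedPlan != null) {
            plan = proposedPlan;
        }
        return plan.getOrDefault(memberId, Collections.emptyList());
    }
}
```

A run with members c1 and c2 and four partitions has c1 elected leader, c1 submitting the plan in its SyncGroup call, and c2 receiving its share with an empty SyncGroup call.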

5.5 Rebalance scenario analysis

We have just gone through the state transitions and the process of Rebalance in detail. Next, we analyze several scenarios through sequence diagrams to deepen the understanding of Rebalance.

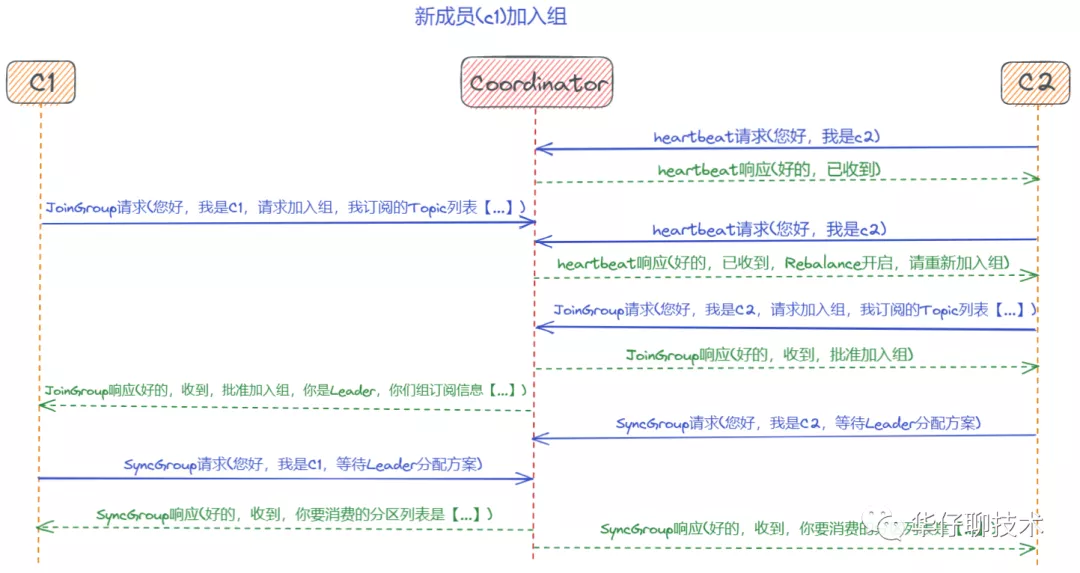

Scenario 1: A new member (c1) joins the group

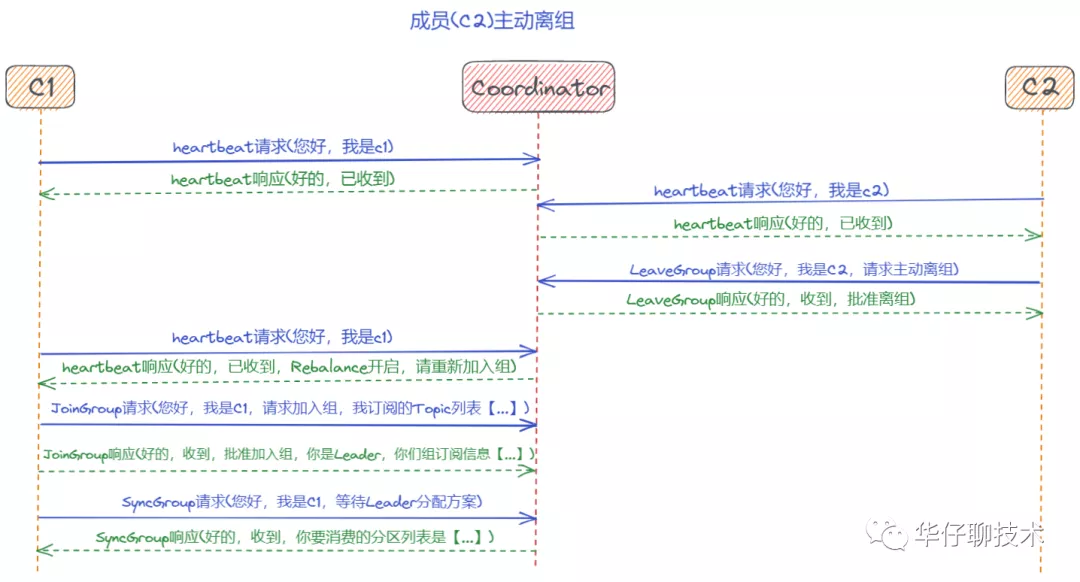

Scenario 2: Member (c2) voluntarily leaves the group

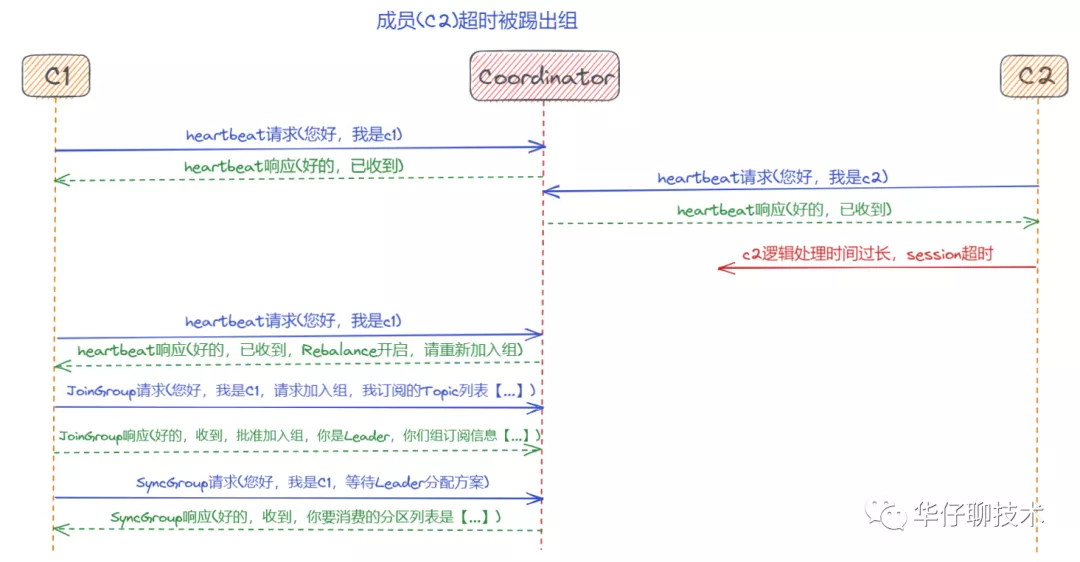

Scenario 3: Member (c2) is removed from the group after timing out

Scenario 4: Member (c2) commits offsets

6. Consumer's offset commit mechanism

6.1 Understanding offset commits

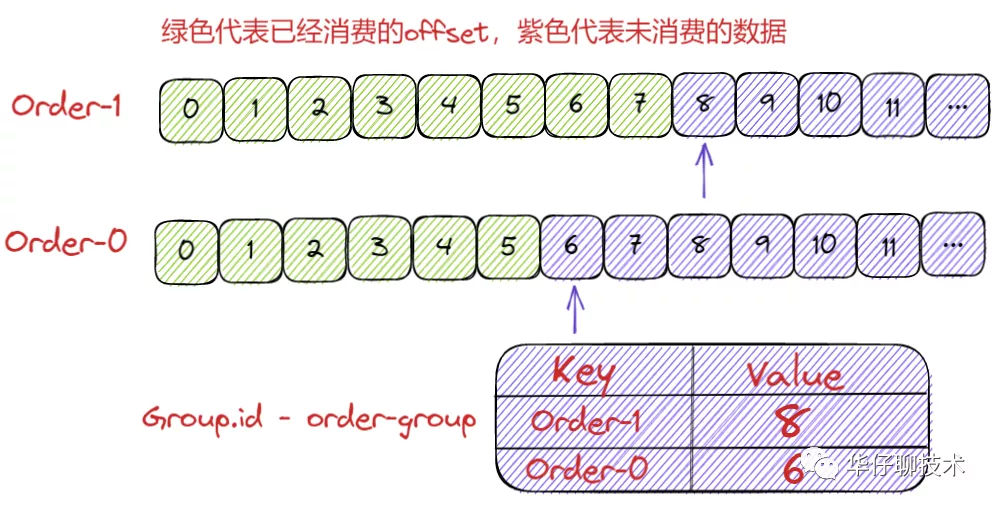

After the consumer group Rebalance mechanism, let's turn to the Consumer's offset commit mechanism. Before that, let's review the difference between an offset and a consumer offset. An offset usually refers to the position of a message stored in a Topic Partition on the Broker side, while a consumer offset refers to a Consumer Group's consumption position on each Topic Partition (its consumption progress); it records the offset of the next message the Consumer will consume.

Consumers need to report their offsets to Kafka, and this reporting process is called committing offsets. Its purpose is to safeguard consumption progress: when a Consumer fails and restarts, it can resume directly from the previously committed offset instead of starting over (Kafka considers every message below the committed offset to have been consumed successfully). A Consumer can consume from several partitions at the same time, so offsets are committed at partition granularity, which means the Consumer must commit an offset for each partition assigned to it.
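The rule that the committed value is the offset of the next message to consume, tracked per partition, can be sketched like this. `OffsetTrackerSketch` is a hypothetical helper, and partitions are identified by plain strings for brevity.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical per-partition tracker of the offsets a consumer would commit.
class OffsetTrackerSketch {
    private final Map<String, Long> nextOffsets = new TreeMap<>();

    // Record that the message at `offset` in `topicPartition` has been processed.
    // The value to commit is offset + 1: the next message to be consumed.
    void markProcessed(String topicPartition, long offset) {
        nextOffsets.merge(topicPartition, offset + 1, Long::max);
    }

    // Snapshot of what would be committed, one entry per assigned partition.
    Map<String, Long> offsetsToCommit() {
        return new TreeMap<>(nextOffsets);
    }

    public static void main(String[] args) {
        OffsetTrackerSketch tracker = new OffsetTrackerSketch();
        tracker.markProcessed("topic-order-0", 41);
        tracker.markProcessed("topic-order-0", 42);
        tracker.markProcessed("topic-order-1", 7);
        System.out.println(tracker.offsetsToCommit()); // {topic-order-0=43, topic-order-1=8}
    }
}
```

After a restart, a consumer resuming from these values would start at offset 43 on partition 0 and offset 8 on partition 1.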

6.2 Analysis of the commit methods

Kafka Consumer provides several ways to commit offsets. From the user's perspective, commits are either automatic or manual; from the Consumer's perspective, they are either synchronous or asynchronous. Let's start with automatic and manual commits:

Automatic commit

Automatic commit means that the Kafka Consumer commits offsets silently in the background, and the user does not need to care about it. To enable it, set enable.auto.commit = true (the default is true) when initializing the KafkaConsumer. It works together with another parameter, auto.commit.interval.ms, which sets how often the Kafka Consumer commits offsets automatically; the default is 5 seconds.

Automatic commit looks convenient, but can it lose consumed data? With enable.auto.commit = true, Kafka ensures that when poll() is called, the offsets of the previous batch of messages are committed before the next batch is handed out for processing, so no messages are lost. However, automatic commit also has a design flaw: it may cause repeated consumption. If a Rebalance happens between two automatic commits, the offsets of the messages processed since the last commit have not been committed yet. After the Rebalance completes, the Consumers have to consume again the messages that were processed after the last commit and before the Rebalance.
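A back-of-the-envelope model shows how many messages get replayed. The sketch makes strong simplifying assumptions that are not from the original: exactly one message is processed per millisecond tick, starting at offset 0, and the automatic commit fires exactly on every interval boundary. The class and method names are hypothetical.

```java
// Toy model of duplicate consumption under auto-commit; all assumptions are illustrative.
class AutoCommitReplaySketch {

    // Returns how many already-processed messages are consumed again
    // when a Rebalance happens at `rebalanceAtTick`.
    static long duplicatesAfterRebalance(long commitIntervalTicks, long rebalanceAtTick) {
        long processed = rebalanceAtTick; // offsets 0..rebalanceAtTick-1 were processed
        // Last commit happened at the most recent interval boundary.
        long committed = (rebalanceAtTick / commitIntervalTicks) * commitIntervalTicks;
        return processed - committed;
    }

    public static void main(String[] args) {
        // 5-second interval, Rebalance 12 seconds in: last commit was at 10 seconds.
        System.out.println(duplicatesAfterRebalance(5000, 12000)); // 2000
    }
}
```

Shortening auto.commit.interval.ms narrows this replay window but cannot remove it.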

Manual commit

The counterpart of automatic commit is manual commit. To enable it, set enable.auto.commit = false when initializing the KafkaConsumer. Setting it to false is not enough on its own, though: that only tells the Kafka Consumer not to commit offsets automatically. After processing the messages, you also need to call the corresponding Consumer API to commit the offsets yourself. Manual commits are further divided into synchronous and asynchronous commits.

1) Synchronous commit API:

KafkaConsumer#commitSync() commits the latest offsets returned by KafkaConsumer#poll(). It is a synchronous operation: it blocks until the offsets have been committed successfully, and it throws an exception if something goes wrong during the commit. commitSync() should therefore be called after all the messages returned by poll() have been processed. If offsets are committed too early, consumed data can be lost.

2) Asynchronous commit API:

KafkaConsumer#commitAsync() commits asynchronously. It returns immediately without blocking, so it does not affect the Consumer's TPS. Kafka also provides a callback for it, which makes it easy to implement post-commit logic such as logging or exception handling. Because it is asynchronous, a failed commit is not retried: by the time a retry would run, the offset it carries may no longer be the latest value, so the retry would be meaningless.

3) Mixed commit mode:

From the analysis above, commitSync and commitAsync each have their own shortcomings, and combining the two gives the best result: commitAsync keeps the Consumer's TPS unaffected, while commitSync's automatic retry helps ride out transient errors (network jitter, GC pauses, Rebalance). In production, the mixed commit mode is recommended to make the Consumer more robust.
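One common shape for the mixed mode is an asynchronous commit after each batch plus a single synchronous commit when the loop exits. The sketch below shows only that control flow. `OffsetCommitter` is a hypothetical interface standing in for the two KafkaConsumer methods named above, so that the example runs without the kafka-clients library.

```java
import java.util.Iterator;
import java.util.List;

// Control-flow sketch of the mixed commit mode; OffsetCommitter is a hypothetical stand-in.
class MixedCommitSketch {

    // Mirrors the two commit styles of KafkaConsumer#commitAsync() and #commitSync().
    interface OffsetCommitter {
        void commitAsync();
        void commitSync();
    }

    // Processes every batch, committing asynchronously on the hot path
    // and synchronously exactly once when the loop ends (normally or by exception).
    static void consumeLoop(Iterator<List<String>> batches,
                            OffsetCommitter consumer,
                            List<String> processed) {
        try {
            while (batches.hasNext()) {
                processed.addAll(batches.next()); // "process" the batch
                consumer.commitAsync();           // non-blocking, no retry
            }
        } finally {
            consumer.commitSync();                // blocking final commit before shutdown
        }
    }
}
```

With the real client, the iterator would be replaced by repeated poll() calls and the `finally` block would also close the consumer.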

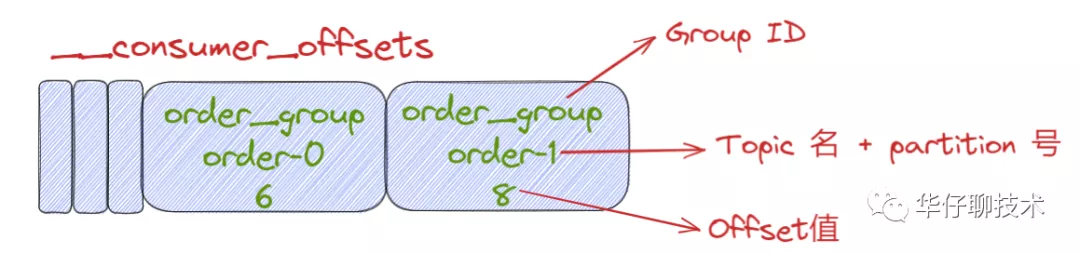

7. Consumer's __consumer_offsets storage

7.1 The secret of __consumer_offsets

From the previous section we know that a Consumer commits offsets after consuming data. So where are the committed offsets stored, and how? Let's look at how old and new versions of Kafka store offsets.

Old versions of Kafka (before 0.9) relied heavily on ZooKeeper for all kinds of coordination. The old Consumer Group likewise saved its offsets in ZooKeeper, which reduced the state the Broker had to store. However, ZooKeeper's storage design is not suited to frequent writes, and offset commits from Consumer Groups are high-frequency write operations that slow down the ZooKeeper cluster. In newer versions of Kafka the community therefore redesigned offset management: offsets are stored inside Kafka itself, in an internal topic (a Kafka Topic naturally supports high-frequency writes and persistence). This is the well-known __consumer_offsets.

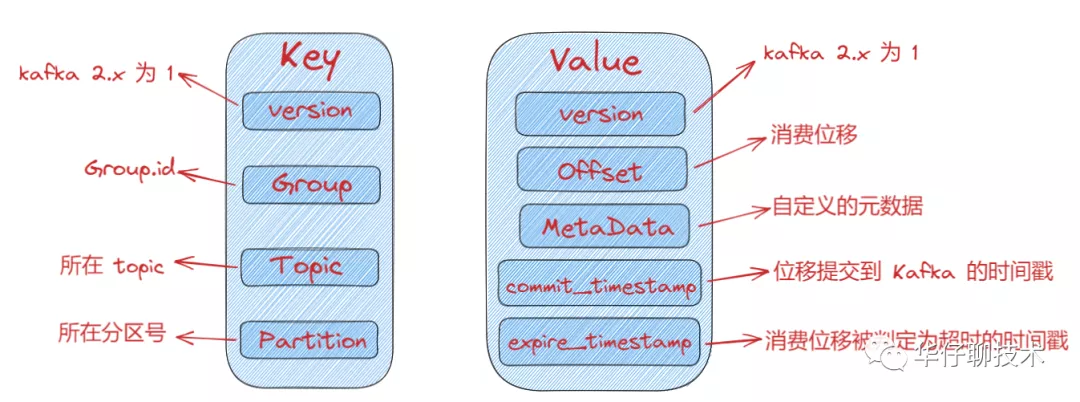

__consumer_offsets stores the offsets committed by Kafka Consumers. It is created automatically by Kafka and otherwise behaves like an ordinary topic. Its message format is defined by Kafka itself and cannot be modified by users. Let's take a closer look at that format.

The __consumer_offsets message format:

- 1. The message format can be understood simply as a KV pair: Key and Value are the message key and the message body, respectively.

- 2. What does the Key hold? Since the topic stores Consumers' offsets, and Kafka may have a great many Consumers, there must be a field that identifies which Consumer an offset belongs to. As explained earlier, the members of a Consumer Group share a public, unique Group ID. But the Group ID alone is not enough: offsets are committed per partition, so the topic and partition the offset refers to must also be part of the Key.

- 3. Summary: the Key of the offsets topic holds 3 parts: <Group ID, topic name, partition number>

- 4. The Value can be thought of simply as the offset value. Under the hood it also stores some other metadata that helps Kafka with other operations, such as deleting expired offsets.

Schematic diagram of __consumer_offsets message format:

7.2 __consumer_offsets creation process

After the message format, let's look at how __consumer_offsets is created. When the first Consumer in the Kafka cluster starts, Kafka creates __consumer_offsets automatically. As mentioned earlier, it is an ordinary topic, so it has a number of partitions. When Kafka creates it automatically, the number of partitions is determined by the Broker-side parameter offsets.topic.num.partitions (default 50), so Kafka creates __consumer_offsets with 50 partitions. This is why the Kafka log path contains many directories named like __consumer_offsets-xxx. A topic with partitions also has a replication factor, which is determined by another Broker-side parameter, offsets.topic.replication.factor (default 3). To sum up, when __consumer_offsets is created automatically by Kafka, it has 50 partitions and 3 replicas. The partition that stores a given group's offsets is computed as abs(GroupId.hashCode()) % NumPartitions, which ensures that the group's offset information and the Coordinator for that Consumer Group are on the same Broker node.
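The formula can be tried out directly in Java. The sketch assumes that the `abs` in the formula maps `Integer.MIN_VALUE` to 0, since `Math.abs(Integer.MIN_VALUE)` is still negative. That detail and the class name `OffsetsPartitionSketch` are my own assumptions.

```java
// Illustrative computation of the __consumer_offsets partition for a group ID.
class OffsetsPartitionSketch {

    // abs(groupId.hashCode()) % numPartitions, guarding the Integer.MIN_VALUE corner case.
    static int partitionFor(String groupId, int numPartitions) {
        int hash = groupId.hashCode();
        int positive = (hash == Integer.MIN_VALUE) ? 0 : Math.abs(hash);
        return positive % numPartitions;
    }

    public static void main(String[] args) {
        // With the default of 50 partitions, every group maps to one partition in 0..49.
        System.out.println(partitionFor("test-group-1", 50));
    }
}
```

The Broker that leads the resulting partition acts as the Coordinator for that group, which is why the offsets and the Coordinator end up on the same node.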

7.3 View __consumer_offsets data

Kafka ships with scripts for viewing Consumer information. They can be used as follows:

// 1. List the consumer groups in Kafka:

./bin/kafka-consumer-groups.sh --bootstrap-server <kafka-ip>:9092 --list

// 2. Show the consumption status of one consumer group (test-group-1):

./bin/kafka-consumer-groups.sh --bootstrap-server <kafka-ip>:9092 --group test-group-1 --describe

// 3. The partition that corresponds to a group.id is computed as:

abs(GroupId.hashCode()) % NumPartitions // where GroupId: test-group-1, NumPartitions: 50

// 4. Once the partition for the group.id is known, that partition can be consumed directly

// Kafka 0.11 and later

./bin/kafka-console-consumer.sh --bootstrap-server message-1:9092 --topic __consumer_offsets --formatter "kafka.coordinator.group.GroupMetadataManager\$OffsetsMessageFormatter" --partition xx

// Before Kafka 0.11

./bin/kafka-console-consumer.sh --bootstrap-server message-1:9092 --topic __consumer_offsets --formatter "kafka.coordinator.GroupMetadataManager\$OffsetsMessageFormatter" --partition xx

// 5. Get the offset information of a specific consumer group

// Kafka 0.11 and later

kafka-simple-consumer-shell.sh --topic __consumer_offsets --partition xx --broker-list <kafka-ip>:9092 --formatter "kafka.coordinator.group.GroupMetadataManager\$OffsetsMessageFormatter"

// Before Kafka 0.11

kafka-simple-consumer-shell.sh --topic __consumer_offsets --partition xx --broker-list <kafka-ip>:9092 --formatter "kafka.coordinator.GroupMetadataManager\$OffsetsMessageFormatter"

// 6. Metadata printed after the scripts run

// Format: [consumer group : consumed topic : consumed partition] :: [offset], [offset commit time], [metadata expiration time]

[order-group-1,topic-order,0]::[OffsetMetadata[36672,NO_METADATA],CommitTime 1633694193000,ExpirationTime 1633866993000]

8. Consumer's consumption progress monitoring

After the implementation details of the Consumer, let's talk about what matters most for a Consumer in operation: monitoring its consumption progress, or how far it lags behind the Producer. The technical term for this is Consumer Lag. For example, if a Kafka Producer has successfully produced 10 million messages to a topic and the Consumer has consumed 9 million of them so far, the Consumer is lagging by 1 million messages, that is, Lag equals 1 million.

For a Consumer, Lag should be regarded as the most important monitoring metric, because it directly reflects how the Consumer is doing. A small Lag means the Consumer is keeping up with the messages produced by the Producer. A Lag that keeps growing means messages may be piling up, which will seriously slow down downstream processing.
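The Lag figure is simply the gap between each partition's latest offset and the group's committed offset, summed over partitions. The sketch below computes it from two plain maps. The class name and the input maps are hypothetical, and in practice the numbers would come from one of the monitoring methods listed in this section.

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative Lag computation from per-partition offsets; names are hypothetical.
class ConsumerLagSketch {

    // logEndOffsets: partition -> offset of the next message the Producer will write.
    // committedOffsets: partition -> offset of the next message the group will consume.
    static long totalLag(Map<Integer, Long> logEndOffsets, Map<Integer, Long> committedOffsets) {
        long lag = 0;
        for (Map.Entry<Integer, Long> entry : logEndOffsets.entrySet()) {
            long committed = committedOffsets.getOrDefault(entry.getKey(), 0L);
            lag += Math.max(0, entry.getValue() - committed);
        }
        return lag;
    }

    public static void main(String[] args) {
        Map<Integer, Long> end = new HashMap<>();
        end.put(0, 6_000_000L);
        end.put(1, 4_000_000L);
        Map<Integer, Long> committed = new HashMap<>();
        committed.put(0, 5_500_000L);
        committed.put(1, 3_500_000L);
        // 10 million produced, 9 million consumed across two partitions.
        System.out.println(totalLag(end, committed)); // 1000000
    }
}
```

Tracking this value over time, rather than looking at a single reading, is what reveals whether messages are piling up.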

For such an important indicator, how should we monitor it? There are mainly the following methods:

- 1. Use the command line tool kafka-consumer-groups script that comes with Kafka

- 2. Programming with Kafka Java Consumer API

- 3. Use Kafka's own JMX monitoring indicators

- 4. If it is a cloud product, you can directly use the monitoring function that comes with the cloud product

9. Summary of Consumer

This concludes the analysis of the Kafka Consumer's internal principles and design, and with it the chapter on Kafka principles. Follow-up articles will dig into specific technical points of Kafka and analyze the source code. Please stay tuned...

I will keep summarizing and publishing high-quality articles. Follow me: Huazai Talking Technology
