Abstract: This article describes the simplest adaptation we made to ClickHouse so that it can store data on OBS, and our practice of separating storage and computing on Huawei Cloud.

This article is shared from the Huawei Cloud Community post "ClickHouse Storage and Computing Separation Practice in Huawei Cloud" by He Lifu.

ClickHouse is an excellent OLAP database system. When it was open-sourced in 2016, its outstanding performance drew wide attention. Over the past two years, large-scale adoption by Chinese Internet companies has made it well known in the industry, and it is now widely recognized.

Judging from publicly shared information and our customers' use cases, ClickHouse is mainly used in two scenarios: real-time data warehousing and offline acceleration. Some real-time services are deployed on all-SSD hardware to get the best possible performance; because real-time data sets are limited in size, this cost is still acceptable. Offline acceleration workloads, however, usually involve large data sets, and an all-SSD configuration becomes expensive. Is there a way to meet high performance requirements while keeping costs as low as possible? Our idea is elastic scaling: store data on low-cost object storage, add computing resources dynamically to meet performance requirements during peak periods, and reclaim computing resources to control costs during off-peak periods. We therefore set our goal as the separation of storage and computing.

1. Current Status of Storage and Computing Separation

ClickHouse is a database system that couples storage and computing: data is stored directly on local disks (including cloud disks). Readers who follow the community will know that recent versions can persist data to object storage and HDFS. Below is the simplest adaptation we made to ClickHouse to support OBS, following the same approach as the native S3 support:

1. Configure S3 Disk

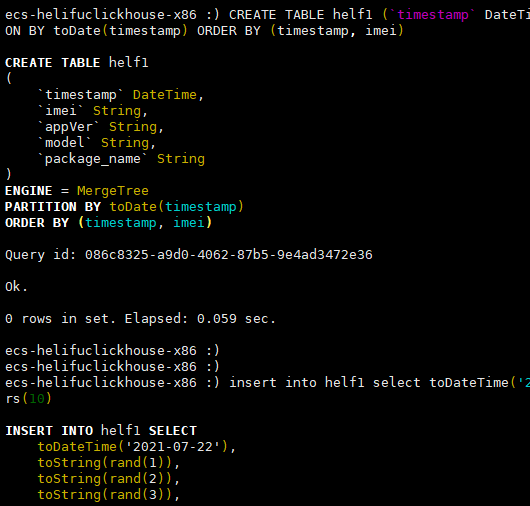

2. Create a table and insert data

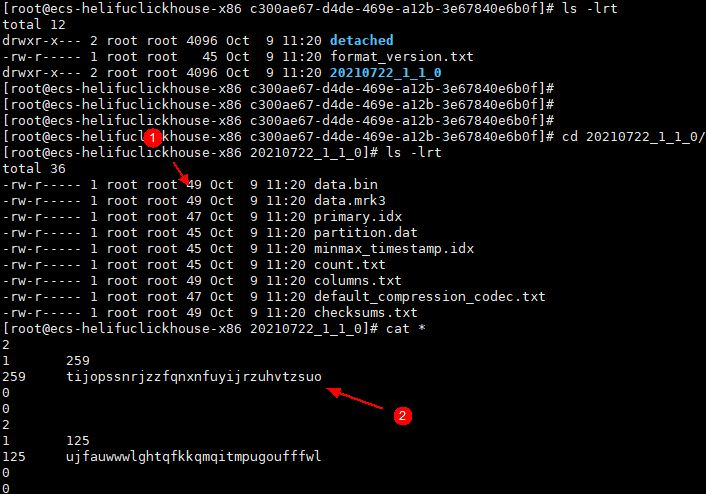

3. Check the local disk data

4. Check the object storage data

From the steps above, we can see that each data file on the local disk records the names (UUIDs) of the corresponding objects on OBS. In other words, ClickHouse associates its data with OBS objects through a "mapping" kept in the local data files. Note that this mapping is persisted locally, so it needs redundancy to be reliable.
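To make the "mapping" concrete, here is a minimal Python sketch under stated assumptions: an in-memory dictionary stands in for OBS, and each local file holds one random object name per line. This is not ClickHouse's actual metadata file format (which records more fields); `RemoteStore`, `write_part_file`, and `read_part_file` are hypothetical names used only for illustration.

```python
import tempfile
import uuid
from pathlib import Path


class RemoteStore:
    """Hypothetical stand-in for an object store: object key -> bytes."""

    def __init__(self):
        self.objects = {}

    def put(self, data):
        key = uuid.uuid4().hex  # random object name, similar to the UUIDs seen on OBS
        self.objects[key] = data
        return key

    def get(self, key):
        return self.objects[key]


def write_part_file(local_path, data, store):
    """Upload the payload and keep only its object key in the local file."""
    key = store.put(data)
    local_path.write_text(key + "\n")


def read_part_file(local_path, store):
    """Follow the local mapping file to the remote object(s) and read them."""
    keys = local_path.read_text().split()
    return b"".join(store.get(k) for k in keys)


if __name__ == "__main__":
    store = RemoteStore()
    with tempfile.TemporaryDirectory() as tmp:
        column_file = Path(tmp) / "id.bin"
        write_part_file(column_file, b"\x01\x02\x03", store)
        print(read_part_file(column_file, store))  # b'\x01\x02\x03'
        column_file.unlink()  # lose the local mapping file
        print(len(store.objects))  # 1: the object is now orphaned on the remote side
```

Deleting the local file leaves the remote object in place but unreachable, which is why the locally persisted mapping needs redundancy.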

Going further, we see that the community is also actively pushing ClickHouse toward storage and computing separation:

- The "Add the ability to backup/restore metadata files for DiskS3" feature introduced in v21.3 stores the mapping between local data files and remote objects, the local directory structure, and other attributes in the properties (object metadata) of the remote objects themselves. This removes the requirement that the data directory be local, and also removes the need to keep the local mapping files reliable with at least two replicas;

- The S3 zero-copy replication introduced in v21.4 lets multiple replicas share a single copy of remote data, which significantly reduces the storage cost of multi-replica deployments.
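Both community features can be modeled in a few lines. The Python sketch below is a simplified assumption, not ClickHouse's implementation: each object carries its local path in its metadata, so the mapping can be rebuilt just by listing objects (the idea behind backing up and restoring DiskS3 metadata), and a per-object set of replica names stands in for zero-copy sharing, so shared data is deleted only when the last replica releases it. ClickHouse's real cross-replica bookkeeping differs and is not modeled; `SharedObjectStore` and its methods are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class RemoteObject:
    data: bytes
    metadata: dict = field(default_factory=dict)  # the object's own "properties"


class SharedObjectStore:
    """Hypothetical object store with per-object metadata and replica references."""

    def __init__(self):
        self.objects = {}  # object key -> RemoteObject
        self.holders = {}  # object key -> set of replica names sharing it

    def put(self, key, data, local_path):
        # Store the local path alongside the data, so no local file is needed
        # to know where this object belongs in the data directory.
        self.objects[key] = RemoteObject(data, {"local_path": local_path})

    def restore_mapping(self):
        """Rebuild the local_path -> object key mapping from object metadata only."""
        return {obj.metadata["local_path"]: key for key, obj in self.objects.items()}

    def acquire(self, key, replica):
        """Record that a replica uses this object instead of keeping its own copy."""
        self.holders.setdefault(key, set()).add(replica)

    def release(self, key, replica):
        """Drop one replica's reference; delete the object when nobody holds it."""
        holders = self.holders.get(key, set())
        holders.discard(replica)
        if holders:
            return False
        self.holders.pop(key, None)
        self.objects.pop(key, None)
        return True


if __name__ == "__main__":
    store = SharedObjectStore()
    store.put("a1b2", b"...", "data/db/t/all_1_1_0/id.bin")
    print(store.restore_mapping())  # {'data/db/t/all_1_1_0/id.bin': 'a1b2'}
    store.acquire("a1b2", "replica-1")
    store.acquire("a1b2", "replica-2")
    print(store.release("a1b2", "replica-1"))  # False: replica-2 still shares the data
    print(store.release("a1b2", "replica-2"))  # True: the object is deleted
```

With N replicas sharing each object, remote storage holds one copy instead of N.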

In practice, however, our verification tests show that the current storage and computing separation is still a long way from production readiness. Difficult problems remain, such as: how to move tables in an Atomic database to object storage (the unique UUID in the table definition SQL file must correspond to the UUID of the data directory); how to rebalance data quickly and effectively during elastic scale-out and scale-in (copying data greatly lengthens the operation window); how to keep local disk files and remote objects consistent when they are modified; and how to recover quickly from node failures.
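The first difficulty can be illustrated with a small consistency check. In an Atomic database, ClickHouse keeps table data under a directory named after the table UUID (`store/<first three characters of the UUID>/<UUID>/`), and the table definition file records that UUID in a `UUID '...'` clause. The sketch below assumes that layout and only verifies that the UUID in a definition matches a data directory present on the remote side; it does not perform any move, and the function names are hypothetical.

```python
import re
import uuid

# Matches the UUID clause in a table definition, e.g. ATTACH TABLE _ UUID '...'
UUID_CLAUSE = re.compile(r"UUID\s+'([0-9a-fA-F-]{36})'")


def table_uuid(definition_sql):
    """Extract the table UUID from its definition SQL."""
    match = UUID_CLAUSE.search(definition_sql)
    if match is None:
        raise ValueError("table definition has no UUID clause")
    return uuid.UUID(match.group(1))


def data_dir_for(table_id):
    """Atomic-database data directory for a table UUID (assumed layout)."""
    text = str(table_id)
    return f"store/{text[:3]}/{text}/"


def definition_matches_data(definition_sql, remote_dirs):
    """True if the directory named by the definition's UUID exists remotely."""
    return data_dir_for(table_uuid(definition_sql)) in remote_dirs


if __name__ == "__main__":
    sql = ("ATTACH TABLE _ UUID '2b3f6a1e-0c4d-4e5f-8a9b-1c2d3e4f5a6b'\n"
           "(id UInt64) ENGINE = MergeTree ORDER BY id")
    print(data_dir_for(table_uuid(sql)))
    # store/2b3/2b3f6a1e-0c4d-4e5f-8a9b-1c2d3e4f5a6b/
    remote = {"store/2b3/2b3f6a1e-0c4d-4e5f-8a9b-1c2d3e4f5a6b/"}
    print(definition_matches_data(sql, remote))  # True
```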

2. Our practice

In the cloud-native era, separating storage and computing is the trend and the direction of our work. The following discussion focuses on OBS, Huawei Cloud's public object storage service.

1. Introduce file semantics

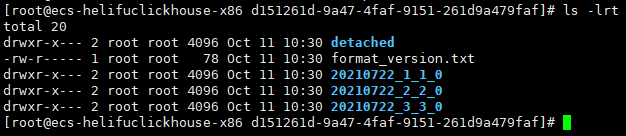

Here we need to emphasize the biggest difference between Huawei Cloud OBS and competing products: OBS supports file semantics, including rename operations on files and folders. This is very valuable for our subsequent design and implementation of elastic scaling. We therefore integrated the OBS driver into ClickHouse and modified ClickHouse's logic so that the data layout on OBS is exactly the same as on the local disk:

Local Disk:

OBS:

With a complete data directory structure on OBS, it is much easier to support merge, detach, attach, mutate, and part recovery operations.
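Why folder rename matters can be shown with a toy cost model. In a flat key-value object store, a "directory" is only a key prefix, so renaming a part directory means copying and deleting every object under it; with file semantics, the same rename is one namespace operation. The Python sketch below counts requests in both models. It is an illustration under these assumptions, not the OBS SDK or its actual request accounting; both classes are hypothetical.

```python
class FlatObjectStore:
    """Keys are opaque strings; a 'directory' is only a shared key prefix."""

    def __init__(self):
        self.objects = {}
        self.requests = 0  # requests issued by renames

    def write(self, key, data):
        self.objects[key] = data

    def rename_prefix(self, src, dst):
        # No native rename: copy each object to its new key, then delete the old one.
        for key in [k for k in self.objects if k.startswith(src)]:
            self.objects[dst + key[len(src):]] = self.objects.pop(key)
            self.requests += 2  # one copy + one delete per object


class FileSemanticsStore:
    """Nested dicts model a namespace with real directories."""

    def __init__(self):
        self.root = {}
        self.requests = 0  # requests issued by renames

    def _parent(self, path):
        # Walk (and create) intermediate directories; return (parent dir, leaf name).
        parts = path.strip("/").split("/")
        node = self.root
        for name in parts[:-1]:
            node = node.setdefault(name, {})
        return node, parts[-1]

    def write(self, path, data):
        parent, name = self._parent(path)
        parent[name] = data

    def rename(self, src, dst):
        # Re-link the whole subtree: one request regardless of how many files it holds.
        src_parent, src_name = self._parent(src)
        dst_parent, dst_name = self._parent(dst)
        dst_parent[dst_name] = src_parent.pop(src_name)
        self.requests += 1


if __name__ == "__main__":
    flat, fs = FlatObjectStore(), FileSemanticsStore()
    for f in ["data.bin", "columns.txt", "checksums.txt"]:
        flat.write(f"shard1/all_1_1_0/{f}", b"")
        fs.write(f"shard1/all_1_1_0/{f}", b"")
    flat.rename_prefix("shard1/all_1_1_0/", "shard2/all_1_1_0/")
    fs.rename("shard1/all_1_1_0", "shard2/all_1_1_0")
    print(flat.requests, fs.requests)  # 6 1
```

In the flat model the cost grows with the number of files in a part; with directory rename it stays constant, which is what makes part-level moves cheap.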

2. Offline scenario

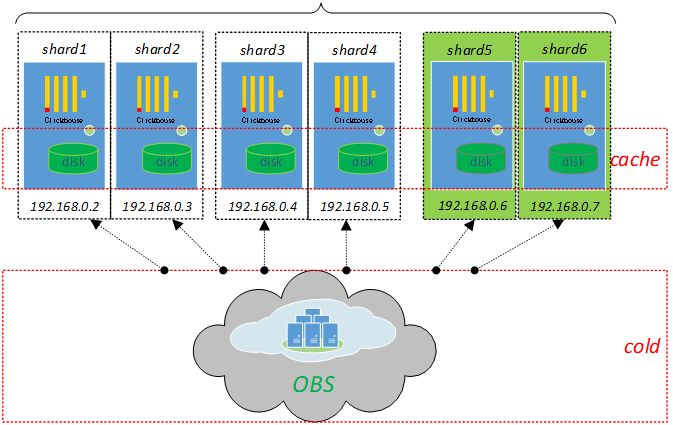

Let's look at the system architecture from bottom to top. In the offline acceleration scenario, the dependency on ZooKeeper is removed, and each shard has a single replica.

Next is data balancing when nodes are added or removed. Parts are moved at low cost through OBS rename operations (unlike the data redistribution performed by the clickhouse-copier tool). When a node goes down, a replacement node rebuilds its local data file directory by exporting it from the object storage side.
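A part-level rebalancing plan can be sketched as follows, assuming each shard's parts live under a per-shard prefix on OBS so that moving a part is a single rename from `<source shard>/<part>` to `<target shard>/<part>`. The greedy plan below is our illustration, not necessarily the algorithm used in this work: it evens out part counts after scale-out or scale-in and moves only parts that exceed a shard's target count. It ignores part sizes and the detach/attach steps each node would need; `plan_rebalance` and `renames_for` are hypothetical helpers.

```python
def plan_rebalance(assignment, shards):
    """Plan part moves so part counts across `shards` differ by at most one.

    assignment: current shard name -> list of part names.
    shards: shard names after scaling; parts on shards not listed must all move.
    Returns (moves, layout): moves is a list of (part, source shard, target shard).
    """
    layout = {s: list(assignment.get(s, [])) for s in shards}
    total = sum(len(parts) for parts in assignment.values())
    base, extra = divmod(total, len(shards))
    # Give the "+1" slots to the shards that already hold the most parts.
    by_size = sorted(shards, key=lambda s: -len(layout[s]))
    target = {s: base + (1 if i < extra else 0) for i, s in enumerate(by_size)}

    # Parts on removed shards, plus any parts above a shard's target, must move.
    surplus = [(p, s) for s, parts in assignment.items() if s not in layout for p in parts]
    for s in shards:
        while len(layout[s]) > target[s]:
            surplus.append((layout[s].pop(), s))

    moves = []
    for s in shards:
        while len(layout[s]) < target[s]:
            part, src = surplus.pop()
            layout[s].append(part)
            moves.append((part, src, s))
    return moves, layout


def renames_for(moves):
    """Translate planned moves into OBS-style directory renames (hypothetical layout)."""
    return [(f"{src}/{part}", f"{dst}/{part}") for part, src, dst in moves]


if __name__ == "__main__":
    current = {"s1": ["p1", "p2", "p3"], "s2": ["p4", "p5", "p6"]}
    moves, layout = plan_rebalance(current, ["s1", "s2", "s3"])  # scale out to 3 shards
    print(renames_for(moves))  # [('s2/p6', 's3/p6'), ('s1/p3', 's3/p3')]
```

Because each move is a rename rather than a copy, the operation window depends on the number of parts moved, not on their data volume.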

3. Fusion scenario

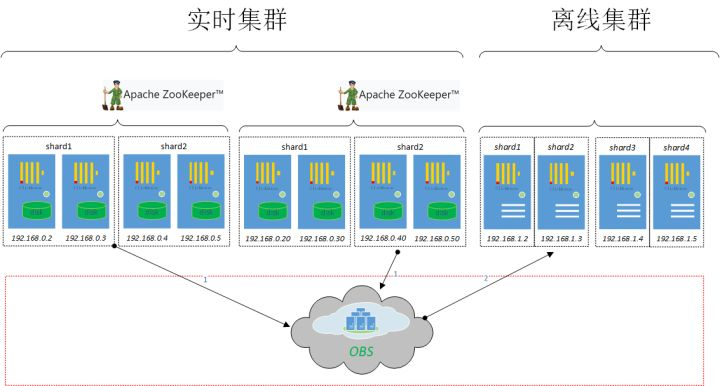

Building on the offline scenario above, we further integrate the real-time scenario (the "real-time cluster" part of the figure). ClickHouse clusters serving different businesses can use hot/cold tiered storage (a relatively mature feature, widely used in the industry to reduce storage costs): cold data is evicted from the real-time cluster and then mounted to the "offline cluster" through OBS rename operations. In this way we cover the complete data life cycle from real-time to offline (including the ETL process from Hive to ClickHouse).
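The hot-to-cold hand-off can be sketched the same way. Assuming each part is tagged with the newest event date it contains, and assuming hypothetical `<cluster>/<table>/<part>` prefixes on OBS, the Python below selects the parts that have aged past a hot-retention window and lists the renames that would mount them under the offline cluster. The retention rule and path layout are illustrative assumptions; in ClickHouse the tiering itself is usually driven by TTL rules or storage policies, which this sketch does not reproduce.

```python
from datetime import date, timedelta


def select_cold_parts(parts, today, hot_days):
    """Return names of parts whose newest row is older than the hot window.

    parts: part name -> newest event date stored in that part.
    """
    cutoff = today - timedelta(days=hot_days)
    return sorted(name for name, newest in parts.items() if newest < cutoff)


def handoff_renames(cold_parts, table, src_cluster="realtime", dst_cluster="offline"):
    """Renames that move cold parts from the real-time cluster's prefix to the offline one's."""
    return [(f"{src_cluster}/{table}/{p}", f"{dst_cluster}/{table}/{p}") for p in cold_parts]


if __name__ == "__main__":
    parts = {
        "202105_3_3_0": date(2021, 5, 31),
        "202106_1_1_0": date(2021, 6, 10),
        "202106_2_2_0": date(2021, 6, 29),
    }
    cold = select_cold_parts(parts, today=date(2021, 6, 30), hot_days=7)
    for src, dst in handoff_renames(cold, "hits"):
        print(src, "->", dst)
    # realtime/hits/202105_3_3_0 -> offline/hits/202105_3_3_0
    # realtime/hits/202106_1_1_0 -> offline/hits/202106_1_1_0
```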

3. Prospects for the future

The practice above is our first attempt at separating storage and computing, and we are still improving and optimizing it. From a macro perspective, we still use OBS as a stretched remote disk. Thanks to the high throughput of OBS, with the same computing resources, the query latency gap between SSD and OBS when running the Star Schema Benchmark is only about 5x, while the storage cost is reduced by about 10x. In the future, building on this work, we will remove OBS's role as a remote disk, unify the data of a single table into one data directory, centralize ClickHouse's metadata, and turn ClickHouse into stateless computing nodes, achieving an SQL-on-Hadoop effect similar to that of the Impala MPP database.
