Welcome to my GitHub

https://github.com/zq2599/blog_demos

Contents: all my original articles, categorized and summarized, with supporting source code, covering Java, Docker, Kubernetes, DevOps, and more.

Overview of this article

- As the fourth article in the "DL4J Actual Combat" series, this one involves no coding. Instead, it prepares for the hands-on work ahead by using the GPU to accelerate deep learning training under the DL4J framework.

- If your computer has an NVIDIA graphics card and CUDA is already installed, you can follow along with this article. It covers the following:

- Software and hardware environment reference information

- DL4J's dependent libraries and versions

- Specific steps to use GPU

- GPU training and CPU training comparison

Software and hardware environment reference information

- As everyone knows, Xin Chen is on a tight budget, so the only computer with an NVIDIA graphics card is a worn-out Lenovo laptop. Its specs are as follows:

- Operating system: Ubuntu 16 desktop

- Graphics card: GTX950M

- CUDA: 9.2

- CPU: i5-6300HQ

- Memory: 32 GB DDR4

- Hard disk: 1 TB NVMe

- In practice, this configuration runs the example from "DL4J Actual Combat 3: Classic Convolution Example (LeNet-5)" smoothly, and training can be accelerated with the GPU (the GPU vs. CPU comparison data is given later).

- For installing the NVIDIA driver and CUDA 9.2 on Ubuntu 16, refer to the article "Installing CUDA (9.1) and cuDNN on Pure Ubuntu16". That article installs CUDA 9.1, so substitute version 9.2 when you follow it.

DL4J's dependent libraries and versions

- The first thing to stress: do not use CUDA 11.2 (the version displayed when nvidia-smi is run). As of this writing, using CUDA 11.2 and its dependent libraries causes a ClassNotFound exception at startup.

- I have not tried CUDA 10.x, so I can't comment on it.

- I have tried both CUDA 9.1 and 9.2, and both work normally.

- Why not use 9.1? Let's first go to the central warehouse to see the version of the DL4J core library, as shown in the figure below, the latest version has arrived <font color="blue">1.0.0-M1</font>:

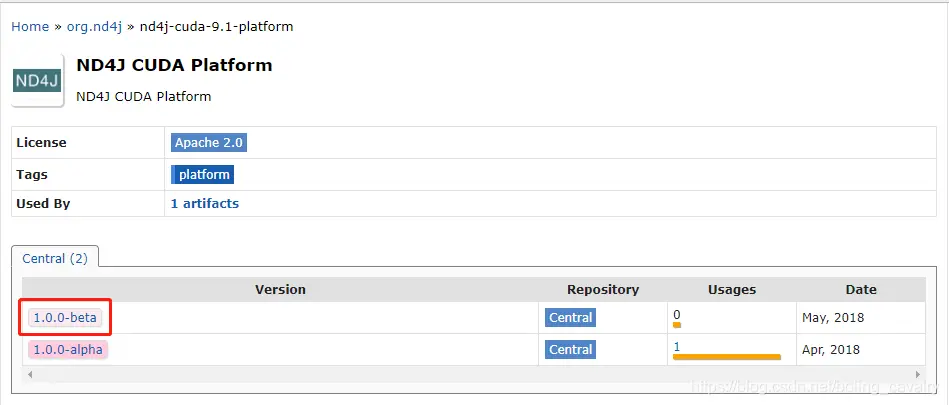

- Let's take a look at the version of the nd4j library corresponding to CUDA 9.1, as shown in the red box below. The latest one is <font color="blue">1.0.0-beta</font> in 2018, which is too far behind the core library:

- Okay, let’s take a look at the version of the nd4j library corresponding to CUDA 9.2, as shown in the red box below. The latest one is <font color="blue">1.0.0-beta6</font>, which is two versions different from the core library. Therefore, it is recommended to use CUDA 9.2:
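Since the nd4j CUDA artifact has to match the installed CUDA toolkit, it helps to confirm the toolkit version before choosing dependencies. Note that nvidia-smi reports the highest CUDA version the driver supports, while `nvcc --version` reports the toolkit actually installed. The sketch below parses the `release X.Y` fragment from nvcc output and builds an artifactId that follows the `nd4j-cuda-9.2-platform` naming used in this article. The class, the sample output string, and the helper `nd4jCudaArtifact` are illustrative assumptions, and not every CUDA version has a matching artifact for a given ND4J release.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class CudaVersionCheck {
    // Matches the "release X.Y" fragment in `nvcc --version` output
    private static final Pattern RELEASE = Pattern.compile("release (\\d+\\.\\d+)");

    // Returns the CUDA toolkit release (e.g. "9.2") if one is found in the text
    static Optional<String> parseRelease(String nvccOutput) {
        Matcher m = RELEASE.matcher(nvccOutput);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }

    // Hypothetical helper: builds an artifactId using the naming pattern shown in this article
    static String nd4jCudaArtifact(String cudaRelease) {
        return "nd4j-cuda-" + cudaRelease + "-platform";
    }

    public static void main(String[] args) {
        // Illustrative sample line; in practice, paste the output of `nvcc --version`
        String sample = "Cuda compilation tools, release 9.2, V9.2.148";
        String release = parseRelease(sample)
                .orElseThrow(() -> new IllegalStateException("no CUDA release found"));
        System.out.println(release);                   // 9.2
        System.out.println(nd4jCudaArtifact(release)); // nd4j-cuda-9.2-platform
    }
}
```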

Specific steps to use GPU

- Whether you use the CPU or the GPU, the steps are very simple: just switch between different dependency libraries, as described below.

- If you train on the CPU, the dependencies and versions are as follows:

```xml
<!-- Core library, required for both CPU and GPU -->
<dependency>
    <groupId>org.deeplearning4j</groupId>
    <artifactId>deeplearning4j-core</artifactId>
    <version>1.0.0-beta6</version>
</dependency>

<!-- Required for CPU -->
<dependency>
    <groupId>org.nd4j</groupId>
    <artifactId>nd4j-native</artifactId>
    <version>1.0.0-beta6</version>
</dependency>
```

- If you train on the GPU with CUDA 9.2, the dependencies and versions are as follows:

```xml
<!-- Core library, required for both CPU and GPU -->
<dependency>
    <groupId>org.deeplearning4j</groupId>
    <artifactId>deeplearning4j-core</artifactId>
    <version>1.0.0-beta6</version>
</dependency>

<!-- Required for GPU -->
<dependency>
    <groupId>org.deeplearning4j</groupId>
    <artifactId>deeplearning4j-cuda-9.2</artifactId>
    <version>1.0.0-beta6</version>
</dependency>

<!-- Required for GPU -->
<dependency>
    <groupId>org.nd4j</groupId>
    <artifactId>nd4j-cuda-9.2-platform</artifactId>
    <version>1.0.0-beta6</version>
</dependency>
```

- The Java code is not posted here: the code from "DL4J Actual Combat 3: Classic Convolution Example (LeNet-5)" is used without any changes.
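Because switching between CPU and GPU comes down to which ND4J backend jar is on the classpath, a small stdlib-only probe can confirm that a pom.xml change took effect before starting a long training run. The CUDA class name `org.nd4j.linalg.jcublas.JCublasBackend` appears in the GPU startup log in this article; the CPU class name `org.nd4j.linalg.cpu.nativecpu.CpuBackend` is an assumption about the nd4j-native artifact, so check it against your ND4J version. `BackendProbe` is a hypothetical class, not part of DL4J.

```java
public class BackendProbe {
    // {label, fully qualified backend class}, checked in this order.
    // The CUDA class name comes from this article's GPU log; the CPU one is an assumption.
    private static final String[][] CANDIDATES = {
        {"CUDA", "org.nd4j.linalg.jcublas.JCublasBackend"},
        {"CPU", "org.nd4j.linalg.cpu.nativecpu.CpuBackend"}
    };

    // Returns the label of the first backend class found on the classpath, or "none"
    static String detect() {
        ClassLoader loader = BackendProbe.class.getClassLoader();
        for (String[] candidate : CANDIDATES) {
            try {
                // initialize = false: only check that the class can be loaded,
                // without running static initializers (which may load native libraries)
                Class.forName(candidate[1], false, loader);
                return candidate[0];
            } catch (ClassNotFoundException | LinkageError e) {
                // Not on the classpath (or not loadable); try the next candidate
            }
        }
        return "none";
    }

    public static void main(String[] args) {
        System.out.println("ND4J backend on classpath: " + detect());
    }
}
```

With only the CPU dependencies this should print `CPU`, and with the GPU dependencies `CUDA`; the `Backend used: [CUDA]` line that ND4J logs at startup gives the same confirmation once training begins.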

Memory settings

- When running the code in IDEA, you can increase the available memory according to your hardware. The steps are as follows:

- Adjust as appropriate; I set it to 8G here.

- Once that is set, run training and testing on the same computer with the CPU and then with the GPU, and compare the two to see how much the GPU speeds things up.
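To confirm that the new memory setting actually reaches the JVM, a tiny heap check can be run with the same run configuration. This sketch assumes the 8G was applied as the VM option `-Xmx8g`; `HeapCheck` is a hypothetical class name.

```java
import java.util.Locale;

public class HeapCheck {
    // Converts a byte count to GiB
    static double toGiB(long bytes) {
        return bytes / (1024.0 * 1024.0 * 1024.0);
    }

    public static void main(String[] args) {
        // maxMemory() is the most heap the JVM will attempt to use; it follows -Xmx,
        // or a JVM-chosen default when -Xmx is not given
        long max = Runtime.getRuntime().maxMemory();
        if (max == Long.MAX_VALUE) {
            System.out.println("Max heap: no limit reported");
        } else {
            System.out.printf(Locale.ROOT, "Max heap: %.2f GiB%n", toGiB(max));
        }
    }
}
```

Depending on the garbage collector, the printed value may be slightly below 8 GiB. Also keep in mind that ND4J stores array data off-heap, so this figure covers only the JVM heap.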

CPU version

- On this shabby laptop, training on the CPU is a real struggle. As shown in the figure below, the CPU is almost maxed out:

- The console output is as follows. Training and testing took <font color="blue">158</font> seconds, a really long wait:

```text
=========================Confusion Matrix=========================
    0    1    2    3    4    5    6    7    8    9
---------------------------------------------------
  973    1    0    0    0    0    2    2    1    1 | 0 = 0
    0 1132    0    2    0    0    1    0    0    0 | 1 = 1
    1    5 1018    1    1    0    0    4    2    0 | 2 = 2
    0    0    2 1003    0    3    0    1    1    0 | 3 = 3
    0    0    1    0  975    0    2    0    0    4 | 4 = 4
    2    0    0    6    0  880    2    1    1    0 | 5 = 5
    6    1    0    0    3    4  944    0    0    0 | 6 = 6
    0    3    6    1    0    0    0 1012    2    4 | 7 = 7
    3    0    1    1    0    1    1    2  964    1 | 8 = 8
    0    0    0    2    6    2    0    2    0  997 | 9 = 9

Confusion matrix format: Actual (rowClass) predicted as (columnClass) N times
==================================================================
13:24:31.616 [main] INFO com.bolingcavalry.convolution.LeNetMNISTReLu - Training and testing finished, took [158739] ms
13:24:32.116 [main] INFO com.bolingcavalry.convolution.LeNetMNISTReLu - Latest MINIST model saved to [/home/will/temp/202106/26/minist-model.zip]
```

GPU version

- Next, modify pom.xml according to the GPU dependencies given above. When the program runs, the console prints the following, which shows that the GPU is enabled:

```text
13:27:08.277 [main] INFO org.nd4j.linalg.api.ops.executioner.DefaultOpExecutioner - Backend used: [CUDA]; OS: [Linux]
13:27:08.277 [main] INFO org.nd4j.linalg.api.ops.executioner.DefaultOpExecutioner - Cores: [4]; Memory: [7.7GB];
13:27:08.277 [main] INFO org.nd4j.linalg.api.ops.executioner.DefaultOpExecutioner - Blas vendor: [CUBLAS]
13:27:08.300 [main] INFO org.nd4j.linalg.jcublas.JCublasBackend - ND4J CUDA build version: 9.2.148
13:27:08.301 [main] INFO org.nd4j.linalg.jcublas.JCublasBackend - CUDA device 0: [GeForce GTX 950M]; cc: [5.0]; Total memory: [4242604032]
```

- This time the run is noticeably smoother, and CPU usage drops a lot:

- The console output is as follows. This time it took <font color="blue">21</font> seconds, so the GPU speedup is clearly significant:

```text
=========================Confusion Matrix=========================
    0    1    2    3    4    5    6    7    8    9
---------------------------------------------------
  973    1    0    0    0    0    2    2    1    1 | 0 = 0
    0 1129    0    2    0    0    2    2    0    0 | 1 = 1
    1    3 1021    0    1    0    0    4    2    0 | 2 = 2
    0    0    1 1003    0    3    0    1    2    0 | 3 = 3
    0    0    1    0  973    0    3    0    0    5 | 4 = 4
    1    0    0    6    0  882    2    1    0    0 | 5 = 5
    6    1    0    0    2    5  944    0    0    0 | 6 = 6
    0    2    4    1    0    0    0 1016    2    3 | 7 = 7
    1    0    2    1    0    1    0    2  964    3 | 8 = 8
    0    0    0    2    6    3    0    2    1  995 | 9 = 9

Confusion matrix format: Actual (rowClass) predicted as (columnClass) N times
==================================================================
13:27:30.722 [main] INFO com.bolingcavalry.convolution.LeNetMNISTReLu - Training and testing finished, took [21441] ms
13:27:31.323 [main] INFO com.bolingcavalry.convolution.LeNetMNISTReLu - Latest MINIST model saved to [/home/will/temp/202106/26/minist-model.zip]

Process finished with exit code 0
```

- At this point, GPU acceleration under the DL4J framework is up and running. If you have an NVIDIA graphics card at hand, give it a try; I hope this article serves as a useful reference.
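To put the two runs side by side, the sketch below computes overall accuracy from each confusion matrix (the diagonal sum divided by the total, since rows are actual digits and columns are predictions) and the speedup from the two elapsed times in the logs. The numbers are copied from the console output above; the class and method names are illustrative.

```java
import java.util.Locale;

public class RunComparison {
    // Confusion matrices copied from the console output above:
    // row = actual digit, column = predicted digit
    static final int[][] CPU = {
        {973, 1, 0, 0, 0, 0, 2, 2, 1, 1},
        {0, 1132, 0, 2, 0, 0, 1, 0, 0, 0},
        {1, 5, 1018, 1, 1, 0, 0, 4, 2, 0},
        {0, 0, 2, 1003, 0, 3, 0, 1, 1, 0},
        {0, 0, 1, 0, 975, 0, 2, 0, 0, 4},
        {2, 0, 0, 6, 0, 880, 2, 1, 1, 0},
        {6, 1, 0, 0, 3, 4, 944, 0, 0, 0},
        {0, 3, 6, 1, 0, 0, 0, 1012, 2, 4},
        {3, 0, 1, 1, 0, 1, 1, 2, 964, 1},
        {0, 0, 0, 2, 6, 2, 0, 2, 0, 997}
    };
    static final int[][] GPU = {
        {973, 1, 0, 0, 0, 0, 2, 2, 1, 1},
        {0, 1129, 0, 2, 0, 0, 2, 2, 0, 0},
        {1, 3, 1021, 0, 1, 0, 0, 4, 2, 0},
        {0, 0, 1, 1003, 0, 3, 0, 1, 2, 0},
        {0, 0, 1, 0, 973, 0, 3, 0, 0, 5},
        {1, 0, 0, 6, 0, 882, 2, 1, 0, 0},
        {6, 1, 0, 0, 2, 5, 944, 0, 0, 0},
        {0, 2, 4, 1, 0, 0, 0, 1016, 2, 3},
        {1, 0, 2, 1, 0, 1, 0, 2, 964, 3},
        {0, 0, 0, 2, 6, 3, 0, 2, 1, 995}
    };

    // Accuracy = correctly classified samples (the diagonal) / all samples
    static double accuracy(int[][] m) {
        long correct = 0;
        long total = 0;
        for (int i = 0; i < m.length; i++) {
            for (int j = 0; j < m[i].length; j++) {
                total += m[i][j];
                if (i == j) {
                    correct += m[i][j];
                }
            }
        }
        return (double) correct / total;
    }

    // Speedup = CPU wall-clock time / GPU wall-clock time
    static double speedup(long cpuMillis, long gpuMillis) {
        return (double) cpuMillis / gpuMillis;
    }

    public static void main(String[] args) {
        System.out.printf(Locale.ROOT, "CPU accuracy: %.4f%n", accuracy(CPU)); // 0.9898
        System.out.printf(Locale.ROOT, "GPU accuracy: %.4f%n", accuracy(GPU)); // 0.9900
        // Elapsed times in milliseconds, taken from the two training-time log lines
        System.out.printf(Locale.ROOT, "Speedup: %.2fx%n", speedup(158739, 21441)); // 7.40x
    }
}
```

Both matrices sum to the 10,000 MNIST test images, and both runs land at about 99% accuracy, so the roughly 7.4x speedup comes without a visible loss in accuracy.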

You are not alone: Xin Chen and his original articles are with you all the way

- Java series

- Spring series

- Docker series

- Kubernetes series

- Database + middleware series

- DevOps series

Welcome to follow my WeChat official account: Programmer Xin Chen

Search for "Programmer Xin Chen" on WeChat. I am Xin Chen, and I look forward to exploring the Java world with you...

