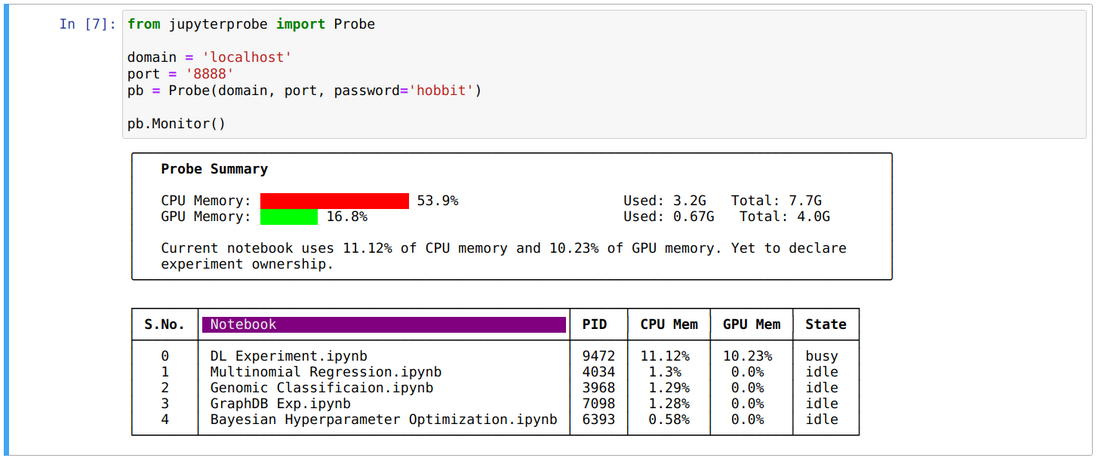

The deployment of Jupyter Notebooks on Kubernetes often requires binding a GPU, and most of the time the GPU is not used, so the utilization rate is low. In order to solve this problem, we open elastic-jupyter-operator , separately deploy the Kernel component that occupies the GPU, and automatically reclaim and release the occupied GPU when it is idle for a long time. This article mainly introduces the use and working principle of this open source project.

Jupyter Notebooks is currently the most widely used interactive development environment. It satisfies the code development needs of data science, deep learning model construction and other scenarios. However, while Jupyter Notebooks facilitates the daily development work of algorithm engineers and data scientists, it also poses more challenges to the infrastructure.

The problem of resource utilization

The biggest challenge comes from GPU resource utilization. Even if there is no code running during the operation, the notebook will occupy the GPU for a long time, causing problems such as vacancy of the GPU. In the scenario of large-scale deployment of Jupyter instances, multiple notebook instances are generally created through Kubernetes and assigned to different algorithm engineers. In this case, we need to apply for the GPU in the corresponding Deployment in advance, so that the GPU will be bound to the corresponding Notebook instance, and each Notebook instance will occupy a GPU graphics card.

However, at the same time, not all algorithm engineers are using GPUs. In Jupyter, the process of editing code does not require the use of computing resources. Only when the code fragments in the Cell are executed, hardware resources such as CPU or GPU will be used to execute and return the result. It can be foreseen that if such a deployment method is adopted, a considerable degree of waste of resources will be caused.

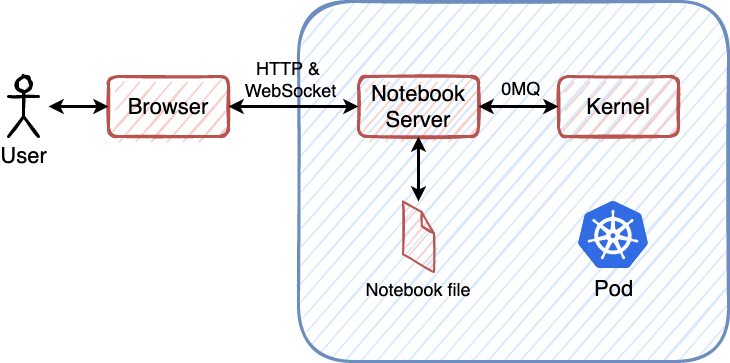

The main reason for this problem is that the native Jupyter Notebooks does not adapt well to Kubernetes. Before introducing the cause of the problem, let's briefly outline the technical architecture of Jupyter Notebook. As shown in the figure below, Jupyter Notebook is mainly composed of three parts, namely user and browser side, Notebook Server and Kernel.

Among them, the user and the browser are the front end of Jupyter, which is mainly responsible for displaying code and execution results. Notebook Server is its back-end server. The code execution request from the browser will be processed by Notebook Server and dispatched to Kernel for execution. Kernel is really responsible for executing code and returning results.

In the traditional way of use, users will jupyter notebook $CODE_PATH such as 061712c1e16f5a, and then access the Jupyter interactive development interface in the browser. When the code needs to be executed, the Notebook Server will create a separate Kernel process, which will run using GPU and so on. When the Kernel is idle for a long time and there is no code to execute, the process will be terminated and the GPU will no longer be occupied.

When deployed on Kuberenetes, the problem arises. Notebook Server and Kernel run in the same container in the same Pod, although the Kernel components that need to be run only when executing code require GPUs, while the long-running Notebook Server does not need them, but is limited by the resource management of Kubernetes Mechanism, it is still necessary to apply for GPU resources in advance.

In the entire life cycle of Notebook Server, this GPU is always bound to the Pod. Although the Kernel process will be recycled when it is idle, the GPU card that has been allocated to the Pod cannot be returned to Kubernetes for scheduling.

solution

In order to solve this problem, we open sourced the project elastic-jupyter-operator . The idea is very simple: the problem stems from the fact that Notebook Server and Kernel are in the same Pod, which makes it impossible for us to apply for computing resources for these two components separately. As long as they are deployed separately, the Notebook Server is in a separate Pod, and the Kernel is also in a separate Pod, communicating with each other through ZeroMQ.

In this way, the Kernel will be released when it is idle. When needed, it will be temporarily applied for GPU again and run. In order to achieve this, we implemented 5 CRDs in Kubernetes and introduced a new KernelLauncher implementation for Jupyter. Through them, users can recycle and release the Kernel when the GPU is idle, dynamically apply for GPU resources when the code needs to be executed, and create a Kernel Pod for code execution.

Simple example

Below we will introduce how to use it through an example. First we need to create JupyterNotebook CR (CustomResource), this CR will create the corresponding Notebook Server:

apiVersion: kubeflow.tkestack.io/v1alpha1

kind: JupyterNotebook

metadata:

name: jupyternotebook-elastic

spec:

gateway:

name: jupytergateway-elastic

namespace: default

auth:

mode: disableThe gateway is specified, which is another CR JupyterGateway. In order for Jupyter to support remote Kernel, such a gateway is needed to forward requests. We also need to create such a CR:

apiVersion: kubeflow.tkestack.io/v1alpha1

kind: JupyterGateway

metadata:

name: jupytergateway-elastic

spec:

cullIdleTimeout: 3600

image: ccr.ccs.tencentyun.com/kubeflow-oteam/enterprise-gateway:2.5.0The configuration cullIdleTimeout JupyterGateway CR specifies the amount of idle time after which the Kernel Pod managed by it will be recycled and released by the system. In the example it is 1 hour. After creating these two resources, you can experience the flexible Jupyter Notebook. If it has not been used within an hour, the Kernel will be recycled.

$ kubectl apply -f ./examples/elastic/kubeflow.tkestack.io_v1alpha1_jupyternotebook.yaml

$ kubectl apply -f ./examples/elastic/kubeflow.tkestack.io_v1alpha1_jupytergateway.yaml

$ kubectl port-forward deploy/jupyternotebook-elastic 8888:8888

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

jovyan-219cfd49-89ad-428c-8e0d-3e61e15d79a7 1/1 Running 0 170m

jupytergateway-elastic-868d8f465c-8mg44 1/1 Running 0 3h

jupyternotebook-elastic-787d94bb4b-xdwnc 1/1 Running 0 3h10mIn addition, due to the decoupling design of Notebook and Kernel, users can easily modify the Kernel mirroring and resource quotas, and add new Kernels to the already running Notebook.

Design and implementation

After introducing the usage method, we briefly introduce its design and implementation.

When the user chooses to execute code in the browser, the first request will be sent to the Notebook Server running on Kubernetes. Since there is currently no Kernel running on the cluster, the code execution task cannot be assigned, so the Notebook Server will send a request to create a Kernel to the Gateway. Gateway is responsible for managing the life cycle of the remote Kernel. It will create the corresponding JupyterKernel CR in the Kubernetes cluster. Then it will interact with the Kernel that has been created in the cluster through ZeroMQ, and then send the code execution request to the Kernel for execution, and then send the result to the Notebook Server and then return it to the front end for rendering and display.

Gateway will periodically check whether there are any instances in the currently managed Kernel that meet the requirements and need to be recycled based on the resource recycling parameters defined in JupyterGateway CR. When the Kernel idle time reaches the defined threshold, Gateway will delete the corresponding JupyterKernel CR, reclaim it, and release the GPU.

Summarize

At present, there are still many challenges in the development and production of deep learning. elastic-jupyter-operator aims at the problem of GPU utilization and development efficiency in the development process, and proposes a feasible solution. The Kernel component that occupies the GPU is deployed separately, and automatically reclaimed under long-term idle conditions to release the occupied GPU, while improving resource utilization in this way, it also gives algorithm engineer users more flexibility.

From an algorithm engineer’s point of view, elastic-jupyter-operator supports a custom Kernel. You can choose to install Python packages or system dependencies in the container image of the Kernel. You don’t need to worry about the version consistency of the notebook unified image within the team. , Improve research and development efficiency.

From the perspective of operation and maintenance and resource management, elastic-jupyter-operator follows the cloud-native design concept and provides external services in the form of 5 CRDs, which has lower operation and maintenance costs for the team that has already landed in Kuerbenetes. .

License

- This article is licensed under CC BY-NC-SA 3.0.

Please contact me for commercial use.

[Tencent Cloud Native] Yunshuo new products, Yunyan new technology, Yunyou Xinhuo, Yunxiang information, scan the QR code to follow the public account of the same name, and get more dry goods in time! !

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。