Welcome to my GitHub

https://github.com/zq2599/blog_demos

Contents: a categorized index of all my original articles and their companion source code, covering Java, Docker, Kubernetes, DevOps, and more.

The relationship between FFmpeg, JavaCPP, and JavaCV

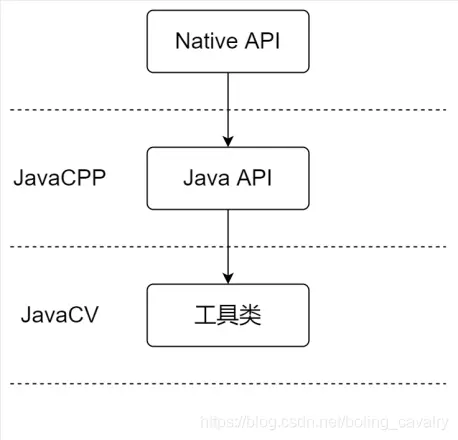

- First, a quick look at how FFmpeg, JavaCPP, and JavaCV relate to each other:

- FFmpeg and OpenCV are native (C/C++) libraries, which Java applications cannot call directly

- JavaCPP wraps common native libraries such as FFmpeg and OpenCV so that Java applications can use these native APIs too (under the hood, JavaCPP is built on JNI)

- JavaCV in turn wraps these JavaCPP APIs into utility classes that are simpler and easier to use than the native APIs

- In short, as shown in the figure, JavaCPP turns the native API into a Java API, and JavaCV wraps that Java API into easier-to-use utility classes.
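To make the layering concrete, here is a minimal pure-Java sketch. `LowLevelDecoder` is a hypothetical stand-in for a JavaCPP-style binding that keeps C conventions (a negative return code means failure, and results come back through an `int[]` out-parameter, like `frameFinished` in the code later in this article); `DecoderTool` is a hypothetical facade in the spirit of JavaCV's utility classes. Neither is a real JavaCPP or JavaCV type.

```java
/** Hypothetical C-style API, standing in for a JavaCPP binding (not a real JavaCPP interface). */
interface LowLevelDecoder {
    // Negative return value = error; gotFrame[0] != 0 means a decoded frame is ready
    int decode(byte[] packet, int[] gotFrame);
}

/** Hypothetical facade in the spirit of a JavaCV utility class (not a real JavaCV class). */
final class DecoderTool {
    private final LowLevelDecoder decoder;

    DecoderTool(LowLevelDecoder decoder) {
        this.decoder = decoder;
    }

    /** Feeds one packet; turns the return code into an exception and the out-parameter into a boolean. */
    boolean feed(byte[] packet) {
        int[] gotFrame = {0};
        int ret = decoder.decode(packet, gotFrame);
        if (ret < 0) {
            throw new IllegalStateException("decode failed with code " + ret);
        }
        return gotFrame[0] != 0;
    }

    public static void main(String[] args) {
        // Fake low-level decoder: an empty packet is an error, packets longer than 2 bytes yield a frame
        LowLevelDecoder fake = (packet, gotFrame) -> {
            if (packet.length == 0) {
                return -1;
            }
            gotFrame[0] = packet.length > 2 ? 1 : 0;
            return packet.length;
        };
        DecoderTool tool = new DecoderTool(fake);
        System.out.println(tool.feed(new byte[] {1, 2, 3})); // true
        System.out.println(tool.feed(new byte[] {1}));       // false
    }
}
```

The facade adds no new capability; it only moves error-code checks and out-parameter plumbing away from the caller, which is the kind of convenience a utility layer offers over a raw binding.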

Learning goals

- Xin Chen's goal is to learn and master JavaCV. Digging into JavaCPP, which JavaCV is built on, lays a solid foundation: later, when using JavaCV, you will also understand how it works internally;

- So using FFmpeg through JavaCPP is a basic skill. This article develops a Java application that calls the JavaCPP API to do the following:

- Open the specified media stream

- Read one frame and decode it into a YUV420P image

- Convert the YUV420P image to YUVJ420P

- Save the image as a jpg file at the specified location

- Release all opened resources

- These steps cover common operations such as decoding, encoding, and image processing, which helps a great deal in understanding the FFmpeg library.
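The last step is easiest to get right when resources are released in the reverse order of acquisition (data first, then the contexts it depends on), which is also the order the `release` method later in this article follows. Here is a minimal pure-Java sketch of that pattern, independent of FFmpeg: each step registers its cleanup on a stack, and closing the stack runs the cleanups last-in, first-out. `CleanupStack` and the step names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical helper: runs registered cleanup actions in reverse registration order. */
final class CleanupStack implements AutoCloseable {
    private final Deque<Runnable> actions = new ArrayDeque<>();

    /** Registers a cleanup action; the most recently registered one runs first. */
    void onClose(Runnable action) {
        actions.push(action);
    }

    /** Runs all actions in LIFO order. */
    @Override
    public void close() {
        while (!actions.isEmpty()) {
            actions.pop().run();
        }
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        try (CleanupStack cleanup = new CleanupStack()) {
            log.add("open input");
            cleanup.onClose(() -> log.add("close input"));
            log.add("open decoder");
            cleanup.onClose(() -> log.add("close decoder"));
            log.add("alloc frame");
            cleanup.onClose(() -> log.add("free frame"));
            log.add("save jpg");
        }
        // [open input, open decoder, alloc frame, save jpg, free frame, close decoder, close input]
        System.out.println(log);
    }
}
```

The `try`-with-resources block guarantees the unwinding even when a step throws; a production version would also want to keep going if one cleanup action itself fails.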

Background knowledge

- Before writing code, it is worth getting familiar with FFmpeg's important data structures and APIs. The classic material here is the tutorial series by Lei Xiaohua (called "Lei Shen" in the Chinese FFmpeg community); in particular, you should have at least a brief understanding of the data structures (contexts) and APIs involved in protocol handling, demuxing, and decoding.

- If you are too busy to read those classics, I have prepared a fast-food version that briefly summarizes the key points. A disclaimer up front: Xin Chen's fast-food version is far inferior to Lei Xiaohua's classic series...

- First, the data structures. They fall into two categories, media data and contexts, and each has a Java class that wraps the underlying pointer.

- Next, the commonly used APIs. Grouping them by Lei Xiaohua's breakdown into protocol handling, demuxing, and decoding (and the reverse path of encoding and muxing) makes them easy to sort out.
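As a compact reference, the same grouping can be written down as a small Java lookup table. It is a sketch built only from the FFmpeg functions this article's code calls; the `Stage` enum and its grouping are a hypothetical helper, not part of FFmpeg or JavaCPP.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/** Hypothetical lookup table: FFmpeg functions used in this article, grouped by processing stage. */
enum Stage {
    // Protocol (I/O) layer on the output side; on the input side avformat_open_input handles it internally
    PROTOCOL("avio_open", "avio_close"),
    // Demuxing: container -> packets of each stream
    DEMUX("avformat_open_input", "avformat_find_stream_info", "av_read_frame", "avformat_close_input"),
    // Decoding: compressed AVPacket -> raw AVFrame
    DECODE("avcodec_find_decoder", "avcodec_open2", "avcodec_decode_video2", "avcodec_close"),
    // Image processing: pixel format conversion
    CONVERT("sws_getContext", "sws_scale", "sws_freeContext"),
    // Encoding: raw AVFrame -> compressed AVPacket
    ENCODE("avcodec_find_encoder", "avcodec_open2", "avcodec_encode_video2"),
    // Muxing: packets -> container written to the output
    MUX("avformat_alloc_context", "av_guess_format", "avformat_new_stream",
            "avformat_write_header", "av_write_frame", "av_write_trailer", "avformat_free_context");

    private final List<String> functions;

    Stage(String... functions) {
        this.functions = Collections.unmodifiableList(Arrays.asList(functions));
    }

    List<String> functions() {
        return functions;
    }

    /** Returns the first stage listing the function (avcodec_open2 is shared by DECODE and ENCODE). */
    static Stage of(String function) {
        for (Stage stage : values()) {
            if (stage.functions.contains(function)) {
                return stage;
            }
        }
        throw new IllegalArgumentException("not classified: " + function);
    }

    public static void main(String[] args) {
        for (Stage stage : values()) {
            System.out.println(stage + " -> " + stage.functions());
        }
    }
}
```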

Version Information

The operating system, software, and library versions used in this article are as follows:

- Operating system: Windows 10, 64-bit

- IDE: IDEA 2021.1.3 (Ultimate Edition)

- JDK: 1.8.0_291

- Maven: 3.8.1

- JavaCPP: 1.4.3

- FFmpeg: 4.0.2 (so the ffmpeg-platform library version is 4.0.2-1.4.3)

Source download

- The complete source code for this hands-on tutorial can be downloaded from GitHub; the addresses and links are listed in the table below (https://github.com/zq2599/blog_demos):

| Name | Link | Remark |
|---|---|---|
| Project homepage | https://github.com/zq2599/blog_demos | The project's homepage on GitHub |
| Git repository address (https) | https://github.com/zq2599/blog_demos.git | Repository address of the project's source code, https protocol |
| Git repository address (ssh) | git@github.com:zq2599/blog_demos.git | Repository address of the project's source code, ssh protocol |

- This git project contains multiple folders; the source code for this article is in the <font color="blue">javacv-tutorials</font> folder, as shown in the red box.

- The <font color="blue">javacv-tutorials</font> folder contains multiple sub-projects; the source code for this article is in the <font color="red">ffmpeg-basic</font> folder, as shown in the red box.

Start coding

- To manage source code and jar dependencies uniformly, the project uses a Maven parent-child structure. The parent project, <font color="blue">javacv-tutorials</font>, holds the jar version definitions, so I won't go into it here.

- Create a new sub-project named <font color="red">ffmpeg-basic</font> under <font color="blue">javacv-tutorials</font>. Its pom.xml is shown below; note that it depends only on JavaCPP, not on JavaCV:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <parent>
        <artifactId>javacv-tutorials</artifactId>
        <groupId>com.bolingcavalry</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <modelVersion>4.0.0</modelVersion>

    <artifactId>ffmpeg-basic</artifactId>

    <!-- Versions are defined in the parent project javacv-tutorials -->
    <dependencies>
        <dependency>
            <groupId>org.bytedeco</groupId>
            <artifactId>javacpp</artifactId>
        </dependency>
        <dependency>
            <groupId>org.bytedeco.javacpp-presets</groupId>
            <artifactId>ffmpeg-platform</artifactId>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-api</artifactId>
        </dependency>
        <dependency>
            <groupId>ch.qos.logback</groupId>
            <artifactId>logback-classic</artifactId>
        </dependency>
    </dependencies>
</project>
```

- Now for the code. First, write a minimal inner class that holds an AVFrame together with its data pointer (a BytePointer), which makes it convenient to pass both around when calling methods:

```java
// Holds a converted AVFrame together with the buffer backing its data,
// so both can be passed around and released together
class FrameData {

    AVFrame avFrame;

    BytePointer buffer;

    public FrameData(AVFrame avFrame, BytePointer buffer) {
        this.avFrame = avFrame;
        this.buffer = buffer;
    }
}
```

- Next comes the most important method, <font color="blue">openMediaAndSaveImage</font>, the main body of the program. It covers all five steps (opening the media stream, decoding, format conversion, saving, and releasing resources), so callers can perform the whole task with this single call:

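```java
/**
 * Open a media stream, grab one frame, convert it to YUVJ420P, and save it as a jpg file
 * @param url
 * @param out_file
 * @throws IOException
 */
public void openMediaAndSaveImage(String url, String out_file) throws IOException {
    log.info("Opening media stream [{}]", url);

    // Open the given media stream and demux it to get the demuxing (format) context
    AVFormatContext pFormatCtx = getFormatContext(url);

    if (null == pFormatCtx) {
        log.error("Failed to get the demuxing context");
        return;
    }

    // Print media stream information to the console
    av_dump_format(pFormatCtx, 0, url, 0);

    // After demuxing there is an array holding all streams; videoStreamIndex is the video stream's position in it
    int videoStreamIndex = getVideoStreamIndex(pFormatCtx);

    // Return right away if there is no video stream (after closing the input)
    if (videoStreamIndex < 0) {
        log.error("No video stream found");
        release(true, null, null, null, pFormatCtx);
        return;
    }

    log.info("The video stream is at index [{}] of the stream array (0-based)", videoStreamIndex);

    // Get the decoding context, already initialized
    AVCodecContext pCodecCtx = getCodecContext(pFormatCtx, videoStreamIndex);

    if (null == pCodecCtx) {
        log.error("Failed to create the decoding context");
        release(true, null, null, null, pFormatCtx);
        return;
    }

    // Decode one frame from the video stream
    AVFrame pFrame = getSingleFrame(pCodecCtx, pFormatCtx, videoStreamIndex);

    if (null == pFrame) {
        log.error("Failed to get a frame from the video stream");
        release(true, null, null, pCodecCtx, pFormatCtx);
        return;
    }

    // Convert the YUV420P image to YUVJ420P;
    // the converted AVFrame and its data pointer are both kept in frameData
    FrameData frameData = YUV420PToYUVJ420P(pCodecCtx, pFrame);

    if (null == frameData) {
        log.error("Failed to convert YUV420P to YUVJ420P");
        release(true, null, null, pCodecCtx, pFormatCtx, pFrame);
        return;
    }

    // Save to disk; a negative return value means failure
    int ret = saveImg(frameData.avFrame, out_file);

    // Release in order
    release(true, null, null, pCodecCtx, pFormatCtx, frameData.buffer, frameData.avFrame, pFrame);

    if (ret < 0) {
        log.error("Failed to save the image");
    } else {
        log.info("Operation succeeded");
    }
}
```

- With the overall logic clear, let's look at the methods called by openMediaAndSaveImage, starting with <font color="blue">getFormatContext</font>, which opens the media stream: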

```java
/**
 * Create the demuxing (format) context
 * @param url
 * @return
 */
private AVFormatContext getFormatContext(String url) {
    // Demuxing context
    AVFormatContext pFormatCtx = new avformat.AVFormatContext(null);

    // Open the media stream
    if (avformat_open_input(pFormatCtx, url, null, null) != 0) {
        log.error("Failed to open the media");
        return null;
    }

    // Read some media data to obtain information about the streams
    if (avformat_find_stream_info(pFormatCtx, (PointerPointer<Pointer>) null) < 0) {
        log.error("Failed to get media stream information");
        // The input was opened successfully, so close it before giving up
        avformat_close_input(pFormatCtx);
        return null;
    }

    return pFormatCtx;
}
```

- After demuxing, there is an array holding all the streams; the <font color="blue">getVideoStreamIndex</font> method finds the position of the video stream in that array:

```java
/**
 * After demuxing, the media yields an array of streams; this method finds the position of the video stream in that array
 * @param pFormatCtx
 * @return
 */
private static int getVideoStreamIndex(AVFormatContext pFormatCtx) {
    int videoStream = -1;

    // There are several streams after demuxing; find out which one is video
    for (int i = 0; i < pFormatCtx.nb_streams(); i++) {
        if (pFormatCtx.streams(i).codec().codec_type() == AVMEDIA_TYPE_VIDEO) {
            videoStream = i;
            break;
        }
    }

    return videoStream;
}
```

- Demuxing is followed by decoding; the <font color="blue">getCodecContext</font> method obtains the decoding context:

```java
/**
 * Create the decoding context
 * @param pFormatCtx
 * @param videoStreamIndex
 * @return
 */
private AVCodecContext getCodecContext(AVFormatContext pFormatCtx, int videoStreamIndex) {
    // Decoder
    AVCodec pCodec;

    // Get the decoding context of the video stream
    AVCodecContext pCodecCtx = pFormatCtx.streams(videoStreamIndex).codec();

    // Find the decoder matching the codec id in the decoding context
    pCodec = avcodec_find_decoder(pCodecCtx.codec_id());

    if (pCodec == null) {
        return null;
    }

    // Initialize the decoding context with the decoder
    if (avcodec_open2(pCodecCtx, pCodec, (AVDictionary) null) < 0) {
        return null;
    }

    return pCodecCtx;
}
```

- Next, read packets from the video stream and decode one frame:
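This has to be a loop for two reasons: `av_read_frame` returns packets from all streams interleaved (the console output later shows that stream 0 is video and stream 1 is audio), and decoding one video packet does not necessarily produce a picture right away, since the decoder may need more data first, for example when a live stream is joined midway. The pure-Java simulation below illustrates only that control flow; `Packet` and the keyframe rule in `FakeDecoder` are hypothetical stand-ins for `AVPacket` and a real H.264 decoder.

```java
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

final class DecodeLoopSketch {
    /** Hypothetical stand-in for AVPacket: owning stream and whether it carries a keyframe. */
    static final class Packet {
        final int streamIndex;
        final boolean keyframe;

        Packet(int streamIndex, boolean keyframe) {
            this.streamIndex = streamIndex;
            this.keyframe = keyframe;
        }
    }

    /** Hypothetical decoder: produces no picture until it has seen a keyframe. */
    static final class FakeDecoder {
        private boolean seenKeyframe;

        /** Mirrors the frameFinished out-parameter: sets gotPicture[0] to 1 when a picture is ready. */
        void decode(Packet p, int[] gotPicture) {
            seenKeyframe |= p.keyframe;
            gotPicture[0] = seenKeyframe ? 1 : 0;
        }
    }

    /** Returns how many packets were read before the first picture, or -1 if none was produced. */
    static int readUntilFirstPicture(Iterator<Packet> packets, int videoStreamIndex) {
        FakeDecoder decoder = new FakeDecoder();
        int[] gotPicture = {0};
        int read = 0;
        while (packets.hasNext()) {          // plays the role of av_read_frame(...) >= 0
            Packet p = packets.next();
            read++;
            if (p.streamIndex != videoStreamIndex) {
                continue;                    // skip audio and other streams
            }
            decoder.decode(p, gotPicture);
            if (gotPicture[0] != 0) {
                return read;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<Packet> packets = Arrays.asList(
                new Packet(1, false),   // audio
                new Packet(0, false),   // video, but no keyframe seen yet
                new Packet(1, false),   // audio
                new Packet(0, true));   // video keyframe: first picture
        System.out.println(readUntilFirstPicture(packets.iterator(), 0)); // 4
    }
}
```

The project's `getSingleFrame` applies the same pattern to real packets with `av_read_frame` and `avcodec_decode_video2`: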

```java
/**
 * Read packets and decode until one frame is obtained
 * @param pCodecCtx
 * @param pFormatCtx
 * @param videoStreamIndex
 * @return
 */
private AVFrame getSingleFrame(AVCodecContext pCodecCtx, AVFormatContext pFormatCtx, int videoStreamIndex) {
    // Allocate the frame object
    AVFrame pFrame = av_frame_alloc();

    // frameFinished is set to non-zero when a complete picture has been decoded
    int[] frameFinished = new int[1];

    // Whether a picture was found
    boolean exists = false;

    AVPacket packet = new AVPacket();

    // Each loop iteration reads one packet
    while (av_read_frame(pFormatCtx, packet) >= 0) {
        try {
            // Check whether the packet belongs to the video stream
            if (packet.stream_index() == videoStreamIndex) {
                // Decode the AVPacket into the AVFrame
                avcodec_decode_video2(pCodecCtx, pFrame, frameFinished, packet);

                // Stop as soon as a picture is available
                if (frameFinished[0] != 0 && !pFrame.isNull()) {
                    exists = true;
                    break;
                }
            }
        } finally {
            // Every packet filled by av_read_frame must be released, not just the last one
            av_free_packet(packet);
        }
    }

    // Return null if no picture was found
    return exists ? pFrame : null;
}
```

- The decoded image is in YUV420P format; next, convert it to YUVJ420P:
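YUV420P and YUVJ420P share the same planar 4:2:0 layout (a full-resolution Y plane plus U and V planes at half width and half height), so the buffer size is identical. The difference is the value range: the "J" (JPEG) variant is full range, 0 to 255, while YUV420P video normally uses the limited range of 16 to 235 for Y and 16 to 240 for U and V. YUVJ420P is also the pixel format `saveImg` configures for its MJPEG encoder, and the `swscaler` warning `deprecated pixel format used` in the console output refers to these J formats, which FFmpeg deprecates in favor of an explicit color-range setting. The pure-Java sketch below shows the size arithmetic (assuming the 1-byte alignment that `avpicture_get_size` uses) and a simple linear limited-to-full stretch per sample; the stretch illustrates the idea and is not swscale's actual fixed-point code. `Yuv420Sketch` is a hypothetical helper.

```java
final class Yuv420Sketch {
    /** Bytes for a tightly packed (alignment 1) 4:2:0 planar image: Y plane plus two chroma planes. */
    static int yuv420pBufferSize(int width, int height) {
        int chromaWidth = (width + 1) / 2;    // chroma is subsampled 2x horizontally, rounded up
        int chromaHeight = (height + 1) / 2;  // and 2x vertically, rounded up
        return width * height + 2 * chromaWidth * chromaHeight;
    }

    /** Linearly stretches a limited-range sample in [lo, hi] to [0, 255], clamping out-of-range input. */
    static int stretch(int sample, int lo, int hi) {
        int d = hi - lo;
        int x = Math.min(Math.max(sample - lo, 0), d);
        return (x * 255 + d / 2) / d;  // integer division, rounded to nearest
    }

    static int lumaToFullRange(int y) {
        return stretch(y, 16, 235);
    }

    static int chromaToFullRange(int c) {
        return stretch(c, 16, 240);
    }

    public static void main(String[] args) {
        System.out.println(yuv420pBufferSize(1920, 1080));                     // 3110400
        System.out.println(lumaToFullRange(16) + " " + lumaToFullRange(235)); // 0 255
        System.out.println(chromaToFullRange(128));                           // 128
    }
}
```

In the project, the whole-image conversion is delegated to `sws_scale`: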

```java
/**
 * Convert an image from YUV420P to YUVJ420P
 * @param pCodecCtx decoding context
 * @param sourceFrame source frame
 * @return the converted frame and its corresponding data pointer
 */
private static FrameData YUV420PToYUVJ420P(AVCodecContext pCodecCtx, AVFrame sourceFrame) {
    // Allocate a frame to hold the YUVJ420P result (despite its name, pFrameRGB does not hold RGB)
    AVFrame pFrameRGB = av_frame_alloc();

    if (pFrameRGB == null) {
        return null;
    }

    int width = pCodecCtx.width(), height = pCodecCtx.height();

    // Basic frame parameters
    pFrameRGB.width(width);
    pFrameRGB.height(height);
    pFrameRGB.format(AV_PIX_FMT_YUVJ420P);

    // Number of bytes needed for the image in YUVJ420P
    int numBytes = avpicture_get_size(AV_PIX_FMT_YUVJ420P, width, height);

    // Allocate the memory
    BytePointer buffer = new BytePointer(av_malloc(numBytes));

    // Initialize the image conversion context
    SwsContext sws_ctx = sws_getContext(width, height, pCodecCtx.pix_fmt(), width, height, AV_PIX_FMT_YUVJ420P, SWS_BICUBIC, null, null, (DoublePointer) null);

    // Point pFrameRGB's data pointers at the memory just allocated (buffer)
    avpicture_fill(new avcodec.AVPicture(pFrameRGB), buffer, AV_PIX_FMT_YUVJ420P, width, height);

    // Convert the decoded YUV420P image to YUVJ420P
    sws_scale(sws_ctx, sourceFrame.data(), sourceFrame.linesize(), 0, height, pFrameRGB.data(), pFrameRGB.linesize());

    // Free the conversion context right away
    sws_freeContext(sws_ctx);

    // Pack the AVFrame and BytePointer into FrameData; both must be released explicitly later
    return new FrameData(pFrameRGB, buffer);
}
```

- Next is another very important method, <font color="blue">saveImg</font>, which is a typical encode-and-output process. We have already seen how to open a media stream, demux it, and decode it; now let's see how to produce a media stream, which involves encoding, muxing, and output:
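Muxing follows a fixed order: the container header is written before any packet, and the trailer after the last one (`avformat_write_header`, `av_write_frame`, and `av_write_trailer` in `saveImg`). The hypothetical `MuxerContract` class below is a pure-Java model of only that ordering rule; it rejects out-of-order calls and says nothing else about FFmpeg's behavior.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of the muxer call order: header, then frames, then trailer. */
final class MuxerContract {
    private enum State { NEW, HEADER_WRITTEN, TRAILER_WRITTEN }

    private State state = State.NEW;
    private final List<String> calls = new ArrayList<>();

    void writeHeader() {
        require(State.NEW, "avformat_write_header");
        state = State.HEADER_WRITTEN;
    }

    void writeFrame() {
        require(State.HEADER_WRITTEN, "av_write_frame");
    }

    void writeTrailer() {
        require(State.HEADER_WRITTEN, "av_write_trailer");
        state = State.TRAILER_WRITTEN;
    }

    List<String> calls() {
        return calls;
    }

    /** Records the call if the muxer is in the expected state, otherwise throws. */
    private void require(State expected, String call) {
        if (state != expected) {
            throw new IllegalStateException(call + " called in state " + state);
        }
        calls.add(call);
    }

    public static void main(String[] args) {
        MuxerContract muxer = new MuxerContract();
        muxer.writeHeader();
        muxer.writeFrame();   // saveImg writes exactly one frame
        muxer.writeTrailer();
        System.out.println(muxer.calls());
        // [avformat_write_header, av_write_frame, av_write_trailer]
    }
}
```

`saveImg` follows this order, with `avcodec_encode_video2` producing the packet between writing the header and writing the frame: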

```java
/**
 * Save the given frame as an image at the given location
 * @param pFrame
 * @param out_file
 * @return a negative value on failure
 */
private int saveImg(avutil.AVFrame pFrame, String out_file) {
    // Set FFmpeg's log level to ERROR to suppress most unnecessary console messages
    av_log_set_level(AV_LOG_ERROR);

    AVPacket pkt = null;
    AVStream pAVStream = null;
    int width = pFrame.width(), height = pFrame.height();

    // Allocate the AVFormatContext object
    avformat.AVFormatContext pFormatCtx = avformat_alloc_context();

    // Set the output format (this determines the container)
    pFormatCtx.oformat(av_guess_format("mjpeg", null, null));

    if (pFormatCtx.oformat() == null) {
        log.error("Failed to set the output container format");
        avformat_free_context(pFormatCtx);
        return -1;
    }

    try {
        // Create an AVIOContext for the output URL
        avformat.AVIOContext pb = new avformat.AVIOContext();

        // Open the output file
        if (avio_open(pb, out_file, AVIO_FLAG_READ_WRITE) < 0) {
            log.error("Failed to open the output file");
            return -1;
        }

        // The protocol layer wraps the container: associate the format context with the I/O (protocol) context
        pFormatCtx.pb(pb);

        // Create a new stream
        pAVStream = avformat_new_stream(pFormatCtx, null);

        if (pAVStream == null) {
            log.error("Failed to add a new stream to the media file");
            return -1;
        }

        int codec_id = pFormatCtx.oformat().video_codec();

        // Set the stream's parameters
        avcodec.AVCodecContext pCodecCtx = pAVStream.codec();
        pCodecCtx.codec_id(codec_id);
        pCodecCtx.codec_type(AVMEDIA_TYPE_VIDEO);
        pCodecCtx.pix_fmt(AV_PIX_FMT_YUVJ420P);
        pCodecCtx.width(width);
        pCodecCtx.height(height);
        pCodecCtx.time_base().num(1);
        pCodecCtx.time_base().den(25);

        // Print media information
        av_dump_format(pFormatCtx, 0, out_file, 1);

        // Find the encoder
        avcodec.AVCodec pCodec = avcodec_find_encoder(codec_id);

        if (pCodec == null) {
            log.error("Failed to find the encoder");
            return -1;
        }

        // Initialize the encoding context with the encoder
        if (avcodec_open2(pCodecCtx, pCodec, (PointerPointer<Pointer>) null) < 0) {
            log.error("Failed to initialize the encoding context");
            return -1;
        }

        // Packet that will receive the encoded output
        pkt = new avcodec.AVPacket();

        // Allocate an output buffer of width * height * 3 bytes
        if (av_new_packet(pkt, width * height * 3) < 0) {
            return -1;
        }

        int[] got_picture = { 0 };

        // Write the stream header to the output file
        if (avformat_write_header(pFormatCtx, (PointerPointer<Pointer>) null) < 0) {
            log.error("Failed to write the file header");
            return -1;
        }

        // Encode the frame
        if (avcodec_encode_video2(pCodecCtx, pkt, pFrame, got_picture) < 0) {
            log.error("Failed to encode the frame into a packet");
            return -1;
        }

        // Write one frame
        if ((av_write_frame(pFormatCtx, pkt)) < 0) {
            log.error("Failed to write the frame");
            return -1;
        }

        // Write the file trailer
        if (av_write_trailer(pFormatCtx) < 0) {
            log.error("Failed to write the file trailer");
            return -1;
        }

        return 0;
    } finally {
        // Clean up; pAVStream is still null if we returned before creating the stream
        release(false, pkt, pFormatCtx.pb(), null == pAVStream ? null : pAVStream.codec(), pFormatCtx);
    }
}
```

- The last piece is releasing resources. Note that different objects are released with different APIs, and even AVFormatContext is released differently for input and output; using the wrong API will crash the program. The release method is called twice in total, because both the resources used to open the input media stream and those used to write the output must be released at the end:

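```java
/**
 * Release resources: data first, then contexts
 * @param isInput true for the input (demuxing) side, false for the output (muxing) side
 * @param pkt packet to free, may be null
 * @param pb I/O (protocol) context to close, may be null
 * @param pCodecCtx codec context to close, may be null
 * @param pFormatCtx format (container) context to free, may be null
 * @param ptrs other pointers (frames, buffers) to free with av_free
 */
private void release(boolean isInput, AVPacket pkt, AVIOContext pb, AVCodecContext pCodecCtx, AVFormatContext pFormatCtx, Pointer... ptrs) {
    if (null != pkt) {
        av_free_packet(pkt);
    }

    // Data produced along the way (frames, buffers), passed as an array: free each one
    if (null != ptrs) {
        Arrays.stream(ptrs).forEach(avutil::av_free);
    }

    // Codec context
    if (null != pCodecCtx) {
        avcodec_close(pCodecCtx);
    }

    // Protocol (I/O) context
    if (null != pb) {
        avio_close(pb);
    }

    // Format (container) context: input and output contexts need different APIs
    if (null != pFormatCtx) {
        if (isInput) {
            avformat_close_input(pFormatCtx);
        } else {
            avformat_free_context(pFormatCtx);
        }
    }
}
```

- Finally, write a main method that calls openMediaAndSaveImage with the address of the media stream and the path to save the image: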
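The image path is built from the current time. Two practical points, shown in the sketch below: `SimpleDateFormat` is not thread-safe, so the immutable `java.time.format.DateTimeFormatter` is the safer choice if this code ever runs on several threads; and FFmpeg's `avio_open` does not create missing directories, so creating the target folder first avoids a failed open of the output file. `OutputPathSketch` is a hypothetical helper, not part of the project.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

final class OutputPathSketch {
    // Same pattern as the SimpleDateFormat in main, but DateTimeFormatter is immutable and thread-safe
    private static final DateTimeFormatter NAME = DateTimeFormatter.ofPattern("yyyyMMddHHmmss");

    /** Returns dir/<timestamp>.jpg, creating dir (and its parents) if it does not exist yet. */
    static Path jpgPath(Path dir, LocalDateTime time) throws IOException {
        Files.createDirectories(dir);
        return dir.resolve(NAME.format(time) + ".jpg");
    }

    public static void main(String[] args) throws IOException {
        Path dir = Paths.get(System.getProperty("java.io.tmpdir"), "ffmpeg-basic-save");
        Path out = jpgPath(dir, LocalDateTime.of(2021, 7, 25, 18, 28, 35));
        System.out.println(out.getFileName()); // 20210725182835.jpg
        // out.toString() could then be passed as out_file to openMediaAndSaveImage
    }
}
```

The project's own `main` uses a fixed Windows directory instead: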

```java
public static void main(String[] args) throws Exception {
    // CCTV13, 1920x1080; unstable, so retry a few times if opening fails
    String url = "http://ivi.bupt.edu.cn/hls/cctv13hd.m3u8";

    // Anhui TV, 1024x576; relatively stable
    // String url = "rtmp://58.200.131.2:1935/livetv/ahtv";

    // Local video file; change this to a file on your own machine
    // String url = "E:\\temp\\202107\\24\\test.mp4";

    // Full path of the saved image; the file name is the current date and time (yyyyMMddHHmmss)
    String localPath = "E:\\temp\\202107\\24\\save\\" + new SimpleDateFormat("yyyyMMddHHmmss").format(new Date()) + ".jpg";

    // Start
    new Stream2Image().openMediaAndSaveImage(url, localPath);
}
```

- All of the code above is in <font color="red">Stream2Image.java</font> in the sub-project <font color="blue">ffmpeg-basic</font>. Run the main method and the console prints the following, showing that the media stream was opened successfully along with detailed media information:

```text
18:28:35.553 [main] INFO com.bolingcavalry.basic.Stream2Image - Opening media stream [http://ivi.bupt.edu.cn/hls/cctv13hd.m3u8]
18:28:37.062 [main] INFO com.bolingcavalry.basic.Stream2Image - The video stream is at index [0] of the stream array (0-based)
18:28:37.219 [main] INFO com.bolingcavalry.basic.Stream2Image - Operation succeeded
[hls,applehttp @ 00000188548ab140] Opening 'http://ivi.bupt.edu.cn/hls/cctv13hd-1627208880000.ts' for reading
[hls,applehttp @ 00000188548ab140] Opening 'http://ivi.bupt.edu.cn/hls/cctv13hd-1627208890000.ts' for reading
[NULL @ 000001887ba68bc0] non-existing SPS 0 referenced in buffering period
[NULL @ 000001887ba68bc0] SPS unavailable in decode_picture_timing
[h264 @ 000001887ba6aa80] non-existing SPS 0 referenced in buffering period
[h264 @ 000001887ba6aa80] SPS unavailable in decode_picture_timing
Input #0, hls,applehttp, from 'http://ivi.bupt.edu.cn/hls/cctv13hd.m3u8':
Duration: N/A, start: 1730.227267, bitrate: N/A
Program 0
Metadata:
variant_bitrate : 0
Stream #0:0: Video: h264 (Main) ([27][0][0][0] / 0x001B), yuv420p, 1920x1080 [SAR 1:1 DAR 16:9], 25 fps, 25 tbr, 90k tbn, 50 tbc
Metadata:
variant_bitrate : 0
Stream #0:1: Audio: aac (LC) ([15][0][0][0] / 0x000F), 48000 Hz, 5.1, fltp
Metadata:
variant_bitrate : 0
[swscaler @ 000001887cb28bc0] deprecated pixel format used, make sure you did set range correctly
Process finished with exit code 0
```

- Go to the directory where the image is saved and confirm that it has been generated.
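Besides checking the folder by eye, the result can be verified programmatically: a JPEG file starts with the SOI marker `FF D8`, ends with the EOI marker `FF D9`, and its frame header (SOF segment) records the image size, which should match the 1920x1080 reported in the media dump above. The sketch below assumes a plain JPEG without fill bytes between segments; `JpegCheck` is a hypothetical helper, not part of the project.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

final class JpegCheck {
    /** True if the data starts with the SOI marker (FF D8) and ends with the EOI marker (FF D9). */
    static boolean looksLikeJpeg(byte[] b) {
        return b.length >= 4
                && (b[0] & 0xFF) == 0xFF && (b[1] & 0xFF) == 0xD8
                && (b[b.length - 2] & 0xFF) == 0xFF && (b[b.length - 1] & 0xFF) == 0xD9;
    }

    /**
     * Walks the marker segments after SOI and returns {width, height} from the first
     * SOF0/SOF1/SOF2 segment, or null if scan data (SOS) starts first or the data is malformed.
     */
    static int[] dimensions(byte[] b) {
        int i = 2; // skip SOI
        while (i + 4 <= b.length) {
            if ((b[i] & 0xFF) != 0xFF) {
                return null; // expected a marker here
            }
            int marker = b[i + 1] & 0xFF;
            if (marker == 0xDA) {
                return null; // SOS reached without a frame header
            }
            // Segment length is big-endian and counts its own two bytes, but not the marker
            int length = ((b[i + 2] & 0xFF) << 8) | (b[i + 3] & 0xFF);
            if (marker >= 0xC0 && marker <= 0xC2 && i + 9 <= b.length) {
                // SOF layout after the length: precision (1 byte), height (2 bytes), width (2 bytes), ...
                int height = ((b[i + 5] & 0xFF) << 8) | (b[i + 6] & 0xFF);
                int width = ((b[i + 7] & 0xFF) << 8) | (b[i + 8] & 0xFF);
                return new int[] {width, height};
            }
            i += 2 + length;
        }
        return null;
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.out.println("usage: JpegCheck <path-to-jpg>");
            return;
        }
        byte[] bytes = Files.readAllBytes(Paths.get(args[0]));
        int[] size = dimensions(bytes);
        System.out.println("JPEG markers ok: " + looksLikeJpeg(bytes)
                + ", size: " + (size == null ? "unknown" : size[0] + "x" + size[1]));
    }
}
```

Run it with the generated file's path as the only argument.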

- This completes the hands-on exercise of decoding a media stream and saving an image in Java. We now have a basic understanding of the commonly used FFmpeg functions wrapped by JavaCPP, and know the usual routines for decoding, encoding, and image processing. Later, when using JavaCV's utility classes, we will also understand their basic internals, which is a real advantage when troubleshooting, optimizing performance, and digging deeper.

You are not alone; Xin Chen is with you all the way

- Java series

- Spring series

- Docker series

- Kubernetes series

- Database + middleware series

- DevOps series

Welcome to follow my WeChat official account: Programmer Xin Chen

Search for "Programmer Xin Chen" on WeChat. I am Xin Chen, and I look forward to exploring the Java world with you...

