
Text | Wei Xikai (Technical Expert, Ant Group)

This article is 6,320 words long and takes about 10 minutes to read.


Rust is memory-safe and memory leaks are rare in it. Even so, allocating memory sensibly is a problem every complex application has to face. The same code can use memory very differently under different workloads. As a result, there is a real chance that memory usage becomes excessive, or grows gradually and is never released.

In this article I share the excessive-memory problems we ran into in practice. I sort them into a few simple categories and describe the methods we use to troubleshoot and locate them in production, for your reference.

This article was first published in the Rust Magazine Chinese Monthly:

(https://rustmagazine.github.io/rust_magazine_2021/chapter_5/rust-memory-troubleshootting.html)

Memory allocator

First of all, in production we usually do not use the default memory allocator (malloc). We use jemalloc instead, which offers better multi-core performance and does a better job of avoiding memory fragmentation (see [1] for details). The Rust ecosystem has many excellent wrapper libraries for jemalloc. Rather than debating which one is best, we focus here on how to use jemalloc's analysis capabilities to diagnose memory problems.

According to the jemalloc documentation [2], jemalloc provides sampling-based memory profiling. Through mallctl you can set two options: prof.active, which switches memory profiling on and off at runtime, and prof.dump, which writes out the current memory profile.

Memory grows rapidly until OOM

This usually happens when the same code meets a different business scenario and some input (typically a large volume of data) makes the program's memory grow rapidly.

With the memory profiling capability described above, rapid memory growth is actually easy to deal with. We turn profiling on while memory is growing, dump the profile after a while, and visualize it with the corresponding tools. The result shows clearly which functions were called and which structures the memory was allocated for.

There are two cases here, though: the problem can be reproduced, or it is hard to reproduce. They are handled differently, and below is a workable approach for each.

Reproducible

A reproducible scenario is actually the easiest to solve. We can turn profiling on dynamically while reproducing it and collect a large amount of allocation information in a short time.

Here is a complete demo showing how to do dynamic memory profiling in a Rust application.

In this article I use three crates for memory profiling: jemalloc-sys, jemallocator and jemalloc-ctl. Their roles are:

- jemalloc-sys: builds jemalloc and exposes its raw bindings.

- jemallocator: implements Rust's GlobalAlloc trait on top of jemalloc so that it can replace the default memory allocator [3].

- jemalloc-ctl: wraps mallctl, which can be used for tuning, for configuring the allocator dynamically, and for reading the allocator's statistics.

The following are the dependencies of the demo project:

```toml
[dependencies]
jemallocator = "0.3.2"
jemalloc-ctl = "0.3.2"

[dependencies.jemalloc-sys]
version = "0.3.2"
features = ["stats", "profiling", "unprefixed_malloc_on_supported_platforms"]

[profile.release]
debug = true
```

The most important part is enabling the profiling feature of jemalloc-sys, because without it the profiling steps that follow will fail. The debug = true setting keeps debug information in the release build, so the profile can be mapped back to readable symbols. Note also that the demo runs on Linux.

The demo's src/main.rs is as follows:

```rust
use jemalloc_ctl::{Access, AsName};
use std::collections::HashMap;

// Replace the default global allocator with jemalloc.
#[global_allocator]
static ALLOC: jemallocator::Jemalloc = jemallocator::Jemalloc;

// mallctl keys and the dump path must be NUL-terminated byte strings.
const PROF_ACTIVE: &[u8] = b"prof.active\0";
const PROF_DUMP: &[u8] = b"prof.dump\0";
const PROFILE_OUTPUT: &[u8] = b"profile.out\0";

/// Switches memory profiling (sampling) on or off at runtime via prof.active.
fn set_prof_active(active: bool) {
    let name = PROF_ACTIVE.name();
    name.write(active).expect("Should succeed to set prof");
}

/// Writes the current memory profile to PROFILE_OUTPUT via prof.dump.
fn dump_profile() {
    let name = PROF_DUMP.name();
    name.write(PROFILE_OUTPUT).expect("Should succeed to dump profile");
}

fn main() {
    set_prof_active(true);

    let mut buffers: Vec<HashMap<i32, i32>> = Vec::new();
    for _ in 0..100 {
        buffers.push(HashMap::with_capacity(1024));
    }

    set_prof_active(false);
    dump_profile();
}
```

The demo is already heavily simplified. The main point to explain is the following:

set_prof_active and dump_profile call jemalloc's mallctl through jemalloc-ctl, writing a value to the appropriate key. They write a Boolean to prof.active to switch profiling on or off, and the dump file path to prof.dump.

Once compiled, the program cannot be run directly. Memory profiling must first be enabled through an environment variable:

```shell
export MALLOC_CONF=prof:true
```
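Since the demo cannot run without this setting, the application could check for it up front and print a clear hint instead of failing inside set_prof_active. The sketch below uses only the standard library, and its helpers (parse_malloc_conf, prof_enabled and prof_enabled_from_env) are hypothetical names, not part of any crate. It assumes the key:value,key:value format and that a later option overrides an earlier one. It inspects only the MALLOC_CONF variable of the current process, and jemalloc can also take options from other sources, so treat it as a convenience check rather than a guarantee.

```rust
/// Splits a MALLOC_CONF-style string ("key:value,key:value") into pairs,
/// skipping entries without a ':' separator.
pub fn parse_malloc_conf(conf: &str) -> Vec<(&str, &str)> {
    conf.split(',')
        .filter_map(|opt| {
            let mut kv = opt.splitn(2, ':');
            let key = kv.next()?.trim();
            let value = kv.next()?.trim();
            Some((key, value))
        })
        .collect()
}

/// True if the last "prof" entry is "true" (later options win).
pub fn prof_enabled(conf: &str) -> bool {
    parse_malloc_conf(conf)
        .iter()
        .rev()
        .find(|(key, _)| *key == "prof")
        .map_or(false, |&(_, value)| value == "true")
}

/// Checks only the MALLOC_CONF environment variable of this process.
pub fn prof_enabled_from_env() -> bool {
    std::env::var("MALLOC_CONF")
        .map(|conf| prof_enabled(&conf))
        .unwrap_or(false)
}
```

For example, main could call prof_enabled_from_env() before set_prof_active(true) and exit with a message that names the missing setting.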

Run the program again and it writes a memory profile file, whose name is hard-coded in the demo as profile.out. It is a text file and hard to read directly, since it contains no human-readable symbols.

A tool such as jeprof can convert it directly into a graph:

```shell
jeprof --show_bytes --pdf <path_to_binary> ./profile.out > ./profile.pdf
```

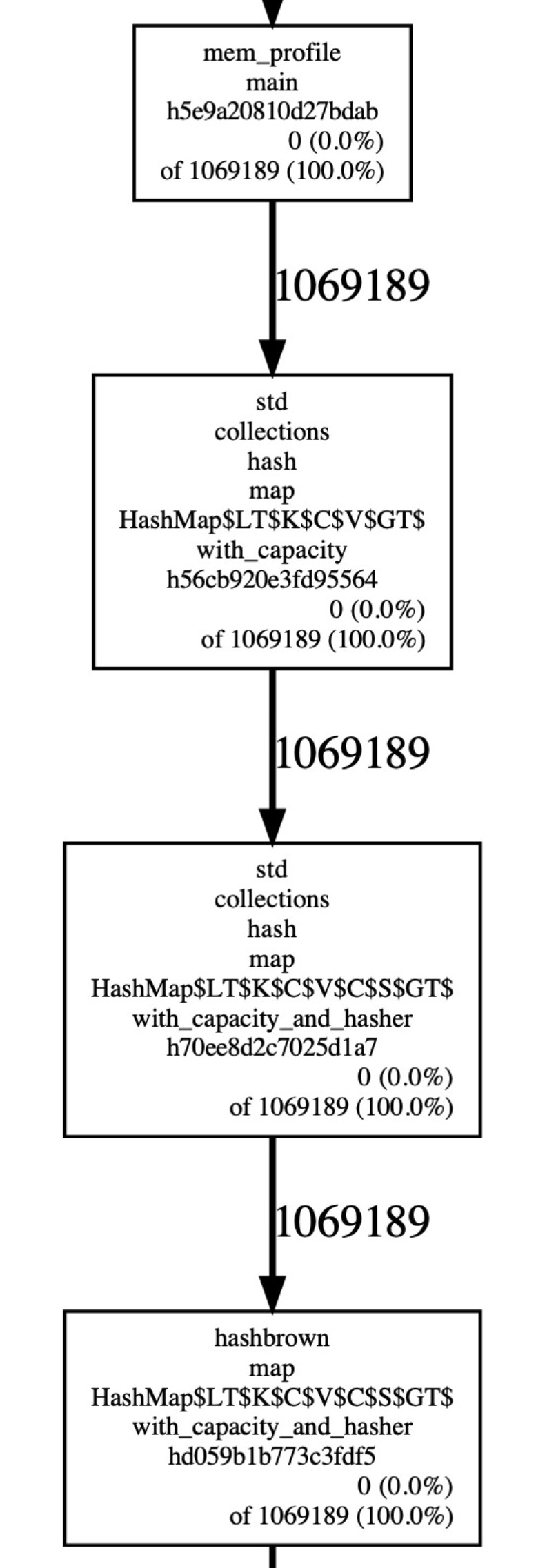

In this way, it can be visualized. From the figure below, we can clearly see all memory sources:

That completes the demo. Using it in production takes only some routine extra work. Here is what we did in production:

- Wrap it in an HTTP service, so that profiling can be triggered directly with a curl command and the result is returned in the HTTP response.

- Support setting profile duration.

- Handle situations where profiles are triggered concurrently.
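A minimal sketch of the duration and concurrency points, using only the standard library and assuming a single process-wide flag is enough. A compare-and-swap on an AtomicBool lets only one request profile at a time. The duration is simply a sleep between switching profiling on and off. run_profile, ProfileError and the set_active/dump closures are hypothetical names; in the demo's setting the closures would wrap set_prof_active and dump_profile. The HTTP layer is left out.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::thread;
use std::time::Duration;

/// Set while a profiling session is in progress.
static PROFILING: AtomicBool = AtomicBool::new(false);

#[derive(Debug, PartialEq)]
pub enum ProfileError {
    /// Another request is already profiling.
    AlreadyRunning,
}

/// Clears the flag when dropped, even if `dump` panics.
struct ResetOnDrop;

impl Drop for ResetOnDrop {
    fn drop(&mut self) {
        PROFILING.store(false, Ordering::Release);
    }
}

/// Profiles for `duration`, then dumps. A concurrent caller gets
/// AlreadyRunning instead of interleaving with the running session.
pub fn run_profile<A, D>(duration: Duration, set_active: A, dump: D) -> Result<(), ProfileError>
where
    A: Fn(bool),
    D: FnOnce(),
{
    // Only one caller can switch the flag from false to true.
    if PROFILING
        .compare_exchange(false, true, Ordering::AcqRel, Ordering::Acquire)
        .is_err()
    {
        return Err(ProfileError::AlreadyRunning);
    }
    let _reset = ResetOnDrop;

    set_active(true);
    thread::sleep(duration);
    set_active(false);
    dump();
    Ok(())
}
```

Rejecting a second request instead of queueing it keeps two callers from toggling prof.active underneath each other and from overwriting the same dump file. An HTTP handler could map AlreadyRunning to an error response.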

There is one more advantage of this scheme that has not been mentioned yet: it is dynamic. Turning on memory profiling inevitably has some performance cost, though not a large one here. We would naturally rather keep it off when nothing is wrong, and this dynamic switch makes that practical.

Difficult to reproduce

Problems that reproduce reliably are not really the hard part. In production, the most troublesome problems are those that are hard to reproduce. They are like time bombs: the conditions that trigger them are very demanding and the cause is hard to pin down, yet the problem can suddenly appear.

For hard-to-reproduce problems, the general idea is to prepare in advance so the scene is preserved. When a problem occurs the service is affected, but the state at the moment of failure is saved. For excessive memory usage, one good option is to generate a coredump on OOM.

However, we did not use coredumps in our production practice. Production servers often have a lot of memory, so the resulting coredumps are also very large. Generating one takes a long time and delays an immediate restart. Analyzing, transferring and storing them is also inconvenient.

Here is the solution we adopted in production. It is also very simple: jemalloc can output the memory profiles for us indirectly.

When starting a long-running program that uses jemalloc, set jemalloc's parameters through the environment variable:

```shell
export MALLOC_CONF=prof:true,lg_prof_interval:30
```

The added lg_prof_interval:30 makes jemalloc dump a memory profile, on average, every 1 GiB (2^30 bytes) of allocation activity. The value can be changed as needed; 30 is only an example. Over time, if memory allocation suddenly surges past this threshold, the corresponding profiles are generated. When a problem occurs, we can use the files' creation times to see what memory allocation happened at that moment.
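The arithmetic behind lg_prof_interval is a power of two. The helpers below are hypothetical names that use only the standard library. They convert between a byte threshold and the lg value and build the matching MALLOC_CONF string, assuming the value stays below 64.

```rust
/// Average bytes of allocation activity between interval-triggered dumps.
/// jemalloc treats lg_prof_interval as log base 2; it must be below 64 here.
pub fn prof_interval_bytes(lg_prof_interval: u32) -> u64 {
    1u64 << lg_prof_interval
}

/// Smallest lg_prof_interval whose interval is at least `bytes`.
/// `bytes` must not exceed 2^63, or next_power_of_two overflows.
pub fn lg_for_at_least(bytes: u64) -> u32 {
    // Round up to a power of two; its exponent equals its trailing zeros.
    bytes.next_power_of_two().trailing_zeros()
}

/// Builds a MALLOC_CONF value enabling profiling with interval dumps.
pub fn malloc_conf_with_interval(lg_prof_interval: u32) -> String {
    format!("prof:true,lg_prof_interval:{}", lg_prof_interval)
}
```

For example, a target of roughly 1 GB (1_000_000_000 bytes) rounds up to lg_prof_interval:30, the value used above. The resulting string could be passed to a child process with std::process::Command::env("MALLOC_CONF", ...).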

Memory grows slowly and is not released

Unlike rapid growth, here overall memory usage looks stable, but over time it keeps growing slowly and steadily. With the methods above, it is hard to find out where the memory is being used.

This is also one of the hardest problems we have encountered in production. Unlike the drastic changes above, we no longer care about allocation events that have already happened. What matters is how memory is distributed right now. In Rust, which has no GC, observing the current program's memory distribution is not easy, especially without affecting production.

Our practice in production for this situation is as follows:

- Manually release the memory of some structures (often caches).

- Observe the memory change before and after (how much memory was released) to determine the memory size of each module.

jemalloc's statistics give us the current memory usage. We can therefore repeatedly release the memory of a specified module, measure how much was released, and so determine how memory is distributed.

The drawback of this approach is also obvious: the modules checked for memory usage have to be known in advance, so you cannot find memory hogs you do not already know about. This flaw is acceptable, because we usually know which parts of a program are likely to use a lot of memory.

Below is a demo project that can be adapted for production use.

The following are the dependencies of the demo project:

```toml
[dependencies]
jemallocator = "0.3.2"
jemalloc-ctl = "0.3.2"

[dependencies.jemalloc-sys]
version = "0.3.2"
features = ["stats", "profiling", "unprefixed_malloc_on_supported_platforms"]

[profile.release]
debug = true
```

The demo's src/main.rs:

```rust
use jemalloc_ctl::{epoch, stats};

#[global_allocator]
static ALLOC: jemallocator::Jemalloc = jemallocator::Jemalloc;

/// Simulates a cache module holding 1 MiB of memory.
fn alloc_cache() -> Vec<i8> {
    let mut v = Vec::with_capacity(1024 * 1024);
    v.push(0i8);
    v
}

fn main() {
    let cache_0 = alloc_cache();
    let cache_1 = alloc_cache();

    let e = epoch::mib().unwrap();
    let allocated_stats = stats::allocated::mib().unwrap();

    // Statistics are a cached snapshot; advance the epoch before every read.
    e.advance().unwrap();
    let mut heap_size = allocated_stats.read().unwrap();

    // Release cache_0 and measure how much the allocated size dropped.
    drop(cache_0);
    e.advance().unwrap();
    let new_heap_size = allocated_stats.read().unwrap();
    println!("cache_0 size:{}B", heap_size - new_heap_size);
    heap_size = new_heap_size;

    // Same for cache_1.
    drop(cache_1);
    e.advance().unwrap();
    let new_heap_size = allocated_stats.read().unwrap();
    println!("cache_1 size:{}B", heap_size - new_heap_size);
    heap_size = new_heap_size;

    println!("current heap size:{}B", heap_size);
}
```

This demo is a bit longer than the previous one, but the idea is very simple. One note on using jemalloc-ctl: call epoch.advance() before reading new statistics, because the statistics are refreshed only when the epoch advances.
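Why the epoch matters can be shown with a toy model. The sketch below is not jemalloc's implementation. It only illustrates the behavior the jemalloc documentation describes for the epoch key: reads return a cached snapshot, and advancing the epoch refreshes it. EpochStats and its methods are hypothetical names.

```rust
use std::cell::Cell;

/// Toy model of epoch-based statistics: `allocated` returns a snapshot,
/// and only `advance` refreshes it from the live source.
pub struct EpochStats<F: Fn() -> u64> {
    live: F,
    snapshot: Cell<u64>,
    epoch: Cell<u64>,
}

impl<F: Fn() -> u64> EpochStats<F> {
    pub fn new(live: F) -> Self {
        let initial = live();
        EpochStats { live, snapshot: Cell::new(initial), epoch: Cell::new(1) }
    }

    /// Refreshes the snapshot and returns the new epoch number.
    pub fn advance(&self) -> u64 {
        self.snapshot.set((self.live)());
        self.epoch.set(self.epoch.get() + 1);
        self.epoch.get()
    }

    /// Value as of the last refresh; stale if memory changed since then.
    pub fn allocated(&self) -> u64 {
        self.snapshot.get()
    }
}
```

Reading allocated() right after dropping a cache would still report the old size. Only after advance() does the drop become visible, which is why the demo advances the epoch before each read.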

Here is the output of my run after compiling:

```text
cache_0 size:1048576B
cache_1 size:1038336B
current heap size:80488B
```

Note that the reported size of cache_1 is not exactly 1 MB. This is normal. In general (not specifically for this demo), there are two main reasons:

- Other memory changes are happening while the statistics are being collected.

- jemalloc's statistics are not necessarily exact. For better multi-core performance it cannot rely on globally synchronized statistics, so it gives up some consistency of the statistics in exchange for performance.
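The trade-off in the second point can be illustrated with a generic sharded counter. This standard-library sketch shows the technique in general, not jemalloc's code. Each writer adds to its own shard, and a reader sums the shards one at a time, so a total read during concurrent updates is only approximate. ShardedCounter is a hypothetical name, and a production version would also pad the shards onto separate cache lines, which this sketch omits.

```rust
use std::sync::atomic::{AtomicI64, Ordering};

/// Per-shard byte counter: writers on different shards do not contend,
/// but a reader summing the shards may see a mix of old and new values.
pub struct ShardedCounter {
    shards: Vec<AtomicI64>,
}

impl ShardedCounter {
    pub fn new(num_shards: usize) -> Self {
        ShardedCounter { shards: (0..num_shards).map(|_| AtomicI64::new(0)).collect() }
    }

    /// Records `delta` bytes (negative for frees) on one shard. A shard can go
    /// negative when memory allocated via one shard is freed via another.
    pub fn record(&self, shard: usize, delta: i64) {
        self.shards[shard % self.shards.len()].fetch_add(delta, Ordering::Relaxed);
    }

    /// Sums shard by shard; not a consistent snapshot under concurrent writes.
    pub fn approximate_total(&self) -> i64 {
        self.shards.iter().map(|s| s.load(Ordering::Relaxed)).sum()
    }
}
```

Once all writers have finished, the total is exact. Only reads taken while updates are in flight can be off, which matches the small discrepancy seen in the demo.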

This inaccuracy does not get in the way of locating excessive memory usage, though. The memory being released is usually large, and small disturbances do not affect the final result.

There is also a simpler approach: release the cache and watch the machine's memory change directly. Keep in mind, however, that freed memory is not necessarily returned to the OS immediately, and watching by eye is tiring. A better approach is to integrate this memory-distribution check into your Rust application.
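Integrating the check into the application could look like the sketch below, a generalization of the demo above under a few assumptions. Releasable and measure_releases are hypothetical names, and each module is assumed to expose a closure that frees its memory. read_allocated must return up-to-date allocated bytes; with jemalloc-ctl that means advancing the epoch and then reading stats.allocated. Deltas are signed because other threads may allocate while the check runs.

```rust
/// A module whose memory can be released on demand, such as a cache.
pub struct Releasable<'a> {
    pub name: &'a str,
    pub release: Box<dyn FnOnce() + 'a>,
}

/// Releases each module in turn and records how far the allocated-bytes
/// reading dropped after each release.
pub fn measure_releases<'a, F>(mut read_allocated: F, modules: Vec<Releasable<'a>>) -> Vec<(&'a str, i64)>
where
    F: FnMut() -> u64,
{
    let mut results = Vec::with_capacity(modules.len());
    let mut before = read_allocated();
    for module in modules {
        (module.release)();
        let after = read_allocated();
        // Signed: concurrent allocations elsewhere can make a delta negative.
        results.push((module.name, before as i64 - after as i64));
        before = after;
    }
    results
}
```

Releasing modules one at a time and re-reading after each release attributes each drop to a single module, as the demo does for cache_0 and cache_1.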

Other general solutions

Metrics

Another very effective approach, which we have used all along, is to record the size of large allocations as a metric when they happen, for later collection and observation.

The overall plan is as follows:

- Use a Prometheus client to record allocated memory (application-level statistics)

- Expose a metrics endpoint

- Configure a Prometheus server to pull the metrics

- Configure Grafana with the Prometheus server as a data source for visualization
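The first step, application-level accounting, might look like the following. The sketch uses only the standard library. MemoryGauge and BlockCache are hypothetical types, the gauge stands in for a Prometheus client gauge, and render writes the Prometheus text exposition format by hand. It records Vec capacity rather than length, because capacity is what the allocator actually handed out.

```rust
use std::sync::atomic::{AtomicI64, Ordering};

/// Bytes held by one module, exported as a gauge.
pub struct MemoryGauge {
    name: &'static str,
    bytes: AtomicI64,
}

impl MemoryGauge {
    pub const fn new(name: &'static str) -> Self {
        MemoryGauge { name, bytes: AtomicI64::new(0) }
    }
    pub fn add(&self, n: usize) {
        self.bytes.fetch_add(n as i64, Ordering::Relaxed);
    }
    pub fn sub(&self, n: usize) {
        self.bytes.fetch_sub(n as i64, Ordering::Relaxed);
    }
    pub fn get(&self) -> i64 {
        self.bytes.load(Ordering::Relaxed)
    }
    /// Renders the gauge in the Prometheus text exposition format.
    pub fn render(&self) -> String {
        format!("# TYPE {0} gauge\n{0} {1}\n", self.name, self.get())
    }
}

/// A cache of byte blocks that reports its size to a gauge.
pub struct BlockCache<'g> {
    blocks: Vec<Vec<u8>>,
    gauge: &'g MemoryGauge,
}

impl<'g> BlockCache<'g> {
    pub fn new(gauge: &'g MemoryGauge) -> Self {
        BlockCache { blocks: Vec::new(), gauge }
    }
    pub fn insert(&mut self, block: Vec<u8>) {
        self.gauge.add(block.capacity());
        self.blocks.push(block);
    }
    pub fn clear(&mut self) {
        let freed: usize = self.blocks.iter().map(|b| b.capacity()).sum();
        self.blocks.clear();
        self.gauge.sub(freed);
    }
}
```

A metrics endpoint would return the concatenated render() output of all gauges for the Prometheus server to scrape.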

Memory troubleshooting tools

While investigating excessive memory usage, we also tried other powerful tools such as heaptrack and valgrind. They share a major drawback, however: very high overhead.

Generally speaking, running an application under such tools in production is basically impossible.

For this reason, we rarely use them to troubleshoot memory problems in production.

Summary

Although Rust helps us avoid memory leaks, I think many programs that run for a long time in production are still quite likely to run into excessive memory usage. This article shared several high-memory-usage scenarios we encountered in production. It also covered some general troubleshooting approaches that locate problems quickly without disrupting normal service. I hope it brings you some inspiration and help.

There are surely other memory problems we have not encountered yet, and better, more convenient ways to locate and troubleshoot them. Anyone who knows of them is welcome to share.

References

[1] Experimental Study of Memory Allocation for High-Performance Query Processing

[2] jemalloc usage documentation

[3] jemallocator

We are the time-series storage team of Ant Group's intelligent monitoring technology platform. We are using Rust to build a new generation of time-series database with high performance, low cost and real-time analysis capabilities.

You are welcome to join us or recommend candidates.

Please contact: jiachun.fjc@antgroup.com
