1. Basic Concepts of Node

1.1 What is Node

Node.js is an open-source, cross-platform JavaScript runtime environment. It runs the V8 JavaScript engine (the core of Google Chrome) outside the browser and uses an event-driven, non-blocking, asynchronous I/O model to improve performance. In short, Node.js is a server-side, non-blocking, event-driven JavaScript runtime environment.

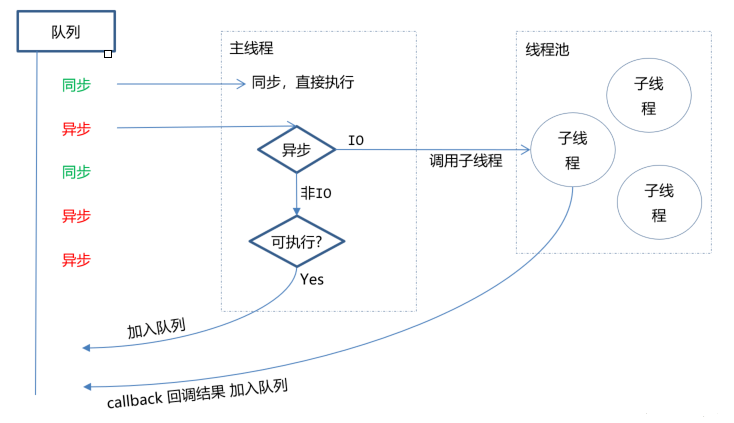

To understand Node, two basic concepts matter: non-blocking asynchronous I/O and the event-driven model.

- Non-blocking asynchronous: Node.js uses a non-blocking I/O mechanism, so the thread is not blocked while an I/O operation is in progress. When the operation completes, the caller is notified in the form of an event. For example, once the code that queries a database has been issued, the code after it runs immediately, and the code that handles the database result is placed in a callback function, which improves the execution efficiency of the program.

- Event-driven: when a new request comes in, it is pushed into an event queue, and a loop then checks the queue for state changes. If a state change is detected, the handler for that event, usually a callback function, is executed. For example, when a file has finished being read, the corresponding state change is triggered and the corresponding callback function processes the result.
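As a rough illustration of the two concepts above, the following sketch issues an asynchronous directory read and records the order in which the code runs. The `order` array and the choice of `fs.readdir` on the current working directory are assumptions made for this demo, not something taken from the original text.

```javascript
const fs = require('fs');

// Records the order in which the steps run.
const order = [];

order.push('before I/O');

// Non-blocking: readdir returns immediately; the callback is queued
// and runs later, once the directory listing is available.
fs.readdir(process.cwd(), (err, files) => {
  if (err) {
    console.error('read failed:', err.message);
    return;
  }
  order.push('I/O callback');
  console.log(order.join(' -> '));
});

// Runs before the callback above, because the main thread is not blocked.
order.push('after I/O');
```

The callback is always the last step to run, even though it appears in the middle of the source file.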

1.2 Application scenarios and disadvantages of Node

1.2.1 Advantages and disadvantages

Node.js is well suited to I/O-intensive applications. Its value is that even when the application is running at its limit, CPU usage stays relatively low, because most of the time is spent on I/O such as disk and memory reads and writes. The disadvantages are as follows:

- Not suitable for CPU-intensive applications

- JavaScript code runs on a single thread, so a single process uses only one CPU core and cannot make full use of a multi-core CPU

- Low reliability: once an error crashes one part of the code, the entire system goes down

For the third point, the commonly used solutions are an Nginx reverse proxy in front of multiple processes bound to different ports, or multiple processes listening on the same port.

1.2.2 Application Scenario

Given these advantages and disadvantages, Node.js is suitable for the following application scenarios:

- I/O-heavy work with little computation. Because Node.js runs JavaScript on a single thread, too much synchronous computation blocks that thread.

- A large number of concurrent I/O operations that do not require very complicated processing inside the application.

- Working with WebSocket to build real-time interactive applications over long-lived connections.

The specific usage scenarios are as follows:

- User form collection system, background management system, real-time interactive system, examination system, networking software, and high-concurrency web applications.

- Multiplayer online games based on web, canvas, etc.

- Web-based multi-user real-time chat clients, chat rooms, and live text-and-image feeds.

- Single-page browser applications.

- Database operations, providing JSON-based APIs for front ends and mobile clients.

2. Global objects in Node

In browser JavaScript, window is the global object, while the global object in Node.js is global.

In Node.js, a variable declared at the outermost level of a file does not become a global variable, because all user code belongs to the current module and is only visible inside that module. It can still be passed outside the module through the exports object. Therefore, variables declared with var in Node.js are not global and only take effect in the current module. The global object mentioned above, by contrast, lives in the global scope, and every global variable, function, or object is a property of it.
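A small sketch of how the global object behaves. The property name `sharedAppName` is made up for this demo. A `var` declared at the top of a module file, by contrast, stays local to that module.

```javascript
// `global` is the Node.js global object; `globalThis` is the standard alias.
const sameObject = global === globalThis;

// Built-in globals are properties of `global`.
const processIsGlobal = global.process === process;

// Explicitly attaching a value to `global` makes it visible in every module.
// `sharedAppName` is a hypothetical name used only for this demo.
global.sharedAppName = 'demo-app';

console.log(sameObject, processIsGlobal, sharedAppName);
```

Attaching values to `global` works, but exporting them from a module is usually easier to trace.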

2.1 Common global objects

The common global objects of Node are as follows:

- Class:Buffer

- process

- console

- clearInterval, setInterval

- clearTimeout, setTimeout

- global

Class:Buffer

Buffer is used to process binary data and data in non-Unicode encodings. The raw data is stored in instances of the Buffer class. A Buffer is similar to an array of integers, but its memory is allocated as raw memory outside the V8 heap. Once a Buffer instance is created, its size cannot be changed.
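A minimal sketch of these properties, using only `Buffer.from`, `Buffer.alloc`, and `buf.write` from the standard Buffer API. The sample strings are arbitrary.

```javascript
// Create a Buffer from a string (UTF-8 by default).
const buf = Buffer.from('Hello');

console.log(buf);                 // <Buffer 48 65 6c 6c 6f>
console.log(buf.length);          // 5 (bytes)
console.log(buf[0]);              // 72, the byte value of 'H'
console.log(buf.toString('hex')); // 48656c6c6f

// The size is fixed: data that does not fit is not written.
const fixed = Buffer.alloc(4);    // 4 zero-filled bytes
const written = fixed.write('abcdef');
console.log(written, fixed.toString()); // 4 abcd
```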

process

process represents the process object, which provides information about, and control over, the current process. For example, if we need to pass arguments when running a Node program, we read them from the built-in process object. Suppose we have the following file:

process.argv.forEach((val, index) => {
  console.log(`${index}: ${val}`);
});

When we start the process, we can pass the arguments with the following command:

node index.js arguments...

console

console is mainly used to print to stdout and stderr. The most common use is log output with console.log. To clear the console, use console.clear. To print the call stack at the current point, use console.trace.

clearInterval, setInterval

setInterval is used to set a repeating timer. The syntax is as follows:

setInterval(callback, delay[, ...args])

The callback is executed repeatedly every delay milliseconds, and clearInterval is used to clear the timer.

clearTimeout, setTimeout

Like setInterval, setTimeout sets a timer, but the callback runs only once after the delay. clearTimeout is used to cancel a pending timeout.
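The timer functions above can be sketched as follows. The delays, the `tick` label, and the limit of three runs are arbitrary values chosen for the demo.

```javascript
let ticks = 0;

// Run the callback every 10 ms; extra arguments are passed to the callback.
const timer = setInterval((label) => {
  ticks += 1;
  console.log(`${label} ${ticks}`);
  if (ticks === 3) {
    clearInterval(timer); // stop after three runs
  }
}, 10, 'tick');

// A timeout that is cancelled before it fires never runs its callback.
const pending = setTimeout(() => console.log('never printed'), 50);
clearTimeout(pending);
```

Without the `clearInterval` call the interval would keep the process alive indefinitely.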

global

global is the global namespace object. The process, console, setTimeout, and other objects mentioned above are all properties of global, for example:

console.log(process === global.process) // prints true

2.2 Global objects in the module

In addition to the global objects provided by the system, some objects exist only inside a module and merely look like global variables, as shown below:

- __dirname

- __filename

- exports

- module

- require

__dirname

__dirname returns the path of the directory that contains the current file, without the file name. For example, running node example.js in /Users/mjr prints the following:

console.log(__dirname); // prints: /Users/mjr

__filename

__filename returns the full path of the current file, including the file name. For example, running node example.js in /Users/mjr prints the following:

console.log(__filename); // prints: /Users/mjr/example.js

exports

module.exports is used to export the contents of a module, which can then be accessed with require(). exports is a shorthand reference to module.exports.

exports.name = name;
exports.age = age;
exports.sayHello = sayHello;

require

require is mainly used to import modules, JSON, or local files. Modules can be imported from node_modules. A local module or JSON file can be imported with a relative path, which is resolved against the directory named by __dirname (if defined) or the current working directory.
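To make the module-scoped objects concrete, here is a self-contained sketch that writes a small module to a temporary directory and then loads it with `require`. The file name `person.js`, the exported names, and the temporary directory are all assumptions made for the demo.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Write a tiny module to a temporary directory (demo-only setup).
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'module-demo-'));
const modulePath = path.join(dir, 'person.js');
fs.writeFileSync(
  modulePath,
  [
    "const name = 'Tom';",
    'const sayHello = () => `Hello, ${name}`;',
    'exports.name = name;',
    'exports.sayHello = sayHello;',
    '// __filename and __dirname are scoped to this module',
    'exports.file = __filename;',
    'exports.dir = __dirname;',
  ].join('\n')
);

// require() runs the file once and returns its exports object.
const person = require(modulePath);

console.log(person.sayHello());                         // Hello, Tom
console.log(path.basename(person.file));                // person.js
console.log(path.dirname(person.file) === person.dir);  // true
```

A second `require(modulePath)` returns the same cached object rather than running the file again.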

3. Understanding process

3.1 Basic concepts

A process is the basic unit of resource allocation and scheduling in a computer system. It is the foundation of the operating system's structure and the container for threads. When we start a js file, we actually start a service process. Each process has its own independent address space and data stack, so one process cannot access the variables and data structures of another. Data can only be shared between processes through inter-process communication.

The process object is a global variable in Node that provides information about the current Node.js process and lets us control it.

Since JavaScript is a single-threaded language, there is only one main thread after a file is started with node xxx.

3.2 Common attributes and methods

The common attributes and methods of process are as follows:

- process.env: environment variables; for example, process.env.NODE_ENV is used to obtain the configuration of the project for different environments

- process.nextTick: schedules a callback to run right after the current operation completes; it often comes up when discussing the event loop

- process.pid: the id of the current process

- process.ppid: the id of the parent process of the current process

- process.cwd(): the working directory of the current process

- process.platform: the operating system platform on which the current process is running

- process.uptime(): how long the current process has been running, for example the uptime value shown by the pm2 daemon

- Process events: process.on('uncaughtException', cb) captures uncaught exceptions, and process.on('exit', cb) listens for process exit

- Three standard streams: process.stdout (standard output), process.stdin (standard input), and process.stderr (standard error output)

- process.title: the process name, for the cases where the process needs a specific name
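The attributes listed above can be read as in the sketch below. The `info` object and the `'development'` fallback are assumptions for the demo. NODE_ENV is a convention and is not set by Node itself.

```javascript
// Collect a few commonly used values from the process object.
const info = {
  pid: process.pid,
  ppid: process.ppid,
  cwd: process.cwd(),
  platform: process.platform,
  uptimeSeconds: process.uptime(),
  // NODE_ENV is a convention, not set by Node itself; fall back to a default.
  nodeEnv: process.env.NODE_ENV || 'development',
};

console.log(info);

// Runs synchronously when the process is about to exit.
process.on('exit', (code) => {
  console.log(`exiting with code ${code}`);
});
```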

4. Understanding the fs module

4.1 What is fs

fs (file system) is the file system module, which provides the ability to read and write local files. It is essentially a thin wrapper around POSIX file operations. All file operations in Node are implemented through the fs core module.

Before using it, you need to import the fs module, as follows:

const fs = require('fs');

4.2 File basics

The basic concepts about files are as follows:

- Permission bit (mode)

- Flag

- File descriptor (fd)

4.2.1 Permission bit mode

Permissions are assigned separately to the file owner, the file's group, and other users. There are three types: read, write, and execute, with values 4, 2, and 1 respectively; 0 means no permission. For example, listing files on Linux shows the permission bits as follows:

drwxr-xr-x 1 PandaShen 197121 0 Jun 28 14:41 core
-rw-r--r-- 1 PandaShen 197121 293 Jun 23 17:44 index.md

Of the first ten characters, the first is d for a directory or - for a file. The remaining nine represent the permissions of the owner, the owner's group, and other users, three characters each: read (r), write (w), and execute (x). A - means the permission at that position is not granted.
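The mapping between the numeric values 4, 2, 1 and the rwx letters can be sketched with a small helper. `modeToString` is a hypothetical function written for this example and is not part of the fs module.

```javascript
// Convert the low nine permission bits of a mode into an "rwxr-xr-x" string.
function modeToString(mode) {
  const letters = ['r', 'w', 'x'];
  const values = [4, 2, 1];
  let result = '';
  // Walk owner, group, and others: three octal digits, highest first.
  for (let shift = 6; shift >= 0; shift -= 3) {
    const digit = (mode >> shift) & 0o7;
    values.forEach((value, i) => {
      result += digit & value ? letters[i] : '-';
    });
  }
  return result;
}

console.log(modeToString(0o755)); // rwxr-xr-x
console.log(modeToString(0o644)); // rw-r--r--
```

The two results match the permission strings of the core directory and the index.md file in the listing above.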

4.2.2 Identification bit (flag)

The flag represents the mode in which a file is operated on, such as readable, writable, or both readable and writable. Common values include r (read), w (write), a (append), and r+ (read and write).

4.2.3 File descriptor (fd)

The operating system assigns a numerical identifier called a file descriptor to each open file, and file operations use these file descriptors to identify and track each specific file.

Windows uses a different but conceptually similar mechanism to track resources. For the convenience of users, Node.js abstracts away the differences between operating systems and assigns a numeric file descriptor to every open file.

In Node.js, each newly opened file receives a new file descriptor, and the numbers generally increase. File descriptors usually start from 3, because 0, 1, and 2 are reserved for process.stdin (standard input), process.stdout (standard output), and process.stderr (standard error output).
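A short sketch that ties flags and file descriptors together, using `fs.openSync`, `fs.writeSync`, and `fs.closeSync`. The temporary directory and the file name `demo.txt` are assumptions for the demo.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

const file = path.join(fs.mkdtempSync(path.join(os.tmpdir(), 'fd-demo-')), 'demo.txt');

// 'w' opens for writing, creating the file or truncating it.
const writeFd = fs.openSync(file, 'w');
fs.writeSync(writeFd, 'Hello');
fs.closeSync(writeFd);

// 'a' opens for appending; existing content is kept.
const appendFd = fs.openSync(file, 'a');
fs.writeSync(appendFd, ' world');
fs.closeSync(appendFd);

// 'r' opens for reading; the descriptor can be passed instead of a path.
const readFd = fs.openSync(file, 'r');
const content = fs.readFileSync(readFd, 'utf8');
fs.closeSync(readFd);

console.log(typeof writeFd, content); // number Hello world
```

Every descriptor opened this way should be closed again, otherwise the process leaks open files.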

4.3 Common methods

Since the fs module mainly manipulates files, the common file operation methods are as follows:

- File reading

- File writing

- File append write

- File copy

- Create a directory

4.3.1 File reading

There are two commonly used methods for reading files: readFileSync and readFile. readFileSync reads synchronously, as follows:

const fs = require("fs");

let buf = fs.readFileSync("1.txt");
let data = fs.readFileSync("1.txt", "utf8");

console.log(buf); // <Buffer 48 65 6c 6c 6f>
console.log(data); // Hello

- The first parameter is the path or file descriptor of the file to read.

- The second parameter is options, which defaults to null. It may contain encoding (default null) and flag (default r); the encoding can also be passed in directly as a string.

readFile reads asynchronously. Its first two parameters are the same as those of readFileSync, and the last parameter is a callback function that receives err (the error) and data (the file contents). The method has no return value, and the callback runs after the file has been read.

const fs = require("fs");

fs.readFile("1.txt", "utf8", (err, data) => {

if(!err){

console.log(data); // Hello

}

});

4.3.2 File writing

File writing uses two methods, writeFileSync and writeFile. writeFileSync writes synchronously, as shown below.

const fs = require("fs");

fs.writeFileSync("2.txt", "Hello world");
let data = fs.readFileSync("2.txt", "utf8");

console.log(data); // Hello world

- The first parameter is the path or file descriptor of the file to write.

- The second parameter is the data to be written, of type String or Buffer.

- The third parameter is options, which defaults to null. It may contain encoding (default utf8), flag (default w), and mode (permission bits, default 0o666); the encoding can also be passed in directly as a string.

writeFile writes asynchronously. Its first three parameters are the same as those of writeFileSync, and the last parameter is a callback function that receives err (the error). The callback runs after the file has been written.

const fs = require("fs");

fs.writeFile("2.txt", "Hello world", err => {

if (!err) {

fs.readFile("2.txt", "utf8", (err, data) => {

console.log(data); // Hello world

});

}

});

4.3.3 File append write

Appending to a file uses two methods, appendFileSync and appendFile. appendFileSync appends synchronously, as follows.

const fs = require("fs");

fs.appendFileSync("3.txt", " world");
let data = fs.readFileSync("3.txt", "utf8");

- The first parameter is the path or file descriptor of the file to append to.

- The second parameter is the data to be written, of type String or Buffer.

- The third parameter is options, which defaults to null. It may contain encoding (default utf8), flag (default a), and mode (permission bits, default 0o666); the encoding can also be passed in directly as a string.

appendFile appends asynchronously. Its first three parameters are the same as those of appendFileSync, and the last parameter is a callback function that receives err (the error). The callback runs after the data has been appended to the file, as shown below.

const fs = require("fs");

fs.appendFile("3.txt", " world", err => {

if (!err) {

fs.readFile("3.txt", "utf8", (err, data) => {

console.log(data); // Hello world

});

}

});

4.3.4 Create Directory

There are two main methods for creating a directory: mkdirSync and mkdir. mkdirSync creates the directory synchronously; its parameter is the directory path and there is no return value. When creating a directory, every parent directory in the given path must already exist, otherwise an exception is thrown.

// Assume folder a and folder b inside a already exist
fs.mkdirSync("a/b/c")

mkdir creates the directory asynchronously, and its second parameter is the callback function, as shown below.

fs.mkdir("a/b/c", err => {
  if (!err) console.log("Created successfully");
});

5. Understanding Stream

5.1 Basic concepts

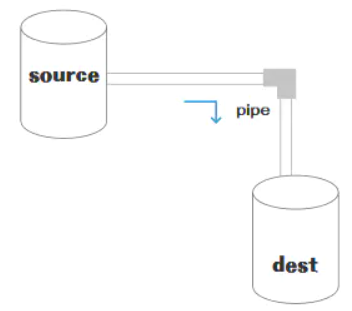

A stream is a means of data transmission, an end-to-end way of exchanging information, and it is sequential. It reads data chunk by chunk and processes the content as it goes, and is used to read input or write output in order. In Node, a stream has three parts: source, dest, and pipe.

The source and dest are connected by a pipe. The basic syntax is source.pipe(dest), which lets data flow from source to dest, as shown in the following figure:

5.2 Classification of streams

In Node, streams can be divided into four categories:

- writable stream : a stream that can write data, for example, fs.createWriteStream() can use a stream to write data to a file.

- readable stream : a stream that can read data, for example, fs.createReadStream() can read content from a file.

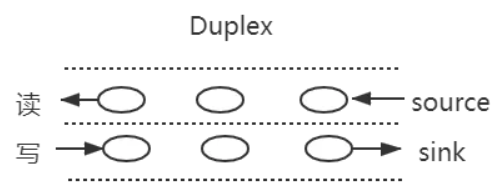

- Duplex stream : A stream that is both readable and writable, such as net.Socket.

- Conversion stream : A stream that can modify or convert data when writing and reading data. For example, in a file compression operation, you can write compressed data to the file and read decompressed data from the file.

In Node's HTTP server module, request is a readable stream, and response is a writable stream. For the fs module, it can handle both readable and writable file streams. Both readable and writable streams are one-way, which is easier to understand. The Socket is bidirectional, readable and writable.
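As a sketch of the transform category, the example below pipes a readable source through a Transform stream that upper-cases each chunk. The factory name `createUpperCaseStream` and the sample input are assumptions made for the demo.

```javascript
const { Readable, Transform } = require('stream');

// Factory for a Transform stream that upper-cases every chunk.
// `createUpperCaseStream` is an illustrative name.
function createUpperCaseStream() {
  return new Transform({
    transform(chunk, encoding, callback) {
      // Pass the converted chunk on to the readable side.
      callback(null, chunk.toString().toUpperCase());
    },
  });
}

// source.pipe(dest): data flows from the readable source through the transform.
const parts = [];
Readable.from(['hello ', 'stream'])
  .pipe(createUpperCaseStream())
  .on('data', (chunk) => parts.push(chunk.toString()))
  .on('end', () => console.log(parts.join(''))); // HELLO STREAM
```

Because a Transform is both writable and readable, it can sit in the middle of a pipe chain between a source and a destination.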

5.2.1 Duplex stream

In Node, the most common example of full-duplex communication is WebSocket: sending and receiving are independent operations that do not depend on each other.

The basic usage method is as follows:

const { Duplex } = require('stream');

const myDuplex = new Duplex({

read(size) {

// ...

},

write(chunk, encoding, callback) {

// ...

}

});

5.3 Usage scenarios

Common usage scenarios for streams are:

- Returning a file to the client in response to a GET request

- File operations

- The underlying operations of some bundling tools

5.3.1 Network request

A common use of streams is in network requests. For example, when a file is returned as a stream, res is itself a stream object, and the file data is sent through a pipe.

const http = require('http');
const fs = require('fs');
const path = require('path');

const server = http.createServer(function (req, res) {
  const method = req.method;
  // GET request
  if (method === 'GET') {
    const fileName = path.resolve(__dirname, 'data.txt');
    let stream = fs.createReadStream(fileName);
    stream.pipe(res);
  }
});

server.listen(8080);

5.3.2 File operations

File reading and writing can also be done with streams: create a readable stream readStream and a writable stream writeStream, then transfer the data between them through a pipe.

const fs = require('fs')
const path = require('path')

// The two file names
const fileName1 = path.resolve(__dirname, 'data.txt')
const fileName2 = path.resolve(__dirname, 'data-bak.txt')

// Stream object for reading the file
const readStream = fs.createReadStream(fileName1)

// Stream object for writing the file
const writeStream = fs.createWriteStream(fileName2)

// Copy through pipe: the data flows from one stream to the other
readStream.pipe(writeStream)

// Listen for the end of reading, which here signals that the copy is done
readStream.on('end', function () {
  console.log('Copy complete')
})

In addition, some bundling tools such as Webpack and Vite involve many stream operations.

6. Event loop mechanism

6.1 What is the event loop

Node.js maintains an event queue in the main thread. When a request arrives, it is placed in this queue as an event, and Node.js continues to accept other requests. When the main thread is idle (no incoming requests), it starts looping over the event queue to check whether there are events to process. There are two cases: for a non-I/O task, the main thread handles it itself and returns to the upper-level caller through the callback function; for an I/O task, a thread is taken from the thread pool to handle the event with a specified callback function, and the loop moves on to the other events in the queue.

When the I/O task in the thread completes, the specified callback function is executed and the completed event is placed at the end of the event queue to wait for the event loop. When the main thread reaches that event again, it processes it directly and returns the result to the upper-level caller. This process is called the event loop, and its operating principle is shown in the figure below.

From left to right, from top to bottom, Node.js is divided into four layers, namely the application layer, the V8 engine layer, the Node API layer and the LIBUV layer.

- Application layer : JavaScript interaction layer, the common ones are Node.js modules, such as http, fs

- V8 engine layer : Use the V8 engine to parse JavaScript grammar, and then interact with the lower-level API

- Node API layer : Provides system calls for upper modules, which are generally implemented by C language and interact with the operating system.

- LIBUV layer : It is a cross-platform bottom encapsulation, which implements event loops, file operations, etc., and is the core of Node.js's asynchronous implementation.

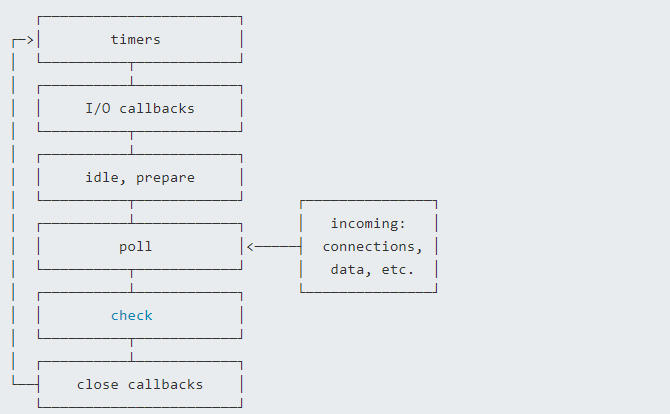

In Node, what we call the event loop is implemented based on libuv, which is a multi-platform library focused on asynchronous IO. The EVENT_QUEUE in the above picture looks like there is only one queue, but in fact there are 6 stages in EventLoop, and each stage has a corresponding first-in first-out callback queue.

6.2 The six stages of the event loop

The event loop can be divided into six stages, as shown in the figure below.

- Timers phase (timers): executes the callbacks of timers (setTimeout, setInterval).

- I/O callback phase (I/O callbacks): executes I/O callbacks deferred to the next loop iteration, that is, I/O callbacks that were not executed in the previous iteration.

- Idle phase (idle, prepare): only used internally by the system.

- Polling phase (poll): retrieves new I/O events and executes I/O-related callbacks (almost all callbacks except close callbacks, those scheduled by timers, and setImmediate()); Node will block here when appropriate.

- Check phase (check): setImmediate() callbacks are executed here.

- Close callback phase (close callbacks): some close callbacks, such as socket.on('close', ...).

Each phase has its own queue. When the event loop enters a phase, it executes the callbacks in that phase's queue until the queue is exhausted or the maximum number of callbacks has been executed, and then moves on to the next phase, as shown in the following figure.
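The phases above can be observed with a small ordering experiment. This is a sketch that assumes the file is run as a CommonJS script. The `order` array and the use of `fs.readdir` as the I/O operation are choices made for the demo.

```javascript
const fs = require('fs');

const order = [];

order.push('sync start');

// Inside an I/O callback the loop is in the poll phase, so the check phase
// (setImmediate) comes before the next timers phase (setTimeout).
fs.readdir(process.cwd(), () => {
  setTimeout(() => order.push('timeout'), 0);
  setImmediate(() => order.push('immediate'));
});

// process.nextTick callbacks run before promise callbacks, and both run
// before the event loop moves on to its next phase.
Promise.resolve().then(() => order.push('promise'));
process.nextTick(() => order.push('nextTick'));

order.push('sync end');

// Print the recorded order once everything has run.
process.on('exit', () => console.log(order.join(' -> ')));
```

Under these assumptions the script is expected to print `sync start -> sync end -> nextTick -> promise -> immediate -> timeout`. When setTimeout(fn, 0) and setImmediate are scheduled directly from the main module instead of from an I/O callback, their relative order is not guaranteed.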

7. EventEmitter

7.1 Basic concepts

As mentioned earlier, Node uses an event-driven mechanism, and EventEmitter is the foundation on which Node implements it. Almost all Node modules inherit from EventEmitter. These modules have their own events, can bind and trigger listeners, and implement asynchronous operations.

Many objects in Node.js emit events. For example, an fs.ReadStream object emits an event when the file is opened. These event-emitting objects are all instances of events.EventEmitter, which is used to bind one or more functions to a named event.

7.2 Basic usage

Node's events module provides only one class, EventEmitter, which implements the basic pattern of Node's asynchronous event-driven architecture: the observer pattern.

In this pattern, the observed object (the subject) maintains a set of observers registered by other objects. An object that becomes interested in the subject registers an observer, and unsubscribes when it is no longer interested. When the subject is updated, it notifies the observers in turn. The usage is as follows.

const EventEmitter = require('events')

class MyEmitter extends EventEmitter {}

const myEmitter = new MyEmitter()

function callback() {
  console.log('The event was triggered!')
}

myEmitter.on('event', callback)
myEmitter.emit('event')
myEmitter.removeListener('event', callback);

In the above code, we register a listener for an event named event through the on method of the instance, trigger the event through the emit method, and use removeListener to stop listening for the event.

In addition to the methods described above, other commonly used methods are as follows:

- emitter.addListener/on(eventName, listener): adds a listener for eventName to the end of the listener array.

- emitter.prependListener(eventName, listener): adds a listener for eventName to the head of the listener array.

- emitter.emit(eventName[, ...args]): triggers the listeners for eventName.

- emitter.removeListener/off(eventName, listener): removes the given listener for eventName.

- emitter.once(eventName, listener): adds a listener for eventName that runs only once and is then removed.

- emitter.removeAllListeners([eventName]): removes all listeners for eventName.
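The methods listed above can be tried out together in a short sketch. The event name `greet` and the `calls` array are assumptions for the demo; the methods themselves belong to the built-in events module.

```javascript
const EventEmitter = require('events');

const emitter = new EventEmitter();
const calls = [];

// Regular listener: appended to the end of the listener array.
emitter.on('greet', (name) => calls.push(`on:${name}`));

// Prepended listener: runs before the ones registered earlier.
emitter.prependListener('greet', (name) => calls.push(`prepend:${name}`));

// One-time listener: removed after its first invocation.
emitter.once('greet', (name) => calls.push(`once:${name}`));

emitter.emit('greet', 'Ann');
emitter.emit('greet', 'Bob');

console.log(calls);
// [ 'prepend:Ann', 'on:Ann', 'once:Ann', 'prepend:Bob', 'on:Bob' ]

console.log(emitter.listenerCount('greet')); // 2
```

Listeners are called synchronously, in the order of the listener array, each time emit is called.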

7.3 Implementation Principle

EventEmitter is actually a constructor, and it holds an object that contains all events.

class EventEmitter {
  constructor() {
    this.events = {};
  }
}

The listener functions stored in events are organized as follows:

{
  "event1": [f1,f2,f3],
  "event2": [f4,f5],
  ...
}

Next, implement the instance methods step by step. The first is emit: its first parameter is the event type, and the remaining parameters are passed to the listener functions. The implementation is as follows:

emit(type, ...args) {
  // Do nothing when no listener is registered for this event type
  if (!this.events[type]) {
    return;
  }
  this.events[type].forEach((item) => {
    Reflect.apply(item, this, args);
  });
}

After emit, the three instance methods on, addListener, and prependListener are implemented in turn. All of them register event listener functions.

on(type, handler) {
  if (!this.events[type]) {
    this.events[type] = [];
  }
  this.events[type].push(handler);
}

addListener(type, handler) {
  this.on(type, handler)
}

prependListener(type, handler) {
  if (!this.events[type]) {
    this.events[type] = [];
  }
  this.events[type].unshift(handler);
}

To remove event listeners, use the method removeListener/off.

removeListener(type, handler) {
  if (!this.events[type]) {
    return;
  }
  this.events[type] = this.events[type].filter(item => item !== handler);
}

off(type, handler) {
  this.removeListener(type, handler)
}

Finally, implement the once method. The listener passed in is wrapped, a closure-like state object keeps the current state, and the fired property records whether the listener has already been executed.

once(type, handler) {
  this.on(type, this._onceWrap(type, handler, this));
}

_onceWrap(type, handler, target) {
  const state = { fired: false, handler, type, target };
  const wrapFn = this._onceWrapper.bind(state);
  state.wrapFn = wrapFn;
  return wrapFn;
}

_onceWrapper(...args) {
  if (!this.fired) {
    this.fired = true;
    Reflect.apply(this.handler, this.target, args);
    this.target.off(this.type, this.wrapFn);
  }
}

Here is the completed code:

class EventEmitter {
  constructor() {
    this.events = {};
  }

  on(type, handler) {
    if (!this.events[type]) {
      this.events[type] = [];
    }
    this.events[type].push(handler);
  }

  addListener(type, handler) {
    this.on(type, handler)
  }

  prependListener(type, handler) {
    if (!this.events[type]) {
      this.events[type] = [];
    }
    this.events[type].unshift(handler);
  }

  removeListener(type, handler) {
    if (!this.events[type]) {
      return;
    }
    this.events[type] = this.events[type].filter(item => item !== handler);
  }

  off(type, handler) {
    this.removeListener(type, handler)
  }

  emit(type, ...args) {
    // Do nothing when no listener is registered for this event type
    if (!this.events[type]) {
      return;
    }
    this.events[type].forEach((item) => {
      Reflect.apply(item, this, args);
    });
  }

  once(type, handler) {
    this.on(type, this._onceWrap(type, handler, this));
  }

  _onceWrap(type, handler, target) {
    const state = { fired: false, handler, type, target };
    const wrapFn = this._onceWrapper.bind(state);
    state.wrapFn = wrapFn;
    return wrapFn;
  }

  _onceWrapper(...args) {
    if (!this.fired) {
      this.fired = true;
      Reflect.apply(this.handler, this.target, args);
      this.target.off(this.type, this.wrapFn);
    }
  }
}

8. Middleware

8.1 Basic concepts

Middleware is a type of software between the application system and the system software. It uses the basic services (functions) provided by the system software to connect various parts of the application system or different applications on the network to achieve resource sharing , The purpose of function sharing.

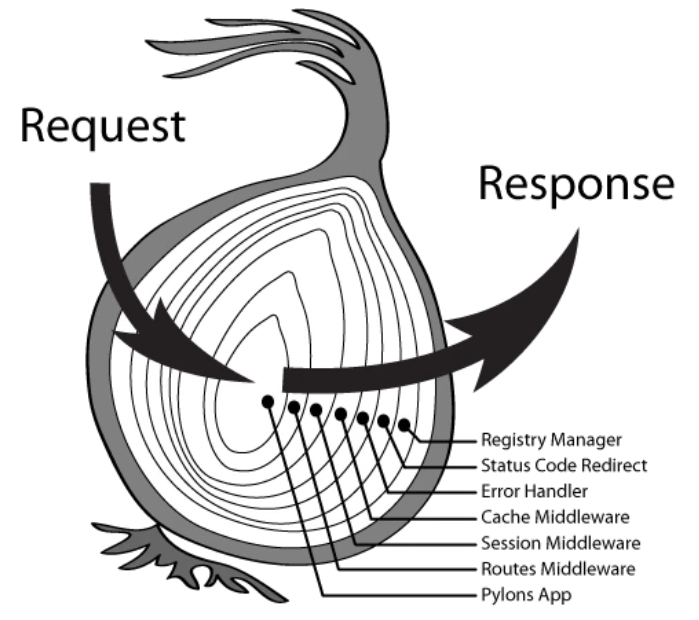

In Node, middleware mainly refers to the method of encapsulating the details of http request processing. For example, in web frameworks such as express and koa, the essence of middleware is a callback function, and the parameters include the request object, response object, and the function to execute the next middleware. The schematic diagram of the architecture is as follows.

Usually, in these middleware functions, we can execute business logic codes, modify request and response objects, and return response data.

8.2 koa

Koa is based on Node's current popular web framework. It does not support many functions, and all functions can be implemented through middleware extensions. Koa does not bundle any middleware, but provides a set of elegant methods to help developers write server-side applications quickly and happily.

Koa middleware uses the onion ring model, and each time the next middleware is executed, two parameters are passed in:

- ctx: encapsulates the variables of request and response

- next: enter the next middleware function to be executed
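The onion model can be sketched without Koa itself. The `compose` function below is a simplified illustration written for this article, not Koa's actual implementation. The middleware labels and the plain `ctx` object are assumptions for the demo.

```javascript
// A minimal compose function in the spirit of the onion model.
// This is a simplified sketch, not Koa's actual implementation.
function compose(middlewares) {
  return function run(ctx) {
    function dispatch(index) {
      const fn = middlewares[index];
      // Past the last middleware: nothing left to call.
      if (!fn) return Promise.resolve();
      try {
        // `next` runs the following middleware and returns its promise.
        return Promise.resolve(fn(ctx, () => dispatch(index + 1)));
      } catch (err) {
        return Promise.reject(err);
      }
    }
    return dispatch(0);
  };
}

const order = [];

const run = compose([
  async (ctx, next) => { order.push('A in'); await next(); order.push('A out'); },
  async (ctx, next) => { order.push('B in'); await next(); order.push('B out'); },
  async (ctx, next) => { order.push('handler'); ctx.body = 'ok'; },
]);

const ctx = {};
const finished = run(ctx).then(() => {
  console.log(order.join(' -> '));
  // A in -> B in -> handler -> B out -> A out
});
```

Each middleware runs its code before `await next()` on the way in and the code after it on the way out, which is what gives the model its layered shape.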

As described above, a Koa middleware is essentially a function, which can be an async function or an ordinary function. The following shows both forms of Koa middleware:

// async function
app.use(async (ctx, next) => {
  const start = Date.now();
  await next();
  const ms = Date.now() - start;
  console.log(`${ctx.method} ${ctx.url} - ${ms}ms`);
});

// ordinary function
app.use((ctx, next) => {
  const start = Date.now();
  return next().then(() => {
    const ms = Date.now() - start;
    console.log(`${ctx.method} ${ctx.url} - ${ms}ms`);
  });
});

Of course, we can also use middleware to encapsulate several functions commonly needed when handling HTTP requests:

token verification

module.exports = (options) => async (ctx, next) => {
  try {
    // Get the token
    const token = ctx.header.authorization
    if (token) {
      try {
        // verify checks the token and returns the user information
        // (assumed to be defined elsewhere, e.g. by a JWT library)
        await verify(token)
      } catch (err) {
        console.log(err)
      }
    }
    // Enter the next middleware
    await next()
  } catch (err) {
    console.log(err)
  }
}

log module

const fs = require('fs')

module.exports = (options) => async (ctx, next) => {
  const startTime = Date.now()
  const requestTime = new Date()
  await next()
  const ms = Date.now() - startTime;
  let logout = `${ctx.request.ip} -- ${requestTime} -- ${ctx.method} -- ${ctx.url} -- ${ms}ms`;
  // Write to the log file
  fs.appendFileSync('./log.txt', logout + '\n')
}

There are many third-party middleware packages for Koa, such as koa-bodyparser and koa-static.

8.3 Koa middleware

koa-bodyparser

The koa-bodyparser middleware parses the body of a POST request, whether form data (a query string) or JSON, into an object and attaches it to ctx.request.body, so that other middleware or route handlers can read the values.

// File: my-koa-bodyparser.js
const querystring = require("querystring");

module.exports = function bodyParser() {
  return async (ctx, next) => {
    await new Promise((resolve, reject) => {
      // Array that stores the received chunks
      let dataArr = [];

      // Receive data
      ctx.req.on("data", data => dataArr.push(data));

      // Reject the Promise if the request stream fails
      ctx.req.on("error", reject);

      // Combine the data and resolve the Promise
      ctx.req.on("end", () => {
        // Get the type of the request data: JSON or form
        let contentType = ctx.get("Content-Type");

        // Concatenate the Buffer chunks and convert them to a string
        let data = Buffer.concat(dataArr).toString();

        if (contentType === "application/x-www-form-urlencoded") {
          // For a form submission, parse the query string into an object and assign it to ctx.request.body
          ctx.request.body = querystring.parse(data);
        } else if (contentType === "application/json") {
          // For JSON, parse the string into an object and assign it to ctx.request.body
          ctx.request.body = JSON.parse(data);
        }

        // Signal success
        resolve();
      });
    });

    // Continue to the next middleware
    await next();
  };
};

koa-static

The koa-static middleware helps the server handle requests for static files, for example:

const fs = require("fs");
const path = require("path");
const mime = require("mime");
const { promisify } = require("util");

// Convert stat and access into Promise-based functions
const stat = promisify(fs.stat);
const access = promisify(fs.access)

module.exports = function (dir) {
  return async (ctx, next) => {
    // Turn the requested route into an absolute path; join is used because the path may be /
    let realPath = path.join(dir, ctx.path);

    try {
      // Get the stat object
      let statObj = await stat(realPath);

      // If it is a file, set the content type and respond with its content; otherwise treat it as a folder and look for index.html
      if (statObj.isFile()) {
        ctx.set("Content-Type", `${mime.getType(realPath)};charset=utf8`);
        ctx.body = fs.createReadStream(realPath);
      } else {
        let filename = path.join(realPath, "index.html");

        // If the file does not exist, fall through to next in catch and let other middleware handle it
        await access(filename);

        // If it exists, set the content type and respond with its content
        ctx.set("Content-Type", "text/html;charset=utf8");
        ctx.body = fs.createReadStream(filename);
      }
    } catch (e) {
      await next();
    }
  }
}

In general, a single middleware should be simple and have a single responsibility. Middleware code should be efficient, and caching should be used where necessary to avoid fetching the same data repeatedly.

9. How to design and implement JWT authentication

9.1 What is JWT

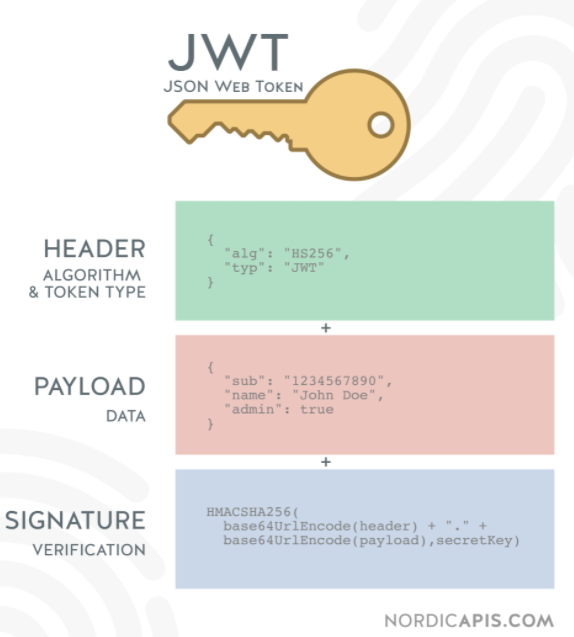

JWT (JSON Web Token) is essentially a specification for a string format, used to transmit trustworthy data between the user and the server, as shown in the figure above.

In the current development process where the front and back ends are separated, using the token authentication mechanism for identity verification is the most common solution. The process is as follows:

- When the server verifies that the user's account and password are correct, it issues a token to the user, which serves as the credential for accessing certain interfaces afterwards.

- Subsequent requests carry this token, and the server uses it to determine whether the user has permission to access.

A token consists of three parts, Header, Payload, and Signature, joined with a dot (.) in the form header.payload.signature. The header and payload are JSON objects, but they are encoded.

9.1.1 header

Every JWT carries a header, whose main purpose is to declare the algorithm used. The field that declares the algorithm is named alg. There is also a typ field, for which the default value JWT is sufficient. The algorithm in the following example is HS256:

    { "alg": "HS256", "typ": "JWT" }

Because a JWT is a string, the content above also needs to be Base64 encoded (in its URL-safe variant, Base64URL). The encoded string is as follows:

    eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9

9.1.2 payload

The payload is the message body. It stores the actual content, that is, the token's data claims, such as the user's id and name. By default it also carries the token issuance time iat, and an expiration time can be set as well. For example:

    {
      "sub": "1234567890",
      "name": "John Doe",
      "iat": 1516239022
    }

After the same Base64 encoding, the string is as follows:

    eyJzdWIiOiIxMjM0NTY3ODkwIiwibmFtZSI6IkpvaG4gRG9lIiwiaWF0IjoxNTE2MjM5MDIyfQ

9.1.3 Signature

The signature signs the header and payload. Generally, a secretKey is set, and the HMAC-SHA256 algorithm is applied to the encoded first two parts. The formula is as follows:

    Signature = HMACSHA256(base64Url(header) + "." + base64Url(payload), secretKey)

Therefore, if the first two parts are tampered with, the signature recomputed by the server will not match the one in the token, as long as the secret key used by the server has not been leaked.
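As a rough illustration of the signature formula, the following sketch builds and checks an HS256 token using only Node's built-in crypto module. The helper names (base64Url, hmacSha256, signToken, verifyToken) and the secret value are hypothetical and chosen for this example. In a real project a library such as jsonwebtoken would normally do this work.

```javascript
const crypto = require("crypto");

// Base64URL: standard Base64 with "+" -> "-", "/" -> "_" and padding removed
function base64Url(input) {
  return Buffer.from(input)
    .toString("base64")
    .replace(/=/g, "")
    .replace(/\+/g, "-")
    .replace(/\//g, "_");
}

// HMAC-SHA256 over the signing input, returned as a Base64URL string
function hmacSha256(signingInput, secretKey) {
  return base64Url(crypto.createHmac("sha256", secretKey).update(signingInput).digest());
}

// header.payload.signature
function signToken(payload, secretKey) {
  const header = { alg: "HS256", typ: "JWT" };
  const signingInput =
    base64Url(JSON.stringify(header)) + "." + base64Url(JSON.stringify(payload));
  return signingInput + "." + hmacSha256(signingInput, secretKey);
}

// Recompute the signature and compare it with the one carried by the token
function verifyToken(token, secretKey) {
  const parts = token.split(".");
  if (parts.length !== 3) return false;
  const expected = Buffer.from(hmacSha256(parts[0] + "." + parts[1], secretKey));
  const actual = Buffer.from(parts[2]);
  // timingSafeEqual requires buffers of equal length
  return expected.length === actual.length && crypto.timingSafeEqual(expected, actual);
}

const secretKey = "demo-secret"; // hypothetical secret, for illustration only
const token = signToken({ sub: "1234567890", name: "John Doe", iat: 1516239022 }, secretKey);

// Replace the payload but keep the old signature
const [headerPart, , signaturePart] = token.split(".");
const forgedPayload = base64Url(JSON.stringify({ sub: "1234567890", name: "Mallory" }));
const tampered = [headerPart, forgedPayload, signaturePart].join(".");

console.log(verifyToken(token, secretKey));    // true
console.log(verifyToken(tampered, secretKey)); // false
```

The tampered token fails because its signature was computed over the original payload, and a matching signature cannot be produced without the secret key.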

9.2 Design and Implementation

Generally, using a token involves two parts: generating the token and verifying the token.

- Generate the token: when login succeeds, a token is issued.

- Verify the token: when certain resources or interfaces are accessed, the token is verified.

9.2.1 Generate token

With the help of the third-party library jsonwebtoken, a token is generated through its sign method. sign takes three parameters:

- The first parameter is the payload.

- The second is the secret key, which is known only to the server.

- The third parameter is options, which can define the expiration time of the token.

The following is an example of generating a token on the server:

    const crypto = require("crypto"),
      jwt = require("jsonwebtoken");

    // TODO: use a database
    // Users should be stored in a database; an in-memory array is used here for demonstration only
    let userList = [];

    class UserController {
      // User login
      static async login(ctx) {
        const data = ctx.request.body;

        if (!data.name || !data.password) {
          return ctx.body = {
            code: "000002",
            message: "Invalid parameters"
          };
        }

        // MD5 is used here for demonstration only; it is not a safe way to store passwords
        const result = userList.find(item => item.name === data.name && item.password === crypto.createHash('md5').update(data.password).digest('hex'));

        if (result) {
          // Generate the token
          const token = jwt.sign(
            {
              name: result.name
            },
            "test_token", // secret
            { expiresIn: 60 * 60 } // expiration time: 60 * 60 s
          );

          return ctx.body = {
            code: "0",
            message: "Login successful",
            data: {
              token
            }
          };
        } else {
          return ctx.body = {
            code: "000002",
            message: "Incorrect username or password"
          };
        }
      }
    }

    module.exports = UserController;

After the front end receives the token, it generally caches it in localStorage and then sends it in the Authorization header of each HTTP request. The header value must be prefixed with Bearer followed by a space, as shown below.

    axios.interceptors.request.use(config => {
      const token = localStorage.getItem('token');

      // Note the Authorization header; skip it when no token has been stored yet
      if (token) {
        config.headers.Authorization = 'Bearer ' + token;
      }

      return config;
    })

9.2.2 Verify token

First, verify the token with the koa-jwt middleware. The approach is simple: register the middleware before the routes, as follows.

    app.use(koajwt({
      secret: 'test_token'
    }).unless({
      // Configure the whitelist
      path: [/\/api\/register/, /\/api\/login/]
    }))

When using the koa-jwt middleware for verification, pay attention to the following points:

- The secret must be the same as the one passed to sign.

- You can configure an interface whitelist through unless, that is, the URLs that do not need verification, such as login and register.

- The verification middleware must be registered before the routes that need verification; routes registered before it are not verified.

The method of obtaining user token information is as follows:

    router.get('/api/userInfo', async (ctx, next) => {
      const authorization = ctx.header.authorization // Get the JWT from the request header
      const token = authorization.replace('Bearer ', '')
      const result = jwt.verify(token, 'test_token')
      ctx.body = result
    })

Note: The HMAC-SHA256 (HS256) algorithm mentioned above uses a single shared secret key. If that key is leaked, the consequences are severe.

In a distributed system, each subsystem would need the secret key in order to issue and verify tokens, even though some servers only need to verify them. In that case you can use asymmetric cryptography: the private key issues the token and the public key verifies it. An asymmetric algorithm such as RS256 can be chosen.
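The private-key/public-key split can be sketched with Node's built-in crypto module. This is a minimal illustration, not a full JWT implementation: the function names issueToken and checkToken are hypothetical, and the key pair is generated in-process only so that the example is self-contained.

```javascript
const crypto = require("crypto");

// Demo key pair. In practice the private key stays on the issuing server
// and only the public key is distributed to the verifying services.
const { publicKey, privateKey } = crypto.generateKeyPairSync("rsa", {
  modulusLength: 2048,
});

// Base64URL-encode a Buffer
const toBase64Url = (buf) =>
  buf.toString("base64").replace(/=/g, "").replace(/\+/g, "-").replace(/\//g, "_");

// Issue: sign header.payload with the private key (RSA + SHA-256, i.e. RS256)
function issueToken(payload) {
  const header = toBase64Url(Buffer.from(JSON.stringify({ alg: "RS256", typ: "JWT" })));
  const body = toBase64Url(Buffer.from(JSON.stringify(payload)));
  const signature = crypto.sign("sha256", Buffer.from(header + "." + body), privateKey);
  return header + "." + body + "." + toBase64Url(signature);
}

// Verify: only the public key is needed
function checkToken(token, key) {
  const [header, body, signature] = token.split(".");
  if (!header || !body || !signature) return false;
  return crypto.verify(
    "sha256",
    Buffer.from(header + "." + body),
    key,
    Buffer.from(signature, "base64") // Node's base64 decoder also accepts the URL-safe alphabet
  );
}

const rsToken = issueToken({ name: "John Doe" });

// Swap in a different payload while keeping the original signature
const forgedBody = toBase64Url(Buffer.from(JSON.stringify({ name: "Mallory" })));
const forged = rsToken.replace(/\.[^.]+\./, "." + forgedBody + ".");

console.log(checkToken(rsToken, publicKey)); // true
console.log(checkToken(forged, publicKey));  // false
```

A service that holds only publicKey can check tokens but cannot issue new ones, which is the property the paragraph above relies on.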

In addition, JWT authentication also needs to pay attention to the following points:

- The payload part is just a simple encoding, so it can only be used to store non-sensitive information necessary for logic.

- The encryption key needs to be protected, and once it is leaked, the consequences will be disastrous.

- To avoid the token being hijacked, it is best to use the https protocol.
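To make the first point concrete, the sketch below decodes the payload segment from section 9.1.2 without any key. The variable names are chosen for this example only.

```javascript
// The payload segment from the example in section 9.1.2
const payloadSegment =
  "eyJzdWIiOiIxMjM0NTY3ODkwIiwibmFtZSI6IkpvaG4gRG9lIiwiaWF0IjoxNTE2MjM5MDIyfQ";

// No key is involved: decoding Base64 is enough to read the claims
const claims = JSON.parse(Buffer.from(payloadSegment, "base64").toString("utf8"));

console.log(claims.name); // John Doe
console.log(new Date(claims.iat * 1000).toISOString()); // issuance time as a date
```

Anyone who intercepts a token can read it in the same way, so passwords and other secrets should not be placed in the payload.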

10. Node performance monitoring and optimization

10.1 Node optimization points

For Node, as a server-side runtime, performance is particularly important. It is generally measured by the following indicators:

- CPU

- Memory

- I/O

- Network

10.1.1 CPU

Regarding the CPU indicators, we mainly focus on the following two points:

- CPU load: the total number of processes that are using or waiting for the CPU over a given period of time.

- CPU usage: the proportion of time the CPU is busy, equal to 1 - idle time / total CPU time.

Both are quantitative indicators of how busy the system's CPU currently is. Node applications generally do not consume much CPU. If CPU usage is high, it suggests that the application performs many synchronous operations, which block the callbacks of asynchronous tasks.
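Both indicators can be read with Node's built-in os module. The following is a minimal sketch: the function names cpuSnapshot and cpuUsage and the 500 ms sampling interval are choices made for this example, not part of any monitoring library.

```javascript
const os = require("os");

// Sum idle and total CPU time (in ms) across all cores
function cpuSnapshot() {
  let idle = 0;
  let total = 0;
  for (const cpu of os.cpus()) {
    for (const time of Object.values(cpu.times)) total += time;
    idle += cpu.times.idle;
  }
  return { idle, total };
}

// CPU usage between two snapshots = 1 - idle time / total CPU time
function cpuUsage(start, end) {
  const totalDelta = end.total - start.total;
  if (totalDelta <= 0) return 0; // no time elapsed or no CPU information available
  return 1 - (end.idle - start.idle) / totalDelta;
}

// 1-minute load average (os.loadavg() always returns [0, 0, 0] on Windows)
const [load1] = os.loadavg();

const before = cpuSnapshot();
setTimeout(() => {
  const usage = cpuUsage(before, cpuSnapshot());
  console.log(`load(1m)=${load1.toFixed(2)} usage=${(usage * 100).toFixed(1)}%`);
}, 500);
```

Usage is computed from the difference between two snapshots because os.cpus() reports times accumulated since boot, not the current rate.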

10.1.2 Memory Index

Memory is an indicator that is easy to quantify. Memory usage is a common indicator for judging whether memory is a bottleneck for a system. For Node, the usage of its internal heap is also a quantifiable indicator. You can use the following code to obtain memory-related data:

    // /app/lib/memory.js
    const os = require('os');

    module.exports = {
      memory: () => {
        // Get the current Node heap usage (read on every call so the values stay fresh)
        const { rss, heapUsed, heapTotal } = process.memoryUsage();

        // Get the free system memory
        const sysFree = os.freemem();

        // Get the total system memory
        const sysTotal = os.totalmem();

        return {
          sys: 1 - sysFree / sysTotal, // system memory usage
          heap: heapUsed / heapTotal, // Node heap memory usage
          node: rss / sysTotal, // proportion of system memory used by Node
        }
      }
    }

- rss: the total amount of memory occupied by the Node process.

- heapTotal: the total amount of heap memory.

- heapUsed: the heap memory actually in use.

- external: the memory used by C++ objects that are bound to JavaScript objects managed by V8.

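In Node, V8 limits the heap size of a process by default (about 1.5 GB, depending on the Node version and platform; the limit can be adjusted with the --max-old-space-size flag), so please control memory usage reasonably in actual use.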
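The limit that applies to the current process can be checked with the built-in v8 module. This is a small sketch; the helper toMB is introduced here only for readable output.

```javascript
const v8 = require("v8");

// heap_size_limit is the maximum heap size V8 allows for this process, in bytes
const { heap_size_limit, used_heap_size } = v8.getHeapStatistics();

const toMB = (bytes) => Math.round(bytes / 1024 / 1024);

console.log(`heap limit: ${toMB(heap_size_limit)} MB, used: ${toMB(used_heap_size)} MB`);
```

Starting the process with, for example, node --max-old-space-size=4096 app.js raises the old-generation limit to roughly 4 GB, and the reported heap limit changes accordingly.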

10.1.3 Disk I/O

Disk I/O is very expensive: a disk I/O operation costs about 164,000 times as many CPU clock cycles as a memory access. Memory I/O is much faster than disk I/O, so caching data in memory is an effective optimization. Commonly used tools include Redis and Memcached.

However, not all data needs to be cached. Consider caching only data that is accessed frequently and is expensive to generate, that is, data that sits behind your performance bottleneck. Caching also introduces problems of its own, such as cache avalanche and cache penetration, which need to be solved.
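As a minimal in-process illustration of caching expensive results, the sketch below stores values with an expiration time. The class name TtlCache and the loader function loadUser are hypothetical. A shared cache such as Redis would be used when several processes need the same data.

```javascript
// Minimal in-memory cache with per-entry expiry (TTL)
class TtlCache {
  constructor(ttlMs, now = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, convenient for tests
    this.store = new Map();
  }

  get(key) {
    const entry = this.store.get(key);
    if (!entry) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.store.delete(key); // expired: drop the entry
      return undefined;
    }
    return entry.value;
  }

  set(key, value) {
    this.store.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  // Return the cached value, or load, cache and return it on a miss
  async getOrLoad(key, loader) {
    const cached = this.get(key);
    if (cached !== undefined) return cached;
    const value = await loader(key);
    this.set(key, value);
    return value;
  }
}

// Usage: loadUser stands in for an expensive database query
let queries = 0;
const loadUser = async (id) => {
  queries += 1;
  return { id, name: "user-" + id };
};

const cache = new TtlCache(60 * 1000);

(async () => {
  await cache.getOrLoad(1, loadUser);
  await cache.getOrLoad(1, loadUser); // served from the cache
  console.log(queries); // 1
})();
```

Giving entries slightly different expiration times is one common way to reduce the cache avalanche problem mentioned above, since the entries then do not all expire at the same moment.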

10.2 How to monitor

Performance monitoring generally requires tools, such as Easy-Monitor or the Alibaba Node.js performance platform.

Easy-Monitor 2.0 is used here. It is a lightweight tool for kernel-level performance monitoring and analysis of Node.js projects. In the default mode, you only need to require it once in the project entry file to enable kernel-level performance monitoring and analysis, without changing any business code.

Easy-Monitor is also simple to use. Require it in the project entry file as follows.

    const easyMonitor = require('easy-monitor');
    easyMonitor('project name');

Open your browser and visit http://localhost:12333 to see the process interface. For more details, please refer to the official website.

10.3 Node performance optimization

There are several ways to optimize the performance of Node:

- Use the latest version of Node.js

- Use Stream correctly

- Code level optimization

- Memory management optimization

10.3.1 Use the latest version of Node.js

The performance improvement of each version mainly comes from two aspects:

- V8 version update

- Update and optimization of Node.js internal code

10.3.2 Correct use of streams

In Node, many objects implement streams. For a large file, it can be sent in the form of a stream, and it does not need to be completely read into memory.

    const http = require('http');
    const fs = require('fs');

    // Wrong way: the whole file is read into memory before it is sent
    http.createServer(function (req, res) {
      fs.readFile(__dirname + '/data.txt', function (err, data) {
        res.end(data);
      });
    });

    // Right way: the file is streamed to the response chunk by chunk
    http.createServer(function (req, res) {
      const stream = fs.createReadStream(__dirname + '/data.txt');
      stream.pipe(res);
    });

10.3.3 Code level optimization

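Combine queries: merge multiple queries into one to reduce the number of database round trips.

    // Illustrative only: user_account stands for a hypothetical data-access object
    // with a MongoDB-style query API, and the code runs inside an async function

    // Wrong way: one query per user
    for (const user_id of userIds) {
      let account = await user_account.findOne({ user_id })
    }

    // Right way: one combined query, then in-memory lookups
    const user_account_map = {}

    // Note: this object may consume a lot of memory.
    const accounts = await user_account.find({ user_id: { $in: userIds } })
    accounts.forEach(account => {
      user_account_map[account.user_id] = account
    })

    for (const user_id of userIds) {
      var account = user_account_map[user_id]
    }

10.3.4 Memory management optimization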

In V8, memory is mainly divided into two generations, the young generation and the old generation:

- Young generation: objects with a short lifetime, that is, newly created objects or objects that have survived only one garbage collection.

- Old generation: objects with a longer lifetime, that is, objects that have survived one or more garbage collections.

If the young generation does not have enough space, objects are allocated directly in the old generation. Reducing the memory footprint improves server performance. A memory leak also causes a large number of objects to accumulate in the old generation, which greatly reduces server performance, as in the following example.

    const buffer = fs.readFileSync(__dirname + '/source/index.htm');

    const leak = [];

    app.use(
      mount('/', async (ctx) => {
        ctx.status = 200;
        ctx.type = 'html';
        ctx.body = buffer;

        // Every request appends another copy of the file, and nothing ever releases it
        leak.push(fs.readFileSync(__dirname + '/source/index.htm'));
      })
    );

Because leak grows with every request and is never released, it causes a memory leak. Such operations should be avoided.

Reducing memory usage can significantly improve service performance. One of the best ways to save memory is to use a pool, which stores frequently used, reusable objects and reduces creation and destruction operations. For example, suppose an image request interface needs instances of certain classes on every request. Creating them with new each time is wasteful: under a large number of requests these instances are created and destroyed constantly, which causes memory jitter. With an object pool, objects that would otherwise be created and destroyed frequently are kept in the pool and reused, which avoids repeated initialization and improves performance.
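The object pool idea can be sketched as follows. This is a minimal illustration under stated assumptions: the names ObjectPool and ImageTask are hypothetical, and a production pool would also need to handle concurrent borrowers and failures.

```javascript
// Minimal object pool: reuse instances instead of creating one per request
class ObjectPool {
  constructor(create, reset, maxSize = 10) {
    this.create = create; // builds a new object when the pool is empty
    this.reset = reset;   // clears per-use state before the object is reused
    this.maxSize = maxSize;
    this.idle = [];
  }

  // Take an idle object if one exists, otherwise create a new one
  acquire() {
    return this.idle.length > 0 ? this.idle.pop() : this.create();
  }

  // Return an object to the pool (dropped if the pool is already full)
  release(obj) {
    this.reset(obj);
    if (this.idle.length < this.maxSize) this.idle.push(obj);
  }
}

// Usage: ImageTask is a hypothetical helper that is costly to construct
class ImageTask {
  constructor() {
    this.buffer = Buffer.alloc(64 * 1024);
    this.name = null;
  }
}

let created = 0;
const pool = new ObjectPool(
  () => { created += 1; return new ImageTask(); },
  (task) => { task.name = null; }
);

// Simulate 100 sequential requests
for (let i = 0; i < 100; i++) {
  const task = pool.acquire();
  task.name = "image-" + i;
  pool.release(task);
}

console.log(created); // 1
```

Because each simulated request releases its object before the next one starts, a single ImageTask serves all 100 requests. With overlapping requests, the pool would create more instances, but only up to the number in use at the same time.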
