Preface

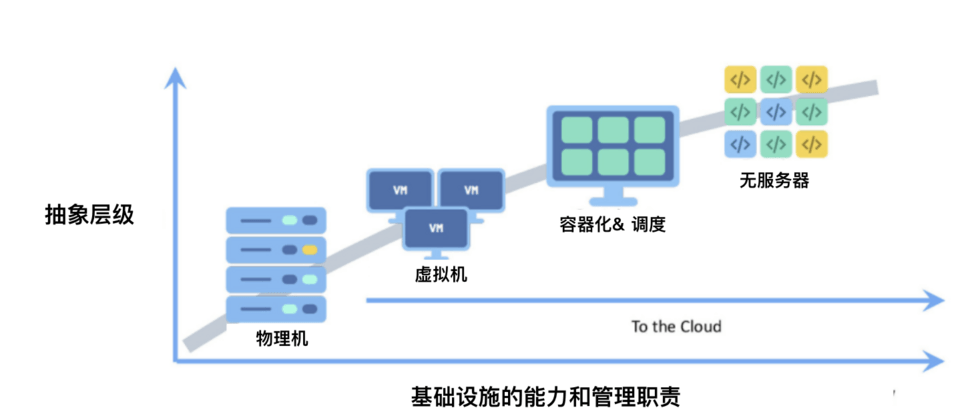

As technology develops, we have more and more options for implementing business logic. Serverless is one of today's cutting-edge technologies: can it also open up new possibilities for microservice architecture?

This article summarizes some of the results of my recent exploration of running a SpringBoot microservice project on Serverless.

What is serverless

I don't need to explain what microservices and SpringBoot are.

So what is Serverless?

According to the CNCF definition, serverless refers to the concept of building and running applications that do not require server management.

Serverless does not mean that computation runs without servers; it means that developers and companies can run computation without understanding or managing the underlying servers.

In plain terms, Serverless abstracts away the underlying computing resources: you only provide a function, and the platform runs it.

A related concept is FaaS (Function as a Service). The logic we run on Serverless usually has function-level granularity.
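To make function-level granularity concrete, here is a minimal plain-Java sketch of one piece of logic expressed as a single function. The `java.util.function.Function` shape is the same one Spring Cloud Function uses to expose business logic, but `GreetingFunction` is a hypothetical name, and the way a platform would invoke it (deserializing the request, calling `apply`, serializing the result) is assumed rather than shown.

```java
import java.util.function.Function;

// Hypothetical example: one piece of business logic exposed as one function.
// A FaaS platform (not shown) would deserialize the request, call apply(),
// and serialize the result; here we call it directly.
class GreetingFunction implements Function<String, String> {

    @Override
    public String apply(String name) {
        // Stateless: the output depends only on the input, so any instance
        // the platform creates can serve any request.
        String trimmed = name == null ? "" : name.trim();
        return trimmed.isEmpty() ? "Hello, anonymous!" : "Hello, " + trimmed + "!";
    }

    public static void main(String[] args) {
        Function<String, String> fn = new GreetingFunction();
        System.out.println(fn.apply("serverless"));
    }
}
```

Because the function holds no state between calls, the platform is free to create, reuse, or destroy instances of it at will.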

Microservices split at a reasonable granularity are therefore a good fit for Serverless.

The value of serverless for microservices

- Each microservice API is called at a different frequency; Serverless bills and scales each one separately, so costs and elasticity can be managed precisely.

- A surge in calls to one API no longer forces you to scale out the whole service: Serverless scales out and in automatically.

- There is no need to manage how many containers and servers sit behind each service, and no need to set up load balancing.

- The steep learning curve of container orchestration tools such as K8S is hidden from developers.

- The stateless nature of Serverless also fits well with microservices that expose RESTful APIs.
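The cost argument in the first bullet comes down to simple arithmetic: pay-per-use platforms commonly bill by memory × execution time (GB-seconds) plus a per-request fee. The sketch below estimates a monthly bill for two APIs of the same service; all prices and traffic figures are hypothetical placeholders, not any provider's actual rates.

```java
// Hypothetical pay-per-use cost model: memory (GB) x duration (s) x unit price,
// plus a per-request fee. All prices here are illustrative placeholders.
class ServerlessCostEstimate {

    static double monthlyCost(long requestsPerMonth,
                              double avgDurationSeconds,
                              double memoryGb,
                              double pricePerGbSecond,
                              double pricePerMillionRequests) {
        double gbSeconds = requestsPerMonth * avgDurationSeconds * memoryGb;
        double computeCost = gbSeconds * pricePerGbSecond;
        double requestCost = requestsPerMonth / 1_000_000.0 * pricePerMillionRequests;
        return computeCost + requestCost;
    }

    public static void main(String[] args) {
        // Two APIs of the same service with very different call frequencies.
        double busyApi = monthlyCost(10_000_000, 0.1, 0.25, 0.00002, 0.2);
        double rareApi = monthlyCost(10_000, 0.1, 0.25, 0.00002, 0.2);
        System.out.printf("busy API: %.2f, rarely used API: %.4f%n", busyApi, rareApi);
    }
}
```

In this model idle time is not billed, so each API's cost tracks its own traffic instead of the capacity reserved for the busiest endpoint.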

Preliminary practice

First, prepare a SpringBoot project, which can be generated quickly with start.spring.io.

When it comes to writing business logic, Serverless development is no different from traditional microservice development. So I quickly wrote a todo back-end service with create, read, update, and delete operations.
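The author's sample code is not reproduced here. As a stand-in, the following hypothetical in-memory sketch shows the kind of create/read/update/delete logic such a todo service contains; in a SpringBoot project it would sit behind controller methods. The class names and the in-memory map are assumptions for illustration: because Serverless instances come and go, a real deployment would keep todos in an external database.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical immutable todo item; not taken from the author's project.
class Todo {
    final long id;
    final String title;
    final boolean done;

    Todo(long id, String title, boolean done) {
        this.id = id;
        this.title = title;
        this.done = done;
    }
}

// In-memory CRUD logic. State lives only as long as this instance.
class TodoService {
    private final Map<Long, Todo> store = new ConcurrentHashMap<>();
    private final AtomicLong nextId = new AtomicLong(1);

    Todo add(String title) {
        Todo todo = new Todo(nextId.getAndIncrement(), title, false);
        store.put(todo.id, todo);
        return todo;
    }

    Optional<Todo> find(long id) {
        return Optional.ofNullable(store.get(id));
    }

    List<Todo> findAll() {
        return new ArrayList<>(store.values());
    }

    // Replaces the item atomically; empty if the id does not exist.
    Optional<Todo> update(long id, String title, boolean done) {
        return Optional.ofNullable(
                store.computeIfPresent(id, (key, old) -> new Todo(key, title, done)));
    }

    boolean delete(long id) {
        return store.remove(id) != null;
    }
}
```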

The sample code can be found here.

So where are the real differences in using Serverless?

If you simply want to deploy a single service, the main difference lies in two aspects:

- Deployment method

- Start method

Deployment method

Since we can no longer touch the servers, deployment has changed a lot.

Traditional microservices are usually deployed directly onto virtual machines, or run as containers scheduled by K8S.

The traditional deployment relationship is roughly as follows.

With Serverless, we usually need to split microservices at a finer granularity to fit the FaaS model.

The deployment relationship when using Serverless is therefore roughly as follows.

With Serverless you only need to provide code, because the platform comes with its own runtime environment. There are usually two ways to deploy microservices on Serverless:

- Code package upload and deployment

- Image deployment

The first method differs the most from traditional deployment: you package and upload your code, and specify either an entry function or a listening port.
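For the listening-port style, the uploaded program is an ordinary HTTP server to which the platform forwards requests. Below is a minimal sketch using the JDK's built-in `com.sun.net.httpserver.HttpServer`. Reading the port from a `PORT` environment variable and falling back to 9000 are assumptions for illustration; check your platform's documentation for the port it actually expects. In a SpringBoot application the same idea is normally expressed through the `server.port` property.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.Map;

class PortListeningApp {

    // Resolves the listening port; "PORT" and the default value are
    // illustrative assumptions, not a specific platform's contract.
    static int resolvePort(Map<String, String> env, int defaultPort) {
        String value = env.get("PORT");
        if (value == null || value.isBlank()) {
            return defaultPort;
        }
        try {
            return Integer.parseInt(value.trim());
        } catch (NumberFormatException e) {
            return defaultPort;
        }
    }

    public static void main(String[] args) throws IOException {
        int port = resolvePort(System.getenv(), 9000);
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        // Every path answers 200 "ok"; real handlers would route to business logic.
        server.createContext("/", exchange -> {
            byte[] body = "ok".getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        System.out.println("Listening on port " + port);
    }
}
```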

The second method is almost the same as the traditional approach: push the built image to your image registry, then select that image when deploying on the Serverless platform.

Start method

Serverless creates an instance only when it is used and destroys it when it is not, which is what makes its pay-per-use billing possible.

As a result, a function goes through a cold start when it is first called. A cold start means the platform must allocate computing resources, then load and start the code, so cold start times vary with the runtime environment and the code itself.
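The difference between a cold and a warm call can be modelled in a few lines: the expensive initialization (for a Spring application, roughly the creation of the application context) runs once per instance, and later calls on the same instance reuse it. This is a hypothetical simulation; `Thread.sleep` stands in for real startup work and the 200 ms figure is arbitrary.

```java
import java.util.function.Supplier;

// Simulates one function instance: initialization runs on the first call only.
class FunctionInstance<T> {
    private final Supplier<T> initializer;
    private T context;          // e.g. a Spring ApplicationContext in a real app
    private int coldStarts;

    FunctionInstance(Supplier<T> initializer) {
        this.initializer = initializer;
    }

    // Handles one request and returns the elapsed time in milliseconds.
    synchronized long handle() {
        long start = System.nanoTime();
        if (context == null) {
            context = initializer.get();   // cold start: pay the init cost once
            coldStarts++;
        }
        // ... business logic using 'context' would run here ...
        return (System.nanoTime() - start) / 1_000_000;
    }

    synchronized int coldStarts() {
        return coldStarts;
    }
}

class ColdStartDemo {
    public static void main(String[] args) {
        FunctionInstance<String> instance = new FunctionInstance<>(() -> {
            try {
                Thread.sleep(200);         // stand-in for framework startup
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return "context";
        });
        System.out.println("first call:  " + instance.handle() + " ms");
        System.out.println("second call: " + instance.handle() + " ms");
    }
}
```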

Java has long been criticized for its slow startup, and Spring's startup is slower still, as anyone who has used it knows. Together, Java and Spring produce a sloth-like startup speed that can leave the first call to a service waiting for an excessively long time.

Fortunately, Spring offers two solutions for shortening startup time:

- One is SpringFu

- The other is Spring Native

SpringFu

Spring Fu is an incubator for JaFu (Java DSL) and KoFu (Kotlin DSL), which configure Spring Boot explicitly in code, in a declarative style. Because the DSLs work with IDE auto-completion, they are highly discoverable. Spring Fu offers fast startup (40% faster than regular auto-configuration on a minimal Spring MVC application) and low memory consumption, and its (almost) reflection-free approach makes it well suited to GraalVM native images. Paired with the GraalVM native compiler, application startup time can drop to about 1% of the original.
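Why does "reflection-free" matter? GraalVM's native-image compiler analyses the program at build time, and classes reached only through reflection generally have to be declared to it explicitly (for example through reflection configuration), whereas code reached through ordinary calls is discovered automatically. The plain-Java sketch below contrasts the two styles of creating a bean. It is an analogy only, not Spring Fu's JaFu API; `Registry` and `TodoRepository` are hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

class TodoRepository {
    String name() {
        return "todoRepository";
    }
}

class Registry {
    private final Map<Class<?>, Supplier<?>> suppliers = new HashMap<>();

    // Functional style: the constructor call is visible to static analysis.
    <T> void register(Class<T> type, Supplier<T> supplier) {
        suppliers.put(type, supplier);
    }

    <T> T get(Class<T> type) {
        Supplier<?> supplier = suppliers.get(type);
        if (supplier == null) {
            throw new IllegalArgumentException("No bean registered for " + type.getName());
        }
        return type.cast(supplier.get());
    }

    // Reflective style (similar in spirit to classpath scanning): the class is
    // looked up by a runtime string, so a native-image build cannot see it
    // without extra reflection metadata.
    static Object createReflectively(String className) throws ReflectiveOperationException {
        return Class.forName(className).getDeclaredConstructor().newInstance();
    }
}
```

Usage: `registry.register(TodoRepository.class, TodoRepository::new)` wires the bean through an ordinary method reference, which is the kind of explicit, analysable code that a DSL such as JaFu aims to produce.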

However, SpringFu is still at a very early stage and has many rough edges in practice. It also requires substantial code changes, because it does away with annotations entirely, so I did not use it this time.

Spring Native

Spring Native uses the GraalVM native-image compiler to compile Spring applications into native executables, providing a native deployment option packaged in lightweight containers. Its goal is to run Spring Boot applications on this new platform with almost no code changes.

So I chose Spring Native: it requires no code changes, only some additional plugins and dependencies, to build a native image.

Native images have several advantages:

- Unused code is removed at build time

- The classpath is fixed at build time

- No lazy class loading: everything in the executable is loaded into memory at startup

- Some code is run at build time

Together, these characteristics greatly reduce the program's startup time.
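The "no lazy class loading" point is easiest to see by contrast with the regular JVM, which initializes a class only on its first active use. In the sketch below, the static initializer of the hypothetical `ExpensiveConfig` class does not run until the class is first touched. On the JVM that work therefore lands on the first request; native-image can move such work to build time for classes configured for build-time initialization.

```java
// Records whether ExpensiveConfig's static initializer has run.
class InitTracker {
    static boolean expensiveConfigInitialized = false;
}

class ExpensiveConfig {
    static final String VALUE;   // not a compile-time constant, so reading it triggers init

    static {
        // Runs on the first active use of ExpensiveConfig, not at JVM startup.
        InitTracker.expensiveConfigInitialized = true;
        VALUE = "loaded";
    }

    static String value() {
        return VALUE;
    }
}

class LazyInitDemo {
    public static void main(String[] args) {
        System.out.println("before first use: " + InitTracker.expensiveConfigInitialized);
        ExpensiveConfig.value();
        System.out.println("after first use:  " + InitTracker.expensiveConfigInitialized);
    }
}
```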

I will explain how to use it in the next article; for the details, see the official tutorial, which is what I followed.

Here are the results of my comparison.

I ran the compiled images locally, and also deployed and tested them on Tencent Cloud Serverless cloud functions and AWS Lambda.

| Environment | SpringBoot cold start time | Spring Native cold start time |
|---|---|---|
| Local Mac, 16 GB RAM | 1 s | 79 ms |
| Tencent Cloud Serverless, 256 MB RAM | 13 s | 300 ms |
| AWS Lambda, 256 MB RAM | 21 s | 1 s |

The results show that Spring Native greatly improves startup speed. Choosing a larger Serverless specification can speed it up further.
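To put "greatly improved" into numbers, the speedup in each row of the table is simply the ratio of the two cold start times. The short program below computes it from the measured values above, converted to milliseconds; no new measurements are involved.

```java
class ColdStartSpeedup {

    // Ratio of SpringBoot cold start time to Spring Native cold start time.
    static double speedup(double springBootMs, double springNativeMs) {
        return springBootMs / springNativeMs;
    }

    public static void main(String[] args) {
        String[] platforms = {"Local Mac, 16 GB", "Tencent Cloud Serverless, 256 MB", "AWS Lambda, 256 MB"};
        double[] springBootMs = {1_000, 13_000, 21_000};
        double[] springNativeMs = {79, 300, 1_000};
        for (int i = 0; i < platforms.length; i++) {
            System.out.printf("%-34s %.1fx faster%n",
                    platforms[i], speedup(springBootMs[i], springNativeMs[i]));
        }
    }
}
```

This works out to roughly 12.7x locally, 43.3x on Tencent Cloud Serverless, and 21x on AWS Lambda.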

If the Serverless cold start can be kept within 1 second, that is acceptable for most businesses. Moreover, only the first request incurs a cold start; later requests respond as quickly as they would on an ordinary microservice.

In addition, all major Serverless platforms now support pre-provisioned instances, which are created before requests arrive to reduce cold starts and improve response times.

Summary

As a cutting-edge technology, Serverless brings us many benefits:

- Elastic auto-scaling and high concurrency

- Fine-grained resource allocation

- Loose coupling

- No servers to operate and maintain

But Serverless is not perfect. When we apply it to microservices, we can still see many problems waiting to be solved.

Difficult to monitor and debug

- This is widely recognized as a pain point.

There may be more cold starts

- When we split microservices down to function granularity, calls are spread across more functions, so each function is invoked less often and therefore cold-starts more often.

Interactions between functions become more complicated

- As functions become finer-grained, the already intricate relationships between services in large microservice projects become even more complex.
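The second problem above, more cold starts after splitting, can be illustrated with a toy simulation. It assumes each function keeps a single warm instance alive for a fixed idle window after its last call and counts how often a call finds no warm instance. The 10-minute window and the request times are hypothetical; real platforms use their own keep-alive policies.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ColdStartSimulation {

    // One request: arrival time in seconds and the function it targets.
    static final class Call {
        final long timeSeconds;
        final String function;

        Call(long timeSeconds, String function) {
            this.timeSeconds = timeSeconds;
            this.function = function;
        }
    }

    // Counts cold starts assuming one instance per function that stays warm for
    // idleWindowSeconds after its most recent call. Calls must be sorted by time.
    static int countColdStarts(List<Call> calls, long idleWindowSeconds) {
        Map<String, Long> lastCall = new HashMap<>();
        int cold = 0;
        for (Call call : calls) {
            Long previous = lastCall.get(call.function);
            if (previous == null || call.timeSeconds - previous > idleWindowSeconds) {
                cold++;
            }
            lastCall.put(call.function, call.timeSeconds);
        }
        return cold;
    }

    public static void main(String[] args) {
        long window = 600; // hypothetical 10-minute keep-alive
        // The same traffic, one call every 8 minutes, served by one or two functions.
        List<Call> oneFunction = List.of(
                new Call(0, "todo"), new Call(480, "todo"),
                new Call(960, "todo"), new Call(1440, "todo"));
        List<Call> twoFunctions = List.of(
                new Call(0, "create"), new Call(480, "list"),
                new Call(960, "create"), new Call(1440, "list"));
        System.out.println("one function:  " + countColdStarts(oneFunction, window));
        System.out.println("two functions: " + countColdStarts(twoFunctions, window));
    }
}
```

With one function, every call after the first arrives within the window (1 cold start); split into two functions, each sees a gap of 16 minutes between calls, so all 4 calls are cold.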

In short, Serverless still has a long way to go before it can completely replace traditional virtual machines for microservices.

Next step

I will continue to explore the practice of serverless and microservices.

In upcoming articles, I will cover the following topics:

- Inter-service calls in Serverless

- Database access in Serverless

- Service registration and discovery in Serverless

- Circuit breaking and degradation in Serverless

- Service splitting in Serverless
