Introduction: This best practice uses an international education institution as an example to explain log service data preprocessing under a cloud-native architecture, together with the corresponding solution and a step-by-step operation manual, so that users can quickly identify and solve common log problems under a cloud-native architecture.

Go to the best practice: https://bp.aliyun.com/detail/207

Watch the video: https://yqh.aliyun.com/live/detail/23950

Alibaba Cloud best practices currently cover 23 common scenarios, with more than 200 best practices that involve more than 110 Alibaba Cloud products. They have helped a large number of customers migrate to the cloud on a self-service basis.

Speakers

- Qi Ling, Solution Architect

- Gana, Log Service Product Manager

This best practice introduces log service data preprocessing under a cloud-native architecture in three parts. The goal is to give you a deeper understanding of it so that you can apply it in your own projects to reduce costs and improve efficiency. The content is divided into the following three parts:

- Explanation of best practices

- Introduction to core product capabilities

- Scenario-based demo

1. Explanation of Best Practices

1. Data processing under cloud native

There are various definitions of cloud native. The CNCF community describes it as "microservices + containers + continuous delivery + DevOps", and different cloud vendors describe it as "born in the cloud, better than the cloud". The concepts we often hear, such as cloud-native databases, cloud-native big data, cloud-native containers, cloud-native middleware, and cloud-native security, all refer to service-oriented products that are available on the cloud and that offer a degree of elasticity that traditional offline services cannot provide.

The data processing discussed here is one of the capabilities of SLS, the cloud-native Log Service provided by Alibaba Cloud. Most readers are familiar with the data storage and query capabilities of Log Service, but may know less about the data processing and alert notification features it provides. The built-in data processing of SLS can turn all types of logs into structured data, and it is fully managed, real-time, and high-throughput. It targets the log analysis field, provides a rich set of operators, and supports out-of-the-box scenario-based UDFs (for example, for parsing Syslog, non-standard JSON, and access logs). It is also deeply integrated with Alibaba Cloud big data products (OSS, MC, EMR, ADB, and so on) and with the open source ecosystem (Flink, Spark), which lowers the threshold for data analysis.

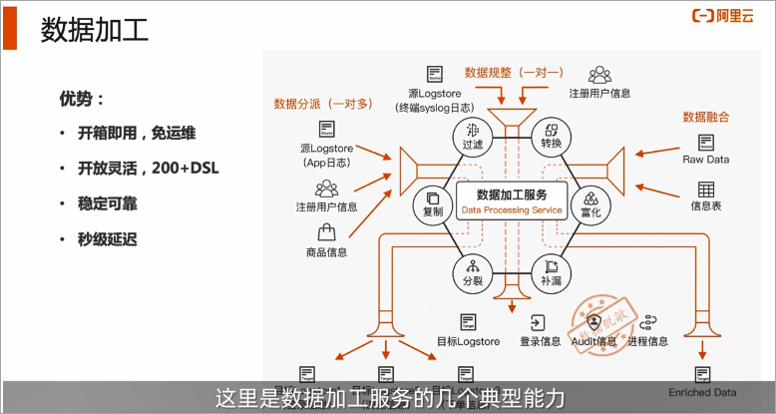

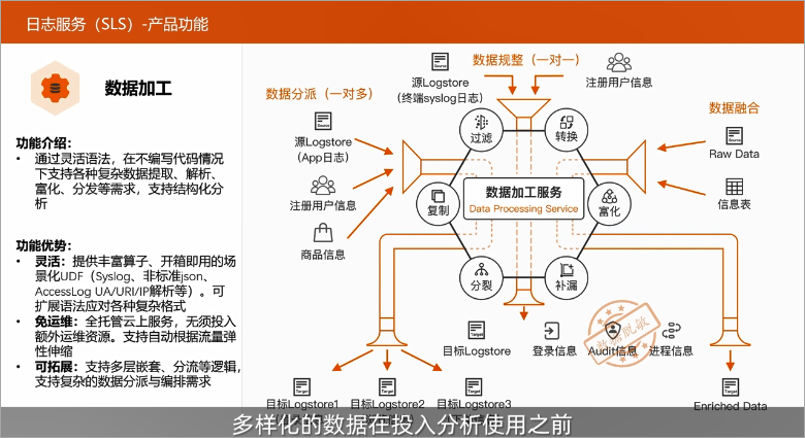

2. Typical capabilities of cloud-native data processing

The following figure shows several typical capabilities of the data processing service, including data replication, filtering, conversion, enrichment, leakage, and splitting. The overall advantages can be summarized in four points:

- Out of the box, with no operations and maintenance required

- Open and flexible, with 200+ DSL functions

- Stable and reliable

- Second-level latency

3. Typical application scenarios of cloud-native data processing

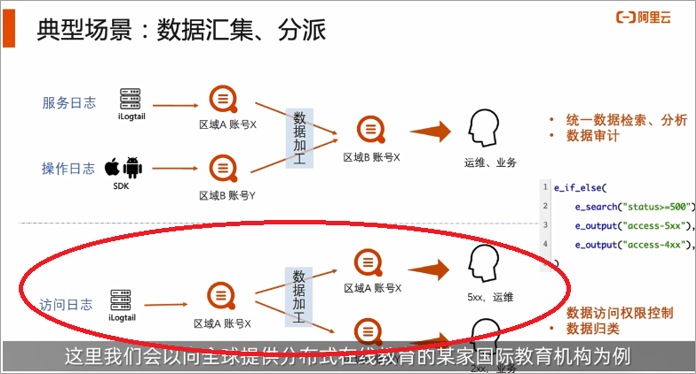

Taking an international education institution that provides distributed online education worldwide as an example, the following sections introduce several typical application scenarios for your reference.

- Typical scenario 1: Cross-regional and cross-account data collection

Assuming that the main users of this online education are concentrated in Silicon Valley, USA and Shanghai, China, in order to better provide users with personalized services, the system will collect user behavior logs and device metadata through multiple terminals (Android/IOS/Web). Device information, software version). For the sake of network proximity and stability considerations, all client logs of Silicon Valley in the US are uploaded to the Silicon Valley region of the United States, and client logs in Shanghai, China are all uploaded to the Shanghai region of China, for centralized query and management by the customer service center or operation and maintenance team. , The data of the two places will be gathered together through data processing. As shown in the upper layer of the figure below, the service logs and operation logs of cross-accounts and cross-regions are gathered together through data processing. It is worth noting that cross-regional data collection will use the public network by default, and stability cannot be guaranteed. Therefore, it is recommended to use DCDN for global acceleration. Typical scenario two: unified collection of data, distribution according to business, classification of data

The customer's business system is deployed on Alibaba Cloud Container Service ACK, and system logs are collected to Logstore through DaemonSet. For the purpose of subsequent business analysis, it is necessary to distribute the logs of different services to different Logstores through the Log Service SLS, and then each team will conduct further analysis. For example, the operation and maintenance team is more concerned about the error reported by the 5XX server; the business team is more concerned about the normal business log of the 2XX. As shown in the lower part of the figure below:Typical scenario 3: Data content enrichment (join dimension table)

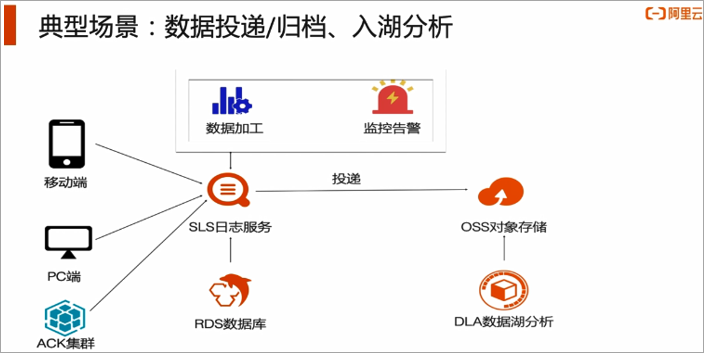

In daily work, the staff of the customer service center tastes that they need to retrieve the user's mobile terminal operation records quickly by retrieving the account ID, but the mobile terminal data and user account information are collected and stored separately and cannot be directly associated. Therefore, at the system level, it is necessary to join multi-terminal logs with dimension tables (such as user information Mysql tables) to add more dimensional information to the original log information for analysis or question answering.- Typical scenario 4: data delivery/archiving, lake entry analysis, and monitoring alarms

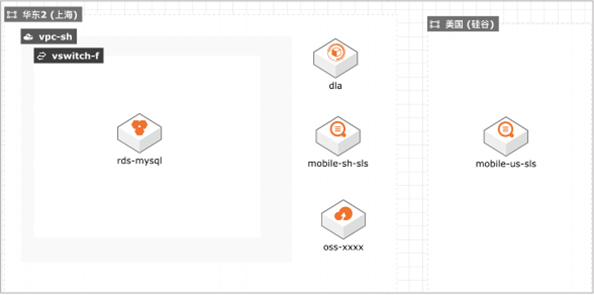

The operation department hopes to conduct further offline analysis of user behavior data, and needs to archive the data to OSS for subsequent use, such as further data mining through DLA. However, due to the inconsistent log format of different clients, the log service needs to be used to organize the data before delivery to facilitate subsequent analysis. For example, expand the json format reported by the mobile terminal, make the formatting regularization, and then post the regularized data to OSS, and then use DLA for analysis. And in this process, we can monitor the delay of the processing task. When the delay time of the processing task exceeds the set threshold, an alarm is triggered and the relevant action strategy is executed. For example, according to the length of the delay time, set different alarm severity, and set the corresponding alarm form: severe is SMS alarm, medium is mailbox alarm. Notify the corresponding operation and maintenance personnel, and by setting the noise reduction strategy, similar alarms can be merged to avoid the impact of alarm storms. In fact, the following architecture diagram is also a schematic diagram of the best practice architecture, which includes the core components involved in the plan. The follow-up will be one-click deployment through the cloud and CADT to complete the creation of basic resources.

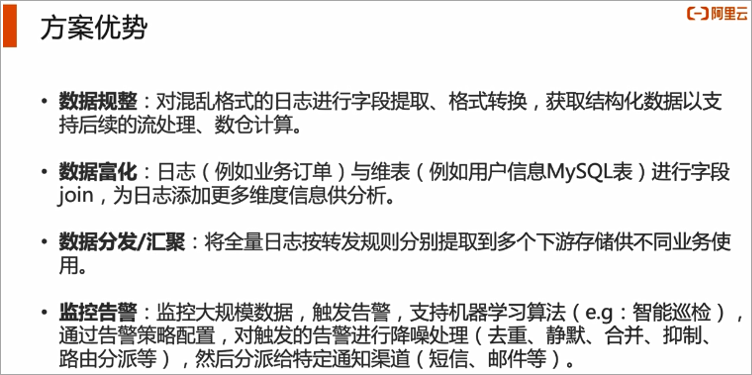
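To make the four scenarios above more concrete, here is a minimal sketch in plain Python. It is not the SLS data processing DSL and does not call any Alibaba Cloud API. The field names (`ts`, `status`, `uid`), the region IDs, the Logstore names, and the in-memory `USERS` dictionary standing in for the MySQL dimension table are all assumptions made for illustration.

```python
import heapq
import json

# Hypothetical sample logs; field names and region IDs are assumptions.
US_LOGS = [
    {"ts": 1, "region": "us-west-1", "status": 200, "uid": "u1"},
    {"ts": 4, "region": "us-west-1", "status": 502, "uid": "u2"},
]
CN_LOGS = [
    {"ts": 2, "region": "cn-shanghai", "status": 200, "uid": "u2"},
    {"ts": 3, "region": "cn-shanghai", "status": 500, "uid": "u1"},
]
# Stand-in for the user-information dimension table (e.g. a MySQL table).
USERS = {"u1": {"name": "Alice", "plan": "pro"}, "u2": {"name": "Bob", "plan": "free"}}


def aggregate(*streams):
    """Scenario 1: merge per-region streams (each sorted by ts) into one stream."""
    return list(heapq.merge(*streams, key=lambda log: log["ts"]))


def route(log):
    """Scenario 2: pick a (hypothetical) target Logstore from the status class."""
    status = log["status"]
    if 500 <= status < 600:
        return "ops-errors"
    if 200 <= status < 300:
        return "business-ok"
    return "other"


def enrich(log, dimension):
    """Scenario 3: left-join the log with the dimension table on uid."""
    extra = dimension.get(log["uid"], {})
    return {**log, **{f"user_{k}": v for k, v in extra.items()}}


def flatten(obj, prefix=""):
    """Scenario 4: expand nested JSON into flat, dot-separated keys."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


merged = aggregate(US_LOGS, CN_LOGS)
stores = {}
for entry in merged:
    stores.setdefault(route(entry), []).append(enrich(entry, USERS))

mobile_event = json.loads('{"device": {"os": "iOS", "ver": "14.2"}, "action": "play"}')
flat_event = flatten(mobile_event)
```

In this sketch, `stores` ends up with one list per target Logstore, each entry already carrying the joined user fields, and `flat_event` is the normalized form that would be delivered for offline analysis.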

4. Advantages of using cloud-native data processing solutions

Through this best practice, you can learn how to normalize data, how to enrich data, how to distribute and aggregate data, and how to configure monitoring alerts.

2. Introduction to core product capabilities

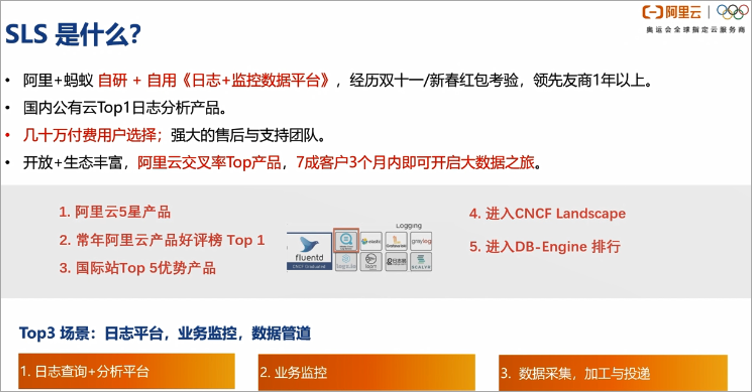

What is SLS?

In one sentence: SLS is a cloud-native observability and analysis platform that provides large-scale, low-cost, real-time platform services for data such as LOG/METRIC/TRACE. It provides one-stop data collection, processing, analysis, alerting, visualization, and delivery, and can comprehensively improve digital analysis capabilities in R&D, operations and maintenance, business operations, and security scenarios. In plain terms, logs, traces, and metrics can all be collected by SLS, processed and analyzed in SLS, and finally applied to the customer's business scenarios. The main scenarios include business monitoring, exception diagnosis, network analysis, application monitoring, and growth hacking.

SLS was incubated from the Alibaba Cloud Apsara (Feitian) monitoring system. It is a "Log + Monitoring Data Platform" developed by Alibaba and widely used both inside and outside Alibaba. It has been tested over many years by Double 11 and Chinese New Year red envelope campaigns as well as by external customers, and it is the top-ranked log analysis product on the public cloud in China.

SLS application scenarios

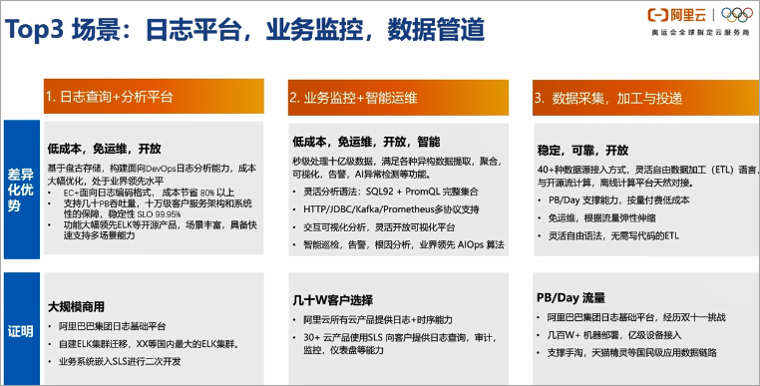

As a log product, SLS has an open product philosophy and rich ecosystem integrations. Both business logs and the audit logs of cloud products with relatively large data volumes can be connected to SLS. In addition, the delivery and consumption functions of SLS combine well with big data products to form complete solutions. Let's take a closer look at the main application scenarios of SLS. Log platform, business monitoring, and data pipeline are currently the three most common.

- Log platform

The log platform scenario is easy to understand. Any business with a user base of a certain size has business operation and system operations needs, which create the need for a log platform. Before SLS became a product, most users combined open source services, with ELK being one of the more mainstream choices. Compared with these self-built platforms, Log Service requires no operations and maintenance, costs less, and offers richer features, advantages that a self-built system cannot match.

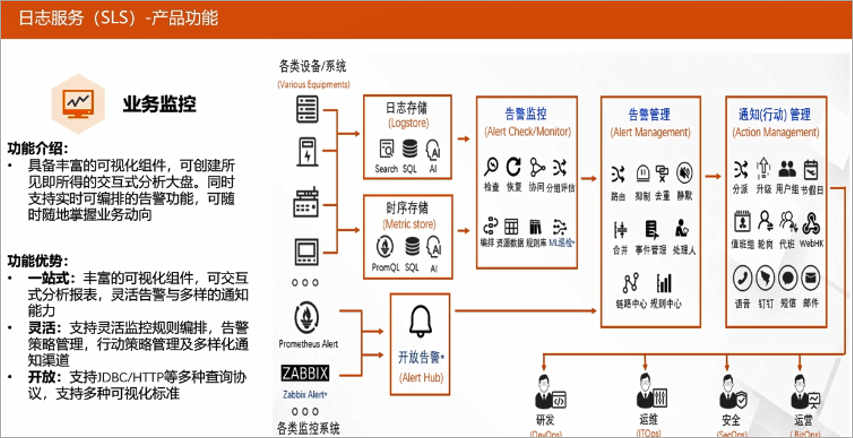

- Business monitoring

Business monitoring and intelligent operation and maintenance are actually the requirements of general scenarios in the operation and maintenance field. SLS has the ability to process billions of data in a second, and can meet the needs of various heterogeneous data extraction, aggregation, and visualization. At the same time, combined with our alarm and AI anomaly detection capabilities, it can help customers quickly build a complete monitoring and alarm system. Finally, combined with the abnormal inspection, timing prediction, root cause analysis and other capabilities provided by the log service can help users improve problems Discover and analyze positioning efficiency. - Data pipeline

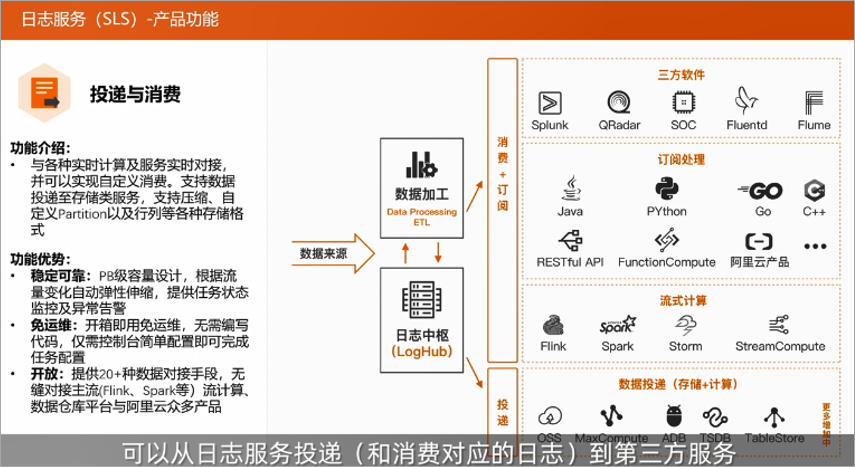

SLS has strong unified data collection capabilities and currently supports more than 40 data sources. Its data processing capability can clean and enrich data through flexible ETL, and its delivery and consumption functions connect it to mainstream stream computing and offline big data analysis platforms. Serving as the data pipeline in big data and similar solutions is therefore also one of its main scenarios.

Main functions of SLS

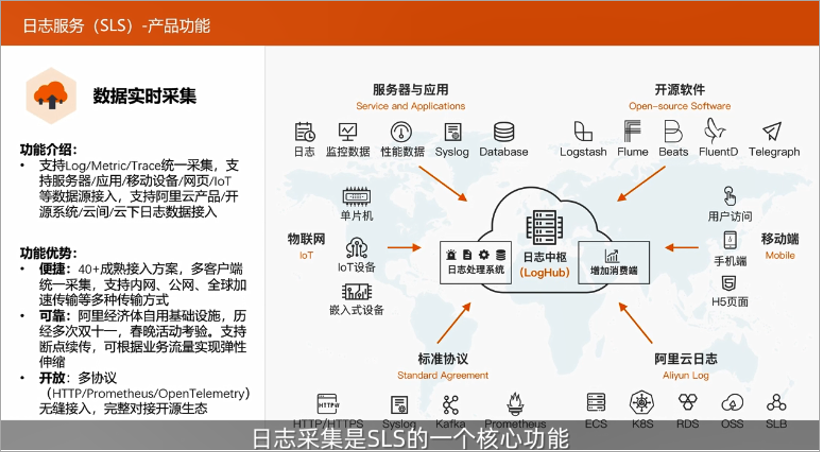

Functions include data collection, data processing, query analysis, business monitoring, log auditing, delivery and consumption.

Log collection

Log collection is a core function of SLS and a prerequisite for helping customers store and analyze logs. SLS aims to make log collection as complete as possible. Whether the data is LOG/TRACE/METRIC data, user server and application logs, mobile client logs, IoT device logs, Alibaba Cloud product logs, or logs from other scenarios, as long as it is transmitted over a standard protocol, it can be collected in a unified way through the SLS collection platform.

Data processing

After data has been collected, diverse data often needs to be normalized before it can be used. This is where data processing comes in. The data processing provided by SLS works out of the box and supports data filtering, conversion, enrichment, and splitting. To achieve this, data processing provides 200+ built-in functions, 400+ Grok patterns, and rich text processing and search operators, which can be freely combined with a small amount of code to build the required processing logic.
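The idea of freely combining small operations can be sketched as follows. This is plain Python, not the SLS DSL: the helper names `drop_if`, `set_field`, `split_on`, and `run`, as well as the event fields, are hypothetical and exist only to illustrate how filter, conversion, and split steps compose.

```python
def drop_if(predicate):
    """Filter step: discard events for which predicate(event) is true."""
    def step(event):
        return [] if predicate(event) else [event]
    return step


def set_field(name, fn):
    """Conversion step: add or overwrite a field computed from the event."""
    def step(event):
        return [{**event, name: fn(event)}]
    return step


def split_on(name, sep=","):
    """Split step: emit one event per separated value of a field."""
    def step(event):
        return [{**event, name: part.strip()} for part in str(event[name]).split(sep)]
    return step


def run(steps, events):
    """Apply the steps in order; each step maps one event to zero or more events."""
    for step in steps:
        events = [out for event in events for out in step(event)]
    return events


pipeline = [
    drop_if(lambda e: e.get("level") == "DEBUG"),
    set_field("is_error", lambda e: int(e["status"]) >= 500),
    split_on("topics"),
]

raw = [
    {"level": "DEBUG", "status": "200", "topics": "a"},
    {"level": "INFO", "status": "503", "topics": "login, video"},
]
result = run(pipeline, raw)
```

Because every step has the same shape (one event in, a list of events out), steps can be reordered or added without changing `run`, which is the property that makes this kind of processing easy to arrange and combine.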

In addition, the second-level latency, high throughput, and horizontal scalability of data processing ensure that customer tasks run reliably.

Query analysis

Through data processing, customers can turn raw logs into structured data that can then be queried and analyzed. SLS query analysis provides multiple query methods, such as keywords, standard SQL-92, and AIOps functions, and supports real-time query and analysis of text and structured data, anomaly inspection, and intelligent analysis. SLS also offers high query performance: queries over 100 million records return within seconds.
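As an illustration of what SQL analysis over structured logs looks like, the sketch below uses Python's built-in `sqlite3` module as a local stand-in. It does not use SLS or its query syntax, and the table name `access_log` and its columns are assumptions.

```python
import sqlite3

# Hypothetical structured access logs: (path, status, latency_ms).
rows = [
    ("/api/login", 200, 35),
    ("/api/login", 500, 120),
    ("/api/video", 200, 80),
    ("/api/video", 502, 300),
    ("/api/video", 200, 60),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE access_log (path TEXT, status INTEGER, latency_ms INTEGER)")
conn.executemany("INSERT INTO access_log VALUES (?, ?, ?)", rows)

# Count server errors (5XX) per request path.
error_counts = conn.execute(
    """
    SELECT path, COUNT(*) AS errors
    FROM access_log
    WHERE status >= 500
    GROUP BY path
    ORDER BY path
    """
).fetchall()
conn.close()
```

The same kind of aggregation is only possible once the raw logs have been processed into fields such as `path` and `status`, which is why data processing comes before query analysis.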

Business monitoring

The results of these queries and analyses can be turned into reports with the visualization capabilities of SLS, which makes repeated queries convenient: the SQL is written once and reused over the long term, and what you see is what you get. SLS also supports drill-down and roll-up analysis, so customers can combine reports according to their actual business needs. In addition, SLS supports flexible alert policies and joint alert monitoring across multiple data sources. It supports intelligent settings such as merging, suppression, and silencing to reduce alert storms, so that users are notified only of effective and valuable information and can keep track of business trends anytime, anywhere.
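The following sketch shows one way to think about severity levels and alert merging, using the processing-delay example from scenario 4. It is plain Python and not the SLS alerting configuration. The thresholds in `SEVERITY_RULES`, the job names, and the grouping key are assumptions chosen for illustration.

```python
from collections import defaultdict

# Hypothetical rules: (minimum delay in seconds, severity, channel),
# ordered from most to least severe.
SEVERITY_RULES = [(300, "severe", "sms"), (60, "medium", "email")]


def classify(delay_seconds):
    """Return (severity, channel) for a delay, or None when below all thresholds."""
    for threshold, severity, channel in SEVERITY_RULES:
        if delay_seconds >= threshold:
            return severity, channel
    return None


def merge_alerts(samples):
    """Group firing alerts by (job, severity, channel): one notification per group."""
    groups = defaultdict(list)
    for job, delay in samples:
        decision = classify(delay)
        if decision is None:
            continue  # delay is within the acceptable range, no alert
        severity, channel = decision
        groups[(job, severity, channel)].append(delay)
    return [
        {"job": job, "severity": severity, "channel": channel,
         "count": len(delays), "max_delay": max(delays)}
        for (job, severity, channel), delays in groups.items()
    ]


samples = [("etl-mobile", 30), ("etl-mobile", 90), ("etl-mobile", 120), ("etl-web", 400)]
notifications = merge_alerts(samples)
```

Here the two medium alerts for the same job collapse into a single email notification, which is the effect that merging is meant to have on an alert storm.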

Log audit

Log audit mainly addresses customers' security needs. It helps customers quickly ingest audit data to meet security compliance requirements such as the Cybersecurity Law and GDPR. It also connects to third-party SOCs so that the data can be reused. Log audit currently covers automatic collection of logs from all log-related cloud products, and it can automatically discover new resources in real time and collect data in real time across multiple primary accounts. Nearly a hundred built-in monitoring rules, based on CIS, best practices, and other scenarios, can be enabled with one click to detect non-compliant behavior in time.

Delivery and consumption

The delivery function ships data from Log Service to third-party services when customers need data archiving or complex data analysis. Mainstream stream computing engines and data warehouse storage are already supported. The consumption function means that after data from users' ECS instances, containers, mobile clients, open source software, JS, and other sources has been collected into SLS, users can define their own consumer groups through the SDK/API and consume the data from SLS in real time.
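The consumer group idea can be illustrated with a toy in-memory model. This is not the SLS SDK: the `ConsumerGroup` class, its `pull` and `commit` methods, and the per-shard checkpoint layout are assumptions used only to show how a checkpoint lets consumption resume where it left off.

```python
class ConsumerGroup:
    """Toy model: the group keeps one read position (checkpoint) per shard."""

    def __init__(self, shards):
        self.shards = shards  # shard id -> list of log entries
        self.checkpoints = {shard_id: 0 for shard_id in shards}

    def pull(self, shard_id, max_count=2):
        """Return the next batch after the checkpoint without advancing it."""
        start = self.checkpoints[shard_id]
        return self.shards[shard_id][start:start + max_count]

    def commit(self, shard_id, consumed):
        """Advance the checkpoint once a batch has been handled."""
        self.checkpoints[shard_id] += consumed


def consume_all(group, shard_id, handler):
    """Pull, handle, and commit batches until the shard has no new data."""
    while True:
        batch = group.pull(shard_id)
        if not batch:
            break
        for entry in batch:
            handler(entry)
        group.commit(shard_id, len(batch))


group = ConsumerGroup({0: ["a", "b", "c"], 1: ["d"]})
seen = []
consume_all(group, 0, seen.append)
```

Committing only after a batch has been handled means that a consumer that stops mid-batch will see that batch again on restart, rather than losing it.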

3. Scenario-based demo

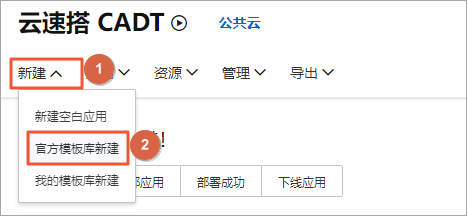

This best practice uses Cloud Architect Design Tools (CADT) to deploy the required resources. CADT is a product that provides self-service cloud architecture management for cloud applications, which significantly reduces the difficulty and time cost of managing applications on the cloud. It provides a large number of ready-made application architecture templates and also supports defining an application's cloud architecture by drag and drop. It supports the configuration and management of a large number of Alibaba Cloud services and makes it easy to manage the whole life cycle of a cloud architecture solution, including cost estimation, deployment, operations and maintenance, and resource release. The following briefly demonstrates how to build the deployment environment for this architecture.

- Log in to the CADT console.

- Click New > New from Official Template Library.

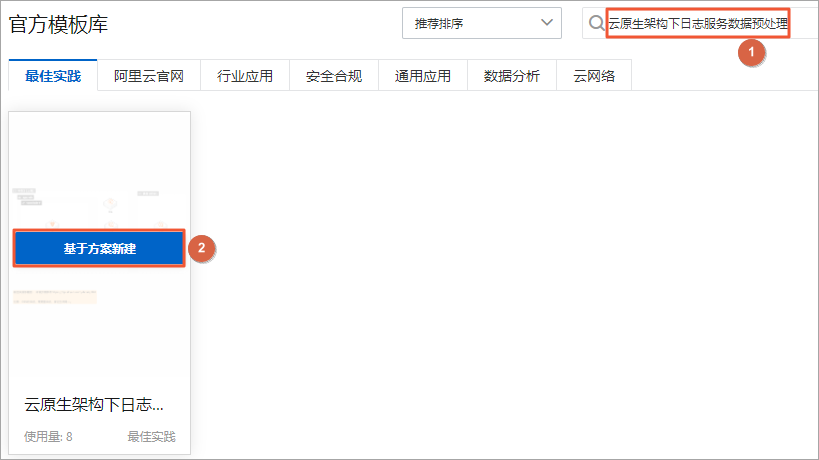

- Search for "Log service data preprocessing under cloud native architecture" in the search box, find the target template, and click to create a new application based on that solution.

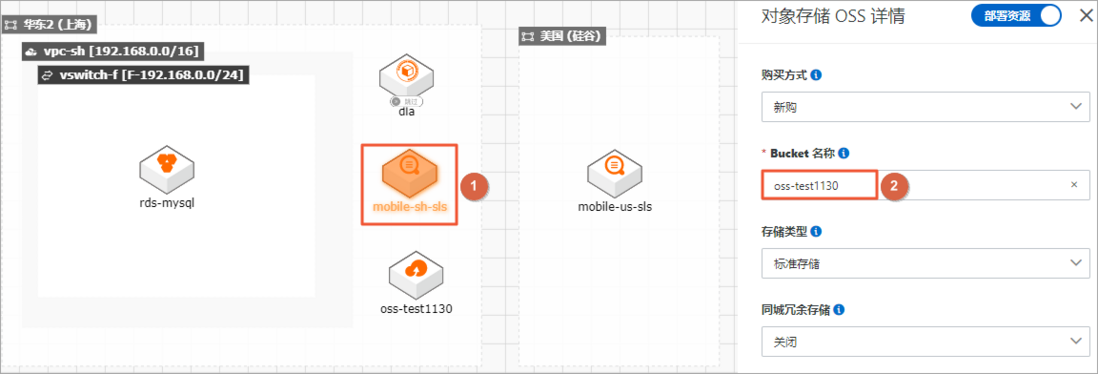

The system generates application architecture diagrams based on templates:- Double-click OSS, it needs to be renamed to ensure that it is globally unique, and other resource configurations are modified according to actual conditions.

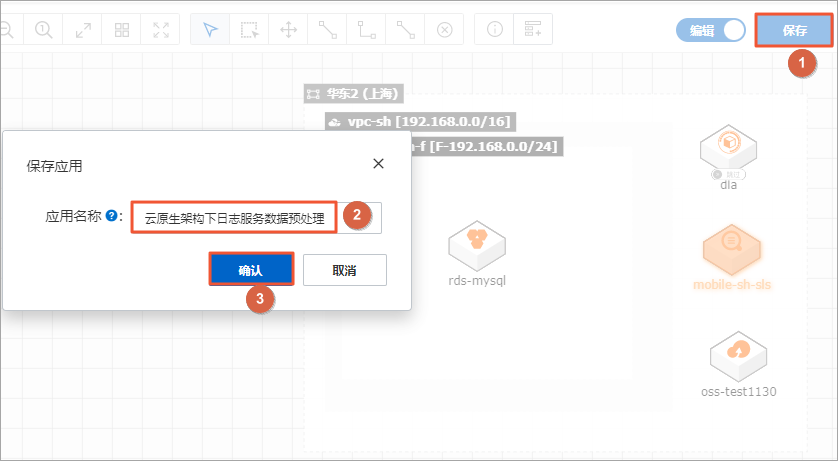

- After completing the configuration, click Save in the upper right corner, set the application name, and click Confirm.

- Then click Deploy Application.

- Follow the prompts on the interface to complete the process of resource verification, order confirmation, and order creation in sequence. After the resource is successfully deployed, you can click each resource under the resource name column to view it.

For the complete build demonstration, see the best practice document at the link below, which contains the best practice scenarios and the full build process.

Go to the best practice: https://bp.aliyun.com/detail/207

