1. Background

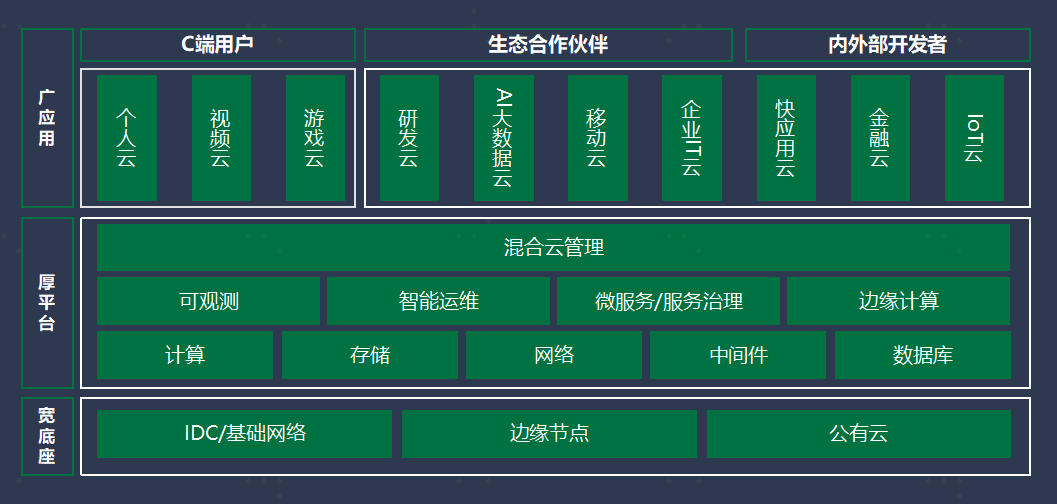

Starting in 2019, OPPO spent two years building the company's hybrid cloud with K8S and containerization at its core, and moved 100% of its online business to the cloud. OPPO's business currently covers China, South Asia, Europe, and the Americas. We run our own data centers in China, and we cooperate more with public clouds such as AWS and Google. OPPO Cloud is a cloud built on top of clouds: our cooperation with public clouds is mostly about purchasing machine resources and deploying our own services on them. OPPO Cloud has saved the company hundreds of millions in costs and has been widely recognized internally.

At present, OPPO's data platform has nearly 10,000 computing resources, nearly 1 EB of storage, nearly one million offline tasks, and thousands of real-time tasks. Over the past few years it has grown by about 30% per year on average. At this rate of growth, we must still guarantee a system SLA of three 9s and 100% on-time task completion. At the same time, the company expects the data platform to bring down the costs that came with that rapid growth. So the problem we must solve is how to further reduce costs and increase efficiency while the business grows quickly and the system SLA and task punctuality stay at a high level.

Our solution is a series of technical upgrades in three areas: batch-stream integration, cloud-data integrated scheduling, and data lake storage.

2. Batch-stream integration

As shown in the figure above, the upper part is a typical Lambda architecture. The batch and streaming computing links are separate, and so is their metadata. The multiple OLAP engines on the application side also each have their own storage methods and metadata management.

Batch-stream integration is usually understood as unification in three areas: metadata, storage, and engine. OPPO focuses on the first two. To unify metadata, we build on the HMS (Hive Metastore) service and strengthen it with Waggle-Dance. To unify storage, we introduced Iceberg to break down the storage boundary between the real-time data warehouse and offline data, while also improving the timeliness of the data warehouse.

In the past two years, data lake table formats represented by Delta, Hudi, and Iceberg have become very popular in the open source community. Their near real-time behavior, ACID semantics, and snapshot-based backtracking have attracted many developers. As mentioned above, OPPO chose Iceberg, mainly for its near real-time support for CDC. However, when we promoted it to the business, the near real-time feature turned out to be of limited appeal. In offline scenarios, hourly tasks already meet the timeliness requirements of most use cases. In real-time scenarios, downgrading to near real time lowers the cost, but the business finds the added delay hard to accept.

To make Iceberg real-time, we made some technical improvements to it, introduced below through two scenarios.

Scenario 1: database CDC data entering the lake, which requires support for data changes. In big data, the common approach to real-time writes is an LSM structure. We therefore introduced Parker into the architecture, a distributed KV store built on LSM, which buffers data in front of Iceberg. Introducing a KV store also gives better support for upserts based on the primary key.
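The buffering idea in Scenario 1 can be sketched roughly as follows. This is a minimal illustration and not Parker's actual API: the class `UpsertBuffer`, its `apply` and `flush` methods, and the flush threshold are all hypothetical names and values. It only shows how keeping the latest change per primary key in a memtable turns a stream of CDC events into key-sorted batches that could then be committed to a lake table.

```python
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical illustration only: this is not Parker's API.
Row = Dict[str, Any]


class UpsertBuffer:
    """In-memory buffer that keeps only the latest change per primary key."""

    def __init__(self, flush_threshold: int = 3) -> None:
        self.flush_threshold = flush_threshold
        # key -> latest row, or None as a tombstone for a delete
        self.memtable: Dict[Any, Optional[Row]] = {}
        # Each flushed batch stands in for one commit to the lake table.
        self.flushed_batches: List[List[Tuple[Any, Optional[Row]]]] = []

    def apply(self, op: str, key: Any, row: Optional[Row] = None) -> None:
        """Apply one CDC event: 'insert', 'update' or 'delete'."""
        if op == "delete":
            self.memtable[key] = None
        elif op in ("insert", "update"):
            self.memtable[key] = row
        else:
            raise ValueError(f"unknown CDC operation: {op}")
        # Flush once the number of distinct buffered keys hits the threshold.
        if len(self.memtable) >= self.flush_threshold:
            self.flush()

    def flush(self) -> None:
        """Emit buffered changes as one key-sorted batch, as an LSM flush would."""
        if not self.memtable:
            return
        batch = sorted(self.memtable.items(), key=lambda kv: kv[0])
        self.flushed_batches.append(batch)
        self.memtable = {}
```

Because repeated updates to the same key overwrite each other in the memtable, the downstream table sees one change per key per batch rather than every intermediate event.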

Scenario 2: data reported from tracking points (event instrumentation) on mobile phones. The daily reporting volume is very large, at the trillion scale, and this link consumes a lot of Kafka resources. In our real-time data warehouse link, Kafka's data retention period is T+1 to T+3 days. Here we use Iceberg, with its more efficient columnar storage, to lower Kafka's storage water level and shorten Kafka's retention period to T+3 hours. This preserves real-time performance while reducing Kafka storage costs.

3. Cloud-data integrated scheduling

OPPO's computing power grows by 30% a year. According to our assessment, there will be a computing power gap of 8w (80,000) in 2022. Is there a way to fill that gap without purchasing more machines? Those with platform experience know that online business usually peaks during the day, while offline computing often fills up the cluster's computing resources at night and runs at only around 50% load during the day. Tidal scheduling, which merges online and offline computing power, is therefore our inevitable choice.

To merge the computing power, we did not go fully cloud native, with K8S scheduling all computing resources. YARN's scheduling logic is simpler than that of K8S, so it is much more efficient in big data scenarios where task resources are frequently released and reclaimed. We therefore chose a YARN+K8S approach and implemented yarn-operator on K8S. During big data task peaks, K8S releases resources to the big data cluster, and after the peak they are automatically reclaimed.
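As a rough sketch of the tidal idea, the function below decides how many K8S nodes are lent to the YARN cluster at a given time of day. It is an assumption-laden illustration, not the yarn-operator implementation: the peak window (00:00 to 08:00) and the function name `nodes_lent_to_yarn` are hypothetical, since the article does not give the real schedule.

```python
from datetime import time

# Assumed values for illustration; the article does not state the real window.
OFFLINE_PEAK_START = time(0, 0)
OFFLINE_PEAK_END = time(8, 0)


def nodes_lent_to_yarn(now: time, lendable_nodes: int) -> int:
    """Return how many K8S nodes are lent to the YARN cluster at `now`.

    During the offline peak window all lendable nodes go to YARN;
    outside the window they are reclaimed for online services.
    """
    in_peak = OFFLINE_PEAK_START <= now < OFFLINE_PEAK_END
    return lendable_nodes if in_peak else 0
```

For example, `nodes_lent_to_yarn(time(2, 30), 100)` lends all 100 nodes, while the same call at `time(14, 0)` lends none.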

With cloud-data integrated scheduling, you may worry whether the computing power released by containers performs well enough for big data computing. Here are some of our tests, comparing physical machines, SSD containers, SATA containers, and containers on VMs. Under the same configuration, physical machines perform best, followed by SSD containers and then SATA containers, while containers on VMs drop off drastically. The performance loss of SSD and SATA containers is therefore acceptable.

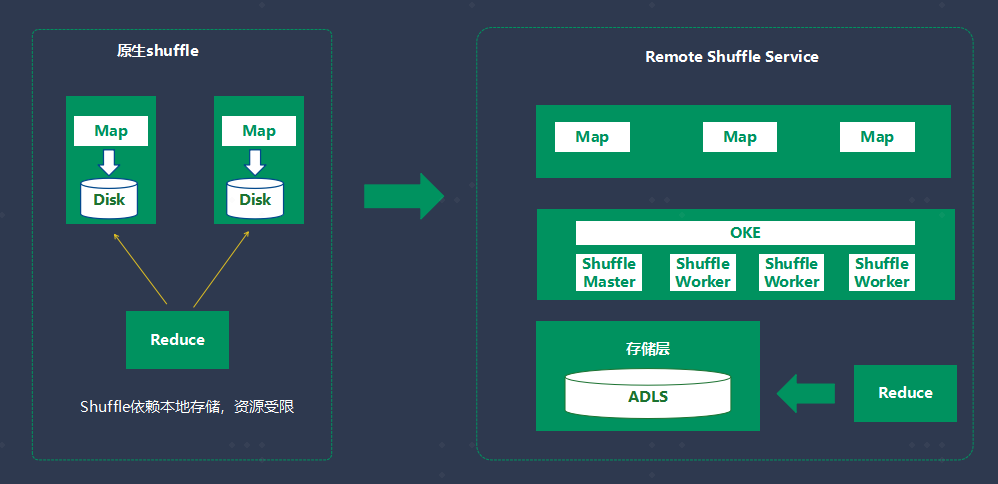

With cloud-data integrated scheduling, everyone usually faces one problem: how to keep the computing engine's Shuffle stable and efficient. We use OPPO's self-developed Shuffle Service. Its original purpose was to reduce the Shuffle failure rate of large tasks. After our platform started billing for computation, users would from time to time ask us to reduce their computation fees because of Shuffle failures. Tasks that fail in Shuffle are usually not small, and the cost may be hundreds of thousands of dollars. From the platform's perspective, we of course do not want tasks to fail and fees to be waived as a result. In cloud-data integration, the Shuffle Service also plays a very useful role: the remote Shuffle service effectively reduces the pressure on local storage and keeps computation stable while resources scale out and in.

Here is some test data for the Shuffle Service, from a TPC-H run on 1 TB of data. The Shuffle Service does not improve the execution efficiency of every SQL task, but for large tasks such as Q16 and Q21 the improvement is very obvious. On average, the improvement is about 16%.

4. Data Lake Storage

OPPO's data storage has gone through three stages.

Phase 1: Full HDFS storage. Phase 2: Introduce object storage. Phase 3: Self-developed ADLS data lake storage.

The move from phase one to phase two was mainly about setting a life cycle for data through the data asset platform: once data reaches a certain age, it is automatically migrated to object storage as cold data. The move from phase two to phase three unifies file and object storage; the upgraded file system removes the metadata bottleneck and stores data in hot, warm, and cold tiers. The key technologies of the self-developed storage include multi-protocol adaptation, distributed metadata, flat namespace acceleration, and multi-level caching. Let me introduce them one by one.

The file system provides a hierarchical namespace view, in which the logical directory tree of the whole file system is divided into multiple layers. As shown in the figure above, each metadata node (MetaNode) contains hundreds of metadata shards (MetaPartition), and each shard consists of an InodeTree (BTree) and a DentryTree (BTree). Each dentry represents one directory entry and is made up of a parentId and a name. The DentryTree is indexed by parentId and name for storage and retrieval; the InodeTree is indexed by inode id.

The multi-Raft protocol ensures high availability and consistent data replication. Each node set contains a large number of shard groups, and each shard group corresponds to one Raft group. Each shard group belongs to a particular volume and covers one range of that volume's metadata (a range of inode ids). The metadata subsystem expands dynamically by splitting: when the resources (performance, capacity) of a shard group approach their threshold, the resource manager service estimates an end point and notifies that group of nodes to serve only the data before this point. At the same time, a new group of nodes is selected and dynamically added to the running system.
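The indexing and splitting described above can be sketched in a few lines. This is a simplified model under stated assumptions, not the real metadata service: plain dictionaries stand in for the BTrees, Raft replication is omitted, the class and method names (`MetaPartition`, `Volume`, `split`, `create`, `lookup`) are hypothetical, and placing a dentry in the partition that owns its parent inode is an assumption made for the illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class MetaPartition:
    """One metadata shard covering inode ids in [start, end]; end=None is open-ended."""
    start: int
    end: Optional[int] = None
    # Plain dicts stand in for the BTrees described in the text.
    inode_tree: Dict[int, dict] = field(default_factory=dict)
    dentry_tree: Dict[Tuple[int, str], int] = field(default_factory=dict)

    def covers(self, inode_id: int) -> bool:
        return inode_id >= self.start and (self.end is None or inode_id <= self.end)


class Volume:
    def __init__(self) -> None:
        # A volume starts with a single open-ended partition.
        self.partitions: List[MetaPartition] = [MetaPartition(start=1)]

    def partition_for(self, inode_id: int) -> MetaPartition:
        for p in self.partitions:
            if p.covers(inode_id):
                return p
        raise KeyError(inode_id)

    def split(self, end_point: int) -> MetaPartition:
        """Cap the last partition at end_point and open a new one after it."""
        self.partitions[-1].end = end_point
        new = MetaPartition(start=end_point + 1)
        self.partitions.append(new)
        return new

    def create(self, parent_id: int, name: str, inode_id: int) -> None:
        # The inode goes to the partition owning its id; the dentry is stored
        # with the parent directory's partition (an assumption of this sketch).
        self.partition_for(inode_id).inode_tree[inode_id] = {"id": inode_id}
        self.partition_for(parent_id).dentry_tree[(parent_id, name)] = inode_id

    def lookup(self, parent_id: int, name: str) -> int:
        """Resolve one directory entry by its (parentId, name) index."""
        return self.partition_for(parent_id).dentry_tree[(parent_id, name)]
```

After `split(100)`, the first partition serves only inode ids up to 100, and newly allocated ids from 101 onward land in the new partition, which mirrors the "end point" behavior in the text.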

A single directory supports millions of entries, and the metadata is held entirely in memory to ensure excellent read and write performance. The in-memory metadata shards are persisted to disk through snapshots for backup and recovery.

Object storage provides a flat namespace. For example, to access the object whose ObjectKey is /bucket/a/b/c, the system starts from the root directory, parses the key layer by layer on the "/" separator, finds the Dentry of the last directory (/bucket/a/b), and finally finds the Inode of /bucket/a/b/c. This process involves multiple interactions between nodes, and the deeper the path, the worse the performance. We therefore introduced the PathCache module to accelerate ObjectKey resolution. The simple approach is to cache the Dentry of the ObjectKey's parent directory (/bucket/a/b) in PathCache. Analyzing online clusters, we found that a directory holds about 100 entries on average. Assuming a storage cluster holds on the order of 100 billion objects, there are only about 1 billion directory entries to cache, so single-machine caching is very efficient, and read performance can be improved further by adding nodes.

By supporting both "flat" and "hierarchical" namespace management in one design, CBFS is more concise and more efficient than other systems in the industry. A single copy of data can be accessed and shared through multiple protocols without any conversion, and there is no data consistency problem.
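The effect of caching the parent directory can be illustrated with a small model. It is a sketch only: the `Namespace` class, its `lookups` counter (standing in for metadata round trips between nodes), and the use of a plain dictionary as the path cache are assumptions for illustration, not the real PathCache module.

```python
from typing import Dict, Tuple

ROOT_INODE = 1


class Namespace:
    def __init__(self) -> None:
        self.dentries: Dict[Tuple[int, str], int] = {}  # (parentId, name) -> inode id
        self.path_cache: Dict[str, int] = {}  # parent directory path -> inode id
        self.lookups = 0  # counts simulated metadata round trips

    def _lookup(self, parent_id: int, name: str) -> int:
        self.lookups += 1
        return self.dentries[(parent_id, name)]

    def _resolve_dir(self, dir_path: str) -> int:
        """Walk the directory path layer by layer from the root."""
        inode = ROOT_INODE
        for part in dir_path.strip("/").split("/"):
            if part:
                inode = self._lookup(inode, part)
        return inode

    def resolve(self, object_key: str) -> int:
        """Resolve an object key, caching the inode of its parent directory."""
        parent_path, _, name = object_key.rstrip("/").rpartition("/")
        parent_inode = self.path_cache.get(parent_path)
        if parent_inode is None:
            parent_inode = self._resolve_dir(parent_path)
            self.path_cache[parent_path] = parent_inode
        return self._lookup(parent_inode, name)
```

In this model the first access to /bucket/a/b/c costs four lookups, while a later access to a sibling such as /bucket/a/b/d costs one, because the parent directory is already cached.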

One significant benefit of the data lake architecture is cost savings, but an architecture that separates storage from compute also runs into bandwidth bottlenecks and performance challenges. We therefore provide a series of access acceleration technologies:

1. Multi-level cache capability

The first level cache: a local cache deployed on the same machine as the computing node. It caches both metadata and data and supports different media such as memory, PMem, NVMe, and HDD. It offers low access latency but limited capacity.

The second level cache: a distributed cache with a flexible, variable number of replicas. It provides location awareness, supports active warm-up and passive caching at the user, bucket, and object level, and has a configurable data eviction policy.

The multi-level caching strategy has a good acceleration effect in our machine learning training scenarios.
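The read path through the two cache levels can be sketched as below. This is a minimal illustration under assumptions: the `TwoLevelCache` class and its methods are hypothetical, the local level uses a simple LRU eviction policy chosen for the example, and the distributed level is modeled as an unbounded dictionary without replicas or location awareness.

```python
from collections import OrderedDict
from typing import Callable, Dict, Tuple


class TwoLevelCache:
    def __init__(self, local_capacity: int,
                 fetch_from_storage: Callable[[str], bytes]) -> None:
        self.local: "OrderedDict[str, bytes]" = OrderedDict()  # level 1, small
        self.local_capacity = local_capacity
        self.distributed: Dict[str, bytes] = {}  # level 2, larger
        self.fetch_from_storage = fetch_from_storage

    def _put_local(self, key: str, value: bytes) -> None:
        self.local[key] = value
        self.local.move_to_end(key)
        # Evict least recently used entries once over capacity.
        while len(self.local) > self.local_capacity:
            self.local.popitem(last=False)

    def warm_up(self, key: str) -> None:
        """Active warm-up: load an object into the distributed cache ahead of reads."""
        self.distributed[key] = self.fetch_from_storage(key)

    def get(self, key: str) -> Tuple[bytes, str]:
        """Return the value and the level that served it."""
        if key in self.local:
            self.local.move_to_end(key)
            return self.local[key], "local"
        if key in self.distributed:
            value = self.distributed[key]
            self._put_local(key, value)
            return value, "distributed"
        value = self.fetch_from_storage(key)
        self.distributed[key] = value  # passive caching on a miss
        self._put_local(key, value)
        return value, "storage"
```

A repeated read of the same object is served from the local level, and an object evicted locally can still be served from the distributed level without touching the backing store.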

2. Predicate pushdown operation

The storage data layer also supports predicate pushdown, which can significantly reduce the amount of data flowing between storage and computing nodes, lowering resource overhead and improving computing performance.
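To make the benefit concrete, the toy model below counts how many rows leave a storage node with and without pushdown. The `StorageNode` class, its `scan` method, and the `rows_sent` counter are hypothetical and exist only for this illustration; a real storage layer would evaluate predicates against file formats and statistics rather than Python dictionaries.

```python
from typing import Callable, Dict, Iterable, List, Optional

Row = Dict[str, int]
Predicate = Callable[[Row], bool]


class StorageNode:
    def __init__(self, rows: Iterable[Row]) -> None:
        self.rows: List[Row] = list(rows)
        self.rows_sent = 0  # rows transferred to the computing node

    def scan(self, predicate: Optional[Predicate] = None) -> List[Row]:
        """Return rows, filtering on the storage side when a predicate is pushed down."""
        result = [r for r in self.rows if predicate is None or predicate(r)]
        self.rows_sent += len(result)
        return result


def query_without_pushdown(node: StorageNode, predicate: Predicate) -> List[Row]:
    # All rows cross the network; the computing node filters them.
    return [r for r in node.scan() if predicate(r)]


def query_with_pushdown(node: StorageNode, predicate: Predicate) -> List[Row]:
    # Only matching rows cross the network.
    return node.scan(predicate)
```

Both functions return the same rows; only the number of rows transferred differs.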

There is still a lot of detailed work to accelerate the data lake, and we are also in the process of continuous improvement.

5. Outlook

Finally, combined with the three technical directions just mentioned, let's talk about some of our future plans and prospects.

With batch-stream integrated computing, we have achieved unified metadata and unified storage. A unified computing engine is a direction worth exploring. Although computing engines represented by Flink keep claiming to achieve batch-stream integration, in practice a system that tries to do too much often cannot excel in every direction. I have reservations about a unified computing engine, but I do not rule out exploring it. Personally, I prefer a common layer above the batch, stream, and interactive computing engines that shields users from the adaptation costs of the different engines, rather than complete unification at the engine layer.

Cloud-data integrated scheduling aims at resource elasticity, which is currently implemented mainly through a timing mechanism. Because we know the patterns of business resource utilization, we can configure them as rules in our elasticity policy. However, elastic scheduling should be more agile and flexible: the system should sense load conditions and release and reclaim resources automatically. In daily business, large-scale task reruns and sudden increases in tasks are common, and in those cases a flexible, autonomous scaling policy would serve our business better.
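One possible shape for such a load-aware policy is sketched below. It is purely illustrative: the function name `next_lent_nodes`, the utilization thresholds, and the step size are assumptions, not values from our platform. The gap between the two thresholds keeps the policy from flapping when the load hovers around a single cut-off.

```python
def next_lent_nodes(current_lent: int, total_lendable: int, online_util: float,
                    step: int = 10, low: float = 0.4, high: float = 0.7) -> int:
    """Decide how many nodes to lend to offline computing in the next interval.

    online_util is the measured utilization of the online cluster (0.0 to 1.0).
    Thresholds and step size are assumed values for illustration.
    """
    if online_util < low:
        # Online load is light: lend more nodes, up to the limit.
        return min(total_lendable, current_lent + step)
    if online_util > high:
        # Online load is heavy: reclaim nodes for online services.
        return max(0, current_lent - step)
    # In between: hold steady.
    return current_lent
```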

In terms of storage, the hot, warm, and cold tiering mentioned above still has to be defined by the user: for example, after how long the data of a certain fact table moves to cold storage, or whether a certain dimension table always needs hot cache acceleration. As the business changes, cold data may become hot again, which also requires manual parameter adjustments. In fact, data can be classified as hot, warm, or cold and moved between tiers automatically by algorithms based on dynamically monitored metrics, so that different data uses different storage media and the overall storage cost is more reasonable.
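A very simple version of such automatic classification might look like the following. The metrics used (`accesses_last_7d`, `days_since_last_access`), the thresholds, and the function name `choose_tier` are all hypothetical; a production system would likely learn or tune these from monitoring data rather than hard-code them.

```python
def choose_tier(accesses_last_7d: int, days_since_last_access: int,
                hot_min_accesses: int = 100, warm_max_idle_days: int = 30) -> str:
    """Classify a dataset as 'hot', 'warm' or 'cold' from access statistics.

    Thresholds are assumed values for illustration only.
    """
    if accesses_last_7d >= hot_min_accesses:
        return "hot"
    if days_since_last_access <= warm_max_idle_days:
        return "warm"
    return "cold"
```

Re-running the classification periodically lets cold data that becomes popular again move back to a faster tier without manual parameter changes.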

Finally, we will continue to integrate the data platform with the cloud. Snowflake, widely discussed in recent years, is a representative example of moving the data warehouse to the cloud and supporting multi-cloud deployment. By grafting our products and capabilities onto the cloud, we can offer our services more widely.

Author profile

Keung Chau, Head of Data Architecture at OPPO

Responsible for the construction and technological evolution of OPPO data platform.

