Introduction

ClickHouse cluster data at the time of writing, although by Distributed engine sharding_key specified policy, so as to ensure a certain degree of equilibrium data, but this is not the final solution.

For example, the rand() balance strategy can ensure the relative balance of the data, but it may destroy the internal business logic of the data. As a simple example, we want to kafka data is written clickhouse cluster, if rand() strategy, it may be the same partition data split into clickhouse cluster different shard , the data for subsequent analysis resulted in Certainly trouble.

Although there are clickhouse-sinker , which can achieve the balance of data import, once the cluster expands the nodes, it still cannot balance the stock data to the newly added nodes. As a result, the data of the existing nodes is still a lot, and the data of the newly-added nodes is relatively small, and it cannot play a good role in load balancing.

Discussion on Data Balancing Scheme

When discussing data balancing solutions, we first need to clarify two prerequisites:

- For the

clickhousecluster, not a single point - For

MergeTreefamily (data tables of other engines cannot be read and written through distributed tables, so they do not have the meaning of data balancing)

We know that the clickhouse is completely columnar, which means that partition (although it can be done, it is more troublesome). Therefore, the most scientific plan for data balancing is to use partition as the unit, and the entire partition is relocated. This means that the smaller the granularity of the partition, the closer the final data is to equilibrium.

Another issue we have to think about is that if we have been writing data to one of the partitions, we cannot get the actual size of the partition (because it is constantly changing). Then, if the partition data is also parameter data balanced, the partition that participates in the balance may not be a complete partition, which will cause the partition data to be broken up, causing unpredictable problems. Therefore, we hope that the latest partition will not participate in the calculation of data balance.

How can I get the latest partition? In fact, you can query SQL

SELECT argMax(partition, modification_time) FROM system.parts WHERE database='?' AND table='?'The above SQL query is the latest partition of the specified database table. Excluding this partition, the remaining partitions are all partitions that can participate in data balancing.

Another core question is how to move partition data between different nodes? We can easily think of attach and detach , but attach and detach is that we must set the current operating user allow_drop_detached flag 1 . For clusters with replicas, we can zookeeper easily transfer the partition data to fetch between different nodes through the path of 061b406343c1eb.

-- 在目标节点执行

ALTER TABLE {{.tbname}} FETCH PARTITION '{{.partition}}' FROM '{{.zoopath}}'

ALTER TABLE {{.tbname}} ATTACH PARTITION '{{.partition}}'

-- 在原始节点执行

ALTER TABLE {{.tbname}} DROP PARTITION '{{.partition}}'But for non-replica clusters, it is not so simple. Because we cannot know zoopath , fetch . Therefore, we can only use physical means to transmit the data (such as scp , rsync etc.) to the designated node. Considering the transmission efficiency, here we use rsync .

-- 原始节点执行

ALTER TABLE {{.tbname}} DETACH PARTITION '{{.partition}}'# 原始节点执行

rsync -e "ssh -o StrictHostKeyChecking=false" -avp /{{.datapath}}/clickhouse/data/{{.database}}/{{.table}}/detached dstHost:/{{.datapath}}/clickhouse/data/{{.database}}/{{.table}}/detached

rm -fr /{{.datapath}}/clickhouse/data/{{.database}}/{{.table}}/detached-- 目标节点执行

ALTER TABLE {{.tbname}} ATTACH PARTITION '{{.partition}}'

-- 原始节点执行

ALTER TABLE {{.tbname}} DROP DETACHED PARTITION '{{.partition}}'However, there is a prerequisite for the method of rsync rsync tool must be installed on each node first. If it is not installed, you can install it through the following command:

yum install -y rsyncSecondly, it is necessary to configure the mutual trust between the nodes (mainly the mutual trust between the node of moveout the node of movein , but in fact, we don't know how the data moves between nodes, so it is best to configure them all).

After the above problems are solved, the core problem is left. How is the data balanced?

What needs to be explained here is that because it is partition , it is impossible to achieve absolute balance, but can only achieve relative data balance. partition , the more accurate the balance.

A more scientific solution is to arrange the partition data of each node according to the size, move the largest node data to the smallest node, move the second largest node to the second smallest node, and so on, continue to the middle Move closer until a certain threshold is met, then no longer move.

The code implementation of this section has been ckman project. If you are interested, you can read the source code through the following link: ckman:rebalancer .

Therefore, it is not difficult to find that in the process of data balancing, the partitioned data may have been

detach, but it has not had time toattachon the new node. At this time, there may be a certain probability of inaccuracy when querying.Therefore, in the process of data balancing, it is best not to query operations.

The insert operation is not affected, because we have excluded the latest partition from participating in the equalization operation.

How ckman achieves data balance

ckman as a visualization tool for managing and monitoring the ClickHouse cluster, naturally integrates the function of data balancing. Just click the "Balance Cluster" button on the cluster management page to achieve data balancing operations.

At the same time, ckman also provides a command line data balancing tool rebalancer , the parameters of which are as follows:

-ch-data-dir

clickhouseCluster Data Directory

-ch-hosts

- Node list (only one for

shardshard, it is not necessary to list all of them)

-ch-password

clickhouseuser password

-ch-port

clickhouseport ofTCP, default9000

-ch-user

clickhousedefault, use the user 061b406343c529 for interface operation

-os-password

- The node's

sshlogin password (required for non-copy mode)

-os-port

- The node's

sshport, the default is22(required in non-replica mode)

-os-user

sshuser of the node (required in non-replica mode)

like:

rebalancer -ch-data-dir=/var/lib/ --ch-hosts=192.168.0.1,192.168.0.2,192.168.0.3 --ch-password=123123 --ch-port=9000 --ch-user=default --os-password=123456 --os-port=22 --os-user=rootPractical case

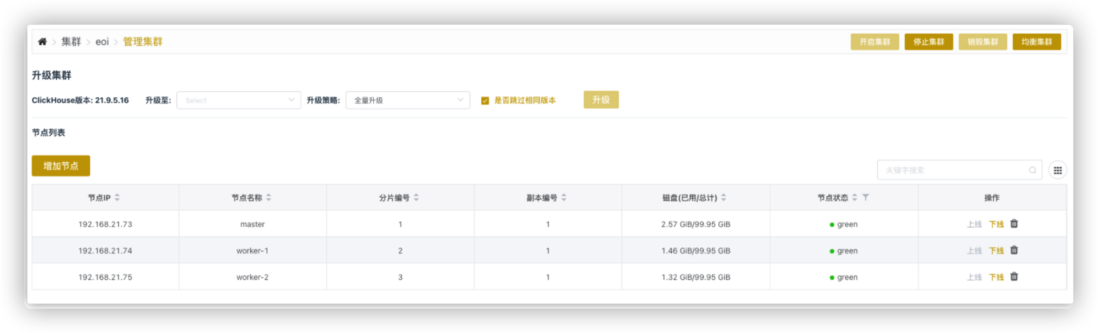

We ckman preparation of a named eoi cluster, the cluster has three nodes, respectively 192.168.21.73 , 192.168.21.74 , 192.168.21.75 , a copy of the non-cluster mode.

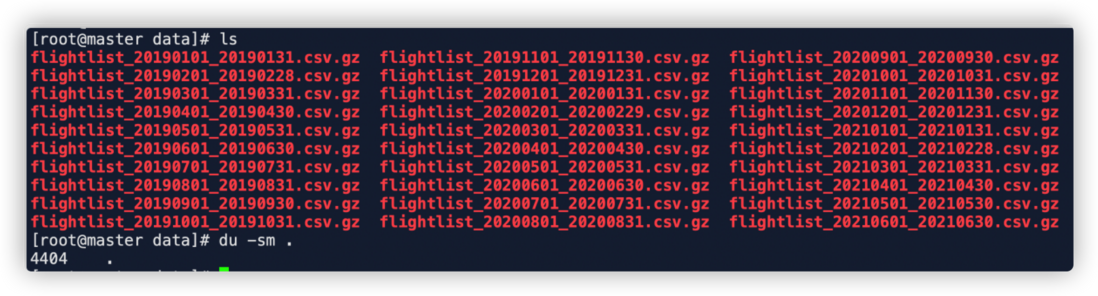

We import the following data from the data set given in the official document: https://clickhouse.com/docs/en/getting-started/example-datasets/opensky/

The data is a total of 30 months of aviation data from January 2019 to May 2021. In order to show the data balance more intuitively, this article fine-tunes the official table building statement, partitions them by month, and divides them in each cluster. Create tables for all nodes:

CREATE TABLE opensky ON CLUSTER eoi

(

callsign String,

number String,

icao24 String,

registration String,

typecode String,

origin String,

destination String,

firstseen DateTime,

lastseen DateTime,

day DateTime,

latitude_1 Float64,

longitude_1 Float64,

altitude_1 Float64,

latitude_2 Float64,

longitude_2 Float64,

altitude_2 Float64

) ENGINE = MergeTree

PARTITION BY toYYYYMM(day)

ORDER BY (origin, destination, callsign);And create a distributed table:

CREATE TABLE dist_opensky ON CLUSTER eoi AS opensky

ENGINE = Distributed(eoi, default, opensky, rand())Download data:

wget -O- https://zenodo.org/record/5092942 | grep -oP 'https://zenodo.org/record/5092942/files/flightlist_\d+_\d+\.csv\.gz' | xargs wgetThe data download is about 4.3G .

Use the following script to import data to one of the nodes:

for file in flightlist_*.csv.gz; do gzip -c -d "$file" | clickhouse-client --password 123123 --date_time_input_format best_effort --query "INSERT INTO opensky FORMAT CSVWithNames"; doneAfter the import is complete, check the data of each node as follows:

-- 总数据

master :) select count() from dist_opensky;

SELECT count()

FROM dist_opensky

Query id: b7bf794b-086b-4986-b616-aef1d40963e3

┌──count()─┐

│ 66010819 │

└──────────┘

1 rows in set. Elapsed: 0.024 sec.

-- node 21.73

master :) select count() from opensky;

SELECT count()

FROM opensky

Query id: 5339e93c-b2ed-4085-9f58-da099a641f8f

┌──count()─┐

│ 66010819 │

└──────────┘

1 rows in set. Elapsed: 0.002 sec.

-- node 21.74

worker-1 :) select count() from opensky;

SELECT count()

FROM opensky

Query id: 60155715-064e-4c4a-9103-4fd6bf9b7667

┌─count()─┐

│ 0 │

└─────────┘

1 rows in set. Elapsed: 0.002 sec.

-- node 21.75

worker-2 :) select count() from opensky;

SELECT count()

FROM opensky

Query id: d04f42df-d1a4-4d90-ad47-f944b7a32a3d

┌─count()─┐

│ 0 │

└─────────┘

1 rows in set. Elapsed: 0.002 sec.

From the above information, we can know, the original data 6600 ten thousand in all 21.73 on this node, the other two nodes 21.74 and 21.75 no data.

You can see the following information from the ckman

Then click Data Balance. After waiting for a period of time, you will see the interface prompting that the data balance is successful. Check the data of each node again:

-- 总数据

master :) select count() from dist_opensky;

SELECT count()

FROM dist_opensky

Query id: bc4d27a9-12bf-4993-b37c-9f332ed958c9

┌──count()─┐

│ 66010819 │

└──────────┘

1 rows in set. Elapsed: 0.006 sec.

-- node 21.73

master :) select count() from opensky;

SELECT count()

FROM opensky

Query id: a4da9246-190c-4663-8091-d09b2a9a2ea3

┌──count()─┐

│ 24304792 │

└──────────┘

1 rows in set. Elapsed: 0.002 sec.

-- node 21.74

worker-1 :) select count() from opensky;

SELECT count()

FROM opensky

Query id: 5f6a8c89-c21a-4ae1-b69f-2755246ca5d7

┌──count()─┐

│ 20529143 │

└──────────┘

1 rows in set. Elapsed: 0.002 sec.

-- node 21.75

worker-2 :) select count() from opensky;

SELECT count()

FROM opensky

Query id: 569d7c63-5279-48ad-a296-013dc1df6756

┌──count()─┐

│ 21176884 │

└──────────┘

1 rows in set. Elapsed: 0.002 sec.By the above operation, a simple demonstration of the data balance ckman implementation of the original data 6600 ten thousand all at node1 , after the adoption of a balanced, which node1 data 2400 million, the node2 bit 2000 million, the node3 bit 2100 million, the realized Rough data balance.

Concluding remarks

Although we can ckman , which greatly improves the convenience of operation, data balance itself is a very complicated proposition, once it involves storage strategies (such as data storage on the remote HDFS ) , Then it will increase the complexity of data balancing. These are ckman cannot currently support (data balancing for remote data is meaningless, but its metadata can be balanced, so that the CPU performance of each node can be fully utilized when querying) . Therefore, if data balance wants to be scientific and accurate, more efforts are still needed.

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。