1 Status of OPPO Cloud Network

With the rapid growth of OPPO's business, the OPPO cloud has scaled up quickly. Large-scale virtual instances require elastic scaling and low latency, which poses many challenges for the network. The original VLAN-based private network cannot meet these requirements, which complicates network operation and maintenance and slows the launch of new services.

The main problems identified are as follows:

- The network lacks a centralized control system. Services are provisioned mainly through manual configuration, with almost no automated provisioning, which creates hidden risks for later maintenance and upgrades.

- The virtual network and the physical network are tightly coupled, with no separation between them. MAC addresses and routes from the virtual network are propagated to physical devices, which places high demands on the capacity of their Layer 2 and Layer 3 tables. Large-scale expansion of virtual instances may exhaust the routing table entries on hardware devices, rendering services unavailable.

- The virtual network lacks a security policy. The existing security policies cannot adequately control access and communication between virtual networks, and the network access control mechanism is incomplete.

- The virtual network card cannot deliver enough bandwidth for high-performance services. It supports only veth/tap devices, whose bandwidth is too low for network-intensive workloads.

- The virtual network system does not separate the control plane from the forwarding plane, so a control plane failure or upgrade affects services.

2 Virtual network selection

After analyzing the root causes of the problems above, we decided to adopt SDN, the current mainstream network technology in the industry, to address the insufficient network isolation and the lack of operation and maintenance automation. We chose the OFFLOAD function of smart network cards to accelerate the network of virtual instances, and virtual private cloud (VPC) technology to manage the business networks of different users and improve the robustness and security of the system.

The SDN data plane is implemented with open source OVS, mainly because OVS is already widely used in data centers: its forwarding performance, stability, and security have been proven in practice, and its maintenance cost is relatively low.

We decided to develop the VPC controller, VPC network interface (CNI) plug-in, VPC gateway, and VPC load balancer (LB) in-house. Although the community already has similar solutions (Neutron/OpenDaylight), these projects are relatively bloated and focus on telecommunications scenarios. They have high maintenance costs, cannot be applied directly to Internet business scenarios in data centers, and would require a lot of integration work with the existing platform systems. In-house development also has many advantages: new functions can be developed quickly without relying on the community, customization is convenient, and the result is lightweight, high-performance, and stable.

3 VPC network solution

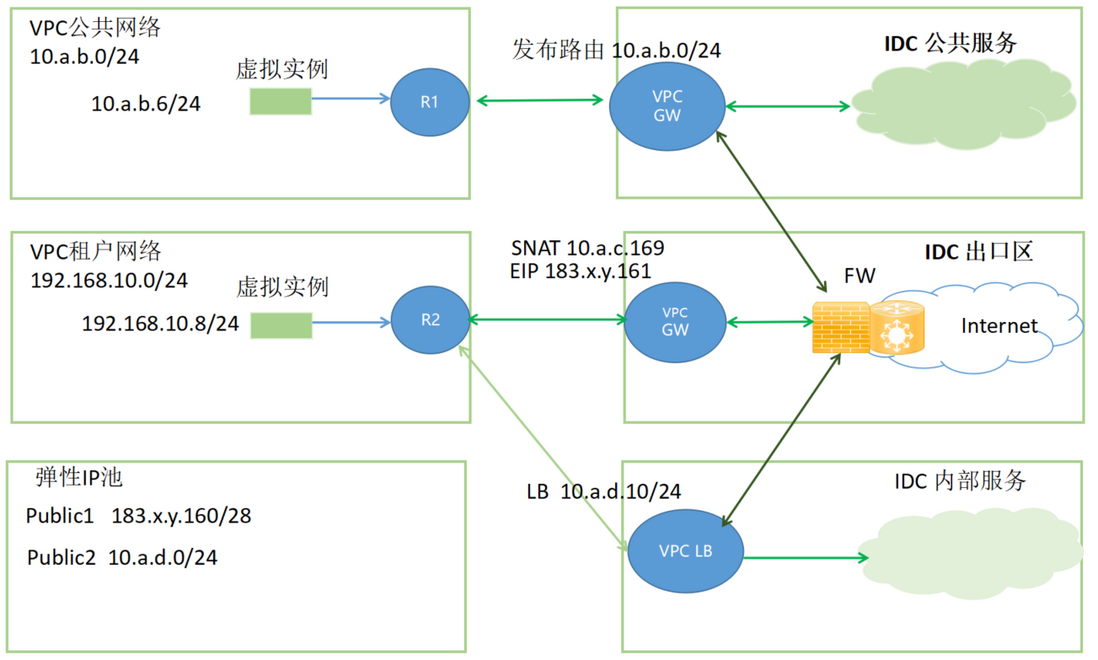

The overall architecture of the VPC network system is shown in the figure above.

The VPC console provides a unified business portal with convenient, visual operations. Key data is stored in a database for easy maintenance and use.

VPC SERVER is the center of the entire system, providing efficient and concise business interfaces to the cloud platform. It is the message forwarding hub of the entire system.

FlowEngine is the OVS controller that runs on each computing node. It uses rule-based reasoning to compute the flow table and instructs OVS how to forward business packets. Together, FlowEngine and VPC Server are referred to as the central hub of the network.
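
To make the idea of computing a flow table from rules more concrete, here is a minimal Python sketch. It is not OPPO's FlowEngine: the `Port` record, the single-table layout, and the priorities are assumptions made for illustration, and the output strings are only modeled on the textual flow syntax accepted by `ovs-ofctl add-flow`.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Port:
    """Hypothetical record for a virtual instance port known to the controller."""
    mac: str        # MAC address of the instance
    vni: int        # VXLAN ID of the instance's subnet
    ofport: int     # local OVS port number (unused for remote instances)
    remote_ip: str  # tunnel endpoint of the remote node, "" if the port is local


def compute_flows(ports: List[Port], tunnel_ofport: int) -> List[str]:
    """Derive one forwarding rule per known port, plus a default drop rule."""
    flows = []
    for p in ports:
        match = f"table=0,priority=100,dl_dst={p.mac}"
        if p.remote_ip:
            # Remote instance: tag the packet with the subnet's VXLAN ID
            # and send it through the tunnel port to the remote node.
            actions = (f"set_field:{p.vni}->tun_id,"
                       f"set_field:{p.remote_ip}->tun_dst,"
                       f"output:{tunnel_ofport}")
        else:
            # Local instance: deliver directly to its OVS port.
            actions = f"output:{p.ofport}"
        flows.append(f"{match},actions={actions}")
    # Anything that matches no rule is dropped (an assumed default policy).
    flows.append("table=0,priority=0,actions=drop")
    return flows
```

A real controller would push such rules to OVS over OpenFlow and recompute them whenever VPC Server reports a topology change; the sketch only shows the rule-to-flow mapping step.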

VPC CNI Agent manages and allocates the network card resources of computing nodes and provides service interfaces such as creation and deletion to the cloud platform. It uses smart network card virtualization (SR-IOV) to accelerate the network of virtual instances.

VPC gateways are divided into east-west distributed gateways and north-south centralized gateways. The east-west distributed gateways provide communication between different subnets and avoid the single point of failure of a centralized gateway. The north-south centralized gateway supports VXLAN tunnels, connects the virtual and physical networks, and supports horizontal scaling and DPDK acceleration.

VPC LB implements Layer 4 load balancing and provides a high-availability solution for externally facing services.

3.1 VPC network type

The current VPC network supports tenant networks, public networks, and elastic IP networks. Virtual instances in a tenant network can access each other but can reach the external network only through the VPC gateway. The IP address of a virtual instance is not published, so external hosts cannot access it; it can serve external traffic directly only when an elastic IP is bound. This kind of network is particularly suitable for switching from a development and test environment to a production environment, and it helps release services quickly.

The tenant network can also use the LB gateway to publish virtual IP (VIP) routes to provide services to the outside world.

The public network is similar to a traditional VLAN network. Its network segment is published by the VPC gateway to the uplink device, so it can communicate directly with the IDC network. It is convenient for deploying the monitoring services and public services of the basic virtual cloud platform.
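
The reachability rules of the network types can be summarized in a few lines of Python. This is only a sketch of the rules as described in this section; the `Instance` and `NetworkType` names are hypothetical, and access through an LB-published VIP is left out.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class NetworkType(Enum):
    TENANT = "tenant"
    PUBLIC = "public"


@dataclass
class Instance:
    name: str
    network: NetworkType
    elastic_ip: Optional[str] = None  # set when an elastic IP is bound


def directly_reachable(inst: Instance) -> bool:
    """Return True if hosts outside the VPC can reach the instance directly."""
    if inst.network is NetworkType.PUBLIC:
        # The public network segment is published to the uplink device.
        return True
    # Tenant network addresses are not published; only a bound
    # elastic IP makes the instance reachable from outside.
    return inst.elastic_ip is not None
```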

3.2 Tenant isolated tunnel VXLAN

VPC networks are built on VXLAN tunnels. Each subnet is assigned a unique VXLAN tunnel ID, which is used together with security groups to ensure complete isolation between the networks of different users and to remove the security risks of moving business to the cloud.
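
As an illustration of assigning one unique VXLAN ID per subnet, the following Python sketch hands out IDs from the 24-bit VNI space defined by the VXLAN specification. The `VniAllocator` class and its sequential policy are assumptions for this example, not a description of how VPC Server allocates IDs.

```python
class VniAllocator:
    """Hypothetical allocator that gives each subnet its own VXLAN ID."""

    MAX_VNI = (1 << 24) - 1  # the VNI field in the VXLAN header is 24 bits wide

    def __init__(self, first: int = 1):
        self._next = first
        self._by_subnet = {}  # subnet identifier -> allocated VNI

    def allocate(self, subnet_id: str) -> int:
        # Asking again for the same subnet returns the same VNI.
        if subnet_id in self._by_subnet:
            return self._by_subnet[subnet_id]
        if self._next > self.MAX_VNI:
            raise RuntimeError("VNI space exhausted")
        vni = self._next
        self._next += 1
        self._by_subnet[subnet_id] = vni
        return vni
```

A production allocator would also need persistence and release of IDs when subnets are deleted; both are omitted here.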

The data plane forwarding of OVS is controlled by the local controller, so a controller failure does not affect communication on other nodes or the forwarding of existing tunnel traffic.

With the VXLAN offloading function of the smart network card, the performance overhead of VXLAN does not affect service forwarding, and host CPU resources are saved.

3.3 VPC tunnel encapsulation description

3.3.1 VXLAN tunnel isolation

The VPC network supports virtual machines and containers on the same Layer 2 network; from a network perspective, they are all in the same VPC subnet. Virtual instances on different nodes reach each other through a VXLAN tunnel. For communication with a virtual instance in the same subnet, the VXLAN ID of the packet is the ID of the local subnet; for a virtual instance in a different subnet, it is the ID of the destination subnet.
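
The VXLAN ID rule can be sketched in Python as follows. Note that both cases amount to tagging the packet with the ID of the destination subnet. The function names and the `vni_of` mapping are hypothetical; the 8-byte header layout follows the VXLAN specification (RFC 7348), and the outer Ethernet/IP/UDP headers are omitted.

```python
import struct
from typing import Dict

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def vni_for_packet(src_subnet: str, dst_subnet: str, vni_of: Dict[str, int]) -> int:
    """Pick the VXLAN ID for a packet according to the rule described above."""
    if src_subnet == dst_subnet:
        return vni_of[src_subnet]  # same subnet: ID of the local subnet
    return vni_of[dst_subnet]      # different subnet: ID of the destination subnet


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags, 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < (1 << 24):
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
```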

A virtual instance needs a VPC gateway to access networks outside its VPC. The VPC gateway is implemented in software, accelerated by DPDK and high-speed network cards, and can run on an x86 server. Third-party VPC gateways are also supported. VPC gateways can exchange VXLAN routing information with VPC SERVER through an HTTP API or a BGP-EVPN interface.

3.3.2 VPC load balancing

VPC load balancing is implemented in full NAT mode, and packets are encapsulated in VXLAN for forwarding. VPC SERVER synchronizes the routing and tunnel information to the LB controller, which is responsible for updating the routing information on the corresponding network elements.
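
For readers unfamiliar with full NAT, the sketch below shows the address rewriting in both directions: the request has both its source and destination rewritten, and the reply is mapped back through the session table. It is a simplified model, not the VPC LB implementation; the `Packet` and `FullNat` names, the port allocation policy, and the example addresses are assumptions, and VXLAN encapsulation is left out.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class FullNat:
    """Hypothetical full NAT translator for a single virtual IP (VIP)."""

    def __init__(self, vip: str, local_ip: str, first_port: int = 1024):
        self.vip = vip
        self.local_ip = local_ip  # address the LB uses toward backends
        self._next_port = first_port
        self._by_client: Dict[Tuple[str, int, int], Tuple[int, Tuple[str, int]]] = {}
        self._by_local_port: Dict[int, Tuple[str, int, int]] = {}

    def inbound(self, pkt: Packet, backend: Tuple[str, int]) -> Packet:
        """Client -> VIP becomes LB local address -> backend."""
        key = (pkt.src_ip, pkt.src_port, pkt.dst_port)
        if key not in self._by_client:
            port = self._next_port
            self._next_port += 1
            self._by_client[key] = (port, backend)
            self._by_local_port[port] = key
        # An existing session keeps its original port and backend.
        port, chosen = self._by_client[key]
        return Packet(self.local_ip, port, chosen[0], chosen[1])

    def outbound(self, pkt: Packet) -> Packet:
        """Backend -> LB local address becomes VIP -> client."""
        client_ip, client_port, vip_port = self._by_local_port[pkt.dst_port]
        return Packet(self.vip, vip_port, client_ip, client_port)
```

Because the backend sees the LB's own address as the source, return traffic always passes through the LB, which is the main reason to choose full NAT over destination-only NAT.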

The message interaction scheme can be seen in the figure above. The LB network element maintains business sessions and supports scheduling algorithms such as round robin and least load first. It also supports consistent hashing, which allows services to be migrated seamlessly.
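
Consistent hashing is what keeps most flows on the same backend when a backend is added or removed. The following Python sketch shows the general technique with virtual nodes; it is not the LB network element's code, and the class name, the use of MD5, and the replica count are assumptions chosen for illustration.

```python
import bisect
import hashlib
from typing import Iterable


def _hash(key: str) -> int:
    # Take 64 bits of an MD5 digest as the position on the ring.
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class ConsistentHashRing:
    """Hypothetical consistent hash ring with virtual nodes."""

    def __init__(self, backends: Iterable[str], replicas: int = 100):
        ring = []
        for backend in backends:
            # Place several virtual nodes per backend to even out the load.
            for i in range(replicas):
                ring.append((_hash(f"{backend}#{i}"), backend))
        if not ring:
            raise ValueError("at least one backend is required")
        ring.sort()
        self._ring = ring
        self._keys = [h for h, _ in ring]

    def pick(self, flow_key: str) -> str:
        """Return the backend owning the first ring position after the flow's hash."""
        idx = bisect.bisect(self._keys, _hash(flow_key)) % len(self._ring)
        return self._ring[idx][1]
```

With this structure, removing one backend only remaps the flows that were assigned to it; flows on the remaining backends keep their assignment, which is the property that makes migration appear seamless to clients.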

The LB network element is implemented with DPDK and high-speed network cards. Its packet forwarding rate (PPS) can reach 8 million or more, the number of sessions can reach 100,000 or more, and the latency is less than 1 ms, which meets the high-concurrency requirements of Internet and IoT scenarios.

3.4 VPC performance indicators

After a VF network card is passed through to a VPC virtual instance, PktGen tests show a packet forwarding rate (PPS) of up to 24 million and bandwidth of up to 25 Gbps. Both bandwidth and PPS are more than twice those of a virtual tap device, while the CPU usage of network forwarding is reduced.

VPC tenant isolation is a good solution for fast mutual access among millions of virtual instances across multiple tenants. The improved single-instance and LB performance removes the bandwidth bottleneck for data computation across millions of nodes in the existing network and greatly improves the service carrying capacity of the system. VPC network technology empowers AI and big data computing platforms.

4 Benefits and outlook

Since going online, the VPC architecture has simplified the network architecture, improved network security, and increased the system's business carrying capacity and operation and maintenance efficiency. It has brought users a good experience and has been unanimously well received by customers.

The VPC is still being iterated. Upcoming functions include unified VPC management of containers, virtual machines, logic and other types of computing instances, a highly available data plane, a highly available OVS flow table computing engine, support for the latest 100G smart network cards, etc.

Author profile

Andy Wang, OPPO Senior Backend Engineer

Mainly responsible for VPC network architecture design and implementation. Has long been dedicated to the practice of new SDN network technology. Previously served as a senior network engineer and architect at ZTE, IBM, and Saites.

