1 Background

When developing real-time jobs with Flink, we typically use the DataStream API provided by the framework, which requires users to write their business logic in Java, Scala, or Python. Although this approach is flexible and expressive, it raises the barrier to entry for developers, and as new versions are released, the DataStream API has also introduced many incompatibilities with older versions. Flink SQL has therefore become the preferred choice for most developers. Flink introduced the SQL API mainly because SQL has the following important properties:

- Declarative API: users only care about what to do, not how to do it;

- Automatic optimization: the complexity of the underlying API is hidden and queries are optimized automatically;

- Easy to understand: SQL is used across many industries and fields, so the learning cost is low;

- Stable: the syntax follows the SQL standard and rarely changes;

- Unified stream and batch processing: the same SQL code can run in both streaming and batch mode.

Although Flink provides SQL capabilities, users still need to build their own platform on top of Flink SQL. There are currently several ways to build a SQL platform:

- Flink native API: use the SQL API provided by Flink to package a general-purpose pipeline jar, and submit SQL jobs with Flink's shell script tool;

- Apache Zeppelin: an open-source, notebook-based product for managing SQL jobs, which is integrated with Flink and provides a rich SDK;

- Flink SQL Gateway: the SQL gateway provided by Flink, which executes Flink SQL through a REST interface.

The first approach lacks flexibility and becomes a performance bottleneck when a large number of jobs are submitted. Zeppelin is powerful, but its web UI offers limited functionality: building a SQL platform on Zeppelin means either using its SDK or doing heavy secondary development on Zeppelin itself. Flink SQL Gateway is therefore better suited to building a platform, because it is an independent gateway service that is easy to integrate with a company's existing systems while remaining completely decoupled from them. This article focuses on our practice and exploration with Flink SQL Gateway.

2 Introduction to Flink SQL Gateway

2.1 Architecture

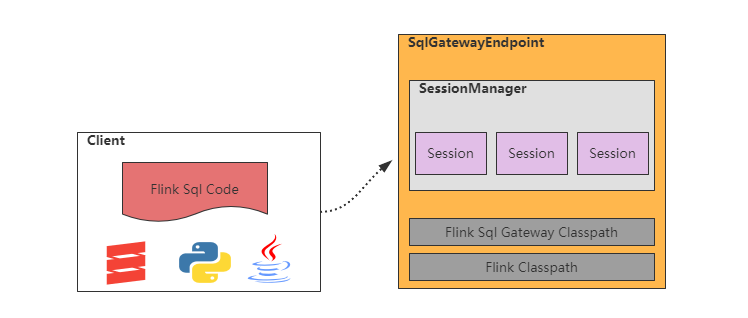

As shown in the figure above, the architecture of Flink SQL Gateway is relatively simple. Its main component is SqlGatewayEndpoint, a Netty service built on Flink's RestServerEndpoint that implements a variety of handlers for creating, deploying, and managing SQL jobs. SqlGatewayEndpoint is mainly composed of a SessionManager, which maintains a map of sessions; each session mainly holds context configuration and environment information.

- SqlGatewayEndpoint: a Netty service built on RestServerEndpoint that exposes a REST API;

- SessionManager: the session manager, responsible for creating and deleting sessions;

- Session: a session that stores the Flink configuration and context information a job needs, and is responsible for executing the job;

- Classpath: Flink SQL Gateway loads the classpath of the Flink installation directory at startup, so it has essentially no dependencies beyond Flink itself.
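The relationship between SessionManager and Session can be pictured as a thread-safe map from session ID to session state. The sketch below models that idea in Python; the class names mirror the components above, but the fields (`planner`, `execution_type`, `properties`) and method names are assumptions for illustration, not the gateway's actual Java API.

```python
import threading
import uuid
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical model of a gateway session: job configuration plus context."""
    session_id: str
    planner: str
    execution_type: str  # assumed values: "streaming" or "batch"
    properties: dict = field(default_factory=dict)


class SessionManager:
    """Keeps all sessions in one map keyed by session ID (a sketch, not Flink's class)."""

    def __init__(self):
        self._sessions = {}
        self._lock = threading.Lock()  # many REST handlers may call in concurrently

    def create_session(self, planner, execution_type, properties=None):
        session_id = uuid.uuid4().hex
        session = Session(session_id, planner, execution_type, dict(properties or {}))
        with self._lock:
            self._sessions[session_id] = session
        return session_id  # the caller keeps this ID for later requests

    def get_session(self, session_id):
        with self._lock:
            session = self._sessions.get(session_id)
        if session is None:
            raise KeyError(f"session {session_id} does not exist")
        return session

    def close_session(self, session_id):
        with self._lock:
            self._sessions.pop(session_id, None)
```

Because every handler holds a reference to the same manager instance, a session created by one request is visible to all later requests that carry its ID.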

2.2 Execution process

SQL Gateway is essentially an ordinary NIO server. Every handler holds a reference to the SessionManager, so all handlers share the same SessionManager object. When a request arrives, the handler extracts parameters such as the session ID from the request and looks up the corresponding Session in the SessionManager, then performs the requested operation, such as submitting SQL or querying job status. The request flow is shown in the figure below:

Creating a session is the first step in using SQL Gateway. The SessionManager wraps the parameters passed in by the user, such as the execution mode, configuration, and planner, into a Session object, puts it into the map, and returns the session ID to the user;

The user then sends SQL requests carrying the session ID; the gateway finds the corresponding Session object by that ID and deploys the SQL job to Yarn/Kubernetes.
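From the client's side, this two-step flow is just two HTTP calls. The sketch below builds (but does not send) both requests with the Python standard library. The address, the `/v1/sessions` and `/v1/sessions/{session_id}/statements` paths, and the JSON field names are assumptions modeled on the open-source flink-sql-gateway project; check them against the gateway version you actually deploy.

```python
import json
from urllib.request import Request

# Assumed gateway address; adjust to your deployment.
GATEWAY = "http://localhost:8083"


def _post(path, body):
    """Build a JSON POST request without sending it."""
    return Request(
        f"{GATEWAY}{path}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def create_session_request(planner="blink", execution_type="streaming", properties=None):
    # Step 1: ask the gateway to create a session (field names are assumptions).
    return _post("/v1/sessions", {
        "planner": planner,
        "execution_type": execution_type,
        "properties": properties or {},
    })


def parse_session_id(response_body):
    # Assumes the gateway answers with a JSON object containing "session_id".
    return json.loads(response_body)["session_id"]


def submit_statement_request(session_id, statement):
    # Step 2: submit SQL within the session identified by session_id.
    return _post(f"/v1/sessions/{session_id}/statements", {"statement": statement})
```

To actually send a request, pass it to `urllib.request.urlopen`; keeping request construction separate from I/O makes the payloads easy to inspect and test.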

2.3 Features

2.3.1 Task deployment

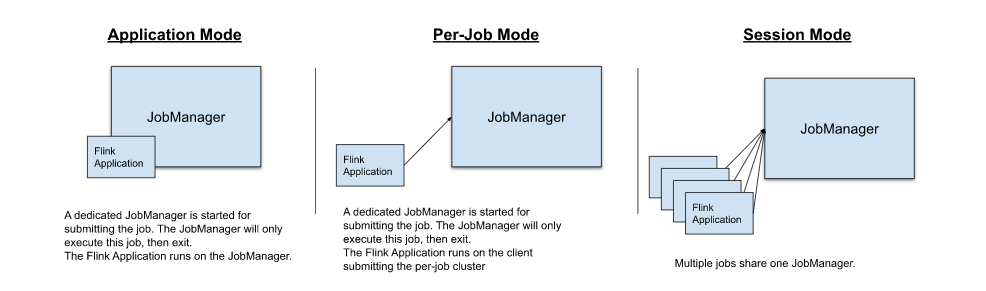

As a Flink client, Flink SQL Gateway uses Flink's own capabilities to deploy jobs. Flink currently supports three deployment modes:

- Application Mode;

- Per-Job Mode;

- Session Mode.

The three modes differ in two respects:

- Cluster lifecycle and resource isolation: in per-job mode, the cluster's lifecycle is tied to that of the job, which provides strong resource isolation, whereas in session mode multiple jobs share one long-running cluster;

- Whether the application's main() method runs on the client or on the cluster: session mode and per-job mode run it on the client, while application mode runs it on the cluster.

As the comparison shows, Application Mode creates a session cluster dedicated to each application and runs the application's main() method on the cluster, so it is a compromise between session mode and per-job mode.

So far, Flink only supports application mode for jar jobs, so running SQL jobs in application mode requires modifying the implementation yourself. The approach is discussed later.
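When the gateway deploys a job, the chosen mode ultimately becomes a value of Flink's `execution.target` option. The sketch below records the two differences above together with the target names Flink uses on Yarn and native Kubernetes; the `DeploymentMode` class and `execution_target` helper are illustrative only. Note that per-job mode is available only on Yarn, so there is no Kubernetes per-job target.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentMode:
    name: str
    main_runs_on: str    # where the application's main() executes
    cluster_scope: str   # what a single cluster is dedicated to


MODES = {
    "session": DeploymentMode("Session", "client", "shared by many jobs"),
    "per-job": DeploymentMode("Per-Job", "client", "one job"),
    "application": DeploymentMode("Application", "cluster", "one application"),
}

# Values of Flink's execution.target option per resource manager and mode.
TARGETS = {
    ("yarn", "session"): "yarn-session",
    ("yarn", "per-job"): "yarn-per-job",
    ("yarn", "application"): "yarn-application",
    ("kubernetes", "session"): "kubernetes-session",
    ("kubernetes", "application"): "kubernetes-application",
}


def execution_target(resource, mode):
    """Return the execution.target value, rejecting unsupported combinations."""
    try:
        return TARGETS[(resource, mode)]
    except KeyError:
        raise ValueError(f"{mode} mode is not supported on {resource}") from None
```

A session created for application mode on Yarn would then carry `execution.target: yarn-application` in its configuration.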

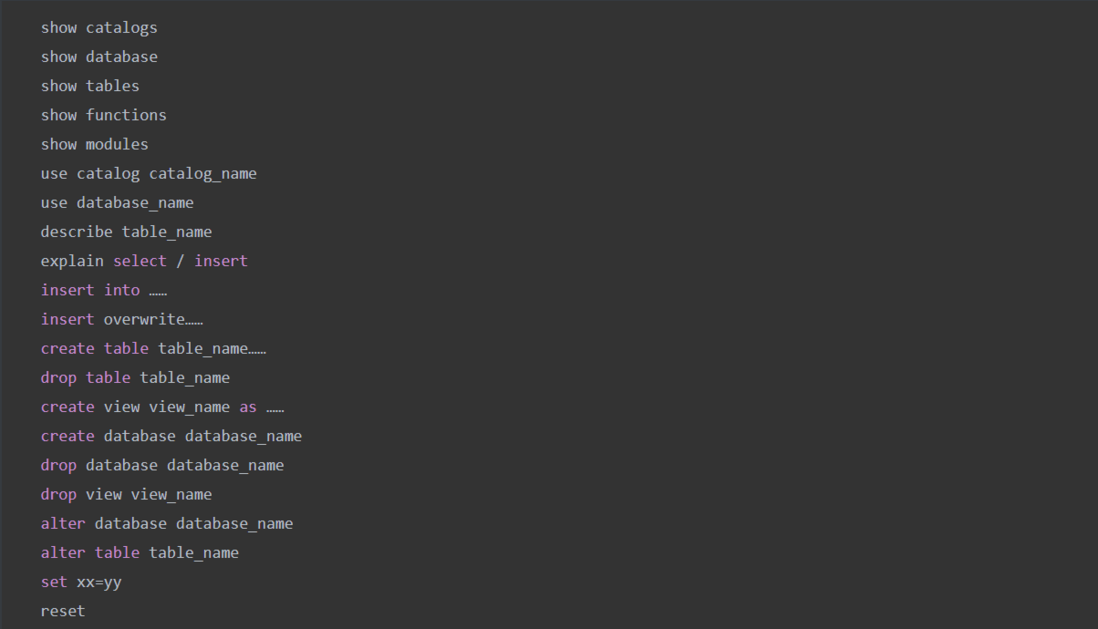

2.3.2 SQL capabilities

The SQL syntax supported by Flink SQL Gateway is as follows:

Flink SQL Gateway supports all Flink SQL syntax, but it has some limitations:

- Multiple SQL statements cannot be executed as one job: each INSERT INTO statement generates a separate job;

- Support for SET is incomplete, and there is a bug in how SET statements are handled;

- Support for SQL hints is limited, and hints are error-prone to write in SQL.
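To see why multiple INSERT INTO statements turn into multiple jobs, consider a platform that splits a script on semicolons and submits each statement on its own. The sketch below does exactly that; the splitter (which only understands single-quoted strings, not comments) and the `plan_jobs` helper are hypothetical, and DDL is treated as a catalog operation that starts no job. In Flink's Table API, combining several INSERTs into a single job is the purpose of a `StatementSet`.

```python
def split_statements(script):
    """Split a SQL script on ';', ignoring semicolons inside single-quoted strings."""
    statements, current, in_quote = [], [], False
    for ch in script:
        if ch == "'":
            in_quote = not in_quote  # '' escapes toggle twice, so they are handled too
        if ch == ";" and not in_quote:
            stmt = "".join(current).strip()
            if stmt:
                statements.append(stmt)
            current = []
        else:
            current.append(ch)
    tail = "".join(current).strip()
    if tail:
        statements.append(tail)
    return statements


def plan_jobs(script):
    """Each INSERT becomes its own job; SET updates the config seen by later jobs."""
    config, jobs = {}, []
    for stmt in split_statements(script):
        head = stmt.split(None, 1)[0].upper()
        if head == "SET":
            key, _, value = stmt[3:].partition("=")
            config[key.strip().strip("'")] = value.strip().strip("'")
        elif head == "INSERT":
            jobs.append({"statement": stmt, "config": dict(config)})
        # Other statements (e.g. CREATE TABLE) only touch the catalog in this sketch.
    return jobs
```

Submitting the statements one by one like this yields one job per INSERT, which is the behavior described in the first limitation.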

3 Platform transformation

3.1 Implementation of SQL application mode

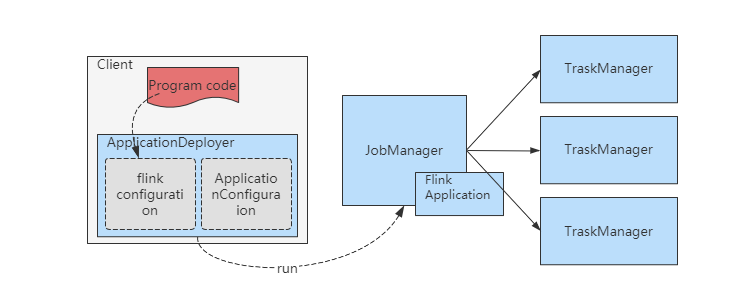

As mentioned earlier, Flink does not support deploying SQL jobs in application mode; it only supports jar jobs. Application-mode deployment of a jar job works as shown in the figure:

- flink-clients parses the user's configuration and jar information;

- ApplicationConfiguration specifies the entry class name and arguments of the main method;

- ApplicationDeployer starts the JobManager, which runs the main method of the Flink application on startup.

The process above shows that the key to supporting application mode for SQL is a general-purpose pipeline jar that executes SQL:

Implement a general-purpose pipeline jar that executes SQL, and upload it to Yarn or Kubernetes in advance, as shown below:

Specify the entry class and arguments of the pipeline jar's main method in ApplicationConfiguration:
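One way to pass the SQL script to the pipeline jar is to encode it into the program arguments, so that quotes, newlines, and semicolons in the SQL are not mangled when the arguments are serialized into the configuration. The sketch below shows both sides of that handoff in Python. The jar path, the main class, and the `--sql` argument are hypothetical; the two `$internal.application.*` keys are, to our knowledge, the options that Flink's ApplicationConfiguration writes, but verify them against your Flink version.

```python
import base64

PIPELINE_JAR = "hdfs:///flink/pipeline/sql-pipeline.jar"   # hypothetical path
PIPELINE_MAIN_CLASS = "com.example.SqlPipelineMain"         # hypothetical class


def build_application_config(sql_script, target="yarn-application"):
    """Client side: the configuration a deployer would receive (sketch)."""
    encoded = base64.b64encode(sql_script.encode("utf-8")).decode("ascii")
    return {
        "execution.target": target,
        "pipeline.jars": [PIPELINE_JAR],
        # Entry class and arguments of the pipeline jar's main method.
        "$internal.application.main": PIPELINE_MAIN_CLASS,
        "$internal.application.program-args": ["--sql", encoded],
    }


def decode_sql_arg(args):
    """Pipeline side: what the pipeline jar's main() would do with its args."""
    index = args.index("--sql")
    return base64.b64decode(args[index + 1]).decode("utf-8")
```

Base64 output contains only letters, digits, `+`, `/`, and `=`, so the encoded script survives any delimiter-based serialization intact.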

3.2 Multiple Yarn cluster support

Currently, Flink only supports deploying jobs to a single Yarn environment. With multiple Yarn environments, multiple Flink installations must be deployed, each configured for one Yarn environment; this works, but it is not an optimal solution. Readers familiar with Flink will know that Flink uses the ClusterClientFactory SPI to create the medium (ClusterDescriptor) through which it accesses an external resource system (Yarn/Kubernetes), and all interaction with the resource system goes through the ClusterDescriptor. For example, YarnClusterDescriptor holds a YarnClient object that it uses to interact with Yarn. To support multiple Yarn environments, we therefore only need to ensure that the YarnClient objects held by YarnClusterDescriptor correspond one-to-one with the Yarn environments. The code is shown in the following figure:
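The one-to-one requirement can be met with a registry that lazily creates and caches exactly one client per Yarn environment. The Python sketch below illustrates the idea; `YarnEnvironment`, `FakeYarnClient`, and `YarnClientRegistry` are hypothetical stand-ins, and in the real Java implementation the factory would build a YarnClient from that cluster's Hadoop configuration before it is handed to a YarnClusterDescriptor.

```python
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class YarnEnvironment:
    """One Yarn cluster and the Hadoop config dir describing it (hypothetical)."""
    name: str
    hadoop_conf_dir: str


class FakeYarnClient:
    """Stand-in for a real YarnClient bound to one cluster's configuration."""

    def __init__(self, env):
        self.env = env


class YarnClientRegistry:
    """Keeps exactly one client per Yarn environment, created on first use."""

    def __init__(self, environments, client_factory=FakeYarnClient):
        self._envs = {env.name: env for env in environments}
        self._clients = {}
        self._factory = client_factory
        self._lock = threading.Lock()  # avoid creating two clients for one cluster

    def client_for(self, cluster_name):
        if cluster_name not in self._envs:
            raise KeyError(f"unknown Yarn cluster: {cluster_name}")
        with self._lock:
            client = self._clients.get(cluster_name)
            if client is None:
                client = self._factory(self._envs[cluster_name])
                self._clients[cluster_name] = client
        return client
```

Each job's descriptor is then built around the client returned for that job's target cluster, so one Flink installation can serve several Yarn environments.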

Author profile

Zheng, Senior Data Platform Engineer at OPPO

He is mainly responsible for developing a Flink-based real-time computing platform, has extensive R&D experience with Flink, and has contributed to the Flink community.

