1 Introduction

What stages has OPPO's offline big data computing gone through? What classic big data problems have we run into in production, how did we solve them, and what architectural upgrades grew out of those solutions? Where is OPPO's offline platform heading? Today I will answer these questions one by one.

2 The development history of OPPO big data offline computing

2.1 The development stage of the big data industry

A company's technical development is inseparable from the background of its industry, so let's briefly review how the big data industry has developed. Using the Google search popularity of "big data", we can roughly trace its progress over the past ten years.

From this popularity curve, the development of big data can be roughly divided into three stages:

Growth period (2009-2015): characterized mainly by the rapid growth of Hadoop 1.0 and its ecosystem;

Peak period (2015-2018): Hadoop 2.0 and Spark quickly became the de facto industry foundation for big data infrastructure and computing engines;

Mature period (2018-present): characterized by the flourishing of computing engines such as Spark and Flink, as well as OLAP engines.

The curve also raises a small question: the popularity of big data has dropped quickly over the past two years, so which technology has become the most popular in recent years?

2.2 OPPO Big Data Development Stage

OPPO started on big data a little later than the industry as a whole. Here is the timeline:

In 2013, in the run-up to the industry's peak period, OPPO began building its big data clusters and team, using Hadoop 0.20 (Hadoop 1.0).

In 2015, we adopted CDH, and the cluster began to take shape.

In 2018, our self-built cluster reached medium scale, with Hive as the computing engine.

In 2020, we began migrating SQL jobs from Hive to the Spark engine at large scale.

In 2021, we upgraded and transformed both the resource layer and the computing layer.

OPPO's big data development can be summarized into two stages:

Development period: from 2013 to 2018, OPPO's big data platform grew from nothing, and the number of compute nodes expanded from zero to medium scale;

Boom period: from 2018 to the present, three years of rapid development. Technically, we upgraded from Hadoop 1.0 to Hadoop 2.0 and moved the computing engine from Hive to Spark, and we used self-developed technology and architecture upgrades to solve the common problems that appear as clusters expand.

3 Common Problems in Big Data Computing

There are many classic problems in the big data field. Here we pick five typical problems from our production environment and use them to walk through the evolution of OPPO's offline big data computing architecture.

3.1 Shuffle problem

Shuffle is a key part of big data computing and has a major impact on task performance and stability. The following factors slow shuffle down and degrade its stability:

Spill & merge: in-memory data is spilled to disk whenever it reaches a certain size, and the spilled files are finally sorted and merged, which causes multiple rounds of disk I/O.

Random disk reads: each reducer reads only part of each map's output, which results in random reads on the map-side disks.

Too many RPC connections: with M maps and N reducers, the shuffle establishes M x N RPC connections (since multiple maps may run on the same machine, M x N is the upper bound).
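To make the M x N bound concrete, here is a small model of a pull-based shuffle. It is a simplified sketch, assuming each reducer opens one connection per map task in the worst case, or one per distinct map-side host if connections to the same host are shared; `map_hosts` and the host names are hypothetical inputs, and real engines add connection pooling and retries that this ignores.

```python
def pull_shuffle_connections(map_hosts: list[str], num_reducers: int) -> tuple[int, int]:
    """Connection counts for a simplified pull-based shuffle.

    map_hosts[i] is the host that ran map task i (hypothetical input).
    Returns (upper_bound, shared_per_host):
      upper_bound: every reducer fetches from every map task, M x N
      shared_per_host: reducers reuse one connection per distinct host, H x N
    """
    m = len(map_hosts)
    distinct_hosts = len(set(map_hosts))
    upper_bound = m * num_reducers
    shared_per_host = distinct_hosts * num_reducers
    return upper_bound, shared_per_host


# 4 map tasks on 3 hosts, 100 reducers.
print(pull_shuffle_connections(["h1", "h1", "h2", "h3"], 100))  # (400, 300)
```

Either way, the count grows with the product of map-side parallelism and reducer count, which is what a service that gathers each partition in one place is meant to avoid.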

Shuffle problems do not only hurt task performance and stability. As big data tasks move to the cloud, hosting shuffle data has also become an obstacle: dynamically reclaiming cloud resources requires waiting until downstream tasks have read the upstream shuffle data before the resources can be released safely; otherwise the shuffle fails.

3.2 Small file problem

Small files are a problem that almost every big data platform has to face. They cause two main kinds of harm:

- Too many small files put heavy pressure on the HDFS NameNode.

- Too many small files hurt downstream task concurrency: each small file spawns its own map task to read it, so too many tasks are generated and the data is read in too many fragments.

What causes the small file problem?

- The task's data volume is small but its write concurrency is relatively high. A typical scenario is dynamic partitioning.

- Data skew: the total data volume may be large, but because the data is skewed, only a few files are large and the rest are small.
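Under dynamic partitioning the two harms compound each other. The back-of-the-envelope sketch below assumes each write task opens one file per partition value it receives, and that the downstream reader creates at least one split per file, as a plain Hadoop `FileInputFormat` does (combining input formats and Spark's own file sources can pack small files together, so the effect there is smaller). The numbers (200 tasks, 30 date partitions, about 1 MB per file, 128 MB splits) are illustrative, not OPPO measurements.

```python
import math


def output_file_count(task_partitions: list[list[str]]) -> int:
    """Files written when each write task opens one file per distinct
    dynamic-partition value among the rows it receives."""
    return sum(len(set(values)) for values in task_partitions)


def read_task_count(file_sizes_mb: list[float], split_size_mb: float = 128.0) -> int:
    """Map tasks needed downstream if every file yields at least one split."""
    return sum(max(1, math.ceil(size / split_size_mb)) for size in file_sizes_mb)


# 200 write tasks, each holding rows for all 30 date partitions.
tasks = [[f"2021-06-{d:02d}" for d in range(1, 31)] for _ in range(200)]
files = output_file_count(tasks)
print(files)                             # 6000
print(read_task_count([1.0] * files))    # 6000 map tasks, assuming ~1 MB per file
print(read_task_count([float(files)]))   # 47 splits if the same 6000 MB were one file
```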

3.3 Multi-cluster resource coordination problem

As the business grows, our clusters are expanding rapidly: individual clusters keep getting larger, and the number of clusters has grown as well. Coordinating resources across multiple clusters is a challenge we face.

First, consider the advantages and disadvantages of multiple clusters:

Advantages: resources and risks are isolated per cluster, and some businesses can have dedicated resources.

Disadvantages: resource isolation creates resource islands, the advantages of one large cluster are lost, and resource utilization becomes uneven.

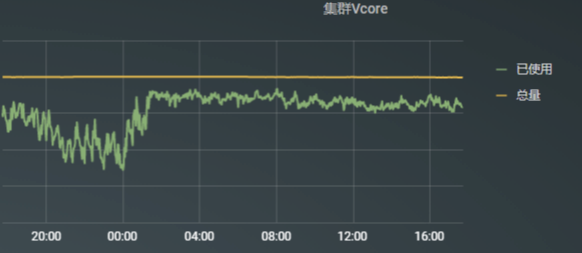

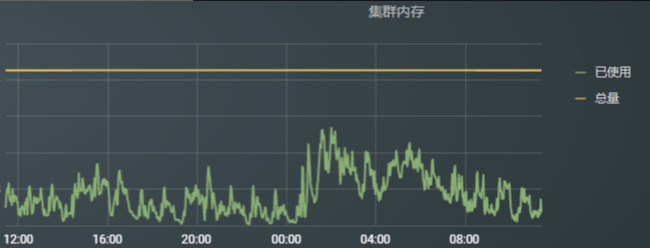

For example, compare the vcore usage of our online clusters:

Cluster 2's resource utilization is significantly lower than cluster 1's, which leads to uneven load across clusters, low overall utilization, and wasted resources.

3.4 Metadata expansion problem

For historical reasons, a single MySQL instance was chosen to store metadata when the cluster was first built. As business data grew rapidly, so did metadata, and this single-point metadata store became the biggest hidden risk to the stability and performance of the entire big data system. In the past year alone, our cluster suffered two major failures caused by metadata service issues. Against this background, scaling out metadata storage became both urgent and important.

The question is: which scaling approach should we choose?

We researched several options used in the industry, including:

- Use a distributed database such as TiDB;

- Re-plan how metadata is distributed and split it across different MySQL instances;

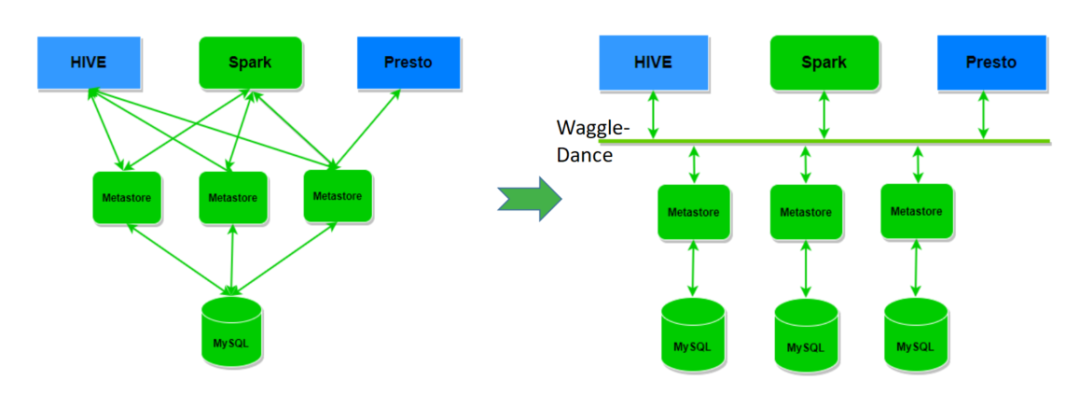

- Use Waggle Dance as a routing layer for metadata.

When choosing, we tried to minimize the impact on users, so that the change would be transparent to them and the scale-out smooth.

3.5 Unified computing entry

While migrating SQL tasks from Hive to the Spark engine, the first question we ran into was: can SparkSQL tasks be submitted through Beeline or JDBC as easily as HiveSQL? Natively, Spark submits tasks through its bundled spark-submit script, which is clearly unsuitable for large-scale production use.

We therefore set the goal of a unified computing entry point.

Not only SparkSQL submission, but also jar package tasks, should go through this unified entry.

These five problems are typical of those we have repeatedly encountered, investigated, and summarized in our production environment. There are of course many more typical problems in big data computing, but for reasons of space we discuss only these five here.

4 OPPO offline computing platform solution

In response to the five problems above, we now introduce OPPO's solutions, which together trace the evolution of our offline computing platform.

4.1 OPPO Remote Shuffle Service

To solve shuffle performance and stability issues and to pave the way for moving big data tasks to the cloud, we developed OPPO Remote Shuffle Service (ORS2) in-house.

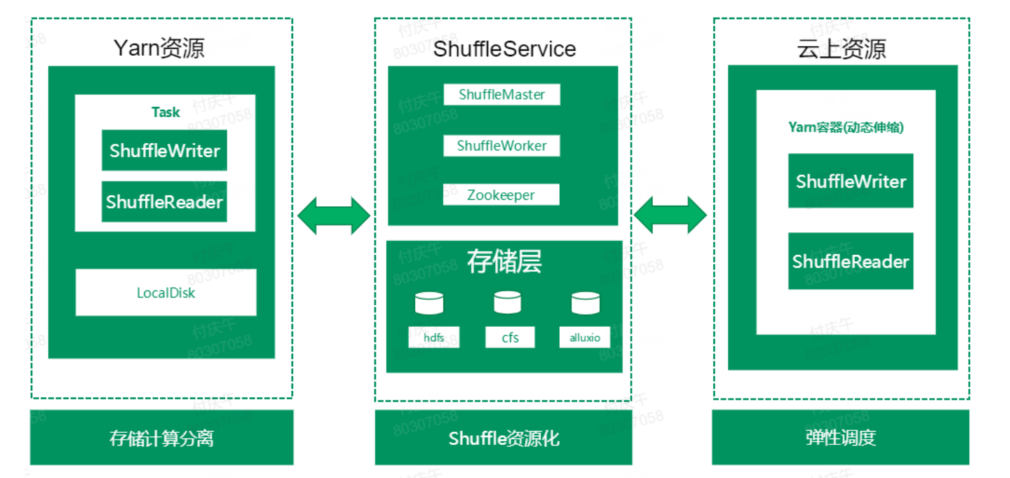

4.1.1 The overall architecture of ORS2 in the big data platform

With ORS2, the shuffle process of Spark tasks is decoupled from local disks, and ORS2 also hosts the shuffle data of big data computing tasks that run on cloud resources.

ShuffleService itself runs as a separate service role responsible for aggregating the shuffle data of computing tasks. It can be deployed on cloud resources and scaled out and in dynamically, turning shuffle capacity into a pooled resource. Architecturally, ShuffleService is divided into two layers. The upper Service layer has two roles: ShuffleMaster and ShuffleWorker.

ShuffleMaster manages, monitors, and assigns ShuffleWorkers. Each ShuffleWorker reports its own status to ShuffleMaster, which tracks worker health and provides management operations such as blacklisting a worker and lifting the blacklist. The allocation strategy is customizable, for example a Random, RoundRobin, or LoadBalance strategy.
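A toy version of the master's allocation step, assuming workers are first filtered by health and blacklist status and a pluggable strategy then picks `k` of them. The `Worker` fields, the load metric, and the class and strategy names are hypothetical illustrations of the three strategies named above, not ORS2's API.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class Worker:
    name: str
    load: float                # hypothetical load metric reported by the worker
    healthy: bool = True       # updated from worker heartbeats
    blacklisted: bool = False  # set by operators through the master


class ShuffleMasterSketch:
    """Toy ShuffleMaster: filters out unusable workers, then applies a strategy."""

    def __init__(self, workers: list[Worker], strategy: str = "roundrobin",
                 seed: int | None = None):
        self.workers = {w.name: w for w in workers}
        self.strategy = strategy
        self._next = 0                   # round-robin cursor
        self._rng = random.Random(seed)

    def blacklist(self, name: str) -> None:
        self.workers[name].blacklisted = True

    def unblacklist(self, name: str) -> None:
        self.workers[name].blacklisted = False

    def _available(self) -> list[Worker]:
        return [w for w in self.workers.values() if w.healthy and not w.blacklisted]

    def allocate(self, k: int) -> list[str]:
        pool = self._available()
        if len(pool) < k:
            raise RuntimeError("not enough available shuffle workers")
        if self.strategy == "random":
            chosen = self._rng.sample(pool, k)
        elif self.strategy == "roundrobin":
            chosen = [pool[(self._next + i) % len(pool)] for i in range(k)]
            self._next = (self._next + k) % len(pool)
        elif self.strategy == "loadbalance":
            chosen = sorted(pool, key=lambda w: (w.load, w.name))[:k]  # least loaded first
        else:
            raise ValueError(f"unknown strategy: {self.strategy}")
        return [w.name for w in chosen]


master = ShuffleMasterSketch([Worker("w1", 5.0), Worker("w2", 1.0), Worker("w3", 3.0)],
                             strategy="loadbalance")
print(master.allocate(2))  # ['w2', 'w3']
master.blacklist("w2")
print(master.allocate(2))  # ['w3', 'w1']
```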

ShuffleWorker collects data and writes all data belonging to the same partition into one file. Reading a partition then becomes a sequential read for the reducer, which avoids both random reads and M x N RPC connections.
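A toy model of this idea, assuming an in-memory `bytearray` stands in for the partition file on the distributed file system, and ignoring ordering guarantees, deduplication of retried map attempts, and flushing. The class and method names are hypothetical, not ORS2's API.

```python
from collections import defaultdict


class ShuffleWorkerSketch:
    """In-memory stand-in for a ShuffleWorker: one append-only 'file' per partition."""

    def __init__(self) -> None:
        self.partition_files: dict[int, bytearray] = defaultdict(bytearray)

    def receive(self, partition: int, data: bytes) -> None:
        # Blocks from every map task that target the same partition are appended
        # to the same file, so they end up stored contiguously.
        self.partition_files[partition].extend(data)

    def read_partition(self, partition: int) -> bytes:
        # A reducer fetches its partition with one sequential read instead of
        # one small random read per map output.
        return bytes(self.partition_files.get(partition, b""))


worker = ShuffleWorkerSketch()
for map_id in range(3):
    for partition in range(2):
        worker.receive(partition, f"m{map_id}p{partition};".encode())
print(worker.read_partition(0))  # b'm0p0;m1p0;m2p0;'
```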

At the storage layer, ShuffleService can flexibly use different distributed file systems, which take care of managing partition files and keeping them durable. HDFS, CFS, and Alluxio are currently supported, and different storage media can be chosen for different needs: memory shuffle for small jobs or jobs with high performance requirements, SSD for important jobs with high stability requirements, and SATA disks for low-priority jobs without strict performance requirements. The aim is the best balance between performance and cost.
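The tiering rule above can be expressed as a small selection function. This is a sketch under stated assumptions: the job attributes, the `Medium` names, and both size thresholds are hypothetical, since the article does not say how ORS2 decides or what limits it uses.

```python
from enum import Enum

MB = 1 << 20
GB = 1 << 30


class Medium(Enum):
    MEMORY = "memory"
    SSD = "ssd"
    SATA = "sata"


def choose_medium(shuffle_bytes: int, perf_critical: bool, high_priority: bool,
                  small_job_bytes: int = 256 * MB,
                  memory_budget_bytes: int = 4 * GB) -> Medium:
    """Pick a shuffle storage tier; both thresholds are hypothetical defaults."""
    if shuffle_bytes <= small_job_bytes:
        return Medium.MEMORY  # small jobs: cheap to keep in memory
    if perf_critical and shuffle_bytes <= memory_budget_bytes:
        return Medium.MEMORY  # fast path while the job still fits the memory budget
    if high_priority or perf_critical:
        return Medium.SSD     # important or latency-sensitive jobs
    return Medium.SATA        # low-priority batch jobs: cheapest storage


print(choose_medium(10 * GB, perf_critical=False, high_priority=True))  # Medium.SSD
```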

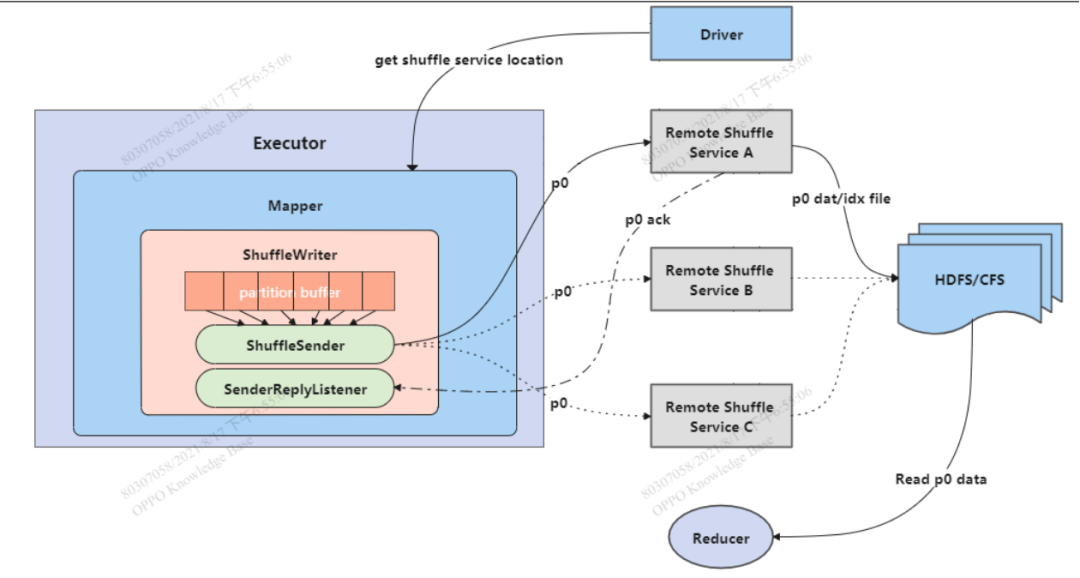

4.1.2 The core architecture of ORS2

The core architecture of ShuffleService consists of three parts:

ShuffleWriter:

Map tasks use ShuffleWriter to aggregate and send data, using multi-threaded asynchronous transmission. The writer uses off-heap memory managed uniformly by Spark's native memory management, which avoids extra memory overhead and reduces OOM risk. To make sending more reliable, the Writer can switch its destination ShuffleWorker mid-stream: if the ShuffleWorker it is sending to fails, the Writer immediately switches to another worker and continues sending.
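The destination switch can be sketched as a retry loop over an ordered list of candidate workers. This is a minimal sketch assuming a `send` callable that raises a hypothetical `WorkerDown` exception when the destination is unreachable; it only resends the in-flight block and does not cover how readers learn that a partition's data now spans two workers, which a real implementation must also track.

```python
from typing import Callable


class WorkerDown(Exception):
    """Raised by the transport when the destination ShuffleWorker is unreachable."""


def send_with_failover(blocks: list[bytes], workers: list[str],
                       send: Callable[[str, bytes], None]) -> list[tuple[str, bytes]]:
    """Send blocks in order, switching to the next candidate worker on failure.

    Returns (worker, block) pairs in the order they were acknowledged.
    """
    current = 0
    acked: list[tuple[str, bytes]] = []
    for block in blocks:
        while True:
            if current >= len(workers):
                raise RuntimeError("all candidate shuffle workers failed")
            try:
                send(workers[current], block)
                acked.append((workers[current], block))
                break
            except WorkerDown:
                current += 1  # switch destination and resend the same block
    return acked
```

With a transport where `w1` dies after two blocks, the remaining blocks land on `w2` without the map task failing.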

ShuffleWorker:

ShuffleWorker collects the data and writes it to the distributed file system. We made many design choices for ShuffleWorker's performance and stability, including flow control, a customized thread model, customized message parsing, and a checksum mechanism.
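As an illustration of the checksum mechanism only: the article does not describe ORS2's wire format, so the frame layout below (partition id, payload length, CRC32) is a hypothetical one, using Python's standard `struct` and `zlib.crc32`. The receiver rejects truncated or corrupted frames instead of writing them to storage.

```python
import struct
import zlib

# Hypothetical frame layout: partition id, payload length, CRC32 of the payload.
HEADER = struct.Struct(">iII")


def encode_frame(partition: int, payload: bytes) -> bytes:
    return HEADER.pack(partition, len(payload), zlib.crc32(payload)) + payload


def decode_frames(buf: bytes) -> list[tuple[int, bytes]]:
    """Parse back-to-back frames, rejecting truncated or corrupted ones."""
    frames = []
    offset = 0
    while offset < len(buf):
        if len(buf) - offset < HEADER.size:
            raise ValueError("truncated frame header")
        partition, length, crc = HEADER.unpack_from(buf, offset)
        offset += HEADER.size
        payload = bytes(buf[offset:offset + length])
        if len(payload) != length:
            raise ValueError("truncated frame payload")
        if zlib.crc32(payload) != crc:
            raise ValueError(f"checksum mismatch for partition {partition}")
        frames.append((partition, payload))
        offset += length
    return frames


stream = encode_frame(3, b"abc") + encode_frame(5, b"hello")
print(decode_frames(stream))  # [(3, b'abc'), (5, b'hello')]
```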

ShuffleReader:

ShuffleReader reads data directly from the distributed file system without going through ShuffleWorker. To match the read characteristics of different storage systems, we optimized pipelined reads on the Reader side.

After these optimizations, tests on large-scale online jobs showed that ShuffleService speeds them up by about 30%.
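Pipelined reading overlaps fetching the next chunk with processing the current one. A minimal sketch, assuming a `read_chunk(i)` callable stands in for one remote read and a bounded queue of `depth` chunks limits memory; the function name and parameters are hypothetical, and the article does not describe how ORS2 tunes the pipeline per storage system.

```python
import queue
import threading
from typing import Callable, Iterator

_END = object()  # sentinel marking the end of the stream


def pipelined_read(read_chunk: Callable[[int], bytes], num_chunks: int,
                   depth: int = 2) -> Iterator[bytes]:
    """Yield chunks in order while a background thread prefetches up to `depth` ahead.

    read_chunk(i) stands in for one (possibly slow) remote read of chunk i.
    """
    buffer: queue.Queue = queue.Queue(maxsize=depth)

    def prefetch() -> None:
        try:
            for i in range(num_chunks):
                buffer.put(read_chunk(i))  # blocks while `depth` chunks are waiting
        except Exception as exc:           # hand read errors over to the consumer
            buffer.put(exc)
            return
        buffer.put(_END)

    threading.Thread(target=prefetch, daemon=True).start()
    while True:
        item = buffer.get()
        if item is _END:
            return
        if isinstance(item, Exception):
            raise item
        yield item
```

A larger `depth` hides more latency on slow storage at the cost of more buffered memory.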

4.2 OPPO Small File Solution

We wanted to solve the small file problem transparently, without user intervention, so that it could be fixed on the engine side through configuration alone. After studying how Spark writes files, we developed a transparent small file solution in-house.

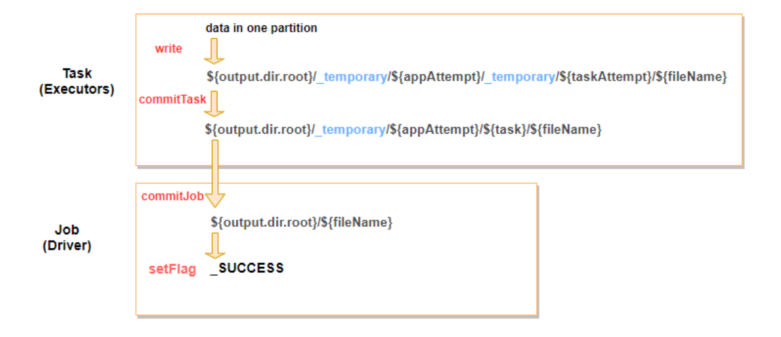

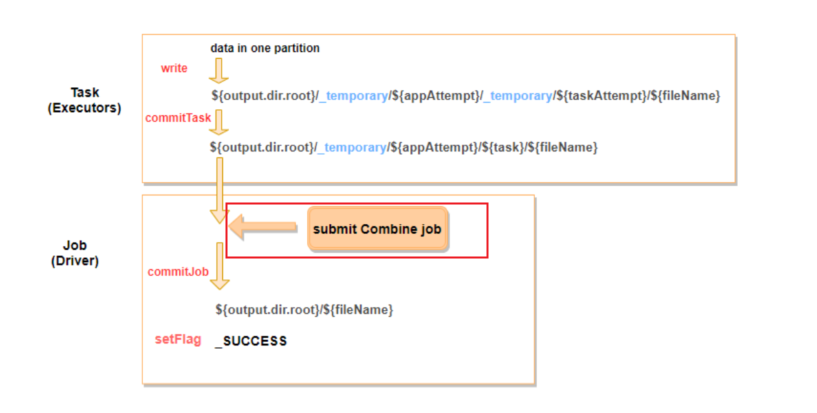

Spark currently has three commit methods for writing a task's output (V1, V2, and S3 commit); our small file solution is built on the V1 commit.

Spark's V1 commit has two phases: the task-side commit and the driver-side commit. The task-side commit moves the files the task generated into a task-level temporary directory; the driver-side commit moves the temporary directories of all committed tasks into the final directory and then creates the _SUCCESS file, which marks the job as successful.
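The two phases can be modeled on a local filesystem. This is a simplified sketch of the directory layout used by Hadoop's `FileOutputCommitter` (algorithm version 1): running attempts write under `_temporary/<appAttempt>/_temporary/<taskAttempt>/`, task commit renames that directory to `_temporary/<appAttempt>/<taskId>/`, and job commit moves the results up. It assumes flat, uniquely named output files (no partition subdirectories) and a local POSIX filesystem instead of HDFS; the helper names and the `demo` function are hypothetical.

```python
import os
import shutil
import tempfile


def job_attempt_dir(output_dir: str, app_attempt: int) -> str:
    return os.path.join(output_dir, "_temporary", str(app_attempt))


def task_attempt_dir(output_dir: str, app_attempt: int, task_attempt: str) -> str:
    # Where a running task attempt writes its files.
    return os.path.join(job_attempt_dir(output_dir, app_attempt), "_temporary", task_attempt)


def commit_task(output_dir: str, app_attempt: int, task_attempt: str, task_id: str) -> None:
    # Task-side commit: rename the attempt directory to the committed task directory.
    os.rename(task_attempt_dir(output_dir, app_attempt, task_attempt),
              os.path.join(job_attempt_dir(output_dir, app_attempt), task_id))


def commit_job(output_dir: str, app_attempt: int) -> None:
    # Driver-side commit: move every committed task's files to the final directory,
    # remove _temporary, then write the _SUCCESS marker.
    job_dir = job_attempt_dir(output_dir, app_attempt)
    for task_id in sorted(os.listdir(job_dir)):
        if task_id == "_temporary":
            continue  # leftover parent of the (already renamed) task attempt dirs
        task_dir = os.path.join(job_dir, task_id)
        for name in os.listdir(task_dir):
            os.rename(os.path.join(task_dir, name), os.path.join(output_dir, name))
    shutil.rmtree(os.path.join(output_dir, "_temporary"))
    open(os.path.join(output_dir, "_SUCCESS"), "w").close()


def demo() -> list[str]:
    """Two tasks write one part file each, then the job commits."""
    with tempfile.TemporaryDirectory() as out:
        for t in range(2):
            attempt = f"attempt_{t}_0"
            path = task_attempt_dir(out, 0, attempt)
            os.makedirs(path)
            with open(os.path.join(path, f"part-{t:05d}"), "w") as f:
                f.write("row\n")
            commit_task(out, 0, attempt, f"task_{t}")
        commit_job(out, 0)
        return sorted(os.listdir(out))


print(demo())  # ['_SUCCESS', 'part-00000', 'part-00001']
```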

We implemented our own CommitProtocol that adds a small file merge step at the start of the driver commit phase. It scans the small files under the ${output.dir.root}_temporary/${appAttempt}/ directory and generates a corresponding small file merge job. Once the small files are merged, it calls the original commit, which moves the merged files to the ${output.dir.root}/ directory.
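The article does not describe how merge groups are formed. One plausible way to plan the merge job is first-fit-decreasing bin packing over the files found in the job attempt directory, shown here as a sketch. The thresholds are hypothetical, and the actual merge must be format-aware (Parquet or ORC files cannot simply be concatenated byte by byte), which this planning step leaves out.

```python
def plan_merges(file_sizes: dict[str, int], small_threshold: int,
                target_size: int) -> list[list[str]]:
    """Group small files into merge groups of at most target_size.

    First-fit decreasing: the largest small files are placed first, each into
    the first group that still has room. Files >= small_threshold are left as-is,
    and single-file groups are dropped because there is nothing to merge.
    """
    small = sorted((name for name, size in file_sizes.items() if size < small_threshold),
                   key=lambda name: (-file_sizes[name], name))
    groups: list[list[str]] = []
    totals: list[int] = []
    for name in small:
        size = file_sizes[name]
        for i, total in enumerate(totals):
            if total + size <= target_size:
                groups[i].append(name)
                totals[i] += size
                break
        else:
            groups.append([name])
            totals.append(size)
    return [group for group in groups if len(group) > 1]


sizes = {"part-0": 60, "part-1": 50, "part-2": 40, "part-3": 30, "part-4": 200}  # MB
print(plan_merges(sizes, small_threshold=100, target_size=128))
# [['part-0', 'part-1'], ['part-2', 'part-3']]
```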

This approach avoids explicitly submitting an extra job to merge the result data. It also cuts the number of files the driver commit has to move by orders of magnitude, reducing the time spent moving files. The small file solution is now fully rolled out in both our domestic and overseas environments.

4.3 OPPO Yarn Router-Multi-cluster resource coordination

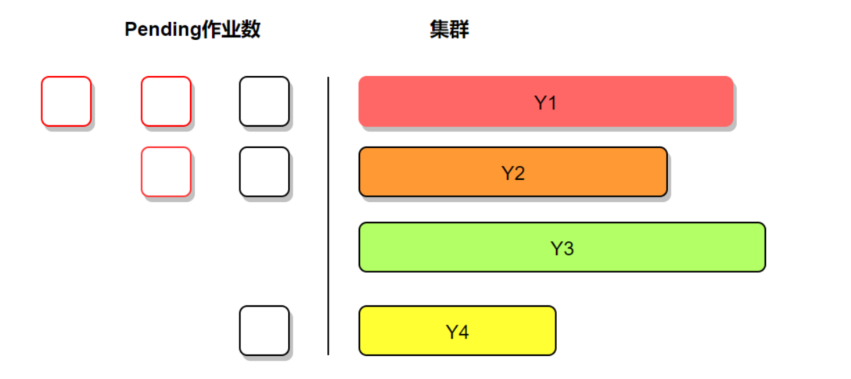

As mentioned earlier, the main drawback of multiple clusters is resource islands: cluster load is unbalanced and overall resource utilization is low. This can be abstracted into a simple diagram:

In the diagram, the left side shows pending jobs and the right side shows each cluster's resources; length represents the amount of resources and color represents load, with darker meaning higher load. Clearly, load across the clusters is unbalanced, and pending jobs are proportional to each cluster's resource usage. For example, the Y1 cluster has a very high resource load and also many pending jobs, while the Y3 cluster is largely idle yet has no pending jobs at all.

How can we solve this problem?

We adopted the community's YARN Router feature: jobs submitted by users go to the Router, which distributes them to the individual YARN clusters, achieving federated scheduling.

The community Router policy is relatively simple: it can only distribute jobs across clusters by fixed proportions. This implements basic job routing but has no awareness of cluster resource usage or job status, so its decisions can still leave cluster load unbalanced.

To solve load balancing thoroughly, we developed our own intelligent routing policy.

Each ResourceManager reports its cluster's resources and job status to the Router in real time, along with a forecast of how many resources will be released. The Router builds a global view from these reports and uses it to make more reasonable routing decisions.

Overall, because the Router has a global view, we can fully exploit the advantages of multiple clusters while avoiding their drawbacks. In the future, we plan to give the Router more capabilities, not only resolving pending jobs but also improving resource utilization. We will also work on job execution efficiency so that jobs are better matched to computing and storage resources, making computing more valuable.
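The difference between proportional and load-aware routing can be sketched as follows. The `ClusterReport` fields mirror the kinds of information described above, but the field names, the headroom formula, and the numbers (reusing the Y1/Y2/Y3 names from the diagram) are hypothetical; this is not OPPO's actual policy. A production router would also update its view after each decision so that a burst of jobs does not all land on the same cluster, which this sketch skips.

```python
import random
from dataclasses import dataclass


@dataclass
class ClusterReport:
    """What each ResourceManager reports to the Router (fields are hypothetical)."""
    name: str
    available_vcores: int
    pending_vcores: int            # demand of jobs already queued in this cluster
    predicted_release_vcores: int  # vcores the RM expects to free shortly


def route_proportional(weights: dict[str, float], rng: random.Random) -> str:
    # Static weighted split: ignores how loaded each cluster currently is.
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def effective_headroom(r: ClusterReport) -> int:
    # Free now, plus what should free up soon, minus what is already waiting.
    return r.available_vcores + r.predicted_release_vcores - r.pending_vcores


def route_load_aware(reports: list[ClusterReport]) -> str:
    # Global view: send the job to the cluster with the most effective headroom.
    return max(reports, key=lambda r: (effective_headroom(r), r.name)).name


view = [
    ClusterReport("Y1", available_vcores=100, pending_vcores=500, predicted_release_vcores=50),
    ClusterReport("Y2", available_vcores=300, pending_vcores=100, predicted_release_vcores=0),
    ClusterReport("Y3", available_vcores=800, pending_vcores=0, predicted_release_vcores=0),
]
print(route_load_aware(view))  # Y3
```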

4.4 Metadata Extension Tool-Waggle Dance

Waggle Dance is an open source project (released under the Apache License 2.0) that provides a routing proxy for Hive Metastore. It is fully compatible with Hive Metastore's native interface, so it plugs seamlessly into existing systems and the upgrade is transparent to users. This is the main reason we chose it.

Waggle Dance works by routing existing Hive databases to different groups of Metastores based on database name. Each Metastore group has its own independent MySQL instance, so metadata is physically isolated.
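Waggle Dance configures this mapping declaratively rather than in code; the Python below only illustrates the lookup it performs, assuming an explicit database-to-group mapping with a fallback primary group. The class names, group names, and thrift URIs are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MetastoreGroup:
    name: str
    uris: list[str]                                   # hypothetical thrift URIs
    databases: set[str] = field(default_factory=set)  # databases this group owns


class DatabaseRouter:
    """Route a request to the Metastore group that owns its database."""

    def __init__(self, primary: MetastoreGroup, federated: list[MetastoreGroup]):
        self.primary = primary
        self._owner: dict[str, MetastoreGroup] = {}
        for group in federated:
            for db in group.databases:
                key = db.lower()  # Hive database names are case-insensitive
                if key in self._owner:
                    raise ValueError(f"database {db!r} mapped to two groups")
                self._owner[key] = group

    def route(self, database: str) -> MetastoreGroup:
        # Unmapped databases fall back to the primary group in this sketch.
        return self._owner.get(database.lower(), self.primary)


primary = MetastoreGroup("primary", ["thrift://ms-primary:9083"])
ads = MetastoreGroup("ads", ["thrift://ms-ads:9083"], {"ads_dw", "ads_ods"})
router = DatabaseRouter(primary, [ads])
print(router.route("ADS_DW").name)   # ads
print(router.route("finance").name)  # primary
```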

In the schematic diagram, the left side shows the original Hive Metastore architecture, which has an obvious single point of failure and a less clean data exchange path. After the upgrade to Waggle Dance, the overall structure is clearer: Waggle Dance acts as the "bus" for metadata exchange, routing requests from the upper-layer computing engines to the corresponding Metastore by database name.

When we actually split the metadata online, total Metastore downtime was under 10 minutes. We customized and optimized Waggle Dance, adding a data caching layer to improve routing efficiency, and integrated it with our internal management system to provide API-based metadata management services.
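The article does not say what the caching layer holds or how it is invalidated. As an illustration, assuming a bounded in-process cache with a time-to-live in front of a slower lookup (for example routing decisions or table metadata fetched from a Metastore), a TTL plus LRU cache could look like the sketch below; serving data that may be up to `ttl_seconds` stale is the trade-off. The class and parameter names are hypothetical.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable


class TTLCache:
    """Bounded cache with per-entry expiry that evicts least recently used entries."""

    def __init__(self, loader: Callable[[Hashable], Any], ttl_seconds: float,
                 max_entries: int, clock: Callable[[], float] = time.monotonic):
        self.loader = loader
        self.ttl = ttl_seconds
        self.max_entries = max_entries
        self.clock = clock
        self._data: OrderedDict = OrderedDict()  # key -> (value, expires_at)
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        now = self.clock()
        entry = self._data.get(key)
        if entry is not None and entry[1] > now:
            self._data.move_to_end(key)     # mark as most recently used
            self.hits += 1
            return entry[0]
        self.misses += 1
        value = self.loader(key)            # e.g. a call to the backing Metastore
        self._data[key] = (value, now + self.ttl)
        self._data.move_to_end(key)
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict least recently used
        return value
```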

4.5 Computing Unified Entry-Olivia

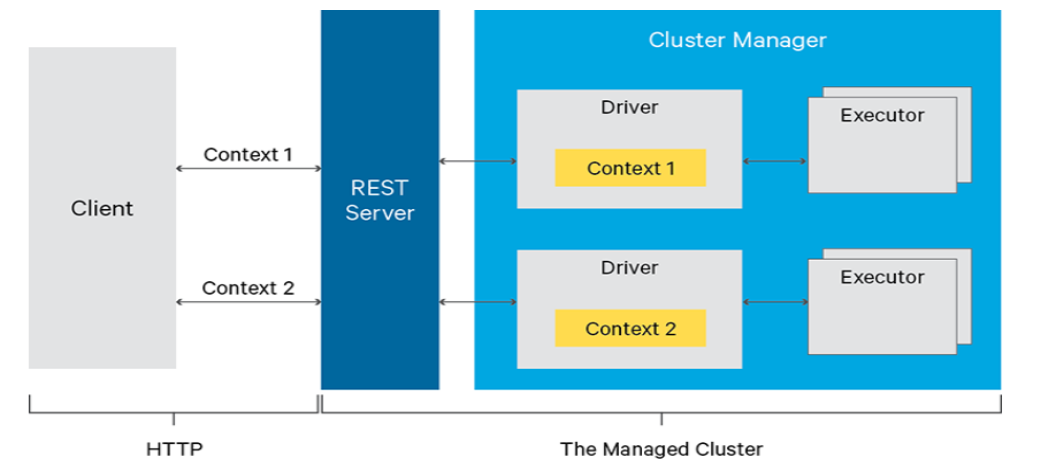

To solve the problem of a submission entry for Spark tasks, we again turned to the open source community and found that Livy handles SparkSQL task submission very well.

Livy is a REST service for submitting Spark tasks, and jobs can reach it in several ways. For example, we often submit SQL tasks through Beeline, and other options include submission through a web interface.

Once a task reaches Livy, Livy submits it to the YARN cluster; the Livy client creates the Spark Context and launches the Driver. Livy can manage multiple Spark Contexts at the same time and supports two submission modes, batch and interactive; functionally it is broadly similar to HiveServer.

Can Livy meet our needs? Let's first look at Livy's own problems.

We identified three main flaws in Livy:

Lack of high availability: if the Livy Server process restarts or the service goes down, the Spark Context sessions it manages get out of control, causing tasks to fail.

Lack of load balancing: Livy assigns tasks randomly, picking a Livy Server in the ZooKeeper namespace at random, which can leave a group of Livy Servers unbalanced.

Insufficient support for spark-submit jobs: support for jar package tasks submitted through spark-submit is currently incomplete.

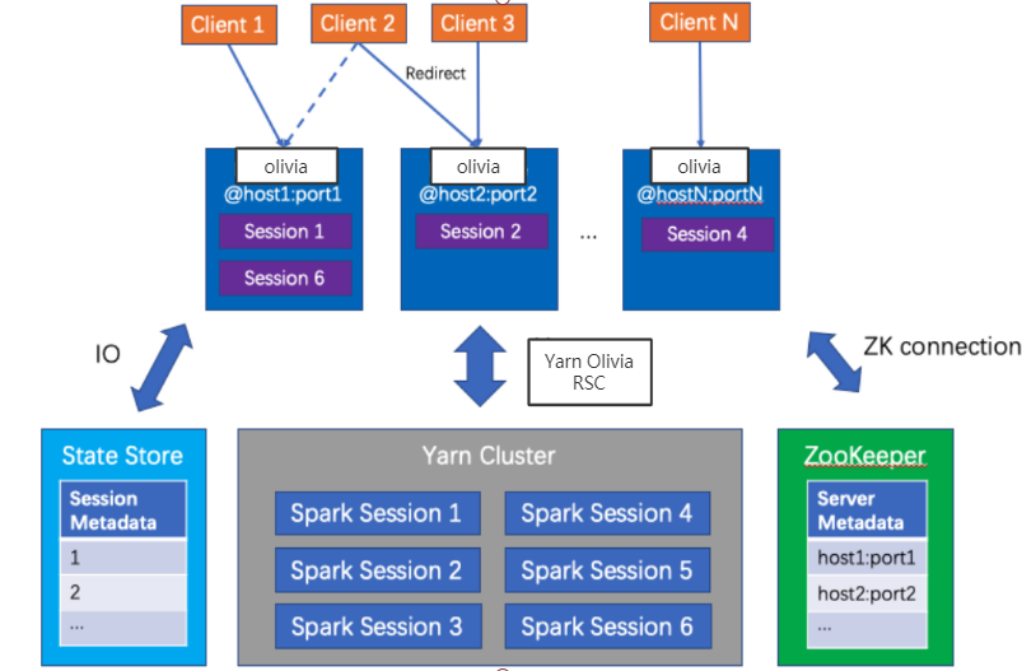

To address these problems, we developed Olivia on top of Livy: a highly available, load-balanced, unified computing entry point that supports both spark-submit jar package tasks and Python scripts.

Olivia accepts job submissions through a domain name, so users don't need to know which server handles submission and management. In the background, consistent hashing provides load balancing, and when servers go online or offline the load is rebalanced automatically. For failover, we store Spark session information in ZooKeeper: if a server fails, the sessions it manages are automatically transferred to another server. To support spark-submit tasks, we added an Olivia client role that automatically uploads jar packages and Python scripts to the cluster so that Olivia Server can submit the jobs.
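A minimal consistent-hashing sketch in the spirit of this description, assuming session IDs are the hashed keys and using MD5 with 100 virtual nodes per server (both arbitrary choices; the article does not say which hash or key Olivia uses). It does not model the ZooKeeper side of failover, only which server takes over a session; server and session names are hypothetical.

```python
import bisect
import hashlib
from collections.abc import Iterable


class ConsistentHashRing:
    """Hash ring with virtual nodes; a key maps to the next point clockwise."""

    def __init__(self, nodes: Iterable[str] = (), vnodes: int = 100):
        self.vnodes = vnodes
        self._points: list[int] = []      # sorted hash positions
        self._owner: dict[int, str] = {}  # position -> server
        for node in nodes:
            self.add(node)

    @staticmethod
    def _hash(value: str) -> int:
        return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")

    def add(self, node: str) -> None:
        for i in range(self.vnodes):
            point = self._hash(f"{node}#{i}")
            if point not in self._owner:  # ignore the (very unlikely) collision
                self._owner[point] = node
                bisect.insort(self._points, point)

    def remove(self, node: str) -> None:
        for i in range(self.vnodes):
            point = self._hash(f"{node}#{i}")
            if self._owner.get(point) == node:
                del self._owner[point]
                del self._points[bisect.bisect_left(self._points, point)]

    def lookup(self, key: str) -> str:
        if not self._points:
            raise LookupError("no servers on the ring")
        idx = bisect.bisect_right(self._points, self._hash(key)) % len(self._points)
        return self._owner[self._points[idx]]


ring = ConsistentHashRing(["olivia-1", "olivia-2", "olivia-3"])
sessions = [f"session-{i}" for i in range(1000)]
before = {s: ring.lookup(s) for s in sessions}
ring.remove("olivia-2")  # server goes offline
after = {s: ring.lookup(s) for s in sessions}
moved = [s for s in sessions if before[s] != after[s]]
print(all(before[s] == "olivia-2" for s in moved))  # True
```

The property the example checks is the reason for choosing consistent hashing over random assignment: when a server leaves, only the sessions it owned move, and the rest keep their server.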

4.6 Overview

In the previous sections, we introduced our solutions to the five problems. Connected together, they form today's topic: the evolution of the offline big data computing platform.

To close this chapter, let's look at a summary view of the current offline computing architecture.

From top to bottom, it can be abstracted into six layers:

Job Submit: this layer is mainly our offline job scheduler Oflow, which handles task scheduling, DAG management, and job operations; its core function is task submission.

Job Control: this layer contains task control components such as HiveServer, Livy, and Olivia, which submit tasks to the clusters and manage them.

Compute Engine: the engine layer mainly uses Spark and MR.

Shuffle Service: this layer provides shuffle services for the Spark engine; in the future it will also host shuffle data for the Flink engine.

MetaData Control: Waggle Dance, the Metastores, and the underlying MySQL instances form our metadata control layer. Waggle Dance makes our metadata management more flexible.

Resource Control: this layer holds our computing resources. Yarn Router controls job routing across clusters, and each YARN cluster handles resource management and job execution. Besides our own Router policies, we have also explored more scheduling modes in RM resource scheduling, such as dynamic labels, resource oversubscription limits, and smarter preemptive scheduling.

5 Development Outlook of OPPO Offline Computing Platform

Technology keeps evolving, and what OPPO's offline computing will look like in the future is a question we keep thinking about. We approach it from both horizontal and vertical directions.

5.1 Horizontal thinking

Horizontally, we consider integrating with other resources and computing models.

We are working with the elastic computing team to connect big data with cloud resources. By exploiting the complementary peak hours of online services and big data computing, we aim to make full use of the company's existing resources and achieve hybrid online/offline scheduling.

At the same time, we are working with the real-time computing team to explore scheduling modes better suited to real-time computing.

5.2 Vertical thinking

Vertically, we think about how to make the existing architecture deeper and more refined.

Big HBO: we are exploring an architecture upgrade around a "big HBO" (history-based optimization) concept, applying HBO all the way from Oflow to YARN scheduling to the Spark and OLAP engines. The core goal is faster, more automated, and lower-cost computing.

Continued shuffle evolution: we are considering how Shuffle should evolve next, integrating it more tightly with engine job scheduling to offer a pipelined computing model for Spark batch jobs. We are also considering adding a Shuffle Sorter role to the Shuffle Service, moving sorting into the Shuffle Service layer and parallelizing it to speed up Spark's sort operator.

Finally, thank you all for your attention, and welcome everyone to exchange more technical thoughts on big data computing.

Author profile

David, Senior Data Platform Engineer at OPPO

He is mainly responsible for designing and developing OPPO's offline big data computing architecture. He previously worked on self-developed big data computing engines at leading domestic companies and has extensive experience building big data platforms.
