Hi everyone, this is Zhang Jintao.

Cilium is open source software based on eBPF that provides secure, observable network connectivity between container workloads.

If you don’t know much about Cilium, you can refer to my two previous articles:

- K8S Ecosystem Weekly | Google chooses Cilium as GKE's next-generation data plane

- Cilium hands-on practice

Cilium v1.11.0 was recently released, adding OpenTelemetry support and other enhancements. At the same time, the Cilium Service Mesh project was announced. Cilium Service Mesh is currently in beta and is expected to be merged into Cilium v1.12 in 2022.

Cilium Service Mesh also brings a brand new model.

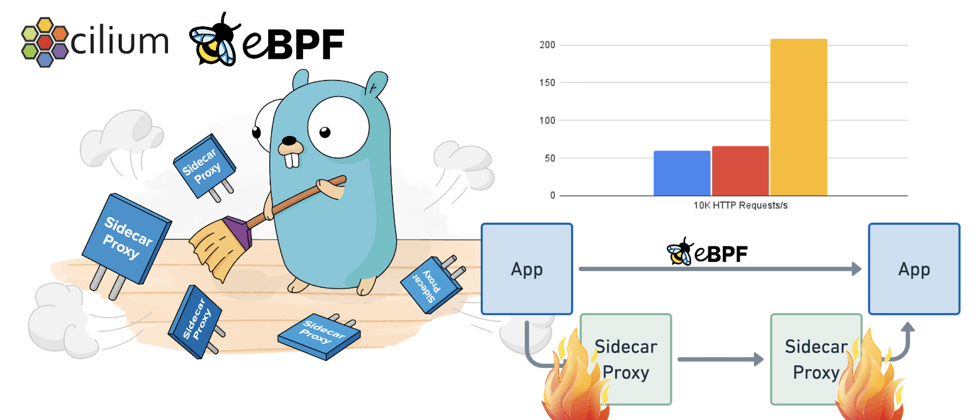

Compared with conventional solutions such as Istio or Linkerd, the most notable feature of Cilium's Service Mesh, which is implemented directly with eBPF, is that it replaces the sidecar proxy model with a kernel model, as illustrated in the figure above.

There is no longer any need to run a sidecar next to each application; support is provided directly on each node.
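A deliberately simplified way to see the difference is to count proxy instances. The sketch below assumes one sidecar per application Pod versus one shared proxy per node. The Pod counts are made-up example values. It also ignores the fact that in Cilium's design, traffic without L7 rules is handled in eBPF and may never reach a proxy at all.

```python
def proxy_counts(pods_per_node: list[int]) -> tuple[int, int]:
    """Return (sidecar_proxies, per_node_proxies) for a simplified cluster model.

    - Sidecar model: every application Pod carries its own proxy container.
    - Per-node model: each node runs one shared proxy, however many Pods it hosts.
    """
    sidecar_proxies = sum(pods_per_node)
    per_node_proxies = len(pods_per_node)
    return sidecar_proxies, per_node_proxies


# Hypothetical cluster: 4 nodes running 3, 5, 2 and 6 application Pods.
sidecar, per_node = proxy_counts([3, 5, 2, 6])
print(f"sidecar model: {sidecar} proxies, per-node model: {per_node} proxies")
# prints: sidecar model: 16 proxies, per-node model: 4 proxies
```

In this model, the sidecar count grows with the number of Pods, while the per-node count grows only with the number of nodes.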

I heard about this a few months ago and discussed it with others at the time. More recently, the article "How eBPF will solve Service Mesh - Goodbye Sidecars" has put Cilium Service Mesh in the spotlight.

In this article, I will walk you through a hands-on look at Cilium Service Mesh.

Installation and deployment

Here I use KIND as the test environment, and my kernel version is 5.15.8.

Prepare KIND cluster

I won't go into how to install the KIND command-line tool here. Interested readers can refer to my previous article "Using KIND to build your own local Kubernetes test environment".

The following is the configuration file I used to create the cluster:

```yaml
apiVersion: kind.x-k8s.io/v1alpha4
kind: Cluster
nodes:
- role: control-plane
- role: worker
- role: worker
- role: worker
networking:
  disableDefaultCNI: true
```

Create the cluster:

```
➜ cilium-mesh kind create cluster --config kind-config.yaml
Creating cluster "kind" ...
 ✓ Ensuring node image (kindest/node:v1.22.4) 🖼
 ✓ Preparing nodes 📦 📦 📦 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing StorageClass 💾
 ✓ Joining worker nodes 🚜
Set kubectl context to "kind-kind"
You can now use your cluster with:

kubectl cluster-info --context kind-kind

Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/
```

Install Cilium CLI

Here we use the Cilium CLI tool to deploy Cilium.

```
➜ cilium-mesh curl -L --remote-name-all https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz\{,.sha256sum\}

[1/2]: https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz --> cilium-linux-amd64.tar.gz
--_curl_--https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100   154  100   154    0     0    243      0 --:--:-- --:--:-- --:--:--   242
100   664  100   664    0     0    579      0  0:00:01  0:00:01 --:--:--   579
100 14.6M  100 14.6M    0     0  2928k      0  0:00:05  0:00:05 --:--:-- 3910k

[2/2]: https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz.sha256sum --> cilium-linux-amd64.tar.gz.sha256sum
--_curl_--https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz.sha256sum
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100   164  100   164    0     0    419      0 --:--:-- --:--:-- --:--:--   418
100   674  100   674    0     0    861      0 --:--:-- --:--:-- --:--:--   861
100    92  100    92    0     0     67      0  0:00:01  0:00:01 --:--:--     0
➜ cilium-mesh ls
cilium-linux-amd64.tar.gz  cilium-linux-amd64.tar.gz.sha256sum  kind-config.yaml
```
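The download also fetched cilium-linux-amd64.tar.gz.sha256sum, but the session above never uses it. On Linux, running sha256sum --check cilium-linux-amd64.tar.gz.sha256sum performs the check. The Python sketch below does the same thing. It assumes the checksum file uses the standard sha256sum layout (hex digest, whitespace, file name), and the function names are illustrative.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checksum(archive: Path, checksum_file: Path) -> bool:
    """Compare the archive's digest with the first field of the checksum file.

    Assumes the usual sha256sum layout: "<hex digest>  <file name>".
    """
    expected = checksum_file.read_text().split()[0].lower()
    return sha256_of(archive) == expected


if __name__ == "__main__":
    archive = Path("cilium-linux-amd64.tar.gz")
    checksum_file = Path("cilium-linux-amd64.tar.gz.sha256sum")
    # Only run the check when both downloaded files are present.
    if archive.exists() and checksum_file.exists():
        ok = verify_checksum(archive, checksum_file)
        print("checksum OK" if ok else "checksum MISMATCH: do not extract")
```

Reading in chunks keeps memory use flat even for the roughly 15 MB archive.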

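```
➜ cilium-mesh tar -zxvf cilium-linux-amd64.tar.gz
cilium
```

The following commands invoke cilium directly, so move the extracted binary to a directory on your PATH (for example, /usr/local/bin).

Load image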

Deploying Cilium requires several images. We can pull them in advance and load them into the KIND nodes. If your network connection is reliable, you can skip this step.

```
➜ cilium-mesh ciliumMeshImage=("quay.io/cilium/cilium-service-mesh:v1.11.0-beta.1" "quay.io/cilium/operator-generic-service-mesh:v1.11.0-beta.1" "quay.io/cilium/hubble-relay-service-mesh:v1.11.0-beta.1")
➜ cilium-mesh for i in "${ciliumMeshImage[@]}"
do
    docker pull "$i"
    kind load docker-image "$i"
done
```

Deploy Cilium

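Next, we use the Cilium CLI directly to complete the deployment. Pay attention to the parameters. The agent and operator images are the service-mesh beta builds. The --config enable-envoy-config=true option turns on the Envoy configuration support that the CiliumEnvoyConfig test later in this article relies on.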

```
➜ cilium-mesh cilium install --version -service-mesh:v1.11.0-beta.1 --config enable-envoy-config=true --kube-proxy-replacement=probe --agent-image='quay.io/cilium/cilium-service-mesh:v1.11.0-beta.1' --operator-image='quay.io/cilium/operator-generic-service-mesh:v1.11.0-beta.1' --datapath-mode=vxlan
🔮 Auto-detected Kubernetes kind: kind
✨ Running "kind" validation checks
✅ Detected kind version "0.12.0"
ℹ️  using Cilium version "-service-mesh:v1.11.0-beta.1"
🔮 Auto-detected cluster name: kind-kind
🔮 Auto-detected IPAM mode: kubernetes
🔮 Custom datapath mode: vxlan
🔑 Found CA in secret cilium-ca
🔑 Generating certificates for Hubble...
🚀 Creating Service accounts...
🚀 Creating Cluster roles...
🚀 Creating ConfigMap for Cilium version 1.11.0...
ℹ️  Manual overwrite in ConfigMap: enable-envoy-config=true
🚀 Creating Agent DaemonSet...
🚀 Creating Operator Deployment...
⌛ Waiting for Cilium to be installed and ready...
✅ Cilium was successfully installed! Run 'cilium status' to view installation health
```

Check status

After a successful installation, you can run the cilium status command to check the current state of the Cilium deployment.

```
➜ cilium-mesh cilium status
    /¯¯\
 /¯¯\__/¯¯\    Cilium:         OK
 \__/¯¯\__/    Operator:       OK
 /¯¯\__/¯¯\    Hubble:         disabled
 \__/¯¯\__/    ClusterMesh:    disabled
    \__/

Deployment        cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
DaemonSet         cilium             Desired: 4, Ready: 4/4, Available: 4/4
Containers:       cilium             Running: 4
                  cilium-operator    Running: 1
Cluster Pods:     3/3 managed by Cilium
Image versions    cilium             quay.io/cilium/cilium-service-mesh:v1.11.0-beta.1: 4
                  cilium-operator    quay.io/cilium/operator-generic-service-mesh:v1.11.0-beta.1: 1
```

Enable Hubble

Hubble provides observability. Before enabling it, you may want to preload the following image. If your network connection is reliable, you can skip this step.

```
docker.io/envoyproxy/envoy:v1.18.2@sha256:e8b37c1d75787dd1e712ff389b0d37337dc8a174a63bed9c34ba73359dc67da7
```

Then use the Cilium CLI to enable Hubble:

```
➜ cilium-mesh cilium hubble enable --relay-image='quay.io/cilium/hubble-relay-service-mesh:v1.11.0-beta.1' --ui
🔑 Found CA in secret cilium-ca
✨ Patching ConfigMap cilium-config to enable Hubble...
♻️  Restarted Cilium pods
⌛ Waiting for Cilium to become ready before deploying other Hubble component(s)...
🔑 Generating certificates for Relay...
✨ Deploying Relay from quay.io/cilium/hubble-relay-service-mesh:v1.11.0-beta.1...
✨ Deploying Hubble UI from quay.io/cilium/hubble-ui:v0.8.3 and Hubble UI Backend from quay.io/cilium/hubble-ui-backend:v0.8.3...
⌛ Waiting for Hubble to be installed...
    /¯¯\
 /¯¯\__/¯¯\    Cilium:         OK
 \__/¯¯\__/    Operator:       OK
 /¯¯\__/¯¯\    Hubble:         OK
 \__/¯¯\__/    ClusterMesh:    disabled
    \__/

DaemonSet         cilium             Desired: 4, Ready: 4/4, Available: 4/4
Deployment        cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
Deployment        hubble-relay       Desired: 1, Ready: 1/1, Available: 1/1
Deployment        hubble-ui          Desired: 1, Unavailable: 1/1
Containers:       cilium             Running: 4
                  cilium-operator    Running: 1
                  hubble-relay       Running: 1
                  hubble-ui          Running: 1
Cluster Pods:     5/5 managed by Cilium
Image versions    cilium             quay.io/cilium/cilium-service-mesh:v1.11.0-beta.1: 4
                  cilium-operator    quay.io/cilium/operator-generic-service-mesh:v1.11.0-beta.1: 1
                  hubble-relay       quay.io/cilium/hubble-relay-service-mesh:v1.11.0-beta.1: 1
                  hubble-ui          quay.io/cilium/hubble-ui:v0.8.3: 1
                  hubble-ui          quay.io/cilium/hubble-ui-backend:v0.8.3: 1
                  hubble-ui          docker.io/envoyproxy/envoy:v1.18.2@sha256:e8b37c1d75787dd1e712ff389b0d37337dc8a174a63bed9c34ba73359dc67da7: 1
```

Test Layer 7 Ingress traffic management

Install LB

Here we install MetalLB in the KIND cluster so that LoadBalancer-type Services work, because Cilium creates a LoadBalancer-type Service for Ingress by default. If you don't install MetalLB, you can use the Service's NodePort instead.

I won't explain each step in detail; just follow the commands below.

```
➜ cilium-mesh kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/master/manifests/namespace.yaml
namespace/metallb-system created
➜ cilium-mesh kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"
secret/memberlist created
➜ cilium-mesh kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/master/manifests/metallb.yaml
Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
podsecuritypolicy.policy/controller created
podsecuritypolicy.policy/speaker created
serviceaccount/controller created
serviceaccount/speaker created
clusterrole.rbac.authorization.k8s.io/metallb-system:controller created
clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created
role.rbac.authorization.k8s.io/config-watcher created
role.rbac.authorization.k8s.io/pod-lister created
role.rbac.authorization.k8s.io/controller created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created
clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created
rolebinding.rbac.authorization.k8s.io/config-watcher created
rolebinding.rbac.authorization.k8s.io/pod-lister created
rolebinding.rbac.authorization.k8s.io/controller created
daemonset.apps/speaker created
deployment.apps/controller created
➜ cilium-mesh docker network inspect -f '{{.IPAM.Config}}' kind
[{172.18.0.0/16 172.18.0.1 map[]} {fc00:f853:ccd:e793::/64 fc00:f853:ccd:e793::1 map[]}]
```

```
➜ cilium-mesh vim kind-lb-cm.yaml
➜ cilium-mesh cat kind-lb-cm.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.18.255.200-172.18.255.250
```
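The address pool has to come from the kind Docker network reported by docker network inspect (172.18.0.0/16 here). The chosen range sits at the top of that subnet, well away from the low addresses Docker assigns to the node containers; the connectivity test later in this article shows nodes at 172.18.0.2 through 172.18.0.5. A small check with Python's standard ipaddress module confirms that the range lies inside the subnet and counts how many LoadBalancer IPs it provides. The function name is illustrative.

```python
import ipaddress


def check_pool(subnet: str, first: str, last: str) -> int:
    """Return how many addresses the range first..last holds.

    Raises ValueError if the range is reversed or not inside the subnet.
    """
    net = ipaddress.ip_network(subnet)
    start, end = ipaddress.ip_address(first), ipaddress.ip_address(last)
    if start > end:
        raise ValueError("range start is after range end")
    if start not in net or end not in net:
        raise ValueError(f"{first}-{last} is not inside {subnet}")
    return int(end) - int(start) + 1


# Values from this article: the kind network and the MetalLB address pool.
print(check_pool("172.18.0.0/16", "172.18.255.200", "172.18.255.250"))  # prints 51
```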

```
➜ cilium-mesh kubectl apply -f kind-lb-cm.yaml
configmap/config created
```

Load image

Here we use hashicorp/http-echo:0.2.3 as the sample application. It responds with different content depending on its startup arguments.

```
➜ cilium-mesh docker pull hashicorp/http-echo:0.2.3
0.2.3: Pulling from hashicorp/http-echo
86399148984b: Pull complete
Digest: sha256:ba27d460cd1f22a1a4331bdf74f4fccbc025552357e8a3249c40ae216275de96
Status: Downloaded newer image for hashicorp/http-echo:0.2.3
docker.io/hashicorp/http-echo:0.2.3
➜ cilium-mesh kind load docker-image hashicorp/http-echo:0.2.3
Image: "hashicorp/http-echo:0.2.3" with ID "sha256:a6838e9a6ff6ab3624720a7bd36152dda540ce3987714398003e14780e61478a" not yet present on node "kind-worker", loading...
Image: "hashicorp/http-echo:0.2.3" with ID "sha256:a6838e9a6ff6ab3624720a7bd36152dda540ce3987714398003e14780e61478a" not yet present on node "kind-worker2", loading...
Image: "hashicorp/http-echo:0.2.3" with ID "sha256:a6838e9a6ff6ab3624720a7bd36152dda540ce3987714398003e14780e61478a" not yet present on node "kind-control-plane", loading...
Image: "hashicorp/http-echo:0.2.3" with ID "sha256:a6838e9a6ff6ab3624720a7bd36152dda540ce3987714398003e14780e61478a" not yet present on node "kind-worker3", loading...
```

Deploy the test services

All configuration files in this article are available in the https://github.com/tao12345666333/practical-kubernetes/tree/main/cilium-mesh repository.

We use the following configuration to deploy the test services:

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: foo-app
  name: foo-app
spec:
  containers:
  - image: hashicorp/http-echo:0.2.3
    args:
    - "-text=foo"
    name: foo-app
    ports:
    - containerPort: 5678
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    run: foo-app
  name: foo-app
spec:
  ports:
  - port: 5678
    protocol: TCP
    targetPort: 5678
  selector:
    run: foo-app
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: bar-app
  name: bar-app
spec:
  containers:
  - image: hashicorp/http-echo:0.2.3
    args:
    - "-text=bar"
    name: bar-app
    ports:
    - containerPort: 5678
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  labels:
    run: bar-app
  name: bar-app
spec:
  ports:
  - port: 5678
    protocol: TCP
    targetPort: 5678
  selector:
    run: bar-app
```

Create the following Ingress resource file:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: cilium-ingress
  namespace: default
spec:
  ingressClassName: cilium
  rules:
  - http:
      paths:
      - backend:
          service:
            name: foo-app
            port:
              number: 5678
        path: /foo
        pathType: Prefix
      - backend:
          service:
            name: bar-app
            port:
              number: 5678
        path: /bar
        pathType: Prefix
```

Create the Ingress resource. A new LoadBalancer-type Service is generated for it:

```
➜ cilium-mesh kubectl apply -f cilium-ingress.yaml
ingress.networking.k8s.io/cilium-ingress created
➜ cilium-mesh kubectl get svc
NAME                            TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)        AGE
bar-app                         ClusterIP      10.96.229.141   <none>           5678/TCP       106s
cilium-ingress-cilium-ingress   LoadBalancer   10.96.161.128   172.18.255.200   80:31643/TCP   4s
foo-app                         ClusterIP      10.96.166.212   <none>           5678/TCP       106s
kubernetes                      ClusterIP      10.96.0.1       <none>           443/TCP        81m
➜ cilium-mesh kubectl get ing
NAME             CLASS    HOSTS   ADDRESS          PORTS   AGE
cilium-ingress   cilium   *       172.18.255.200   80      1m
```

Test

Test access with curl: the responses match the rules in the Ingress resource. The response headers (Server: envoy) show that the proxying is actually done by Envoy.

```
➜ cilium-mesh curl 172.18.255.200
➜ cilium-mesh curl 172.18.255.200/foo
foo
➜ cilium-mesh curl 172.18.255.200/bar
bar
➜ cilium-mesh curl -I 172.18.255.200/bar
HTTP/1.1 200 OK
Content-Length: 4
Connection: keep-alive
Content-Type: text/plain; charset=utf-8
Date: Sat, 18 Dec 2021 06:02:56 GMT
Keep-Alive: timeout=4
Proxy-Connection: keep-alive
Server: envoy
X-App-Name: http-echo
X-App-Version: 0.2.3
X-Envoy-Upstream-Service-Time: 0

➜ cilium-mesh curl -I 172.18.255.200/foo
HTTP/1.1 200 OK
Content-Length: 4
Connection: keep-alive
Content-Type: text/plain; charset=utf-8
Date: Sat, 18 Dec 2021 06:03:01 GMT
Keep-Alive: timeout=4
Proxy-Connection: keep-alive
Server: envoy
X-App-Name: http-echo
X-App-Version: 0.2.3
X-Envoy-Upstream-Service-Time: 0
```
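These results are consistent with how Kubernetes defines pathType: Prefix. The path is compared element by element on its /-separated parts, so /foo matches /foo and /foo/extra but not /foobar. A bare / matches neither rule here, which fits the empty response to curl 172.18.255.200. The Python sketch below models only that Kubernetes rule, with the longest match winning. It is not Cilium's or Envoy's actual matching code, and the names are illustrative.

```python
from typing import Optional

# Path rules from the cilium-ingress resource above (both use pathType: Prefix).
RULES = {"/foo": "foo-app:5678", "/bar": "bar-app:5678"}


def path_elements(path: str) -> list[str]:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def match_prefix(request_path: str, rules: dict[str, str]) -> Optional[str]:
    """Return the backend of the longest element-wise Prefix match, or None."""
    request = path_elements(request_path.split("?", 1)[0])  # ignore the query string
    best_backend, best_len = None, -1
    for rule_path, backend in rules.items():
        rule = path_elements(rule_path)
        # A rule matches when its elements are a prefix of the request's elements.
        if request[: len(rule)] == rule and len(rule) > best_len:
            best_backend, best_len = backend, len(rule)
    return best_backend


for path in ["/", "/foo", "/bar", "/foo/extra", "/foobar"]:
    print(f"{path:<12} -> {match_prefix(path, RULES)}")
```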

Test CiliumEnvoyConfig

Deploying Cilium as described above also installs several CRDs. One of them, CiliumEnvoyConfig, is used to configure the proxy between services.

```
➜ cilium-mesh kubectl api-resources |grep cilium.io
ciliumclusterwidenetworkpolicies   ccnp           cilium.io/v2         false   CiliumClusterwideNetworkPolicy
ciliumendpoints                    cep,ciliumep   cilium.io/v2         true    CiliumEndpoint
ciliumenvoyconfigs                 cec            cilium.io/v2alpha1   false   CiliumEnvoyConfig
ciliumexternalworkloads            cew            cilium.io/v2         false   CiliumExternalWorkload
ciliumidentities                   ciliumid       cilium.io/v2         false   CiliumIdentity
ciliumnetworkpolicies              cnp,ciliumnp   cilium.io/v2         true    CiliumNetworkPolicy
ciliumnodes                        cn,ciliumn     cilium.io/v2         false   CiliumNode
```

Deploy the test services

You can start the Hubble port-forward first. The command keeps running, so leave it in a separate terminal or run it in the background:

```
➜ cilium-mesh cilium hubble port-forward
```

By default, it listens on local port 4245. If you skip this step, you will see the following output:

```
🔭 Enabling Hubble telescope...
⚠️  Unable to contact Hubble Relay, disabling Hubble telescope and flow validation: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial tcp [::1]:4245: connect: connection refused"
```

If the Hubble port-forward is running, you should normally get the following output:

```
➜ cilium-mesh cilium connectivity test --test egress-l7
ℹ️  Monitor aggregation detected, will skip some flow validation steps
⌛ [kind-kind] Waiting for deployments [client client2 echo-same-node] to become ready...
⌛ [kind-kind] Waiting for deployments [echo-other-node] to become ready...
⌛ [kind-kind] Waiting for CiliumEndpoint for pod cilium-test/client-6488dcf5d4-pk6w9 to appear...
⌛ [kind-kind] Waiting for CiliumEndpoint for pod cilium-test/client2-5998d566b4-hrhrb to appear...
⌛ [kind-kind] Waiting for CiliumEndpoint for pod cilium-test/echo-other-node-f4d46f75b-bqpcb to appear...
⌛ [kind-kind] Waiting for CiliumEndpoint for pod cilium-test/echo-same-node-745bd5c77-zpzdn to appear...
⌛ [kind-kind] Waiting for Service cilium-test/echo-other-node to become ready...
⌛ [kind-kind] Waiting for Service cilium-test/echo-same-node to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.5:32751 (cilium-test/echo-other-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.5:32133 (cilium-test/echo-same-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.3:32133 (cilium-test/echo-same-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.3:32751 (cilium-test/echo-other-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.2:32751 (cilium-test/echo-other-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.2:32133 (cilium-test/echo-same-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.4:32751 (cilium-test/echo-other-node) to become ready...
⌛ [kind-kind] Waiting for NodePort 172.18.0.4:32133 (cilium-test/echo-same-node) to become ready...
ℹ️  Skipping IPCache check
⌛ [kind-kind] Waiting for pod cilium-test/client-6488dcf5d4-pk6w9 to reach default/kubernetes service...
⌛ [kind-kind] Waiting for pod cilium-test/client2-5998d566b4-hrhrb to reach default/kubernetes service...
🔭 Enabling Hubble telescope...
ℹ️  Hubble is OK, flows: 16380/16380
🏃 Running tests...

[=] Skipping Test [no-policies]
[=] Skipping Test [allow-all]
[=] Skipping Test [client-ingress]
[=] Skipping Test [echo-ingress]
[=] Skipping Test [client-egress]
[=] Skipping Test [to-entities-world]
[=] Skipping Test [to-cidr-1111]
[=] Skipping Test [echo-ingress-l7]
[=] Test [client-egress-l7]
..........
[=] Skipping Test [dns-only]
[=] Skipping Test [to-fqdns]

✅ All 1 tests (10 actions) successful, 10 tests skipped, 0 scenarios skipped.
```

We can also open the Hubble UI to take a look:

```
➜ cilium-mesh cilium hubble ui
ℹ️  Opening "http://localhost:12000" in your browser...
```

The result is shown in the following screenshot:

The connectivity test actually deploys the following resources:

```
➜ cilium-mesh kubectl -n cilium-test get all
NAME                                  READY   STATUS    RESTARTS   AGE
pod/client-6488dcf5d4-pk6w9           1/1     Running   0          66m
pod/client2-5998d566b4-hrhrb          1/1     Running   0          66m
pod/echo-other-node-f4d46f75b-bqpcb   1/1     Running   0          66m
pod/echo-same-node-745bd5c77-zpzdn    1/1     Running   0          66m

NAME                      TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
service/echo-other-node   NodePort   10.96.124.211   <none>        8080:32751/TCP   66m
service/echo-same-node    NodePort   10.96.136.252   <none>        8080:32133/TCP   66m

NAME                              READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/client            1/1     1            1           66m
deployment.apps/client2           1/1     1            1           66m
deployment.apps/echo-other-node   1/1     1            1           66m
deployment.apps/echo-same-node    1/1     1            1           66m

NAME                                        DESIRED   CURRENT   READY   AGE
replicaset.apps/client-6488dcf5d4           1         1         1       66m
replicaset.apps/client2-5998d566b4          1         1         1       66m
replicaset.apps/echo-other-node-f4d46f75b   1         1         1       66m
replicaset.apps/echo-same-node-745bd5c77    1         1         1       66m
```

We can also look at their labels:

```
➜ cilium-mesh kubectl get pods -n cilium-test --show-labels -o wide
NAME                              READY   STATUS    RESTARTS   AGE   IP             NODE           NOMINATED NODE   READINESS GATES   LABELS
client-6488dcf5d4-pk6w9           1/1     Running   0          67m   10.244.3.7     kind-worker3   <none>           <none>            kind=client,name=client,pod-template-hash=6488dcf5d4
client2-5998d566b4-hrhrb          1/1     Running   0          67m   10.244.3.18    kind-worker3   <none>           <none>            kind=client,name=client2,other=client,pod-template-hash=5998d566b4
echo-other-node-f4d46f75b-bqpcb   1/1     Running   0          67m   10.244.1.146   kind-worker2   <none>           <none>            kind=echo,name=echo-other-node,pod-template-hash=f4d46f75b
echo-same-node-745bd5c77-zpzdn    1/1     Running   0          67m   10.244.3.164   kind-worker3   <none>           <none>            kind=echo,name=echo-same-node,other=echo,pod-template-hash=745bd5c77
```

Test

Working from the host, first get the name of the client2 Pod. Then use the hubble command (the Hubble CLI, which is installed separately from the Cilium CLI) to follow all flows originating from that Pod.

```
➜ cilium-mesh export CLIENT2=client2-5998d566b4-hrhrb
➜ cilium-mesh hubble observe --from-pod cilium-test/$CLIENT2 -f
Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:44805 <> kube-system/coredns-78fcd69978-7lbwh:53 to-overlay FORWARDED (UDP)
Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:44805 -> kube-system/coredns-78fcd69978-7lbwh:53 to-endpoint FORWARDED (UDP)
Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:44805 <> kube-system/coredns-78fcd69978-7lbwh:53 to-overlay FORWARDED (UDP)
Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:44805 -> kube-system/coredns-78fcd69978-7lbwh:53 to-endpoint FORWARDED (UDP)
Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:42260 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: SYN)
Dec 18 14:07:37.201: cilium-test/client2-5998d566b4-hrhrb:42260 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: ACK)
Dec 18 14:07:37.201: cilium-test/client2-5998d566b4-hrhrb:42260 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:07:37.202: cilium-test/client2-5998d566b4-hrhrb:42260 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:07:37.203: cilium-test/client2-5998d566b4-hrhrb:42260 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: ACK)
Dec 18 14:07:50.769: cilium-test/client2-5998d566b4-hrhrb:36768 <> kube-system/coredns-78fcd69978-7lbwh:53 to-overlay FORWARDED (UDP)
Dec 18 14:07:50.769: cilium-test/client2-5998d566b4-hrhrb:36768 <> kube-system/coredns-78fcd69978-7lbwh:53 to-overlay FORWARDED (UDP)
Dec 18 14:07:50.769: cilium-test/client2-5998d566b4-hrhrb:36768 -> kube-system/coredns-78fcd69978-7lbwh:53 to-endpoint FORWARDED (UDP)
Dec 18 14:07:50.769: cilium-test/client2-5998d566b4-hrhrb:36768 -> kube-system/coredns-78fcd69978-7lbwh:53 to-endpoint FORWARDED (UDP)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 <> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: SYN)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-endpoint FORWARDED (TCP Flags: SYN)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 <> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: ACK)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-endpoint FORWARDED (TCP Flags: ACK)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 <> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-endpoint FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:07:50.771: cilium-test/client2-5998d566b4-hrhrb:42068 <> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:07:50.771: cilium-test/client2-5998d566b4-hrhrb:42068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-endpoint FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:07:50.772: cilium-test/client2-5998d566b4-hrhrb:42068 <> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: ACK)
Dec 18 14:07:50.772: cilium-test/client2-5998d566b4-hrhrb:42068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-endpoint FORWARDED (TCP Flags: ACK)
```

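The output above was produced by the following requests:

```
kubectl exec -it -n cilium-test $CLIENT2 -- curl -v echo-same-node:8080/
kubectl exec -it -n cilium-test $CLIENT2 -- curl -v echo-other-node:8080/
```

Every flow is either to-endpoint or to-overlay. The request to echo-same-node is delivered straight to the endpoint, while the request to echo-other-node, which runs on another node, also crosses the VXLAN overlay.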
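To compare runs with and without an L7 policy, the column to watch is the observation point: to-endpoint and to-overlay here, and also to-proxy, L3-L4 and http-request once a policy is in place. The Python sketch below tallies that column from saved hubble observe output. It assumes the compact one-line format exactly as printed in this article, which may differ between Hubble versions; for scripting, hubble observe -o json is the sturdier option. The names are illustrative.

```python
import re
from collections import Counter

# One flow per line, in the compact format shown in this article:
#   <timestamp>: <source> -> (or <>) <destination> <observation point> <VERDICT> (<summary>)
FLOW_RE = re.compile(
    r"^(?P<ts>\w{3} +\d+ [\d:.]+): (?P<src>\S+) (?P<dir>->|<>) (?P<dst>\S+) "
    r"(?P<point>\S+) (?P<verdict>[A-Z]+) \((?P<summary>.*)\)$"
)


def tally(lines: list[str]) -> Counter:
    """Count (observation point, verdict) pairs; lines that don't parse are skipped."""
    counts: Counter = Counter()
    for line in lines:
        match = FLOW_RE.match(line.strip())
        if match:
            counts[(match["point"], match["verdict"])] += 1
    return counts


# Three lines copied from the Hubble output in this article.
sample = [
    "Dec 18 14:07:37.200: cilium-test/client2-5998d566b4-hrhrb:42260 -> "
    "cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-endpoint FORWARDED (TCP Flags: SYN)",
    "Dec 18 14:07:50.770: cilium-test/client2-5998d566b4-hrhrb:42068 <> "
    "cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-overlay FORWARDED (TCP Flags: SYN)",
    "Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> "
    "cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request DROPPED "
    "(HTTP/1.1 GET http://echo-same-node:8080/foo)",
]
for (point, verdict), count in sorted(tally(sample).items()):
    print(f"{point:<14} {verdict:<10} {count}")
```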

Test using proxy

First we need to apply the network policies, which we can take directly from the Cilium CLI repository:

```
kubectl apply -f https://raw.githubusercontent.com/cilium/cilium-cli/master/connectivity/manifests/client-egress-l7-http.yaml
kubectl apply -f https://raw.githubusercontent.com/cilium/cilium-cli/master/connectivity/manifests/client-egress-only-dns.yaml
```

Then repeat the echo-other-node request from above. The Hubble output now looks like this:

```
Dec 18 14:33:40.570: cilium-test/client2-5998d566b4-hrhrb:44344 -> kube-system/coredns-78fcd69978-2ww28:53 L3-L4 REDIRECTED (UDP)
Dec 18 14:33:40.570: cilium-test/client2-5998d566b4-hrhrb:44344 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 14:33:40.570: cilium-test/client2-5998d566b4-hrhrb:44344 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 14:33:40.570: cilium-test/client2-5998d566b4-hrhrb:44344 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-other-node.cilium-test.svc.cluster.local. A)
Dec 18 14:33:40.570: cilium-test/client2-5998d566b4-hrhrb:44344 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-other-node.cilium-test.svc.cluster.local. AAAA)
Dec 18 14:33:40.571: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 L3-L4 REDIRECTED (TCP Flags: SYN)
Dec 18 14:33:40.571: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: SYN)
Dec 18 14:33:40.571: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: ACK)
Dec 18 14:33:40.571: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:33:40.572: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-other-node:8080/)
Dec 18 14:33:40.573: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:33:40.573: cilium-test/client2-5998d566b4-hrhrb:42074 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: ACK)
```

Run another request:

```
➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -v echo-same-node:8080/
```

Again, the output contains to-proxy entries:

```
Dec 18 14:45:18.857: cilium-test/client2-5998d566b4-hrhrb:58895 -> kube-system/coredns-78fcd69978-2ww28:53 L3-L4 REDIRECTED (UDP)
Dec 18 14:45:18.857: cilium-test/client2-5998d566b4-hrhrb:58895 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 14:45:18.857: cilium-test/client2-5998d566b4-hrhrb:58895 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)
Dec 18 14:45:18.857: cilium-test/client2-5998d566b4-hrhrb:58895 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. AAAA)
Dec 18 14:45:18.857: cilium-test/client2-5998d566b4-hrhrb:58895 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. A)
Dec 18 14:45:18.858: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 L3-L4 REDIRECTED (TCP Flags: SYN)
Dec 18 14:45:18.858: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: SYN)
Dec 18 14:45:18.858: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK)
Dec 18 14:45:18.858: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)
Dec 18 14:45:18.858: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)
Dec 18 14:45:18.859: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)
Dec 18 14:45:18.859: cilium-test/client2-5998d566b4-hrhrb:42266 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK)
```

It is actually easier to look at the response headers:

```
➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -I echo-same-node:8080/
HTTP/1.1 403 Forbidden
content-length: 15
content-type: text/plain
date: Sat, 18 Dec 2021 14:47:39 GMT
server: envoy
```

Note that curl -I sends a HEAD request rather than a GET. The Hubble log above shows GET / being forwarded, so the 403 here comes from Envoy (server: envoy) rejecting the HEAD method. Before the policy was applied, the response looked like this:

```
# Without the proxy
➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -v echo-same-node:8080/
*   Trying 10.96.136.252:8080...
* Connected to echo-same-node (10.96.136.252) port 8080 (#0)
> GET / HTTP/1.1
> Host: echo-same-node:8080
> User-Agent: curl/7.78.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< X-Powered-By: Express
< Vary: Origin, Accept-Encoding
< Access-Control-Allow-Credentials: true
< Accept-Ranges: bytes
< Cache-Control: public, max-age=0
< Last-Modified: Sat, 26 Oct 1985 08:15:00 GMT
< ETag: W/"809-7438674ba0"
< Content-Type: text/html; charset=UTF-8
< Content-Length: 2057
< Date: Sat, 18 Dec 2021 14:07:37 GMT
< Connection: keep-alive
< Keep-Alive: timeout=5
```

Now request a path that does not exist:

This request used to return 404; now it returns 403 with the following content:

```
➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -v echo-same-node:8080/foo
*   Trying 10.96.136.252:8080...
* Connected to echo-same-node (10.96.136.252) port 8080 (#0)
> GET /foo HTTP/1.1
> Host: echo-same-node:8080
> User-Agent: curl/7.78.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 403 Forbidden
< content-length: 15
< content-type: text/plain
< date: Sat, 18 Dec 2021 14:50:38 GMT
< server: envoy
<
Access denied
* Connection #0 to host echo-same-node left intact
```

The log also contains to-proxy entries, and this time the HTTP request itself is marked DROPPED:

Dec 18 14:50:39.185: cilium-test/client2-5998d566b4-hrhrb:37683 -> kube-system/coredns-78fcd69978-7lbwh:53 L3-L4 REDIRECTED (UDP)

Dec 18 14:50:39.185: cilium-test/client2-5998d566b4-hrhrb:37683 -> kube-system/coredns-78fcd69978-7lbwh:53 to-proxy FORWARDED (UDP)

Dec 18 14:50:39.185: cilium-test/client2-5998d566b4-hrhrb:37683 -> kube-system/coredns-78fcd69978-7lbwh:53 to-proxy FORWARDED (UDP)

Dec 18 14:50:39.185: cilium-test/client2-5998d566b4-hrhrb:37683 -> kube-system/coredns-78fcd69978-7lbwh:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. AAAA)

Dec 18 14:50:39.185: cilium-test/client2-5998d566b4-hrhrb:37683 -> kube-system/coredns-78fcd69978-7lbwh:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. A)

Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 L3-L4 REDIRECTED (TCP Flags: SYN)

Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: SYN)

Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK)

Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)

Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request DROPPED (HTTP/1.1 GET http://echo-same-node:8080/foo)

Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)

Dec 18 14:50:39.187: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK)

Next, we use the following CiliumEnvoyConfig as the Envoy configuration. It splits traffic between the echo-same-node and echo-other-node services and contains a path rewrite rule:

apiVersion: cilium.io/v2alpha1
kind: CiliumEnvoyConfig
metadata:
  name: envoy-lb-listener
spec:
  services:
    - name: echo-other-node
      namespace: cilium-test
    - name: echo-same-node
      namespace: cilium-test
  resources:
    - "@type": type.googleapis.com/envoy.config.listener.v3.Listener
      name: envoy-lb-listener
      filter_chains:
        - filters:
            - name: envoy.filters.network.http_connection_manager
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                stat_prefix: envoy-lb-listener
                rds:
                  route_config_name: lb_route
                http_filters:
                  - name: envoy.filters.http.router
    - "@type": type.googleapis.com/envoy.config.route.v3.RouteConfiguration
      name: lb_route
      virtual_hosts:
        - name: "lb_route"
          domains: ["*"]
          routes:
            - match:
                prefix: "/"
              route:
                weighted_clusters:
                  clusters:
                    - name: "cilium-test/echo-same-node"
                      weight: 50
                    - name: "cilium-test/echo-other-node"
                      weight: 50
                retry_policy:
                  retry_on: 5xx
                  num_retries: 3
                  per_try_timeout: 1s
                regex_rewrite:
                  pattern:
                    google_re2: {}
                    regex: "^/foo.*$"
                  substitution: "/"
    - "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
      name: "cilium-test/echo-same-node"
      connect_timeout: 5s
      lb_policy: ROUND_ROBIN
      type: EDS
      outlier_detection:
        split_external_local_origin_errors: true
        consecutive_local_origin_failure: 2
    - "@type": type.googleapis.com/envoy.config.cluster.v3.Cluster
      name: "cilium-test/echo-other-node"
      connect_timeout: 3s
      lb_policy: ROUND_ROBIN
      type: EDS
      outlier_detection:
        split_external_local_origin_errors: true
        consecutive_local_origin_failure: 2
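The route above splits traffic with weighted_clusters (50/50). As I understand Envoy's behavior, it chooses a cluster by drawing a random number, reducing it modulo the total weight, and walking the cumulative weights; the endpoint inside the chosen cluster is then picked by that cluster's own lb_policy (ROUND_ROBIN here). The Python sketch below models only the cluster-selection step. It is a simplification, not Envoy's code, and pick_cluster and CLUSTERS are hypothetical names.

```python
import random
from collections import Counter

# Weights copied from the route's weighted_clusters block.
CLUSTERS = [
    ("cilium-test/echo-same-node", 50),
    ("cilium-test/echo-other-node", 50),
]


def pick_cluster(clusters, rand_value):
    """Map a random integer onto a cluster name by cumulative weight (simplified model)."""
    total = sum(weight for _, weight in clusters)
    if total <= 0:
        raise ValueError("total weight must be positive")
    point = rand_value % total  # a point in [0, total)
    cumulative = 0
    for name, weight in clusters:
        cumulative += weight
        if point < cumulative:
            return name
    # Not reached: the last cumulative value equals total, and point < total.
    raise AssertionError("unreachable")


# Simulate 10,000 requests with a fixed seed so the run is repeatable.
rng = random.Random(42)
counts = Counter(pick_cluster(CLUSTERS, rng.getrandbits(64)) for _ in range(10_000))
print(counts)
```

With equal weights, each cluster should receive roughly half of the simulated requests, which is consistent with the alternating backends seen in the flow logs later in this article.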

A test request to / returns a normal response:

➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -X GET -I echo-same-node:8080/

HTTP/1.1 200 OK

x-powered-by: Express

vary: Origin, Accept-Encoding

access-control-allow-credentials: true

accept-ranges: bytes

cache-control: public, max-age=0

last-modified: Sat, 26 Oct 1985 08:15:00 GMT

etag: W/"809-7438674ba0"

content-type: text/html; charset=UTF-8

content-length: 2057

date: Sat, 18 Dec 2021 15:00:01 GMT

x-envoy-upstream-service-time: 1

server: envoy

A request to /foo now also returns 200 OK:

➜ cilium-mesh kubectl exec -it -n cilium-test $CLIENT2 -- curl -X GET -I echo-same-node:8080/foo

HTTP/1.1 200 OK

x-powered-by: Express

vary: Origin, Accept-Encoding

access-control-allow-credentials: true

accept-ranges: bytes

cache-control: public, max-age=0

last-modified: Sat, 26 Oct 1985 08:15:00 GMT

etag: W/"809-7438674ba0"

content-type: text/html; charset=UTF-8

content-length: 2057

date: Sat, 18 Dec 2021 15:01:40 GMT

x-envoy-upstream-service-time: 2

server: envoy

Meanwhile, the flow log for the /foo request shows that the path was rewritten successfully: the http-request event records a GET for / rather than /foo.

Dec 18 15:02:22.541: cilium-test/client2-5998d566b4-hrhrb:38860 -> kube-system/coredns-78fcd69978-2ww28:53 L3-L4 REDIRECTED (UDP)

Dec 18 15:02:22.541: cilium-test/client2-5998d566b4-hrhrb:38860 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)

Dec 18 15:02:22.541: cilium-test/client2-5998d566b4-hrhrb:38860 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)

Dec 18 15:02:22.541: cilium-test/client2-5998d566b4-hrhrb:38860 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. AAAA)

Dec 18 15:02:22.541: cilium-test/client2-5998d566b4-hrhrb:38860 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. A)

Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 none REDIRECTED (TCP Flags: SYN)

Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: SYN)

Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)

Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)

Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53048 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)

Dec 18 15:02:22.542: cilium-test/client2-5998d566b4-hrhrb:53048 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)

Dec 18 15:02:22.543: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)

Dec 18 15:02:22.544: cilium-test/client2-5998d566b4-hrhrb:53062 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)
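The rewrite recorded in this log comes from the route's regex_rewrite rule (pattern ^/foo.*$, substitution /). A small Python model of that rule makes one side effect visible: because the pattern has no path separator after /foo, a path such as /foobar is rewritten to / as well, while /bar/foo is left untouched. This sketch assumes Python's re module behaves like Envoy's google_re2 engine for this simple anchored pattern; rewrite_path is a hypothetical helper, not part of Cilium or Envoy.

```python
import re

# Model of the route's regex_rewrite block (pattern "^/foo.*$", substitution "/").
# Python's re module stands in for Envoy's google_re2 engine here; for this
# simple anchored pattern the two are assumed to behave the same.
FOO_PATTERN = re.compile(r"^/foo.*$")
SUBSTITUTION = "/"


def rewrite_path(path: str) -> str:
    """Return the path that would be forwarded upstream after the rewrite."""
    return FOO_PATTERN.sub(SUBSTITUTION, path)


for p in ["/", "/foo", "/foo/bar", "/foobar", "/bar/foo"]:
    print(f"{p:10} -> {rewrite_path(p)}")
```

If only /foo and paths under /foo/ should be rewritten, a tighter pattern such as ^/foo(/.*)?$ would leave /foobar alone.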

Sending several more requests produces the following log:

Dec 18 15:07:20.883: cilium-test/client2-5998d566b4-hrhrb:49656 -> kube-system/coredns-78fcd69978-2ww28:53 L3-L4 REDIRECTED (UDP)

Dec 18 15:07:20.883: cilium-test/client2-5998d566b4-hrhrb:49656 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)

Dec 18 15:07:20.883: cilium-test/client2-5998d566b4-hrhrb:49656 -> kube-system/coredns-78fcd69978-2ww28:53 to-proxy FORWARDED (UDP)

Dec 18 15:07:20.883: cilium-test/client2-5998d566b4-hrhrb:49656 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. A)

Dec 18 15:07:20.884: cilium-test/client2-5998d566b4-hrhrb:49656 -> kube-system/coredns-78fcd69978-2ww28:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. AAAA)

Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 none REDIRECTED (TCP Flags: SYN)

Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: SYN)

Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)

Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)

Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53064 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)

Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53064 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)

Dec 18 15:07:20.886: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)

Dec 18 15:07:20.886: cilium-test/client2-5998d566b4-hrhrb:53070 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)

Dec 18 15:07:26.086: cilium-test/client2-5998d566b4-hrhrb:53048 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 to-proxy FORWARDED (TCP Flags: ACK)

Dec 18 15:07:44.739: cilium-test/client2-5998d566b4-hrhrb:39057 -> kube-system/coredns-78fcd69978-7lbwh:53 L3-L4 REDIRECTED (UDP)

Dec 18 15:07:44.739: cilium-test/client2-5998d566b4-hrhrb:39057 -> kube-system/coredns-78fcd69978-7lbwh:53 to-proxy FORWARDED (UDP)

Dec 18 15:07:44.740: cilium-test/client2-5998d566b4-hrhrb:39057 -> kube-system/coredns-78fcd69978-7lbwh:53 to-proxy FORWARDED (UDP)

Dec 18 15:07:44.740: cilium-test/client2-5998d566b4-hrhrb:39057 -> kube-system/coredns-78fcd69978-7lbwh:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. AAAA)

Dec 18 15:07:44.740: cilium-test/client2-5998d566b4-hrhrb:39057 -> kube-system/coredns-78fcd69978-7lbwh:53 dns-request FORWARDED (DNS Query echo-same-node.cilium-test.svc.cluster.local. A)

Dec 18 15:07:44.741: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 none REDIRECTED (TCP Flags: SYN)

Dec 18 15:07:44.741: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: SYN)

Dec 18 15:07:44.741: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)

Dec 18 15:07:44.741: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)

Dec 18 15:07:44.742: cilium-test/client2-5998d566b4-hrhrb:53068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 to-proxy FORWARDED (TCP Flags: ACK, PSH)

Dec 18 15:07:44.742: cilium-test/client2-5998d566b4-hrhrb:53068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)

Dec 18 15:07:44.744: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK, FIN)

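Dec 18 15:07:44.744: cilium-test/client2-5998d566b4-hrhrb:53072 -> cilium-test/echo-same-node:8080 to-proxy FORWARDED (TCP Flags: ACK)

You can see that load balancing really works: both requests were sent to echo-same-node:8080, but the first was served by echo-same-node-745bd5c77-zpzdn and the second by echo-other-node-f4d46f75b-bqpcb.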
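Rather than reading the log line by line, you could tally which backend served each HTTP request. The sketch below is a hypothetical helper that parses the plain-text flow lines shown above (Hubble's compact output); the line format is assumed from this article's output only, and for anything beyond a quick check the JSON output of hubble observe would be more robust to parse. It also assumes Deployment-style pod names of the form deployment-hash-suffix.

```python
import re
from collections import Counter

# Three http-request lines copied from the flow logs above (one DROPPED, two FORWARDED).
FLOWS = """\
Dec 18 14:50:39.186: cilium-test/client2-5998d566b4-hrhrb:42274 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request DROPPED (HTTP/1.1 GET http://echo-same-node:8080/foo)
Dec 18 15:07:20.885: cilium-test/client2-5998d566b4-hrhrb:53064 -> cilium-test/echo-same-node-745bd5c77-zpzdn:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)
Dec 18 15:07:44.742: cilium-test/client2-5998d566b4-hrhrb:53068 -> cilium-test/echo-other-node-f4d46f75b-bqpcb:8080 http-request FORWARDED (HTTP/1.1 GET http://echo-same-node:8080/)
"""

# Matches "-> <ns>/<pod>:<port> http-request <VERDICT> (HTTP/x.y METHOD URL)".
HTTP_FLOW = re.compile(
    r"-> (?P<ns>[^/\s]+)/(?P<pod>\S+):\d+ http-request (?P<verdict>\w+) "
    r"\(HTTP/[\d.]+ (?P<method>\w+) (?P<url>\S+)\)"
)


def deployment_of(pod: str) -> str:
    """Drop the ReplicaSet hash and pod suffix (assumes Deployment-style names)."""
    return pod.rsplit("-", 2)[0]


def backends_served(log_text: str) -> Counter:
    """Count FORWARDED http-request flows per destination deployment."""
    counts = Counter()
    for line in log_text.splitlines():
        m = HTTP_FLOW.search(line)
        if m and m["verdict"] == "FORWARDED":
            counts[deployment_of(m["pod"])] += 1
    return counts


print(backends_served(FLOWS))
```

DNS and to-proxy lines do not match the pattern, so only L7 HTTP events are counted, and the DROPPED request is excluded by the verdict check.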

Summary

In this article, I walked you through deploying Cilium Service Mesh and used two examples to show how it works.

Overall, this approach brings some convenience, but traffic management between services currently relies mainly on CiliumEnvoyConfig, which means writing raw Envoy listener, route and cluster resources by hand and is not very convenient.

Let's look forward to seeing how it evolves!

You are welcome to follow my WeChat official account【MoeLove】.
