1 Offline scheduling system

In a big data system, raw data that has been collected must be integrated and processed by various logic before it becomes accurate, usable data that can serve commercial purposes and realize the value of big data. Across the whole processing flow, whether in the extract, transform, load (ETL) process or in the analysis and processing done by data users, there are a large number of processing tasks. These tasks are not isolated; they depend on and constrain one another. Scheduling and managing them efficiently is critical, because it affects the timeliness and accuracy of the data at every stage.

One of the simplest task scheduling systems is crontab, which ships with Linux and is simple to use and stable.

Using crontab at the start of a project is fine. As the number of scheduled tasks and their mutual dependencies grow, however, crontab falls far short of what development needs. Tasks take many forms and depend on each other in many ways, and a task can often run only after a series of upstream tasks has completed. For example, once upstream task A has finished its logic, downstream task B uses the data that A produced to generate its own data and results. To guarantee accurate and reliable data, tasks must therefore run in order, from upstream to downstream, according to their dependencies. The challenge is to schedule a large number of tasks accurately and without problems and, when an error does occur during scheduling or execution, to let tasks recover automatically, raise alarms, and provide complete logs for querying. This is the role that a big data offline task scheduling system plays.
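To make the idea of ordered, upstream-to-downstream execution concrete, here is a minimal sketch using Python's standard `graphlib` module (Python 3.9+). The task names and the `dependencies` mapping are hypothetical and only illustrate the ordering problem; this is not how crontab or OFLOW is implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each key maps to the set of upstream tasks it depends on.
dependencies = {
    "B": {"A"},        # B consumes the output of A
    "C": {"A"},        # C also consumes the output of A
    "D": {"B", "C"},   # D needs both B and C to finish first
}


def execution_order(deps):
    """Return one valid upstream-to-downstream order.

    graphlib raises CycleError if the dependencies contain a cycle.
    """
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)  # A comes first and D comes last; B and C may appear in either order
```

A plain crontab entry has no notion of this graph, which is why time-only scheduling breaks down once tasks depend on each other's output.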

The core functions of the scheduling system are mainly as follows:

Organize and manage task processes, schedule and execute tasks regularly, and handle dependencies between tasks.

For a complete offline scheduling system, the following core functions are required:

- As the command center of the big data system, it schedules tasks based on time, dependencies, task priority, resources, and other conditions;

- It must handle multiple kinds of task dependencies, including time dependencies, upstream and downstream task dependencies, and self-dependencies;

- Data volumes are huge and task types are numerous, so it must be able to execute many types of tasks, such as MapReduce, Hive, Spark, shell, and Python;

- It needs a complete monitoring system that covers the entire chain of task scheduling and execution and sends alarm notifications promptly when something abnormal happens.

Our OFLOW system was built to meet these requirements.

2 Application of OFLOW system in OPPO

The core functions currently provided by OFLOW are mainly as follows:

- Efficient and punctual task scheduling;

- Flexible scheduling strategy: time, upstream and downstream dependence, task dependence;

- Multiple task types: data integration, Hive, Python, Java, MapReduce, Spark, SparkSQL, Sqoop, machine learning tasks, etc.;

- Isolation between businesses and between task processes;

- Highly available and scalable;

- Task configuration: parameters, failure retry (times, interval), failure and timeout alarms, different levels of alarms, task callbacks;

- Rich and comprehensive operation pages, graphical pages for task development, operation and maintenance, monitoring and other operations;

- Permission management;

- View task status and analyze logs in real time, and perform various operation and maintenance operations such as stopping, re-running, and re-recording;

- Task historical data analysis;

- Script development, testing, and release process;

- Alarm monitoring: state monitoring of a variety of abnormal conditions, flexible configuration;

- Focus on monitoring of core tasks to ensure punctuality;

- Support API access.

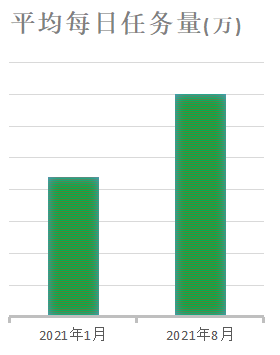

At present, OFLOW handles a large volume of task scheduling within our company.

OFLOW currently has 5 major clusters: China, Singapore, India, the EU, and North America. The EU and North America clusters were launched recently and do not yet carry many tasks, so the main clusters for now are China, Singapore, and India.

Currently, users can access OFLOW in the following ways:

- The OFLOW webserver;

- The Nantianmen platform, including its data development offline task module, data integration task module, and offline script development module. Back-end task scheduling and execution for all of these also run on the OFLOW system;

- API access, which OFLOW also supports. Several businesses already use the OFLOW system through the API.

3 Design and evolution of OFLOW system

According to the previous information, it can be seen that the core of the entire offline scheduling system is two components, one is the scheduling engine and the other is the execution engine.

The scheduling engine schedules tasks according to task attributes (period, delay, dependency, etc.), and distributes them to different execution nodes according to task priority, queue and resource conditions;

The execution engine obtains the tasks that meet the execution conditions, executes the tasks, outputs logs during the task execution process, and monitors the task execution process.

Among the offline scheduling systems commonly available today, Airflow is arguably the best. After years of development its feature set is very complete, and it has a very active open source community.

Airflow was started by Maxime Beauchemin at Airbnb in October 2014;

It was officially announced and moved under Airbnb's GitHub organization in June 2015;

It joined the Apache Software Foundation's incubation program in March 2016;

Releases have since reached the 1.10 and 2.1 series.

OPPO's offline scheduling system was built starting from Airflow 1.8.

The following are several concepts in the airflow system, and there are similar concepts in other offline scheduling systems.

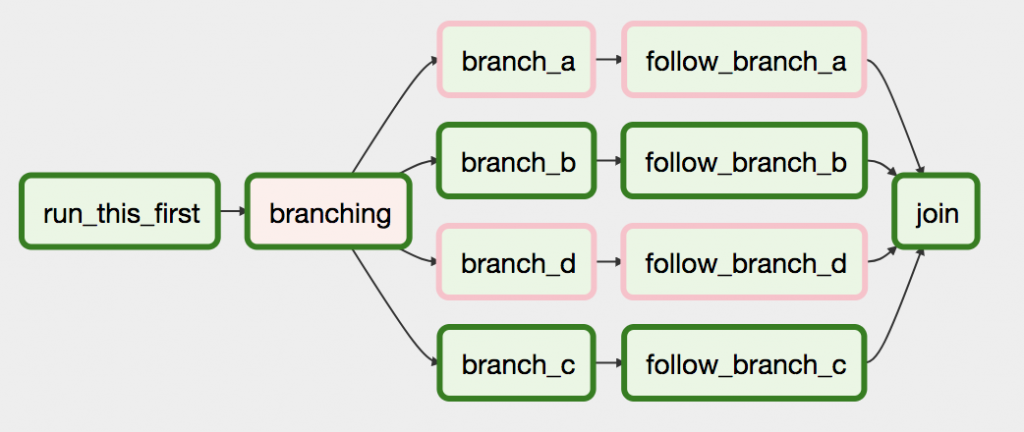

- DAG: Directed Acyclic Graph. It organizes all the tasks that need to run according to their dependencies and describes the order in which they execute. In Airflow, a DAG is defined by an executable Python script.

- Operators: An operator can be understood as a task template that describes what a specific task in the DAG should do. Airflow has many built-in operators, such as BashOperator to execute bash commands, PythonOperator to call any Python function, EmailOperator to send emails, HTTPOperator to send HTTP requests, and SqlOperator to execute SQL commands. Users can also write custom operators, which is very convenient. An operator plays a role similar to a class in Java.

- Sensor: A special type of operator that waits until a specific condition is met, such as ExternalTaskSensor, TimeSensor, and TimeDeltaSensor.

- Tasks: A task is an instance of an operator, that is, a node in a DAG. When a user instantiates an operator by supplying concrete parameters, a task is created.

- DagRun: When a DAG file is recognized and scheduled by Airflow, each run of that DAG is a DagRun. DagRuns can be viewed in the web UI.

- Task Instance: A single run of a task. Each task instance has its own state, such as "running", "success", "failed", "skipped", or "up for retry".
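The relationship between operator, task, and task instance can be sketched with a few plain Python classes. This is a simplified illustration only: the class names mirror the concepts above, but the fields, the `execute` method, and the initial `"scheduled"` state are assumptions made for this sketch and are not Airflow's actual API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Operator:
    """A task template: describes how one kind of work is done."""
    name: str
    run: Callable[[dict], None]


@dataclass
class Task:
    """An operator specialised with concrete parameters: a node in a DAG."""
    task_id: str
    operator: Operator
    params: dict = field(default_factory=dict)


@dataclass
class TaskInstance:
    """One run of a task for one execution date, with its own state."""
    task: Task
    execution_date: str
    state: str = "scheduled"

    def execute(self) -> str:
        self.state = "running"
        try:
            self.task.operator.run(self.task.params)
            self.state = "success"
        except Exception:
            self.state = "failed"
        return self.state


# Hypothetical usage: one operator, one task built from it, one run of that task.
echo = Operator("echo", lambda params: print(params["msg"]))
say_hello = Task("say_hello", echo, {"msg": "hello"})
instance = TaskInstance(say_hello, "2021-06-01")
final_state = instance.execute()
```

The same task can produce many task instances, one per scheduling cycle, which is why state lives on the instance rather than on the task.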

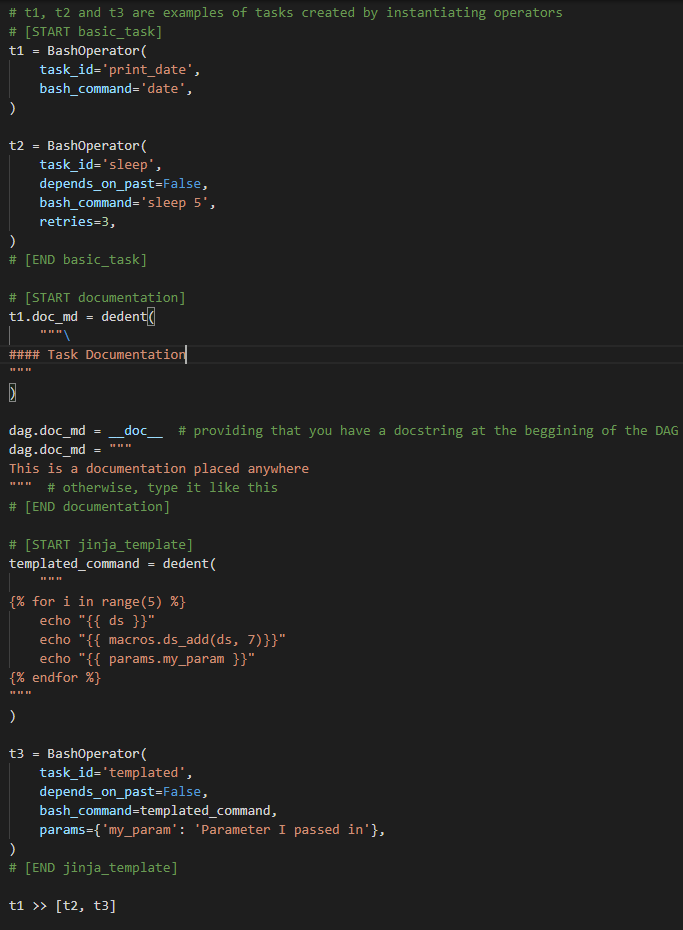

In Airflow, a DAG and its tasks are defined in a Python file; here is an example.

This .py file is used to define the DAG. Although it can be run directly, running it on its own has no effect beyond checking that the Python syntax is correct. It does not perform any actual work either; it only describes the dependencies between tasks, the scheduling interval, and similar requirements.

The file has to be parsed during task scheduling and execution before the tasks can be scheduled and executed according to the logic the user has defined.

Such a Python file places relatively high demands on data developers, because platform users need to be proficient in Python.

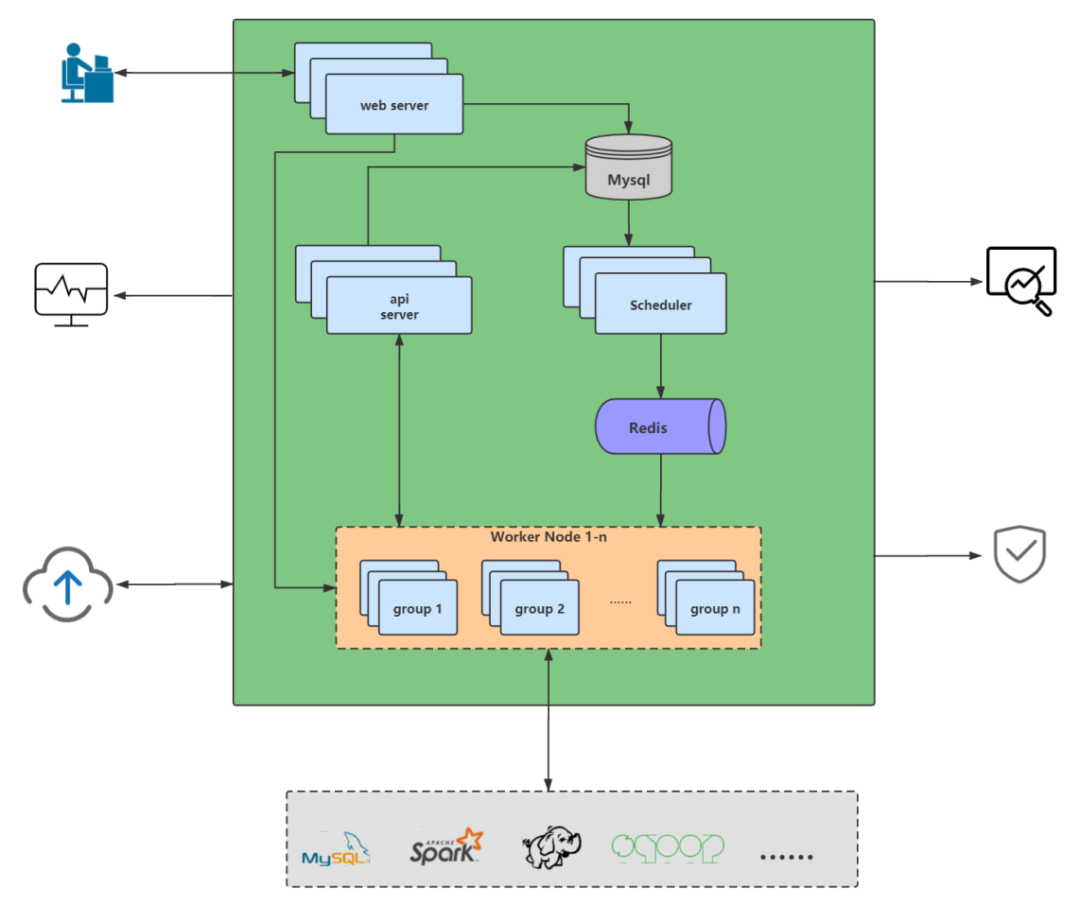

The following figure shows the overall architecture of Airflow. The DAGs directory under the Airflow home stores the Python files that define DAGs and tasks. The webserver provides web services and displays DAG views, instances, logs, and other information; Airflow's web pages are also very polished. The scheduler is the scheduling node, which parses DAGs and schedules tasks. Workers are the execution nodes; there can be many groups of them listening on different queues, and their job is to execute the tasks that the scheduler has scheduled.

OPPO's offline scheduling system, OFLOW, was developed on top of open source Airflow.

Several core problems solved during development are:

- The definition of DAGs and tasks was changed from Python files to web-based configuration stored in a database, and DAG parsing was changed accordingly from parsing Python files to querying and parsing records from the database;

- OFLOW was integrated with the company's big data development platform to provide a develop, test, and release workflow, which makes development, test verification, and release easier for users;

- Many monitoring alarms were added so that the entire task scheduling and execution process is monitored more comprehensively.

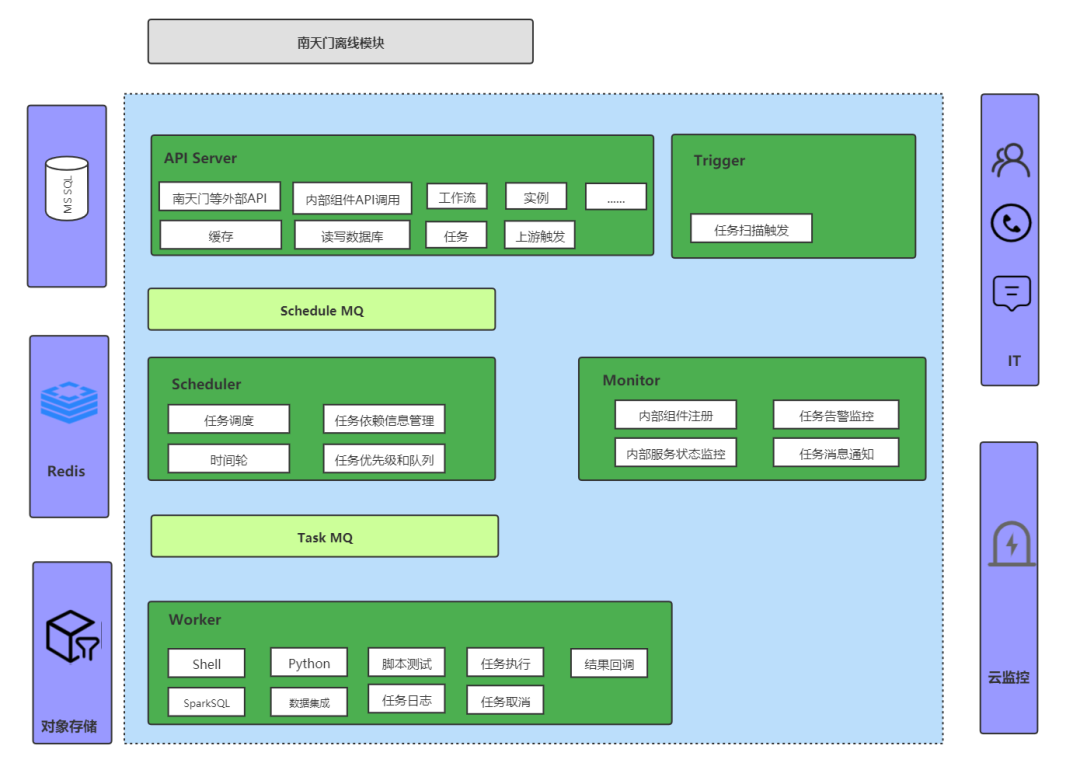

The following is the entire architecture of our OFLOW platform:

- The webserver provides web services, so users can conveniently configure DAGs and tasks and query a wide range of information;

- The scheduler is the scheduling node. It is responsible for task scheduling: it parses DAGs and tasks and runs a series of checks to decide whether a task meets its scheduling conditions;

- Workers are the execution nodes, responsible for executing task instances;

- The api server is a component we added later. It decouples workers from direct database operations and will take on additional functions in the future;

- MySQL stores all metadata, such as dag, task, and task_instance records;

- Celery is used as the message queue with Redis as the broker; Redis also serves as a cache;

- OFLOW is connected to cloud monitoring to send alarm information, and uses ocs to store logs and user script files;

- OFLOW is also connected to the diagnosis platform, a recent addition that helps users diagnose abnormal OFLOW tasks.

The following figure shows the entire process of task scheduling and execution:

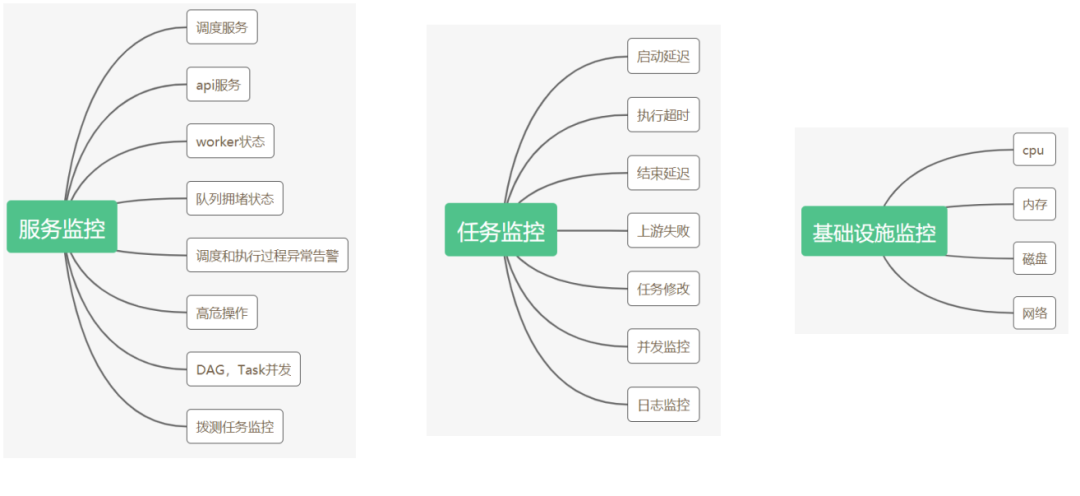

At present, OFLOW also has fairly comprehensive monitoring:

The above covers OFLOW's overall architecture and the entire task scheduling and execution process.

At present, OFLOW’s entire service also has some problems:

- Task scheduling interval:

According to the scheduling process above, OFLOW schedules tasks by having the scheduler periodically scan and parse DAGs and tasks. This introduces a delay between upstream and downstream tasks. For example, after task A completes, its direct downstream task B cannot be scheduled immediately; it has to wait until the scheduler's next scan picks it up. If the dependency chain is deep, the upstream and downstream chain is long and every pair of adjacent tasks adds some interval, so the overall delay grows. This is especially noticeable during the early-morning scheduling peak.

- Service high availability:

OFLOW does not natively support high availability. Our current approach is to keep a standby node that can be brought up when a scheduler failure is detected.

- Scheduling pressure caused by business growth:

OFLOW's daily task volume is already very large and is growing quickly, so scheduling pressure keeps rising. The current approach is to scale the scheduler horizontally and let different schedulers schedule different DAGs.

- The cost of scheduling peaks and valleys:

A clear characteristic of offline scheduling is that task load has peaks and valleys. Most OFLOW tasks are scheduled daily or hourly, which produces a large scheduling peak in the early morning of each day, a smaller peak at the start of each hour, and troughs the rest of the time. During peaks tasks queue up and congest, while during troughs the machines sit relatively idle. How to use system resources more effectively is something we need to keep thinking about and optimizing.
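The scheduling-interval problem can be put into rough numbers. The sketch below assumes a strictly linear chain of tasks and a fixed scan interval; the function name and the 30-second figure are illustrative assumptions, not measured OFLOW values.

```python
def worst_case_chain_delay(chain_length: int, scan_interval_s: float) -> float:
    """Upper bound on the scheduling latency added to a linear task chain.

    Each of the (chain_length - 1) hand-offs between adjacent tasks may wait
    up to one full scan interval before the scheduler notices that the
    upstream task has finished.
    """
    if chain_length < 1:
        raise ValueError("chain_length must be at least 1")
    return (chain_length - 1) * scan_interval_s


# Hypothetical example: a 10-task chain with an assumed 30 s scan interval.
delay = worst_case_chain_delay(10, 30)
print(delay)  # 270, i.e. up to 4.5 minutes of pure waiting
```

The waiting grows linearly with chain depth, which is why deep dependency chains suffer the most during the early-morning peak.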

4 The new offline scheduling system OFLOW 2.0

Next, I will introduce the product characteristics and architecture design of OFLOW 2.0, which was recently launched for trial use.

The problems we want to solve on the oflow 2.0 platform are as follows:

- Trigger tasks in real time, reducing the delay between upstream and downstream tasks;

- Stop using DAGs to organize and schedule tasks. With the DAG as the scheduling unit, cross-cycle dependencies become a problem. In practice, many tasks depend on tasks in other DAGs. For example, a daily task may need to wait until all 24 cycles of a task in an hourly DAG have completed. OFLOW currently handles this with ExternalTaskSensor, a task type for cross-DAG dependencies, but this causes difficulties both in task configuration and in understanding the concepts;

- Simplify configuration. OFLOW's DAG and task features are powerful, but there are many settings. Users have to understand many concepts and enter a lot of information to configure one DAG and one task. This is flexible, but it is also very inconvenient. In 2.0 we want to simplify configuration and hide unnecessary concepts and settings;

- Make it more convenient for users to develop, test, and release tasks;

- Make every 2.0 component easier and simpler to run with high availability and to scale.
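The cross-cycle dependency mentioned in the list above, where one daily task waits for 24 hourly runs, can be illustrated with a small sketch. The function names and the idea of representing each hourly cycle by its start time are assumptions made for illustration; they are not OFLOW's data model.

```python
from datetime import datetime, timedelta


def hourly_upstreams(day: datetime) -> list:
    """Return the 24 hourly cycle start times that a daily task for `day` waits on."""
    start = day.replace(hour=0, minute=0, second=0, microsecond=0)
    return [start + timedelta(hours=h) for h in range(24)]


def daily_ready(day: datetime, finished: set) -> bool:
    """The daily task may run only when every one of the 24 hourly cycles has finished."""
    return all(cycle in finished for cycle in hourly_upstreams(day))


# Hypothetical usage: 23 of the 24 hourly cycles are done, so the daily task must wait.
day = datetime(2021, 6, 1, 15, 30)
cycles = hourly_upstreams(day)
print(daily_ready(day, set(cycles[:-1])))  # False
print(daily_ready(day, set(cycles)))       # True
```

Expressing this as one dependency rule on the task, rather than as a separate sensor task per upstream DAG, is the kind of simplification the 2.0 goals describe.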

The oflow 2.0 system achieves these requirements through a design that is very different from 1.0:

- Tasks are triggered in real time;

- Tasks are organized by business process rather than by DAG, so the concept of cross-DAG dependency is no longer needed;

- Every component is scalable;

- System standardization: the configuration of many tasks is simplified, which lowers the barrier to use. The task execution environment is standardized, which reduces environment problems and lowers operation and maintenance costs.

The overall architecture design of oflow 2.0 is as follows:

OFLOW 2.0 currently has no front-end page of its own. It calls the api server of OFLOW 1.0 through the offline module of Nantianmen 2.0. So when you use the offline module of OFLOW 2.0, the whole chain of back-end data storage, task triggering, scheduling, and execution is implemented on the OFLOW 2.0 platform.

- The first component is the api server. Besides serving calls from Nantianmen, it is also used by the worker execution nodes inside OFLOW 2.0. The api server mainly handles interaction with the 2.0 database, internal operations on business processes, tasks, and instances, and the upstream task triggering logic;

- The Trigger component has a single, narrow job: it is responsible for scanning for tasks and triggering them;

- The scheduler node is responsible for task scheduling analysis. It analyzes and schedules tasks using a set of services such as the time wheel, task dependency information management, and task priority and queue management;

- The worker node's logic is close to that of 1.0. It is responsible for actually executing tasks and supports four major task types: shell, Python, SparkSQL, and data integration tasks. It also lets users test-run the scripts they have developed, handles logs, supports stopping a running task, and runs the callback logic after a task finishes;

- The Monitor component is responsible, on the one hand, for monitoring the internal components. Every other component registers with the monitor after startup, and if a node has a problem the monitor can handle the tasks that were scheduled or executing on that node. On the other hand, the monitor processes the various alarms and sends informational notifications during task execution.

There are also two message queues:

- One is Schedule MQ, which receives tasks that meet part of their scheduling conditions and can start being scheduled, and forwards them to the scheduler for processing;

- The other is Task MQ, which receives tasks that meet all of their dependency conditions and can be executed. Workers fetch tasks from this queue and consume them.

In addition to these in-house components, OFLOW 2.0 also uses some common products, including MySQL, Redis, object storage, and the cloud monitoring system, and it calls some APIs of the company's IT systems.
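The time wheel used by the scheduler is a general data structure for firing timed events cheaply. The sketch below is a minimal single-level wheel written for illustration; the class name, slot count, and tick granularity are assumptions, and OFLOW 2.0's actual implementation is not described in this article.

```python
class TimeWheel:
    """Single-level time wheel: a ring of slots, advanced one slot per tick."""

    def __init__(self, slots: int = 60):
        self.slots = [[] for _ in range(slots)]
        self.current = 0

    def add(self, task_id: str, delay_ticks: int) -> None:
        """Schedule task_id to fire after delay_ticks ticks (must be >= 1)."""
        if delay_ticks < 1:
            raise ValueError("delay_ticks must be at least 1")
        size = len(self.slots)
        index = (self.current + delay_ticks) % size
        # Number of times the pointer passes this slot before the task is due.
        rounds = (delay_ticks - 1) // size
        self.slots[index].append((rounds, task_id))

    def tick(self) -> list:
        """Advance one tick and return the ids of tasks that are now due."""
        self.current = (self.current + 1) % len(self.slots)
        due, waiting = [], []
        for rounds, task_id in self.slots[self.current]:
            if rounds == 0:
                due.append(task_id)
            else:
                waiting.append((rounds - 1, task_id))
        self.slots[self.current] = waiting
        return due


# Hypothetical usage with a tiny 4-slot wheel.
wheel = TimeWheel(slots=4)
wheel.add("a", 1)
wheel.add("b", 4)
wheel.add("c", 6)
fired = [wheel.tick() for _ in range(6)]
print(fired)  # [['a'], [], [], ['b'], [], ['c']]
```

Each tick only touches one slot, so the cost of advancing time does not depend on how many tasks are waiting in total.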

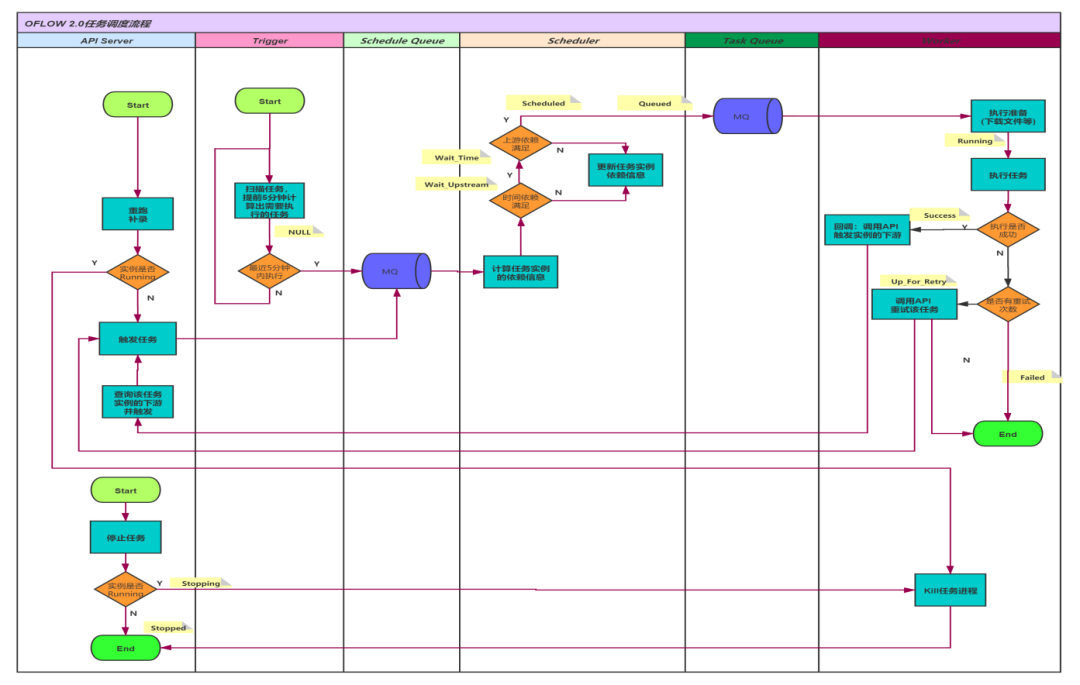

This figure shows the entire process of OFLOW's task scheduling and execution:

There are two entry points that start scheduling: the trigger and the webserver.

The trigger scans for tasks that are due to run, 5 minutes in advance, and places them in Schedule MQ.

The webserver handles several kinds of triggering. One is when a user manually reruns or re-records a task; another is when an upstream task completes, in which case its direct downstream tasks are looked up and placed in Schedule MQ.

The scheduler consumes these messages from Schedule MQ and analyzes all dependencies of each task instance, including time dependencies, upstream and downstream dependencies, and self-dependencies. If all of a task's dependencies are satisfied, the task is put into Task MQ to be consumed by a worker. Tasks whose time dependency is not yet satisfied are put into the time wheel and are triggered automatically when the corresponding tick is reached. For tasks that do not yet meet the execution conditions, all dependency information is stored in Redis; as time passes or upstream tasks complete, the instance's dependency information is updated until every dependency is satisfied. For tasks whose dependencies are satisfied, the scheduler also looks at configuration such as the project the task belongs to and the task priority, and places the task into the appropriate queue in Task MQ.
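The dependency bookkeeping described above can be sketched as follows. An in-memory dictionary stands in for Redis, and the class name, method names, and dependency key format (`"time:..."`, `"task:..."`) are all assumptions made for this illustration rather than OFLOW's real design.

```python
class DependencyTracker:
    """Tracks unmet dependencies per task instance until all are satisfied."""

    def __init__(self):
        # instance_id -> set of unmet dependency keys (a dict stands in for Redis here)
        self.pending = {}

    def register(self, instance_id: str, deps) -> bool:
        """Record an instance's unmet dependencies; return True if it can run at once."""
        unmet = set(deps)
        if unmet:
            self.pending[instance_id] = unmet
        return not unmet

    def satisfy(self, dep: str) -> list:
        """Mark one dependency as met and return the instances that became runnable."""
        ready = []
        for instance_id, unmet in list(self.pending.items()):
            unmet.discard(dep)
            if not unmet:
                ready.append(instance_id)
                del self.pending[instance_id]
        return ready


# Hypothetical usage: B waits for its start time and for upstream task A.
tracker = DependencyTracker()
runnable_now = tracker.register("B@2021-06-01", {"time:02:00", "task:A@2021-06-01"})
after_time = tracker.satisfy("time:02:00")
after_upstream = tracker.satisfy("task:A@2021-06-01")
print(runnable_now, after_time, after_upstream)  # False [] ['B@2021-06-01']
```

In this sketch, the instances returned by `satisfy` are the ones that would be handed to Task MQ.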

Workers consume tasks from Task MQ. After receiving a task, the worker obtains its detailed information and executes it, then checks the result. If execution succeeds, the worker notifies the api server. Besides updating the instance status and related information, the api server queries the task's direct downstream tasks and puts them into Schedule MQ. If the task fails, the worker decides whether to retry based on whether any retries remain. If none remain, the task is deemed failed and the api server is notified to update the instance status.
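The worker's retry decision can be sketched in a few lines. The function name, the `notify` callback that stands in for the call to the api server, and the status strings are assumptions for illustration; the retry interval that OFLOW lets users configure is left out to keep the sketch short.

```python
def run_with_retries(task, max_retries: int, notify) -> str:
    """Run `task`, retrying on failure while retries remain, then report the outcome.

    `task` is any zero-argument callable that raises an exception on failure.
    `notify` is a hypothetical stand-in for notifying the api server.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            task()
        except Exception:
            if attempts <= max_retries:
                continue  # retries remain, so run the task again
            notify("failed")
            return "failed"
        notify("success")
        return "success"


# Hypothetical usage: a task that fails twice and then succeeds.
calls = {"count": 0}
events = []


def flaky():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("transient error")


result = run_with_retries(flaky, max_retries=2, notify=events.append)
print(result, calls["count"], events)  # success 3 ['success']
```

Only the final outcome is reported, which matches the description above: the instance status is updated once the task has either succeeded or run out of retries.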

At present, OFLOW 2.0 has completed design, development, and testing in the test environment, and has gone through a period of internal testing and stress testing. It has also recently been opened for trial use. Everyone is welcome to try the 2.0 system and to share feedback and suggestions along the way.

At present, if users want to use our OFLOW 2.0 system, they can log on to the Nantianmen 2.0 platform for a trial.

5 Conclusion

That is what I wanted to share with you about OFLOW.

Here is my outlook for the future development of the OFLOW platform:

1) Scheduling performance of OFLOW 1.0. Because the 2.0 system differs so much from 1.0, the two platforms will coexist for a long time. We therefore need to keep optimizing the scheduling performance of the 1.0 system to cope with the rapidly growing task volume;

On the one hand, we will look for ways to shorten the scheduling interval between tasks and improve task execution efficiency;

On the other hand, we hope to find more convenient and effective ways to scale out as the number of scheduled tasks grows.

2) Interactive experience

We will make page interaction friendlier and add batch task operations and operation and maintenance functions. We also hope to display historical statistics by dimensions such as DAG or task for users' reference. In addition, we plan to optimize task operation auditing and the task monitoring system;

3) Cost optimization

Another area is the cost optimization mentioned earlier. The figure below shows the number of concurrently executing tasks over 24 hours of a day, and the peaks and valleys are very obvious.

Going forward, we are considering ways to shift tasks away from the peak, for example by using the billing model to encourage users to run tasks with loose timeliness requirements during the valleys. We will also explore dynamically scaling resources up and down to optimize cost.

4) In addition, we hope that OFLOW will not only schedule tasks but will also interact more with the back-end big data clusters in the future;

5) We also hope to further improve monitoring. One of the most important parts is identifying and monitoring the links of core tasks.

This means monitoring not only the core task itself but also all of its upstream logic, so that an alarm is raised quickly as soon as any link in the chain becomes abnormal. Another part is what happens when users receive an alarm. Many users do not know how to handle a task after receiving an alert for it, so OFLOW will look for ways to guide users through handling it.

Author profile

Chengwei, Senior Backend Engineer at OPPO

Mainly responsible for the development of OPPO's big data offline task scheduling system, and has relatively rich development experience in big data offline scheduling system.

