1 Background

As the company's business has expanded rapidly over the past two years, both the volume of business data and the demand for data processing have grown geometrically, placing higher demands on the underlying storage and computing infrastructure. This article focuses on resource usage and resource scheduling in computing clusters. It walks through the overall process of cluster resource scheduling, the problems we have faced, and the development and optimization work we have done at the lower layers.

2 Resource scheduling framework: Yarn

2.1 The overall structure of Yarn

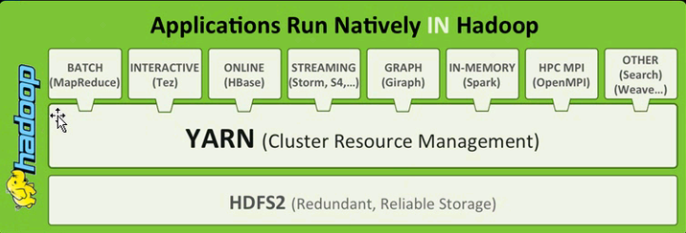

From the perspective of the entire big data ecosystem, Hadoop sits at the core of offline computing. To store and process big data, Hadoop 1.0 provided HDFS for distributed storage and the MapReduce computing framework. Although this formed a prototype for big data processing, it did not support multiple types of computing frameworks, such as the later Spark and Flink, and at that time there was no separate concept of resource scheduling.

In Hadoop 2.0, to reduce the scheduling pressure on a single service node and to support various types of computing frameworks, Hadoop extracted a distributed resource scheduling framework: YARN (Yet Another Resource Negotiator). The position of Yarn in the overall architecture is shown in Figure 1:

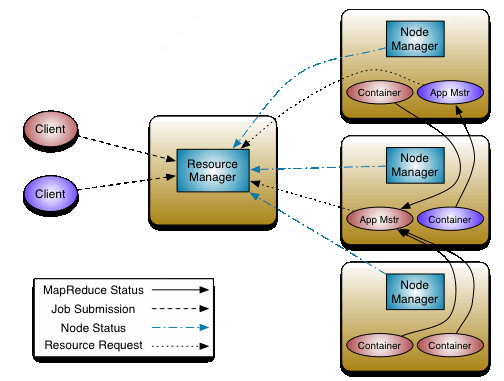

With its two-layer scheduling design, Yarn splits the single-point JobTracker, which in Hadoop 1.0 had to perform both resource scheduling and task scheduling, into two roles: the ResourceManager, responsible for the overall resource scheduling of the cluster, and the ApplicationMaster, responsible for managing and scheduling an individual task. The newly added NodeManager role is responsible for managing each compute node. The Yarn task submission process is shown in Figure 2:

Tasks submitted by the client are handled directly by the ResourceManager, which starts an ApplicationMaster for each task. The ApplicationMaster is directly responsible for requesting resources for the task. In this way, each framework manages its tasks by implementing its own ApplicationMaster and requests containers, the unit of resources, to run tasks on the cluster. Resource acquisition is completely transparent to the task, and the computing framework and Yarn are fully decoupled.

2.2 Yarn's scheduling strategy

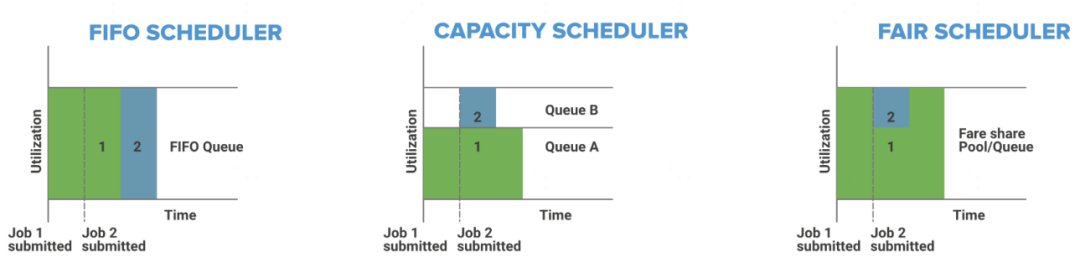

The open source version of Yarn implements three task scheduling strategies: the FIFO Scheduler, the Capacity Scheduler, and the Fair Scheduler. As the community version has evolved, fair scheduling has come to implement fairness at the queue level. Our cluster uses the fair scheduling strategy.

Before looking at the scheduling strategies, we first need to understand one concept: queues. In Yarn, a queue is essentially a resource pool. If the resources of the entire cluster are regarded as one large resource pool, Yarn further divides it into smaller pools according to user configuration. A parent queue can be divided further; a child queue inherits the resources of its parent queue and cannot exceed the parent's maximum resources. The resource queues as a whole are organized like a multi-way tree.
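The parent and child relationship between queues can be illustrated with a minimal sketch. The `Queue` class, the queue names, and the memory figures below are hypothetical and are not Yarn's configuration format or API; the sketch only shows that a child's effective maximum is capped by its parent's.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Queue:
    """A resource pool in the queue tree (illustrative, not Yarn's API)."""
    name: str
    max_memory_gb: int
    children: List["Queue"] = field(default_factory=list)


def effective_limits(queue: Queue, parent_limit: Optional[int] = None,
                     prefix: str = "") -> Dict[str, int]:
    """Walk the tree and cap every queue at its parent's effective maximum."""
    path = f"{prefix}.{queue.name}" if prefix else queue.name
    if parent_limit is None:
        limit = queue.max_memory_gb
    else:
        limit = min(queue.max_memory_gb, parent_limit)
    limits = {path: limit}
    for child in queue.children:
        limits.update(effective_limits(child, limit, path))
    return limits


# Hypothetical tree: "daily" asks for more than its parent "etl" can offer.
root = Queue("root", 1000, [
    Queue("etl", 600, [Queue("daily", 800), Queue("adhoc", 200)]),
    Queue("ml", 400),
])
limits = effective_limits(root)
```

Here `limits["root.etl.daily"]` is 600 rather than the configured 800, because the child cannot exceed the maximum of its parent queue.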

The FIFO and capacity scheduling strategies organize tasks by submission order and by dividing tasks among queues, respectively. Neither is well suited to our production environment, because our goal is to run as many tasks as possible while making full use of cluster resources, and these two strategies lead to task blocking and resource waste, respectively.

Fair scheduling in the production environment follows one rule: ensure that resources are distributed fairly among tasks. When a small task is submitted and no resources are available, the scheduler gives the resources released by a large task to the small task, so that the large task cannot hold on to resources indefinitely. The organization of the three scheduling strategies is shown in Figure 3:

In addition to guaranteeing fairness among tasks, fair scheduling also dynamically adjusts the size of queues to ensure fairness between queues. The adjustment is based on the real-time load of the cluster: when the cluster is idle, a queue can obtain up to roughly its configured maximum resources; when the cluster is busy, the scheduler gives priority to satisfying each queue's configured minimum.
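The effect of the minimum and maximum values can be sketched with a simplified max-min ("water-filling") allocation. This is not the Fair Scheduler's actual algorithm, which also takes weights into account; the function name, queue names, and numbers are assumptions for illustration, and the sketch assumes the configured minimums fit within the cluster capacity.

```python
from typing import Dict, Tuple


def fair_shares(capacity: float,
                queues: Dict[str, Tuple[float, float, float]]) -> Dict[str, float]:
    """Split capacity among queues given as {name: (min_share, max_share, demand)}.

    Assumes the configured minimums add up to no more than capacity.
    """
    # A queue never receives more than its configured maximum or its demand.
    caps = {name: min(mx, demand) for name, (_, mx, demand) in queues.items()}
    # Step 1: satisfy each queue's minimum first (what matters when busy).
    alloc = {name: min(mn, caps[name]) for name, (mn, _, _) in queues.items()}
    remaining = capacity - sum(alloc.values())
    # Step 2: hand out the rest in equal slices until queues reach their caps.
    active = sorted(name for name in queues if alloc[name] < caps[name])
    while remaining > 1e-9 and active:
        slice_size = remaining / len(active)
        for name in active:
            give = min(slice_size, caps[name] - alloc[name])
            alloc[name] += give
            remaining -= give
        active = [name for name in active if caps[name] - alloc[name] > 1e-9]
    return alloc


queues = {
    "etl": (10, 100, 100),   # (min, max, demand), illustrative numbers
    "adhoc": (30, 50, 20),
    "ml": (0, 40, 100),
}
busy = fair_shares(100, queues)    # cluster smaller than total demand
idle = fair_shares(1000, queues)   # cluster with plenty of spare capacity
```

With a capacity of 100, the minimums are honored first and the remainder is split evenly; with a capacity of 1000, every queue reaches its maximum or its demand, whichever is lower.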

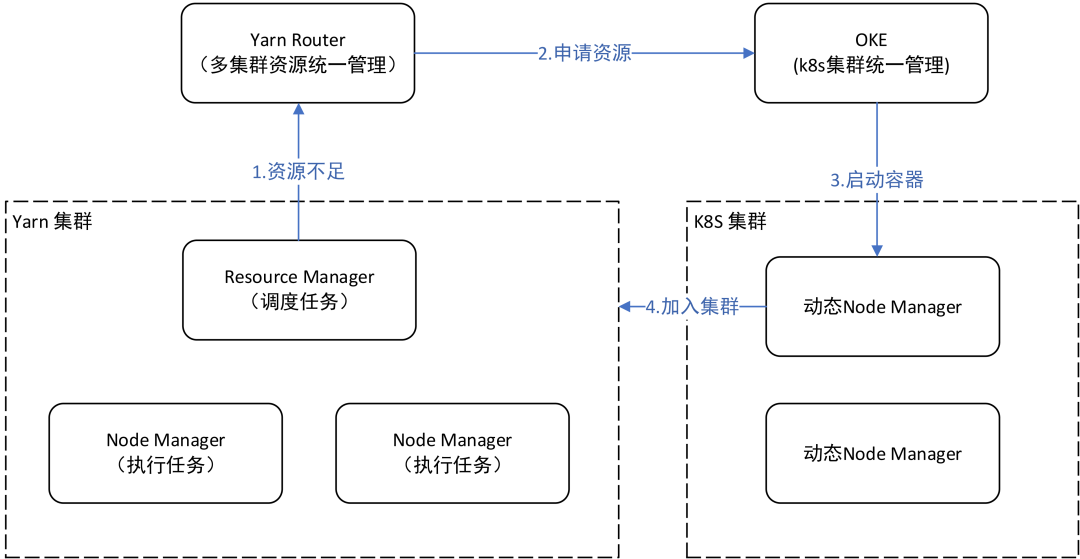

2.3 Yarn's federated scheduling

When a single cluster reaches the scale of thousands of nodes, a single ResourceManager approaches its scheduling performance bottleneck. To scale the cluster further, the community's solution is to scale horizontally through federated scheduling. The core of the solution is the Router. Externally, the Router provides a unified entry point for task submission; internally, it manages multiple clusters. Tasks are forwarded by the Router, which decides which cluster receives them. The workflow of the Router is shown in Figure 4:

With federated scheduling managed by the Router, the concept of a cluster is transparent to users. When a cluster has a problem, the Router can fail over promptly through configuration and route tasks to a healthy cluster, which improves the stability of the service that the overall cluster provides.
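Routing with failover can be sketched as follows, assuming a weighted random policy over the clusters that are currently healthy. The `route` function, the cluster names, and the weights are hypothetical; the real Router is a Java service with its own policy interfaces.

```python
import random
from typing import Dict, Set


def route(weights: Dict[str, float], healthy: Set[str],
          rng: random.Random) -> str:
    """Pick a sub-cluster by weight, skipping clusters that are not healthy."""
    candidates = sorted(c for c, w in weights.items() if c in healthy and w > 0)
    if not candidates:
        raise RuntimeError("no healthy cluster available")
    return rng.choices(candidates,
                       weights=[weights[c] for c in candidates], k=1)[0]


weights = {"cluster-a": 3, "cluster-b": 1}   # hypothetical names and weights
rng = random.Random(0)
normal = route(weights, {"cluster-a", "cluster-b"}, rng)
failover = route(weights, {"cluster-b"}, rng)   # cluster-a is marked down
```

When `cluster-a` is removed from the healthy set, every task goes to `cluster-b`, and the user who submitted it does not need to know that a failover happened.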

3 Status of OPPO computing cluster

3.1 Cluster size and status quo

After more than two years of construction, our cluster has reached a considerable scale. At this scale, maintaining stability is the first priority. We have done a lot of stability work on the cluster, such as access control and monitoring of various important metrics.

Besides stability work, another focus is cluster resource utilization. At present, cluster resource usage shows a fairly obvious periodic pattern. The cluster monitoring in Figure 5 shows that the cluster is often busy in the early morning and relatively idle during the day.

Relying only on coordination within the cluster has a limited effect on the resource shortage during peak periods. For this situation, we are considering joint scheduling with k8s during the early morning peak, so that idle online resources can be lent to the offline cluster. This scheme is described in detail later.

3.2 Cluster pending problem

In addition to the resource shortage during the peak period, we found that a large number of tasks are also pending during the day. We analyzed specific pending tasks and identified the following three causes:

- Unreasonable queue configuration:

According to the fair scheduling mechanism, the resources available to a queue in real time are largely determined by its configured minimum. If the minimum of a queue is too small or 0, the queue cannot obtain sufficient resources. In addition, if the CPU-to-memory ratio configured for the queue differs too much from the CPU-to-memory ratio that tasks actually use, there will also be insufficient resources to run tasks.

- The user suddenly submits a large number of tasks to a queue, and resource usage exceeds the queue's upper limit:

This situation is related to the user's own usage. When their task volume grows, it is more appropriate to apply to us for a larger queue.

- A large task occupies resources without releasing them, so tasks submitted afterwards cannot obtain the resources they need to start:

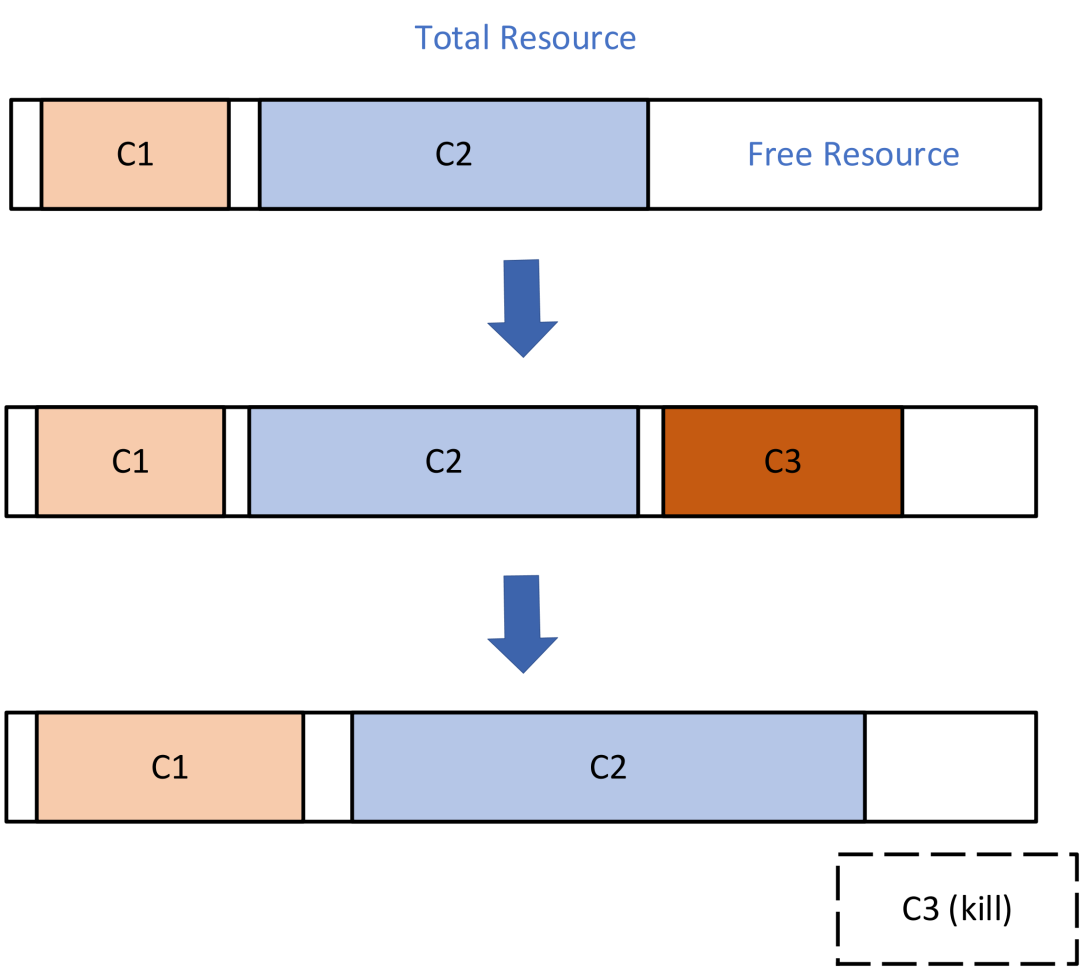

Spark and Flink tasks run differently from traditional MapReduce tasks. These two types of tasks hold resources for a long time without releasing them, so newly submitted tasks cannot obtain resources in time. In response, we have designed a corresponding resource preemption mechanism, which is described in detail later.

Overall, queue configuration greatly affects how jobs run, and reasonable tuning of the configuration can often achieve twice the result with half the effort.
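The CPU-to-memory mismatch from the first cause above can be made concrete with a small calculation. The function and all numbers are illustrative assumptions, not measurements from our cluster.

```python
from typing import Tuple


def max_containers(queue_vcores: int, queue_mem_gb: int,
                   task_vcores: int, task_mem_gb: int) -> Tuple[int, int, int]:
    """Return (containers that fit, stranded vcores, stranded memory in GB)."""
    by_cpu = queue_vcores // task_vcores
    by_mem = queue_mem_gb // task_mem_gb
    count = min(by_cpu, by_mem)   # the scarcer resource decides
    return (count,
            queue_vcores - count * task_vcores,
            queue_mem_gb - count * task_mem_gb)


# Hypothetical queue configured at 1 vcore : 2 GB, tasks needing 1 vcore : 4 GB.
fit, idle_vcores, idle_mem_gb = max_containers(100, 200, 1, 4)
```

In this example memory runs out after 50 containers, so 50 of the queue's 100 vcores can never be used, and further tasks stay pending even though the queue looks half empty on the CPU side.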

3.3 Status Quo of Cluster Resource Utilization

The monitoring above shows that the cluster is busy most of the time. However, statistics on the resource usage of individual servers show that there are still plenty of idle resources, as shown in Figure 6:

The reason is that the resources users request for jobs often far exceed what is actually needed. Our statistics show that the actual utilization of 95% of containers is below 76%, and that of 99% of containers is below 90%. This indicates that the resources requested by jobs are "inflated". If this wasted portion could be used, cluster resource utilization could be increased considerably. We discuss how to make better use of these wasted resources later.
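A statistic of this kind can be computed with a nearest-rank percentile over per-container utilization (actual usage divided by requested resources). The sample values below are made up for illustration and are not our cluster data.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], p: int) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical utilization ratios for ten containers.
utilization = [0.20, 0.35, 0.40, 0.50, 0.55, 0.60, 0.65, 0.70, 0.76, 0.95]
p50 = percentile(utilization, 50)
p90 = percentile(utilization, 90)
```

For this sample, 90% of the containers use at most 76% of what they requested, which is the same style of statement as the figures quoted above.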

4 The road to optimizing Yarn

4.1 Optimization of Yarn Federation Scheduling

The community's federated scheduling scheme is too simple for our needs: it only implements simple weighted random routing. In our follow-up plan, we will connect more resources to the Router cluster. The Router service will provide unified queue and task management, so users only need to submit tasks to the Router cluster without caring which specific cluster runs them. The Router will collect the status and load of all clusters and find suitable resources for scheduling tasks.
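One possible shape of load-aware routing is sketched below. The `ClusterLoad` fields and the selection rule (fewest pending applications, then most free memory) are assumptions chosen for illustration; they are not the policy we have deployed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClusterLoad:
    """Status the Router is assumed to collect per cluster (hypothetical fields)."""
    name: str
    healthy: bool
    free_memory_gb: float
    pending_apps: int


def choose_cluster(clusters: List[ClusterLoad],
                   required_memory_gb: float) -> Optional[str]:
    """Pick a healthy cluster with enough free memory and the shortest backlog."""
    eligible = [c for c in clusters
                if c.healthy and c.free_memory_gb >= required_memory_gb]
    if not eligible:
        return None
    # Fewest pending applications first; break ties by most free memory.
    best = min(eligible, key=lambda c: (c.pending_apps, -c.free_memory_gb))
    return best.name


clusters = [
    ClusterLoad("cluster-a", True, 500.0, 40),
    ClusterLoad("cluster-b", True, 300.0, 5),
    ClusterLoad("cluster-c", False, 900.0, 0),   # unhealthy, never chosen
]
target = choose_cluster(clusters, required_memory_gb=200.0)
```

Unlike weighted random routing, this rule sends the task to `cluster-b`, which has enough free memory and the shortest backlog.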

4.2 Resource allocation and overselling

The purpose of resource allocation and resource overselling is to improve the actual resource usage efficiency of each node.

Resource allocation: Based on historical statistics, the scheduler adjusts the resources allocated to a container so that they are closer to the container's actual requirements. In our practice, resource allocation can increase resource usage efficiency by 10-20%.
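A minimal sketch of such an adjustment is shown below, assuming the history is a list of observed peak memory values for earlier runs of the same job. The 20% headroom, the 512 MB floor, and the function name are illustrative assumptions.

```python
from typing import Sequence


def right_size(requested_mb: int, peak_history_mb: Sequence[int],
               headroom_pct: int = 20, floor_mb: int = 512) -> int:
    """Shrink a request toward observed peak usage plus headroom.

    The result never exceeds the original request and never drops below floor_mb
    (unless the request itself is smaller than the floor).
    """
    if not peak_history_mb:
        return requested_mb   # no history, keep the user's request
    peak = max(peak_history_mb)
    # Integer ceiling of peak * (1 + headroom_pct / 100).
    target = (peak * (100 + headroom_pct) + 99) // 100
    return min(requested_mb, max(floor_mb, target))


adjusted = right_size(8192, [3000, 3500, 3200])
```

In this example a job that requested 8192 MB but never used more than 3500 MB is given 4200 MB, while a job with no history keeps its original request.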

Resource overselling: Each running container leaves a certain amount of unused resources, or fragments. The NodeManager can collect the fragments of the resources it manages and fit in some additional containers. We plan to use a separate fragment scheduler for this. When there are more fragments, additional containers are started; when the fragments shrink, these additional containers are reclaimed.
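The start and reclaim decision can be sketched as follows. The functions, the 20% safety reserve, and the fixed size of an additional container are assumptions for illustration; the fragment scheduler itself is still being planned.

```python
from typing import Sequence, Tuple


def extra_slots(containers: Sequence[Tuple[int, int]], extra_size_mb: int,
                reserve_pct: int = 20) -> int:
    """Count the additional containers that fit into allocated-but-unused memory.

    containers holds (allocated_mb, used_mb) pairs for one node.
    """
    fragments = sum(max(0, allocated - used) for allocated, used in containers)
    usable = fragments * (100 - reserve_pct) // 100   # keep a safety reserve
    return usable // extra_size_mb


def reconcile(running_extra: int, containers: Sequence[Tuple[int, int]],
              extra_size_mb: int) -> int:
    """Positive result: start that many extra containers. Negative: reclaim."""
    return extra_slots(containers, extra_size_mb) - running_extra


quiet_node = [(4096, 2048), (8192, 3072), (2048, 2048)]
busy_node = [(4096, 4000), (8192, 7000), (2048, 2048)]
to_start = reconcile(2, quiet_node, extra_size_mb=1024)
to_reclaim = reconcile(5, busy_node, extra_size_mb=1024)
```

On the quiet node there is room for five additional 1024 MB containers, so three more are started on top of the two already running; once usage rises, only one still fits and four are reclaimed.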

4.3 Dynamic scaling of resources

To smooth out resource peaks and valleys, we are working with the cloud platform to implement a hybrid framework of online and offline resources and to improve overall resource utilization through off-peak resource scheduling. The scheduling process is coordinated mainly by two services, the Yarn Router and OKE. When real-time resources are idle and offline resources are in short supply, Yarn requests resources from OKE. When real-time resources are in short supply, OKE asks Yarn to return these resources. We have also introduced a self-developed Remote Shuffle Service to improve the stability of dynamic NodeManagers.

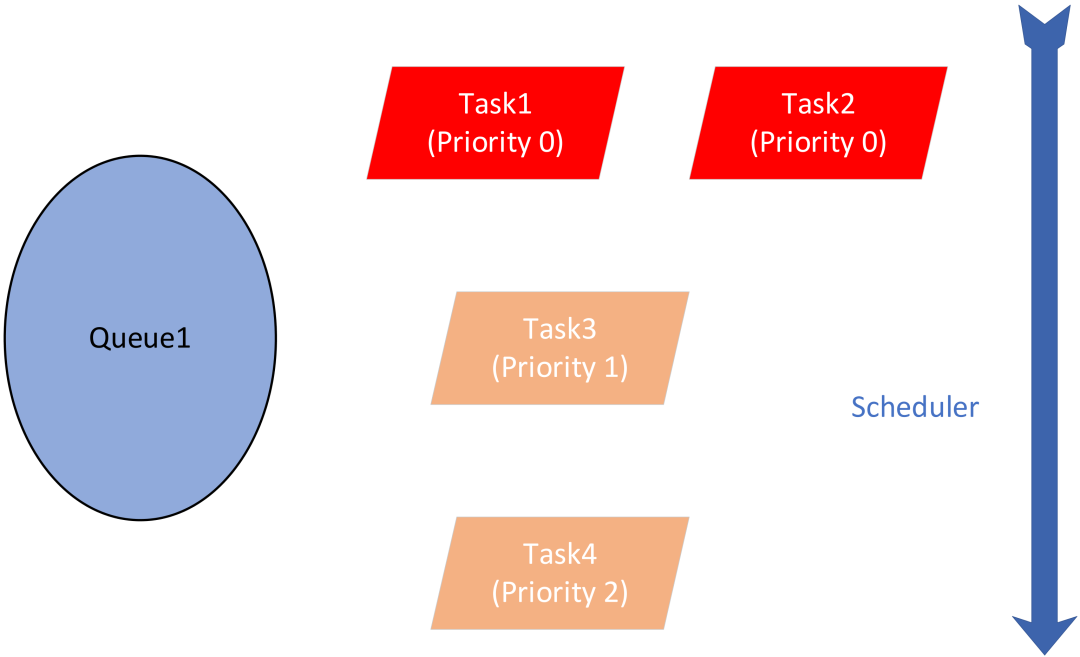

4.4 Task priority management

In practice, we found that user tasks differ clearly in priority, so we plan to implement a priority-based fair scheduler to guarantee the latency and efficiency of high-priority tasks. Resources in a queue go to high-priority tasks first, and tasks of the same priority share resources fairly. We have also implemented task preemption: even if low-priority tasks have already obtained resources, high-priority tasks can forcibly obtain them through preemptive scheduling.
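The allocation order can be sketched as below, counting resources in whole containers. The function, the task names, and the convention that a larger number means higher priority are assumptions for illustration; preemption is not modeled here.

```python
from typing import Dict, List, Tuple


def allocate_by_priority(capacity: int,
                         tasks: List[Tuple[str, int, int]]) -> Dict[str, int]:
    """Allocate containers to tasks given as (name, priority, demand).

    Higher priority levels are served first; tasks on the same level share
    what is left in equal slices until their demand is met.
    """
    alloc = {name: 0 for name, _, _ in tasks}
    remaining = capacity
    for level in sorted({prio for _, prio, _ in tasks}, reverse=True):
        unmet = {name: demand for name, prio, demand in tasks
                 if prio == level and demand > 0}
        while remaining > 0 and unmet:
            slice_size = max(1, remaining // len(unmet))
            for name in sorted(unmet):
                give = min(slice_size, unmet[name], remaining)
                alloc[name] += give
                unmet[name] -= give
                remaining -= give
            unmet = {name: left for name, left in unmet.items() if left > 0}
    return alloc


# Hypothetical queue with 10 containers and three tasks.
result = allocate_by_priority(10, [
    ("report", 2, 6),   # high priority
    ("etl-a", 1, 5),
    ("etl-b", 1, 5),
])
```

The high-priority task receives its full demand of 6 containers, and the two lower-priority tasks split the remaining 4 evenly.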

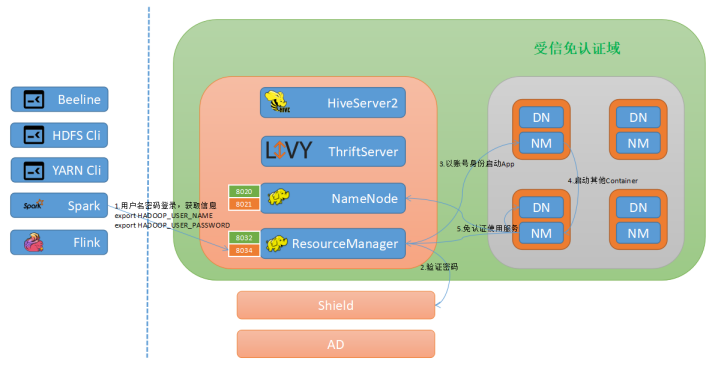

4.5 Queue authority management

Yarn permissions at OPPO use Aegis for unified authentication, and each user group applies for queue permissions in Aegis. Tasks that users submit directly through clients such as Hive, Livy, or Flink have their user and queue permissions checked against the Aegis system, and users without permission are denied access.

5 Summary

This article introduces the basic working principles of Yarn and how Yarn is used at OPPO. It can be summarized in the following points:

- Yarn, as a typical two-tier scheduling architecture, breaks through the resource and framework limitations of a single-tier scheduling architecture, making job execution and resource allocation more flexible.

- Reducing pending jobs and maximizing resource utilization are problems that every scheduling system faces. To address them, we can analyze the characteristics of our own system, ideally on the basis of existing statistics, and find a solution that suits us.

- Because offline and online services peak at different times, a single type of service cannot make full use of all server resources. Organizing these resources through a suitable scheduling strategy can greatly reduce server costs.

Author profile

Cheng, OPPO back-end engineer

Mainly responsible for the development and construction of the YARN offline resource scheduling platform, and has rich experience in the construction of the big data basic platform.

Zhejia, OPPO senior data platform engineer

He is mainly responsible for the development and optimization of the OPPO YARN cluster, and has rich experience in the scheduling of big data computing tasks.

