This is an original article by CSDN blogger "Pray Qing Xiaoyi" (Huang Xinxin, R&D engineer at Tencent Cloud CODING DevOps and core developer of the Nocalhost project), adapted from the author's talk at the CSDN Cloud Native Meetup in Shenzhen. It shares Nocalhost's ideas and explorations in solving cloud-native development problems and shows the new experience Nocalhost brings to cloud-native development.

Development pain points in cloud-native scenarios

When our application architecture moves from a traditional application to a cloud-native one, its complexity increases greatly. A traditional application has few components and is simple to deploy; we can usually develop it locally and then run it on a server. A cloud-native application is split into finer-grained microservices with intricate relationships between them, which makes it extremely difficult to build a development environment and debug services.

Local deployment VS cluster deployment

When we want to develop cloud-native microservice applications, how do we set up our development environment? There are two common ways: local deployment and cluster deployment.

Local deployment is to deploy a complete set of microservice applications to a local development machine, as shown in the following figure:

This approach will bring the following problems:

1. It hurts the performance of the development machine. Microservice applications are often large, easily comprising dozens or hundreds of services. Running them all on your own development machine may make it sluggish and hurt your productivity.

2. The environment cannot be shared, and resources are seriously wasted. Deploying a relatively large microservice application locally requires a development machine with a higher configuration, and each machine's development environment can be used by only one developer. Even a developer who only needs to work on one or a few services cannot share the environment with other developers.

3. For some very large-scale microservice applications, the local machine may not be able to run.

The other way is to deploy the microservice application to a K8s cluster in the cloud, as shown in the following figure:

This deployment method improves resource utilization, but it greatly lengthens the feedback loop when developing and debugging the application.

Our workflow when developing traditional applications is: write the code locally -> compile the code -> run the program to view the results, as shown in the following figure:

This process is often very fast, so we can run it to view the results after making a small change to the code.

But when developing an application on a K8s cluster, the workflow becomes: modify the code -> compile the program -> package the program into a Docker image -> push the Docker image to the image registry -> modify the image version of the container in the cluster and wait for K8s to deploy the new version of the image -> view the result, as shown in the figure below:

This process may take a few minutes. Such a long feedback loop undoubtedly reduces development efficiency.

Current mainstream cloud-native development methods

Manually package and push the image

This is the most primitive method. The workflow is roughly as follows:

After writing the code, we compile it locally into a binary or jar package, build a Docker image from a Dockerfile, push the image to the image registry, and then modify the image version in the workload's yaml definition to deploy the new version of the container. The rest is left to K8s, but deployment and scheduling can take a while: we have to wait until K8s has scheduled and started the new version of the Pod before we can see the effect of the code change. If the code changes frequently, this process is obviously very cumbersome.
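One iteration of this manual loop can be sketched as the command sequence below. This is only an illustration: the image name, tag, Deployment name, and container name are hypothetical, and it assumes the workload is a Deployment updated with `kubectl set image` instead of by editing the yaml by hand.

```python
import shlex


def manual_deploy_commands(image, tag, deployment, container, namespace="default"):
    """Return the commands for one build -> push -> redeploy iteration."""
    ref = f"{image}:{tag}"
    return [
        # Build the image from the Dockerfile in the current directory.
        ["docker", "build", "-t", ref, "."],
        # Push it to the image registry.
        ["docker", "push", ref],
        # Point the container in the workload at the new image version.
        ["kubectl", "-n", namespace, "set", "image",
         f"deployment/{deployment}", f"{container}={ref}"],
        # Wait until K8s has rolled out the new Pods.
        ["kubectl", "-n", namespace, "rollout", "status", f"deployment/{deployment}"],
    ]


# Hypothetical names, for illustration only.
for cmd in manual_deploy_commands("registry.example.com/shop/orders", "v42", "orders", "orders"):
    print(shlex.join(cmd))
```

Every code change repeats all four commands, which is where the minutes-long feedback loop comes from.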

CI/CD pipeline

This method follows basically the same process as the first one, except that the manual operations are automated with CI/CD:

The workflow is: modify the code locally and push it to the code repository. This triggers the CI process configured in the repository, which compiles and builds the code into an application (such as a binary or jar package) and packages it into an image. The continuous delivery mechanism is then triggered to push the image to the artifact repository, and finally the deployment process is triggered to deploy the new version of the container to the cluster. Although CI/CD removes most of the manual operations, the whole process still takes a lot of time. In fact, CI/CD is better suited to releasing an application than to developing one. During development we care more about getting quick feedback to verify our ideas. If every change has to be pushed to the code repository and its effect cannot be seen until the CI/CD pipeline has finished, we can no longer make quick, simple attempts to find the best of several solutions or to locate the cause of a bug.

Traffic forwarding

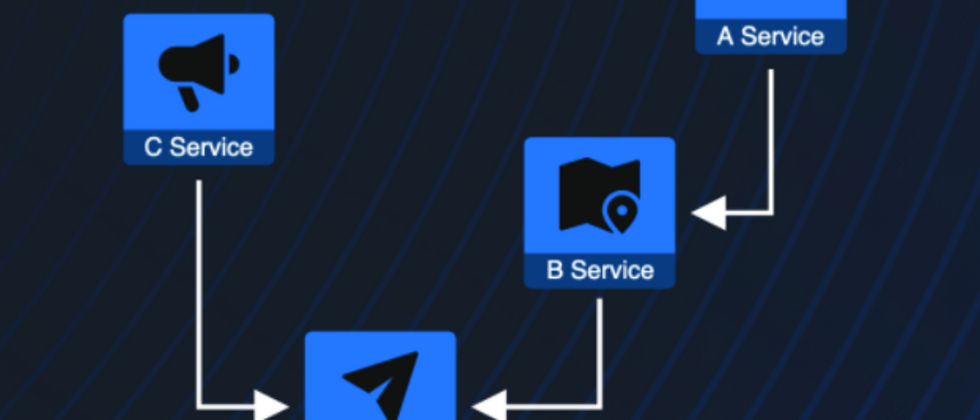

The idea of traffic forwarding is to forward the traffic that accesses the service under development in the cluster to the local machine. As shown below:

When service D needs to be developed, the traffic accessing service D in the cluster is forwarded to a port on the local development machine. After writing the code locally, you can run the application directly on your own machine. Products that implement this method include kt-connect and telepresence.

Running the application locally shortens the feedback loop and improves development efficiency, but this approach also has a big problem: many services running on a K8s cluster rely on other K8s resources, such as ServiceAccount, ConfigMap, Secret, and PVC, so it is not easy to run such a service locally.

Develop in a container

The idea of this approach is: when we want to start developing a service, we first put that service into development mode, then synchronize the code to the container and run the code under development directly in the container:

This method solves both the slow feedback loop and the service's dependence on the cluster. It is a good practice in cloud-native development and one of the main development methods supported by Nocalhost.

Nocalhost first experience

The third part of the talk walks through Nocalhost's features with a demo. Interested readers can watch the replay of the Shenzhen Meetup video, starting at 01:26:15:

https://live.csdn.net/room/csdnnews/yCHrYqnM

Nocalhost core mechanism

How does Nocalhost make in-container application development possible? When a service enters development mode, the core work done by Nocalhost consists of the following four steps.

Reduce the number of replicas

When developing an application, we only need to run the application under development in one container. If there are multiple replicas, then when we access the application through a Service, we cannot control the traffic so that it reaches only the replica running the application we are developing. Nocalhost therefore reduces the workload's replica count to 1.
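As a rough illustration of this step (not Nocalhost's actual code), the sketch below sets `spec.replicas` to 1 on a workload manifest held as a plain dictionary. The manifest contents are hypothetical.

```python
import copy


def scale_to_single_replica(workload):
    """Return a copy of the workload manifest with exactly one replica."""
    patched = copy.deepcopy(workload)  # leave the caller's manifest untouched
    patched.setdefault("spec", {})["replicas"] = 1
    return patched


# Hypothetical Deployment with three replicas.
deployment = {
    "kind": "Deployment",
    "metadata": {"name": "orders"},
    "spec": {"replicas": 3},
}

dev_deployment = scale_to_single_replica(deployment)
print(deployment["spec"]["replicas"], "->", dev_deployment["spec"]["replicas"])
```

With a single replica, every request that reaches the Service lands on the one Pod that runs the code under development.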

Replace the development container image

Containers running in production often use very lightweight images. These images contain only the components needed to run the business program and lack the tools (such as the JDK) needed to compile and build it. When a workload is being developed, Nocalhost replaces the container image with a development image that contains a complete set of development tools.
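The image replacement can be pictured as a small edit to the Pod template. The following is a sketch under assumptions, not Nocalhost's implementation: the container name and both image names are made up for the example.

```python
import copy


def swap_dev_image(workload, container_name, dev_image):
    """Return a copy of the workload whose named container uses dev_image."""
    patched = copy.deepcopy(workload)
    for container in patched["spec"]["template"]["spec"]["containers"]:
        if container["name"] == container_name:
            container["image"] = dev_image
            return patched
    raise KeyError(f"container {container_name!r} not found in workload")


# Hypothetical workload: a slim runtime image that has no build tools.
deployment = {
    "kind": "Deployment",
    "metadata": {"name": "orders"},
    "spec": {"template": {"spec": {"containers": [
        {"name": "orders", "image": "registry.example.com/shop/orders:v42"},
    ]}}},
}

dev = swap_dev_image(deployment, "orders", "registry.example.com/dev/java-toolchain:latest")
print(dev["spec"]["template"]["spec"]["containers"][0]["image"])
```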

Add a sidecar container

To synchronize local source code changes to the container, we need to run a file synchronization server in the container. To decouple the file synchronization server process from the business process, Nocalhost runs the server in a separate sidecar container, which mounts the same synchronization directory as the business container. The source code synchronized to the sidecar container is therefore also accessible in the business container.
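The shared-directory arrangement can be sketched as follows. This is an illustration only: it assumes the shared directory is an `emptyDir` volume, and the volume name, sidecar name, sidecar image, and mount path are all hypothetical.

```python
import copy


def add_sync_sidecar(workload, business_container, sidecar_image, sync_dir="/home/dev/src"):
    """Return a copy of the workload with a sidecar sharing sync_dir."""
    patched = copy.deepcopy(workload)
    pod = patched["spec"]["template"]["spec"]

    # One Pod-level volume that both containers mount at the same path.
    pod.setdefault("volumes", []).append({"name": "sync-dir", "emptyDir": {}})
    mount = {"name": "sync-dir", "mountPath": sync_dir}

    for container in pod["containers"]:
        if container["name"] == business_container:
            container.setdefault("volumeMounts", []).append(dict(mount))
            break
    else:
        raise KeyError(f"container {business_container!r} not found in workload")

    # The sidecar runs the file synchronization server in its own container.
    pod["containers"].append({
        "name": "file-sync-sidecar",
        "image": sidecar_image,
        "volumeMounts": [dict(mount)],
    })
    return patched


deployment = {"spec": {"template": {"spec": {"containers": [
    {"name": "orders", "image": "registry.example.com/dev/java-toolchain:latest"},
]}}}}

dev = add_sync_sidecar(deployment, "orders", "registry.example.com/dev/file-sync:latest")
for c in dev["spec"]["template"]["spec"]["containers"]:
    print(c["name"], [m["mountPath"] for m in c["volumeMounts"]])
```

Because both containers mount the same volume, files written by the sidecar appear in the business container without the two processes knowing about each other.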

Start the file synchronization client

Since the file synchronization server listens on a port inside the container, we cannot access it directly from the local machine. Nocalhost therefore forwards a random local port to the port the file synchronization server listens on in the container, connecting the network between the server and the client, and then starts the local file synchronization client. Once started, the client connects to the server through the forwarded local port and begins synchronizing files.

After these steps are completed, Nocalhost automatically opens a terminal into the remote container. Through this terminal, we can directly run the source code that is synchronized to the container in real time.
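Choosing a random local port and forwarding it can be sketched as below. This is not how Nocalhost itself sets up the forward; the sketch only asks the operating system for a free port and builds an equivalent `kubectl port-forward` command. The namespace, Pod name, and remote port are hypothetical.

```python
import socket


def pick_free_local_port():
    """Ask the OS for a currently unused TCP port on the loopback interface."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind(("127.0.0.1", 0))  # port 0 lets the OS choose
        return sock.getsockname()[1]


def port_forward_command(namespace, pod, local_port, remote_port):
    """Build a kubectl command forwarding local_port to remote_port in the Pod."""
    return ["kubectl", "-n", namespace, "port-forward",
            f"pod/{pod}", f"{local_port}:{remote_port}"]


local_port = pick_free_local_port()
# Hypothetical Pod name and sync server port.
print(" ".join(port_forward_command("default", "orders-6d5f7c9b8-abcde", local_port, 8384)))
```

The port is released before the forward starts, so another process could in principle claim it in between; a real tool would need to handle that race.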

Nocalhost advanced features

Duplicate development mode

Nocalhost's default development mode replaces the normally running service in the cluster with a development container, as shown in the following figure:

This method has the following problems:

1. The environment is affected during development. While a service is being developed, code changes may make it behave abnormally or even crash. Other services in the cluster depend on that service, so the whole environment becomes unusable.

2. Multiple people cannot develop the same service at the same time.

To address this, we can use the Duplicate development mode. In this mode, Nocalhost makes no changes to the original service but creates a copy of it for development, as shown in the following figure:

In this mode, multiple people can develop the same service, and everyone has their own development copy, and the original environment of the cluster will not be affected in any way.
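One way to picture the Duplicate mode is a per-developer copy of the workload manifest. The sketch below is an assumption-laden illustration, not Nocalhost's implementation: the naming scheme and the `dev-copy` label are invented, and it assumes that giving the copy its own labels is enough to keep the original Service from selecting it.

```python
import copy


def duplicate_for_developer(workload, developer):
    """Return an independent development copy of the workload for one developer."""
    dup = copy.deepcopy(workload)
    dup_name = f'{workload["metadata"]["name"]}-dup-{developer}'
    dup["metadata"]["name"] = dup_name

    # Fresh labels so the original Service selector does not match the copy.
    labels = {"dev-copy": dup_name}
    dup["spec"]["selector"] = {"matchLabels": dict(labels)}
    dup["spec"]["template"]["metadata"]["labels"] = dict(labels)
    dup["spec"]["replicas"] = 1
    return dup


original = {
    "kind": "Deployment",
    "metadata": {"name": "orders"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "orders"}},
        "template": {"metadata": {"labels": {"app": "orders"}},
                     "spec": {"containers": [{"name": "orders", "image": "orders:v42"}]}},
    },
}

for dev in ("alice", "bob"):
    print(duplicate_for_developer(original, dev)["metadata"]["name"])
```

The original manifest is never modified, which mirrors the point that the cluster's existing environment stays untouched.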

Nocalhost Server

In addition to IDE plug-ins that make it easier to develop K8s applications, Nocalhost also provides Nocalhost Server, which is suited to managing enterprise-level development environments. The following is the Nocalhost Server management interface:

Nocalhost Server provides management of clusters, applications, and user permissions. For details about Nocalhost Server, please refer to the official documentation.

Mesh mode

As mentioned earlier, if you want multiple people to develop the same service, you can use the Duplicate development mode. This mode has a limitation, however: the copy under development can only be reached through API requests from the local machine, and there is no way to access it through the application's entry address. For scenarios where the service under development must be accessed through a unified application entry point, we can use Nocalhost's Mesh mode.

Mesh mode allocates a MeshSpace to each developer. By carrying different headers, traffic entering through the application entry point can be routed along the access path of different MeshSpaces.
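The header-based selection can be illustrated with a toy routing function. This is only a conceptual sketch: the header name `x-mesh-space`, the space names, and the fallback to a shared base environment are assumptions made for the example, and in a real deployment the routing would be done by the service mesh, not by application code like this.

```python
def pick_mesh_space(headers, known_spaces, header_name="x-mesh-space", default_space="base"):
    """Choose which MeshSpace should serve a request, based on one header."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    requested = normalized.get(header_name.lower())
    return requested if requested in known_spaces else default_space


# Hypothetical MeshSpaces, one per developer, plus the shared base environment.
spaces = {"base", "alice", "bob"}

print(pick_mesh_space({"X-Mesh-Space": "alice"}, spaces))
print(pick_mesh_space({"Accept": "application/json"}, spaces))
```

Requests that carry no recognized header fall through to the default space, so ordinary traffic keeps using the unmodified services.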

Mesh mode requires a development environment managed by Nocalhost Server, and the application must pass headers through between services and be able to use Istio for traffic forwarding. For how to use Mesh mode, please refer to the documentation on the official website (the documentation is not yet very complete; for more details you can contact Nocalhost's development team directly through GitHub).
