1 What is a pretrained model? Why do we need pretrained models?

Pre-trained models are a common way to improve the performance of deep learning algorithms. A pre-trained model can be summarized as a deep learning network architecture together with a set of weights obtained by training that architecture on massive data. With the architecture and weights in hand, we can use the model as the backbone network for a specific vision task and use the weights as its initialization. Downstream tasks then begin training from a good starting point, which narrows the search space and can lead to better performance.
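The idea of "backbone plus initialization" can be sketched with plain dictionaries of NumPy arrays standing in for a framework's parameter store. This is a minimal illustration, not the authors' code. The parameter names and shapes below are hypothetical. Every tensor whose name and shape match the checkpoint is copied over. Anything else, typically the new task-specific head, keeps its fresh initialization.

```python
import numpy as np

def init_from_pretrained(model_params, pretrained_params):
    """Copy every pretrained tensor whose name and shape match the model.

    Parameters missing from the checkpoint, or whose shape differs
    (typically the task-specific head), keep their fresh initialization.
    Returns the updated parameters and the names that were not loaded.
    """
    loaded, skipped = {}, []
    for name, value in model_params.items():
        src = pretrained_params.get(name)
        if src is not None and src.shape == value.shape:
            loaded[name] = src.copy()
        else:
            loaded[name] = value
            skipped.append(name)
    return loaded, skipped

rng = np.random.default_rng(0)
# Hypothetical checkpoint: a backbone plus a 1000-class ImageNet head.
pretrained = {
    "backbone.conv.weight": rng.normal(size=(64, 3, 3, 3)),
    "backbone.proj.weight": rng.normal(size=(128, 64)),
    "head.weight": rng.normal(size=(1000, 128)),
}
# Downstream model: same backbone, new 10-class head.
model = {
    "backbone.conv.weight": np.zeros((64, 3, 3, 3)),
    "backbone.proj.weight": np.zeros((128, 64)),
    "head.weight": rng.normal(scale=0.01, size=(10, 128)),
}
params, not_loaded = init_from_pretrained(model, pretrained)
print(not_loaded)  # ['head.weight']
```

Matching on both name and shape is what lets the same checkpoint serve many downstream tasks whose output layers differ.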

So why do we need pre-trained models? As an analogy, if a deep learning algorithm is compared to martial arts, then the pre-trained model is its inner strength. With a solid foundation of inner strength, it is easier and faster to master all kinds of martial arts moves and use them to full effect. Pre-training a model is the process by which a deep learning algorithm cultivates this inner strength.

2 OPPO's self-developed large-scale CV pre-trained model

2.1 Overview

There are two main reasons for developing our own pre-trained model. First, the pre-trained models our data scientists currently use are all open-source models found online, and they are superseded every year. The latest research is often not open-sourced, so we cannot be sure we are getting the best results. Second, open-source pre-trained models are all trained on open-source datasets such as the well-known ImageNet, so they do not take full advantage of the company's own data. Developing our own pre-trained model is therefore well worth the effort.

The technical solution for OPPO's self-developed large-scale CV pre-trained model consists of three main parts:

- Network architecture innovation: study the current mainstream model architectures in computer vision, such as CNN, Transformer and MLP. Then combine and optimize the current SOTA architectures of these different families, aiming for the best-performing architecture to serve as the backbone network of the pre-trained model.

- Self-supervised pre-training: make full use of OPPO's own massive unlabeled data and pre-train on it to obtain more general feature representations. The model then copes better with OOD (out-of-distribution) data, i.e. data distributed differently from the training set, and the result is a more robust pre-trained model.

- Supervised fine-tuning: when the pre-trained model's parameters are fine-tuned on a task-specific dataset of labeled samples, appropriate training and regularization methods help the model achieve the best results on that downstream task.

2.2 Key Technologies

2.2.1 Network Architecture Design

In terms of network architecture, our goal is to design an architecture that reduces the feature search space and improves network performance. To suit dense prediction vision tasks (such as detection and segmentation), the architecture needs a multi-stage hierarchical structure that provides multi-scale feature maps. It must also scale easily into variants with different parameter counts to meet the needs of different business scenarios. Finally, it should deliver as much performance as possible with fewer parameters and floating-point operations (FLOPs).

At present, the three mainstream model architectures in computer vision are convolutional neural networks (CNN), Transformers and multi-layer perceptrons (MLP). CNNs have been the dominant architecture for computer vision tasks for many years. They are good at extracting local detail and have a degree of invariance to deformation, translation and scaling. The Transformer achieved great success in NLP, debuted in computer vision last year, and started a new trend. Its advantages are that it is good at capturing global information, has a larger model capacity, and works in a way closer to human perception. Alongside the rise of the Transformer, some research focused on replacing Transformer components with MLPs to build networks, opening up another research direction. MLPs can approach Transformer performance at small model scales, but they suffer severe overfitting as the scale grows.

After researching and analyzing these three architectures, we reached the following conclusions. MLP research focuses on replacing Transformer components to obtain reasonably competitive results, but in practice it does not outperform Transformer-based methods; to some extent this line of work has only opened up a new idea. Convolution in CNNs is good at extracting local information from an image, while the Transformer extracts a global representation of the image by building image tokens, so the two complement each other well. In addition, the Transformer has the largest model capacity of the three architectures and is better suited to large-scale pre-training. A Transformer plus CNN architecture may therefore be the best option.

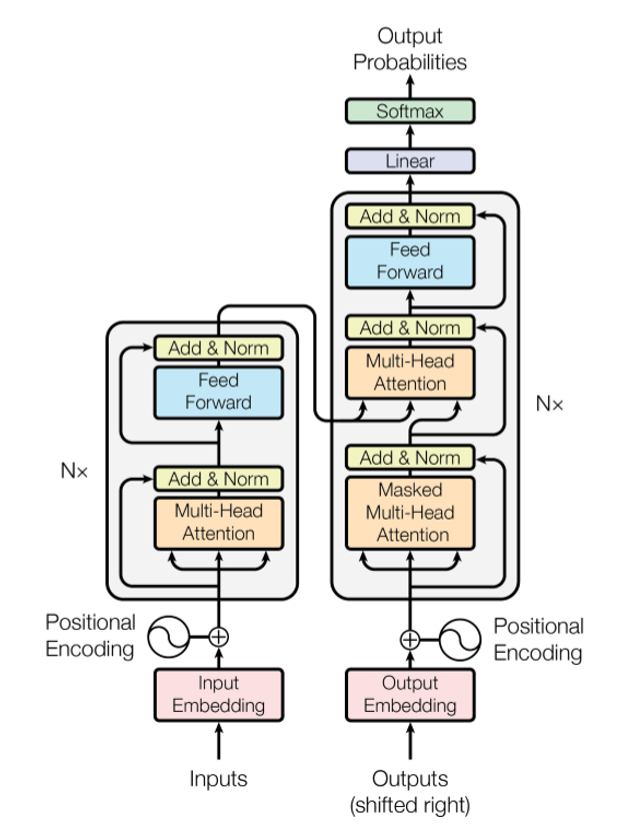

Since we focus on the Transformer, we first need to understand its fundamentals in order to improve it, so here is a brief introduction. The Transformer was proposed by Google in the 2017 paper Attention Is All You Need [1] and achieved very good results on multiple NLP tasks. Its defining feature is that the whole network is built on the self-attention mechanism, without recurrence or convolution. As shown in Figure 1, the Transformer adopts an encoder-decoder structure. The input is embedded and a positional encoding is added. It then passes through multi-head self-attention and a feed-forward network. Each sub-layer has a residual connection, and the output probabilities are finally produced by a linear layer followed by softmax.

In this structure:

- The residual connections address the vanishing-gradient problem, which makes it possible to train deeper, more complex models.

- Norm refers to LayerNorm, which normalizes the distribution of the attention layer's output. BatchNorm, common in computer vision, normalizes each feature across the samples in a batch, whereas LayerNorm normalizes across the features of each individual sample. The two serve the same purpose but normalize along different dimensions.

- Feed Forward is a two-layer fully connected network with an activation function that fits the data with a nonlinear function. The first fully connected layer (with the activation) provides the nonlinear fitting, and the second projects back to the output dimension.

- Positional encoding is added because the self-attention mechanism by itself cannot capture position information, so positional encoding has to supply it.
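The difference between LayerNorm and BatchNorm, and the residual feed-forward sub-layer, can be shown in a few lines of NumPy. This is a simplified sketch: the learnable scale and shift of the normalization layers are omitted, ReLU is assumed as the activation, and the sub-layer uses the post-norm arrangement of the original Transformer, `LayerNorm(x + FFN(x))`. All sizes are illustrative.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Normalize each token (row) over its feature dimension.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def batch_norm(x, eps=1e-5):
    # Normalize each feature (column) over the batch dimension.
    mean = x.mean(axis=0, keepdims=True)
    var = x.var(axis=0, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def feed_forward(x, w1, b1, w2, b2):
    # First layer expands and applies the nonlinearity; second projects back.
    return np.maximum(0.0, x @ w1 + b1) @ w2 + b2

def ffn_sublayer(x, params):
    # Residual connection followed by LayerNorm (post-norm).
    return layer_norm(x + feed_forward(x, *params))

rng = np.random.default_rng(0)
n_tokens, d_model, d_ff = 4, 8, 32
x = rng.normal(size=(n_tokens, d_model))
params = (rng.normal(size=(d_model, d_ff)) * 0.1, np.zeros(d_ff),
          rng.normal(size=(d_ff, d_model)) * 0.1, np.zeros(d_model))
y = ffn_sublayer(x, params)
print(y.shape)  # (4, 8)
```

The only difference between the two normalization functions is the `axis` argument, which is exactly the "same function, different dimension" point made above.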

In the Transformer, the component that most needs to be understood is the self-attention mechanism. Self-attention means that each word in a sentence attends to all the words in that same sentence. Each word vector is linearly transformed by three matrices W_Q, W_K and W_V into that word's query, key and value vectors. Stacked over all words, these form the matrices Q, K and V, and the output is computed as

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

where d_k is the dimension of the key vectors. Each self-attention layer thus takes n word vectors as input and outputs n aggregated vectors. The effect is to preserve the values of relevant words and weaken those of unrelated words.
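Single-head scaled dot-product self-attention, `softmax(QK^T / sqrt(d_k)) V` as defined in [1], is a direct sketch in NumPy. The weight matrices are random here and the sizes are arbitrary.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over n token vectors."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v          # (n, d_k), (n, d_k), (n, d_v)
    scores = q @ k.T / np.sqrt(k.shape[-1])      # (n, n): every token vs every token
    weights = softmax(scores, axis=-1)           # each row sums to 1
    return weights @ v, weights                  # n aggregated vectors

rng = np.random.default_rng(0)
n, d_model, d_k = 5, 16, 8
x = rng.normal(size=(n, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (5, 8) (5, 5)
```

Each output row is a weighted average of the value vectors. A large weight keeps a relevant word's value, and a small weight suppresses an unrelated one.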

Multi-head self-attention performs the above attention h times in parallel and concatenates the h outputs; in the original paper, a final linear projection W_O follows. In engineering implementations, W is widened h times to make multi-head attention efficient. Reshape and transpose operations then lay out the Q, K and V of the different heads for the same word together, so that all heads are computed simultaneously. The concatenation is likewise done with reshape and transpose, so all heads are processed in parallel. This completes our brief overview of the Transformer structure.
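The reshape/transpose trick for running all heads at once can be sketched as follows. The fused weight `w_qkv` (Q, K and V columns side by side, each split into h head slices) is an illustrative layout choice, not necessarily the one any particular library uses. A per-head loop is included to show that the two computations agree.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_qkv, w_o, num_heads):
    """x: (n, d_model); w_qkv: (d_model, 3*d_model); w_o: (d_model, d_model)."""
    n, d_model = x.shape
    d_head = d_model // num_heads
    qkv = x @ w_qkv                                        # one wide matmul for all heads
    qkv = qkv.reshape(n, 3, num_heads, d_head).transpose(1, 2, 0, 3)
    q, k, v = qkv[0], qkv[1], qkv[2]                       # each (heads, n, d_head)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)    # (heads, n, n)
    out = softmax(scores) @ v                              # (heads, n, d_head)
    out = out.transpose(1, 0, 2).reshape(n, d_model)       # "concat" via transpose + reshape
    return out @ w_o                                       # output projection

def multi_head_loop(x, w_qkv, w_o, num_heads):
    """Reference version: compute each head separately, then concatenate."""
    n, d_model = x.shape
    d_head = d_model // num_heads
    w_q, w_k, w_v = np.split(w_qkv, 3, axis=1)
    heads = []
    for h in range(num_heads):
        cols = slice(h * d_head, (h + 1) * d_head)
        q, k, v = x @ w_q[:, cols], x @ w_k[:, cols], x @ w_v[:, cols]
        heads.append(softmax(q @ k.T / np.sqrt(d_head)) @ v)
    return np.concatenate(heads, axis=1) @ w_o

rng = np.random.default_rng(0)
n, d_model, heads = 6, 32, 4
x = rng.normal(size=(n, d_model))
w_qkv = rng.normal(size=(d_model, 3 * d_model)) * 0.1
w_o = rng.normal(size=(d_model, d_model)) * 0.1
fast = multi_head_attention(x, w_qkv, w_o, heads)
slow = multi_head_loop(x, w_qkv, w_o, heads)
print(np.allclose(fast, slow))  # True
```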

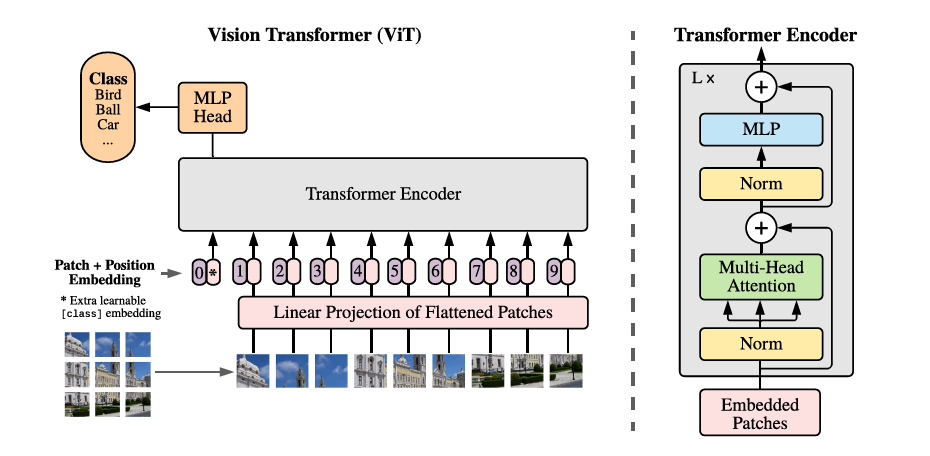

ViT [2], proposed in 2020, is the first network built on a pure Transformer structure for image classification.

The structure of ViT is shown in Figure 2. It is a typical Transformer encoder of the kind described above:

First, the image is divided into non-overlapping patches of equal size. Each patch is flattened, and a linear projection maps each flattened patch vector to the embedding dimension used as the Transformer input. Each patch is a token, analogous to a word in a sentence in NLP.
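Splitting an image into flattened patches and projecting them can be sketched with reshape/transpose. A channels-last (H, W, C) layout is assumed, and the 384-dimensional embedding and random projection weights are hypothetical choices for illustration.

```python
import numpy as np

def patchify(image, patch):
    """Split an (H, W, C) image into non-overlapping patch x patch blocks and
    flatten each one; returns (num_patches, patch*patch*C) in row-major order."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch"
    x = image.reshape(h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)            # (rows, cols, patch, patch, C)
    return x.reshape(-1, patch * patch * c)

rng = np.random.default_rng(0)
image = rng.normal(size=(224, 224, 3))
patches = patchify(image, 16)                 # 14 x 14 = 196 patches of 16*16*3 = 768 values
w_embed = rng.normal(size=(768, 384)) * 0.02  # hypothetical embedding dimension 384
tokens = patches @ w_embed                    # one token per patch
print(patches.shape, tokens.shape)  # (196, 768) (196, 384)
```

A strided convolution with kernel size and stride equal to the patch size computes the same projection, which is how many implementations do it.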

ViT prepends a learnable classification token to the input tokens, which learns class information during Transformer training. It is fed into the Transformer encoder together with the patch tokens, and the output at this first position is used for the class prediction.

To preserve the spatial position information between patches, a position encoding vector is added to each token. Adding position information before self-attention in this way is called absolute position encoding. Other positional encoding schemes are introduced later and are not covered here.

Because ViT is a non-hierarchical network, it is not well suited to dense prediction tasks, and its computational cost is relatively high. Subsequent studies have improved it from various angles.
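Prepending the class token and adding absolute position embeddings takes only a few lines. In this sketch the encoder is replaced by an identity placeholder (`encoder_stub`, a hypothetical name), and the token count, width and 1000-class head are assumptions. The point is where the class token sits and which output vector feeds the classifier.

```python
import numpy as np

rng = np.random.default_rng(0)
num_patches, dim = 196, 384                      # e.g. 14 x 14 patches (assumed)
patch_tokens = rng.normal(size=(num_patches, dim))

# Learnable parameters, randomly initialized here.
cls_token = rng.normal(size=(1, dim)) * 0.02
pos_embed = rng.normal(size=(num_patches + 1, dim)) * 0.02  # one vector per position

def vit_input(patch_tokens, cls_token, pos_embed):
    # Prepend the class token, then add absolute position embeddings
    # before any self-attention layer sees the sequence.
    x = np.concatenate([cls_token, patch_tokens], axis=0)   # (N + 1, D)
    return x + pos_embed

def encoder_stub(x):
    # Placeholder for the Transformer encoder (identity in this sketch).
    return x

x = vit_input(patch_tokens, cls_token, pos_embed)
cls_out = encoder_stub(x)[0]                     # first output vector goes to the classifier
w_head = rng.normal(size=(dim, 1000)) * 0.02     # hypothetical 1000-class head
logits = cls_out @ w_head
print(x.shape, logits.shape)  # (197, 384) (1000,)
```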

So how do we do it?

First, we want a general-purpose backbone rather than separate networks for different vision tasks, so we need a hierarchical network that provides multi-scale feature maps. The hierarchy can be built with pixelShuffle-style (space-to-depth) rearrangement, strided-convolution downsampling and similar means. These adjust the feature-map scale and output dimension between stages, yielding a multi-stage hierarchical network.

Second, we need to modify the attention mechanism. The original Transformer computes the relationship between each token and every other token, so its computational complexity is quadratic in the number of tokens. To model more efficiently, several studies have changed how attention is computed, notably halo attention, shifted windows and cross-shaped windows (CrossShape).
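One way to move between stages is a space-to-depth rearrangement followed by a linear projection, as sketched below. This illustrates the idea rather than the authors' exact layer. It is similar in spirit to the patch merging used in Swin Transformer, which does not appear in the source. Here every 2x2 neighbourhood is folded into the channel dimension, so height and width are halved and no values are lost. The linear projection, with hypothetical random weights, then sets the output width, doubled in this example.

```python
import numpy as np

def pixel_unshuffle(x, r=2):
    """Space-to-depth: (H, W, C) -> (H/r, W/r, r*r*C); every value is kept."""
    h, w, c = x.shape
    x = x.reshape(h // r, r, w // r, r, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(h // r, w // r, r * r * c)

def downsample_stage(x, w_proj):
    # Fold 2x2 neighbourhoods into channels, then a per-position linear
    # projection (equivalent to a 1x1 convolution) sets the output width.
    return pixel_unshuffle(x, 2) @ w_proj

rng = np.random.default_rng(0)
x = rng.normal(size=(56, 56, 64))
w_proj = rng.normal(size=(4 * 64, 128)) * 0.02   # 4C -> 2C (assumed widths)
y = downsample_stage(x, w_proj)
print(y.shape)  # (28, 28, 128)
```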
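Window-based attention shows why these variants are cheaper. The sketch below restricts attention to non-overlapping windows and, optionally, cyclically shifts the map first so the new windows straddle the old borders. This is the core of the shifted-window idea. For brevity it sets Q = K = V = x (no projections) and omits the attention mask that Swin Transformer applies to the wrapped-around regions after the shift.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def window_partition(x, win):
    """(H, W, C) -> (num_windows, win*win, C), non-overlapping windows."""
    h, w, c = x.shape
    x = x.reshape(h // win, win, w // win, win, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, win * win, c)

def window_reverse(windows, win, h, w):
    """Inverse of window_partition."""
    c = windows.shape[-1]
    x = windows.reshape(h // win, w // win, win, win, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(h, w, c)

def window_attention(x, win, shift=0):
    """Self-attention restricted to local windows (Q = K = V = x here).
    With shift > 0 the map is cyclically rolled first (mask omitted)."""
    h, w, _ = x.shape
    if shift:
        x = np.roll(x, (-shift, -shift), axis=(0, 1))
    t = window_partition(x, win)                               # (nW, win*win, C)
    scores = t @ t.transpose(0, 2, 1) / np.sqrt(t.shape[-1])   # attention inside each window
    out = window_reverse(softmax(scores) @ t, win, h, w)
    if shift:
        out = np.roll(out, (shift, shift), axis=(0, 1))
    return out

rng = np.random.default_rng(0)
x = rng.normal(size=(56, 56, 32))
y = window_attention(x, win=7)                 # regular windows
y_shift = window_attention(x, win=7, shift=3)  # shifted windows

# Query-key pairs scored: global attention vs 7x7 windows on a 56x56 map.
h = w = 56
win = 7
global_pairs = (h * w) ** 2        # 9,834,496
window_pairs = (h * w) * win ** 2  # 153,664, i.e. 64x fewer
```

Global attention cost grows with the square of the token count. The windowed version grows linearly for a fixed window size, and alternating regular and shifted windows lets information flow across window borders.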

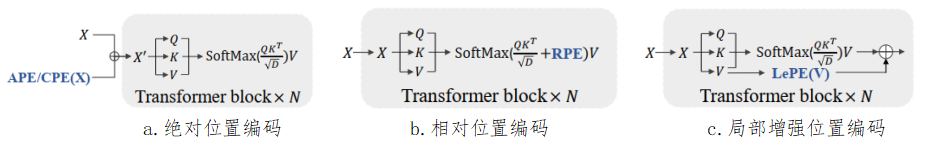

Third, the Transformer needs to be integrated effectively with CNNs. Local feature modeling is very effective, even crucial, in vision tasks, and it is exactly what CNNs are good at. Studies have shown that stacking convolutional and Transformer layers in an appropriate way can effectively improve overall network performance. Other studies integrate convolution into the Transformer itself, using convolutions instead of linear projections to compute the Q, K and V matrices, which improves the Transformer's ability to model local features.

Finally, there are improvements to positional encoding. Self-attention is insensitive to token order: permuting the input tokens only permutes the outputs in the same way. Positional encoding is needed to make up for this. As shown in Figure 3(a), absolute position encoding adds position information before self-attention. Figure 3(b) shows relative position encoding, which adds relative position information while the attention weight matrix is computed. Figure 3(c) shows locally enhanced position encoding, which adds position information directly through the value. Concretely, the value is convolved with a depth-wise convolution, and the result is added to the self-attention output.
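The three positional-encoding variants in Figure 3 differ only in where position enters the computation. The sketch below puts them side by side on a small 4x4 token map with a single head. All weights are random placeholders. The relative variant is written as a learnable bias indexed by the (dy, dx) offset between tokens, and the locally enhanced variant as a 3x3 depth-wise convolution on V added to the attention output. These are common concrete forms of the ideas, not necessarily the exact layers used in this work.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v, bias=None):
    scores = q @ k.T / np.sqrt(q.shape[-1])
    if bias is not None:
        scores = scores + bias                 # (b) relative position bias
    return softmax(scores) @ v

def relative_bias(h, w, table):
    """table: (2h-1, 2w-1) learnable biases indexed by the (dy, dx) offset."""
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    ys, xs = ys.ravel(), xs.ravel()
    dy = ys[:, None] - ys[None, :] + h - 1     # shift offsets to non-negative indices
    dx = xs[:, None] - xs[None, :] + w - 1
    return table[dy, dx]                       # (h*w, h*w)

def depthwise_conv3x3(x, kernel):
    """x: (H, W, C); kernel: (3, 3, C); zero padding, one filter per channel."""
    h, w, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros_like(x)
    for i in range(3):
        for j in range(3):
            out += xp[i:i + h, j:j + w] * kernel[i, j]
    return out

rng = np.random.default_rng(0)
h, w, c = 4, 4, 8
tokens = rng.normal(size=(h * w, c))
wq, wk, wv = (rng.normal(size=(c, c)) * 0.3 for _ in range(3))

# (a) absolute: add a per-position vector before attention
pos = rng.normal(size=(h * w, c)) * 0.02
t = tokens + pos
out_abs = attention(t @ wq, t @ wk, t @ wv)

# (b) relative: add a bias that depends only on the offset between two tokens
table = rng.normal(size=(2 * h - 1, 2 * w - 1)) * 0.02
bias = relative_bias(h, w, table)
out_rel = attention(tokens @ wq, tokens @ wk, tokens @ wv, bias)

# (c) locally enhanced: depth-wise conv on V, added to the attention output
v = tokens @ wv
kernel = rng.normal(size=(3, 3, c)) * 0.1
lepe = depthwise_conv3x3(v.reshape(h, w, c), kernel).reshape(h * w, c)
out_lepe = attention(tokens @ wq, tokens @ wk, v) + lepe
```

Variant (c) injects position through a local convolution over neighbouring tokens. That also adds a CNN-style local prior, which is consistent with the observation that it helps most on dense prediction tasks.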

Our network can be viewed as a four-stage architecture:

First, a sequence of convolutions splits the image into patches to generate tokens. To build a hierarchical representation, another sequence of convolutions between adjacent stages halves the number of tokens and doubles the channel dimension. The result is a multi-stage hierarchy that provides multi-scale feature maps, so the network can easily be plugged in as a backbone for dense prediction tasks.
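The per-stage feature-map shapes of such a four-stage backbone can be worked out as follows. This is a back-of-the-envelope sketch with assumed numbers, not the actual configuration: a 224x224 input, a convolutional stem with total stride 4, a base width of 64, and a downsampling step between stages that halves the feature map's height and width and doubles the channels.

```python
def stage_shapes(img_size=224, stem_stride=4, base_dim=64, num_stages=4):
    """Feature-map shape (H, W, C) at the output of each stage, assuming a
    conv stem with total stride `stem_stride` and a stride-2 downsampling
    between consecutive stages that doubles the channel width."""
    shapes = []
    size, dim = img_size // stem_stride, base_dim
    for stage in range(num_stages):
        if stage > 0:
            size, dim = size // 2, dim * 2
        shapes.append((size, size, dim))
    return shapes

for i, s in enumerate(stage_shapes(), start=1):
    print(f"stage {i}: {s}")
# stage 1: (56, 56, 64)
# stage 2: (28, 28, 128)
# stage 3: (14, 14, 256)
# stage 4: (7, 7, 512)
```

Output strides of 4, 8, 16 and 32 are what detection and segmentation heads such as feature pyramids typically expect from a backbone.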

In terms of combining with CNNs, the network can be viewed as a multi-stage Transformer nested with an EfficientNetV2-like structure. This fuses convolution and Transformer effectively and greatly improves network performance.

When computing multi-head self-attention, we use improved attention mechanisms, namely shifted window + conv and CrossShape, to make the Transformer more computationally efficient and further improve network performance.

For positional encoding, we use two methods: relative position encoding and locally enhanced position encoding. Experiments show that the two are almost indistinguishable on classification tasks. On dense prediction tasks (such as detection and segmentation), however, locally enhanced positional encoding performs better.

Finally, by varying depth and width, we designed three model variants of different sizes (Tiny, Small and Base) to meet the needs of different business scenarios.

Table 1 shows the performance of our architecture on ImageNet, compared with the latest architectures at similar parameter scales. At all three sizes, our architecture achieves the best results while using fewer parameters and less computation.

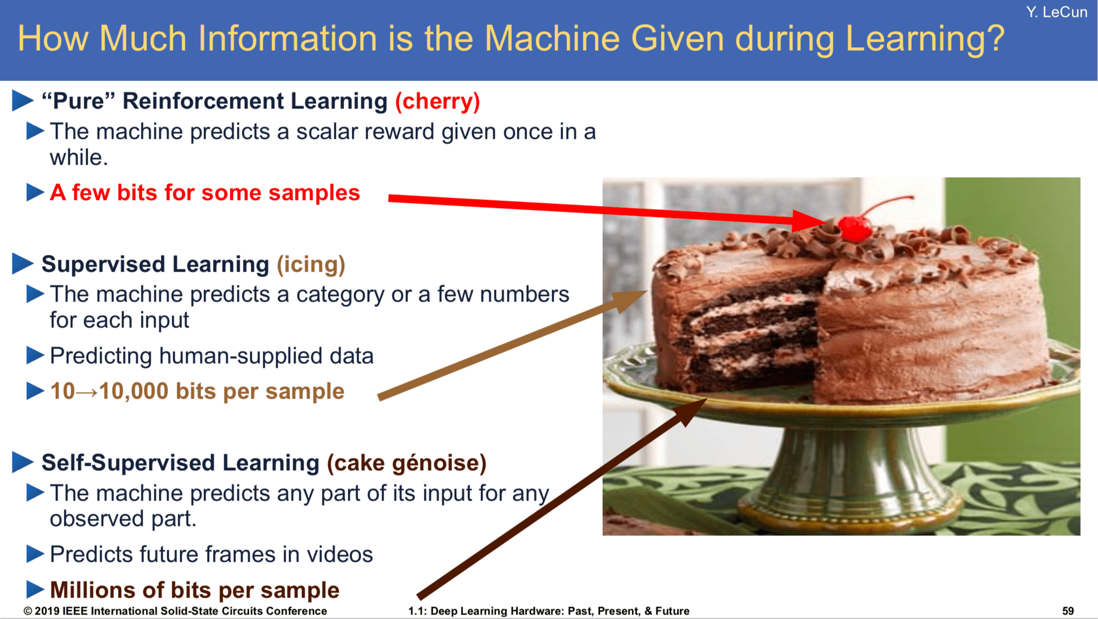

2.2.2 Self-supervised learning

Next, we introduce another key technology of pre-trained models: self-supervised learning. Self-supervised learning is a form of unsupervised learning. At the 43rd annual international conference on information retrieval, Geoffrey Hinton, one of the fathers of deep learning, proposed that the next generation of artificial intelligence belongs to unsupervised contrastive learning. Yann LeCun, Hinton's former postdoctoral researcher, compared three kinds of learning to a cake. Reinforcement learning is just the cherry on top, supervised learning is the icing, and unsupervised learning is the cake itself, which shows its foundational role and importance.

Take us humans as an example. Whenever we see something, our perceptions are quietly feeding us massive amounts of data to learn from, reason about and judge. We constantly encounter "questions" and receive information, but we get very few "answers". We may have seen all kinds of animals before someone one day tells us, in three words, "this is a cat". Only a handful of times in your life will someone point out that something is a cat, yet one or two hints are enough to remember the concept for the rest of your life. Even if nobody ever told us it was a cat, we would still know it is not a dog or any other animal. Learning without answers is unsupervised learning, and learning in which others tell us the answer is supervised learning; this shows how fundamental unsupervised learning is. Since supervised learning relies heavily on manually labeled data, we want neural networks to learn more from large amounts of unlabeled data, improving data efficiency and the model's ability to generalize. We therefore made self-supervised pre-training one of our key directions for improvement.

Self-supervised learning methods can be divided into pretext-task methods, contrastive learning methods, clustering methods, and combined contrastive + clustering methods.

- Pretext-task methods have the network solve a pretext (auxiliary) task, during which it learns rich feature representations that can then be used for downstream tasks. However, learning representations from a single pretext task is unlikely to be optimal, and pretext tasks differ greatly in both design and difficulty.
- Contrastive learning methods learn sample representations by contrasting each sample with positive samples and with negative samples in feature space. Because this requires explicit feature-level comparisons between pairs, the computation is very heavy.
- Clustering-based methods cluster in feature space to find which images are similar, combining feature clustering with prediction of cluster assignments. They cluster the whole dataset by image features to obtain codes and cluster many image views in each training step, so they usually need multiple passes over the dataset.

We mainly improve the model through self-supervised pre-training based on SwAV [3], a recent contrastive + clustering method from Facebook.

SwAV differs from earlier contrastive learning methods mainly in how features are compared. Instead of comparing features directly, SwAV represents them with codes and enforces consistency between those codes.

Clustering-based methods usually cluster the whole dataset by image features to obtain codes, and cluster many image views in each training step. SwAV does not treat the codes as fixed targets. Instead, it learns by keeping the codes of different views of the same image consistent. In other words, the different views of an image must map to the same code, rather than having their features compared directly.

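Training consists of two main parts:

- Mapping the features z to the codes q through the prototypes c. In SwAV, the codes are computed online with the Sinkhorn-Knopp algorithm so that the samples in a batch are spread evenly across the prototypes.

- Given z and q, the features and codes produced by different views of the same image should in principle be predictable from each other, so the authors define a new "swapped prediction" loss:

$$L(z_t, z_s) = \ell(z_t, q_s) + \ell(z_s, q_t)$$

Here z denotes features, q denotes codes, and the subscripts s and t denote two different augmentations of the same image. Each sub-loss is computed as:

$$\ell(z_t, q_s) = -\sum_k q_s^{(k)} \log p_t^{(k)}, \qquad p_t^{(k)} = \frac{\exp\left(z_t^\top c_k / \tau\right)}{\sum_{k'} \exp\left(z_t^\top c_{k'} / \tau\right)}$$

where τ is a temperature parameter and c_k is the k-th prototype.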

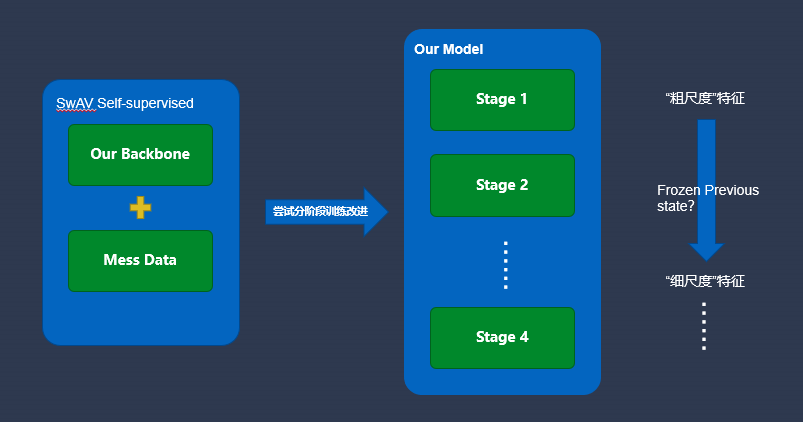
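The two parts of SwAV training, computing codes with Sinkhorn-Knopp and the swapped prediction loss `L(z_t, z_s) = l(z_t, q_s) + l(z_s, q_t)`, can be sketched in NumPy as below. The Sinkhorn loop follows the procedure described in the SwAV paper [3], with its typical defaults of eps = 0.05 and three iterations. Batch size, feature size, number of prototypes and the noisy "views" are made up for illustration. In real training the codes are treated as constants (no gradient), the prototypes are learned, and a feature queue may be used for small batches; none of that is shown here.

```python
import numpy as np

def log_softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def sinkhorn(scores, eps=0.05, n_iters=3):
    """Turn prototype scores (B, K) into soft codes (B, K). The codes are pushed
    toward using every prototype equally often across the batch."""
    q = np.exp(scores / eps).T                 # (K, B)
    q /= q.sum()
    n_protos, batch = q.shape
    for _ in range(n_iters):
        q /= q.sum(axis=1, keepdims=True)      # each prototype gets total mass 1/K
        q /= n_protos
        q /= q.sum(axis=0, keepdims=True)      # each sample gets total mass 1/B
        q /= batch
    return (q * batch).T                       # each sample's code sums to 1

def swapped_loss(z_s, z_t, prototypes, temp=0.1):
    """L(z_t, z_s) = l(z_t, q_s) + l(z_s, q_t), averaged over the batch."""
    scores_s, scores_t = z_s @ prototypes.T, z_t @ prototypes.T   # (B, K)
    q_s, q_t = sinkhorn(scores_s), sinkhorn(scores_t)             # codes
    log_p_s = log_softmax(scores_s / temp)
    log_p_t = log_softmax(scores_t / temp)
    l_ts = -(q_s * log_p_t).sum(axis=1)        # predict view s's code from view t
    l_st = -(q_t * log_p_s).sum(axis=1)        # predict view t's code from view s
    return (l_ts + l_st).mean()

rng = np.random.default_rng(0)
B, D, K = 32, 16, 10
prototypes = l2_normalize(rng.normal(size=(K, D)))
z = l2_normalize(rng.normal(size=(B, D)))
z_s = l2_normalize(z + 0.1 * rng.normal(size=(B, D)))  # two "augmented views"
z_t = l2_normalize(z + 0.1 * rng.normal(size=(B, D)))
codes = sinkhorn(z_s @ prototypes.T)
loss = swapped_loss(z_s, z_t, prototypes)
print(codes.shape, loss)
```

The equal-partition constraint in `sinkhorn` keeps all samples from collapsing onto a single prototype, the trivial solution that a naive clustering objective would allow.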

We mainly use SwAV for self-supervised pre-training of the network architecture we designed. We are also trying a "staged training" approach that decomposes learning into related subtasks completed step by step, gradually injecting information into the network. The "coarse-scale" features of the data are captured early in training, and the "fine-scale" features are learned in later stages. The result of each stage serves as the starting point for the next, which produces a regularization effect and improves generalization. This work is still in progress, and performance results will be published soon.

2.2.3 Supervised fine-tuning

The performance of a vision model is the combined result of its network architecture, training method and regularization method. For a specific task, supervised fine-tuning uses that task's labeled samples to adjust the parameters of the pre-trained network. After loading the network structure and initializing it with the pre-trained weights, one must choose sensible hyperparameters, an optimizer, data augmentation and regularization methods. New network architectures often underpin many advances, and more advanced training, data augmentation and regularization methods often appear alongside them. Only some established and recently emerging methods are listed here; for a specific vision task, they can be configured based on one's own experience or that of others.
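As an illustration of the kind of training and regularization choices involved, here are two widely used fine-tuning ingredients: a learning-rate schedule with linear warmup and cosine decay, and cross-entropy with label smoothing. They are common examples, not the configuration used in this work, and the default values (`base_lr`, `warmup_steps`, `smoothing`, etc.) are hypothetical.

```python
import math
import numpy as np

def lr_at(step, total_steps, base_lr=1e-3, warmup_steps=500, min_lr=1e-6):
    """Linear warmup to base_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))

def label_smoothing_ce(logits, labels, smoothing=0.1):
    """Cross-entropy against smoothed targets: 1 - s on the true class plus
    s spread uniformly over all k classes."""
    n, k = logits.shape
    z = logits - logits.max(axis=1, keepdims=True)
    log_p = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    targets = np.full((n, k), smoothing / k)
    targets[np.arange(n), labels] += 1.0 - smoothing
    return -(targets * log_p).sum(axis=1).mean()

print(lr_at(0, 10_000), lr_at(500, 10_000), lr_at(10_000, 10_000))
```

Warmup avoids large, destabilizing updates to the pre-trained weights at the start of fine-tuning. Label smoothing discourages over-confident predictions on small labeled datasets.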

3 Business Applications

So far we have selected two existing internal businesses and are trying to optimize and upgrade them with our pre-trained models. They are full-scene style tagging of theme resources, and primary/secondary classification of PGC short videos. For theme-resource style tagging, our Tiny pre-trained model performs multi-label classification of theme styles. Compared with the original EfficientNet-based version, parameters and FLOPs increase only slightly, while tagging accuracy rises from 87.7% to 95%, a significant gain of 7.3 percentage points. For PGC short-video primary/secondary classification, the Base pre-trained model combined with BERT performs multimodal fusion classification.

Compared with the original method, short-video classification accuracy improved at both levels. Primary accuracy rose from 86.5% to 89.7% (+3.2 percentage points), and secondary accuracy rose from 61.6% to 75.4% (+13.8 percentage points). These results demonstrate the effectiveness and business value of our pre-trained model.
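The reported gains are absolute differences in percentage points. The short calculation below uses only the accuracies stated above and also shows the corresponding relative improvement over each baseline, which is useful when comparing tasks with very different starting accuracies.

```python
results = {
    "theme style tagging": (87.7, 95.0),
    "short video, primary class": (86.5, 89.7),
    "short video, secondary class": (61.6, 75.4),
}
gains = {}
for task, (before, after) in results.items():
    pp = after - before            # absolute gain in percentage points
    rel = 100 * pp / before        # relative gain over the baseline, in %
    gains[task] = (round(pp, 1), round(rel, 1))
    print(f"{task}: +{pp:.1f} pp ({rel:.1f}% relative)")
# theme style tagging: +7.3 pp (8.3% relative)
# short video, primary class: +3.2 pp (3.7% relative)
# short video, secondary class: +13.8 pp (22.4% relative)
```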

4 Summary

A pre-trained model is a deep learning network architecture plus weights trained on massive data, and it is a common way to improve algorithm performance on specific tasks. The key techniques of pre-training include network architecture design, self-supervised pre-training and supervised fine-tuning, each of which offers much to study. In addition, different business scenarios often require pre-trained models of different scales. Multiple model variants therefore need to be designed and pre-trained separately, so that pre-trained models can serve more businesses and deliver more value.

5 References

[1] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Advances in neural information processing systems, pages 5998–6008, 2017.

[2] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

[3] Mathilde Caron, Ishan Misra, Julien Mairal, Priya Goyal, Piotr Bojanowski, and Armand Joulin. Unsupervised learning of visual features by contrasting cluster assignments. arXiv preprint arXiv:2006.09882, 2020.

About the Author

Darren, Senior Algorithm Engineer at OPPO

He has worked on computer vision algorithms for many years and currently focuses on CV model architectures and training methods.

For more content, follow the [OPPO Digital Intelligence Technology] public account.
