foreword

MongoDB is a popular schemaless, in-memory database with a wide range of applications. As an important part of MongoDB, MongoDB Aggregate is mainly used for complex queries, statistical data, data analysis, etc. With the development of business, it will accumulate A large amount of data requires writing various complex query statements, which requires us to have a deep understanding of the principle of Aggregate, the core idea of Aggregate, and the performance analysis of Aggregate, and how to write more efficient query statements? How can I improve the performance of the query? The methods and methods require in-depth exploration. Next, let us do a comprehensive analysis of MongoDB Aggregate.

The development history of MongoDB Aggregate

The core idea of MongoDB Aggregation

MongoDB Aggregation Framework

MongoDB Aggregation Framework mainly uses the Aggregate Language to write Pipeline to provide data analysis and processing capabilities. It consists of two parts:

- The application defines the Pipeline through the Aggregate Api provided by the MongoDB driver and passes it to the Aggregate Runtime

- Aggregate Runtime accepts the request from the application, and then executes the Stage in the PipeLine on the stored data to query the data

The schematic diagram is as follows:

The application accepts the user's query request through the MQL API or Agg API provided by the MongoDB Driver, and then hands it to the MongoDB Database Runtime for execution. The Aggregation Runtime is a part of the Query Runtime. The Aggregation Runtime reuses some of the engine capabilities of the Query Runtime. It is reflected in the first stage $match of the Pipeline executed by the Aggregation Runtime. The first $match stage of the Aggregate Pipeline is processed by the query analysis engine in MQL.

MongoDB Aggregation Language

For beginners, the Aggregate Framework is difficult to understand, and its learning curve is relatively steep. You must overcome it to improve your Aggregate programming ability and disassemble complex business into each stage in the Aggregate Pipeline. , and can understand the responsibilities of each stage, then correctly combine the order of each stage, and finally complete the data processing through the way of Pipeline Stream, which is its core.

Aggregate Language idea:

- Database-oriented programming languages are not business-oriented programming languages

- Declarative programming languages Non-imperative programming languages

- functional programming languages non-procedural programming languages

Aggregate Language Features:

A functional programming language, Aggregate Pipeline declares a series of ordered Stages, and uses the data generated by the previous Stage as the input data of the next Stage. This behavior itself is the characteristic behavior of the function, and the Operator in each Stage It can also accept the return value of other Oprators as input parameters. The core of Aggregate programming is to split the business logic into stages, and then complete the data transformation through various built-in Operators in the Stage stage. Each Operator can be understood as a built-in Function.

Aggregate Language difficulties:

- verbose to write

- difficult to understand

- Because more of our development scenarios are more familiar procedural programming, but for Aggregate, you must think about problems with functional programming thinking, which is a change in development thinking.

Advantages of Aggregate Language:

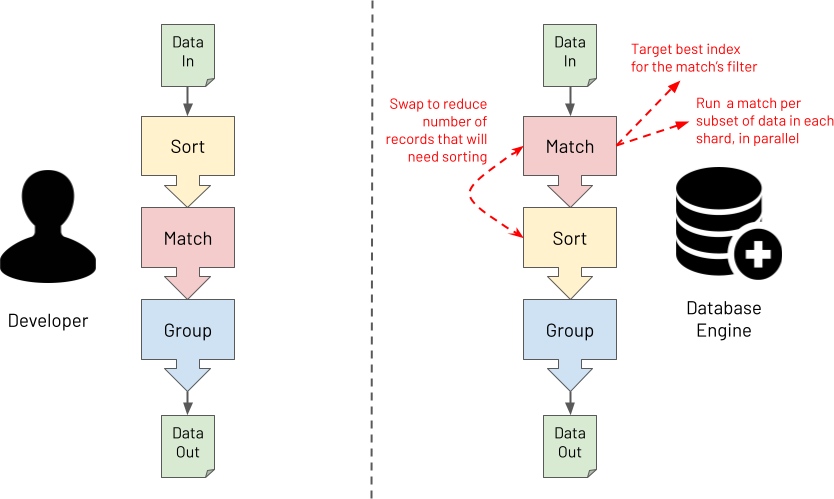

It is precisely because of the declarative and functional characteristics of Aggregate that it can flexibly handle various complex business scenarios. We only need to care about how to define what each Stage does? You don't need to care about how the Stage itself works, as long as you clearly declare each Stage, and then hand it over to the Aggregate Runtime, the Aggregate Runtime clearly knows how each Stage works, precisely because of this declarative feature , so the Aggregate runtime has the ability to re-optimize the order of Stages to better handle performance issues. At the same time, with this declarative Stage feature, we can also use Shards to execute different Stages concurrently, which can effectively reduce the response time. , to improve performance, as shown in the figure below which describes the optimization capabilities of Aggregate Runtime.

When to use Aggregation Framework

- Generate report, Sum, Average, Count

- Join different collections to query data

- Data Discovery and Data Mining

- Filter sensitive data

- Implement various BI Connectors

- machine learning, etc.

programming specification

It is precisely because of the complexity of Aggregate PipeLine and its difficult to maintain characteristics that we need to formulate some specifications to allow us to better constrain our code. The specifications may be different for different companies. The following is the author's unit Some specifications developed:

- Do not write another Stage at the beginning or end

- Add "," after each field in Stage

- Add a blank line to each Stage

- For complex Stages, write comments via //

- For unneeded Stages, and Stages that need to be disabled in test development, use /**/ to comment out

- Stage to abide by a single responsibility

Example:

// BAD

var pipeline = [

{"$unset": [

"_id",

"address"

]}, {"$match": {

"dateofbirth": {"$gte": ISODate("1970-01-01T00:00:00Z")}

}}//, {"$sort": {

// "dateofbirth": -1

//}}, {"$limit": 2}

];

// GOOD

var pipeline = [

{"$unset": [

"_id",

"address",

]},

// Only match people born on or after 1st January 1970

{"$match": {

"dateofbirth": {"$gte": ISODate("1970-01-01T00:00:00Z")},

}},

/*

{"$sort": {

"dateofbirth": -1,

}},

{"$limit": 2},

*/

];

// GOOD

var unsetStage = {

"$unset": [

"_id",

"address",

]};

var matchStage = {

"$match": {

"dateofbirth": {"$gte": ISODate("1970-01-01T00:00:00Z")},

}};

var sortStage = {

"$sort": {

"dateofbirth": -1,

}};

var limitStage = {"$limit": 2};

var pipeline = [

unsetStage,

matchStage,

sortStage,

limitStage,

];

We have finished talking about the design ideas and language features of Mongo Aggregate. Next, we will discuss how to write the Pipeline in Aggregate. Here are some guidelines for your reference as follows:

Pipeline Programming Guidelines

Embrace Combinations and Combination Techniques

Aggregate Pipeline contains some declarative and ordered Statements, which we call Stage. The complete output of one Stage will be used as the complete input of the next Stage. Each Stage has no influence on each other and exists independently. Stage This high degree of free composition and the cohesion of a single Stage fully satisfy the data processing of complex business scenarios, and can greatly increase the possibility of testing Stages, because they are all independent, for Aggregate For a complex Pipeline, we first need to divide it into clear Stages, and then conduct independent testing and development for each Stage, as shown below

In this way, even if it is complex business logic, you can split it into specific independent stages, and then debug, analyze, and observe what the data at each step looks like. For this combination, the declared characteristics , has some very significant advantages:

- It is very convenient to annotate and debug a Stage

- Can easily Copy, Paste to add a new Stage

- More clarity on the specific purpose of each Stage

- The built-in Operator provided by Mongo is called in the specific Stage, and the behavior of the data is controlled by logical expressions

Project Stage

In the MQL language, $Project specifies which fields to return and which fields are to be ignored. In Aggregate, specify those fields to exclude or return in $Project Stage

Notable disadvantages:

$Project is verbose and inflexible, if you want to add a new field in the $Project stage and keep the original field, you must write all the original fields

Notable advantages:

In the $Project Stage stage, flexibly define which fields to include and which fields to ignore

When to use $Project:

$Project is advantageous when you need to keep a few fields, for example:

// INPUT (a record from the source collection to be operated on by an aggregation)

{

_id: ObjectId("6044faa70b2c21f8705d8954"),

card_name: "Mrs. Jane A. Doe",

card_num: "1234567890123456",

card_expiry: "2023-08-31T23:59:59.736Z",

card_sec_code: "123",

card_provider_name: "Credit MasterCard Gold",

transaction_id: "eb1bd77836e8713656d9bf2debba8900",

transaction_date: ISODate("2021-01-13T09:32:07.000Z"),

transaction_curncy_code: "GBP",

transaction_amount: NumberDecimal("501.98"),

reported: true

}

// OUTPUT (a record in the results of the executed aggregation)

{

transaction_info: {

date: ISODate("2021-01-13T09:32:07.000Z"),

amount: NumberDecimal("501.98")

},

status: "REPORTED"

}

// BAD

[

{"$set": {

// Add some fields

"transaction_info.date": "$transaction_date",

"transaction_info.amount": "$transaction_amount",

"status": {"$cond": {"if": "$reported", "then": "REPORTED", "else": "UNREPORTED"}},

}},

{"$unset": [

// Remove _id field

"_id",

// Must name all other existing fields to be omitted

"card_name",

"card_num",

"card_expiry",

"card_sec_code",

"card_provider_name",

"transaction_id",

"transaction_date",

"transaction_curncy_code",

"transaction_amount",

"reported",

]},

]

// GOOD

[

{"$project": {

// Add some fields

"transaction_info.date": "$transaction_date",

"transaction_info.amount": "$transaction_amount",

"status": {"$cond": {"if": "$reported", "then": "REPORTED", "else": "UNREPORTED"}},

// Remove _id field

"_id": 0,

}},

]

When to use $set, $unset

$set, $unset are newly added functions in MongoDB 4.2. When you want to keep more fields in Stage, and want to add, modify, or remove the smallest set of fields, at this time $set, $unset are Most used, for example:

// INPUT (a record from the source collection to be operated on by an aggregation)

{

_id: ObjectId("6044faa70b2c21f8705d8954"),

card_name: "Mrs. Jane A. Doe",

card_num: "1234567890123456",

card_expiry: "2023-08-31T23:59:59.736Z",

card_sec_code: "123",

card_provider_name: "Credit MasterCard Gold",

transaction_id: "eb1bd77836e8713656d9bf2debba8900",

transaction_date: ISODate("2021-01-13T09:32:07.000Z"),

transaction_curncy_code: "GBP",

transaction_amount: NumberDecimal("501.98"),

reported: true

}

// OUTPUT (a record in the results of the executed aggregation)

{

card_name: "Mrs. Jane A. Doe",

card_num: "1234567890123456",

card_expiry: ISODate("2023-08-31T23:59:59.736Z"), // Field type converted from text

card_sec_code: "123",

card_provider_name: "Credit MasterCard Gold",

transaction_id: "eb1bd77836e8713656d9bf2debba8900",

transaction_date: ISODate("2021-01-13T09:32:07.000Z"),

transaction_curncy_code: "GBP",

transaction_amount: NumberDecimal("501.98"),

reported: true,

card_type: "CREDIT" // New added literal value field

}

// BAD

[

{"$project": {

// Modify a field + add a new field

"card_expiry": {"$dateFromString": {"dateString": "$card_expiry"}},

"card_type": "CREDIT",

// Must now name all the other fields for those fields to be retained

"card_name": 1,

"card_num": 1,

"card_sec_code": 1,

"card_provider_name": 1,

"transaction_id": 1,

"transaction_date": 1,

"transaction_curncy_code": 1,

"transaction_amount": 1,

"reported": 1,

// Remove _id field

"_id": 0,

}},

]

// GOOD

[

{"$set": {

// Modified + new field

"card_expiry": {"$dateFromString": {"dateString": "$card_expiry"}},

"card_type": "CREDIT",

}},

{"$unset": [

// Remove _id field

"_id",

]},

]

When to use $AddFields

$AddFields is a new function added in 3.4. It mainly wants to increase the ability to modify data on the basis of $Porject. It has many similar capabilities to $set, but it can only add a new multi-field and cannot be used to modify , Under normal circumstances, we do not recommend using it, which may be a product of Mongo's overdue period.

After understanding PipeLine and the execution order of Stages in PipeLine, how do we specifically write a Stage? Which Expression is its core.

What is Expression?

Expression is the core capability of Aggrgate Pipeline Stage. During the development process, we generally check the official Mongo documents, find corresponding examples, and then copy and modify them. It lacks in-depth thinking, but if you want to use Aggregate Expression proficiently, it is necessary to Deep understanding of Expression.

Aggregate Expression mainly includes 3 aspects:

- Operator-Operator, prefixed with $, accesses the key of an Object, for example: $arrayElementAt , $cond, $dateToString

- Field Path, the embedded path to access an object is prefixed with $, for example:

$account.sortcode,$addresses.address.city - Variables, prefixed with $$ when accessed

3.1 System variables are mainly derived from the system environment rather than a specific operation data record, for example: "$$NOW", "$$CLUSTER_TIME"

3.2 Marking system variables, mainly marking the value of data processing, the data behavior when retransmitted to the next Stage, for example: "$$ROOT", "$$REMOVE", "$$PRUNE"

3.3 User variables, mainly to store user-defined variables, variables defined by $let, and temporary variables defined in the middle of $Lookup, $Map, and

You can easily process various logic and calculate data by combining these 3 different categories of variables, for example:

"customer_info": {"$cond": {

"if": {"$eq": ["$customer_info.category", "SENSITIVE"]},

"then": "$$REMOVE",

"else": "$customer_info",

}}

What is the return value of Expression?

The return value of the expression is the data type of Json/Bson

- a Number (including integer, long, float, double, decimal128)

- a String (UTF-8)

- a Boolean

- a DateTime (UTC)

- an Array

- an Object

A specific expression can return several specified data types, such as

- The return value type of $contact is string | null , $ROOT can only return the root document involved in the Pipeline Stage

- For Field Path, its return value type is different, mainly depends on the data structure of the document you input, if Address is an object, the return value is Object, if it is String, the return value is String, in short Say, for Field Path, or user-defined variable, its return value type depends on the context of the running environment, which is very critical, this is very similar to Javascript, it is a weak constraint.

- For Operator's Expression, it can accept other Operator Expression return values as input parameters, which is also an important manifestation of functional programming

{"$dayOfWeek": ISODate("2021-04-24T00:00:00Z")}

{"$dayOfWeek": "$person_details.data_of_birth"}

{"$dayOfWeek": "$$NOW"}

{"$dayOfWeek": {"$dateFromParts": {"year" : 2021, "month" : 4, "day": 24}}} **Where $limit, $skip, $sort, $count, $out Stage cannot use expressions.

And you need to pay special attention to the problem that using $expr in $Match may not hit the index. This depends on the specified Operator and the version of Mongo you are using. The following is using $expr in $match:

[

{ _id: 1, width: 2, height: 8 },

{ _id: 2, width: 3, height: 4 },

{ _id: 3, width: 20, height: 1 }

]

var pipeline = [

{"$match": {

"$expr": {"$gt": [{"$multiply": ["$width", "$height"]}, 12]},

}},

];

Expression Advanced Techniques for Handling Arrays

For MongoDB, the embedded array itself is its core capability. It is different from relational databases. Its characteristic is to treat the entire original data as a document, which is more in line with the real-world data description. Such a storage There are too many documents of data types. It is very important for developers to get the data they want. Agrregate provides Expression to operate on Array and enhance this ability. In processing array elements, we are more important. The change of thinking mode, from the original procedural thinking mode to a functional thinking mode to think about problems, so as to understand and deal with complex business requirements, and also more in line with the thinking logic of Mongo Agreage.

"IF-ELSE" conditional expression

let order = {"product" : "WizzyWidget", "price": 25.99, "qty": 8};

// Procedural style JavaScript

if (order.qty > 5) {

order.cost = order.price * order.qty * 0.9;

} else {

order.cost = order.price * order.qty;

}

db.customer_orders.insertOne(order);

// Aggregate

var pipeline = [

{"$set": {

"cost": {

"$cond": {

"if": {"$gte": ["$qty", 5 ]},

"then": {"$multiply": ["$price", "$qty", 0.9]},

"else": {"$multiply": ["$price", "$qty"]},

}

},

}},

];

db.customer_orders.aggregate(pipeline);

// Functional style JavaScript

order.cost = (

(order.qty > 5) ?

(order.price * order.qty * 0.9) :

(order.price * order.qty)

);

// output

{product: 'WizzyWidget', qty: 8, price: 25.99, cost: 187.128}

"FOR-EACH" iterates through each element in an array

let order = {

"orderId": "AB12345",

"products": ["Laptop", "Kettle", "Phone", "Microwave"]

};

// Procedural style JavaScript

for (let pos in order.products) {

order.products[pos] = order.products[pos].toUpperCase();

}

db.orders.insertOne(order);

// Aggregate

var pipeline = [

{"$set": {

"products": {

"$map": {

"input": "$products",

"as": "product",

"in": {"$toUpper": "$$product"}

}

}

}}

];

db.orders.aggregate(pipeline);

// Functional style JavaScript

order.products = order.products.map(

product => {

return product.toUpperCase();

}

);

// Output

{orderId: 'AB12345', products: ['LAPTOP', 'KETTLE', 'PHONE', 'MICROWAVE']}

"FOR-EACH" calculates the value after the elements in the array are accumulated

let order = {

"orderId": "AB12345",

"products": ["Laptop", "Kettle", "Phone", "Microwave"]

};

order.productList = "";

// Procedural style JavaScript

for (const pos in order.products) {

order.productList += order.products[pos] + "; ";

}

db.orders.insertOne(order);

// Aggregate

var pipeline = [

{"$set": {

"productList": {

"$reduce": {

"input": "$products",

"initialValue": "",

"in": {

"$concat": ["$$value", "$$this", "; "]

}

}

}

}}

];

db.orders.aggregate(pipeline);

// Functional style JavaScript

order.productList = order.products.reduce(

(previousValue, currentValue) => {

return previousValue + currentValue + "; ";

},

""

);

// output

{

orderId: 'AB12345',

products: [ 'Laptop', 'Kettle', 'Phone', 'Microwave' ],

productList: 'Laptop; Kettle; Phone; Microwave; '

}

"FOR-EACH", loop through the array to find the position of the specific element in the array

// 找出room_sizes 数组中第一个面积大于60M 的元素所在的数组中的顺序

db.buildings.insertOne({

"building": "WestAnnex-1",

"room_sizes": [

{"width": 9, "length": 5},

{"width": 8, "length": 7},

{"width": 7, "length": 9},

{"width": 9, "length": 8},

]

});

// Aggregate

var pipeline = [

{"$set": {

"firstLargeEnoughRoomArrayIndex": {

"$reduce": {

"input": {"$range": [0, {"$size": "$room_sizes"}]},

"initialValue": -1,

"in": {

"$cond": {

"if": {

"$and": [

// IF ALREADY FOUND DON'T CONSIDER SUBSEQUENT ELEMENTS

{"$lt": ["$$value", 0]},

// IF WIDTH x LENGTH > 60

{"$gt": [

{"$multiply": [

{"$getField": {"input": {"$arrayElemAt": ["$room_sizes", "$$this"]}, "field": "width"}},

{"$getField": {"input": {"$arrayElemAt": ["$room_sizes", "$$this"]}, "field": "length"}},

]},

60

]}

]

},

// IF ROOM SIZE IS BIG ENOUGH CAPTURE ITS ARRAY POSITION

"then": "$$this",

// IF ROOM SIZE NOT BIG ENOUGH RETAIN EXISTING VALUE (-1)

"else": "$$value"

}

}

}

}

}}

];

db.buildings.aggregate(pipeline);

// output

{

building: 'WestAnnex-1',

room_sizes: [

{ width: 9, length: 5 },

{ width: 8, length: 7 },

{ width: 7, length: 9 },

{ width: 9, length: 8 }

],

firstLargeEnoughRoomArrayIndex: 2

}

// summary

1. 找到元素之后不会中断,如果是大数组,可能会有性能上面的损失

Differences between $map and $reduce

// sourc data

db.deviceReadings.insertOne({

"device": "A1",

"readings": [27, 282, 38, 22, 187]

});

// output

{

device: 'A1',

readings: [ 27, 282, 38, 22, 187 ],

deviceReadings: [ 'A1:27', 'A1:282', 'A1:38', 'A1:22', 'A1:187' ]

}

// $map

var pipeline = [

{"$set": {

"deviceReadings": {

"$map": {

"input": "$readings",

"as": "reading",

"in": {

"$concat": ["$device", ":", {"$toString": "$$reading"}]

}

}

}

}}

];

db.deviceReadings.aggregate(pipeline);

// $reduce

var pipeline = [

{"$set": {

"deviceReadings": {

"$reduce": {

"input": "$readings",

"initialValue": [],

"in": {

"$concatArrays": [

"$$value",

[{"$concat": ["$device", ":", {"$toString": "$$this"}]}]

]

}

}

}

}}

];

db.deviceReadings.aggregate(pipeline);

// output

{

device: 'A1',

readings: [ 27, 282, 38, 22, 187 ],

deviceReadings: [ 'A1-0:27', 'A1-1:282', 'A1-2:38', 'A1-3:22', 'A1-4:187' ]

}

// $reduce

var pipeline = [

{"$set": {

"deviceReadings": {

"$reduce": {

"input": {"$range": [0, {"$size": "$readings"}]},

"initialValue": [],

"in": {

"$concatArrays": [

"$$value",

[{"$concat": [

"$device",

"-",

{"$toString": "$$this"},

":",

{"$toString": {"$arrayElemAt": ["$readings", "$$this"]}},

]}]

]

}

}

}

}}

];

db.deviceReadings.aggregate(pipeline);

$map adds a new field to each object in the array

db.orders.insertOne({

"custid": "jdoe@acme.com",

"items": [

{

"product" : "WizzyWidget",

"unitPrice": 25.99,

"qty": 8,

},

{

"product" : "HighEndGizmo",

"unitPrice": 33.24,

"qty": 3,

}

]

});

// aggregate

var pipeline = [

{"$set": {

"items": {

"$map": {

"input": "$items",

"as": "item",

"in": {

"product": "$$item.product",

"unitPrice": "$$item.unitPrice",

"qty": "$$item.qty",

"cost": {"$multiply": ["$$item.unitPrice", "$$item.qty"]}},

}

}

}

}

];

db.orders.aggregate(pipeline);

// output

{

custid: 'jdoe@acme.com',

items: [

{

product: 'WizzyWidget',

unitPrice: 25.99,

qty: 8,

cost: 187.128

},

{

product: 'HighEndGizmo',

unitPrice: 33.24,

qty: 3,

cost: 99.72

}

]

}

// 缺点和$project 类似,需要你指定输出的字段,如果字段特别多就会特别的繁琐

// 改进的方式

var pipeline = [

{"$set": {

"items": {

"$map": {

"input": "$items",

"as": "item",

"in": {

"$mergeObjects": [

"$$item",

{"cost": {"$multiply": ["$$item.unitPrice", "$$item.qty"]}},

]

}

}

}

}}

];

db.orders.aggregate(pipeline);

// 等同的其它写法

var pipeline = [

{"$set": {

"items": {

"$map": {

"input": "$items",

"as": "item",

"in": {

"$arrayToObject": {

"$concatArrays": [

{"$objectToArray": "$$item"},

[{

"k": "cost",

"v": {"$multiply": ["$$item.unitPrice", "$$item.qty"]},

}]

]

}

}

}

}}

}

];

db.orders.aggregate(pipeline);

// 动态组合字段

var pipeline = [

{"$set": {

"items": {

"$map": {

"input": "$items",

"as": "item",

"in": {

"$arrayToObject": {

"$concatArrays": [

{"$objectToArray": "$$item"},

[{

"k": {"$concat": ["costFor", "$$item.product"]},

"v": {"$multiply": ["$$item.unitPrice", "$$item.qty"]},

}]

]

}

}

}

}}

}

];

db.orders.aggregate(pipeline);

// output

{

custid: 'jdoe@acme.com',

items: [

{

product: 'WizzyWidget',

unitPrice: 25.99,

qty: 8,

costForWizzyWidget: 207.92

},

{

product: 'HighEndGizmo',

unitPrice: 33.24,

qty: 3,

costForHighEndGizmo: 99.72

}

]

}

reflection returns the data type of each element, grouped

db.customers.insertMany([

{

"_id": ObjectId('6064381b7aa89666258201fd'),

"email": 'elsie_smith@myemail.com',

"dateOfBirth": ISODate('1991-05-30T08:35:52.000Z'),

"accNnumber": 123456,

"balance": NumberDecimal("9.99"),

"address": {

"firstLine": "1 High Street",

"city": "Newtown",

"postcode": "NW1 1AB",

},

"telNums": ["07664883721", "01027483028"],

"optedOutOfMarketing": true,

},

{

"_id": ObjectId('734947394bb73732923293ed'),

"email": 'jon.jones@coolemail.com',

"dateOfBirth": ISODate('1993-07-11T22:01:47.000Z'),

"accNnumber": 567890,

"balance": NumberDecimal("299.22"),

"telNums": "07836226281",

"contactPrefernece": "email",

},

]);

// aggregate

var pipeline = [

{"$project": {

"_id": 0,

"schema": {

"$map": {

"input": {"$objectToArray": "$$ROOT"},

"as": "field",

"in": {

"fieldname": "$$field.k",

"type": {"$type": "$$field.v"},

}

}

}

}}

];

db.customers.aggregate(pipeline);

// output

{

schema: [

{fieldname: '_id', type: 'objectId'},

{fieldname: 'email', type: 'string'},

{fieldname: 'dateOfBirth', type: 'date'},

{fieldname: 'accNnumber', type: 'int'},

{fieldname: 'balance', type: 'decimal'},

{fieldname: 'address', type: 'object'},

{fieldname: 'telNums', type: 'array'},

{fieldname: 'optedOutOfMarketing', type: 'bool'}

]

},

{

schema: [

{fieldname: '_id', type: 'objectId'},

{fieldname: 'email', type: 'string'},

{fieldname: 'dateOfBirth', type: 'date'},

{fieldname: 'accNnumber', type: 'int'},

{fieldname: 'balance', type: 'decimal'},

{fieldname: 'telNums', type: 'string'},

{fieldname: 'contactPrefernece', type: 'string'}

}

// group

var pipeline = [

{"$project": {

"_id": 0,

"schema": {

"$map": {

"input": {"$objectToArray": "$$ROOT"},

"as": "field",

"in": {

"fieldname": "$$field.k",

"type": {"$type": "$$field.v"},

}

}

}

}},

{"$unwind": "$schema"},

{"$group": {

"_id": "$schema.fieldname",

"types": {"$addToSet": "$schema.type"},

}},

{"$set": {

"fieldname": "$_id",

"_id": "$$REMOVE",

}},

];

db.customers.aggregate(pipeline);

// output

{fieldname: '_id', types: ['objectId']},

{fieldname: 'address', types: ['object']},

{fieldname: 'email', types: ['string']},

{fieldname: 'telNums', types: ['string', 'array']},

{fieldname: 'contactPrefernece', types: ['string']},

{fieldname: 'accNnumber', types: ['int']},

{fieldname: 'balance', types: ['decimal']},

{fieldname: 'dateOfBirth', types: ['date']},

{fieldname: 'optedOutOfMarketing', types: ['bool']}

After we write the Aggregate Pipeline, we need to perform performance testing on it, so that we can analyze the performance of the Aggregate Pipeline through the Explain plan:

Explain Plans

For MQL query statements, you can easily view the execution process through the query plan, view the behavior of the index, adjust the defined query through the feedback of the query plan, and adjust the data model accordingly. The same is true for the Aggregate Pipeline. , but Aggregate Pipeline is relatively more complex because it has complex business logic. By analyzing the query plan, you can locate performance bottlenecks. MongoDb Aggrgate Runtime has its own query optimization logic, but it is preferred to guarantee It is the Function Behavior that is correct. For some complex logical calculations, it is impossible to know how to optimize it. It is because of this shortcoming that we need to analyze the query plan to clarify the logic and adjust the corresponding performance.

- View execution plan

db.coll.explain().aggregate([{"$match": {"name": "Jo"}}]);

// QueryPlanner verbosity (default if no verbosity parameter provided)

db.coll.explain("queryPlanner").aggregate(pipeline);

// ExecutionStats verbosity

db.coll.explain("executionStats").aggregate(pipeline);

// AllPlansExecution verbosity

db.coll.explain("allPlansExecution").aggregate(pipeline);

- Analyze query plans

{

"customer_id": "elise_smith@myemail.com",

"orders": [

{

"orderdate": ISODate("2020-01-13T09:32:07Z"),

"product_type": "GARDEN",

"value": NumberDecimal("99.99")

},

{

"orderdate": ISODate("2020-05-30T08:35:52Z"),

"product_type": "ELECTRONICS",

"value": NumberDecimal("231.43")

}

]

}

// pipeline

var pipeline = [

// Unpack each order from customer orders array as a new separate record

{"$unwind": {

"path": "$orders",

}},

// Match on only one customer

{"$match": {

"customer_id": "tonijones@myemail.com",

}},

// Sort customer's purchases by most expensive first

{"$sort" : {

"orders.value" : -1,

}},

// Show only the top 3 most expensive purchases

{"$limit" : 3},

// Use the order's value as a top level field

{"$set": {

"order_value": "$orders.value",

}},

// Drop the document's id and orders sub-document from the results

{"$unset" : [

"_id",

"orders",

]},

];

// output

[

{

customer_id: 'tonijones@myemail.com',

order_value: NumberDecimal("1024.89")

},

{

customer_id: 'tonijones@myemail.com',

order_value: NumberDecimal("187.99")

},

{

customer_id: 'tonijones@myemail.com',

order_value: NumberDecimal("4.59")

}

]

// execute query plan

db.customer_orders.explain("queryPlanner").aggregate(pipeline);

stages: [

{

'$cursor': {

queryPlanner: {

parsedQuery: { customer_id: { '$eq': 'tonijones@myemail.com' } },

winningPlan: {

stage: 'FETCH',

inputStage: {

stage: 'IXSCAN',

keyPattern: { customer_id: 1 },

indexName: 'customer_id_1',

direction: 'forward',

indexBounds: {

customer_id: [

'["tonijones@myemail.com", "tonijones@myemail.com"]'

]

}

}

},

}

}

},

{ '$unwind': { path: '$orders' } },

{ '$sort': { sortKey: { 'orders.value': -1 }, limit: 3 } },

{ '$set': { order_value: '$orders.value' } },

{ '$project': { _id: false, orders: false } }

]

//executionStats

db.customer_orders.explain("executionStats").aggregate(pipeline);

executionStats: {

nReturned: 1,

totalKeysExamined: 1,

totalDocsExamined: 1,

executionStages: {

stage: 'FETCH',

nReturned: 1,

works: 2,

advanced: 1,

docsExamined: 1,

inputStage: {

stage: 'IXSCAN',

nReturned: 1,

works: 2,

advanced: 1,

keyPattern: { customer_id: 1 },

indexName: 'customer_id_1',

direction: 'forward',

indexBounds: {

customer_id: [

'["tonijones@myemail.com", "tonijones@myemail.com"]'

]

},

keysExamined: 1,

}

}

}

In order to further improve the performance of our Aggregate Pipeline, we need to clearly understand the principle of the Pipeline:

Pipe flow and blockage

When Mongo Aggregate Runtime starts to execute the Pipeline, it loads the first batch of data through the Aggregate Init Query Cursor, and then hands it over to the first Stage, which is directly handed over to the second Stage after processing, and so on. And the latter stage does not need to wait for all the data of the previous stage to be loaded, it will be directly handed over to the next stage for processing, we call it Stream processing, but $Sort, $Group are blocking, that is to say, these 2 In this stage, the previous Stage must load all the qualified data into the memory before sorting or grouping, which consumes a lot of memory of the database server, as shown in the following figure:

$Sort memory consumption and improvements

Since $Sort is blocking, it is required to load all eligible data into memory before sorting, so if the amount of data is too large, it will cause database memory overflow, and the memory usage constrained by Pipeline Stage It is 100MB, if it exceeds this, an error will be reported. Only by setting the parameter, "allowDiskUse:true", to break through this memory limit and load data to the maximum, but as the amount of data increases, it will become slower and slower. This behavior is difficult to avoid to a certain extent, but there are some principles that can help us improve performance

- Sort by index

If $Sort does not depend on the previous $Unwind, $Project, $Group Stage, we can move $Sort to the closest place to the first Stage, which is equal to when we load the data, it will be loaded according to the index, instead of Computed in memory, the guidelines are as follows:

- Use with Limit at the same time to limit the amount of data

- Reduce the amount of sorted data. If there is a complex query and $Sort cannot hit the index, try to move $Sort to the last Stage of the entire Pipeline for sorting

$Group memory consumption and improvements

The behavior of $Group and $Sort is actually the same, because they are both blocking, we can't group in batches, because the scene of grouping is used to count accumulated values, such as summing, averaging, etc. , to improve performance, the guidelines are as follows:

- Avoid $Unwind , $ReGroup to deal with elements of array

- The responsibility of $Group is more single, just processing some accumulated values

[

{

customer_id: 'elise_smith@myemail.com',

orderdate: ISODate('2020-05-30T08:35:52.000Z'),

value: NumberDecimal('9999')

}

{

customer_id: 'elise_smith@myemail.com',

orderdate: ISODate('2020-01-13T09:32:07.000Z'),

value: NumberDecimal('10101')

}

]

// SUBOPTIMAL

var pipeline = [

{"$set": {

"value_dollars": {"$multiply": [0.01, "$value"]}, // Converts cents to dollars

}},

{"$unset": [

"_id",

"value",

]},

{"$match": {

"value_dollars": {"$gte": 100}, // Peforms a dollar check

}},

];

// OPTIMAL

var pipeline = [

{"$set": {

"value_dollars": {"$multiply": [0.01, "$value"]},

}},

{"$match": { // Moved to before the $unset

"value": {"$gte": 10000}, // Changed to perform a cents check

}},

{"$unset": [

"_id",

"value",

]},

];

//

Avoid $Unwind , $ReGroup to deal with elements of array

// source collection

[

{

_id: 1197372932325,

products: [

{

prod_id: 'abc12345',

name: 'Asus Laptop',

price: NumberDecimal('429.99')

}

]

},

{

_id: 4433997244387,

products: [

{

prod_id: 'def45678',

name: 'Karcher Hose Set',

price: NumberDecimal('23.43')

},

{

prod_id: 'jkl77336',

name: 'Picky Pencil Sharpener',

price: NumberDecimal('0.67')

},

{

prod_id: 'xyz11228',

name: 'Russell Hobbs Chrome Kettle',

price: NumberDecimal('15.76')

}

]

}

]

// SUBOPTIMAL

var pipeline = [

// Unpack each product from the each order's product as a new separate record

{"$unwind": {

"path": "$products",

}},

// Match only products valued over 15.00

{"$match": {

"products.price": {

"$gt": NumberDecimal("15.00"),

},

}},

// Group by product type

{"$group": {

"_id": "$_id",

"products": {"$push": "$products"},

}},

];

// OPTIMAL

var pipeline = [

// Filter out products valued 15.00 or less

{"$set": {

"products": {

"$filter": {

"input": "$products",

"as": "product",

"cond": {"$gt": ["$$product.price", NumberDecimal("15.00")]},

}

},

}},

];

// output

[

{

_id: 1197372932325,

products: [

{

prod_id: 'abc12345',

name: 'Asus Laptop',

price: NumberDecimal('429.99')

}

]

},

{

_id: 4433997244387,

products: [

{

prod_id: 'def45678',

name: 'Karcher Hose Set',

price: NumberDecimal('23.43')

},

{

prod_id: 'xyz11228',

name: 'Russell Hobbs Chrome Kettle',

price: NumberDecimal('15.76')

}

]

}

]

Use more Filters early in the Pipeline

Explore if it's possible to make $match all hit the index

[

{

customer_id: 'elise_smith@myemail.com',

orderdate: ISODate('2020-05-30T08:35:52.000Z'),

value: NumberDecimal('9999')

}

{

customer_id: 'elise_smith@myemail.com',

orderdate: ISODate('2020-01-13T09:32:07.000Z'),

value: NumberDecimal('10101')

}

]

// SUBOPTIMAL

var pipeline = [

{"$set": {

"value_dollars": {"$multiply": [0.01, "$value"]}, // Converts cents to dollars

}},

{"$unset": [

"_id",

"value",

]},

{"$match": {

"value_dollars": {"$gte": 100}, // Peforms a dollar check

}},

];

// OPTIMAL

var pipeline = [

{"$set": {

"value_dollars": {"$multiply": [0.01, "$value"]},

}},

{"$match": { // Moved to before the $unset

"value": {"$gte": 10000}, // Changed to perform a cents check

}},

{"$unset": [

"_id",

"value",

]},

];

Explore if it's possible to make the $match part hit the index

The field you want to query is not the native field of the database. At this time, you may need to add a $Match to match the native field and hit the index to filter the data.

[

{

date_of_birth: ISODate('2019-05-30T08:35:52.000Z'),

}

{

date_of_birth: ISODate('2019-05-31T08:35:52.000Z'),

}

{

date_of_birth: ISODate('2019-06-01T08:35:52.000Z'),

}

]

由于出生日期是个敏感字段,我们需要加一个随机数来脱敏,我们需要用masked_date来代替,(0-7)

masked_date > 2019-06-05

// OPTIMAL

var pipeline = [

// extra $match

{"$match": {

"date_of_birth": {"$gt": 2019-05-30 },

}},

{"$match": {

"masked_date": {"$gt": 2019-06-05},

}},

];

If your Aggregate relies on the intermediate fields of the calculation, you should add extra $match as much as possible at this time to get as little data as possible.

Summarize

So far, the content related to MongoDB Aggregate has been introduced. In-depth understanding of the principle of MongoDB Aggregate is very helpful for us to handle complex business queries and maintain high performance. If you have any questions, please feel free to communicate in the comment area. If there is anything that needs to be corrected, you are also welcome to point it out. I hope this article can help you better understand MongoDB Aggregate.

Finally, I recommend our intelligent R&D management tool PingCode to everyone.

About PingCode

PingCode is an intelligent R&D management tool created by the domestic veteran SaaS manufacturer Worktile. It has launched Agile (agile development), Testhub (test management), Wiki (knowledge base), Plan (programme), Goals (goal) around the needs of enterprise R&D management. Management), Flow (automation management), Access (directory management) seven sub-products and the application market, which realize the coverage of the whole process of R&D management such as projects, tasks, requirements, defects, iteration planning, testing, and target management, as well as code hosting. Tools, CI/CD pipelines, automated testing and many other mainstream development tools are connected.

Since its official release, there have been more than 13 well-known companies such as Kugou Music, SenseTime, Dianxing Information, 51 Social Security, Wanguo Data, Golden Eagle Cartoon, UFIDA, Guoqi Intelligent Control, Wisdom Teeth Customer Service, and Yikuaibao. Many companies in the industry choose PingCode to implement R&D management.

**粗体** _斜体_ [链接](http://example.com) `代码` - 列表 > 引用。你还可以使用@来通知其他用户。