Author: Shi Jianping (Chu Yi)

Previous articles: "Taobao Widgets: A Brand-New Open Card Technology!" and "The Large-Scale Application of Taobao Widgets in the 2021 Double Eleven"

This article explains, from a technical perspective, how Canvas is rendered inside widgets.

Before getting into the main text, we need to explain what a [widget] is. A widget is Taobao's open solution at the module/card level. Built on mini programs, it provides private-domain scenarios with standard and consistent capabilities for production, opening, and operation. Widgets come in a variety of business forms, such as product cards, benefit cards, and interactive cards. Widgets developed by ISVs can be deployed at very low cost to business scenarios such as stores, product details, and subscriptions, which greatly improves the efficiency of operation and distribution.

From the on-device technical perspective, a widget is first and foremost a business container, characterized by DSL standardization, cross-platform rendering, and cross-scenario circulation:

- DSL standardization means that widgets are fully compatible with the mini program DSL (and not only the DSL, but also the atomic API capabilities, the production pipeline, and so on), so developers can get started quickly without learning anything new;

- Cross-platform rendering, as the name suggests, means that the widget kernel (based on weex2.0) renders identical results on different operating systems such as Android and iOS through a Flutter-like self-drawing scheme, so developers do not need to worry about compatibility issues;

- Finally, cross-scenario circulation means that the widget container can be "embedded" into business containers built on other technology stacks, such as Native, WebView, and mini programs. This shields developers from the differences between underlying containers and achieves "develop once, run in many places".

As it happens, the technical solution for Canvas in widgets has much in common with the solution for embedding the widget container into other business containers, so the rest of this article focuses on Canvas rendering.

Principles revealed

Device-side overall technical architecture

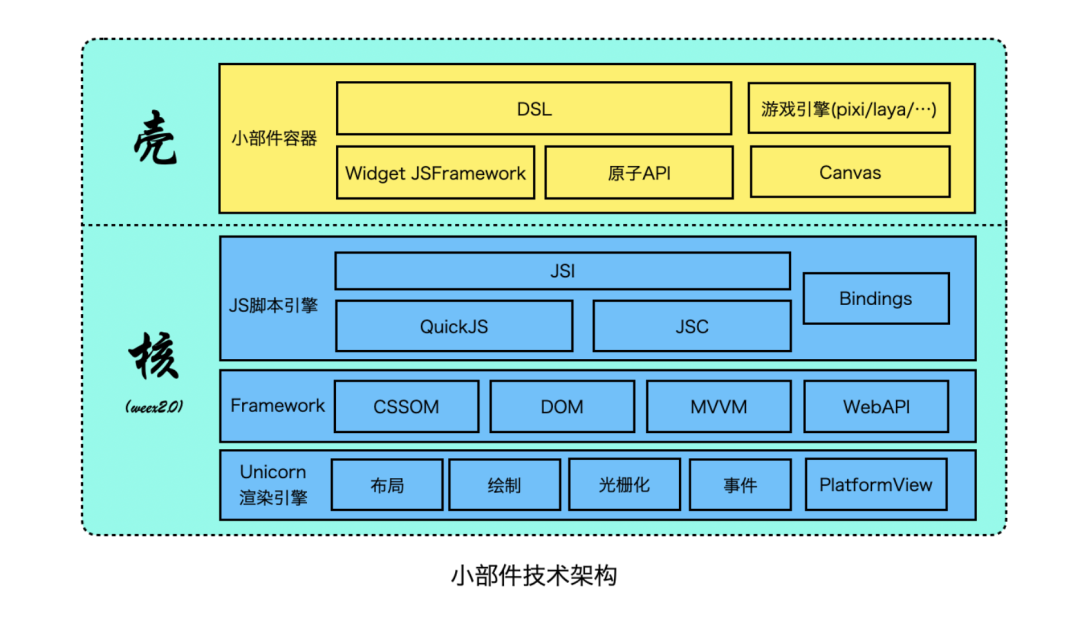

The architecture diagram shows the overall on-device technical architecture of widgets. At a macro level, it can be divided into two layers: the "shell" and the "core".

The "shell" is the widget container, which mainly includes the DSL, the widget JSFramework, atomic APIs, and extension modules such as Canvas.

The "core" is the widget kernel, based on the new weex2.0. Weex1.0 used an RN-like native rendering scheme, while weex2.0 upgraded to a Flutter-like self-drawing scheme. Weex2.0 therefore takes on the core responsibilities of widget JS execution, rendering, events, and so on, and is subdivided into three modules: the JS script engine, the Framework, and the rendering engine.

The JS engine is the lightweight QuickJS on Android and JavaScriptCore on iOS, and bindings can be written independently of the script engine through JSI. The Framework layer provides CSSOM and DOM capabilities consistent with browsers, a C++ MVVM framework, and some Web APIs (Console, setTimeout, ...). Finally, the rendering engine, internally called Unicorn, provides rendering capabilities such as layout, painting, compositing, and rasterization. Both the Framework and the rendering engine are written in C++ on top of a platform abstraction, which makes cross-platform support easier.
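To make "bindings independent of the script engine" concrete, here is a minimal Python sketch of the idea. `ScriptRuntime`, the two fake runtimes, and `install_console_binding` are hypothetical stand-ins invented for illustration, not the real JSI interface or the QuickJS/JavaScriptCore APIs: the point is only that the binding is written once against an abstract runtime and reused unchanged on both engines.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List, Tuple


class ScriptRuntime(ABC):
    """Hypothetical engine-neutral interface; bindings only ever talk to this."""

    @abstractmethod
    def set_global_function(self, name: str, fn: Callable[..., object]) -> None: ...

    @abstractmethod
    def call_global(self, name: str, *args: object) -> object: ...


class FakeQuickJSRuntime(ScriptRuntime):
    """Stand-in for the Android engine; not a real QuickJS wrapper."""

    def __init__(self) -> None:
        self._globals: Dict[str, Callable[..., object]] = {}

    def set_global_function(self, name: str, fn: Callable[..., object]) -> None:
        self._globals[name] = fn

    def call_global(self, name: str, *args: object) -> object:
        return self._globals[name](*args)


class FakeJSCRuntime(ScriptRuntime):
    """Stand-in for the iOS engine; stores globals differently on purpose."""

    def __init__(self) -> None:
        self._table: List[Tuple[str, Callable[..., object]]] = []

    def set_global_function(self, name: str, fn: Callable[..., object]) -> None:
        self._table.append((name, fn))

    def call_global(self, name: str, *args: object) -> object:
        for key, fn in reversed(self._table):  # latest definition wins
            if key == name:
                return fn(*args)
        raise KeyError(name)


def install_console_binding(runtime: ScriptRuntime, sink: List[str]) -> None:
    """Written once against ScriptRuntime and reused unchanged on every engine."""
    runtime.set_global_function("console_log", lambda *args: sink.append(" ".join(map(str, args))))


logs: List[str] = []
for runtime in (FakeQuickJSRuntime(), FakeJSCRuntime()):
    install_console_binding(runtime, logs)
    runtime.call_global("console_log", "hello", 42)

print(logs)  # ['hello 42', 'hello 42']
```

The design choice being illustrated is the indirection itself: swapping the engine per platform only requires a new `ScriptRuntime` implementation, not new bindings.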

It is worth mentioning that the Unicorn rendering engine has a built-in PlatformView capability, which allows another Surface to be embedded on top of the Surface that Weex renders to. The content of that Surface is provided entirely by the PlatformView developer. This extension capability lets components such as Camera and Video be integrated at low cost, and Canvas also relies on it to quickly migrate the mini program's Native Canvas (internally called FCanvas) into the widget container.

View the rendering process from multiple perspectives

For more details, see also the author's earlier article "Design and Thinking of Cross-Platform Web Canvas Rendering Engine Architecture (Including Implementation Scheme)".

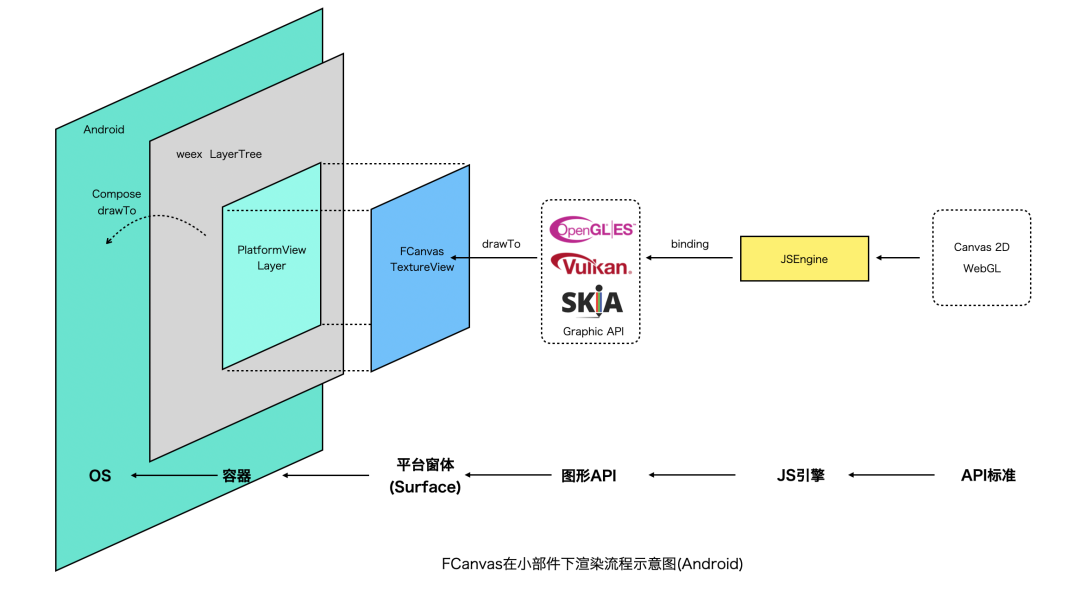

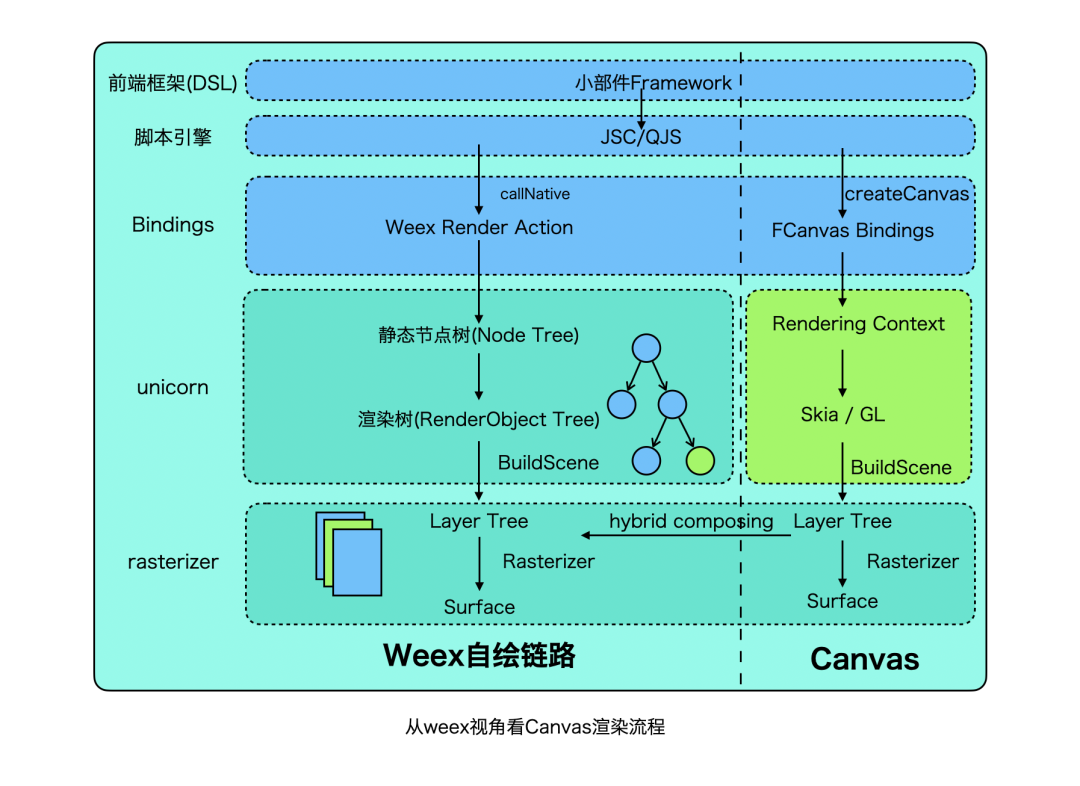

Now to the focus of this article. First, let's look at the general Canvas rendering process from a macro perspective, as shown in the figure, reading it from right to left.

Developers interact directly with the Canvas API, which includes the Canvas2D API specified by the W3C and the WebGL API specified by the Khronos Group, obtained via canvas.getContext('2d') and canvas.getContext('webgl') respectively. The JS APIs are bound to the native C++ implementation through JSBinding: 2D is implemented on top of Skia, while WebGL calls the OpenGL ES interfaces directly. The graphics APIs must be bound to a platform windowing environment, i.e. a Surface, which on Android can be a SurfaceView or a TextureView.
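The `getContext` contract the paragraph relies on can be sketched as follows. This is a toy model, assuming hypothetical class names (`CanvasElement`, `Canvas2DContext`, `WebGLContext`) rather than FCanvas's real types; the `backend` strings are just labels for the Skia and OpenGL ES paths. The caching and `null` behavior follow the Web `getContext()` semantics: the first call fixes the context type, repeated calls return the same object, and asking for a different type afterwards yields `null`.

```python
from typing import Optional


class Canvas2DContext:
    backend = "skia"  # label only: 2D calls are recorded, then replayed through Skia


class WebGLContext:
    backend = "gles"  # label only: WebGL calls map directly onto OpenGL ES


class CanvasElement:
    """Toy model of getContext() dispatch; names are hypothetical."""

    _factories = {"2d": Canvas2DContext, "webgl": WebGLContext}

    def __init__(self) -> None:
        self._mode: Optional[str] = None
        self._context = None

    def get_context(self, kind: str):
        if kind not in self._factories:
            return None  # unsupported context types yield null
        if self._mode is None:
            # First successful call fixes the canvas's context type for good.
            self._mode = kind
            self._context = self._factories[kind]()
        # Same type: return the cached context; a different type: null.
        return self._context if kind == self._mode else None


canvas = CanvasElement()
ctx = canvas.get_context("2d")
print(ctx.backend)                      # skia
print(canvas.get_context("2d") is ctx)  # True
print(canvas.get_context("webgl"))      # None
```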

Further to the left is the widget container layer. For Weex, the basic unit of rendering and compositing is the LayerTree, which describes the page hierarchy and records each node's drawing commands. Canvas is one Layer in this LayerTree, a PlatformViewLayer, which defines the Canvas's position, size, and similar information. Unicorn's rasterization module composites the LayerTree onto Weex's Surface. Finally, both Weex's Surface and the Canvas's Surface go through the Android rendering pipeline and are composited for display by the SurfaceFlinger compositor.
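A minimal sketch of the LayerTree idea, assuming a simplified model: `Layer`, `PictureLayer`, the `commands` list, and `paint_order` are hypothetical names for illustration; only the notion of a PlatformViewLayer that carries geometry but no content comes from the text. A pre-order walk gives the back-to-front order in which layers would be composited.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Layer:
    name: str
    children: List["Layer"] = field(default_factory=list)


@dataclass
class PictureLayer(Layer):
    commands: List[str] = field(default_factory=list)  # recorded draw commands for this node


@dataclass
class PlatformViewLayer(Layer):
    # The compositor only knows where the embedded view goes, not what it draws.
    rect: Tuple[int, int, int, int] = (0, 0, 0, 0)  # x, y, width, height


def paint_order(root: Layer) -> List[Layer]:
    """Pre-order walk: a parent first, then its children back to front."""
    out = [root]
    for child in root.children:
        out.extend(paint_order(child))
    return out


tree = Layer("root", [
    PictureLayer("background", commands=["drawRect"]),
    PlatformViewLayer("canvas", rect=(0, 100, 375, 300)),
    PictureLayer("badge", commands=["drawText"]),
])
print([layer.name for layer in paint_order(tree)])
# ['root', 'background', 'canvas', 'badge']
```

The `badge` layer painted after the canvas is exactly the "DOM node above the PlatformView" case that the embedding schemes discussed later have to handle.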

That is the macro rendering pipeline. Below, the author describes the whole rendering process from several perspectives: Canvas, Weex, and the Android platform.

Canvas's own perspective

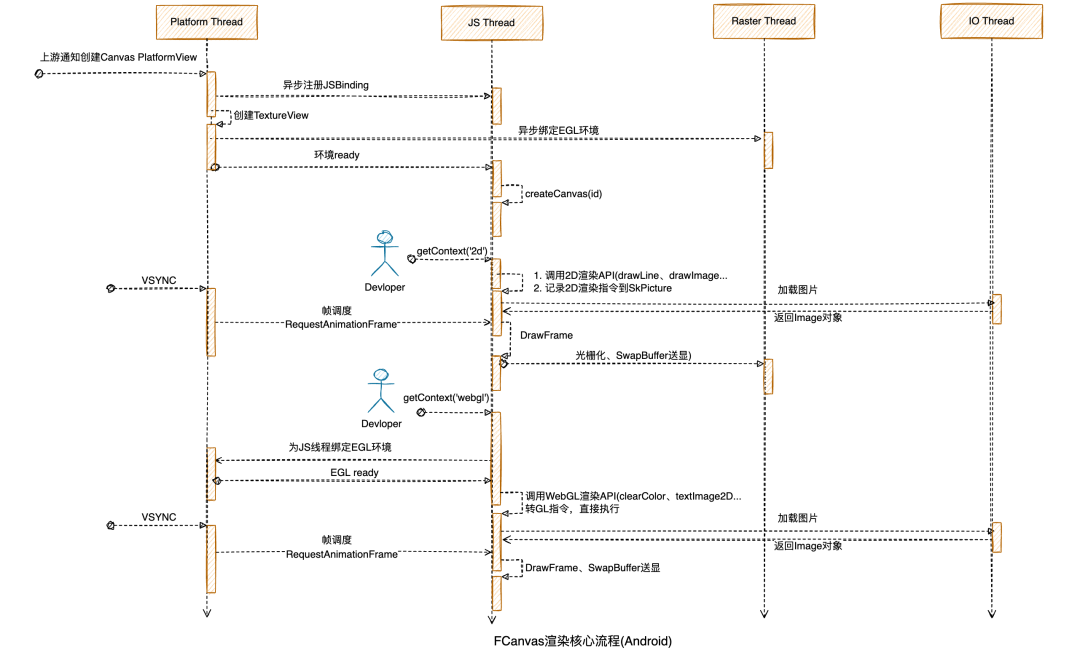

From the perspective of Canvas itself, the platform and container parts can be ignored for now. There are two key points: creating the Rendering Surface and running the Rendering Pipeline. The sequence diagram shows this process, which involves four threads in total: the Platform thread (the platform UI thread), the JS thread, the rasterization (Raster) thread, and the IO thread.

- Rendering Surface Setup: when the upstream message to create the PlatformView arrives, the Canvas API is first bound asynchronously on the JS thread, and then a TextureView/SurfaceView is created on the Platform thread. When the SurfaceCreated signal is received, the EGL environment is initialized ahead of time on the Raster thread and bound to the Surface. At this point the Rendering Surface has been created, the JS thread is notified with a Ready event, and rendering can begin. Unlike 2D, a WebGL context creates its Rendering Surface on the JS thread by default (when Command Buffer is not enabled);

- Rendering Pipeline Overview: after receiving the Ready event, the developer can get the Canvas handle and choose a 2d or WebGL rendering context through the getContext API. For 2d, rendering API calls made on the JS thread only record drawing commands without actually rendering anything; the real rendering happens on the rasterization thread. For WebGL, by default the GL graphics APIs are called directly on the JS thread. In both cases, rendering is driven by the platform VSYNC signal: on receiving VSYNC, a RequestAnimationFrame message is sent to the JS thread, which starts rendering a frame. For 2D, the recorded commands are then replayed on the rasterization thread, the actual rendering commands are submitted to the GPU, and swapBuffers presents the frame; for WebGL, swapBuffers is called directly on the JS thread. If an image needs to be drawn, it is downloaded and decoded on the IO thread and then used on the JS or rasterization thread.
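The 2D record-then-replay flow described in the list can be sketched in a single-threaded simulation. This is a simplified model under stated assumptions: `Recording2DContext`, `RasterThread`, and `on_vsync` are hypothetical names, the "threads" are plain objects, and `presented_frames` merely stands in for a swapBuffers call. It shows that API calls are only recorded until VSYNC arrives, after which requestAnimationFrame callbacks run and the whole frame is replayed on the raster side. The WebGL path, which issues GL calls immediately on the JS thread, is not modeled.

```python
from typing import Callable, List, Tuple

Command = Tuple[str, tuple]


class Recording2DContext:
    """JS-thread side: API calls only append commands; nothing is drawn yet."""

    def __init__(self) -> None:
        self.pending: List[Command] = []

    def fill_rect(self, x: float, y: float, w: float, h: float) -> None:
        self.pending.append(("fillRect", (x, y, w, h)))

    def fill_text(self, text: str, x: float, y: float) -> None:
        self.pending.append(("fillText", (text, x, y)))


class RasterThread:
    """Raster side: replays one frame of commands, then presents it."""

    def __init__(self) -> None:
        self.gpu_calls: List[Command] = []
        self.presented_frames = 0

    def replay(self, commands: List[Command]) -> None:
        self.gpu_calls.extend(commands)  # real engine: Skia calls flushed to the GPU
        self.presented_frames += 1       # stands in for swapBuffers


def on_vsync(ctx: Recording2DContext, raf_callbacks: List[Callable[[], None]],
             raster: RasterThread) -> None:
    # 1. VSYNC -> requestAnimationFrame callbacks run on the JS thread and record commands.
    callbacks = list(raf_callbacks)
    raf_callbacks.clear()
    for cb in callbacks:
        cb()
    # 2. Hand the recorded frame to the raster thread and start a fresh recording.
    frame, ctx.pending = ctx.pending, []
    raster.replay(frame)


ctx = Recording2DContext()
raster = RasterThread()
raf: List[Callable[[], None]] = []

ctx.fill_rect(0, 0, 10, 10)                    # recorded immediately, not drawn
raf.append(lambda: ctx.fill_text("hi", 5, 5))  # runs in the next frame
print(len(raster.gpu_calls))                   # 0: nothing rendered before VSYNC
on_vsync(ctx, raf, raster)
print([name for name, _ in raster.gpu_calls])  # ['fillRect', 'fillText']
```

Deferring the real drawing this way keeps the JS thread cheap and lets the raster thread submit a whole frame at once.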

Weex engine perspective

From the perspective of the Weex engine, Canvas is an extension component. Weex is not even aware that Canvas exists: it only knows that an area of the current page is embedded through a PlatformView, and it does not care what the content is. All PlatformView components go through the same rendering process.
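The "same process for every PlatformView" point can be illustrated with a small registry sketch. `PlatformViewRegistry`, `PlatformView`, and this `PlatformViewLayer` are hypothetical names, assumed for illustration only: embedders register a factory per view type, and the engine hands the compositor nothing but an id and a rectangle, whether the view is a canvas or a video player.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Rect = Tuple[int, int, int, int]  # x, y, width, height


class PlatformView:
    """Whatever the embedder supplies; the engine never looks inside."""

    def __init__(self, kind: str) -> None:
        self.kind = kind


@dataclass
class PlatformViewLayer:
    view_id: int
    rect: Rect


class PlatformViewRegistry:
    def __init__(self) -> None:
        self._factories: Dict[str, Callable[[], PlatformView]] = {}
        self._views: Dict[int, PlatformView] = {}
        self._next_id = 0

    def register(self, type_name: str, factory: Callable[[], PlatformView]) -> None:
        self._factories[type_name] = factory

    def create(self, type_name: str, rect: Rect) -> PlatformViewLayer:
        view_id = self._next_id
        self._next_id += 1
        self._views[view_id] = self._factories[type_name]()
        # Same code path for canvas, video, camera, ...: only id + geometry reach the compositor.
        return PlatformViewLayer(view_id, rect)


registry = PlatformViewRegistry()
registry.register("canvas", lambda: PlatformView("FCanvas TextureView"))
registry.register("video", lambda: PlatformView("video player"))
layers: List[PlatformViewLayer] = [
    registry.create("canvas", (0, 0, 300, 150)),
    registry.create("video", (0, 150, 300, 200)),
]
print(layers)
```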

The left half of the figure below describes the core Weex2.0 rendering pipeline. The widget JS code is executed by the script engine, and the widget's DOM structure is converted, via Weex's generic CallNative binding interface, into a series of Weex rendering instructions (such as AddElement to create a node and UpdateAttrs to update node attributes). Unicorn then rebuilds a static node tree (Node Tree) from these instructions, recording parent-child relationships, node styles and attributes, and so on. On the Unicorn UI thread, the static node tree further generates a RenderObject render tree, which produces multiple Layers through layout, painting, and related stages, forming a LayerTree. Through the engine's BuildScene interface, the LayerTree is sent to the rasterization module, composited, rendered to the Surface, and presented via SwapBuffer.
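The step "Unicorn rebuilds a static node tree from rendering instructions" can be sketched as a small interpreter. Only the instruction names AddElement and UpdateAttrs come from the text; the argument names (`id`, `tag`, `parent`, `attrs`) and the `Node`/`NodeTreeBuilder` classes are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    node_id: int
    tag: str
    attrs: Dict[str, str] = field(default_factory=dict)
    children: List["Node"] = field(default_factory=list)
    # Back-pointer excluded from repr/eq to avoid infinite recursion.
    parent: Optional["Node"] = field(default=None, repr=False, compare=False)


class NodeTreeBuilder:
    """Replays CallNative-style instructions into a static node tree."""

    def __init__(self) -> None:
        self.nodes: Dict[int, Node] = {}

    def apply(self, op: str, **args) -> None:
        if op == "AddElement":
            node = Node(args["id"], args["tag"], dict(args.get("attrs", {})))
            parent_id = args.get("parent")
            if parent_id is not None:
                node.parent = self.nodes[parent_id]
                node.parent.children.append(node)
            self.nodes[node.node_id] = node
        elif op == "UpdateAttrs":
            self.nodes[args["id"]].attrs.update(args["attrs"])
        else:
            raise ValueError(f"unknown instruction: {op}")


builder = NodeTreeBuilder()
builder.apply("AddElement", id=1, tag="div")
builder.apply("AddElement", id=2, tag="canvas", parent=1, attrs={"width": "300"})
builder.apply("UpdateAttrs", id=2, attrs={"height": "150"})
root = builder.nodes[1]
print([c.tag for c in root.children], root.children[0].attrs)
# ['canvas'] {'width': '300', 'height': '150'}
```

Later stages (RenderObject tree, layout, LayerTree) would consume this tree; they are out of scope for the sketch.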

The right half shows the Canvas rendering process. The general flow was already covered from the Canvas perspective above, so it is not repeated here; instead we focus on how Canvas is embedded. Canvas is embedded through the PlatformView mechanism, which generates a corresponding Layer in Unicorn that takes part in subsequent compositing. However, PlatformView has several implementation schemes whose processes differ considerably, as discussed below.

On Android, Weex2.0 provides several technical schemes for embedding PlatformViews. Two of them are described here: VirtualDisplay and Hybrid Composing. There is also a self-developed "hole-punching" scheme.

VirtualDisplay

In this mode, the PlatformView content is eventually converted into an external texture that takes part in Unicorn's compositing. The process is as follows: first, a SurfaceTexture is created and stored on the Unicorn engine side; then an android.app.Presentation is created with the PlatformView (such as the Canvas TextureView) as its content and rendered to a VirtualDisplay. A VirtualDisplay needs a Surface as its backend, and here that Surface is created from the SurfaceTexture. When the SurfaceTexture is filled with content, the engine side is notified, converts the SurfaceTexture into an OES texture, and feeds it into Unicorn's rasterization, where it is finally composited with the other Layers onto the SurfaceView or TextureView corresponding to Unicorn.

The performance of this mode is acceptable, but its main drawbacks are that it cannot respond to touch events, loses accessibility (a11y) features, and cannot give focus to TextInput. Because of these compatibility issues, its application scenarios are relatively limited.

Hybrid Composing

In this mode, the widget is no longer rendered to a SurfaceView or TextureView, but to one or more Surfaces associated with android.media.ImageReader. Unicorn wraps ImageReader in a custom Android View that uses the Image objects produced by the ImageReader as its data source and continuously converts them to Bitmaps that take part in Android's native rendering process. Why might there be multiple ImageReaders? Because layouts can overlap, there may be DOM nodes both above and below the PlatformView. Correspondingly, the PlatformView itself (such as Canvas) is no longer converted into a texture; it takes part in the Android platform's rendering process as an ordinary View.
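Why overlapping layouts lead to multiple ImageReaders can be shown with a small grouping sketch. It assumes a simple policy that is not confirmed by the text: consecutive DOM layers (bottom to top) share one ImageReader-backed view, and each platform view becomes its own native View. `split_for_hybrid_composition` and the `"dom"`/`"platform"` tags are hypothetical; the real engine's splitting rules may differ.

```python
from typing import List, Tuple

# (kind, name) in bottom-to-top order; kind is "dom" or "platform".
LayerEntry = Tuple[str, str]


def split_for_hybrid_composition(layers: List[LayerEntry]) -> List[Tuple[str, List[str]]]:
    """Group consecutive DOM layers onto one ImageReader-backed view; each
    platform view becomes its own native View. Output is bottom-to-top view order."""
    groups: List[Tuple[str, List[str]]] = []
    for kind, name in layers:
        if kind == "platform":
            groups.append(("platform_view", [name]))
        elif groups and groups[-1][0] == "image_view":
            groups[-1][1].append(name)  # extend the current ImageReader surface
        else:
            groups.append(("image_view", [name]))  # a new ImageReader surface is needed
    return groups


page = [("dom", "background"), ("dom", "title"), ("platform", "canvas"), ("dom", "floating_button")]
for view_kind, contents in split_for_hybrid_composition(page):
    print(view_kind, contents)
# image_view ['background', 'title']
# platform_view ['canvas']
# image_view ['floating_button']
```

Under this assumption, a page with DOM content both below and above the canvas needs two image views, which matches the Background/Overlay pair of UnicornImageViews around the FCanvas TextureView.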

The Hybrid Composing mode solves most of the compatibility problems of the VirtualDisplay mode, but it introduces new ones. It has two main disadvantages. First, threads must be merged: once a PlatformView is enabled, the Raster thread's tasks are posted to the Android main thread for execution, which increases the pressure on the main thread. Second, the ImageReader-based Android native View (the UnicornImageView mentioned below) must continuously create and draw Bitmaps; before Android 10 in particular, Bitmaps have to be produced by software copying, which has some impact on performance.
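The thread-merging drawback can be pictured with a sketch that models each engine thread as a single-worker executor. `TaskRunners` and its `merged` flag are hypothetical, and real engines implement merging differently; the sketch only shows the effect: once a PlatformView is present, raster work runs on the main thread instead of its own thread.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class TaskRunners:
    """While a platform view is on screen, raster work goes to the main runner."""

    def __init__(self) -> None:
        self.platform = ThreadPoolExecutor(max_workers=1, thread_name_prefix="main")
        self._raster = ThreadPoolExecutor(max_workers=1, thread_name_prefix="raster")
        self.merged = False

    def raster_runner(self) -> ThreadPoolExecutor:
        return self.platform if self.merged else self._raster

    def shutdown(self) -> None:
        self.platform.shutdown(wait=True)
        self._raster.shutdown(wait=True)


def raster_frame() -> str:
    # Report which runner actually executed this frame's raster work.
    return threading.current_thread().name.split("_")[0]


runners = TaskRunners()
before = runners.raster_runner().submit(raster_frame).result()
runners.merged = True  # a PlatformView was added to the scene
after = runners.raster_runner().submit(raster_frame).result()
runners.shutdown()
print(before, after)  # raster main
```

Every frame rasterized on the main thread competes with Android's own measure, layout, and draw work there, which is the extra main-thread pressure described above.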

Overall, Hybrid Composing offers better compatibility, so the engine currently uses this mode to implement PlatformView by default.

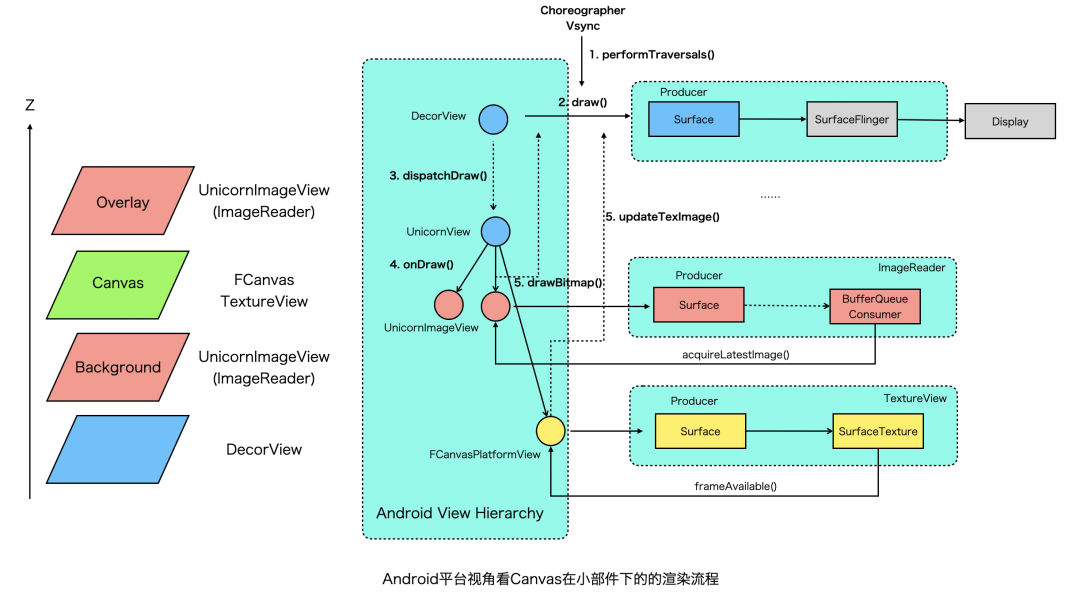

Android platform perspective

Next, the author re-examines this process from the perspective of the Android platform (taking Weex with the Hybrid Composing PlatformView mode as an example).

As mentioned above, in Hybrid Composing mode the widget is rendered into one or more UnicornImageViews. Ordered from top to bottom by Z-index, the views are UnicornImageView(Overlay) -> FCanvasTextureView -> UnicornImageView(Background) -> DecorView. From the Android platform's perspective, the view structure is shown in the figure above: the Weex root view (UnicornView) is nested under the Android root view DecorView, and it in turn contains multiple UnicornImageViews and one FCanvasPlatformView (TextureView).

From the platform's perspective, we do not even need to care about the contents of UnicornImageView and FCanvas; it is enough to know that both inherit from android.view.View and follow Android's native rendering process. Native rendering is driven by the VSYNC signal, and the measure, layout, and draw passes are triggered by the top-level function ViewRootImpl#performTraversals. Taking drawing as an example, the call is first dispatched to the root view DecorView and then propagated top-down through dispatchDraw, invoking each View's onDraw in turn.
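The top-down draw dispatch can be modeled in a few lines. This `View` class is a deliberately minimal model, not the real `android.view.View`: the real `draw()` also handles backgrounds, foregrounds, and clipping. It keeps just the essential order: a view draws its own content in `on_draw`, then `dispatch_draw` recurses into children, so later children end up on top.

```python
from typing import List, Optional


class View:
    """Minimal model of android.view.View drawing order; not the real framework code."""

    def __init__(self, name: str, children: Optional[List["View"]] = None) -> None:
        self.name = name
        self.children = children or []

    def draw(self, trace: List[str]) -> None:
        self.on_draw(trace)        # draw own content first
        self.dispatch_draw(trace)  # then children, in child order (back to front)

    def on_draw(self, trace: List[str]) -> None:
        trace.append(self.name)

    def dispatch_draw(self, trace: List[str]) -> None:
        for child in self.children:
            child.draw(trace)


decor = View("DecorView", [
    View("UnicornView", [
        View("UnicornImageView(Background)"),
        View("FCanvasTextureView"),
        View("UnicornImageView(Overlay)"),
    ]),
])
trace: List[str] = []
decor.draw(trace)  # on Android, ViewRootImpl#performTraversals starts this on VSYNC
print(trace)
# ['DecorView', 'UnicornView', 'UnicornImageView(Background)', 'FCanvasTextureView', 'UnicornImageView(Overlay)']
```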

- The FCanvas PlatformView is a TextureView, which is essentially backed by a SurfaceTexture. When new content fills the SurfaceTexture's internal buffer, it triggers the onFrameAvailable callback to notify the view to invalidate; the Android render thread then turns the SurfaceTexture's content into a texture via updateTexImage, and the system composites it;

- UnicornImageView is a custom View that essentially wraps an ImageReader. When the internal buffer of the Surface associated with the ImageReader is filled, the latest frame can be obtained through acquireLatestImage. In UnicornImageView#onDraw, the latest frame is converted to a Bitmap and handed to android.graphics.Canvas for drawing.
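The `acquireLatestImage` behavior that UnicornImageView relies on can be modeled with a toy queue. `ImageQueue` is a hypothetical Python stand-in, not the Android API, and it ignores the `maxImages` limit and buffer ownership. It captures the documented semantics: the newest queued frame is returned, older queued frames are discarded without being drawn, and nothing (`null` in Java, `None` here) is returned when no frame is available.

```python
from collections import deque
from typing import Deque, Optional


class ImageQueue:
    """Toy model of ImageReader's consumer side: frames queue up, onDraw takes only the newest."""

    def __init__(self) -> None:
        self._frames: Deque[int] = deque()
        self.dropped = 0  # frames released without ever being drawn

    def queue_frame(self, frame_id: int) -> None:
        # Producer side: Unicorn's rasterizer finishes a frame into the ImageReader's Surface.
        self._frames.append(frame_id)

    def acquire_latest(self) -> Optional[int]:
        # Mirrors acquireLatestImage(): newest frame wins, older queued frames are discarded;
        # None stands in for the null returned when no new frame is available.
        if not self._frames:
            return None
        latest = self._frames.pop()
        self.dropped += len(self._frames)
        self._frames.clear()
        return latest


q = ImageQueue()
for frame in (1, 2, 3):  # three frames produced before the next onDraw
    q.queue_frame(frame)
print(q.acquire_latest(), q.dropped)  # 3 2
print(q.acquire_latest())             # None
```

Skipping stale frames keeps the displayed content as fresh as possible, but each acquired frame still has to become a Bitmap, which is the per-frame cost noted for this mode.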

Android's own View Hierarchy is also associated with a Surface, usually called the Window Surface. After the View Hierarchy above goes through the draw pass, a DisplayList is generated. The Android render thread parses the DisplayList through the HWUI module, generates the actual graphics rendering commands, and hands them to the GPU for hardware rendering. The final content is drawn into the Window Surface, which, together with other Surfaces (such as the status bar and SurfaceViews), is composited into the FrameBuffer by the system's SurfaceFlinger and finally shown on the device. That is the rendering process from the Android platform's perspective.

Summary and Outlook

After the multi-perspective analysis above, readers should now have a basic understanding of the rendering process. To summarize briefly: Canvas, a core capability of widgets, is supported through the PlatformView extension mechanism of the weex kernel. This loosely coupled, pluggable architecture lets the project iterate quickly so that Canvas can enable businesses in new scenarios, and it also makes the system more flexible and extensible.

At the same time, readers can see that PlatformView itself has some performance shortcomings, and performance optimization is one of our next evolution goals. Next, we will try to integrate Canvas deeply with the Weex kernel rendering pipeline so that Canvas and the Weex kernel share a Surface, instead of embedding Canvas through the PlatformView extension. We will also provide a more streamlined rendering pipeline for interactive widgets in the future, so stay tuned.

Follow the [Alibaba Mobile Technology] WeChat official account for three articles on mobile technology practice and insights every week!
