This article was originally shared by the Rongyun technical team; it has been revised and edited.

1. Introduction

In live video streaming, bullet comments (danmaku), chatting with the host, and various business commands together make up the interaction between ordinary viewers and the host.

Technically, all of these real-time interactions come down to distributing real-time chat messages or commands. By analogy with IM applications, the architecture is equivalent to an IM chat room feature.

The previous article in this series, "Technical Practice of Real-time Chat Message Distribution in the Live Room with Millions of People Online", mainly covered strategies for distributing and discarding messages. This article shares practical experience with the technical difficulties of designing an architecture for massive chat messages in live broadcast rooms, from the perspectives of high availability, elastic scaling, user management, message distribution, and client optimization.

Study and exchange:

- Introductory article on mobile IM development: "One Article Is Enough for Beginners: Developing Mobile IM from Scratch"

- Open source IM framework source code: https://github.com/JackJiang2011/MobileIMSDK

(This article has been simultaneously published on: http://www.52im.net/thread-3835-1-1.html )

2. Series of articles

This article is the seventh in a series of articles:

"Chat Technology of Live Broadcasting System (1): The Road to Real-time Push Technology Practice of the Million Online Meipai Live Broadcasting Barrage System"

"Chat Technology of Live Broadcasting System (2): Alibaba E-commerce IM Messaging Platform, Technical Practice in Group Chat and Live Broadcast Scenarios"

"Chat Technology of Live Broadcasting System (3): The Evolution of the Message Architecture of WeChat Live Chat Rooms with 15 Million Online Users in a Single Room"

"Chat Technology of Live Broadcasting System (4): Real-time Messaging System Architecture Evolution Practice for Massive Users of Baidu Live Broadcasting"

"Chat Technology of Live Broadcasting System (5): Cross-process Rendering and Streaming Practice of WeChat Mini-game Live Broadcast on Android"

"Chat Technology of Live Broadcasting System (6): Technology Practice of Real-time Chat Message Distribution in Live Broadcast Rooms with Millions of Users Online"

"Chat Technology of Live Broadcasting System (7): Difficulties and Practice in the Architecture Design of Massive Chat Messages in Live Broadcast Rooms" (* this article)

3. Main functions and technical characteristics of the live broadcast room

Today's live video rooms are no longer just a video streaming problem: they also involve tasks that users perceive directly, such as sending and managing many types of messages and managing users. Now that almost anything can be broadcast live, super-large live broadcasts are not uncommon, and some rooms even have no limit on the number of viewers. Faced with the concurrency challenge of such massive volumes of real-time messages and commands, the resulting technical difficulties can no longer be solved by conventional means.

Let's first summarize the main functional and technical characteristics of today's typical live video rooms compared with traditional live broadcast rooms.

Rich message types and advanced functions:

1) Traditional chat content such as text, voice, and images can be sent;

2) Message types for non-traditional chat features, such as likes and gifts;

3) Content security management, including sensitive-word settings and anti-spam processing of chat content.

Chat management features:

1) User management: creating, joining, and destroying chat rooms, muting, querying, and banning (kicking users out), etc.;

2) User whitelist: whitelisted users are protected, so they are never kicked out automatically, and their messages are sent with the highest priority;

3) Message management: including message priority, message distribution control, etc.;

4) Real-time statistics and message routing capabilities.

User limits and behavioral characteristics:

1) No upper limit on the number of users: large live events such as the Spring Festival Gala or the National Day military parade can draw tens of millions of viewers to a live room in total, with millions watching at the same time;

2) Frequent entering and leaving: users join and leave live rooms very frequently, and a popular room may see tens of thousands of users entering or leaving per second, which puts great pressure on the service's ability to bring users online and offline and to manage them.

Massive message concurrency:

1) Huge volume of concurrent messages: there is no obvious upper limit on the number of people in a live chat room, which leads to massive message concurrency (a chat room with a million members receives a huge volume of upstream messages, and because each one must reach every member, the volume of message distribution is multiplied many times over);

2) Strict real-time requirements: if the server only applies peak shaving, messages pile up during peaks and overall message latency grows.

Regarding point 2) above, accumulated delay makes the messages drift away from the live video stream on the timeline, which hurts the real-time interaction of viewers. The server's ability to distribute massive numbers of messages quickly is therefore critical.

4. Architecture design of live chat room

A high-availability system needs to support automatic failover, precise circuit breaking and service degradation, service governance, rate limiting, rollback, and automatic scale-out/scale-in.

The architecture of the live chat room system, designed for high availability, is as follows:

As shown in the figure above, the system architecture is mainly divided into three layers:

1) Connection layer: manages the long-lived connections between the service and clients;

2) Storage layer: Redis is currently used as a second-level cache, mainly storing chat room information (such as member lists, black/white lists, and mute lists). When a service is updated or restarted, it can reload the chat room backup from Redis;

3) Business layer: the core of the whole chat room system. To achieve disaster recovery across data centers, services are deployed in multiple availability zones and are split, by capability and responsibility, into a chat room service and a message service.

Responsibilities of the chat room service and the message service:

1) Chat room service: handles management requests, such as members entering and leaving chat rooms, muting/banning, and reviewing upstream messages;

2) Message service: caches the user information and message queues that this node is responsible for, and distributes chat room messages.

In high-concurrency scenarios with a large number of users, message distribution capacity determines system performance. In a live chat room with a million users, for example, one upstream message must be distributed a million times. Distribution at this scale cannot be handled by a single server.

Our approach is to split the members of a chat room across different message service nodes: after the chat room service receives a message, it spreads the message to the message services, which then distribute it to their users.

Take a live chat room with one million users online as an example: assuming the chat room has 200 message service nodes, each node manages about 5,000 users on average, and when distributing a message, each node only needs to deliver it to the users that land on that node.
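As a rough sketch of this two-level fan-out (not Rongyun's code), the Python below places users on message service nodes and has the chat room service forward each message once per node, after which each node delivers only to its own users. The classes `MessageNode` and `ChatRoomService` are hypothetical, and placement uses CRC32 modulo purely for brevity; the article itself places users with consistent hashing.

```python
import zlib
from typing import List, Set


class MessageNode:
    """Hypothetical message service node that only knows the users placed on it."""

    def __init__(self, node_id: int) -> None:
        self.node_id = node_id
        self.local_users: Set[str] = set()
        self.deliveries = 0  # per-user deliveries performed by this node

    def distribute(self, message: str) -> None:
        # Second level of fan-out: push only to the users that land on this node.
        for _user in self.local_users:
            self.deliveries += 1  # stand-in for "notify this user to pull"


class ChatRoomService:
    """Hypothetical chat room service that forwards each upstream message once per node."""

    def __init__(self, nodes: List[MessageNode]) -> None:
        self.nodes = nodes

    def place(self, user_id: str) -> MessageNode:
        # Simplified placement (CRC32 modulo); the article uses consistent hashing.
        return self.nodes[zlib.crc32(user_id.encode()) % len(self.nodes)]

    def join(self, user_id: str) -> None:
        self.place(user_id).local_users.add(user_id)

    def broadcast(self, message: str) -> int:
        # First level of fan-out: one internal hop per message node, not per user.
        for node in self.nodes:
            node.distribute(message)
        return len(self.nodes)


nodes = [MessageNode(i) for i in range(200)]
room = ChatRoomService(nodes)
for i in range(1_000_000):
    room.join(f"user-{i}")

hops = room.broadcast("hello")
print(hops, sum(n.deliveries for n in nodes))  # 200 1000000
```

The chat room service performs only 200 internal hops per upstream message, while the million per-user deliveries are spread across the nodes at roughly 1,000,000 / 200 = 5,000 each.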

How nodes are selected:

1) Chat room service: upstream signaling for a chat room is routed to a node by applying a consistent hashing algorithm to the chat room ID;

2) Message service: a consistent hashing algorithm applied to the user ID determines which message service node a user belongs to.

Because consistent hashing keeps placement relatively fixed, all activity of a chat room is concentrated on one node, which greatly improves the service's cache hit rate.
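The sketch below shows a minimal consistent-hash ring with virtual nodes in Python. It is a generic textbook version, not necessarily the variant Rongyun uses; the MD5-based hash, the 100 virtual nodes per server, and the node names are all assumptions. The same ring type can route signaling by chat room ID and place users by user ID.

```python
import bisect
import hashlib
from typing import Dict, Iterable, List


class ConsistentHashRing:
    """Minimal consistent-hash ring with virtual nodes (illustrative only)."""

    def __init__(self, nodes: Iterable[str], replicas: int = 100) -> None:
        self.replicas = replicas          # virtual nodes per physical node (assumed value)
        self._keys: List[int] = []        # sorted positions on the ring
        self._owner: Dict[int, str] = {}  # ring position -> node name
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(key: str) -> int:
        # Any stable hash works; here: the first 8 bytes of MD5 as an unsigned integer.
        return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")

    def add_node(self, node: str) -> None:
        for i in range(self.replicas):
            pos = self._hash(f"{node}#{i}")
            bisect.insort(self._keys, pos)
            self._owner[pos] = node

    def remove_node(self, node: str) -> None:
        for i in range(self.replicas):
            pos = self._hash(f"{node}#{i}")
            self._keys.remove(pos)
            del self._owner[pos]

    def get_node(self, key: str) -> str:
        # Walk clockwise to the first virtual node at or after the key's position,
        # wrapping around to the start of the ring if necessary.
        pos = self._hash(key)
        idx = bisect.bisect_left(self._keys, pos) % len(self._keys)
        return self._owner[self._keys[idx]]


chatroom_ring = ConsistentHashRing(["chatroom-svc-1", "chatroom-svc-2", "chatroom-svc-3"])
message_ring = ConsistentHashRing([f"msg-svc-{i}" for i in range(1, 5)])

# Every signal for room-42 lands on the same chat room service node,
# so that node can keep the room's state in memory.
owner = chatroom_ring.get_node("room-42")
assert all(chatroom_ring.get_node("room-42") == owner for _ in range(3))

# Users are spread over the message service nodes by user ID.
print(message_ring.get_node("user-1001"))
```

A useful property for the scaling discussion: adding a node only moves keys onto the new node, and removing it again restores the previous placement, which is what keeps the selected location "relatively fixed".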

Entering and leaving a chat room, setting black/white lists, and the checks performed when a message is sent can all be served directly from memory instead of querying a third-party cache every time, which speeds up both responses and message distribution.

Finally, ZooKeeper is used for service discovery in this architecture; every service instance registers with ZooKeeper.

5. Elastic scaling of live broadcast room chat rooms

5.1 Overview

As live broadcasting is accepted by more and more people, live room chat rooms increasingly face sudden surges in user numbers, and server pressure rises accordingly. It is therefore essential to scale out and in smoothly as service pressure gradually rises or falls.

For automatic scaling, industry solutions are broadly similar: find a single server's bottleneck through stress testing → monitor business data to decide whether to scale out or in → raise an alarm once preset conditions are met → scale out or in automatically.

Because live room chat is highly business-sensitive, the implementation must ensure that scaling does not affect chat room business as a whole.

5.2 Chat room service expansion and contraction

When the chat room service scales out or in, the member list, mute list, black/white lists, and other information are loaded from Redis.

Note that when a chat room is destroyed automatically, the node must first check whether the chat room currently belongs to it. If not, it skips the destruction logic, so that destroying the room does not wrongly delete its data from Redis.
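A minimal sketch of that ownership check, assuming a plain dict stands in for the shared Redis backup and that `owner_of` is some placement function such as a consistent-hash lookup. The class `ChatRoomNode` and its methods are hypothetical names, not Rongyun's API.

```python
import copy
from typing import Callable, Dict


class ChatRoomNode:
    """Hypothetical chat room service node; `redis` is a dict standing in for shared Redis."""

    def __init__(self, node_id: str, owner_of: Callable[[str], str], redis: Dict[str, dict]) -> None:
        self.node_id = node_id
        self.owner_of = owner_of  # room_id -> owning node_id, e.g. a consistent-hash lookup
        self.redis = redis
        self.rooms: Dict[str, dict] = {}  # in-memory room state on this node

    def get_room(self, room_id: str) -> dict:
        # After scaling, a room may arrive here with no local state:
        # reload its members and mute/black/white lists from the Redis backup.
        if room_id not in self.rooms:
            self.rooms[room_id] = copy.deepcopy(self.redis.get(room_id, {"members": set()}))
        return self.rooms[room_id]

    def auto_destroy(self, room_id: str) -> bool:
        # Only the node that currently owns the room may run the destroy logic;
        # otherwise it would delete a Redis backup that the real owner still needs.
        if self.owner_of(room_id) != self.node_id:
            return False
        self.rooms.pop(room_id, None)
        self.redis.pop(room_id, None)
        return True


def owner_of(room_id: str) -> str:
    return "node-A"  # toy placement: node-A owns every room


redis = {"room-1": {"members": {"u1", "u2"}}}
a = ChatRoomNode("node-A", owner_of, redis)
b = ChatRoomNode("node-B", owner_of, redis)  # e.g. the room's owner before scaling

print(b.get_room("room-1")["members"] == {"u1", "u2"})  # True
print(b.auto_destroy("room-1"), "room-1" in redis)       # False True
print(a.auto_destroy("room-1"), "room-1" in redis)       # True False
```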

The details of the chat room service expansion and contraction scheme are shown in the following figure:

5.3 Message service expansion and contraction

When the message service scales out or in, most members need to be re-routed to new message service nodes according to consistent hashing. This disrupts the current distribution of users and requires a large-scale migration of users between nodes.

1) When scaling out: users are migrated gradually, according to how active each chat room is.

2) When a message arrives: the message service traverses all users cached on this node to send pull notifications, and along the way checks whether each user still belongs to this node (if not, the user is handed over to the node it now belongs to).

3) When a message is pulled: if the pulling user is not in the local cache list, the message service asks the chat room service whether the user is in the chat room (if so, the user is added to this message service synchronously; if not, the request is discarded).

4) When scaling in: the message service fetches all members from the shared Redis, picks out the users that belong to this node according to the placement calculation, and puts them into its user management list.
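Steps 2 to 4 can be sketched as three hooks on a message service node. This is an illustration under assumptions: `MessageNode`, `on_message`, `on_pull`, `reload_from_redis`, the `owner_of` placement function, and the `in_room` membership check (standing in for the request to the chat room service) are all hypothetical names, and the gradual, activity-based migration of step 1 is not modeled.

```python
from typing import Callable, Dict, Iterable, List, Set


class MessageNode:
    """Hypothetical message service node showing user migration after a scaling event."""

    def __init__(self, node_id: str, owner_of: Callable[[str], str]) -> None:
        self.node_id = node_id
        self.owner_of = owner_of  # user_id -> node_id, e.g. a consistent-hash lookup
        self.users: Set[str] = set()

    def on_message(self, peers: Dict[str, "MessageNode"]) -> List[str]:
        """Step 2: notify local users, handing off any that now hash to another node."""
        notified = []
        for user in sorted(self.users):  # iterate over a copy so we can modify the set
            target = self.owner_of(user)
            if target == self.node_id:
                notified.append(user)  # stand-in for sending a pull notification
            else:
                self.users.discard(user)
                peers[target].users.add(user)  # the new owner notifies from now on
        return notified

    def on_pull(self, user: str, in_room: Callable[[str], bool]) -> bool:
        """Step 3: a pull from an unknown user is checked with the chat room service."""
        if user not in self.users:
            if not in_room(user):
                return False  # not a member: discard the request
            self.users.add(user)  # member: adopt the user on this node
        return True

    def reload_from_redis(self, all_members: Iterable[str]) -> None:
        """Step 4: rebuild the local list from the full member list in Redis."""
        self.users = {u for u in all_members if self.owner_of(u) == self.node_id}


def owner_of(user: str) -> str:
    # Toy placement: odd user numbers on n1, even ones on n2.
    return "n1" if int(user[1:]) % 2 else "n2"


n1, n2 = MessageNode("n1", owner_of), MessageNode("n2", owner_of)
n1.users = {"u1", "u2", "u3", "u4"}  # stale list from before scaling out
print(n1.on_message({"n1": n1, "n2": n2}))  # ['u1', 'u3']
print(sorted(n2.users))                     # ['u2', 'u4']
```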

6. Online/offline handling and management of massive numbers of users

Chat room service: manages the entry and exit of all members; changes to the member list are also written asynchronously to Redis.

Message service: each node maintains its own share of chat room members. When users actively join or leave a room, their landing node is computed with consistent hashing and the change is synchronized to the corresponding message service.

When a chat room receives a message: the chat room service broadcasts it to all of the chat room's message services, and each message service sends pull notifications. The message service also tracks each user's pull status: while the chat room is active, if a user has not pulled within 30 s, or has accumulated 30 unpulled messages, the message service considers the user offline, kicks the user out, and synchronizes this to the chat room service so that the member is taken offline there as well.
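A minimal sketch of this offline detection on one message service node. The two thresholds come from the text; treating each threshold as reached at `>=` and checking only when a new message arrives (that is, while the room is active) are assumptions, and `OfflineDetector` and the `on_kick` callback (standing in for the sync to the chat room service) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

PULL_TIMEOUT_S = 30.0  # per the article: no pull within 30 s
MAX_UNPULLED = 30      # per the article: 30 messages accumulated without a pull


@dataclass
class PullState:
    last_pull_at: float
    unpulled: int = 0


class OfflineDetector:
    """Sketch of per-node offline detection; `on_kick` stands in for syncing to the chat room service."""

    def __init__(self, on_kick: Callable[[str], None]) -> None:
        self.states: Dict[str, PullState] = {}
        self.on_kick = on_kick

    def on_join(self, user: str, now: float) -> None:
        self.states[user] = PullState(last_pull_at=now)

    def on_pull(self, user: str, now: float) -> None:
        state = self.states.get(user)
        if state is not None:
            state.last_pull_at = now
            state.unpulled = 0

    def on_new_message(self, now: float) -> List[str]:
        # Checked only while messages flow, i.e. while the room is active.
        kicked = []
        for user, state in list(self.states.items()):
            state.unpulled += 1
            if now - state.last_pull_at >= PULL_TIMEOUT_S or state.unpulled >= MAX_UNPULLED:
                del self.states[user]
                self.on_kick(user)
                kicked.append(user)
        return kicked


synced: List[str] = []
detector = OfflineDetector(on_kick=synced.append)
detector.on_join("alice", now=0.0)
detector.on_join("bob", now=0.0)
for t in range(1, 30):  # 29 messages; alice keeps pulling, bob never does
    detector.on_new_message(now=float(t))
    detector.on_pull("alice", now=float(t))
print(detector.on_new_message(now=30.0), synced)  # ['bob'] ['bob']
```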

7. Distribution strategy for massive chat messages

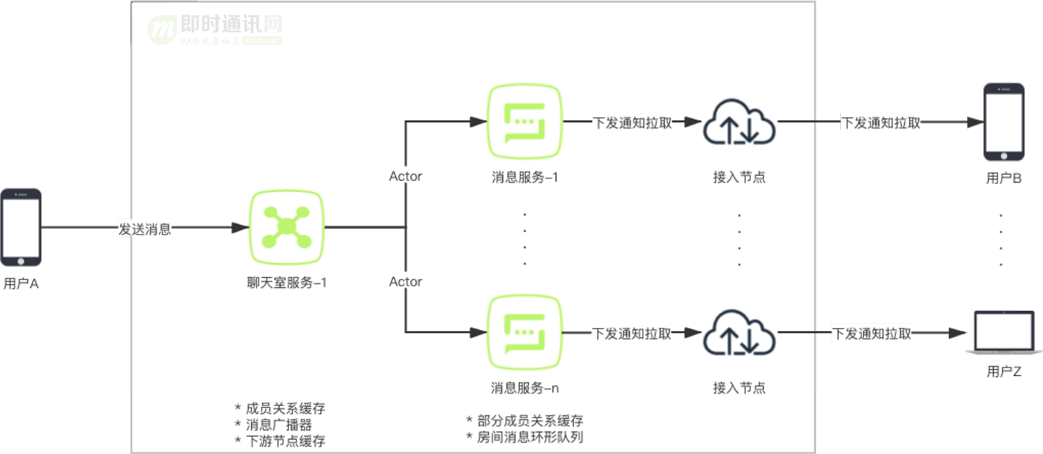

The message distribution and pull scheme of the live chat room service is as follows:

7.1 Pulling of message notifications

As shown in the figure above, User A sends a message in the chat room, which is first handled by the chat room service. The chat room service synchronizes the message to every message service node, and each message service sends a pull notification to all members cached on that node (in the figure, the server sends notifications to User B and User Z).

During message distribution, the server merges notifications.

The notification-pull process works as follows:

1) After the client successfully joins the chat room, all members are added to the to-be-notified queue (if a member is already queued, only its notification message time is updated);

2) The dispatch thread polls the to-be-notified queue in rounds;

3) A pull notification is sent to each user in the queue.

This process ensures that the dispatch thread sends at most one pull notification to a given user per round (that is, multiple messages are merged into a single pull notification), which improves server performance and reduces network consumption on both the client and the server.
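The merging can be sketched with a dict keyed by user ID: re-queuing a user only refreshes its timestamp, so one dispatch round sends each user a single notification. This sketch assumes members are (re)queued whenever a new message reaches the node, and it runs the dispatch "thread" as a plain method call; a real multi-threaded server would need a lock around the queue swap. `NotifyMerger` and `send_notify` are hypothetical names.

```python
from typing import Callable, Dict, Iterable


class NotifyMerger:
    """Sketch of per-round pull-notification merging on one message service node."""

    def __init__(self, send_notify: Callable[[str, int], None]) -> None:
        self.pending: Dict[str, int] = {}  # user_id -> latest message timestamp to notify about
        self.send_notify = send_notify

    def enqueue(self, members: Iterable[str], msg_ts: int) -> None:
        # Step 1: queue every member; a member already queued only gets its time updated.
        for user in members:
            self.pending[user] = msg_ts

    def run_round(self) -> int:
        # Steps 2-3: the dispatch loop takes the queue and notifies each queued user once.
        batch, self.pending = self.pending, {}
        for user, ts in batch.items():
            self.send_notify(user, ts)
        return len(batch)


sent = []
merger = NotifyMerger(lambda user, ts: sent.append((user, ts)))
for ts in (100, 101, 102):  # three messages arrive before the next dispatch round
    merger.enqueue(["B", "Z"], ts)
print(merger.run_round(), sent)  # 2 [('B', 102), ('Z', 102)]
```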

7.2 Pulling of messages

The user's message pulling process is as follows:

As shown in the figure above, after receiving the notification, user B sends a pull request to the server. The request is eventually handled by message node 1, which returns a list of messages from its message queue based on the timestamp of the latest message supplied by the client (see the figure below).

Example of a client pulling messages:

The largest local message timestamp on the client is 1585224100000, so the two messages on the server with timestamps greater than this value are pulled.
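A small sketch of the server-side lookup, assuming the node keeps each room's messages in a list sorted by timestamp. Only the client timestamp 1585224100000 comes from the article; the queue contents are made up, and `pull_messages` is a hypothetical name. The first-pull rule (timestamp 0 returns the latest 50 messages) is the one described in section 9.1.

```python
import bisect
from typing import List, Tuple

Message = Tuple[int, str]  # (timestamp_ms, content)
FIRST_PULL_LIMIT = 50      # a first pull (timestamp 0) gets the latest 50 messages


def pull_messages(queue: List[Message], client_max_ts: int) -> List[Message]:
    """Return messages newer than the client's latest timestamp; `queue` is sorted by time."""
    if client_max_ts == 0:
        return queue[-FIRST_PULL_LIMIT:]
    timestamps = [ts for ts, _ in queue]
    start = bisect.bisect_right(timestamps, client_max_ts)  # first index with ts > client_max_ts
    return queue[start:]


queue = [
    (1585224099000, "m1"),
    (1585224100000, "m2"),
    (1585224101000, "m3"),
    (1585224102000, "m4"),
]
print(pull_messages(queue, 1585224100000))
# [(1585224101000, 'm3'), (1585224102000, 'm4')]
```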

7.3 Message rate control

When handling massive numbers of messages, the server needs to control the message rate.

This is because, in a live chat room, the messages that large numbers of users send within the same short period usually have nearly identical content. If all of them were distributed to clients, clients would likely freeze or fall behind, seriously hurting the user experience.

The server therefore rate-limits messages in both the upstream and downstream directions.

Principle of message rate control:

The specific rate-limiting strategies are as follows:

1) Server upstream rate limiting (discard) strategy: the upstream limit for a single chat room defaults to 200 messages per second and can be adjusted to business needs. Messages sent after the limit is reached are discarded in the chat room service and are not synchronized to the message service nodes;

2) Server downstream rate limiting (discard) strategy: downstream limiting is mainly based on the length of the message ring queue. Once the queue reaches its maximum length, the oldest messages are evicted and discarded.
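Both limits can be sketched in a few lines. The 200 messages/second default comes from the text; the fixed one-second window used to count them is an assumption (a token bucket or sliding window would also fit), and `UpstreamLimiter` and `DownstreamRing` are hypothetical names. For the ring queue, Python's `collections.deque` with `maxlen` already evicts the oldest item when a new one is appended to a full queue.

```python
from collections import deque
from typing import Deque, Dict, Tuple

UPSTREAM_LIMIT_PER_SEC = 200  # article default; adjustable per business


class UpstreamLimiter:
    """Per-room fixed one-second window; the windowing scheme is an assumption."""

    def __init__(self, limit_per_sec: int = UPSTREAM_LIMIT_PER_SEC) -> None:
        self.limit = limit_per_sec
        self.windows: Dict[str, Tuple[int, int]] = {}  # room_id -> (window second, count)

    def allow(self, room_id: str, now: float) -> bool:
        second = int(now)
        start, count = self.windows.get(room_id, (second, 0))
        if start != second:
            start, count = second, 0  # a new second starts a fresh window
        if count >= self.limit:
            return False  # dropped in the chat room service, never sent to message nodes
        self.windows[room_id] = (start, count + 1)
        return True


class DownstreamRing:
    """Bounded per-room message queue: when full, the oldest message is evicted."""

    def __init__(self, capacity: int) -> None:
        self.messages: Deque[str] = deque(maxlen=capacity)  # a full deque drops from the left

    def push(self, message: str) -> None:
        self.messages.append(message)


limiter = UpstreamLimiter()
accepted = sum(limiter.allow("room-1", now=10.5) for _ in range(250))
print(accepted, limiter.allow("room-1", now=11.0))  # 200 True

ring = DownstreamRing(capacity=3)
for i in range(5):
    ring.push(f"m{i}")
print(list(ring.messages))  # ['m2', 'm3', 'm4']
```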

Each time a pull notification is sent, the server marks the user as "pulling", and clears the mark after the user actually pulls messages.

If a user still carries the pull mark when a new message is generated:

1) Within 2 seconds of when the mark was set, no notification is sent (this reduces client load: the notification is discarded, but the message is not);

2) After more than 2 seconds, notifications are sent again (if several consecutive notifications go unpulled, the user-kicking policy applies; it is not repeated here).

Therefore, whether messages are discarded depends on how fast the client pulls (which in turn depends on client performance and network conditions); a client that pulls in time does not lose messages to downstream discarding.
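A sketch of the pull mark and the 2-second suppression window. Two details are assumptions: the mark time is reset whenever a notification does go out, and the consecutive-unpulled kick policy is left out. `PullFlagTracker` is a hypothetical name.

```python
from typing import Dict

SUPPRESS_WINDOW_S = 2.0  # per the article: no new notification within 2 s of the mark


class PullFlagTracker:
    """Sketch of the 'pulling' mark used to throttle pull notifications."""

    def __init__(self) -> None:
        self.marked_at: Dict[str, float] = {}  # user_id -> time the mark was set

    def should_notify(self, user: str, now: float) -> bool:
        # Called when a new message arrives for this user.
        mark = self.marked_at.get(user)
        if mark is not None and now - mark < SUPPRESS_WINDOW_S:
            return False  # drop the notification; the message stays queued for the next pull
        self.marked_at[user] = now  # notify and (re)set the mark
        return True

    def on_pulled(self, user: str) -> None:
        # The user actually pulled: clear the mark.
        self.marked_at.pop(user, None)


tracker = PullFlagTracker()
print(tracker.should_notify("u1", now=0.0))  # True: first notification, mark set
print(tracker.should_notify("u1", now=1.5))  # False: within 2 s of the mark
print(tracker.should_notify("u1", now=2.5))  # True: mark is older than 2 s
tracker.on_pulled("u1")
print(tracker.should_notify("u1", now=2.6))  # True: the pull cleared the mark
```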

8. Message priority in the live chat room

The core of message rate control is choosing which messages to keep, which requires prioritizing messages.

The classification is roughly as follows:

1) Whitelist messages: the most important type, with the highest priority. System notifications and management messages are usually set as whitelist messages;

2) High-priority messages: second only to whitelist messages; messages without special settings are high priority;

3) Low-priority messages: the lowest priority, mostly text messages.

How messages are classified should be configurable through a convenient interface.

The server applies a different rate-limiting strategy to each of the three message types; under high concurrency, low-priority messages are the most likely to be discarded.

The server stores three kinds of messages in three message buckets: the client pulls messages in the order of whitelisted messages > high-priority messages > low-priority messages.
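One way to picture the three buckets: each priority gets its own bounded queue, with the low-priority bucket smallest so it is trimmed first, and a pull walks the buckets in priority order. The bucket sizes, the `PriorityBuckets` class, and the `pull(since_ts)` signature are all hypothetical; the article does not specify how each bucket is limited.

```python
from collections import deque
from enum import IntEnum
from typing import Deque, Dict, List, Optional, Tuple

Message = Tuple[int, str]  # (timestamp_ms, content)


class Priority(IntEnum):
    WHITELIST = 0  # system notifications, management messages
    HIGH = 1       # default for messages without special settings
    LOW = 2        # mostly ordinary text messages


class PriorityBuckets:
    """Three per-room buckets; bucket sizes are hypothetical tuning values."""

    def __init__(self, high_cap: int = 500, low_cap: int = 100) -> None:
        caps: Dict[Priority, Optional[int]] = {
            Priority.WHITELIST: None,  # unbounded: whitelist messages are never trimmed
            Priority.HIGH: high_cap,
            Priority.LOW: low_cap,     # smallest bucket, so low priority is dropped first
        }
        self.buckets: Dict[Priority, Deque[Message]] = {p: deque(maxlen=c) for p, c in caps.items()}

    def push(self, message: Message, priority: Priority = Priority.HIGH) -> None:
        self.buckets[priority].append(message)

    def pull(self, since_ts: int) -> List[Message]:
        # Whitelist first, then high, then low; within a bucket, oldest first.
        result: List[Message] = []
        for priority in sorted(Priority):
            result.extend(m for m in self.buckets[priority] if m[0] > since_ts)
        return result


buckets = PriorityBuckets(high_cap=2, low_cap=1)
buckets.push((1, "gift"), Priority.HIGH)
buckets.push((2, "text-a"), Priority.LOW)
buckets.push((3, "text-b"), Priority.LOW)  # evicts text-a: the low bucket holds one message
buckets.push((4, "room notice"), Priority.WHITELIST)
print(buckets.pull(since_ts=0))
# [(4, 'room notice'), (1, 'gift'), (3, 'text-b')]
```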

9. Client optimizations for receiving and rendering large volumes of messages

9.1 Message reception optimization

In terms of message synchronization, if the live chat room pushed every message directly to clients, it would put great performance pressure on them. Especially with thousands or tens of thousands of concurrent messages per second, continuous message processing would consume the client's limited resources and hurt other aspects of user interaction.

With this in mind, we designed a dedicated notify-then-pull mechanism for chat rooms: the client is notified to pull only after the server has applied a series of controls such as frequency division, rate limiting, and aggregation.

Specifically divided into the following steps:

1) The client successfully joins the chat room;

2) The server sends pull-notification signaling;

3) The client pulls messages from the server using the maximum timestamp of the messages stored locally.

Note that when a user joins a live chat room for the first time, there is no valid local timestamp, so 0 is passed to the server, which returns the latest 50 messages; the client stores them in its local database. On later pulls, the client passes the maximum timestamp of the messages in its database and performs a differential pull.

After pulling messages, the client deduplicates them and then hands the deduplicated data to the business layer, so the upper layer never displays the same message twice.
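The client-side bookkeeping can be sketched as below. Deduplicating by a per-message ID is an assumption (the text only says pulled messages are deduplicated), a dict stands in for the local database, and `ClientMessageStore` and its methods are hypothetical. The clearing on exit follows the paragraph after this one.

```python
from typing import Dict, List, Tuple

Pulled = Tuple[str, int, str]  # (msg_id, timestamp_ms, content)


class ClientMessageStore:
    """Hypothetical client-side store for one live chat room (a dict stands in for the local DB)."""

    def __init__(self) -> None:
        self.messages: Dict[str, Tuple[int, str]] = {}  # msg_id -> (timestamp_ms, content)

    def max_timestamp(self) -> int:
        # 0 means "first pull": the server then returns the latest 50 messages.
        return max((ts for ts, _ in self.messages.values()), default=0)

    def on_pulled(self, batch: List[Pulled]) -> List[Pulled]:
        # Deduplicate by message ID before handing messages to the business layer.
        fresh: List[Pulled] = []
        for msg_id, ts, content in batch:
            if msg_id not in self.messages:
                self.messages[msg_id] = (ts, content)
                fresh.append((msg_id, ts, content))
        return sorted(fresh, key=lambda m: m[1])  # oldest first for display

    def on_exit_room(self) -> None:
        # Live chat is ephemeral: drop this room's local messages to save space.
        self.messages.clear()


store = ClientMessageStore()
print(store.max_timestamp())  # 0
print(store.on_pulled([("m2", 200, "hi"), ("m1", 100, "hello")]))
# [('m1', 100, 'hello'), ('m2', 200, 'hi')]
print(store.on_pulled([("m2", 200, "hi"), ("m3", 300, "wow")]))
# [('m3', 300, 'wow')]
print(store.max_timestamp())  # 300
```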

In addition, live chat room messages are highly time-sensitive: after the broadcast ends or the user leaves the chat room, most previously pulled messages do not need to be viewed again. So when the user leaves the chat room, that chat room's messages are cleared from the local database to save storage space.

9.2 Message Rendering Optimization

In terms of rendering, the client also applies a series of optimizations so that it still performs well when messages flood into a live room's chat.

Taking Android as an example, the specific measures are:

1) Use the MVVM pattern: strictly separate business processing from UI refreshes. Each time a message is received, the page is told to refresh only after a background thread in the ViewModel has finished all business processing and prepared the data the refresh needs;

2) Reduce the load on the main thread: use LiveData's setValue() and postValue() methods deliberately. Events that are already on the main thread notify the View via setValue(), avoiding excessive postValue() calls that would overload the main thread;

3) Avoid unnecessary refreshes: for example, while the user is scrolling the message list, newly received messages do not need to trigger a refresh; a prompt is enough;

4) Identify data updates: use DiffUtil, Google's list-diffing utility, to detect which data has changed, and update only the changed items;

5) Limit global refreshes: update the UI through partial refreshes whenever possible.

With these mechanisms in place, stress tests show that on a mid-range phone, with 400 messages per second in a live room's chat, the message list still scrolls smoothly without stuttering.

10. Optimizations for custom attributes beyond traditional chat messages

10.1 Overview

In live chat room scenarios, besides sending and receiving traditional chat messages, the business layer often needs business attributes of its own: for example, hosts' mic-seat information and role management in voice live chat rooms, or players' roles and game state in card games such as Werewolf.

Compared with traditional chat messages, custom attributes also have timeliness requirements. For example, mic-seat and role information must be synchronized to all chat room members in real time so that clients can refresh their local business state based on the custom attributes.

10.2 Storage of custom attributes

Custom attributes are transmitted and stored as key-value pairs. There are two main operations on custom attributes: set and delete.

The server likewise stores custom attributes in two parts:

1) The full set of custom attributes;

2) A change log of the custom attribute set.

The custom attribute storage structure is shown in the following figure:

Two query interfaces should be provided for these two pieces of data: one that queries the full data and one that queries incremental data. Used together, they greatly improve the chat room service's attribute query response and custom attribute distribution capability.
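A sketch of the server-side store with both query interfaces. The article's change log is an ordered map keyed by timestamp; since Python has no built-in sorted map, this sketch uses an append-only list plus a parallel timestamp list for `bisect`, assuming change timestamps are strictly increasing. `CustomAttributeStore`, `set_attr`, `delete_attr`, `query_full`, and `query_incremental` are hypothetical names.

```python
import bisect
from typing import Dict, List, Optional, Tuple

Change = Tuple[int, str, str, Optional[str]]  # (timestamp, "set" | "delete", key, value)


class CustomAttributeStore:
    """Sketch of server-side storage: full attribute set plus an ordered change log."""

    def __init__(self) -> None:
        self.full: Dict[str, str] = {}
        self.log: List[Change] = []  # ordered by timestamp
        self.log_ts: List[int] = []  # parallel timestamps for binary search

    def _record(self, ts: int, op: str, key: str, value: Optional[str]) -> None:
        # Assumes strictly increasing timestamps, so appending keeps the log ordered.
        if self.log_ts and ts <= self.log_ts[-1]:
            raise ValueError("change timestamps must be strictly increasing")
        self.log.append((ts, op, key, value))
        self.log_ts.append(ts)

    def set_attr(self, key: str, value: str, ts: int) -> None:
        self._record(ts, "set", key, value)  # record first so a rejected change leaves no trace
        self.full[key] = value

    def delete_attr(self, key: str, ts: int) -> None:
        self._record(ts, "delete", key, None)
        self.full.pop(key, None)

    def query_full(self) -> Tuple[Dict[str, str], int]:
        # For members who have never pulled: a snapshot plus the timestamp it reflects.
        return dict(self.full), (self.log_ts[-1] if self.log_ts else 0)

    def query_incremental(self, since_ts: int) -> List[Change]:
        # For members who already have data: every change strictly after since_ts.
        return self.log[bisect.bisect_right(self.log_ts, since_ts):]


store = CustomAttributeStore()
store.set_attr("mic-1", "host", ts=1)
store.set_attr("role:u7", "werewolf", ts=4)
store.set_attr("mic-2", "guest", ts=5)
store.delete_attr("mic-1", ts=6)
print([c[0] for c in store.query_incremental(4)])  # [5, 6]
print(store.query_full())  # ({'role:u7': 'werewolf', 'mic-2': 'guest'}, 6)
```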

10.3 Pulling custom attributes

The full data set in memory is mainly for members who have never pulled custom attributes: members who have just entered the chat room can pull the full set of custom attributes directly and display it.

For members who already have the full data, pulling the full set every time would mean that, to find out what changed, the client's full copy of the custom attributes has to be compared with the server's full copy. Whichever side performs that comparison takes on extra computational load.

Therefore, to synchronize incremental data, a set of attribute change records is needed. With it, most members can pull just the incremental data when they are notified that custom attributes have changed.

The change log is an ordered map: the key is the change timestamp, and the value stores the change type and the custom attribute content. This ordered map records every operation on the custom attributes over the period it covers.

Custom attributes are distributed the same way as messages: by notify-then-pull. After the client receives a notification that custom attributes have changed, it pulls using the largest custom-attribute timestamp it holds locally. For example, if the client sends timestamp 4, it pulls the two records with timestamps 5 and 6. The client then replays the incremental content locally, updates its local custom attributes, and renders them.
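On the client side, replaying the pulled records might look like the sketch below, which reuses the article's example (local maximum timestamp 4, records 5 and 6 pulled). `LocalAttributes`, `load_full`, `replay`, and the `(timestamp, op, key, value)` record layout are assumptions made for illustration; skipping records at or below the local maximum keeps a repeated pull from being applied twice.

```python
from typing import Dict, Iterable, Optional, Tuple

Change = Tuple[int, str, str, Optional[str]]  # (timestamp, "set" | "delete", key, value)


class LocalAttributes:
    """Hypothetical client-side copy of a chat room's custom attributes."""

    def __init__(self) -> None:
        self.attrs: Dict[str, str] = {}
        self.max_ts = 0  # sent with each incremental pull

    def load_full(self, snapshot: Dict[str, str], ts: int) -> None:
        # First pull: take the full set as-is.
        self.attrs = dict(snapshot)
        self.max_ts = ts

    def replay(self, changes: Iterable[Change]) -> None:
        # Apply pulled changes in timestamp order, skipping anything already applied.
        for ts, op, key, value in sorted(changes, key=lambda c: c[0]):
            if ts <= self.max_ts:
                continue
            if op == "set" and value is not None:
                self.attrs[key] = value
            elif op == "delete":
                self.attrs.pop(key, None)
            self.max_ts = ts


client = LocalAttributes()
client.load_full({"mic-1": "host", "role:u7": "werewolf"}, ts=4)
client.replay([(6, "delete", "mic-1", None), (5, "set", "mic-2", "guest")])
print(client.attrs, client.max_ts)  # {'role:u7': 'werewolf', 'mic-2': 'guest'} 6
```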
